Docker Containers: A Brief Overview

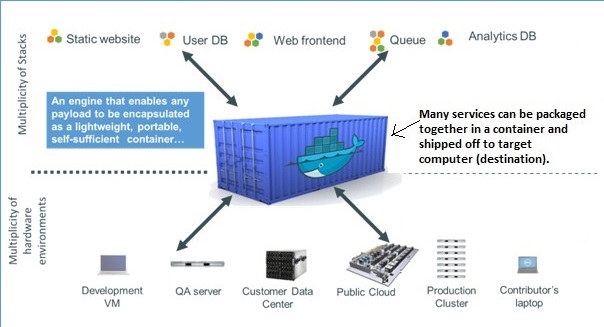

Docker containers have changed how developers build, ship, and run applications. A container is a lightweight, standalone, executable software package that includes everything needed to run a piece of software: the code, a runtime, libraries, environment variables, and configuration files. Containers are isolated from each other and from the host system, which helps an application behave the same way across different environments.

Docker containers differ from virtual machines (VMs) in several ways. While VMs virtualize the entire hardware stack and each run a full guest operating system, containers virtualize at the operating-system level and share the host's kernel, which makes them much more resource-efficient. A single host can run many containers, each with its own filesystem, processes, and resource limits, without a separate operating system per workload. This makes Docker containers a good fit for applications that need rapid deployment, scalability, and portability.

Key Features and Benefits of Docker Containers

Docker containers offer numerous advantages for developers and organizations alike. Their resource efficiency is a significant benefit, as containers share the host system’s OS kernel and use fewer resources than virtual machines. This makes it possible to run multiple containers on a single host, reducing hardware and maintenance costs.

Another key advantage of Docker containers is their portability. Applications packaged within containers can be deployed across different platforms and environments without requiring modifications. This ensures consistent execution and reduces the risk of compatibility issues, making it easier to manage and scale applications.

Docker containers also enable rapid deployment, as they can be started and stopped in seconds. This allows developers to quickly test, iterate, and deploy changes, improving productivity and reducing time-to-market. Additionally, container images can be tagged and versioned, so a team can roll back to an earlier image and share the exact same build with collaborators.

In summary, Docker containers are used for their resource efficiency, portability, and rapid deployment capabilities. These features make them an ideal solution for modern software development, enabling organizations to build, ship, and run applications more efficiently and reliably.

Popular Use Cases for Docker Containers

Docker containers have become increasingly popular in various real-world scenarios, thanks to their resource efficiency, portability, and rapid deployment capabilities. Here are some common use cases:

- Web application development: Docker containers simplify web application development by providing a consistent environment for building, testing, and deploying applications. They enable developers to package an application and its dependencies into a single container, ensuring consistent execution across different platforms and environments.

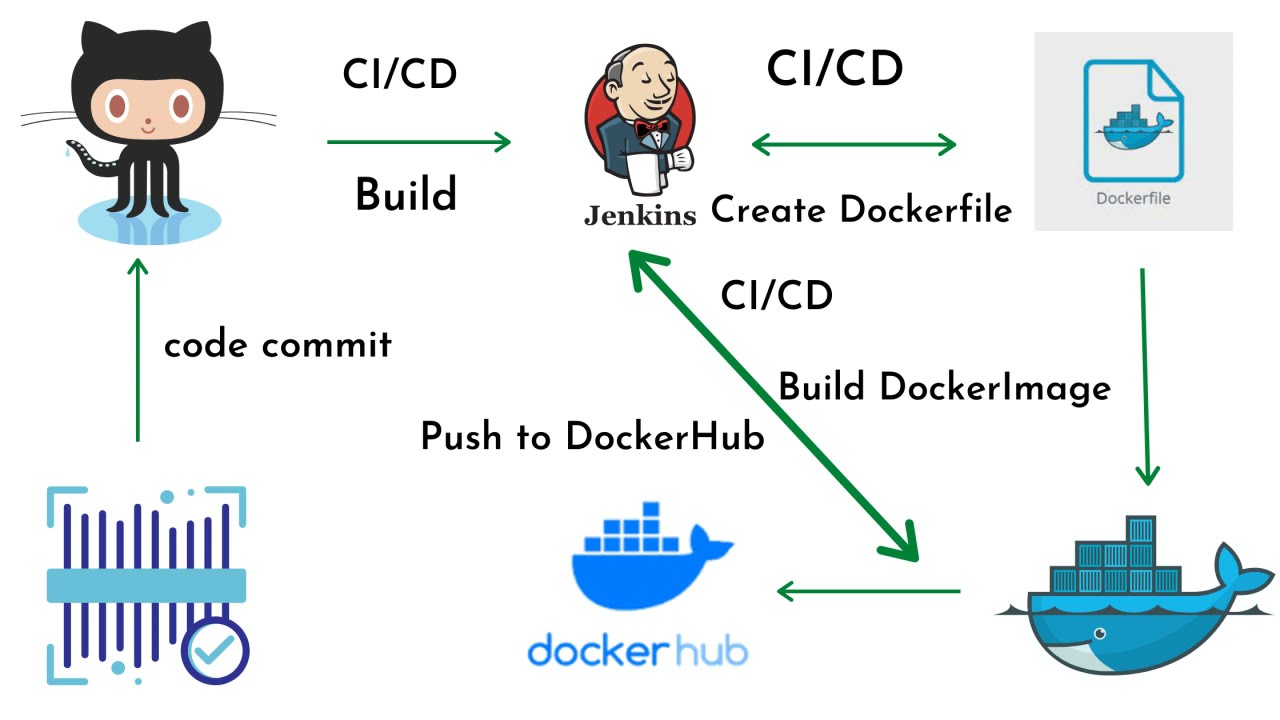

- Continuous integration and delivery: Docker containers can be integrated into continuous integration and delivery (CI/CD) pipelines, allowing developers to automate the testing and deployment process. By using containers, organizations can ensure consistent execution of their applications throughout the development lifecycle, reducing the risk of compatibility issues and accelerating time-to-market.

- Microservices architectures: Docker containers are ideal for implementing microservices architectures, where applications are composed of small, independently deployable services. Containers enable developers to package each microservice into a separate container, simplifying deployment, scaling, and management.

- Platform-as-a-Service (PaaS) providers: Docker containers are supported by PaaS offerings such as Heroku, Google App Engine, and Microsoft Azure App Service, which use them to provide lightweight, isolated environments for running customer applications. This allows PaaS providers to efficiently manage resources and offer a consistent experience to their users.

- Legacy application modernization: Docker containers can help organizations modernize legacy applications by providing a more efficient and portable execution environment. By containerizing legacy applications, organizations can reduce hardware and maintenance costs, while ensuring consistent execution across different platforms and environments.

In summary, Docker containers are used in a wide range of real-world scenarios, including web application development, continuous integration, microservices architectures, PaaS providers, and legacy application modernization. By offering resource efficiency, portability, and rapid deployment, Docker containers enable organizations to build, ship, and run applications more efficiently and reliably.

How to Create and Manage Docker Containers

Creating and managing Docker containers involves several steps, from installing Docker to running and managing containers. Here’s a step-by-step guide:

Step 1: Install Docker

Before creating and managing Docker containers, you need to install Docker on your system. Visit the official Docker website (https://www.docker.com/products/docker-desktop) to download and install Docker for your operating system.

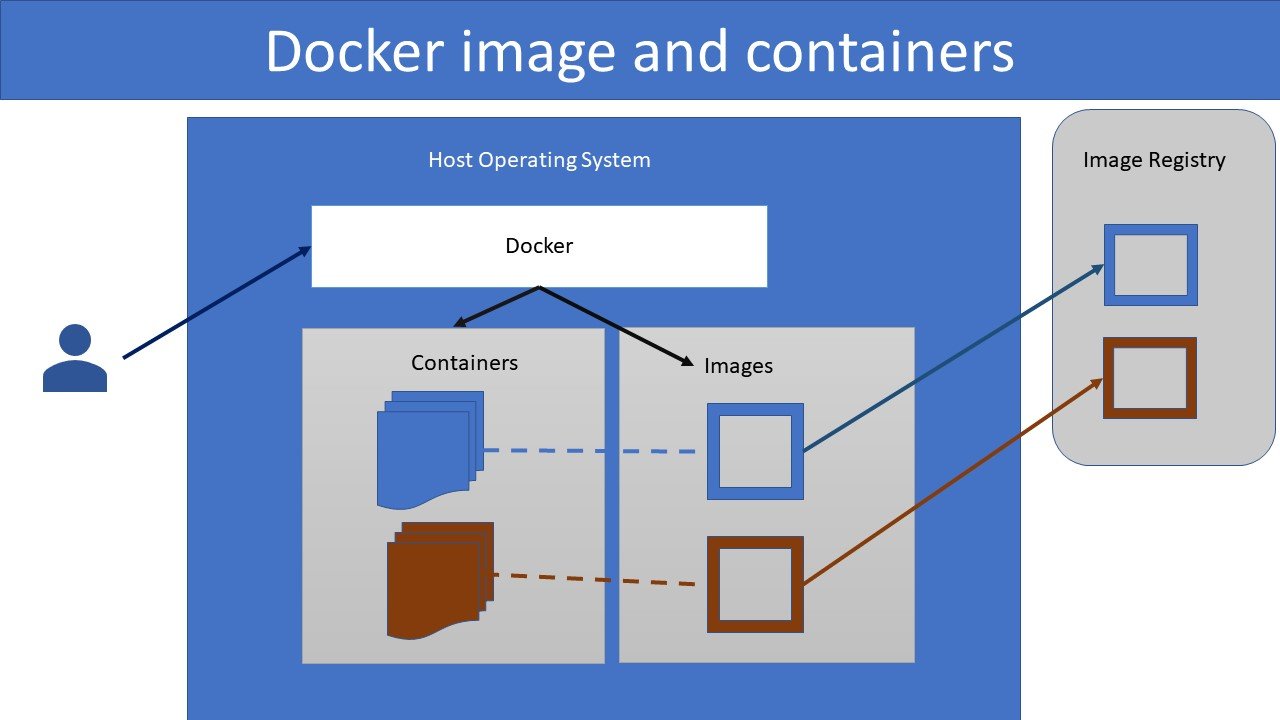

Step 2: Pull a Docker Image

Docker images are the basis for creating Docker containers. To pull a Docker image, use the following command:

    docker pull [image_name]:[tag]

Replace [image_name] with the name of the Docker image and [tag] with the version or tag you want to use.
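As a concrete illustration of the pull command above, here is a minimal sketch that wraps it in a shell function. The function name `pull_image` and the `nginx` image with tag `1.27` are assumptions chosen for the example, not something this guide prescribes.

```shell
#!/bin/sh
# Hypothetical helper: pull one image at a specific tag.
# Usage: pull_image <image_name> [tag]
pull_image() {
    image_name="$1"
    # Docker itself falls back to "latest" when no tag is given;
    # the helper makes that default explicit.
    tag="${2:-latest}"
    docker pull "${image_name}:${tag}"
}

# Example call (assumed image and tag):
# pull_image nginx 1.27
```

Pinning a specific tag rather than relying on `latest` tends to make later runs reproducible, since `latest` can point to a different image over time.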

Step 3: Run a Docker Container

To run a Docker container from a Docker image, use the following command:

    docker run -d -p [host_port]:[container_port] [image_name]:[tag]

Replace [host_port] with the port number on your host system, [container_port] with the port number inside the container, [image_name] with the name of the Docker image, and [tag] with the version or tag you want to use.
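To make the placeholders in the run command concrete, the sketch below fills them in from function arguments. The name `run_detached` and the example values (host port 8080, container port 80, `nginx:1.27`) are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical helper: start a detached container and publish one port.
# Usage: run_detached <host_port> <container_port> <image_name> <tag>
run_detached() {
    host_port="$1"
    container_port="$2"
    image_name="$3"
    tag="$4"
    # -d runs the container in the background.
    # -p maps host_port on the host to container_port inside the container.
    docker run -d -p "${host_port}:${container_port}" "${image_name}:${tag}"
}

# Example call (assumed values): nginx listens on port 80 in the container,
# so the page would be reachable at http://localhost:8080 on the host.
# run_detached 8080 80 nginx 1.27
```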

Step 4: Manage Docker Containers

To manage Docker containers, you can use various commands, such as:

- docker ps: List all running Docker containers

- docker stop [container_id]: Stop a specific Docker container

- docker rm [container_id]: Remove a specific Docker container

- docker images: List all Docker images on your system

- docker rmi [image_id]: Remove a specific Docker image
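The management commands above are often used together when tearing something down. The following sketch chains three of them; the function name `cleanup_container` and the example IDs are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: stop a container, remove it, then remove its image.
# Usage: cleanup_container <container_id> <image_id>
cleanup_container() {
    container_id="$1"
    image_id="$2"
    # A running container must be stopped before a plain "docker rm" succeeds.
    # "docker rmi" fails while any container still references the image,
    # so the image is removed last. Each step runs only if the previous one worked.
    docker stop "$container_id" &&
    docker rm "$container_id" &&
    docker rmi "$image_id"
}

# Example; real IDs come from "docker ps" and "docker images":
# docker ps
# cleanup_container 3f2a1b4c5d6e nginx:1.27
```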

By following these steps, you can create, run, and manage Docker containers effectively. Keep in mind that containers are meant to be ephemeral: a container can be stopped, removed, and recreated from its image at any time, so any data that must outlive it belongs in a volume or in external storage rather than in the container's own filesystem.

Integrating Docker Containers with CI/CD Pipelines

Docker containers can be seamlessly integrated into continuous integration and continuous delivery (CI/CD) pipelines, enabling efficient and reliable software delivery. By using Docker containers in CI/CD pipelines, organizations can ensure consistent execution of their applications throughout the development lifecycle, reduce the risk of compatibility issues, and accelerate time-to-market.

Benefits of Integrating Docker Containers with CI/CD Pipelines

- Consistent environment: Docker containers provide a consistent environment for building, testing, and deploying applications, ensuring that the application runs the same way in development, testing, and production.

- Isolation: Docker containers ensure that each component of the application is isolated from others, reducing the risk of conflicts and compatibility issues.

- Efficient resource utilization: Docker containers use fewer resources than virtual machines, enabling organizations to run more containers on a single host and reducing infrastructure costs.

- Rapid deployment: Docker containers can be started and stopped in seconds, allowing organizations to quickly deploy changes and accelerate time-to-market.

How to Integrate Docker Containers with CI/CD Pipelines

To integrate Docker containers with CI/CD pipelines, follow these steps:

- Create a Docker image for each component of the application.

- Push the Docker images to a Docker registry, such as Docker Hub, Google Container Registry, or Amazon Elastic Container Registry.

- Configure the CI/CD pipeline to pull the Docker images from the registry and run them in containers.

- Configure the CI/CD pipeline to build, test, and deploy the application using Docker containers.
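The steps above can be sketched as two small shell functions that a pipeline job might call. This is only an outline under stated assumptions: the function names, the registry path `registry.example.com/team/web`, and the commit ID are hypothetical, and a real pipeline would also need a `docker login` step and its own test stage.

```shell
#!/bin/sh
# Hypothetical CI step: build an image, tag it with the commit ID, and push it.
# Usage: build_and_push <registry/repository> <commit_id> [build_context]
build_and_push() {
    repository="$1"
    commit_id="$2"
    context="${3:-.}"
    image="${repository}:${commit_id}"

    # Push only if the build succeeded.
    docker build -t "$image" "$context" &&
    docker push "$image"
}

# Hypothetical deploy step: pull the exact image that CI pushed and run it.
# Usage: deploy_image <registry/repository> <commit_id> <host_port> <container_port>
deploy_image() {
    image="$1:$2"
    docker pull "$image" &&
    docker run -d -p "$3:$4" "$image"
}

# Example calls (assumed registry path and commit ID):
# build_and_push registry.example.com/team/web 4f9c2e1
# deploy_image registry.example.com/team/web 4f9c2e1 8080 80
```

Tagging by commit ID is one common design choice: it lets the deploy step refer to exactly the image that was built and tested, instead of a moving tag.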

By following these steps, organizations can integrate Docker containers into their CI/CD pipelines, ensuring consistent execution of their applications throughout the development lifecycle, reducing the risk of compatibility issues, and accelerating time-to-market.

Real-World Examples of Docker Containers in Action

Several widely used tools and platforms are built around Docker containers, which shows how versatile the technology is. Here are some examples:

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. It allows developers to define the services, networks, and volumes required for their application in a single YAML file, making it easier to manage and scale the application. Docker Compose is often used for local development, testing, and staging environments.
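To show what such a YAML file might look like, the sketch below writes a small two-service Compose file from a shell function. Everything in it is an assumption for illustration: the function name `write_compose_file`, the service names `web` and `cache`, the images, the port mapping, and the volume name.

```shell
#!/bin/sh
# Hypothetical helper: write a two-service Compose file into a directory.
# Usage: write_compose_file <directory>
write_compose_file() {
    target_dir="$1"
    mkdir -p "$target_dir" &&
    cat > "${target_dir}/compose.yaml" <<'EOF'
services:
  web:
    image: nginx:1.27
    ports:
      - "8080:80"
    depends_on:
      - cache
  cache:
    image: redis:7
    volumes:
      - cache-data:/data
volumes:
  cache-data:
EOF
}

# Example usage (requires Docker with the Compose plugin):
# write_compose_file ./demo
# docker compose -f ./demo/compose.yaml up -d    # start both services
# docker compose -f ./demo/compose.yaml down     # stop and remove them
```

Compose places both services on a default network for the project, so `web` could reach `cache` by its service name without any extra network configuration.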

Docker Swarm

Docker Swarm is a native orchestration tool for Docker containers, enabling developers to create and manage a swarm of Docker nodes. Docker Swarm allows developers to deploy services across multiple nodes, ensuring high availability and load balancing. It also supports rolling updates and rollbacks, making it easier to manage changes to the application.
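A rough sketch of that workflow with the Docker CLI follows. The helper names, the service name `web`, the replica count, the published port, and the image tags are all assumptions for the example.

```shell
#!/bin/sh
# Hypothetical helper: create a replicated service on an existing swarm.
# Usage: create_service <name> <replicas> <image>
create_service() {
    docker service create --name "$1" --replicas "$2" --publish 8080:80 "$3"
}

# Hypothetical helper: roll a service forward to a new image.
# Usage: update_service_image <name> <image>
update_service_image() {
    docker service update --image "$2" "$1"
}

# Hypothetical helper: return a service to its previous definition.
# Usage: rollback_service <name>
rollback_service() {
    docker service rollback "$1"
}

# Example (assumed names and tags), run on a manager node:
# docker swarm init
# create_service web 3 nginx:1.26
# update_service_image web nginx:1.27
# rollback_service web
```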

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It runs containers through CRI-compatible runtimes such as containerd and CRI-O, so images built with Docker run on it unchanged, and it lets developers declare the desired state of their application, such as the number of replicas, resource limits, and networking. Kubernetes also supports rolling updates and rollbacks, making it easier to manage changes to the application.
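As a small illustration of declaring replicas and performing a rolling update and rollback, the sketch below wraps three `kubectl` commands. The helper names, the Deployment name `web`, and the image tags are hypothetical, and the example assumes `kubectl` is already configured for a cluster.

```shell
#!/bin/sh
# Hypothetical helper: declare a Deployment with a desired replica count.
# Usage: create_deployment <name> <image> <replicas>
create_deployment() {
    kubectl create deployment "$1" --image="$2" --replicas="$3"
}

# Hypothetical helper: start a rolling update by changing a container's image.
# Usage: update_deployment_image <name> <container> <image>
update_deployment_image() {
    kubectl set image "deployment/$1" "$2=$3"
}

# Hypothetical helper: roll a Deployment back to its previous revision.
# Usage: rollback_deployment <name>
rollback_deployment() {
    kubectl rollout undo "deployment/$1"
}

# Example (assumed names and tags). The container name is assumed to be
# "nginx"; "kubectl get deployment web -o yaml" shows the actual name.
# create_deployment web nginx:1.26 3
# update_deployment_image web nginx nginx:1.27
# rollback_deployment web
```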

Jenkins

Jenkins is a popular continuous integration and continuous delivery (CI/CD) tool that supports Docker containers. By running build, test, and deployment steps inside containers, Jenkins gives every stage the same environment, reducing the risk of compatibility issues and accelerating time-to-market.

Docker Hub

Docker Hub is a cloud-based registry for Docker images, allowing developers to store and share their Docker images with others. Docker Hub also supports automated builds, enabling developers to automatically build and test their Docker images when they push changes to their source code repository.

These examples demonstrate the versatility and effectiveness of Docker containers in various real-world scenarios. By using Docker containers, organizations can ensure consistent execution of their applications, reduce the risk of compatibility issues, and accelerate time-to-market.

Addressing Security Concerns in Docker Containers

While Docker containers offer numerous benefits, such as resource efficiency, portability, and rapid deployment, they also introduce potential security risks. Here are some security concerns associated with Docker containers and recommendations for mitigating these risks:

Container Escape

A container escape occurs when an attacker manages to break out of a container and gain access to the host system. To mitigate this risk, follow these best practices:

- Keep Docker Engine and all related components up to date.

- Limit the privileges of the Docker daemon and container processes.

- Use a dedicated Docker host for running containers.

- Implement mandatory access control mechanisms, such as SELinux or AppArmor.
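The advice on limiting container privileges can be sketched with a handful of `docker run` flags. This is a starting point under stated assumptions rather than a complete hardening profile: the function name `run_hardened`, the UID:GID `1000:1000`, and the resource limits are illustrative values.

```shell
#!/bin/sh
# Hypothetical helper: run a container with reduced privileges.
# Usage: run_hardened <image> [command...]
run_hardened() {
    image="$1"
    shift
    # --user                 run as an unprivileged UID:GID instead of root
    # --cap-drop ALL         drop every Linux capability
    # --security-opt ...     prevent processes from gaining new privileges
    # --read-only            mount the container's root filesystem read-only
    # --pids-limit/--memory  cap process count and memory use
    docker run --rm \
        --user 1000:1000 \
        --cap-drop ALL \
        --security-opt no-new-privileges \
        --read-only \
        --pids-limit 100 \
        --memory 256m \
        "$image" "$@"
}

# Example call (assumed image): print the effective user inside the container
# run_hardened alpine:3.20 id
```

Some applications need a writable directory or a specific capability to start; in that case the flags would be relaxed one at a time rather than removed wholesale.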

Image Vulnerabilities

Docker images may contain vulnerabilities that can be exploited by attackers. To mitigate this risk, follow these best practices:

- Scan Docker images for vulnerabilities before deploying them.

- Use official Docker images whenever possible.

- Minimize the number of layers in a Docker image.

- Remove unnecessary packages and files from Docker images.
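Scanning before deployment can be wired into a script as a simple gate. The sketch below assumes the third-party Trivy scanner is installed; this guide does not name a scanner, so the tool choice, the function name `scan_image`, and the severity threshold are all assumptions.

```shell
#!/bin/sh
# Hypothetical gate: fail when an image has HIGH or CRITICAL findings.
# Assumes the third-party Trivy scanner is installed (not named in this guide).
# Usage: scan_image <image>
scan_image() {
    # --exit-code 1 makes Trivy return non-zero when matching
    # vulnerabilities are found, so the caller can stop a deploy.
    trivy image --severity HIGH,CRITICAL --exit-code 1 "$1"
}

# Example gate before a deploy (assumed image name):
# scan_image registry.example.com/team/web:4f9c2e1 || exit 1
```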

Network Security

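By default, containers on the same host attach to a shared bridge network and can reach one another, and a published port is bound on all of the host's network interfaces. Both defaults introduce potential network security risks. To mitigate these risks, follow these best practices: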

- Use a dedicated network for Docker containers.

- Limit the ports exposed by Docker containers.

- Implement firewall rules to restrict traffic to and from Docker containers.

- Use secure communication protocols, such as HTTPS or SSH.
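The first two recommendations can be sketched with the Docker CLI as follows. The helper names, the network name, the container names, and the ports are assumptions for the example; firewall rules and TLS are outside what this sketch covers.

```shell
#!/bin/sh
# Hypothetical helper: create a user-defined bridge network for one application.
# Usage: create_app_network <network>
create_app_network() {
    docker network create "$1"
}

# Hypothetical helper: run a container on that network and publish one port
# on the loopback interface only, so other machines cannot reach it directly.
# Usage: run_on_network <network> <name> <host_port> <container_port> <image>
run_on_network() {
    docker run -d --network "$1" --name "$2" -p "127.0.0.1:$3:$4" "$5"
}

# Hypothetical helper: run an internal container with no published ports.
# Containers on the same user-defined network can still reach it by name.
# Usage: run_internal <network> <name> <image>
run_internal() {
    docker run -d --network "$1" --name "$2" "$3"
}

# Example (assumed names):
# create_app_network appnet
# run_internal appnet cache redis:7
# run_on_network appnet web 8080 80 nginx:1.27
```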

Data Security

Docker containers may contain sensitive data that needs to be protected. To mitigate this risk, follow these best practices:

- Use encrypted communication channels for transmitting data.

- Store sensitive data outside of Docker containers, using external storage solutions.

- Implement access controls and permissions for Docker volumes and bind mounts.

- Regularly back up Docker volumes and bind mounts.
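One common way to back up a named volume is to mount it into a throwaway container and archive its contents. The sketch below assumes a volume called `cache-data` and uses a hypothetical helper name, `backup_volume`; the `alpine:3.20` image is just a convenient small image that ships `tar`.

```shell
#!/bin/sh
# Hypothetical helper: archive a named volume into a tarball on the host.
# Usage: backup_volume <volume_name> <backup_dir>
backup_volume() {
    volume="$1"
    backup_dir="$2"
    # Mount the volume read-only and the backup directory read-write in a
    # temporary container, then tar the volume contents into the backup dir.
    docker run --rm \
        -v "${volume}:/data:ro" \
        -v "${backup_dir}:/backup" \
        alpine:3.20 \
        tar czf "/backup/${volume}.tar.gz" -C /data .
}

# Example call (assumed volume name); backup_dir should be an absolute path:
# backup_volume cache-data "$(pwd)/backups"
```

Mounting the volume read-only means the backup container cannot modify the data it is archiving. For a consistent snapshot, the application writing to the volume would typically be paused or stopped first.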

By following these best practices, organizations can mitigate the potential security risks associated with Docker containers and ensure the secure and reliable deployment of their applications.

The Future of Docker Containers and Containerization Technologies

Docker containers and containerization technologies have revolutionized the way software is developed, deployed, and managed. As these technologies continue to evolve, we can expect to see even more innovative use cases and applications in the future. Here are some trends and prospects to watch:

- Container Orchestration: As container adoption continues to grow, so does the need for managing and scaling containers in production environments. Container orchestration tools, such as Kubernetes, Docker Swarm, and Apache Mesos, will become even more critical for managing large-scale container deployments and ensuring high availability, load balancing, and auto-scaling.

- Edge Computing: Edge computing is an emerging trend that involves processing data and running applications on distributed devices, such as IoT devices, gateways, and edge servers. Docker containers are ideal for edge computing scenarios, as they offer lightweight, portable, and consistent execution environments for running applications on distributed devices.

- Serverless Computing: Serverless computing is a model where the cloud provider dynamically manages the allocation of machine resources. Docker containers can be used as the underlying technology for serverless computing platforms, enabling developers to build and deploy applications without worrying about infrastructure management.

- Artificial Intelligence and Machine Learning: Docker containers can be used to package and deploy machine learning models and AI applications, enabling developers to easily move these workloads across different environments and clouds. Containerization technologies can also help ensure consistent execution environments for AI and ML applications, reducing the risk of compatibility issues and ensuring reproducibility.

- Security and Compliance: Security and compliance will continue to be critical concerns for organizations adopting container technologies. New security and compliance features, such as image scanning, vulnerability management, and access controls, will become even more important for ensuring the secure and reliable deployment of containerized applications.

In conclusion, Docker containers and containerization technologies continue to change the way software is developed, deployed, and managed. As these technologies evolve, we can expect to see more innovative use cases and applications, from container orchestration and edge computing to serverless computing, AI and ML, and security and compliance. By staying up to date with these trends, organizations can make full use of containerization technologies in a rapidly changing technological landscape.