Understanding Containerization: A Modern Approach to Virtualization

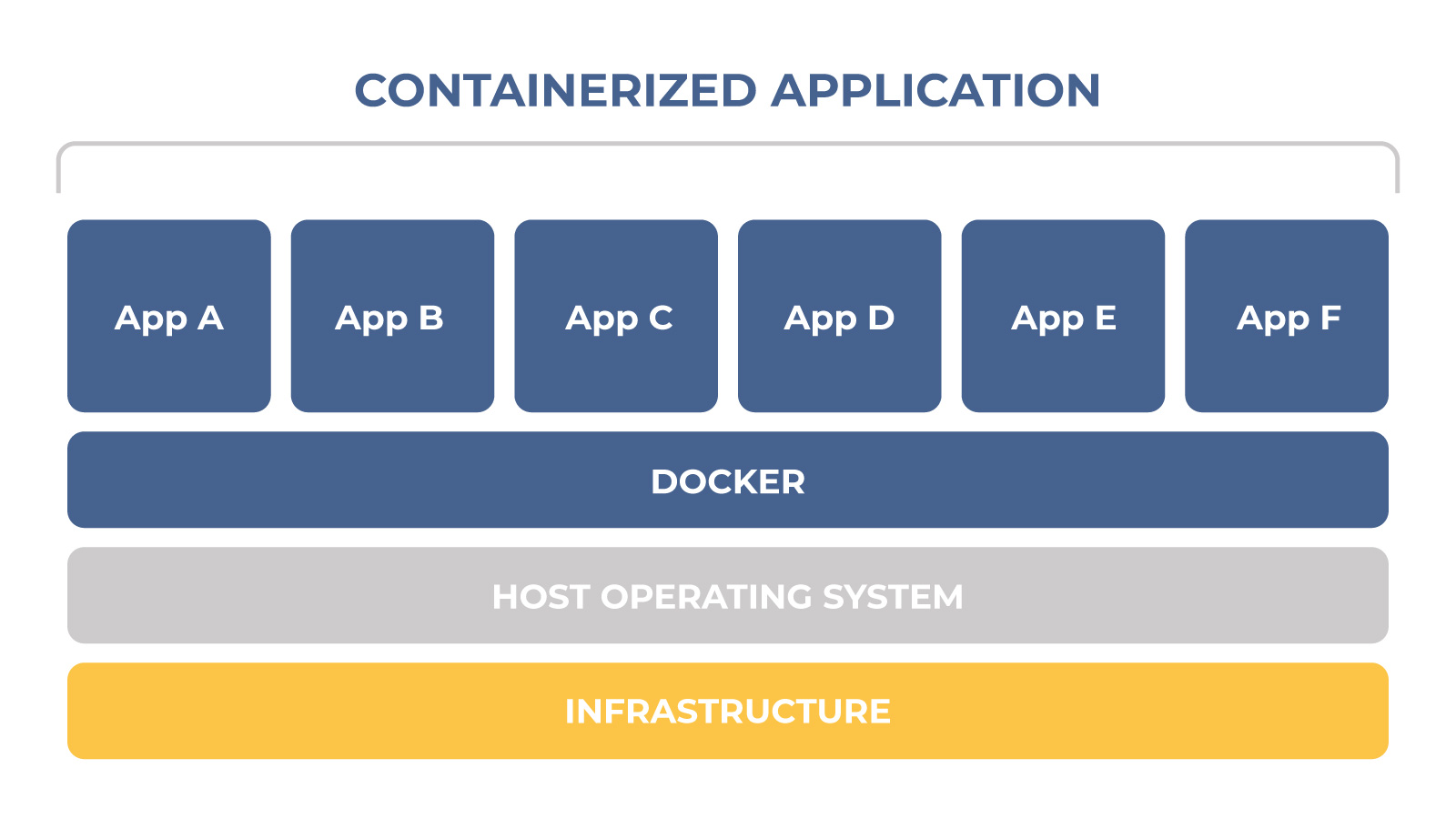

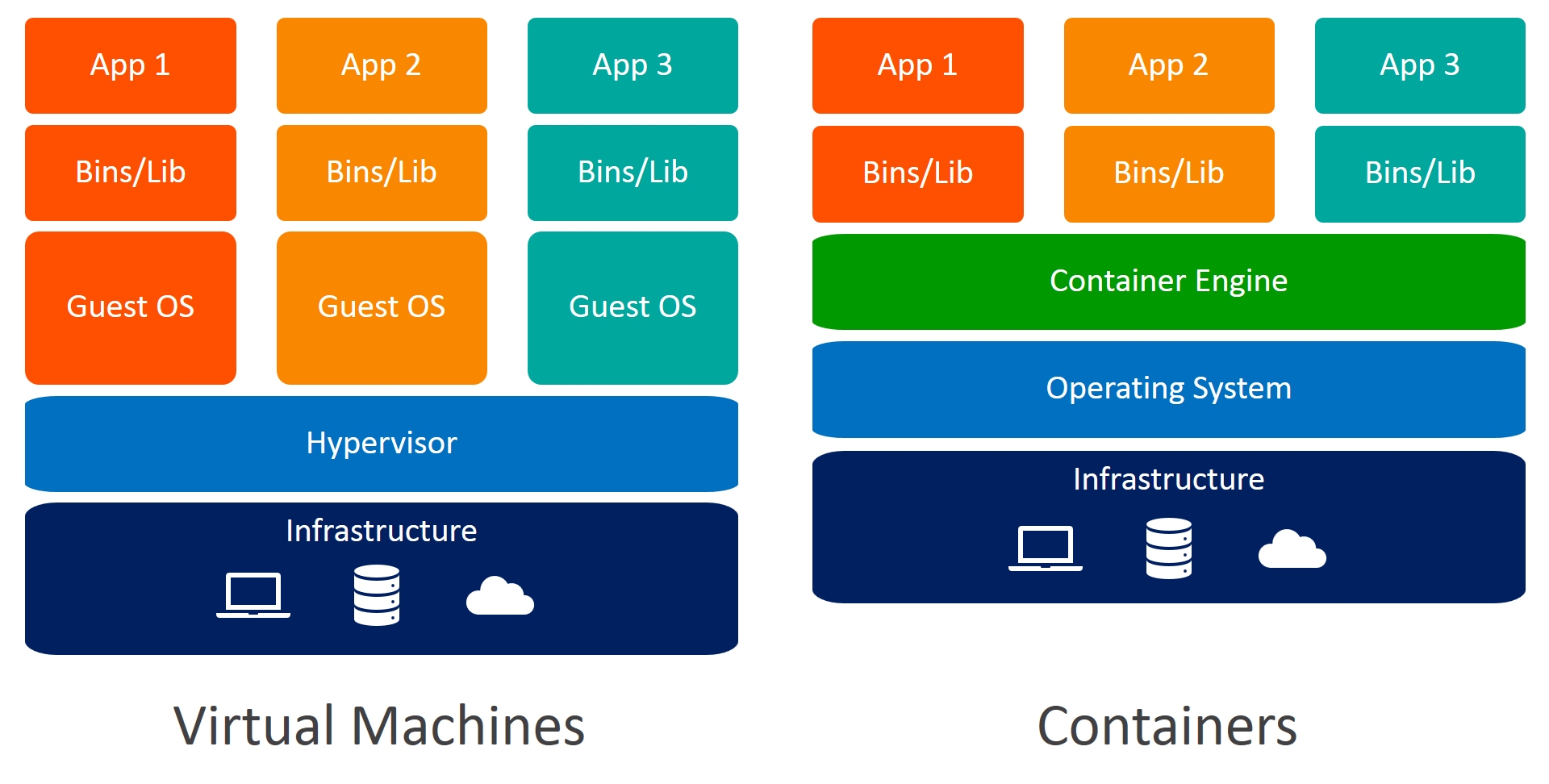

Containerization is a modern approach to virtualization that enables the creation of lightweight, portable, and self-contained environments for running applications and services. By packaging an application and its dependencies into a single container, developers can ensure consistent execution across different platforms and infrastructure. This approach significantly reduces the risk of compatibility issues, conflicts, and configuration errors.

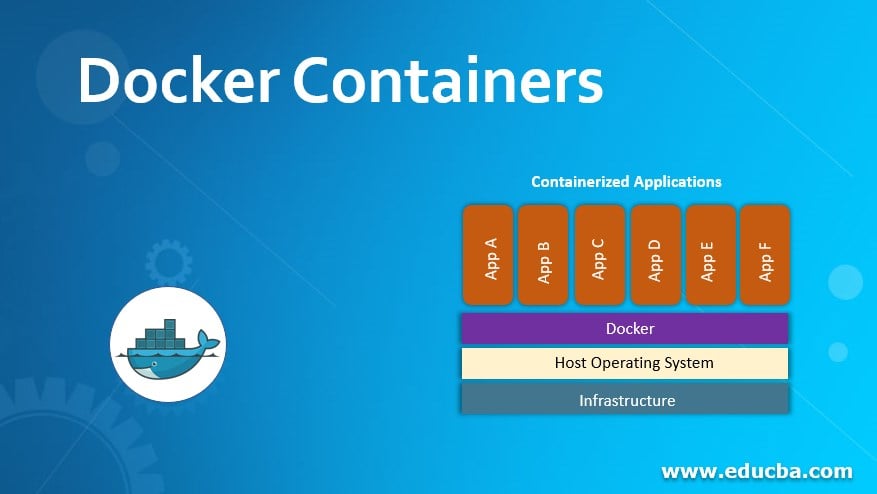

Docker is a leading containerization platform that has changed the way developers build, ship, and run applications. It has become a common choice for businesses and individuals seeking to improve resource efficiency, accelerate deployment times, and enhance application portability. With Docker, organizations can streamline their development workflows, reduce operational costs, and improve overall productivity.

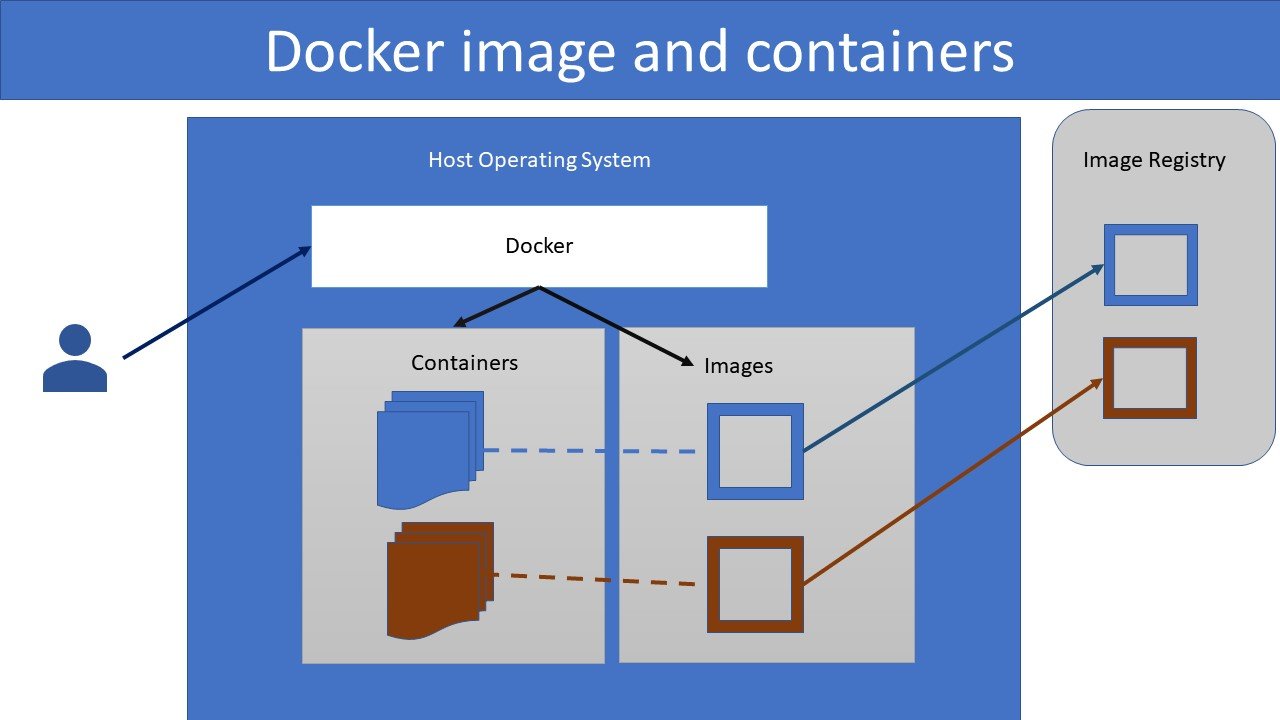

At the core of Docker’s containerization technology are several fundamental concepts and components. Docker images are lightweight, standalone, and executable packages that include an application and its dependencies. Containers are instances of Docker images that can be run, started, stopped, and managed as independent units. Registries, such as Docker Hub, serve as centralized repositories for sharing and distributing Docker images, enabling collaboration and reusability across teams and projects.

Orchestration tools such as Docker Swarm and Kubernetes manage and scale containerized applications in production environments, while Docker Compose defines and runs multi-container applications, most often on a single host. These tools automate deploying, networking, and monitoring containers, and the cluster orchestrators add load balancing, rescheduling of failed containers, and rolling updates. Automating these tasks reduces manual operational work and makes deployments more repeatable.

Docker: A Leading Containerization Platform

Docker is a widely adopted open-source containerization platform that has significantly changed how software is developed and delivered. It appeals to developers, businesses, and organizations that want to improve resource efficiency, accelerate deployment times, and enhance application portability. Docker simplifies the process of creating, deploying, and managing applications, letting developers focus on writing code and delivering value to their users.

At the heart of Docker’s success is its container technology, which packages an application and its dependencies into a lightweight, portable, self-contained unit. The same container runs consistently across different platforms and infrastructure, reducing the risk of compatibility issues, conflicts, and configuration errors. Containers also use resources efficiently: they share the host’s operating system kernel instead of each booting a full guest operating system, so they typically start faster and consume less memory than traditional virtual machines.

Docker’s extensive ecosystem and vibrant community contribute to its popularity and success. The platform offers a rich set of features, including a robust command-line interface, a comprehensive API, and native support for networking, storage, and security. Docker also integrates seamlessly with popular development tools, continuous integration and delivery (CI/CD) pipelines, and cloud platforms, making it a versatile and adaptable solution for modern software development.

Key Concepts and Components of Docker

To effectively utilize Docker for containerization, it’s essential to understand the fundamental concepts and components that form its core functionality. These elements provide a solid foundation for working with Docker and ensure that you can harness its full potential in various projects and use cases.

Docker Images

Docker images are lightweight, standalone, and executable packages that include an application and its dependencies. They serve as the basis for creating Docker containers and can be shared and distributed through registries, such as Docker Hub. Docker images are built using Dockerfiles, which are text documents containing instructions for creating an image.

Docker Containers

Docker containers are runnable instances of Docker images that can be started, stopped, and managed as independent units. Because containers share the host’s operating system kernel rather than each running a full guest operating system, they are usually lighter on resources than traditional virtual machines. They provide a consistent and isolated environment for running applications and services, ensuring predictable execution and minimizing compatibility issues.
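
The container lifecycle described above maps onto a handful of CLI commands. The following is a minimal sketch rather than a prescribed workflow: the container name `web`, host port 8080, and the `nginx:1.27` image are illustrative assumptions. The `DOCKER` variable defaults to `echo docker`, so the script only prints each command; set `DOCKER=docker` to run the commands against a real daemon.

```shell
# Dry run by default: print each command instead of executing it.
# Run with DOCKER=docker to execute against a real Docker daemon.
DOCKER="${DOCKER:-echo docker}"

IMAGE="nginx:1.27"   # assumed example image
NAME="web"           # assumed container name

# Fetch the image from a registry (Docker Hub by default).
$DOCKER pull "$IMAGE"
# Create and start a container, mapping host port 8080 to container port 80.
$DOCKER run --detach --name "$NAME" --publish 8080:80 "$IMAGE"
# List the container.
$DOCKER ps --filter "name=$NAME"
# Stop it, then start the same container again.
$DOCKER stop "$NAME"
$DOCKER start "$NAME"
# Remove the container (the image stays available locally).
$DOCKER rm --force "$NAME"
```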

Docker Registries

Docker registries are centralized repositories for sharing and distributing Docker images. They enable collaboration and reusability across teams and projects, streamlining the development workflow and accelerating the delivery of applications and services. Options include Docker Hub, the open-source Docker Registry (now maintained as the Distribution project) for self-hosting, and Docker Trusted Registry (since renamed Mirantis Secure Registry).

Docker Orchestration Tools

Orchestration tools such as Docker Swarm and Kubernetes manage and scale containerized applications across clusters in production environments, while Docker Compose defines and runs multi-container applications, most often on a single host. Together they automate deploying, networking, and monitoring containers, and the cluster orchestrators add load balancing, rescheduling of failed containers, and rolling updates. Automating these tasks reduces manual operational work and makes deployments more repeatable.

How to Create and Manage Docker Images

Docker images are the foundation of containerization, serving as the basis for creating and deploying applications and services using Docker. Understanding how to create, manage, and share Docker images is crucial for streamlining your development workflow and collaborating effectively with your team. This section covers best practices for working with Docker images, including writing Dockerfiles, versioning, and sharing images through registries.

Writing Dockerfiles

Dockerfiles are text documents containing instructions for building a Docker image. During a build, Docker executes these instructions in order. Instructions that change the filesystem (RUN, COPY, and ADD) each add a layer to the image, while the other instructions only record metadata. To write efficient and maintainable Dockerfiles, follow these best practices:

- Use a .dockerignore file to exclude unnecessary files and directories from the build context.

- Minimize the number of layers in your Dockerfile by combining instructions when possible.

- Use multi-stage builds to separate build-time and runtime dependencies, reducing the final image size.

- Leverage build arguments to parameterize your Dockerfile, making it more flexible and reusable.
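
The practices above can be sketched together in one small example. It assumes a hypothetical Go project (with `go.mod`, `go.sum`, and a `./cmd/app` package); the image names, the `GO_VERSION` argument, and the `app` user are illustrative choices, not requirements. The script only writes the two files into a scratch directory and does not invoke Docker.

```shell
# Work in a scratch directory so no existing files are overwritten.
workdir="$(mktemp -d)"
cd "$workdir" || exit 1

# Keep the build context small: these paths are never sent to the builder.
cat > .dockerignore <<'EOF'
.git
*.md
bin/
EOF

# Multi-stage Dockerfile for the assumed Go project.
cat > Dockerfile <<'EOF'
# Build argument: lets the caller choose the toolchain version.
ARG GO_VERSION=1.22

# Stage 1: compile with the full Go toolchain.
FROM golang:${GO_VERSION} AS build
WORKDIR /src
# Copy dependency manifests first so this layer stays cached until they change.
COPY go.mod go.sum ./
RUN go mod download
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app ./cmd/app

# Stage 2: ship only the compiled binary on a small base image.
FROM alpine:3.20
# Related setup steps combined into one RUN instruction (one layer).
RUN apk add --no-cache ca-certificates && adduser -D -u 10001 app
USER app
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
EOF

echo "Wrote $workdir/Dockerfile and $workdir/.dockerignore"
```

With the project sources in the same directory, an image could then be built with a command along the lines of `docker build --build-arg GO_VERSION=1.22 --tag myapp:1.4.2 .`, where `myapp:1.4.2` is again a placeholder name.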

Versioning Docker Images

Properly versioning your Docker images ensures that you can track changes, roll back to previous versions, and maintain a clear history of updates. Use a versioning strategy, such as semantic versioning, to manage your Docker image tags and maintain a consistent and organized versioning scheme.
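
One common convention, shown here only as a sketch, is to publish each release under its full semantic version plus moving `major.minor` and `major` tags. The image name `myapp`, the local tag `build`, and the version number are hypothetical. The script derives the tags with shell parameter expansion and prints the `docker tag` commands instead of running them.

```shell
IMAGE="myapp"      # hypothetical image name
VERSION="1.4.2"    # hypothetical release version (MAJOR.MINOR.PATCH)

# Strip the shortest ".suffix" to get MAJOR.MINOR, the longest to get MAJOR.
MAJOR_MINOR="${VERSION%.*}"
MAJOR="${VERSION%%.*}"

# Print one tag command per version alias.
for tag in "$VERSION" "$MAJOR_MINOR" "$MAJOR"; do
  echo docker tag "$IMAGE:build" "$IMAGE:$tag"
done
```

Consumers who need reproducible deployments can pin the full version, while those who want patch updates automatically can follow the shorter tags.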

Sharing Docker Images through Registries

Docker registries, such as Docker Hub, serve as centralized repositories for sharing and distributing Docker images. To share your Docker images with others, you can push them to a registry, making them accessible for others to pull and use in their projects. When sharing Docker images, ensure that you follow best practices for security and access control, such as using multi-factor authentication and limiting access to sensitive resources.
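
As a rough illustration of the push and pull flow, the sketch below uses a placeholder registry host (`registry.example.com`) and a hypothetical repository path and tag. It prints the commands by default; set `DOCKER=docker` to execute them against a real registry you have access to.

```shell
# Dry run by default: print each command instead of executing it.
DOCKER="${DOCKER:-echo docker}"

REGISTRY="registry.example.com"   # placeholder registry host
REPO="$REGISTRY/team/myapp"       # hypothetical repository path
TAG="1.4.2"                       # hypothetical version tag

# Authenticate against the registry.
$DOCKER login "$REGISTRY"
# Give the local image a name that includes the registry host.
$DOCKER tag "myapp:$TAG" "$REPO:$TAG"
# Upload the image so others can use it.
$DOCKER push "$REPO:$TAG"
# On another machine with access to the registry:
$DOCKER pull "$REPO:$TAG"
```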

Running and Managing Docker Containers

Docker containers provide a consistent and isolated environment for running applications and services. To make the most of Docker containers, it’s essential to understand how to run, manage, and monitor them effectively. This section covers key topics related to working with Docker containers, including networking, storage, resource allocation, and logging.

Networking

Docker containers can communicate with each other and the host system using various networking configurations. To manage container networking, Docker provides a built-in networking stack that supports multiple drivers, such as bridge, overlay, and macvlan. By understanding these networking options and how to configure them, you can ensure seamless communication between containers and the host system.
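
A minimal sketch of the bridge driver in use follows. The network name `app-net`, the container names, and the images are assumptions for illustration. Commands are printed rather than executed unless `DOCKER=docker` is set.

```shell
# Dry run by default: print each command instead of executing it.
DOCKER="${DOCKER:-echo docker}"

NETWORK="app-net"   # hypothetical network name

# Create a user-defined bridge network.
$DOCKER network create --driver bridge "$NETWORK"
# Containers on the same user-defined network can reach each other by name,
# so the web container could connect to the database at the host name "db".
$DOCKER run --detach --name db --network "$NETWORK" --env POSTGRES_PASSWORD=example postgres:16
# Publish container port 80 on host port 8080 so the host can reach it.
$DOCKER run --detach --name web --network "$NETWORK" --publish 8080:80 nginx:1.27
# Show the network configuration and its attached containers.
$DOCKER network inspect "$NETWORK"
```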

Storage

Data written to a container’s writable layer is lost when the container is removed, so data and configuration files that must persist need storage outside the container. Docker supports several storage options: volumes, which Docker manages; bind mounts, which map a host path into the container; and tmpfs mounts, which keep data in host memory only. Choosing the right option for each kind of data ensures that your containers can store and manage it reliably.
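
The three storage options can be compared side by side using the `--mount` flag. This is a sketch under assumptions: the volume name `app-data`, the host directory `site`, and the target paths are illustrative. Commands are printed by default; set `DOCKER=docker` to run them.

```shell
# Dry run by default: print each command instead of executing it.
DOCKER="${DOCKER:-echo docker}"

VOLUME="app-data"   # hypothetical volume name

# Volume: storage managed by Docker that outlives the container.
$DOCKER volume create "$VOLUME"
$DOCKER run --detach --name db \
  --env POSTGRES_PASSWORD=example \
  --mount "type=volume,source=$VOLUME,target=/var/lib/postgresql/data" \
  postgres:16

# Bind mount: expose a host directory (which must already exist), read-only here.
$DOCKER run --detach --name web \
  --mount "type=bind,source=$PWD/site,target=/usr/share/nginx/html,readonly" \
  nginx:1.27

# tmpfs mount: in-memory scratch space, discarded when the container stops
# (available on Linux hosts).
$DOCKER run --detach --name cache \
  --mount type=tmpfs,target=/tmp/scratch \
  nginx:1.27
```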

Resource Allocation

Docker containers share the host system’s resources, so it’s crucial to manage resource allocation effectively to ensure that containers have the necessary resources to run smoothly. Docker provides various options for managing resource allocation, such as CPU and memory limits, which can be configured using the `--cpus` and `--memory` flags when running a container.
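
For example, the following sketch caps a container at one and a half CPUs and 512 MB of memory. The limits, container name, and image are arbitrary illustrative values. Commands are printed by default; set `DOCKER=docker` to run them.

```shell
# Dry run by default: print each command instead of executing it.
DOCKER="${DOCKER:-echo docker}"

NAME="web"       # hypothetical container name
CPUS="1.5"       # at most one and a half CPUs
MEMORY="512m"    # hard memory limit of 512 MB

# Start the container with CPU and memory limits applied.
$DOCKER run --detach --name "$NAME" --cpus="$CPUS" --memory="$MEMORY" nginx:1.27
# Take a one-off snapshot of actual usage against those limits.
$DOCKER stats --no-stream "$NAME"
# Read back the configured limits (CPU in billionths of a CPU, memory in bytes).
$DOCKER inspect --format '{{.HostConfig.NanoCpus}} {{.HostConfig.Memory}}' "$NAME"
```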

Logging

Monitoring and analyzing container logs is essential for troubleshooting issues and understanding the behavior of your applications and services. By default, Docker uses the json-file logging driver, which writes each container’s output to JSON-formatted files on the host. Additionally, you can integrate Docker with external logging solutions, such as ELK Stack or Splunk, for more advanced logging and analysis capabilities.
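
A short sketch of configuring and reading logs with the json-file driver is shown below. The rotation values (three files of 10 MB each) and the container name are illustrative assumptions. Commands are printed by default; set `DOCKER=docker` to run them.

```shell
# Dry run by default: print each command instead of executing it.
DOCKER="${DOCKER:-echo docker}"

NAME="web"   # hypothetical container name

# Select the json-file driver explicitly and rotate logs so they cannot
# grow without bound.
$DOCKER run --detach --name "$NAME" \
  --log-driver json-file \
  --log-opt max-size=10m \
  --log-opt max-file=3 \
  nginx:1.27

# Show the last 50 log lines with timestamps.
$DOCKER logs --tail 50 --timestamps "$NAME"
# Confirm which logging driver the container uses.
$DOCKER inspect --format '{{.HostConfig.LogConfig.Type}}' "$NAME"
```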

Docker Orchestration Tools: Scaling and Managing Containerized Applications

As the use of containerization with Docker grows, managing and scaling containerized applications in production environments becomes increasingly important. Several tools address this need: Docker Compose and Docker Swarm come from Docker itself, while Kubernetes is an independent open-source project that can run images built with Docker. This section introduces these tools and discusses their role in deploying, managing, and scaling containerized applications.

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. It allows you to define a multi-container application in a YAML file, called a docker-compose.yml file, and then manage the application’s services using a single command. Docker Compose is particularly useful for developing and testing applications locally, as it simplifies the process of managing multiple containers and their dependencies.
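
To make this concrete, here is a minimal sketch of a two-service application. The service names, images, port mapping, and password are illustrative assumptions and are not suitable for production use. The script only writes the file into a scratch directory and does not invoke Docker.

```shell
# Work in a scratch directory so no existing files are overwritten.
workdir="$(mktemp -d)"
cd "$workdir" || exit 1

cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:1.27
    ports:
      - "8080:80"          # host port 8080 -> container port 80
    depends_on:
      - db                 # start the database container first
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example   # placeholder credential
    volumes:
      - db-data:/var/lib/postgresql/data

volumes:
  db-data:                 # named volume so database files persist
EOF

echo "Wrote $workdir/docker-compose.yml"
```

From that directory, `docker compose up --detach` would start both services, `docker compose ps` would list them, and `docker compose down` would stop and remove them.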

Docker Swarm

Docker Swarm is a native Docker orchestration tool for managing and scaling containerized applications across a cluster of Docker nodes. It provides features such as service discovery, load balancing, and rolling updates, making it an ideal choice for managing and scaling applications in production environments. With Docker Swarm, you can create a swarm, add nodes, and deploy services, all from the command line.
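
The command-line flow mentioned above might look like the following sketch. The service name, replica counts, ports, and image tags are assumptions for illustration. Commands are printed by default; set `DOCKER=docker` to run them on a host you intend to turn into a swarm manager.

```shell
# Dry run by default: print each command instead of executing it.
DOCKER="${DOCKER:-echo docker}"

SERVICE="web"   # hypothetical service name

# Turn this Docker host into a single-node swarm (it becomes a manager).
# The real command prints a "docker swarm join" command for adding nodes.
$DOCKER swarm init
# Run three replicas behind the swarm's built-in load balancer.
$DOCKER service create --name "$SERVICE" --replicas 3 \
  --publish published=8080,target=80 nginx:1.26
# Scale out to five replicas.
$DOCKER service scale "$SERVICE=5"
# Rolling update: replace tasks with the new image a few at a time.
$DOCKER service update --image nginx:1.27 "$SERVICE"
# Show where each task of the service is running.
$DOCKER service ps "$SERVICE"
```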

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides features such as self-healing, automatic scaling, and rolling updates, making it a popular choice for managing containerized applications in production environments. Kubernetes is more complex than Docker Swarm but offers greater flexibility and scalability, making it suitable for large-scale and complex applications.
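
For comparison, a minimal Kubernetes Deployment can be sketched as below. The file name `web-deployment.yaml`, the `app: web` label, the replica count, and the image are illustrative assumptions. The script only writes the manifest into a scratch directory and does not contact a cluster.

```shell
# Work in a scratch directory so no existing files are overwritten.
workdir="$(mktemp -d)"
cd "$workdir" || exit 1

cat > web-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3              # desired number of pods; failed pods are replaced
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27
          ports:
            - containerPort: 80
EOF

echo "Wrote $workdir/web-deployment.yaml"
```

Against a configured cluster, `kubectl apply -f web-deployment.yaml` would create the Deployment, `kubectl rollout status deployment/web` would report rollout progress, and `kubectl scale deployment/web --replicas=5` would change the replica count.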

By understanding and using these orchestration tools, you can effectively manage and scale containerized applications in production environments with high availability, reliability, and performance in mind.

Real-World Applications and Success Stories of Docker

Docker has become a standard tool for modern software development and deployment, offering benefits such as resource efficiency, portability, and faster deployment. Organizations across many industries have adopted it. This section highlights common real-world use cases that demonstrate its practical value.

Streamlining Development Workflows

Docker has helped many organizations streamline their development workflows by providing a consistent and isolated environment for building, testing, and deploying applications. By using Docker, development teams can reduce the time and effort required to set up development environments, ensuring that everyone is working with the same tools and configurations. This consistency leads to fewer compatibility issues and more efficient collaboration, ultimately resulting in faster development cycles and higher-quality software.

Containerizing Legacy Applications

Docker has also been used to containerize legacy applications, enabling organizations to modernize their IT infrastructure without the need for extensive re-engineering or refactoring. By containerizing legacy applications, organizations can take advantage of the benefits of containerization, such as resource efficiency and portability, while still maintaining their existing investments in legacy systems. This approach allows organizations to gradually migrate to newer technologies and platforms at their own pace, minimizing disruption and risk.

Microservices Architecture

Docker has played a crucial role in the adoption of microservices architecture, which involves breaking down monolithic applications into smaller, independently deployable services. By using Docker, organizations can package each microservice together with its dependencies and deploy it on its own. Additionally, orchestration tools such as Docker Swarm and Kubernetes simplify the process of managing and scaling microservices in production environments, helping to maintain high availability, reliability, and performance.

These are just a few examples of the real-world applications and success stories of Docker. By adopting Docker and its ecosystem, organizations can achieve significant benefits, such as faster development cycles, reduced operational costs, and improved application reliability and performance.

Staying Updated with Docker: Best Practices and Resources

Staying updated with the latest developments, best practices, and features of Docker is essential for developers and businesses that want to maximize the benefits of containerization. By following official resources, engaging with the community, and continuously learning and experimenting with new features and updates, you can ensure that you’re making the most of Docker in your projects and workflows. This section provides best practices and resources for staying updated with Docker.

Official Docker Resources

Docker provides several official resources for staying updated with the latest developments and best practices. These resources include:

- Docker Blog: The Docker blog features articles, tutorials, and announcements related to Docker and containerization. By following the Docker blog, you can stay up-to-date with the latest developments and best practices.

- Docker Documentation: The Docker documentation provides comprehensive guides, tutorials, and reference materials for Docker and its ecosystem. By consulting the documentation, you can find answers to your questions and learn how to use Docker effectively.

- Docker Resources: The Docker resources page features a variety of materials, such as whitepapers, case studies, and webinars, that provide insights and best practices for using Docker in various scenarios.

Engaging with the Docker Community

Engaging with the Docker community is an excellent way to stay updated with the latest developments and best practices. The Docker community includes forums, social media channels, and user groups, where you can connect with other Docker users, ask questions, and share your experiences. By participating in the Docker community, you can learn from others, gain new insights, and contribute to the growth and development of the Docker ecosystem.

Continuous Learning and Experimentation

Continuous learning and experimentation are essential for staying updated with Docker and containerization. By regularly trying out new features, experimenting with new use cases, and learning new skills, you can ensure that you’re making the most of Docker in your projects and workflows. Additionally, by sharing your experiences and insights with others, you can contribute to the growth and development of the Docker community and ecosystem.

By following these best practices and resources, you can stay updated with Docker and containerization, ensuring that you’re making the most of this powerful technology in your projects and workflows.