Unraveling Docker Compose: A Powerful Tool for Container Orchestration

Docker Compose is an essential tool for managing multi-container Docker applications. It simplifies the development and deployment processes by allowing developers to define and run applications using a YAML file. This file, known as the “docker-compose.yml” file, contains all the necessary configurations for services, networks, and volumes. Using Docker Compose, you can easily manage the services that make up your application, ensuring smooth communication and interaction between them.

The primary benefit of using Docker Compose is its ability to streamline the management of complex Docker applications. Instead of manually executing various Docker commands to start, stop, or rebuild services, Docker Compose lets you handle these tasks with a single command. This not only saves time but also reduces the chances of human error during the development and deployment process.

Moreover, Docker Compose promotes consistency between development and production environments. By defining the application’s environment in a YAML file, you can ensure that the same configurations are used across different stages of the software development lifecycle. This consistency helps in identifying and resolving issues early on, ultimately leading to a more stable and reliable application.

Getting Started: Installing Docker Compose on Your System

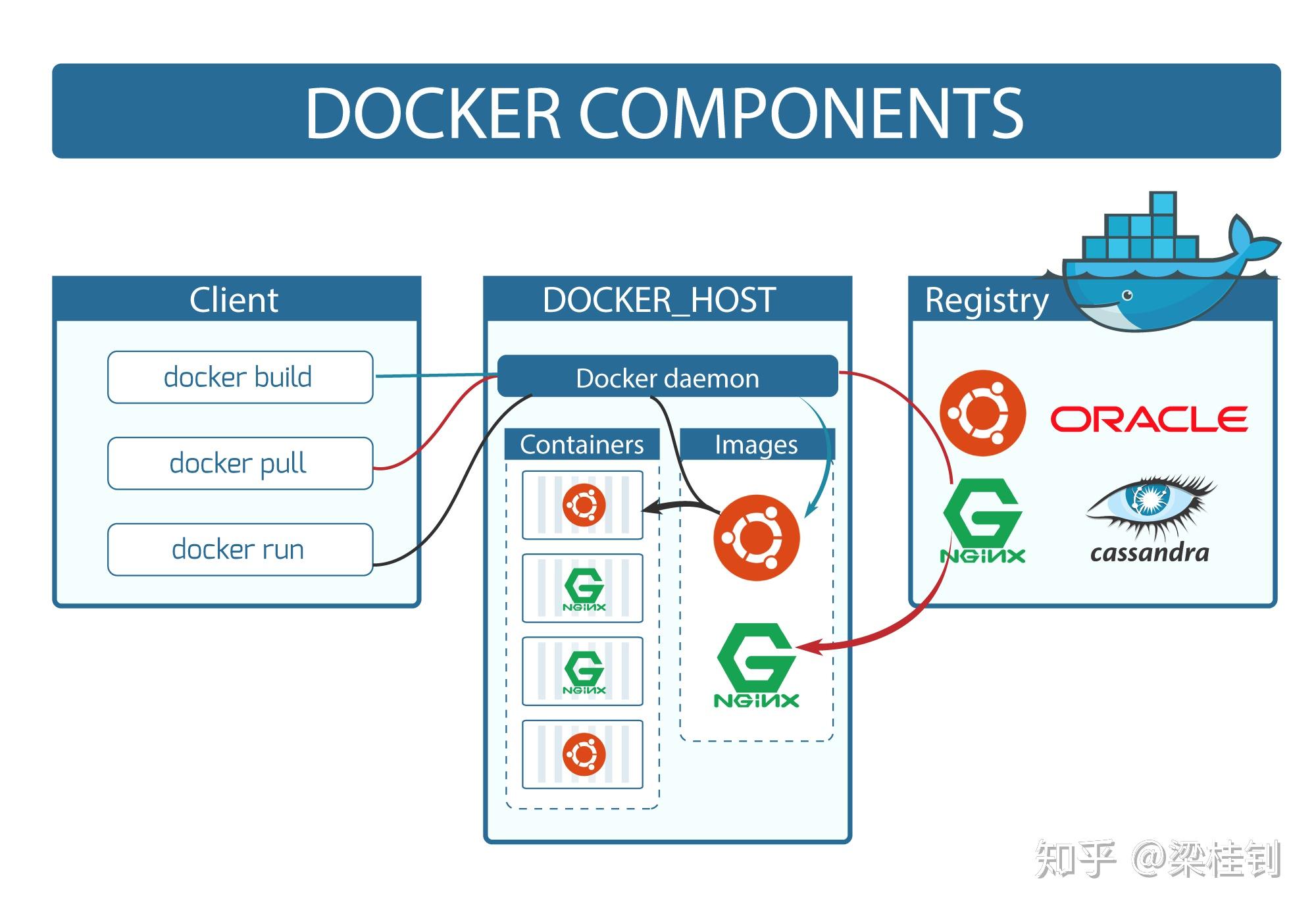

Before diving into the world of Docker Compose, you need to ensure that Docker is installed on your system. Docker Compose is a companion tool to Docker, simplifying the management of multi-container applications. Once Docker is set up, follow these steps to install Docker Compose on your preferred operating system:

Installing Docker Compose on Linux

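On Linux, one option is to download the standalone Docker Compose binary from the project’s GitHub releases page and make it executable. Many distributions also offer Compose through their package manager. For example, on Ubuntu, you can run the following commands:

    sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    sudo chmod +x /usr/local/bin/docker-compose

Replace “1.29.2” with the version you want from https://github.com/docker/compose/releases. Note that 1.29.2 belongs to the older 1.x series. Releases from 2.0 onward use a “v” prefix in the tag and lowercase asset names, so copy the exact download URL from the releases page rather than changing only the version number.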

Installing Docker Compose on macOS

For macOS users, Docker Desktop, which includes Docker Engine, Docker Compose, and other Docker tools, is the recommended way to install Docker. Download Docker Desktop from the official Docker website and follow the installation instructions.

Installing Docker Compose on Windows

Similar to macOS, Docker Desktop for Windows is the recommended way to install Docker on Windows systems. Download Docker Desktop from the official Docker website and follow the installation instructions. Docker Desktop for Windows includes Docker Compose and other Docker tools.

After installing Docker Compose on your system, you can verify the installation by running the following command:

    docker-compose --version

This command displays the installed Docker Compose version, confirming that you have the necessary tools to manage multi-container Docker applications.

A Taste of Docker Compose: A Basic Example to Whet Your Appetite

To demonstrate the power and simplicity of using Docker Compose, let’s walk through a basic example. This example will define a simple multi-container application, consisting of a web server and a database server. The “docker-compose.yml” file for this application will look like this:

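    version: '3'
    services:
      web:
        build: .
        ports:
          - "5000:5000"
        depends_on:
          - db
      db:
        image: postgres
        # The official image also needs POSTGRES_PASSWORD to start;
        # see the Environment Variables section.
        volumes:
          - db_data:/var/lib/postgresql/data
    volumes:
      db_data:

Here’s a breakdown of each section: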

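- version: This specifies the Docker Compose file format version. In this example, we use version ‘3’. Newer Compose releases that follow the Compose Specification treat this field as optional and ignore it.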

- services: This section contains the configuration for each service in the application. In this case, we have two services: “web” and “db”.

- web: This service represents the web server. It is built from the Dockerfile in the current directory (“.”) and publishes container port 5000 on port 5000 of the host. It also depends on the “db” service, so Compose starts the database container before the web container. Note that this controls start order only: Compose does not wait for the database to be ready to accept connections unless you add a health check.

- db: This service represents the database server. It uses the official PostgreSQL image and mounts a volume for persistent data storage.

- volumes: This section defines a named volume, “db\_data”, which is used by the “db” service to store data persistently.

By using this “docker-compose.yml” file, you can easily manage the web server and database server containers as a single unit, making it simple to start, stop, and rebuild the entire application with a single command.

Diving Deeper: Core Concepts and Features of Docker Compose

Docker Compose offers several powerful features that simplify the management of multi-container Docker applications. In this section, we’ll discuss three essential concepts: service dependencies, environment variables, and scaling.

Service Dependencies

Service dependencies allow you to define the order in which services start and stop. By specifying dependencies, you ensure that a service’s dependencies are started before the service itself, and stopped after it. In the previous example, we defined a dependency between the “web” and “db” services, so the database container is started before the web server. By default this guarantees start order, not readiness: the database process may still be initializing when the web container starts.
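As a minimal sketch of a readiness-aware dependency, the fragment below combines the long form of “depends_on” with a health check on the database. It assumes a Compose release that accepts the “condition” key (Compose 1.27 or later, or Compose V2). The “pg_isready” probe ships with the official postgres image, and the timing values are illustrative rather than recommended.

```yaml
services:
  web:
    build: .
    depends_on:
      db:
        # Wait until the db health check passes, not just until the container starts.
        condition: service_healthy
  db:
    image: postgres
    environment:
      - POSTGRES_PASSWORD=mysecretpassword
    healthcheck:
      # pg_isready exits 0 once PostgreSQL accepts connections.
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 5s
      timeout: 3s
      retries: 10
```

With this in place, “docker-compose up” should hold back the web container until the database reports healthy.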

Environment Variables

Environment variables enable you to customize service configurations without modifying the service code or Dockerfile. You can define environment variables directly in the “docker-compose.yml” file, load them from a file with the “env_file” option, or pass them for a one-off command with “docker-compose run -e”. The following fragment sets a custom database user, password, and name for the PostgreSQL service:

    services:
      db:
        image: postgres
        environment:
          - POSTGRES_USER=myuser
          - POSTGRES_PASSWORD=mysecretpassword
          - POSTGRES_DB=mydb

Scaling

Scaling is the process of increasing or decreasing the number of instances of a service. With Docker Compose, you can scale services up or down using the “up” command with the “--scale” flag. For instance, to scale the “web” service to three instances, you can run:

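    docker-compose up --scale web=3

Scaling services is particularly useful in load-balancing scenarios, where you want to distribute incoming traffic across multiple instances of a service. Docker Compose does not ship a full load balancer. Inside the Compose network, Docker’s embedded DNS resolves the service name to the containers of that service, and for traffic from outside you typically place a reverse proxy such as NGINX or HAProxy in front of the instances. Also note that a service with a fixed host port mapping such as “5000:5000” cannot be scaled beyond one instance, because only one container can bind a given host port.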
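One way to make the “web” service scalable is sketched below: the service exposes its port only inside the Compose network, and a proxy container publishes a single host port. The “proxy” service name, the host port 8080, and the “nginx.conf” file are assumptions made for illustration. The config file is hypothetical and would need to forward requests to http://web:5000.

```yaml
services:
  web:
    build: .
    # Container-only port: no fixed host port, so several replicas can coexist.
    expose:
      - "5000"
  proxy:
    image: nginx
    ports:
      - "8080:80"
    volumes:
      # Hypothetical config file that proxies requests to http://web:5000.
      - ./nginx.conf:/etc/nginx/conf.d/default.conf:ro
    depends_on:
      - web
```

With this layout, “docker-compose up --scale web=3” starts three web containers behind one published port.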

By mastering these core concepts and features, you can effectively manage complex Docker applications using Docker Compose, improving the development and deployment process for your projects.

How to Leverage Docker Compose for Local Development

Using Docker Compose for local development offers several benefits, including consistent environments, easier setup, and more efficient resource management. Here are some best practices for integrating Docker Compose into your local development workflow:

Setting Up Databases

Docker Compose simplifies the process of setting up databases for local development. Instead of manually installing and configuring a database server, you can define the database service in your “docker-compose.yml” file. If you pin the same database image and version that production runs, your development environment stays close to production, reducing the risk of compatibility issues.

Caching

Volumes help keep local environments fast to rebuild. A bind mount of your source directory makes code changes visible inside the container without rebuilding the image, and a named volume keeps database contents across container restarts. Because named volumes survive “docker-compose down” unless you pass “-v”, you can tear down and recreate a development environment without losing valuable data.
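A minimal sketch of both kinds of mount follows. The host path “./src” and the container path “/app/src” are hypothetical and depend on how your image lays out the application.

```yaml
services:
  web:
    build: .
    volumes:
      # Bind mount: edits on the host appear in the container immediately.
      - ./src:/app/src
  db:
    image: postgres
    volumes:
      # Named volume: survives "docker-compose down" unless -v is passed.
      - db_data:/var/lib/postgresql/data
volumes:
  db_data:
```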

Load Balancing

Docker Compose can also help you rehearse load balancing locally. By running several instances of a service with the “--scale” flag and placing a reverse proxy such as NGINX in front of them, you can distribute incoming traffic across the instances and test how your application behaves when scaled.

Consistent Development and Production Environments

By using Docker Compose for local development, you can ensure that your development environment closely mirrors the production environment. This consistency simplifies the deployment process and reduces the likelihood of unexpected issues arising in production.

Incorporating Docker Compose into your local development workflow can significantly improve the efficiency and reliability of your development process. By following these best practices, you can harness the full potential of Docker Compose for local development.

Optimizing Docker Compose for Production Deployments

Using Docker Compose in production environments can help streamline the deployment process and ensure consistent configurations across different stages. However, there are some considerations and best practices to keep in mind when using Docker Compose in production:

Container Linking

Container linking is the process of connecting containers so that they can communicate with each other. Docker Compose still accepts the “links” option, but it is a legacy feature and is rarely needed: by default, Compose places all services of a project on a shared network where each container can reach the others by service name. This is what lets a web application connect to its database server using a hostname such as “db”.
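The sketch below shows service-name addressing on the default network, with no “links” entry. “DATABASE_URL” is a hypothetical variable name that your application would have to read. The credentials are the placeholder values used earlier in this article.

```yaml
services:
  web:
    build: .
    environment:
      # "db" resolves to the database container on the shared network.
      - DATABASE_URL=postgres://myuser:mysecretpassword@db:5432/mydb
  db:
    image: postgres
    environment:
      - POSTGRES_USER=myuser
      - POSTGRES_PASSWORD=mysecretpassword
      - POSTGRES_DB=mydb
```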

Health Checks

Health checks are essential for monitoring the status and availability of your services in production. Docker Compose lets you define a health check for each service in the “docker-compose.yml” file, and Docker reports the container as healthy or unhealthy based on the result. Note that Compose does not replace an unhealthy container by itself: a restart policy reacts only when the container exits, so combine health checks with monitoring or an orchestrator if you need automatic recovery.
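A health check for the web service might look like the following sketch. It assumes that “curl” is installed in the image and that the application serves a “/health” endpoint on port 5000; both are illustrative assumptions, as are the timing values. The “start_period” key needs a file format or Compose release recent enough to support it.

```yaml
services:
  web:
    build: .
    # Restart the container if its main process exits with an error.
    restart: on-failure
    healthcheck:
      # Assumes curl is installed in the image and the app serves /health.
      test: ["CMD", "curl", "-f", "http://localhost:5000/health"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 10s
```

The resulting health status appears in the output of “docker ps” and “docker inspect”.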

Rolling Updates

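Rolling updates are a strategy for updating services without causing downtime. Docker Compose on its own does not provide rolling updates: “docker-compose up -d” recreates any container whose configuration or image has changed, which briefly interrupts that service. You can limit the impact by updating one service at a time with “docker-compose up -d --no-deps <service>”. Note that the “--no-recreate” flag does the opposite of an update, since it leaves existing containers untouched. For true rolling updates, deploy the same file to an orchestrator such as Docker Swarm, which replaces containers in batches.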
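Where rolling updates are required, one option is Docker Swarm, which reads the “deploy” section of a Compose file when it is deployed with “docker stack deploy”. The fragment below is a sketch under that assumption. The image name is hypothetical, and because Swarm does not build images, the service must reference a pre-built one. The “order” key needs file format 3.4 or later.

```yaml
version: "3.8"
services:
  web:
    image: registry.example.com/myapp:1.2.0  # hypothetical image name
    deploy:
      replicas: 3
      update_config:
        parallelism: 1      # replace one task at a time
        delay: 10s          # pause between batches
        order: start-first  # start the new task before stopping the old one
```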

Security Concerns

Security is a critical consideration when deploying applications in production. To enhance security when using Docker Compose, consider the following best practices:

- Limit container network access using firewall rules or network policies.

- Keep sensitive information out of the Compose file itself. Supply credentials through secrets or through environment variables injected at deploy time, rather than hard-coding them.

- Regularly update your Docker Compose files and Docker images to incorporate the latest security patches and updates.
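As a sketch of the secrets approach, the fragment below mounts a file-based secret into the database container. The file “./db_password.txt” is a hypothetical local file. The official postgres image reads the password from the path named in “POSTGRES_PASSWORD_FILE”.

```yaml
services:
  db:
    image: postgres
    environment:
      # The official postgres image reads the password from this file.
      - POSTGRES_PASSWORD_FILE=/run/secrets/db_password
    secrets:
      - db_password
secrets:
  db_password:
    # Hypothetical local file; keep it out of version control.
    file: ./db_password.txt
```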

Performance Optimizations

Performance is another important factor to consider in production environments. To optimize the performance of your Docker Compose deployments, consider the following best practices:

- Use multi-stage builds to minimize the size of your Docker images.

- Configure resource limits, such as CPU and memory, to prevent resource contention and ensure predictable performance.

- Leverage caching techniques, such as volume mounts, to improve the performance of your services.
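Resource limits can be sketched as follows; the numbers are placeholders rather than tuned values. How the “deploy” section is applied depends on the tool. Swarm honors it, Compose V2 is expected to apply these limits directly, and docker-compose 1.x generally needs the “--compatibility” flag.

```yaml
services:
  web:
    build: .
    deploy:
      resources:
        limits:
          cpus: "0.50"   # at most half of one CPU core
          memory: 256M   # hard memory cap
```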

By following these best practices, you can effectively use Docker Compose in production environments, ensuring consistent configurations, streamlined deployments, and optimized performance.

Troubleshooting Common Docker Compose Issues: A Practical Guide

Using Docker Compose in your development and deployment workflows can significantly improve the efficiency and consistency of your projects. However, you may encounter issues when working with Docker Compose. In this guide, we’ll discuss common Docker Compose pitfalls and offer solutions to help you debug and resolve problems.

Handling Volume Mounts

Volume mounts are a powerful feature of Docker Compose, allowing you to share data between the host system and containers. However, incorrect volume mount configurations can lead to issues. To avoid problems, ensure that you specify the correct mount path and access permissions for your volumes. Additionally, consider using named volumes to simplify volume management.

Network Configurations

Docker Compose enables you to define custom networks for your services, giving you control over which containers can communicate with each other. However, misconfigured networks can result in connectivity issues. When defining networks, make sure to use the correct network driver, choose subnets that do not overlap with networks already in use on the host, and check your port mappings. Containers on the same network can reach each other by service name, so prefer service names over hard-coded IP addresses or legacy links.
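A custom network with an explicit driver and subnet might be declared as in this sketch. The network name “backend” and the subnet are illustrative assumptions.

```yaml
services:
  web:
    build: .
    networks:
      - backend
  db:
    image: postgres
    networks:
      - backend
networks:
  backend:
    driver: bridge
    ipam:
      config:
        # Example subnet; pick a range that does not clash with your LAN or VPN.
        - subnet: 172.28.0.0/16
```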

Service Discovery

Service discovery is the process of identifying and locating services within a network. Docker Compose provides built-in service discovery through Docker’s embedded DNS server, which resolves each service name to the containers of that service on the same network. If a service name fails to resolve, check that both services are attached to the same network and that the target container is actually running. Additionally, consider using third-party service discovery tools, such as Consul or Etcd, for more advanced use cases.

Debugging and Resolving Issues

When troubleshooting Docker Compose issues, consider the following best practices:

- Check the logs for error messages and relevant information using the “docker-compose logs” command.

- Inspect the status of your services using the “docker-compose ps” command.

- Examine the configuration of your Docker Compose files, looking for misconfigurations or typos. The “docker-compose config” command validates the file and prints the resolved configuration.

- Consider using the “docker-compose events” command to monitor the lifecycle of your services in real time.

By understanding these common Docker Compose pitfalls and best practices for debugging and resolving issues, you can ensure a smooth and efficient development and deployment experience.

Integrating Docker Compose with Continuous Integration and Continuous Deployment (CI/CD) Workflows

Using Docker Compose in your CI/CD pipelines can help automate the build, test, and deployment processes, ensuring consistent and reliable deployments across different environments. In this guide, we’ll discuss popular CI/CD tools and their compatibility with Docker Compose.

GitHub Actions

GitHub Actions is a powerful CI/CD tool integrated directly into the GitHub platform. With GitHub Actions, you can create custom workflows for your Docker Compose projects, automating tasks such as building, testing, and deploying your applications. To use GitHub Actions with Docker Compose, simply create a new workflow file in your repository’s “.github/workflows” directory and define the necessary steps using the “docker-compose” command.
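A workflow along these lines is sketched below. The workflow name, the job name, and the “pytest” command inside the “web” service are hypothetical. The sketch assumes the runner provides Compose as “docker compose”. On a machine with the standalone binary, substitute “docker-compose”.

```yaml
name: compose-ci
on: [push]
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Build and start services
        run: docker compose up -d --build
      - name: Run tests
        # Hypothetical test command executed inside the web service.
        run: docker compose exec -T web pytest
      - name: Tear down
        if: always()
        run: docker compose down -v
```

The “-T” flag disables pseudo-TTY allocation, which is usually what you want in a non-interactive CI job.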

Jenkins

Jenkins is a widely used open-source CI/CD tool that can work with Docker Compose either through Compose-related plugins or by calling the “docker-compose” command from a shell build step on an agent that has Docker installed. Either way, you can automate the build, test, and deployment processes for your Docker Compose projects. Additionally, you can leverage Jenkins pipelines to create custom workflows tailored to your specific requirements.

CircleCI

CircleCI is a cloud-based CI/CD platform that can run Docker Compose in its jobs, typically on the machine executor, or on the Docker executor together with the “setup_remote_docker” step. With CircleCI, you configure your build, test, and deployment processes in a “.circleci/config.yml” file. By leveraging Docker Compose within CircleCI, you can run the same service definitions in CI that you use in development.
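As a rough sketch, a CircleCI configuration using the machine executor could look like this. The machine image tag, the job name, and the “pytest” command inside the “web” service are assumptions. Check CircleCI’s current list of machine images before relying on the tag.

```yaml
version: 2.1
jobs:
  test:
    machine:
      image: ubuntu-2204:current
    steps:
      - checkout
      - run:
          name: Build and start services
          command: docker compose up -d --build
      - run:
          name: Run tests
          # Hypothetical test command executed inside the web service.
          command: docker compose exec -T web pytest
      - run:
          name: Tear down
          command: docker compose down -v
          when: always
workflows:
  main:
    jobs:
      - test
```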

Tips for Integrating Docker Compose with CI/CD Workflows

When integrating Docker Compose with your CI/CD pipelines, consider the following best practices:

- Ensure that your Docker Compose files are version-controlled and stored in a central repository.

- Use multi-stage builds to minimize the size of your Docker images and improve build times.

- Leverage caching techniques, such as volume mounts, to speed up build and test processes.

- Configure environment variables and secrets to secure sensitive information, such as API keys and database credentials.

- Monitor your CI/CD pipelines using tools like Prometheus, Grafana, or ELK Stack to identify and resolve issues quickly.

By integrating Docker Compose into your CI/CD workflows, you can automate the build, test, and deployment processes, ensuring consistent and reliable deployments across different environments.