An Overview of SQL Server Integration Services (SSIS)

SQL Server Integration Services (SSIS) is the data integration and ETL (Extract, Transform, Load) tool provided by Microsoft as part of the SQL Server suite. SSIS enables data professionals to automate the process of extracting data from various sources, transforming it into the desired format, and loading it into a target destination. For organizations that rely on data-driven decision-making, it simplifies the management and manipulation of large volumes of data.

Understanding SSIS is crucial for data professionals, developers, and interview candidates working with SQL Server or other data platforms. Familiarity with SSIS architecture, components, and best practices demonstrates a candidate’s expertise in data integration and ETL processes, making them a valuable asset to any team. This article compiles a list of top SQL Server Integration Services interview questions, providing in-depth answers to help candidates prepare for interviews and showcase their knowledge effectively.

Top SQL Server Integration Services Interview Questions

The following section covers essential SQL Server Integration Services (SSIS) interview questions, designed to test your understanding of the tool. These questions touch upon various aspects of SSIS, including its architecture, components, best practices, data transformation techniques, and troubleshooting methods. Familiarizing yourself with these questions and their answers will help you feel confident and well-prepared for any SSIS-related interview.

1. What is SQL Server Integration Services (SSIS), and How Does It Work?

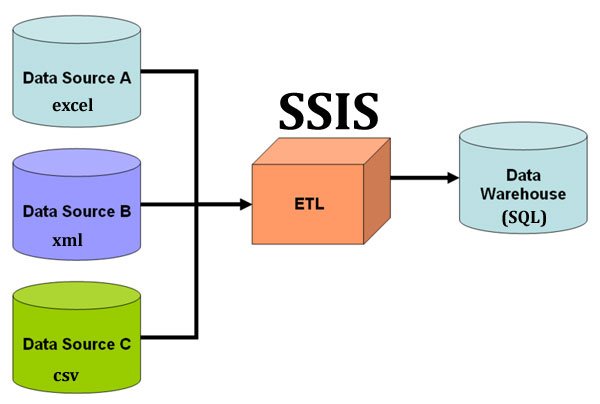

SQL Server Integration Services (SSIS) is a data integration and ETL (Extract, Transform, Load) tool. A package built in SSIS extracts data from one or more sources, transforms it into the desired format, and loads it into a target destination, and it can be scheduled so the whole process runs without manual intervention. SSIS supports a wide range of data sources, including flat files (such as CSV), Excel workbooks, XML, and databases such as SQL Server, Oracle, and MySQL. This versatility makes SSIS well suited to managing and manipulating large volumes of data in diverse environments.

SSIS plays a critical role in data management, providing a centralized platform for handling data integration and ETL processes. Its primary functions include:

- Data integration: Combining data from multiple sources into a single, unified view.

- ETL processes: Extracting data from various sources, transforming it into the desired format, and loading it into a target destination.

- Data transformation: Converting data into a format suitable for analysis, reporting, or further processing.

- Data cleansing: Validating, correcting, and enhancing data quality by removing duplicates, filling in missing values, and standardizing formats.

- Workflow automation: Automating repetitive data-related tasks, reducing manual intervention, and minimizing the risk of errors.
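The extract, transform, load sequence described above can be illustrated with a small Python sketch. This is not SSIS code (SSIS packages are built in a visual designer); the sample CSV, column names, and the `sales` table are assumptions invented for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in SSIS this would come from a source component.
RAW_CSV = "id,name,amount\n1, alice ,10.50\n2,BOB,3\n"

def extract(text):
    """Extract: read rows from CSV text as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and title-case names, convert strings to numbers."""
    return [
        (int(r["id"]), r["name"].strip().title(), float(r["amount"]))
        for r in rows
    ]

def load(rows, conn):
    """Load: write the transformed rows into the destination table."""
    conn.execute("CREATE TABLE sales (id INTEGER, name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT id, name, amount FROM sales ORDER BY id").fetchall()
```

Keeping the three stages as separate functions mirrors how an SSIS data flow separates sources, transformations, and destinations.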

Understanding SSIS and its capabilities is essential for data professionals and interview candidates, as it demonstrates expertise in data integration and ETL processes, which are vital for modern data-driven organizations.

2. Can You Explain the SSIS Architecture and Its Main Components?

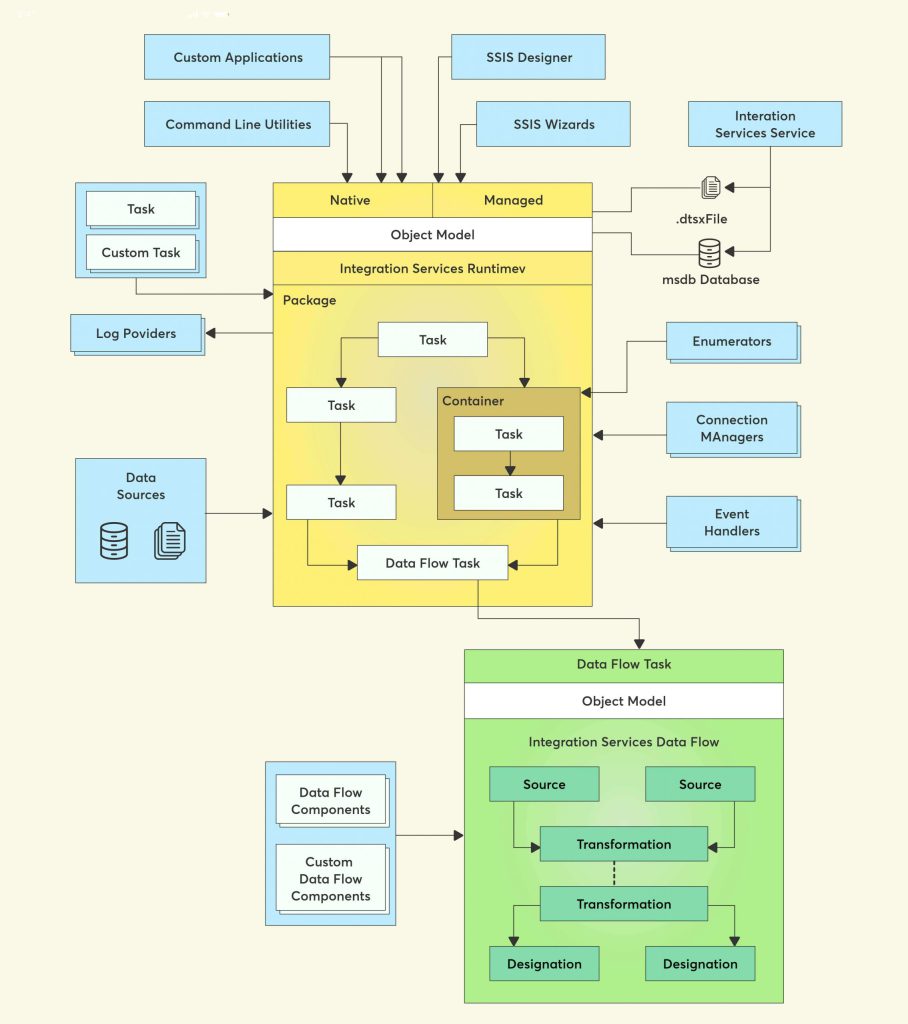

SSIS architecture consists of several main components that work together to enable seamless data integration and ETL processes. Understanding these components and their interactions is crucial for effectively designing, implementing, and managing SSIS packages.

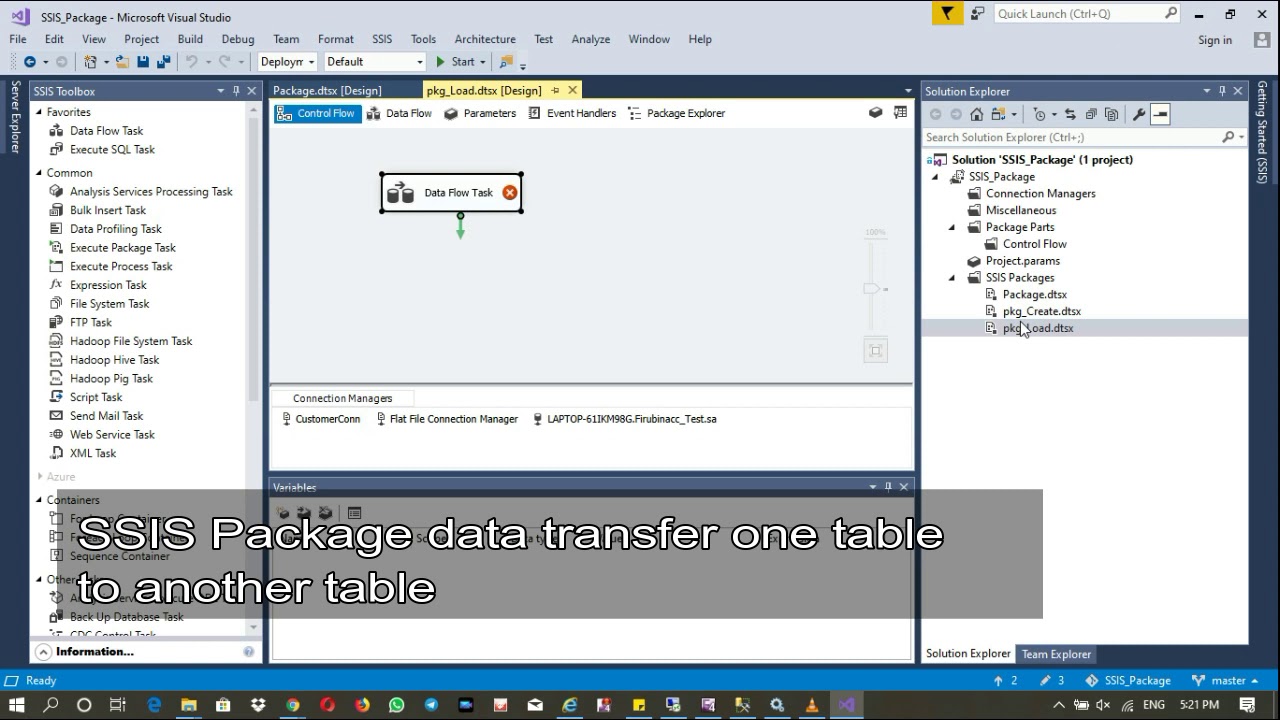

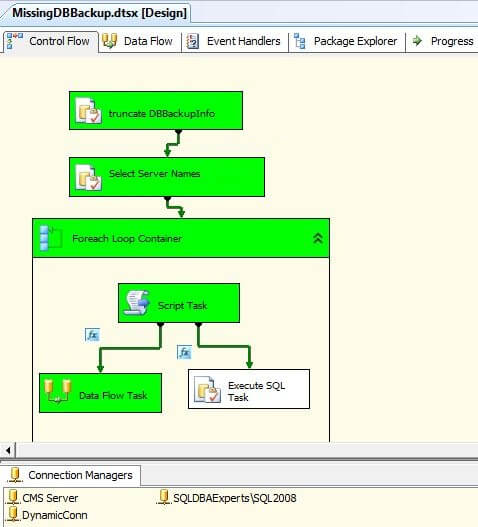

Control Flow

The control flow is the primary orchestration component in SSIS, responsible for managing the sequence of tasks and containers within a package. It includes tasks such as the Data Flow Task, Script Task, and Execute SQL Task, which are connected with precedence constraints that define the execution order and the conditions (success, failure, or completion) under which the next task runs.
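To make the idea of precedence constraints concrete, here is a minimal Python sketch of a runner that only starts a task when the outcome of its upstream task matches the constraint. The function `run_package`, the task names, and the simulated failure are all hypothetical; real SSIS evaluates constraints inside its own runtime.

```python
def run_package(tasks, constraints):
    """Run tasks in list order, honoring precedence constraints.

    tasks: list of (name, callable) pairs; the callable returns True on success.
    constraints: dict mapping task name -> (upstream name, required outcome),
    where the required outcome is "success", "failure", or "completion".
    """
    outcomes = {}
    for name, action in tasks:
        if name in constraints:
            upstream, required = constraints[name]
            actual = outcomes.get(upstream)
            if actual is None:
                continue  # upstream never ran, so this task is skipped
            if required != "completion" and actual != required:
                continue  # constraint not satisfied
        try:
            outcomes[name] = "success" if action() else "failure"
        except Exception:
            outcomes[name] = "failure"
    return outcomes

tasks = [
    ("truncate_staging", lambda: True),
    ("load_staging", lambda: False),      # simulated failure
    ("merge_to_target", lambda: True),    # skipped: needs load_staging success
    ("send_failure_mail", lambda: True),  # runs only when load_staging fails
]
constraints = {
    "load_staging": ("truncate_staging", "success"),
    "merge_to_target": ("load_staging", "success"),
    "send_failure_mail": ("load_staging", "failure"),
}
result = run_package(tasks, constraints)
```

In this run, `merge_to_target` never executes because its success constraint is not met, while the failure branch does.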

Data Flow

The data flow component is responsible for handling the actual data movement and transformation within an SSIS package. It includes data sources, data destinations, and transformations such as Derived Column, Merge Join, and Conditional Split, which can be combined to create complex data transformation pipelines.
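A data flow pipeline of this kind can be sketched in Python with generators, where each stage consumes rows from the previous one. The stage names echo the SSIS transformations only loosely, and the sample rows and the 100.0 threshold are assumptions for illustration.

```python
def source():
    """Stand-in for a source component; yields rows as dictionaries."""
    yield {"order_id": 1, "qty": 2, "unit_price": 5.0}
    yield {"order_id": 2, "qty": 10, "unit_price": 20.0}
    yield {"order_id": 3, "qty": 1, "unit_price": 99.0}

def derived_column(rows):
    """Add a computed column, similar in spirit to a Derived Column."""
    for row in rows:
        yield {**row, "total": row["qty"] * row["unit_price"]}

def conditional_split(rows, threshold):
    """Route each row to one of two outputs based on a condition."""
    large, small = [], []
    for row in rows:
        (large if row["total"] >= threshold else small).append(row)
    return large, small

large_orders, small_orders = conditional_split(derived_column(source()), 100.0)
```

Rows stream through the stages one at a time until the split, which is the point where they are collected into separate outputs.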

Event Handlers

Event handlers in SSIS are used to manage exceptions, system events, and custom events. They can be created to perform specific actions when certain events occur, such as logging errors, sending notifications, or executing custom scripts. Event handlers help ensure that packages run smoothly and can recover from unexpected situations.

Connection Managers

Connection managers in SSIS store the connection information required to access various data sources and destinations. They provide a consistent and centralized way to manage connections, ensuring that packages can easily connect to different data stores without requiring hard-coded connection strings.

By understanding the SSIS architecture and its main components, data professionals can design and implement efficient, reliable, and scalable SSIS packages that meet their organization’s data integration and ETL needs.

3. How Do You Approach Designing and Implementing an SSIS Package?

Designing and implementing SSIS packages requires careful planning, adherence to best practices, and thorough testing to ensure optimal performance, reliability, and maintainability. The following best practices should be considered when designing and implementing SSIS packages:

Performance Optimization

Performance optimization is crucial for SSIS packages to process data efficiently. Techniques for improving performance include:

- Minimizing data manipulation within the data flow task.

- Using the correct data types and precision for columns.

- Avoiding unnecessary transformations and conversions.

- Using buffer optimization techniques, such as tuning the DefaultBufferMaxRows and DefaultBufferSize properties of the Data Flow Task.

- Implementing parallel processing when possible.
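The interaction between the two buffer properties can be approximated with simple arithmetic. The sketch below is a simplified model, not the engine's actual algorithm: it assumes the documented defaults of 10 MB for DefaultBufferSize and 10,000 rows for DefaultBufferMaxRows, and it treats a buffer as full when either limit is reached first. The function name and the row sizes are hypothetical.

```python
def rows_per_buffer(estimated_row_bytes,
                    default_buffer_size=10 * 1024 * 1024,
                    default_buffer_max_rows=10_000):
    """Simplified model: a buffer holds rows until either limit is reached."""
    if estimated_row_bytes <= 0:
        raise ValueError("estimated_row_bytes must be positive")
    rows_by_size = default_buffer_size // estimated_row_bytes
    return min(default_buffer_max_rows, rows_by_size)

narrow = rows_per_buffer(200)    # the row cap is reached first
wide = rows_per_buffer(5_000)    # the size cap is reached first
```

Under this model, narrow rows are limited by the row cap and wide rows by the size cap, which is why trimming unused columns and oversized data types can let more rows fit in each buffer.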

Error Handling

Implementing robust error handling in SSIS packages helps ensure that packages can recover from unexpected situations and continue processing. Techniques for error handling include:

- Using the OnError, OnWarning, and OnInformation events to handle errors, warnings, and informational messages.

- Configuring precedence constraints to control the flow of execution based on success, failure, or completion.

- Using the Try…Catch construct in script tasks to handle custom errors.

- Logging errors and warnings to a file or database for further analysis.
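The combination of catching errors and logging them can be sketched as follows. This Python example sets failed rows aside instead of aborting the whole run, a pattern comparable to sending bad rows to a separate output; the function `convert_rows`, the logger name, and the sample rows are assumptions for illustration.

```python
import logging

logger = logging.getLogger("etl_sketch")

def convert_rows(rows):
    """Convert 'amount' to float; set aside rows that fail instead of aborting."""
    good, errors = [], []
    for row in rows:
        try:
            good.append({**row, "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError) as exc:
            logger.warning("Row %r rejected: %s", row, exc)
            errors.append({**row, "error": str(exc)})
    return good, errors

good_rows, error_rows = convert_rows([
    {"id": 1, "amount": "12.5"},
    {"id": 2, "amount": "n/a"},   # not a number
    {"id": 3, "amount": None},    # missing value
])
```

Catching only the expected exception types keeps genuine programming errors visible rather than silently classifying them as bad data.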

Logging

Logging is essential for monitoring package execution, diagnosing issues, and troubleshooting errors. Techniques for logging in SSIS packages include:

- Configuring logging providers, such as text files, SQL Server, or Windows Event Log.

- Selecting the appropriate log events and details to capture.

- Storing logs in a centralized location for easy access and analysis.

- Reviewing logs regularly to identify trends, issues, and areas for improvement.
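As an illustration of logging to a central store, the sketch below writes one row per event to a table, using event names modeled on the SSIS OnPreExecute, OnError, and OnPostExecute events. The `etl_log` table, its columns, and the helper functions are hypothetical, and SQLite stands in for whatever database would hold the log.

```python
import sqlite3
import time

def create_log_table(conn):
    conn.execute(
        "CREATE TABLE etl_log (task TEXT, event TEXT, message TEXT, logged_at REAL)"
    )

def log_event(conn, task, event, message=""):
    conn.execute(
        "INSERT INTO etl_log VALUES (?, ?, ?, ?)",
        (task, event, message, time.time()),
    )

def run_logged(conn, task, action):
    """Run an action and record its start, any error, and its end."""
    log_event(conn, task, "OnPreExecute")
    try:
        action()
    except Exception as exc:
        log_event(conn, task, "OnError", str(exc))
    finally:
        log_event(conn, task, "OnPostExecute")

def failing_task():
    raise RuntimeError("source file not found")

conn = sqlite3.connect(":memory:")
create_log_table(conn)
run_logged(conn, "load_customers", failing_task)
events = [row[0] for row in conn.execute("SELECT event FROM etl_log ORDER BY rowid")]
```

Because every task writes to the same table, a single query can show which tasks failed and when.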

By following these best practices, data professionals can design and implement efficient, reliable, and maintainable SSIS packages that meet their organization’s data integration and ETL needs.

4. How Do You Handle Data Transformation and Cleansing in SSIS?

Data transformation and cleansing are essential aspects of ETL processes, ensuring that data is accurate, consistent, and ready for analysis. SQL Server Integration Services (SSIS) provides various data transformation techniques and data cleansing methods to help achieve these goals. This section covers some of the most common data transformation techniques and data cleansing methods in SSIS.

Data Transformation Techniques

SSIS offers numerous data transformation techniques, including:

- Deriving columns: Creating new columns based on expressions or existing columns.

- Merging data: Combining data from multiple inputs into a single output, based on a common set of columns.

- Splitting data: Separating data from a single input into multiple outputs, based on specific criteria or conditions.

- Aggregating data: Summarizing data by grouping rows and calculating aggregate values, such as sum, count, or average.

- Sorting data: Reordering data based on specific columns or expressions.

- Conditional split: Splitting data based on conditional expressions, routing rows to different outputs based on their values.
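Several of the techniques above (aggregating, merging, and sorting) can be shown together in a few lines of Python. The order rows and the customer lookup are made-up sample data, and the code only mirrors the logic of the corresponding transformations rather than how SSIS implements them.

```python
from collections import defaultdict

orders = [
    {"customer_id": 1, "amount": 40.0},
    {"customer_id": 2, "amount": 15.0},
    {"customer_id": 1, "amount": 10.0},
]
customers = {1: "Acme", 2: "Globex"}  # hypothetical lookup data

# Aggregate: sum and count per customer.
totals = defaultdict(lambda: {"sum": 0.0, "count": 0})
for order in orders:
    totals[order["customer_id"]]["sum"] += order["amount"]
    totals[order["customer_id"]]["count"] += 1

# Merge: join the aggregates with customer names on customer_id.
merged = [{"customer": customers[cid], **agg} for cid, agg in totals.items()]

# Sort: order by total amount, descending.
report = sorted(merged, key=lambda r: r["sum"], reverse=True)
```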

Data Cleansing Methods

In addition to data transformation techniques, SSIS also offers data cleansing methods, such as:

- Data validation: Validating data against specific rules or constraints, flagging or correcting rows that violate these rules.

- Duplicate removal: Identifying and eliminating duplicate rows, ensuring data consistency and accuracy.

- Null value handling: Replacing null values with defaults or removing rows that contain them, ensuring data completeness.

- Data typing: Converting data types to ensure consistency and compatibility across data sources and destinations.

- Exception handling: Handling exceptions or errors during data transformation and cleansing, ensuring package execution continues even when issues arise.
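The cleansing steps listed above can be combined in one pass over the data, as in this Python sketch. The validation rule (an email must contain "@") is deliberately simplistic, and the field names, the "Unknown" default, and the `cleanse` function are assumptions for the example.

```python
def cleanse(rows):
    """Validate, standardize, fill nulls, convert types, and de-duplicate on email."""
    seen, clean, rejected = set(), [], []
    for row in rows:
        email = (row.get("email") or "").strip().lower()  # standardize format
        if "@" not in email:          # data validation (simplistic rule)
            rejected.append(row)
            continue
        if email in seen:             # duplicate removal
            continue
        seen.add(email)
        age = row.get("age")
        clean.append({
            "email": email,
            "country": row.get("country") or "Unknown",          # null handling
            "age": int(age) if age not in (None, "") else None,  # data typing
        })
    return clean, rejected

clean_rows, rejected_rows = cleanse([
    {"email": " Ann@Example.com ", "country": None, "age": "34"},
    {"email": "ann@example.com", "country": "US", "age": "34"},
    {"email": "not-an-email", "country": "DE", "age": ""},
    {"email": None, "country": "FR", "age": None},
])
```

Standardizing the email before the duplicate check matters here: the first two rows differ only in case and whitespace, so they count as the same record.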

By mastering these data transformation and cleansing techniques, data professionals can ensure that their SSIS packages produce high-quality, accurate, and consistent data, ready for analysis and decision-making.

5. What Are Some Common Challenges in SSIS Development and How Do You Overcome Them?

SSIS development comes with its own set of challenges, including performance issues, error handling, and data type compatibility. Addressing these challenges effectively is crucial for creating high-quality, efficient, and maintainable SSIS packages. This section discusses common challenges in SSIS development and provides solutions and best practices for overcoming them.

Performance Issues

Performance issues can arise from inefficient data transformations, excessive disk I/O, or insufficient memory allocation. To address performance issues:

- Minimize data manipulation within the data flow task.

- Use the correct data types and precision for columns.

- Avoid unnecessary transformations and conversions.

- Implement buffer optimization techniques, such as tuning the DefaultBufferMaxRows and DefaultBufferSize properties of the Data Flow Task.

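- Use the Data Profiling Task to examine column lengths, null ratios, and value distributions in the source data. It profiles data quality rather than package performance, but the results help you choose appropriately sized data types, which in turn reduces row width and memory use.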

Error Handling

Error handling is essential for ensuring that packages can recover from unexpected situations and continue processing. To improve error handling:

- Use the OnError, OnWarning, and OnInformation events to handle errors, warnings, and informational messages.

- Configure precedence constraints to control the flow of execution based on success, failure, or completion.

- Use the Try…Catch construct in script tasks to handle custom errors.

- Log errors and warnings to a file or database for further analysis.

Data Type Compatibility

Data type compatibility issues can occur when working with different data sources and destinations. To address data type compatibility challenges:

- Use the Data Conversion Transformation to convert data types within the data flow.

- Configure data types in source and destination components carefully to avoid data loss or truncation.

- Use the Script Component to perform custom data type conversions when necessary.
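The kind of check that guards against truncation or overflow during a conversion can be sketched in Python. Both helpers are hypothetical; the 32-bit range is used as an example because four-byte signed integers are a common destination type.

```python
def check_string_fit(value, max_length):
    """Return True if a string fits a fixed-length destination column."""
    return value is None or len(value) <= max_length

def to_int32(value):
    """Convert to a 32-bit signed integer, raising instead of overflowing."""
    number = int(value)
    if not -2**31 <= number <= 2**31 - 1:
        raise OverflowError(f"{number} does not fit in a 32-bit integer")
    return number

fits = check_string_fit("abc", 5)
too_long = check_string_fit("abcdef", 5)
largest = to_int32("2147483647")
```

Failing loudly on an out-of-range value is usually preferable to loading a silently truncated one, since the bad row can then be inspected and corrected.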

By understanding and addressing these common challenges, data professionals can create more efficient, reliable, and maintainable SSIS packages that meet their organization’s data integration and ETL needs.

6. How Do You Monitor and Troubleshoot SSIS Packages?

Monitoring and troubleshooting SSIS packages is crucial for ensuring their smooth execution and identifying potential issues. Various tools and techniques are available for monitoring and troubleshooting SSIS packages, including the SSIS catalog, SQL Server Agent jobs, and SQL Server Profiler. This section discusses these tools and techniques, as well as how to interpret logs, identify bottlenecks, and resolve errors in SSIS packages.

SSIS Catalog

The SSIS catalog is a built-in repository for storing, managing, and executing SSIS packages. It provides comprehensive monitoring and reporting capabilities, including execution history, performance metrics, and error information. To monitor SSIS packages using the SSIS catalog:

- Browse to the Integration Services Catalog in SQL Server Management Studio (SSMS).

- Expand the SSISDB node and navigate to the desired project and package.

- Right-click the package and select Execute to run it, then open the execution report to view the details of that run.

- Use the standard reports (such as All Executions) and the catalog views (such as catalog.executions) to analyze execution history, performance, and errors.
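Once execution rows have been retrieved from the catalog, a short script can summarize them. The Python sketch below assumes the rows were already fetched (for example, from the catalog.executions view) into dictionaries with a numeric `status` field; the status code mapping follows the documented values for that view, while the sample rows and the `summarize` helper are invented for illustration.

```python
from collections import Counter

# Status codes as documented for catalog.executions.
STATUS_NAMES = {
    1: "created", 2: "running", 3: "canceled", 4: "failed", 5: "pending",
    6: "ended unexpectedly", 7: "succeeded", 8: "stopping", 9: "completed",
}

def summarize(executions):
    """Count executions per status name."""
    return Counter(STATUS_NAMES.get(e["status"], "unknown") for e in executions)

# Hypothetical rows standing in for a query result.
sample = [
    {"package_name": "LoadSales.dtsx", "status": 7},
    {"package_name": "LoadSales.dtsx", "status": 4},
    {"package_name": "LoadCustomers.dtsx", "status": 7},
]
summary = summarize(sample)
```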

SQL Server Agent Jobs

SQL Server Agent jobs can be used to schedule and execute SSIS packages automatically. To monitor SSIS packages executed through SQL Server Agent jobs:

- Expand SQL Server Agent in the SSMS Object Explorer and navigate to the Jobs node.

- Right-click a job and select View History to see its execution history, including start and end times, duration, and any errors or messages.

- Configure alerts and notifications so that operators are informed when a job fails or encounters errors.

SQL Server Profiler

SQL Server Profiler traces activity in the SQL Server Database Engine, so it can capture the statements that an SSIS package sends to SQL Server while it runs. To monitor SSIS packages using SQL Server Profiler:

- Create a new SQL Server Profiler trace and select the appropriate events and data columns.

- Execute the SSIS package and observe the trace output for any performance issues, errors, or warnings.

- Analyze the trace output to identify bottlenecks, optimize performance, and resolve errors.

By mastering these monitoring and troubleshooting techniques, data professionals can ensure that their SSIS packages run smoothly, efficiently, and with minimal errors, providing valuable insights and decision-making capabilities for their organizations.