Understanding the Need for Service Mesh

Managing microservices in a large distributed system is hard. Service discovery, routing, security, observability, and resilience all have to work across every service, and solving each of them by hand, service by service, creates operational bottlenecks and adds complexity. A more automated, centralized approach is needed. A service mesh provides one: it addresses these concerns in a shared layer, which streamlines operations and improves overall system reliability.

Traditional approaches to inter-service communication struggle to scale effectively. They often lack the necessary features for advanced traffic management, security enforcement, and comprehensive observability. The resulting operational burden hinders agility and increases the risk of outages. A service mesh architecture introduces a dedicated infrastructure layer for managing service-to-service communication. This layer abstracts away the complexities of network management, allowing developers to focus on building applications rather than infrastructure. The benefits include improved security, enhanced resilience, and simplified observability, making service mesh architecture a key component of modern microservices deployments. By centralizing these functions, a service mesh improves operational efficiency and enhances the overall reliability of the system.

Adopting a service mesh brings several advantages. It simplifies the management of complex microservices deployments while improving security, resilience, and observability. Because policies are applied automatically, operational overhead drops and teams can focus on delivering business value. A unified control plane manages the whole mesh, so policies are enforced consistently across services. For teams that expect their microservices ecosystem to grow, this makes a service mesh an investment in long-term scalability and maintainability.

Delving into Service Mesh Architecture: Core Components

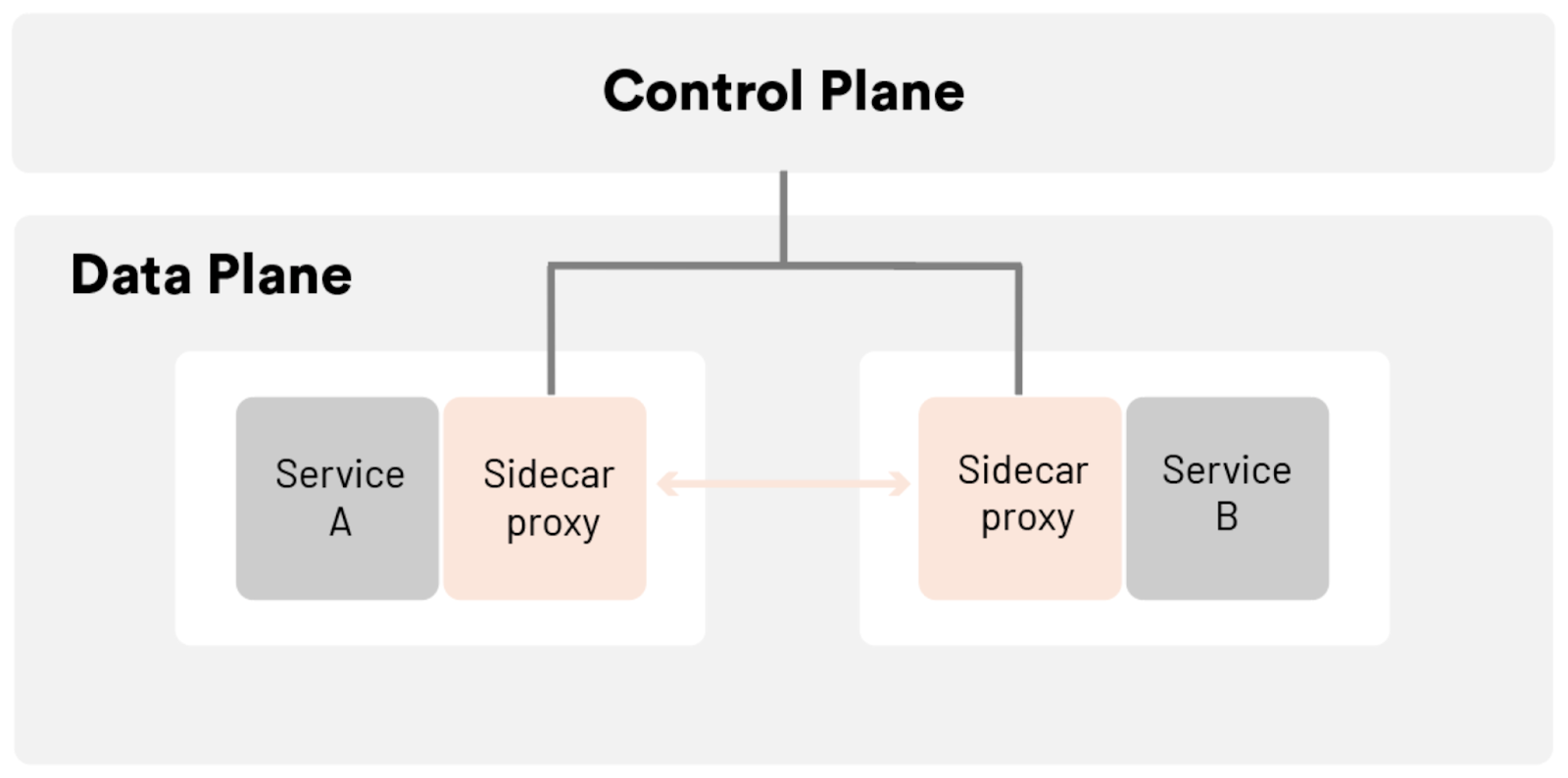

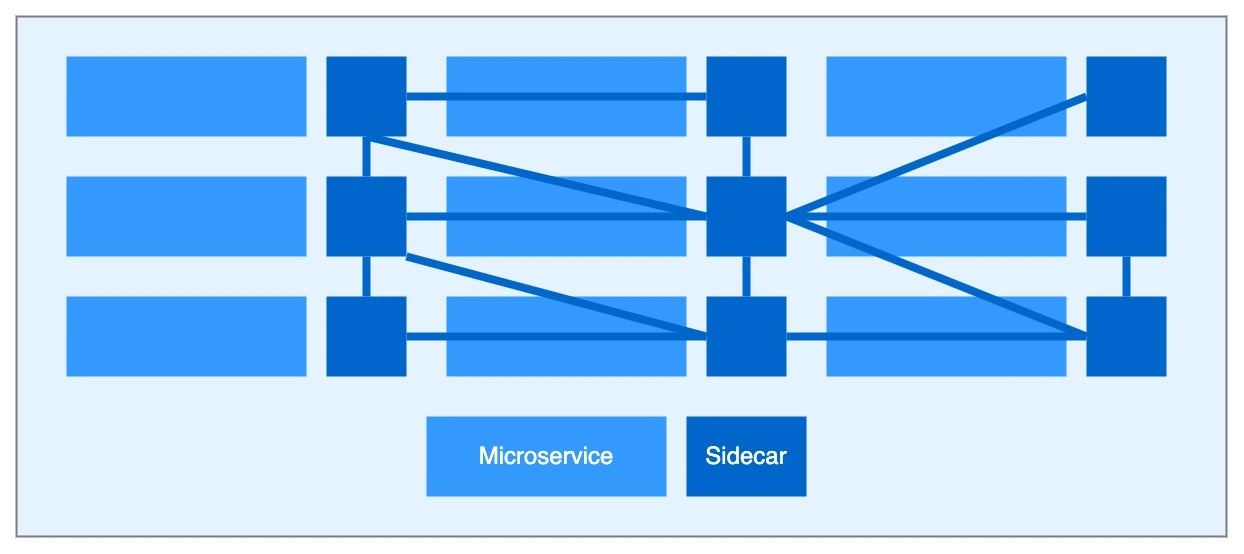

A service mesh has two core components: a data plane and a control plane. The data plane sits between services and is usually implemented with sidecar proxies such as Envoy. Each proxy runs alongside a microservice instance and intercepts the network traffic entering and leaving it, which makes traffic management, security enforcement, and monitoring possible without changes to application code. The control plane governs and configures the data plane. It manages policies, service discovery, and routing rules, and distributes them to the proxies so that they act consistently across the entire system. Application traffic itself flows between the proxies, not through the control plane.

This architecture is characterized by a clear separation of concerns: the control plane decides policy, and the data plane carries the traffic and applies that policy. The separation promotes agility and scalability, because rules can change centrally without redeploying services. A diagram of the interaction between the two planes is a helpful aid here. Because the control plane defines the rules and the proxies enforce them, operators get fine-grained control over the traffic flowing through the mesh. Common implementations, such as Istio and Linkerd, are built on these foundational components, and each presents its own capabilities and trade-offs.

The two planes depend on each other: the control plane is only useful if the proxies receive and enforce its policies, and the proxies need the control plane for a consistent view of the system. Centralized governance gives traffic management a single, consistent source of rules, which helps keep isolated failures from spreading and supports overall performance. Understanding this interplay is essential for deploying and maintaining complex microservices applications.
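To make the division of labor concrete, here is a minimal in-memory sketch of a control plane pushing routing configuration to sidecar proxies. It is a toy model, not how Istio, Linkerd, or Envoy are implemented: the class names `ControlPlane` and `SidecarProxy`, the service names, and the addresses are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SidecarProxy:
    """Toy data-plane proxy: holds the routes it was last given."""
    service: str
    routes: dict[str, str] = field(default_factory=dict)

    def apply_config(self, routes: dict[str, str]) -> None:
        # Replace local routing state with the control plane's snapshot.
        self.routes = dict(routes)

    def resolve(self, destination: str) -> str:
        # The proxy, not the application, decides where a request goes.
        return self.routes[destination]


@dataclass
class ControlPlane:
    """Toy control plane: owns the desired state and pushes it to proxies."""
    routes: dict[str, str] = field(default_factory=dict)
    proxies: list[SidecarProxy] = field(default_factory=list)

    def register(self, proxy: SidecarProxy) -> None:
        # A new proxy immediately receives the current configuration.
        self.proxies.append(proxy)
        proxy.apply_config(self.routes)

    def set_route(self, destination: str, address: str) -> None:
        # Changing a rule centrally updates every registered proxy.
        self.routes[destination] = address
        for proxy in self.proxies:
            proxy.apply_config(self.routes)


control_plane = ControlPlane()
orders = SidecarProxy("orders")  # hypothetical service name
control_plane.register(orders)
control_plane.set_route("payments", "10.0.0.7:8080")  # hypothetical address
print(orders.resolve("payments"))
```

Note that the control plane never handles a request in this sketch. It only changes what the proxies know, which mirrors the separation of concerns described above.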

Key Features of a Robust Service Mesh

A robust service mesh architecture provides a crucial foundation for modern microservices. Essential features of a well-designed service mesh include comprehensive service discovery, enabling efficient communication between services. Traffic management plays a significant role in routing, load balancing, and fault injection, enhancing the resilience and performance of the system. Security mechanisms, like mutual TLS (mTLS) and authorization, protect sensitive data and control access to services, ensuring a secure service mesh architecture. Observability tools, incorporating metrics, tracing, and logging, provide valuable insights into the application’s behavior. Finally, resilience strategies, like retries and circuit breakers, handle unexpected failures, minimizing disruptions in service delivery within a service mesh architecture. Each component contributes to the overall performance, reliability, and security of the microservices application.
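As an illustration of the retry strategy mentioned above, the following sketch retries a failing call with capped exponential backoff and random jitter. In a real mesh this logic lives in the proxy and is driven by configuration; the function name `call_with_retries` and its default values are assumptions made for this example.

```python
import random
import time


def call_with_retries(func, attempts=3, base_delay=0.1, max_delay=2.0, sleep=time.sleep):
    """Call func, retrying on any exception with capped exponential backoff and jitter."""
    for attempt in range(attempts):
        try:
            return func()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Double the delay ceiling each attempt, up to max_delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Sleep a random amount up to the ceiling so clients do not retry in lockstep.
            sleep(random.uniform(0, delay))
```

The `sleep` parameter is injectable only so the behavior can be exercised without real waiting.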

Effective service discovery allows services to dynamically locate and communicate with each other. Traffic management mechanisms route traffic optimally, distributing loads efficiently. Fault injection facilitates controlled testing and validation of error handling capabilities. Security features protect data in transit and verify the identity of services. Robust observability fosters continuous monitoring of the service mesh architecture, making it easier to identify and resolve problems. Resilience strategies enable the service mesh to gracefully handle failures and maintain operational stability. These key elements ensure the smooth functioning of a complex microservices ecosystem through a service mesh architecture.
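Service discovery and load balancing can be pictured with a small in-memory registry that hands out instances in round-robin order. This is a simplified sketch under stated assumptions: the `ServiceRegistry` class and the addresses in the usage lines are hypothetical, and real meshes typically learn instances from the platform (for example, from the orchestrator) instead of explicit registration calls.

```python
import itertools


class ServiceRegistry:
    """Toy in-memory registry mapping service names to instance addresses."""

    def __init__(self):
        self._instances = {}
        self._cursors = {}

    def register(self, service, address):
        self._instances.setdefault(service, []).append(address)
        # Rebuild the round-robin cursor whenever membership changes.
        self._cursors[service] = itertools.cycle(list(self._instances[service]))

    def next_instance(self, service):
        if service not in self._cursors:
            raise LookupError(f"no instances registered for {service!r}")
        return next(self._cursors[service])


registry = ServiceRegistry()
registry.register("inventory", "10.0.1.1:9000")  # hypothetical addresses
registry.register("inventory", "10.0.1.2:9000")
print([registry.next_instance("inventory") for _ in range(3)])
```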

A well-designed service mesh architecture offers various tools for handling network traffic, including load balancing to spread incoming requests across multiple instances of a service. Implementing circuit breakers helps prevent cascading failures by isolating faulty components. Service discovery mechanisms facilitate reliable communication between microservices. Robust logging enables efficient troubleshooting. These features significantly contribute to the health and reliability of a service mesh architecture, ensuring the efficient and secure operation of a microservices ecosystem.
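The circuit breaker idea can be sketched in a few lines. The version below opens after a number of consecutive failures, rejects calls while open, and allows a single trial call once a timeout has passed. It is an illustrative simplification, not the algorithm any particular mesh uses; `CircuitBreaker`, `CircuitOpenError`, and the default thresholds are hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call rejected")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Rejecting calls immediately while the circuit is open is what stops a struggling service from being flooded with further requests, which is how the pattern limits cascading failures.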

How to Implement a Service Mesh in Your Microservices Ecosystem

Implementing a service mesh architecture requires a strategic approach. Begin by carefully evaluating your specific microservices needs. Consider the size and complexity of your application. Factors like the number of services, their communication patterns, and the expected traffic volume should influence your choice of service mesh technology. Selecting the ideal service mesh architecture solution is crucial for long-term performance and scalability.

Next, choose a service mesh implementation. Popular options include Istio and Linkerd. Istio, known for its comprehensive features and extensive community support, is a compelling choice for larger, more complex applications. Linkerd, with its focus on speed and simplicity, might suit smaller projects or teams seeking a more streamlined experience. Consider factors such as ease of integration with existing infrastructure, community support, and available documentation when making your decision. Appropriate integration of the service mesh architecture is essential for streamlined operations. Thoroughly examine available documentation for the selected service mesh technology, paying particular attention to deployment and configuration guides. This meticulous process is essential for smooth integration.

Then follow the installation process documented for the mesh you chose. This involves deploying the control plane and the data plane proxies, then configuring service-to-service communication to flow through the mesh. Define rules for traffic routing, security, and observability, and integrate the mesh with your existing infrastructure, including logging and monitoring systems. Finally, test the integration thoroughly to confirm functionality and identify potential issues.

Comparing Popular Service Mesh Solutions: Istio vs. Linkerd

This section delves into a comparative analysis of two leading service mesh technologies, Istio and Linkerd. Understanding their strengths and weaknesses is crucial for selecting the ideal solution for specific needs. Choosing the right service mesh architecture solution directly impacts the operational efficiency of a microservices application.

Istio, a popular open-source service mesh, is often praised for its comprehensive feature set and extensive community support. Its versatility allows for intricate traffic management configurations, making it a strong option for complex microservices environments. However, Istio’s more extensive configuration can mean a steeper learning curve.

Linkerd, another prominent open-source service mesh, is often praised for its simplicity and performance. Its streamlined configuration offers a faster time to deployment. However, its feature set may be less comprehensive than Istio’s, which can limit its applicability to certain use cases. Whether Istio or Linkerd fits better depends on the complexity and specific needs of a given microservices ecosystem.

A crucial consideration for selecting a service mesh architecture is scalability. Both Istio and Linkerd demonstrate remarkable scalability for large, complex applications. However, the specifics of how each scales depend on the specific implementation and overall architecture design. The performance characteristics of each service mesh architecture can also be significantly impacted by several factors, including network conditions and application specifics.

The table below summarizes the key differences between Istio and Linkerd:

| Feature | Istio | Linkerd |
|---|---|---|
| Ease of Use | Steeper learning curve due to complexity | Easier to learn and deploy |
| Scalability | High | High |
| Performance | Often competitive with Linkerd | Often reported as performing well |
| Community Support | Strong and active | Good |
| Feature Set | Comprehensive, offering a wide range of features | Well-rounded, but potentially less extensive than Istio |

Advanced Service Mesh Concepts: Traffic Splitting and Canary Deployments

A critical aspect of a robust service mesh architecture is the management and control of traffic flow during deployments. Traffic splitting and canary deployments are advanced strategies that streamline the rollout of new service versions. Both minimize disruption and allow rigorous testing before a complete release to production.

Traffic splitting distributes traffic between different versions of a service in controlled proportions. A portion of requests is routed to the current production version, and the rest go to a new version. Much like A/B testing, this allows real-world evaluation of performance, functionality, and stability, and the proportions can be shifted gradually as confidence grows.

Canary deployments apply traffic splitting cautiously. Only a small percentage of traffic reaches the new version at first, and the release is monitored closely. Problems surface early, while few users are affected, and the change can be rolled back immediately if necessary, which reduces the risk of shipping defective code to production.
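The core of a weighted split is a single weighted random choice per request. The sketch below simulates a hypothetical 90/10 canary rollout; the version names, weights, and the `pick_version` helper are assumptions for illustration, and a real mesh performs this selection inside the proxy based on configured weights.

```python
import random


def pick_version(weights, rng=random):
    """Pick a version name with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical canary rollout: 90% of requests to stable v1, 10% to canary v2.
split = {"v1": 90, "v2": 10}
rng = random.Random(42)  # seeded so the simulation is repeatable
counts = {"v1": 0, "v2": 0}
for _ in range(10_000):
    counts[pick_version(split, rng)] += 1
print(counts)
```

Promoting the canary is then a matter of changing the weights, for example to 50/50 and finally 0/100, while rolling back means setting the canary's weight to zero.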

Both strategies are implemented through configuration in the chosen service mesh, not in application code. In Istio, for example, a VirtualService resource can assign weights to the routes for each version of a service. Platforms such as Istio and Linkerd support detailed traffic distribution patterns, which makes deployments more predictable and controlled and the application environment more stable.

Monitoring and Troubleshooting Your Service Mesh

Effective monitoring and troubleshooting are crucial for a smoothly functioning service mesh architecture. A robust monitoring strategy allows for proactive identification and resolution of issues, ensuring high availability and performance. This involves collecting and analyzing various telemetry data, including metrics, traces, and logs, generated by the service mesh components and the applications running within it. Key metrics to track include request latency, error rates, and resource utilization. Tracing provides insights into the flow of requests across different services, helping pinpoint bottlenecks or failure points. Logs offer granular details about individual service interactions, providing valuable context for debugging. Service mesh solutions often integrate with existing monitoring systems, making it simple to centralize observability for the entire microservices ecosystem.
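Two of the key metrics named above, error rate and request latency, are simple to compute once request records are available. The sketch below uses a hypothetical `RequestRecord` type and made-up sample data; it treats 5xx responses as errors and uses the nearest-rank method for percentiles, both of which are assumptions of this example and not a statement of how any particular mesh reports them.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float
    status: int


def error_rate(records):
    """Fraction of requests that returned a 5xx status."""
    if not records:
        return 0.0
    return sum(1 for r in records if r.status >= 500) / len(records)


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Made-up sample data for illustration.
records = [
    RequestRecord(12.0, 200),
    RequestRecord(15.0, 200),
    RequestRecord(250.0, 503),
    RequestRecord(18.0, 200),
]
latencies = [r.latency_ms for r in records]
print(error_rate(records))        # 0.25
print(percentile(latencies, 50))  # 15.0
print(percentile(latencies, 99))  # 250.0
```

High percentiles such as p99 are worth tracking alongside the median because a single slow dependency can leave the median untouched while still hurting a meaningful share of requests.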

Many service mesh technologies provide built-in monitoring tools, often with dashboards and APIs for visualizing metrics, exploring traces, and searching logs. Istio, for example, integrates with Prometheus, Grafana, and Jaeger. Linkerd offers comparable capabilities through its viz extension, which includes a dashboard and Prometheus-backed metrics. These tools support filtering and querying, so you can drill into specific aspects of mesh performance, and real-time alerts can be configured on thresholds to enable quick responses to critical issues. Which tools you choose will depend on your existing infrastructure and your monitoring strategy for the application as a whole. Whatever the choice, integrating the mesh’s monitoring with your broader system is crucial for a holistic view of application performance.

Proactive monitoring is key to maintaining a healthy service mesh architecture. Regular review of metrics and logs helps to identify potential problems before they escalate. The ability to quickly diagnose issues is vital for minimizing downtime and ensuring a positive user experience. By implementing a robust monitoring strategy and utilizing the built-in tools of your chosen service mesh technology, organizations can effectively manage their service mesh, ensuring optimal performance and reliability. This proactive approach helps avoid costly outages and improves overall operational efficiency in managing the complex service mesh architecture.

The Future of Service Mesh Architecture: Trends and Predictions

The service mesh architecture continues to evolve at a rapid pace. Several key trends are shaping its future. Serverless computing’s integration with service meshes is becoming increasingly important. This integration aims to streamline the management of serverless functions within the broader microservices ecosystem. Improved security features, such as enhanced encryption and more robust authentication mechanisms, will be crucial for maintaining the security of applications built upon service mesh architectures. Expect to see advancements in zero-trust security models and integration with advanced threat detection systems.

Enhanced observability capabilities are another crucial area of development. Service meshes will likely incorporate more sophisticated tools for collecting, analyzing, and visualizing telemetry data, providing more comprehensive insights into application performance and behavior. Artificial intelligence (AI) and machine learning (ML) will play a larger role in automating tasks within the service mesh. AI-powered anomaly detection and self-healing capabilities are expected to become more prevalent, reducing the need for manual intervention in managing the complexities of distributed systems. Tighter integration of service meshes with other cloud-native technologies, such as Kubernetes and other container orchestration platforms, will also continue to be a major focus, further improving the overall efficiency and scalability of cloud-based deployments.

Predicting the future of service mesh architecture involves considering the increasing complexity of microservices deployments. As organizations adopt more sophisticated cloud-native strategies, demands for advanced management tools will intensify. The service mesh, therefore, will likely evolve to encompass more comprehensive capabilities beyond its current functionality. This includes automated deployment processes, advanced traffic management strategies, and intelligent resource allocation. The adoption of service mesh architecture is expected to grow significantly in the coming years. Its ability to efficiently manage complex microservices environments will prove essential in this era of rapid digital transformation. Expect greater standardization and maturity in service mesh technologies, simplifying adoption and integration for organizations of all sizes. The ongoing development of service mesh architecture promises to deliver even more robust, secure, and efficient distributed application environments.