Understanding Sequential Data and Neural Networks

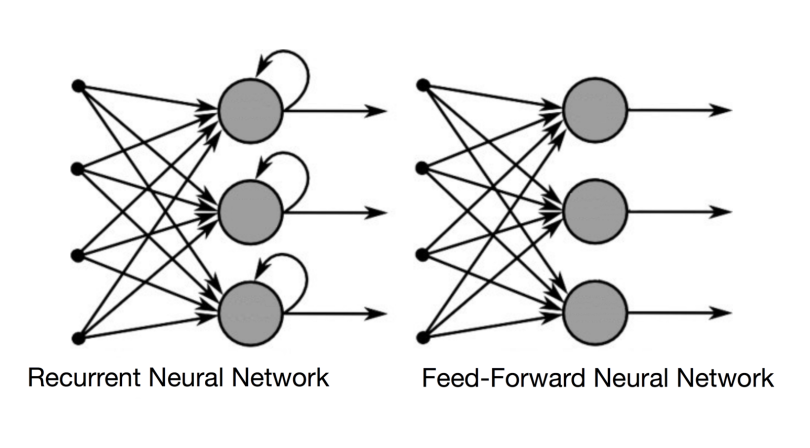

Sequential data, such as time series, text, and audio, presents unique challenges for machine learning models. Traditional feedforward neural networks, while powerful for many tasks, often struggle with this type of data. These networks operate on fixed-size input vectors, which makes them a poor fit for sequences of varying length. Moreover, standard feedforward networks lack an inherent memory mechanism: each input is processed independently, without the context provided by earlier elements of the sequence. Recurrent neural networks (RNNs) are designed to fill exactly this gap.

Consider the task of predicting the next word in a sentence. A feedforward network that sees one word at a time has no access to the words before it. It therefore cannot use the relationships between words or the overall sentence structure, and its predictions suffer accordingly. Similarly, in time series analysis, such as predicting stock prices, the temporal dependencies between past and present values are crucial, and a network that cannot retain information about past inputs has difficulty capturing them. The lack of memory and the requirement for fixed-size inputs are major drawbacks when dealing with sequential information. An RNN addresses both by incorporating a recurrent connection. This connection lets information persist from one time step to the next, so the network can learn from the sequence’s history.

Because a feedforward network treats each input as independent, it has no mechanism for carrying information from previous inputs into the processing of the current one. The recurrent structure of an RNN provides exactly that mechanism. Its ability to learn from the order of the input makes it a useful tool for time series analysis, natural language processing, and other sequence-related tasks.

The Power of Loops: Introducing Recurrent Neural Networks

At the heart of sequence modeling with neural networks lies the recurrent neural network (RNN). Unlike a traditional feedforward network, an RNN has a recurrent connection. This loop allows information to persist, effectively creating a “memory” that informs subsequent processing steps. It matters because in sequential data, the order and context of information are paramount.

Imagine a standard neural network processing a sentence. It treats each word independently, lacking the context of previous words. An RNN, however, processes each word while also considering the hidden state from the previous time step. This hidden state acts as a memory, carrying information about the sequence’s past.

A diagram of an RNN reveals this looping structure: the output of the hidden layer is fed back into the same layer at the next time step. This feedback mechanism is what distinguishes RNNs from other neural network architectures. At each time step, the current input is combined with the previous hidden state to produce a new hidden state. This new state influences the output at that time step and is passed on to the next. Because the same weights are reused at every step, this iterative process lets an RNN handle sequences of any length and learn temporal dependencies from the sequence’s history.
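As a concrete illustration, here is a minimal NumPy sketch of the recurrence described above. It assumes the common basic (Elman-style) formulation h_t = tanh(W_x x_t + W_h h_{t-1} + b); the function name `rnn_forward` and the weight names are our own choices for illustration. The sketch runs one set of weights over sequences of two different lengths. It then shows the “memory” effect: changing only the first input still changes the final hidden state.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b, h0=None):
    """Run a basic (Elman-style) RNN over `inputs`, shape (T, input_dim).

    The same weights are applied at every time step, so T can vary.
    Returns a list of T hidden states, each of shape (hidden_dim,).
    """
    h = np.zeros(W_h.shape[0]) if h0 is None else h0
    states = []
    for x_t in inputs:
        # New state combines the current input with the previous state.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return states

rng = np.random.default_rng(0)
input_dim, hidden_dim = 3, 4
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

# One set of weights handles sequences of different lengths.
short_states = rnn_forward(rng.normal(size=(2, input_dim)), W_x, W_h, b)
long_seq = rng.normal(size=(7, input_dim))
long_states = rnn_forward(long_seq, W_x, W_h, b)

# "Memory": changing only the FIRST input still changes the LAST hidden state.
perturbed = long_seq.copy()
perturbed[0] += 1.0
memory_effect = np.abs(rnn_forward(perturbed, W_x, W_h, b)[-1] - long_states[-1]).max()
```

`memory_effect` is nonzero because the first input’s influence travels through every later hidden state. How strongly that influence survives over many steps is exactly the question the vanishing-gradient discussion addresses.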

The concept of “memory” in an RNN is essential. It enables the network to retain and use information from earlier parts of the sequence. This is particularly important in tasks like natural language processing, where the meaning of a word can depend heavily on the words that came before it. Because information is propagated through the hidden state, an RNN can in principle capture these dependencies, including ones that span many time steps. In practice, basic RNNs find long-range dependencies hard to learn, a limitation covered in the section on vanishing gradients below. Still, the recurrent connection is what gives the architecture its ability to model context and relationships within sequential data. This “memory” lets an RNN handle tasks that a fixed-input feedforward network cannot address directly.

How to Build a Simple Sequence Prediction Model

Building a simple sequence prediction model is a practical way to understand recurrent neural networks. The process involves several key steps, from data preprocessing to model evaluation. Consider a simple task: predicting the next character in a string. This example uses Python and TensorFlow/Keras for demonstration.

First, data preprocessing is crucial. Start with a text corpus (e.g., a short sentence or phrase). Create a character-to-index mapping and its inverse, an index-to-character mapping. This lets you convert text into numerical representations that the network can process.

Next, prepare the sequences. For each character in the input, the goal is to predict the character that follows it, which creates input-output pairs for training. For example, if the input is “hello”, the pairs are (“h”, “e”), (“e”, “l”), (“l”, “l”), and (“l”, “o”).

Then encode the data. One-hot encoding represents each character as a binary vector containing a single 1, which avoids implying any numeric ordering between characters. It is the usual format for the targets when training with categorical cross-entropy. If the model starts with an Embedding layer, as in the architecture below, feed the inputs as integer indices instead, because the Embedding layer performs the lookup itself.
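The preprocessing steps above can be sketched in plain NumPy for the “hello” example. The variable names are illustrative. Sorting the characters is an assumption made only so that the mapping is deterministic. Inputs are kept as integer indices (suitable for an Embedding layer), and only the targets are one-hot encoded.

```python
import numpy as np

text = "hello"  # toy corpus from the example above

# Character <-> index mappings (sorted so the mapping is deterministic).
chars = sorted(set(text))
char_to_idx = {c: i for i, c in enumerate(chars)}
idx_to_char = {i: c for c, i in char_to_idx.items()}

# Pair each character with the character that follows it.
pairs = [(text[i], text[i + 1]) for i in range(len(text) - 1)]

# Integer-encode inputs and targets.
x_idx = np.array([char_to_idx[a] for a, _ in pairs])
y_idx = np.array([char_to_idx[b] for _, b in pairs])

# One-hot encode the targets: row i has a single 1 in column y_idx[i].
vocab_size = len(chars)
y_onehot = np.eye(vocab_size)[y_idx]
```

Here `chars` is `['e', 'h', 'l', 'o']`, so `x_idx` is `[1, 0, 2, 2]` and `y_idx` is `[0, 2, 2, 3]`. If you train with sparse categorical cross-entropy, `y_idx` can be used directly and the one-hot step is unnecessary.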

Next, define the model architecture. A simple model might consist of an Embedding layer, an LSTM or GRU layer, and a Dense output layer. The Embedding layer maps each character index to a learned dense vector, which is more compact than a one-hot vector and can capture similarities between characters. The LSTM or GRU layer learns the temporal dependencies in the sequence; choose an appropriate number of units for this layer. A Dense layer with a softmax activation outputs a probability distribution over the possible next characters.

Compile the model with a suitable optimizer (e.g., Adam), a loss function (e.g., categorical cross-entropy for one-hot targets, or sparse categorical cross-entropy for integer targets), and metrics (e.g., accuracy). Then train the model on the prepared data. Adjust the batch size and number of epochs to the dataset size and model complexity, and monitor the loss and accuracy on a validation set during training.

Finally, evaluate the trained model on unseen data. To generate text, give the model an initial sequence and let it predict the next character. Append that character to the sequence and repeat to produce longer sequences. Judge the quality of the generated text by inspecting the output or with quantitative metrics such as perplexity.
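A full TensorFlow/Keras setup is not always at hand, so the following NumPy sketch mirrors the architecture and the generation loop described above instead of using the Keras API. It chains an embedding lookup, a basic tanh recurrent layer (standing in for the LSTM or GRU), and a dense softmax output. The weights are random and untrained, with hypothetical sizes, so the generated text is meaningless. What the sketch shows is the data flow and the predict-append-repeat loop. The names `next_char_probs` and `generate` are illustrative, not a library API.

```python
import numpy as np

rng = np.random.default_rng(42)
chars = sorted(set("hello"))
char_to_idx = {c: i for i, c in enumerate(chars)}
idx_to_char = {i: c for c, i in char_to_idx.items()}
vocab_size, embed_dim, hidden_dim = len(chars), 8, 16  # hypothetical sizes

# Untrained, randomly initialised parameters.
E = rng.normal(scale=0.1, size=(vocab_size, embed_dim))     # embedding table
W_x = rng.normal(scale=0.1, size=(hidden_dim, embed_dim))   # input-to-hidden
W_h = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))  # hidden-to-hidden
b_h = np.zeros(hidden_dim)
W_y = rng.normal(scale=0.1, size=(vocab_size, hidden_dim))  # dense output layer
b_y = np.zeros(vocab_size)

def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def next_char_probs(prefix):
    """Embedding lookup -> tanh RNN over the prefix -> dense softmax."""
    h = np.zeros(hidden_dim)
    for c in prefix:
        x = E[char_to_idx[c]]  # an embedding lookup is just row indexing
        h = np.tanh(W_x @ x + W_h @ h + b_h)
    return softmax(W_y @ h + b_y)

def generate(seed, n_chars):
    """Greedy generation: predict, append the most likely char, repeat."""
    out = seed
    for _ in range(n_chars):
        probs = next_char_probs(out)
        out += idx_to_char[int(np.argmax(probs))]
    return out

sample = generate("he", 5)
```

For simplicity, `next_char_probs` re-reads the whole prefix at every step. A practical implementation would carry the hidden state forward rather than recompute it. Sampling from `probs` instead of taking the argmax is a common alternative that produces more varied text.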

Vanishing and Exploding Gradients: The Challenges of Training Deep RNNs

Training RNNs on long sequences presents significant challenges, most notably the vanishing and exploding gradient problems. These issues arise from the multiplicative nature of gradient calculations during backpropagation through time (BPTT). The gradient that reaches an early time step is a product of one factor per intervening step, and each factor involves the recurrent weight matrix and the derivative of the activation function. As gradients are propagated back through many time steps, they can therefore shrink exponentially towards zero (vanishing gradients) or grow exponentially large (exploding gradients).

Vanishing gradients are particularly harmful because the error signal from distant time steps effectively disappears. An RNN shares the same weights across all time steps, so the weights still receive updates. However, those updates are dominated by nearby time steps, which leaves the network unable to learn long-range dependencies in the sequential data.

Conversely, exploding gradients lead to unstable training. Large gradients cause drastic updates to the network’s weights, resulting in oscillations or divergence, so the network struggles to converge to a stable solution. Both problems are exacerbated in longer sequences, where the gradients must traverse more time steps. Consider an RNN trained on a very long text document. Vanishing gradients might prevent it from learning the relationships between words that are far apart, which severely limits its ability to use distant context and generate coherent text.
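The exponential behaviour can be seen numerically with a deliberately simplified model. The sketch below is a linear recurrence h_t = W h_{t-1} with no nonlinearity, where W = scale · Q for a random orthogonal matrix Q. Each backward step multiplies the gradient by W transposed, so its norm changes by exactly `scale` per step. In a real tanh RNN the activation derivative (at most 1) adds further shrinkage. The function name and parameters are illustrative.

```python
import numpy as np

def backprop_norms(scale, steps=50, dim=8, seed=0):
    """Push a unit gradient vector back through `steps` linear recurrent steps.

    Simplification: h_t = W h_{t-1} with W = scale * Q, Q orthogonal, and no
    nonlinearity, so each backward step scales the gradient norm by `scale`.
    """
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))  # random orthogonal matrix
    W = scale * Q
    grad = rng.normal(size=dim)
    grad /= np.linalg.norm(grad)  # start with unit norm
    norms = []
    for _ in range(steps):
        grad = W.T @ grad  # one step of backpropagation through time
        norms.append(np.linalg.norm(grad))
    return norms

vanishing = backprop_norms(0.9)  # norm after 50 steps: 0.9**50, about 0.005
exploding = backprop_norms(1.1)  # norm after 50 steps: 1.1**50, about 117
```

A per-step factor only slightly away from 1 already changes the gradient by more than two orders of magnitude over 50 steps.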

To mitigate exploding gradients, a technique called gradient clipping is commonly used. Gradient clipping sets a threshold on the magnitude of the gradients. If the gradient norm exceeds the threshold, the gradient is rescaled so that its norm equals the threshold. This preserves the gradient’s direction while preventing the drastic weight updates that destabilize training. A simpler variant clips each gradient component to a fixed range. Gradient clipping does not, however, solve the vanishing gradient problem. Gated architectures such as LSTMs and GRUs were designed for that. They introduce mechanisms that let information, and therefore gradients, flow more easily across many time steps, which greatly reduces the vanishing gradient problem on long sequences.
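Here is a minimal sketch of clipping by global norm, where all gradient arrays are treated as one long vector. It assumes that style of clipping; the function name is illustrative. The example gradients have a combined norm of 13, and they are rescaled to norm 1 without changing their direction.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm <= max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    if total_norm <= max_norm:
        return grads, total_norm
    scale = max_norm / total_norm  # same factor for every array: direction kept
    return [g * scale for g in grads], total_norm

grads = [np.array([3.0, 4.0]), np.array([12.0])]  # global norm = 13
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
# clipped == [array([3/13, 4/13]), array([12/13])]
```

In practice you rarely write this yourself. Keras optimizers accept a `global_clipnorm` argument, and PyTorch provides `torch.nn.utils.clip_grad_norm_` for the same purpose.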

Long Short-Term Memory (LSTM): Overcoming the Vanishing Gradient

LSTMs represent a significant advance in RNN architecture, designed specifically to mitigate the vanishing gradient problem that plagues traditional RNNs, especially on long sequences. The vanishing gradient problem arises because gradients shrink exponentially as they are propagated back through time. This makes it difficult for a basic RNN to learn long-range dependencies in the data. LSTMs address the issue with a gating mechanism that controls the flow of information within the network.

At the heart of an LSTM network is the LSTM cell, a specialized unit that replaces the simple tanh update of a standard RNN. The LSTM cell has several key components: the cell state, the forget gate, the input gate, and the output gate.

The cell state acts as a “memory” that can preserve information over long periods, because it is updated additively rather than being repeatedly squashed through an activation function. Each gate is a small learned layer with a sigmoid activation that outputs values between 0 and 1, and these values act as multiplicative filters on the flow of information:

- The forget gate determines what information to discard from the cell state, allowing the network to forget irrelevant past information.
- The input gate decides how much of a new candidate value, computed by a tanh layer, to write into the cell state.
- The output gate controls which parts of the cell state are exposed as the hidden state, and therefore as the output of the LSTM cell, at the current time step.
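One LSTM time step can be written in a few lines of NumPy. This is a sketch of the standard formulation. Stacking all four transforms into one matrix `W`, in the order forget, input, candidate, output, is an arbitrary choice made here for compactness, and libraries may order or split them differently. The names `lstm_step`, `W`, and `b` are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, hidden + input) and stacks the forget, input,
    candidate and output transforms, in that (arbitrary) order.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x]) + b
    f = sigmoid(z[0:hidden])               # forget gate: what to drop from c
    i = sigmoid(z[hidden:2 * hidden])      # input gate: how much new info to write
    g = np.tanh(z[2 * hidden:3 * hidden])  # candidate values to write
    o = sigmoid(z[3 * hidden:4 * hidden])  # output gate: what to expose as h
    c = f * c_prev + i * g                 # additive cell-state update
    h = o * np.tanh(c)
    return h, c

rng = np.random.default_rng(0)
input_dim, hidden = 3, 5
W = rng.normal(scale=0.3, size=(4 * hidden, hidden + input_dim))
b = np.zeros(4 * hidden)
h, c = np.zeros(hidden), np.zeros(hidden)
for x in rng.normal(size=(6, input_dim)):
    h, c = lstm_step(x, h, c, W, b)
```

The line `c = f * c_prev + i * g` is the key design choice. When the forget gate is near 1 and the input gate near 0, the cell state passes through almost unchanged, which is what lets information (and gradients) survive many time steps.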

The interplay of these gates allows LSTMs to selectively remember and forget information over long sequences, which substantially mitigates the vanishing gradient problem. By controlling the flow of information, LSTMs can learn long-range dependencies in sequential data that are very difficult for basic RNNs to learn. This ability to keep relevant information over long periods makes LSTMs well suited to a wide range of applications, including natural language processing, speech recognition, and time series forecasting.

Gated Recurrent Units (GRU): A Simplified Alternative to LSTM

Gated Recurrent Units (GRUs) are a streamlined alternative to Long Short-Term Memory (LSTM) networks. They often achieve comparable performance with fewer parameters. Like LSTMs, GRUs are designed to mitigate the vanishing gradient problem that arises when training RNNs, especially on long sequences, which makes them a useful tool for a variety of sequence modeling tasks.

A GRU cell is built around two gates: the update gate and the reset gate.

- The update gate governs how much of the previous hidden state is carried over into the new hidden state and how much is replaced by a newly computed candidate state. In effect, it determines how much past information to retain.
- The reset gate controls how much of the previous hidden state is used when computing that candidate. When the reset gate is close to zero, the candidate ignores the previous state, which lets the network effectively “reset” its memory and focus on new, relevant information.

Working together, the two gates enable GRUs to capture long-range dependencies in sequential data.
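Below is a minimal NumPy sketch of one GRU step in the widely used formulation where the reset gate is applied to the previous state before the candidate is computed. Its update rule is h = z · h_prev + (1 − z) · candidate, with z the update gate. Conventions differ between papers and libraries: some swap the roles of z and 1 − z, and some apply the reset gate after the matrix product. Treat the exact form as an assumption. The parameter names are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, p):
    """One GRU time step; p is a dict of weight matrices and biases."""
    z = sigmoid(p["Wz"] @ x + p["Uz"] @ h_prev + p["bz"])  # update gate
    r = sigmoid(p["Wr"] @ x + p["Ur"] @ h_prev + p["br"])  # reset gate
    # Candidate state: the reset gate scales how much of h_prev is used here.
    n = np.tanh(p["Wn"] @ x + p["Un"] @ (r * h_prev) + p["bn"])
    # Update gate interpolates between keeping h_prev and taking the candidate.
    return z * h_prev + (1.0 - z) * n

def init_gru(input_dim, hidden, rng):
    """Random weights and zero biases for the update, reset, and candidate parts."""
    p = {}
    for part in ("z", "r", "n"):
        p["W" + part] = rng.normal(scale=0.3, size=(hidden, input_dim))
        p["U" + part] = rng.normal(scale=0.3, size=(hidden, hidden))
        p["b" + part] = np.zeros(hidden)
    return p

rng = np.random.default_rng(1)
params = init_gru(input_dim=3, hidden=4, rng=rng)
h = np.zeros(4)
for x in rng.normal(size=(5, 3)):
    h = gru_step(x, h, params)
```

Unlike the LSTM, there is no separate cell state. The hidden state itself plays the role of memory, and the update gate decides how much of it survives each step.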

Compared with LSTMs, GRUs have a simpler structure that makes them computationally cheaper. LSTMs use three gates (input, output, and forget) plus a separate cell state, while GRUs use two gates and modify the hidden state directly. This simplification often translates into faster training and lower memory requirements. The best choice, however, depends on the application. LSTMs, with their more complex architecture, may be better suited to tasks that benefit from finer-grained control over memory. GRUs often perform comparably and are a good choice when computational resources are limited or a simpler model is preferred. Both architectures improve on basic RNNs: they handle sequential data better and mitigate the vanishing gradient problem.
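The “fewer parameters” claim can be made concrete with a small calculation. The count assumes the basic formulation, in which each gate or candidate transform has one input weight matrix, one recurrent weight matrix, and one bias vector. An LSTM has four such transforms (three gates plus the candidate) and a GRU has three (two gates plus the candidate). Some library implementations add an extra bias vector per transform, so their reported counts can be slightly higher. The sizes below are hypothetical.

```python
def rnn_param_count(input_dim, hidden, n_blocks):
    """Parameters of n_blocks gate/candidate transforms, one bias vector each."""
    per_block = hidden * (input_dim + hidden) + hidden  # W, U and b for one block
    return n_blocks * per_block

def lstm_params(input_dim, hidden):
    return rnn_param_count(input_dim, hidden, 4)  # forget, input, output + candidate

def gru_params(input_dim, hidden):
    return rnn_param_count(input_dim, hidden, 3)  # update, reset + candidate

lstm = lstm_params(input_dim=32, hidden=128)  # 82432
gru = gru_params(input_dim=32, hidden=128)    # 61824
```

Under this formulation a GRU always has exactly three quarters of the parameters of an LSTM with the same input and hidden sizes.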

Applications of Recurrent Nets: From Text Generation to Financial Forecasting

Recurrent neural networks (RNNs), along with variants such as LSTMs and GRUs, have had a major impact on many fields by effectively processing sequential data. Their ability to maintain a “memory” of past inputs makes them well suited to tasks where context and order are crucial. Their versatility shows in a wide range of applications, each of which draws on the particular strengths of these architectures.

One prominent area is Natural Language Processing (NLP). RNNs can be trained for text generation, producing contextually relevant text that mimics the writing style of their training data or even tells new stories. Machine translation has benefited significantly from RNNs, which convert text from one language to another by modeling the sequential dependencies between words. Sentiment analysis, another NLP application, uses RNN models to determine the emotional tone of a piece of text, providing useful insights for businesses and researchers alike. Speech recognition has also relied heavily on RNNs to transcribe spoken language into text, a complex task that requires processing sequential audio data. Here the models learn to identify patterns in the audio signal and map them to spoken words.

Beyond NLP, recurrent nets are widely used in time series forecasting. In financial markets, RNNs can be applied to historical stock prices and other financial indicators to forecast future values. Similarly, in meteorology, they can process historical weather data to forecast future conditions. Video analysis is another domain where RNNs are useful, for example in action recognition, which identifies and classifies human actions within video sequences. By processing the frames of a video in order, RNN models can learn to recognize complex activities. These are just a few examples of real-world applications of RNNs, LSTMs, and GRUs. Their ability to handle sequential data makes them powerful tools for complex problems in diverse fields, and continued refinement of recurrent architectures promises further applications.
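For forecasting tasks like these, a common first step is to turn one long series into many (past window, future value) training pairs. The sketch below does that with NumPy. The function name, window length, and horizon are illustrative assumptions. The trailing feature axis follows the (samples, time steps, features) input layout that Keras recurrent layers expect by default. The toy series is a stand-in, not real data.

```python
import numpy as np

def make_windows(series, window, horizon=1):
    """Turn a 1-D series into (input window, future value) training pairs.

    Each sample uses `window` consecutive values to predict the value
    `horizon` steps after the end of the window.
    """
    X, y = [], []
    for start in range(len(series) - window - horizon + 1):
        X.append(series[start:start + window])
        y.append(series[start + window + horizon - 1])
    # Add a trailing feature axis: (samples, time steps, features).
    return np.array(X)[..., np.newaxis], np.array(y)

series = np.arange(10, dtype=float)  # stand-in for e.g. daily closing prices
X, y = make_windows(series, window=3)
# X[0, :, 0] -> [0., 1., 2.], y[0] -> 3.0; X.shape -> (7, 3, 1)
```

When splitting these pairs into training and validation sets, keep them in time order so that the model is always validated on data that comes after its training data.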

Evaluating RNN Performance and Tuning Hyperparameters

Evaluating an RNN model requires careful consideration of the specific task, since different metrics show how well the model is learning and generalizing. For language modeling, perplexity is a commonly used metric. It measures how well the model predicts a sample of text and is computed as the exponential of the average negative log-likelihood that the model assigns to each actual next token. Lower perplexity indicates better performance. For classification tasks, accuracy, precision, recall, and F1-score are all relevant metrics that assess the model’s ability to assign sequences to the correct categories. The choice among them depends on the goals of the task and the relative cost of different types of errors. It is also crucial to use appropriate evaluation datasets and techniques, such as cross-validation, so that the results are reliable and representative. For time series, the splits should respect time order (train on the past, validate on the future) so that information from the future does not leak into training.
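Perplexity is straightforward to compute once you have the probability the model assigned to each token that actually occurred next. The sketch below uses made-up probabilities purely for illustration, and the function name is our own.

```python
import numpy as np

def perplexity(true_token_probs):
    """exp of the average negative log-likelihood of the observed tokens."""
    p = np.asarray(true_token_probs, dtype=float)
    return float(np.exp(-np.mean(np.log(p))))

# Probabilities a model assigned to the character that actually came next.
uniform_over_4 = perplexity([0.25, 0.25, 0.25, 0.25])  # 4.0
confident = perplexity([0.9, 0.8, 0.95, 0.7])          # about 1.2
```

A model that guesses uniformly over a vocabulary of size V has perplexity V, and a model that always assigns probability 1 to the correct token has perplexity 1. This makes the number easy to interpret as an “effective number of choices” per step.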

Tuning hyperparameters is essential for getting good performance from an RNN, because several key hyperparameters significantly affect the model’s ability to learn and generalize:

- The learning rate controls the step size during optimization. A learning rate that is too high can make training unstable, while one that is too low leads to slow convergence.
- The number of stacked recurrent layers determines the model’s capacity to learn complex patterns. More layers can capture more intricate relationships, but they also increase the risk of overfitting.
- The hidden state size determines how much information the network can carry from one time step to the next. A larger hidden state increases capacity, again at some risk of overfitting.
- Regularization techniques help prevent overfitting. L2 regularization adds a penalty on the size of the weights to the loss, while dropout randomly zeroes a fraction of activations during training.

Techniques like cross-validation can help identify the best hyperparameter values.

Optimizing an RNN usually involves experimenting with different hyperparameter settings to find the combination that performs best on the validation data. Manual search, grid search, and random search are common techniques for hyperparameter optimization. Bayesian optimization and other more advanced methods can automate the search and often find good settings more efficiently. By carefully evaluating model performance and tuning hyperparameters, practitioners can build RNN models that perform well on a wide range of sequence modeling tasks.
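Random search, one of the techniques just mentioned, fits in a few lines. In this sketch, `validation_loss` is a HYPOTHETICAL stand-in. In real use it would train an RNN with the given settings and return its loss on held-out validation data; here it is a toy function so that the example runs instantly. The search ranges are illustrative assumptions. The learning rate is sampled on a log scale because its useful values span several orders of magnitude.

```python
import math
import random

def validation_loss(params):
    """HYPOTHETICAL stand-in for "train the RNN with params, return validation loss".

    This toy function is simply smallest near lr = 1e-3 and hidden_size = 128.
    """
    lr, hidden = params["learning_rate"], params["hidden_size"]
    return (math.log10(lr) + 3) ** 2 + abs(hidden - 128) / 128

def sample_params(rng):
    return {
        "learning_rate": 10 ** rng.uniform(-5, -1),  # log-uniform in [1e-5, 1e-1]
        "hidden_size": rng.choice([32, 64, 128, 256]),
    }

def random_search(n_trials, seed=0):
    """Evaluate n_trials random configurations and keep the best one."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = sample_params(rng)
        loss = validation_loss(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

best_params, best_loss = random_search(n_trials=50)
```

Each trial is independent, so random search parallelizes trivially. Grid search would replace `sample_params` with an exhaustive loop over fixed values, and Bayesian optimization would choose the next configuration based on the results of earlier trials.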