Understanding Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are a class of neural networks designed to process sequential data. Unlike traditional feedforward networks, an RNN maintains an internal “memory” that carries information about past inputs and influences how it processes the current input. Think of reading a sentence: you understand each word in light of the words that came before it. RNNs work similarly, using past context to interpret the present. This memory is what makes them suited to text, speech, and time series, where the order of elements is essential to the overall pattern or meaning.

Sequential data poses a challenge for traditional feedforward networks: if each data point is processed independently, the temporal dependencies between points are lost. RNNs address this with recurrent connections, loops that feed information from one time step to the next. Through these loops, an RNN preserves information from previous steps and builds a context-aware representation of the sequence, so the model learns dependencies between elements rather than isolated occurrences. In natural language processing, for example, an RNN can learn grammatical structures and contextual relationships between words. This ability to capture temporal dependencies is what distinguishes RNNs from other architectures and lets them model patterns that order-agnostic models miss.
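To make the point about order concrete, here is a minimal pure-Python sketch (not a library API): a one-unit RNN whose weights `w_x` and `w_h` are arbitrary illustrative values rather than trained ones. A plain sum, which treats each element independently, gives the same result for a sequence and its reordering; the recurrent state does not.

```python
import math

def rnn_final_state(xs, w_x=0.5, w_h=0.9):
    """Run a one-unit RNN over xs and return the final hidden state.

    w_x and w_h are arbitrary illustrative weights, not trained values.
    """
    h = 0.0  # empty "memory" before the first input
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)  # mix current input with previous state
    return h

seq = [1.0, 0.0, -1.0]
reordered = [-1.0, 0.0, 1.0]

# Treating elements independently (here: summing them) loses the order...
print(sum(seq) == sum(reordered))  # True
# ...but the recurrent state depends on which input came when.
print(rnn_final_state(seq) != rnn_final_state(reordered))  # True
```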

Many applications benefit from this ability to remember past inputs. To predict the next word in a sentence, an RNN uses the words it has already seen; to forecast a time series, it uses historical values. Applications range from language translation and speech recognition to financial market prediction and anomaly detection in sensor data. In each case the key is learning and exploiting temporal dependencies within sequential data, which is why the RNN became a fundamental architecture in deep learning.

How RNNs Differ from Traditional Neural Networks

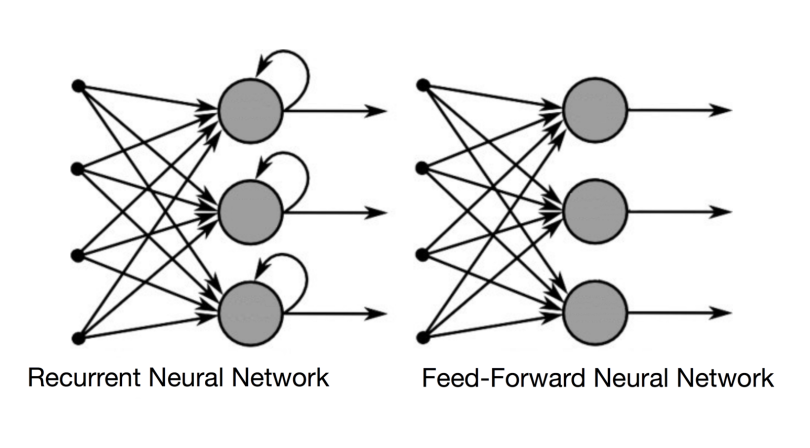

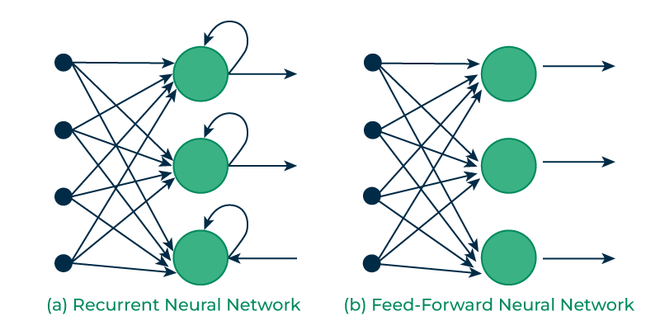

Recurrent neural networks (RNNs) differ from traditional feedforward neural networks in their architecture. A feedforward network processes data in one direction, from input to output, without any loops. An RNN adds a loop that lets information persist from one step to the next, giving the network a form of “memory” for processing sequential data. This memory is a hidden state vector that is updated at each time step and carries information from earlier steps into the computation of the current output. These loops, and the ability they provide to retain and reuse information from past inputs, let RNNs handle sequential data in ways a feedforward network cannot.
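For a standard (Elman-style) RNN, the hidden-state update is commonly written as follows, where x_t is the input at step t, h_t the hidden state, and y_t the output. The symbol names are our notation, and tanh is the usual but not the only choice of activation. The same weight matrices are reused at every time step.

```latex
\begin{aligned}
h_t &= \tanh\left(W_{xh}\,x_t + W_{hh}\,h_{t-1} + b_h\right) \\
y_t &= W_{hy}\,h_t + b_y
\end{aligned}
```

For classification tasks such as next-word prediction, y_t is typically passed through a softmax to obtain probabilities.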

A diagram makes the difference clear. A feedforward network is drawn as a straight chain, with data flowing in one direction. An RNN is drawn with a loop: the hidden state computed at one time step is fed back into the network alongside the next input. (Some variants, such as Jordan networks, feed back the output instead, but the hidden state is the standard recurrent path.) Unrolled over time, the loop becomes a chain of copies of the same cell that share weights. Each time step updates the hidden state, incorporating the new input while retaining relevant information from earlier steps, so the hidden state acts as a running summary of the sequence so far. This feedback mechanism is the defining characteristic of RNN architectures and is what lets them capture dependencies and patterns within sequential data.

The hidden state is the key element of an RNN’s memory. It represents the network’s “understanding” of the sequence up to a given point, is updated at each time step, and determines the network’s output. In natural language processing, for instance, the hidden state after the current word can encode the sentence’s grammatical structure and meaning so far, which helps the network predict the next word from the context established by previous words. This internal memory is the critical distinction between RNNs and other network types, and it is central to their success on sequential data.
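The sketch below, in plain Python with illustrative names (`rnn_forward`, `W_xh`, `W_hh`, `W_hy` are our own, not from a library), runs the update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h) over a short sequence and reads a next-token probability distribution from the final hidden state. Random weights stand in for trained ones, so the probabilities themselves are meaningless; the point is how the state flows from step to step.

```python
import math
import random

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Return the hidden state after every time step (h_0 is all zeros)."""
    h = [0.0] * len(b_h)
    states = []
    for x in xs:
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]  # new state depends on input AND old state
        states.append(h)
    return states

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
input_size, hidden_size, vocab_size = 3, 4, 5
W_xh = rand_matrix(hidden_size, input_size)
W_hh = rand_matrix(hidden_size, hidden_size)  # reused at every step
W_hy = rand_matrix(vocab_size, hidden_size)
b_h = [0.0] * hidden_size

# Three one-hot "words"; states[-1] summarizes the whole sequence.
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h)
next_token_probs = softmax(matvec(W_hy, states[-1]))
print(len(states), len(states[-1]))  # 3 4
```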

Types of Recurrent Neural Networks

Recurrent neural networks (RNNs) come in several architectures, each designed to address specific challenges in processing sequential data. Standard (“vanilla”) RNNs are conceptually simple but often suffer from vanishing or exploding gradients during training. When gradients vanish, the learning signal from early time steps becomes too weak to update the weights, so the network struggles to learn long-range dependencies. When gradients explode, updates become very large, making training unstable and results unreliable. More sophisticated RNN architectures were developed to overcome these limitations.
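A little arithmetic shows why long sequences cause trouble. In a deliberately simplified model, a single hidden unit with a linear recurrence h_t = w * h_{t-1} + (input terms), the gradient of h_T with respect to h_0 is w multiplied by itself T times. A nonlinearity such as tanh multiplies in a derivative of at most 1 at each step, which can only shrink the product further. The function name below is our own.

```python
def gradient_scale(w, steps):
    """d h_T / d h_0 for the linear one-unit recurrence h_t = w * h_{t-1} + ...

    Each backward step through time multiplies the gradient by w once.
    """
    scale = 1.0
    for _ in range(steps):
        scale *= w
    return scale

for w in (0.9, 1.0, 1.1):
    print(f"w = {w}: gradient scaled by {gradient_scale(w, 50):.4g} after 50 steps")
```

Over 50 steps, w = 0.9 scales the gradient by roughly 0.005, while w = 1.1 scales it by roughly 117: a small deviation from 1 either starves early time steps of learning signal or blows the update up.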

Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) are the most prominent of these advanced architectures. LSTMs mitigate the vanishing gradient problem with a gating mechanism that regulates the flow of information through a separate cell state, allowing the network to selectively remember or forget information over time. This makes LSTMs well suited to tasks that require retaining information over long sequences. GRUs use a simpler gating scheme and also typically perform better than standard RNNs. They have fewer parameters than LSTMs, making them computationally cheaper, although they may not always capture long-range dependencies as effectively. The choice between the two depends on the length of the sequences, the complexity of the relationships between data points, and the available computational resources: GRUs are often preferred for shorter sequences and resource-constrained environments, while LSTMs are often more effective for longer sequences or when long-range dependencies must be captured precisely.
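For reference, one standard formulation of the LSTM cell (with a forget gate) is shown below, using the same naming style as a basic RNN update; σ is the logistic sigmoid and ⊙ denotes element-wise multiplication. Notation varies between papers and libraries.

```latex
\begin{aligned}
f_t &= \sigma\left(W_{xf}\,x_t + W_{hf}\,h_{t-1} + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_{xi}\,x_t + W_{hi}\,h_{t-1} + b_i\right) && \text{input gate} \\
o_t &= \sigma\left(W_{xo}\,x_t + W_{ho}\,h_{t-1} + b_o\right) && \text{output gate} \\
\tilde{c}_t &= \tanh\left(W_{xc}\,x_t + W_{hc}\,h_{t-1} + b_c\right) && \text{candidate cell state} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

Because c_t is updated additively, gated by f_t, information can pass through many steps without being repeatedly squashed. A GRU instead uses two gates (update and reset) plus a candidate state and no separate cell state, so it has three weight blocks to the LSTM’s four.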

Choosing the right architecture is a critical step in building a successful sequence model, and the choice depends heavily on the application and the nature of the sequential data. Because vanishing and exploding gradients limit how well a network learns from long sequences, the ability to cope with them is a key factor; LSTMs and GRUs often mitigate these problems. Understanding the strengths and weaknesses of each type supports an informed decision, but in practice, experimentation and comparison on validation data are essential for finding the most suitable architecture for a given task. Research on recurrent architectures continues to improve performance and efficiency, so it pays to keep up with new developments.

How to Build and Train an RNN Network

Building and training an RNN involves several key steps. First, prepare the data. Text must be converted into numerical form, typically by tokenizing it and then representing tokens as one-hot vectors or learned embeddings. Time series data often needs normalization or standardization; compute the scaling statistics on the training split only, so no information leaks from the validation or test data. The right preprocessing depends on the dataset and the architecture. Finally, split the data into training, validation, and test sets for reliable evaluation; for time series, split chronologically rather than randomly, so the model is always evaluated on data that comes after what it was trained on.
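A minimal sketch of these steps in plain Python, assuming whitespace tokenization, a toy two-sentence corpus, and a synthetic series; `build_vocab`, `encode`, `chronological_split`, and `standardize` are illustrative helpers, not library functions. Real pipelines usually rely on a framework tokenizer and an embedding layer rather than one-hot vectors.

```python
def build_vocab(texts):
    """Assign an integer id to each distinct lowercase, whitespace-separated token."""
    vocab = {}
    for text in texts:
        for token in text.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab

def encode(text, vocab):
    """Turn a sentence into one one-hot vector per token (one per time step)."""
    vectors = []
    for token in text.lower().split():
        vec = [0] * len(vocab)
        vec[vocab[token]] = 1
        vectors.append(vec)
    return vectors

def chronological_split(series, train_frac=0.7, val_frac=0.15):
    """Split a time series in order (no shuffling): train, then validation, then test."""
    n_train = round(len(series) * train_frac)
    n_val = round(len(series) * val_frac)
    return series[:n_train], series[n_train:n_train + n_val], series[n_train + n_val:]

def standardize(train, *others):
    """Z-score all splits using the mean and std of the training split only."""
    mean = sum(train) / len(train)
    std = (sum((x - mean) ** 2 for x in train) / len(train)) ** 0.5 or 1.0  # avoid /0

    def scale(xs):
        return [(x - mean) / std for x in xs]

    return [scale(train)] + [scale(xs) for xs in others]

vocab = build_vocab(["the cat sat", "the dog sat"])
print(vocab)                         # {'the': 0, 'cat': 1, 'sat': 2, 'dog': 3}
print(encode("the dog sat", vocab))  # [[1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]

series = [float(v) for v in range(20)]  # synthetic, steadily increasing values
train, val, test = chronological_split(series)
train_s, val_s, test_s = standardize(train, val, test)
```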

Next, define the architecture. Frameworks such as TensorFlow and PyTorch provide ready-made recurrent layers. You specify the number of layers, the type of RNN cell (e.g., LSTM or GRU), the number of units per layer, and the input and output dimensions. Consider the length of the input sequences and the complexity of the problem, and expect to experiment before settling on a design, since a well-chosen architecture is crucial for effective learning and accurate predictions. The choice of activation functions for the hidden layers can also significantly affect performance.
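One concrete consequence of these choices is model size. The helper below (our own function, not a framework API) counts the weights and biases in a stack of recurrent layers, assuming one bias vector per weight block: a vanilla RNN cell has one block, a GRU three, and an LSTM four. Some libraries, PyTorch among them, keep two bias vectors per block, so their reported counts are slightly higher.

```python
# Weight blocks per cell: vanilla RNN 1, GRU 3 (update, reset, candidate),
# LSTM 4 (input, forget, output, candidate).
GATE_BLOCKS = {"rnn": 1, "gru": 3, "lstm": 4}

def recurrent_params(cell, input_size, hidden_size, num_layers=1):
    """Count weights and biases in a stack of recurrent layers.

    Assumes one bias vector per weight block (libraries that keep two,
    such as PyTorch, report gates * hidden_size more per layer).
    """
    gates = GATE_BLOCKS[cell]
    total = 0
    layer_input = input_size
    for _ in range(num_layers):
        # Each block: input-to-hidden matrix, hidden-to-hidden matrix, bias.
        total += gates * (hidden_size * layer_input + hidden_size * hidden_size + hidden_size)
        layer_input = hidden_size  # upper layers read the hidden states of the layer below
    return total

for cell in ("rnn", "gru", "lstm"):
    print(cell, recurrent_params(cell, input_size=32, hidden_size=64, num_layers=2))
# rnn 14464
# gru 43392
# lstm 57856
```

With identical layer sizes, a GRU needs three quarters of an LSTM’s parameters, which is the source of its computational savings.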

After defining the architecture, choose a loss function and an optimization algorithm. Mean squared error (MSE) is common for regression tasks such as forecasting, and categorical cross-entropy for classification. Optimizers such as Adam or RMSprop are frequently used to update the network’s weights, and the learning rate is a crucial hyperparameter that needs careful tuning. Monitor training closely, tracking training loss alongside a validation metric (validation loss, or validation accuracy for classification). Early stopping, which halts training once the validation metric stops improving, helps prevent overfitting, as do regularization techniques such as dropout. Experiment with different hyperparameter settings, then evaluate the final model with appropriate metrics on the held-out test set to estimate how well it generalizes to unseen data.
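Early stopping is simple enough to sketch without a framework. The class below is a hypothetical helper (Keras ships a comparable `EarlyStopping` callback): it tracks the best validation loss and signals a stop after `patience` epochs without improvement. The validation losses are made up to mimic a model that starts to overfit.

```python
class EarlyStopping:
    """Signal a stop when validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest decrease that counts as improvement
        self.best = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Made-up validation losses: steady improvement, then a plateau as overfitting sets in.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.64]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(stopped_at, stopper.best_epoch, stopper.best)  # 5 2 0.6
```

In a real training loop you would also save the weights at the best epoch and restore them after stopping.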

Applications of RNNs in Real-World Scenarios

Recurrent neural networks (RNNs) have had a major impact on fields that involve sequential data. In natural language processing (NLP), they have been widely used for machine translation: an encoder RNN reads the source-language sequence and a decoder RNN generates the target-language equivalent. Sentiment analysis, which determines the emotional tone of a text, benefits from the RNN’s ability to use context across words. RNNs have also powered text-generation models for applications such as chatbots and creative-writing tools. Their ability to capture temporal dependencies makes them well suited to all of these tasks.

Beyond NLP, RNNs are widely used in time series analysis. In stock price prediction, a notoriously difficult task, RNNs are used to identify patterns and trends in historical data. Weather forecasting likewise applies RNNs to sequences of meteorological measurements. These applications show the RNN’s capacity to model dynamic systems and extract useful information from time-dependent data, although forecast quality depends heavily on the data and the problem.
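Forecasting tasks like these are commonly framed as supervised learning by sliding a fixed-length window over the history: each window becomes one input sequence for the RNN, and the value that follows it becomes the target. The sketch below assumes a univariate series; `make_windows`, the window length, and the price values are illustrative, not real data.

```python
def make_windows(series, window, horizon=1):
    """Turn a 1-D series into (input window, target) pairs for forecasting.

    Each input is `window` consecutive values; the target is the value
    `horizon` steps after the window ends.
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs

prices = [10.0, 10.5, 10.2, 10.8, 11.0, 10.9]  # made-up values
for inputs, target in make_windows(prices, window=3):
    print(inputs, "->", target)
# [10.0, 10.5, 10.2] -> 10.8
# [10.5, 10.2, 10.8] -> 11.0
# [10.2, 10.8, 11.0] -> 10.9
```

The window length is a hyperparameter: longer windows give the model more history per example but yield fewer training pairs from the same series.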

Speech recognition is another domain shaped by RNNs. These networks process audio as a sequence of acoustic features and, by learning the temporal relationships between those features, transcribe spoken language into text. This technology underpins virtual assistants and voice-to-text software, improving accessibility and efficiency. Continued refinement of recurrent architectures has contributed to steady gains in the accuracy and robustness of speech recognition systems.

Advanced RNN Architectures and Techniques

The basic RNN architecture can be enhanced significantly through several advanced techniques. Bidirectional RNNs, for instance, process a sequence in both the forward and backward directions using two separate recurrent layers, so the representation at each position reflects both past and future context. This improves performance in tasks like machine translation, where understanding the entire sentence is crucial. Because the backward pass needs the whole sequence, bidirectional RNNs suit tasks where the complete input is available up front, not real-time prediction where future inputs are not yet known.
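A minimal sketch of the bidirectional idea using one-unit RNNs in plain Python: one pass reads the sequence left to right, a second pass with its own (arbitrary, untrained) weights reads it right to left, and the two states are paired at each position. Real bidirectional layers use vector states and usually concatenate them; the function names are our own.

```python
import math

def scalar_rnn(xs, w_x, w_h):
    """One-unit RNN; returns the hidden state at every step."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

def bidirectional(xs):
    """Pair a forward pass with a backward pass over the reversed sequence.

    The backward states are reversed again, so position t holds a summary of
    x_0..x_t (forward) and of x_t..x_end (backward). Each direction has its
    own illustrative weights.
    """
    forward = scalar_rnn(xs, w_x=0.8, w_h=0.5)
    backward = scalar_rnn(xs[::-1], w_x=-0.3, w_h=0.6)[::-1]
    return list(zip(forward, backward))

out = bidirectional([0.0, 0.0, 1.0])
print(out[0])
```

With the input [0.0, 0.0, 1.0], the forward state at position 0 is exactly 0 because it has not yet seen the 1, while the backward state at position 0 is nonzero because it has.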

Another enhancement is stacking: placing several recurrent layers on top of each other, so that each layer processes the sequence of hidden states produced by the layer below. Stacking provides a hierarchical representation that can capture both low-level and high-level features within the sequential data, and the added capacity allows the network to learn richer, more intricate representations. Stacking does not by itself remove the gradient problems that make long-range dependencies hard to learn, however. Stacked RNNs are a valuable tool in applications that require modeling complex sequential structure.
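A minimal sketch of stacking in plain Python: each one-unit layer (our own helper, with arbitrary untrained weights) consumes the full sequence of hidden states emitted by the layer below and produces a new sequence of the same length. Real stacked RNNs use vector-valued layers, but the data flow is the same.

```python
import math

def rnn_layer(xs, w_x, w_h):
    """One-unit RNN layer: returns the hidden state at every time step."""
    h, outputs = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        outputs.append(h)
    return outputs

def stacked_rnn(xs, layer_weights):
    """Feed each layer's whole output sequence into the next layer."""
    seq = xs
    for w_x, w_h in layer_weights:
        seq = rnn_layer(seq, w_x, w_h)
    return seq

xs = [0.5, -1.0, 2.0, 0.0]
# Three layers with arbitrary illustrative weights.
top = stacked_rnn(xs, [(1.0, 0.5), (0.8, 0.3), (1.2, 0.4)])
print(len(top))  # 4: one top-layer state per time step
```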

Attention mechanisms are a significant advance for RNN-based models. In a basic encoder-decoder RNN, the entire input sequence must be compressed into the encoder’s final hidden state, so information from early parts of a long sequence can be lost. Attention lets the network look back at all of the encoder’s hidden states and weight them by relevance at each output step. This selective focus significantly improves performance, particularly on long sequences; in machine translation, for example, attending to the most relevant source words for each target word improves accuracy. Attention gives RNN models a more nuanced, context-aware approach to sequence modeling.
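A minimal sketch of attention in plain Python, using dot-product scoring (one common choice; the original attention mechanism for RNN translation used an additive score instead). The encoder states and query are made-up vectors; in an encoder-decoder model the query would typically be the decoder’s current hidden state. The function names are our own.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, encoder_states):
    """Dot-product attention over encoder states.

    Returns (weights, context): one weight per input position, and the
    weighted sum of the encoder states.
    """
    weights = softmax([dot(query, h) for h in encoder_states])
    dim = len(encoder_states[0])
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context

encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # one vector per input position
query = [2.0, 0.0]
weights, context = attend(query, encoder_states)
print([round(w, 3) for w in weights])
```

Here the first encoder state, which points in the same direction as the query, receives the largest weight and therefore dominates the context vector.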

Addressing Challenges in RNN Training and Implementation

Training recurrent neural networks (RNNs) presents its own challenges. The most significant is the vanishing or exploding gradient problem. During backpropagation through time, the gradient is multiplied by a factor involving the recurrent weights once per time step, so it can shrink exponentially, hindering learning on long sequences, or grow exponentially, causing instability and divergence. Gated architectures like LSTMs and GRUs address vanishing gradients by regulating the flow of information. Gradient clipping, which limits the magnitude of the gradients, is an effective remedy for exploding gradients. The choice of architecture therefore plays a crucial role in mitigating these issues.
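Gradient clipping by global norm can be sketched in a few lines of plain Python. The function below is our own illustration operating on flat lists; in PyTorch, `torch.nn.utils.clip_grad_norm_` applies the same idea to a model’s parameters.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together when their combined L2 norm exceeds max_norm.

    grads is a list of flat gradient lists, one per parameter tensor. Scaling
    every entry by the same factor keeps the update's direction and only
    shortens it.
    """
    total_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    if total_norm <= max_norm:
        return grads, total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in grad] for grad in grads], total_norm

grads = [[30.0, 40.0], [0.0]]  # made-up "exploding" gradients
clipped, norm = clip_by_global_norm(grads, max_norm=5.0)
print(norm)  # 50.0
print(clipped)
```

Note that clipping only caps large gradients; it does nothing to restore gradients that have already vanished.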

A related challenge is long-range dependencies: RNNs can struggle to connect information from distant time steps, which hurts performance on tasks that require remembering information over long stretches of a sequence. LSTMs and GRUs alleviate this to a degree, but it remains a significant consideration in RNN design. Attention mechanisms, which let the network focus directly on relevant parts of the input sequence, offer another way to overcome long-range dependency issues. Careful selection of hyperparameters and appropriate data preprocessing also help improve performance.

Finally, training RNNs can be computationally expensive. Because each time step depends on the result of the previous one, computation cannot be parallelized across the time dimension, and large networks add further cost. Specialized hardware such as GPUs is important for training large RNNs efficiently. Ways to reduce the computational burden include using smaller networks, optimizing the training process, and exploring alternative architectures that offer comparable performance with fewer computational resources. Training efficiency matters most in practical applications that involve massive datasets.

The Future of RNNs and Emerging Trends

Recurrent neural networks (RNNs) have significantly impacted fields ranging from natural language processing to time series analysis, and research continues into improving their efficiency and addressing their limitations. One area of focus is architectures that better handle long-range dependencies in sequential data, including advances in gating mechanisms and new approaches to memory management within the network. Researchers are also investigating ways to reduce the computational cost of training large RNN models, enabling their application to more complex tasks.

The rise of Transformers has provided a compelling alternative to RNNs for sequence modeling. Transformers rely on attention mechanisms and can process all positions of a sequence in parallel during training, giving them a significant speed advantage over the step-by-step processing of RNNs. Transformers have produced impressive results in many NLP tasks, but RNNs remain relevant in specific scenarios: for example, when computational resources are limited, or when data arrives as a stream, since an RNN processes each new element with a fixed-size state instead of attending over the whole history. Hybrid models that combine the strengths of RNNs and Transformers for specific problem domains are a likely direction for the future of sequence modeling.

Ongoing research on RNN optimization focuses on improving training stability and mitigating issues such as vanishing gradients, through new regularization techniques and training strategies. Hardware matters too: more efficient accelerators for recurrent computation can reduce training time and energy consumption, making RNNs practical for a broader range of applications. As research progresses, further advances in RNN architectures and training methods should yield more powerful and efficient models for challenging problems across many fields.