The Power of Sequential Data Analysis

Sequential data, in which the order of data points or their dependence on time matters, is prevalent in many real-world scenarios. Many traditional machine learning models struggle with this kind of data because they assume that data points are independent of one another. That assumption breaks down for sequences, where the order itself carries critical information. For example, predicting the next word in a sentence requires understanding the context provided by the preceding words, and forecasting stock prices requires analyzing historical price movements. A model that treats each element of a sequence as independent will miss these patterns and dependencies, producing inaccurate or meaningless results. These limitations highlight the need for models that can capture temporal dynamics, and this is where a recurrent neural network becomes useful. A recurrent neural network processes a sequence one element at a time while maintaining an internal state that summarizes past observations, which is critical for tasks like forecasting or translation.

Consider a sentence: each word influences the interpretation of the words that follow. A traditional model that treats each word as a separate input ignores these contextual relationships. Or imagine predicting a stock price: each day’s price depends on the previous day’s activity, which in turn depends on the days before. Ignoring that dependence leads to poor model performance, which makes traditional methods inadequate for many real-world applications involving sequential data. Their lack of memory is the core problem, because interpreting a data point often requires information from earlier steps. A recurrent neural network addresses this by maintaining a memory of past inputs, which lets it model the temporal relationships within a sequence. This ability to preserve contextual information is what allows recurrent neural networks to excel in applications built around sequential data.

The limitations of traditional models therefore underscore the importance of recurrent neural networks and other approaches designed specifically for sequential information. Models that cannot represent dependencies between sequence elements are inadequate for such tasks. The recurrent neural network was an essential step toward analyzing sequences, that is, toward understanding the interconnected nature of temporal data. Its design provides a framework for interpreting temporal patterns and opens the way to a deeper understanding of sequential data.

Understanding the Mechanism Behind Recurrent Neural Networks

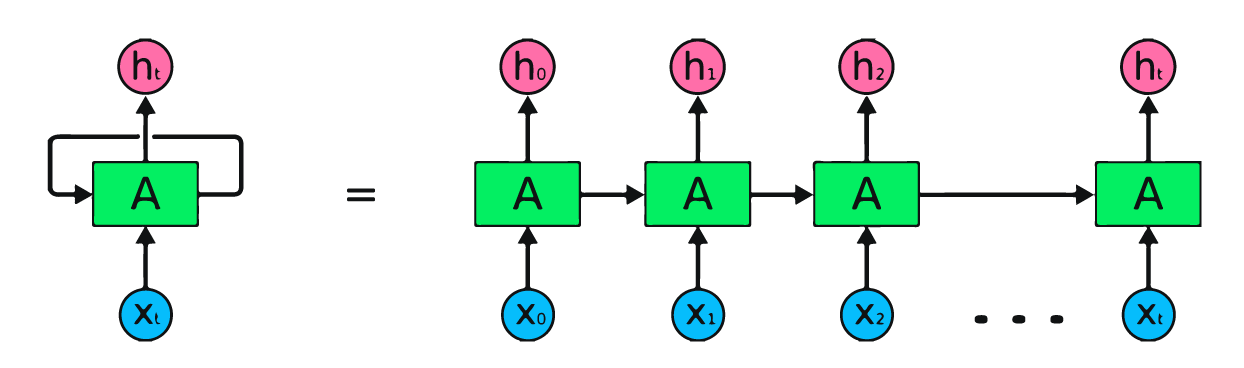

At the heart of a recurrent neural network lies a distinctive approach to processing sequential data. Unlike a feedforward network, a recurrent neural network contains feedback loops that let information persist across time steps, giving the network a “memory” of the past. This memory is held in the hidden state, a vector that is updated at every step based on both the current input and the previous hidden state. Because the hidden state carries information from one time step to the next, the network does not assume that data points are independent. The core mechanism is the repeated application of the same transformation to the current input and the previous hidden state, producing the next hidden state and an output for that step.
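For the classic (Elman-style) recurrent network, this update is commonly written as h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b_h), with an output y_t = W_hy·h_t + b_y. The sketch below implements one such step in plain Python. The weight names, the tanh activation, the linear read-out, and the hand-picked toy weights are illustrative assumptions, not the only possible choices.

```python
import math

def matvec(m, v):
    """Multiply a matrix (given as a list of rows) by a vector (a list)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h, W_hy, b_y):
    """One Elman-style RNN step; returns the new hidden state and the output."""
    # Combine the current input with the previous hidden state, then squash with tanh.
    pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h_prev), b_h)]
    h_t = [math.tanh(p) for p in pre]
    # A linear read-out of the new hidden state gives this step's output.
    y_t = [a + b for a, b in zip(matvec(W_hy, h_t), b_y)]
    return h_t, y_t

# Toy sizes (assumed): 2 input features, 3 hidden units, 1 output.
W_xh = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
W_hh = [[0.1, 0.0, 0.2], [0.0, 0.3, -0.1], [0.2, 0.1, 0.0]]
b_h = [0.0, 0.0, 0.0]
W_hy = [[1.0, -1.0, 0.5]]
b_y = [0.0]

h0 = [0.0, 0.0, 0.0]                      # empty memory before the first input
h1, y1 = rnn_step([1.0, 2.0], h0, W_xh, W_hh, b_h, W_hy, b_y)
```

Feeding `h1` back in as `h_prev` for the next input is exactly the feedback loop described above.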

Consider a simple analogy. When you read a sentence word by word, your understanding builds up as you go. A recurrent neural network processes text in a similar manner: each word serves as an input, and the hidden state plays the role of the understanding built so far, capturing the context of the sentence seen up to that point. When the next word arrives, the network combines it with the existing context to update the hidden state. This cycle repeats until the end of the sentence, at which point the final hidden state can be used to make a prediction. Another analogy is a conveyor belt: each item on the belt is an input, and the processing at each station depends on both the current item and what happened at previous stations. This ability to retain past information is what lets recurrent neural networks handle sequential data, and it is not found in standard feedforward networks.

The feedback loops within a recurrent neural network are crucial. They pass information from one step to the next and so provide the network with a form of memory, which is what enables it to learn relationships in data over time. Because the same transformation, with the same weights, is applied at every time step, a single model can process sequences of arbitrary length. This makes these networks well suited to ordered data such as text, audio, and time series. Understanding these core concepts is key to understanding how a recurrent neural network works and when to use one.
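Weight sharing across time steps is what frees the model from a fixed input length. The minimal sketch below assumes a single hidden unit with arbitrary hand-picked weights (`w_x`, `w_h`, `b` are illustrative) and unrolls the same update over sequences of different lengths; it also shows that reordering the inputs changes the final state.

```python
import math

def run_rnn(inputs, w_x=0.8, w_h=0.5, b=0.0, h0=0.0):
    """Unroll a one-unit RNN over a sequence of any length.

    The same three parameters (w_x, w_h, b) are reused at every time step,
    so nothing in the model depends on how long the sequence is.
    """
    h = h0
    states = []
    for x in inputs:                       # one element at a time, in order
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

short_states = run_rnn([1.0, 0.0, 0.0])
long_states = run_rnn([1.0] + [0.0] * 9)   # same weights, longer sequence
reordered = run_rnn([0.0, 0.0, 1.0])       # same values, different order
```

The first three states of the long run equal the short run, and the influence of the initial input fades as it is repeatedly multiplied by `w_h` and squashed by tanh.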

How to Apply RNNs to Real-World Problems

Recurrent neural networks are powerful tools applicable to many real-world scenarios. They excel at handling sequential data, which makes them suitable for tasks where the order of information is crucial. One significant application is Natural Language Processing (NLP), for example sentiment analysis. The input is a sequence of words in a sentence, and the output is a classification such as positive, negative, or neutral. A simple recurrent neural network, or a more sophisticated architecture such as an LSTM or GRU, can be used here depending on the complexity required. These models read the words in order, building up a representation of the context and of the dependencies between words that is then used to assess the sentiment.
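Sentiment analysis is a “many-to-one” use of an RNN: the network reads every word, and only the final hidden state feeds a classifier. The sketch below assumes a tiny hand-made vocabulary, two-dimensional word vectors, and hand-picked (untrained) weights purely to show the data flow; a real model would learn all of these parameters from labelled data.

```python
import math

LABELS = ["negative", "neutral", "positive"]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    m = max(scores)                            # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(tokens, embeddings, W_x, W_h, W_out, b_out):
    """Many-to-one RNN: read all tokens in order, classify from the final hidden state."""
    h = [0.0] * len(W_h)
    dim = len(W_x[0])
    for tok in tokens:
        x = embeddings.get(tok, [0.0] * dim)   # unknown words map to a zero vector
        h = [math.tanh(dot(W_x[j], x) + dot(W_h[j], h)) for j in range(len(h))]
    return softmax([dot(row, h) + b for row, b in zip(W_out, b_out)])

# Hypothetical toy parameters: hidden unit 0 responds to "good", unit 1 to "bad".
embeddings = {"good": [1.0, 0.0], "bad": [0.0, 1.0], "movie": [0.0, 0.0]}
W_x = [[1.0, 0.0], [0.0, 1.0]]
W_h = [[0.5, 0.0], [0.0, 0.5]]
W_out = [[-1.0, 1.0], [0.0, 0.0], [1.0, -1.0]]   # rows: negative, neutral, positive
b_out = [0.0, 0.0, 0.0]

probs = classify(["good", "movie"], embeddings, W_x, W_h, W_out, b_out)
label = LABELS[probs.index(max(probs))]
```

Swapping the simple tanh update for an LSTM or GRU cell leaves this overall structure unchanged.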

Another key area is time-series forecasting. Here the input is a sequence of observations over time, such as stock prices or weather measurements, and the goal is to predict future values. Recurrent neural networks, particularly LSTMs and GRUs, are useful here because of their ability to capture long-term dependencies. In stock price prediction, for instance, past fluctuations may influence present and future prices, and an RNN can learn such patterns and trends from the historical data. The output is a predicted sequence of future values. A simple RNN may suffice for less complex sequences, while LSTMs and GRUs are better suited to modeling complex time-series patterns.
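Before a forecasting RNN can be trained, a single long series usually has to be cut into supervised (input, target) pairs. A common approach, sketched below, slides a fixed-length window over the series; the window length, the two-step horizon, and the made-up price values are assumptions for illustration.

```python
def make_windows(series, window, horizon=1):
    """Turn a 1-D series into (input window, target) pairs.

    Each input holds `window` consecutive values; the target holds the next
    `horizon` values that the model should learn to predict.
    """
    if window < 1 or horizon < 1:
        raise ValueError("window and horizon must be positive")
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window:start + window + horizon]
        pairs.append((inputs, target))
    return pairs

prices = [10.0, 10.5, 10.2, 10.8, 11.0, 11.3]   # made-up values, not real data
pairs = make_windows(prices, window=3, horizon=2)
```

Each input window is then fed to the RNN one value per time step, and the model is trained to output the corresponding target sequence.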

Finally, recurrent neural network architectures play an important role in audio processing, with speech recognition as a prime example. Here the input is a sequence of audio signals over time, and the output is the corresponding text. RNNs, especially those built from LSTM or GRU layers, can model the temporal dynamics of audio, learning to map a sequence of audio features to the spoken words. This requires a network that can remember earlier parts of the sequence while it processes the rest. That capacity makes recurrent neural networks invaluable for many tasks involving sequences, and these examples illustrate how effective they are for sequential data analysis.
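To make “a sequence of audio features” concrete, the sketch below cuts a waveform into short, overlapping frames and computes one feature vector per frame; that sequence of vectors is what an RNN would consume step by step. The 16 kHz sample rate, 25 ms frames with a 10 ms hop, the synthetic tone standing in for speech, and the single log-energy feature are all assumptions for illustration; practical speech systems use richer features such as log-mel spectrograms.

```python
import math

def frame_signal(samples, frame_len, hop):
    """Split a waveform into overlapping frames (one frame per RNN time step)."""
    return [samples[i:i + frame_len] for i in range(0, len(samples) - frame_len + 1, hop)]

def log_energy(frame, eps=1e-10):
    """A deliberately simple per-frame feature: log of the mean squared amplitude."""
    return math.log(sum(s * s for s in frame) / len(frame) + eps)

# Synthetic 440 Hz tone sampled at 16 kHz for 0.1 s, standing in for real speech.
rate = 16000
signal = [math.sin(2 * math.pi * 440 * n / rate) for n in range(1600)]
frames = frame_signal(signal, frame_len=400, hop=160)   # 25 ms windows, 10 ms hop
features = [[log_energy(f)] for f in frames]             # one feature vector per step
```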

Long Short-Term Memory Networks: Overcoming RNN Limitations

Basic recurrent neural network models face a significant challenge known as the vanishing gradient problem. During training, gradients can become extremely small as they are backpropagated through time, making it difficult for the network to learn long-range dependencies. This means that the network struggles to remember information from the distant past, limiting its ability to effectively model sequential data. Long Short-Term Memory networks, or LSTMs, were developed to address this issue. LSTMs introduce a memory cell, a crucial component that allows the network to maintain information over extended periods. This memory cell is regulated by a series of gates, which control the flow of information into and out of the cell. These gates allow LSTMs to selectively store, forget, and use information, enabling them to learn long-term dependencies more effectively than simple recurrent neural network architectures.
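The vanishing gradient can be seen directly in the smallest possible case. For a one-unit RNN h_t = tanh(w·h_{t-1} + x_t), the chain rule gives dh_T/dh_0 as a product of per-step factors w·(1 - h_t²). The sketch below (the weight 0.9 and the constant input 0.5 are arbitrary illustrative choices) computes that product for increasingly long sequences.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return dh_T/dh_0 for the one-unit RNN h_t = tanh(w * h_{t-1} + x_t).

    By the chain rule this is the product of the per-step factors
    dh_t/dh_{t-1} = w * (1 - h_t**2), one factor per time step.
    """
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)     # derivative of tanh is 1 - tanh**2
    return grad

# With |w| < 1 every factor is no larger than |w|, so the product shrinks
# at least geometrically as the sequence gets longer.
grads = {steps: gradient_through_time(0.9, [0.5] * steps) for steps in (1, 10, 50)}
```

After 50 steps the gradient is already below 0.9⁵⁰ (roughly 0.005), so the learning signal reaching early inputs is tiny compared with the signal from recent ones.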

The architecture of an LSTM includes three primary gates: the input gate, the forget gate, and the output gate. The input gate determines which new information should be added to the memory cell: a sigmoid layer decides which values to update, a tanh layer creates a vector of new candidate values, and the two are multiplied together before being added to the cell state. The forget gate uses a sigmoid function to decide what information to discard from the cell state, which keeps irrelevant data from cluttering the memory. The output gate, also a sigmoid, decides which parts of the (tanh-squashed) cell state are passed to the hidden state and hence on to the next time step. Together, the gates control the flow of information within the LSTM so that only relevant data is kept and used in later computations. This mechanism allows LSTMs to handle long-term dependencies, a critical capability for many sequential data processing tasks, and makes them a more robust option than a basic recurrent neural network.
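The gate interactions can be written out as one LSTM step. The sketch below follows the common textbook formulation, in which every gate reads the concatenation [h_{t-1}, x_t]; the dictionary layout of `params`, the single hidden unit, and the hand-picked weights are assumptions for illustration (frameworks store and initialize these weights differently).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b for a matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step. `params` maps gate name -> (W, b) acting on [h_prev, x_t]."""
    z = h_prev + x_t                                              # concatenation [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(*params["forget"], z)]        # what to discard from c
    i = [sigmoid(v) for v in affine(*params["input"], z)]         # how much new info to write
    g = [math.tanh(v) for v in affine(*params["candidate"], z)]   # candidate values
    o = [sigmoid(v) for v in affine(*params["output"], z)]        # what to expose as h
    c_t = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h_t = [oj * math.tanh(cj) for oj, cj in zip(o, c_t)]
    return h_t, c_t

# Hypothetical weights for one hidden unit and one input feature. A large forget
# bias and a very negative input bias make the cell hold on to its contents.
params = {
    "forget": ([[0.0, 0.0]], [10.0]),
    "input": ([[0.0, 0.0]], [-10.0]),
    "candidate": ([[0.0, 1.0]], [0.0]),
    "output": ([[0.0, 0.0]], [0.0]),
}
h1, c1 = lstm_step([1.0], [0.0], [0.7], params)
```

With these settings the cell state stays at roughly 0.7 despite the new input, which is the “selective remembering” the gates are meant to provide.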

At the core of the LSTM is the cell state, a kind of conveyor belt that runs along the entire chain and lets information flow largely unchanged over many time steps. It carries relevant information through the sequence and is modified only through the gates, which decide what is removed from the cell state, what is added, and what is exposed to the next layers. With this memory cell and its regulatory gates, LSTMs largely mitigate the vanishing gradient problem and can capture long-range dependencies in sequential data. As a result, they often perform significantly better than basic recurrent neural networks, which has made them a common choice for applications including time-series forecasting, speech recognition, and natural language processing. The more sophisticated structure of the LSTM yields a more powerful and flexible model that is suitable for many different tasks.
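The benefit of the additive cell-state update can be made precise with a short calculation. Write c_t for the cell state, f_t and i_t for the forget and input gate activations, c̃_t for the candidate values, and ⊙ for elementwise multiplication. Following only the direct path through the cell state (a simplification that treats the gate values as constants and ignores their indirect dependence on c_{t-1} through h_{t-1}), the Jacobian of one step is a diagonal matrix of forget-gate activations:

```latex
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
\;\;\Rightarrow\;\;
\left.\frac{\partial c_t}{\partial c_{t-1}}\right|_{\text{direct}} = \operatorname{diag}(f_t),
\qquad
\left.\frac{\partial c_T}{\partial c_k}\right|_{\text{direct}} = \prod_{t=k+1}^{T} \operatorname{diag}(f_t)
```

When the network learns to keep f_t close to 1 for information worth remembering, this product stays close to 1 across many steps. In a basic RNN the corresponding product also contains a tanh derivative and the recurrent weight matrix at every step, which is what tends to drive it toward zero.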

Gated Recurrent Units: A Simpler Alternative

Gated Recurrent Units, or GRUs, offer a streamlined alternative to Long Short-Term Memory networks (LSTMs) for managing sequential data. They were designed to be simpler than LSTMs and often achieve comparable performance with fewer components. The simplification comes from combining the forget and input gates of the LSTM into a single update gate and from merging the cell state and hidden state into a single hidden state. GRUs also have a reset gate. Together, these gates help the network learn and retain long-term dependencies by controlling the flow of information. The update gate decides how much of the previous hidden state is carried forward and how much is replaced with new candidate information, effectively determining how relevant earlier time steps are to the current one. The reset gate controls how much of the previous hidden state is used when computing that new candidate, and so how much past information is forgotten.
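One GRU step can be sketched as follows, using one common formulation: z_t = σ(W_z x_t + U_z h_{t-1} + b_z), r_t = σ(W_r x_t + U_r h_{t-1} + b_r), n_t = tanh(W_n x_t + U_n(r_t ⊙ h_{t-1}) + b_n), and h_t = (1 - z_t) ⊙ h_{t-1} + z_t ⊙ n_t. Conventions vary between papers and libraries (for example, where the reset gate is applied and whether z_t or 1 - z_t multiplies the old state), so treat this as one variant. The parameter names and the hand-picked weights are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(row, v):
    return sum(w * x for w, x in zip(row, v))

def gru_step(x_t, h_prev, p):
    """One GRU step. `p` holds hypothetical weight matrices (lists of rows) and biases.

    z: update gate, r: reset gate, n: candidate state. There is no separate
    cell state; the hidden state is the only memory carried forward.
    """
    units = range(len(h_prev))
    z = [sigmoid(dot(p["W_z"][j], x_t) + dot(p["U_z"][j], h_prev) + p["b_z"][j]) for j in units]
    r = [sigmoid(dot(p["W_r"][j], x_t) + dot(p["U_r"][j], h_prev) + p["b_r"][j]) for j in units]
    reset_h = [rj * hj for rj, hj in zip(r, h_prev)]      # reset gate scales the old state
    n = [math.tanh(dot(p["W_n"][j], x_t) + dot(p["U_n"][j], reset_h) + p["b_n"][j]) for j in units]
    # Interpolate: z near 0 keeps the old state, z near 1 adopts the candidate.
    return [(1.0 - zj) * hj + zj * nj for zj, hj, nj in zip(z, h_prev, n)]

# One hidden unit, one input feature; a very negative update bias keeps the old state.
params = {
    "W_z": [[0.0]], "U_z": [[0.0]], "b_z": [-10.0],
    "W_r": [[0.0]], "U_r": [[0.0]], "b_r": [0.0],
    "W_n": [[1.0]], "U_n": [[1.0]], "b_n": [0.0],
}
h_kept = gru_step([2.0], [0.3], params)
```

Flipping the update bias to a large positive value makes the unit adopt the candidate state instead, showing how a single gate plays the combined role of the LSTM’s forget and input gates.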

The core advantage of a GRU lies in its smaller number of parameters, which makes it computationally less demanding than an LSTM. This can lead to faster training and makes GRUs more suitable for applications with limited computational resources. Both GRUs and LSTMs mitigate the vanishing gradient problem, a major hurdle for basic recurrent neural networks, but GRUs do so with a simpler structure: two gates (update and reset) instead of the LSTM’s three, and no separate cell state. Where computational efficiency is paramount, GRUs often strike a good balance between performance and resource usage, while LSTMs offer somewhat finer control over information flow through their more complex gating. Choosing between a GRU and an LSTM depends on the specific needs of the problem; if memory is limited and training time is a major concern, a GRU might be the better option.
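The parameter saving follows from counting weight blocks: an LSTM layer has four (forget, input, candidate, output) and a GRU layer three (update, reset, candidate), each acting on [h, x] plus a bias. The sketch below assumes one bias vector per block; some frameworks (PyTorch, for example) keep two bias vectors per block, which changes the absolute totals but not the 3:4 ratio. The sizes 32 and 64 are arbitrary.

```python
def lstm_params(input_size, hidden_size):
    # Four blocks, each a (hidden x (input + hidden)) matrix plus a bias vector.
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)

def gru_params(input_size, hidden_size):
    # Three blocks of the same shape.
    return 3 * (hidden_size * (input_size + hidden_size) + hidden_size)

lstm = lstm_params(32, 64)   # 24832
gru = gru_params(32, 64)     # 18624
```

For any layer size, the GRU therefore needs about 25% fewer recurrent parameters than an LSTM with the same hidden size.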

For tasks that require more fine-grained control over information flow, such as those with more complex sequential dependencies, an LSTM may be the more appropriate choice. The decision also depends on the type and amount of available training data and on the computational resources at hand. GRUs have been widely adopted in use cases that previously relied on more complex recurrent architectures. Their reduced complexity makes them a good starting point when first experimenting with recurrent neural networks, or when many models must be trained efficiently. Ultimately, the choice should be based on empirical evaluation to determine which type of network works best for the specific sequential data application.

Building and Training Recurrent Neural Network Models

The practical application of a recurrent neural network involves several key steps. The first step is choosing the right data. This data must be sequential in nature, with a temporal relationship between data points. Examples include text sequences, time series data, or audio recordings. Once the data is selected, it is crucial to prepare it properly. This typically includes tasks such as cleaning, normalization, and splitting the data into training, validation, and test sets. The training set is used to adjust the network weights, while the validation set is employed to monitor performance during training and prevent overfitting. The test set provides a final evaluation of the trained recurrent neural network model’s capabilities on unseen data. The next step involves selecting the appropriate architecture for your specific task. This could involve using a simple recurrent neural network, or more sophisticated models like Long Short-Term Memory (LSTM) networks or Gated Recurrent Units (GRUs) depending on the complexity and the length of the input data. Each architecture handles sequential data in slightly different ways, and choosing correctly is an important factor in model performance.
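For ordered data, a common precaution is to split chronologically rather than randomly and to compute normalization statistics on the training portion only, so that no information from the validation or test periods leaks into training. The sketch below assumes 70/15/15 proportions, z-score normalization, and placeholder data; none of these choices is prescribed by the text above.

```python
def chronological_split(series, train_frac=0.7, val_frac=0.15):
    """Split an ordered series into train/validation/test blocks without shuffling,
    so the validation and test data always come after the training data."""
    n_train = round(len(series) * train_frac)
    n_val = round(len(series) * val_frac)
    return (series[:n_train],
            series[n_train:n_train + n_val],
            series[n_train + n_val:])

def fit_standardizer(train):
    """Compute mean and standard deviation on the training portion only."""
    mean = sum(train) / len(train)
    var = sum((v - mean) ** 2 for v in train) / len(train)
    std = var ** 0.5 or 1.0        # fall back to 1.0 for a constant series
    return lambda values: [(v - mean) / std for v in values]

series = [float(v) for v in range(20)]          # placeholder data
train, val, test = chronological_split(series)
scale = fit_standardizer(train)                 # statistics from training data only
train_s, val_s, test_s = scale(train), scale(val), scale(test)
```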

After the architecture is selected, training begins. Input sequences from the training data are fed into the recurrent neural network, the model computes its predictions, and a loss function measures the difference between the predictions and the actual values. The gradients of this loss are computed with backpropagation through time (BPTT), which applies ordinary backpropagation to the network unrolled over the time steps of the sequence, and are then used to update the model’s weights. This optimization is iterative and typically uses an optimizer such as Adam or RMSprop. Training must also avoid overfitting the training data, which causes poor generalization to unseen examples. Overfitting is detected and controlled with methods such as cross-validation, regularization, and early stopping. Cross-validation evaluates the model on several different splits of the dataset, giving a more robust estimate of its performance; for time series, the splits should respect temporal order rather than being drawn at random, so that the model is always validated on data that comes after its training data. Regularization adds penalties to the model’s loss function to keep it from becoming overly complex. Early stopping monitors performance on the validation set and halts training once that performance stops improving, before the model starts to overfit.
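The training loop described above (forward pass, loss, backpropagation through time, weight update) can be sketched end to end for a one-unit RNN, where every gradient can be written by hand. The toy task (predicting half the sum of four random inputs), the learning rate, and plain full-batch gradient descent (used instead of Adam or RMSprop to keep the update rule visible) are illustrative assumptions; a framework such as TensorFlow or PyTorch would compute these gradients automatically.

```python
import math
import random

def forward(params, xs):
    """Run the one-unit RNN; return hidden states h_0..h_T and the prediction."""
    w, u, b, v, c = params
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x + b))
    return hs, v * hs[-1] + c

def loss_and_grads(params, xs, y):
    """Squared-error loss and its gradients via backpropagation through time."""
    w, _, _, v, _ = params
    hs, y_hat = forward(params, xs)
    d_out = 2.0 * (y_hat - y)              # dL/dy_hat
    gw = gu = gb = 0.0
    gv, gc = d_out * hs[-1], d_out
    dh = d_out * v                         # dL/dh_T
    for t in range(len(xs), 0, -1):        # walk backwards through time
        da = dh * (1.0 - hs[t] ** 2)       # back through tanh
        gw += da * hs[t - 1]
        gu += da * xs[t - 1]
        gb += da
        dh = da * w                        # pass the gradient on to h_{t-1}
    return (y_hat - y) ** 2, [gw, gu, gb, gv, gc]

def mean_loss(params, data):
    return sum(loss_and_grads(params, xs, y)[0] for xs, y in data) / len(data)

def train(data, epochs=200, lr=0.05, seed=0):
    """Full-batch gradient descent on [w, u, b, v, c]."""
    rng = random.Random(seed)
    params = [rng.uniform(-0.5, 0.5) for _ in range(5)]
    for _ in range(epochs):
        totals = [0.0] * 5
        for xs, y in data:
            _, grads = loss_and_grads(params, xs, y)
            totals = [t + g for t, g in zip(totals, grads)]
        params = [p - lr * t / len(data) for p, t in zip(params, totals)]
    return params

# Hypothetical toy task: predict half the sum of a short random sequence.
rng = random.Random(1)
data = []
for _ in range(50):
    xs = [rng.uniform(-1.0, 1.0) for _ in range(4)]
    data.append((xs, 0.5 * sum(xs)))

trained = train(data)
```

Comparing `mean_loss` before and after training, or checking the hand-written gradients against finite differences, is a quick way to confirm that the backward pass is correct.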

For building and training, it is common to use industry-standard libraries. Popular frameworks such as TensorFlow and PyTorch provide tools for every step, from data loading to optimization. They implement the common recurrent architectures and the necessary mathematical operations, and they support deploying models to the cloud or to edge devices. These packages simplify the building process, but they do not replace an understanding of the underlying concepts and best practices of recurrent neural network models. Each framework has strengths and weaknesses, and both are suitable for building and training complex machine learning models, so users should weigh their particular needs when choosing a framework.

Evaluating the Performance of Your RNN

Evaluating the performance of a recurrent neural network model is crucial, and the choice of evaluation metrics depends on the task. For classification tasks, accuracy, precision, and recall are frequently used. Accuracy is the fraction of all predictions that are correct. Precision is the proportion of correctly predicted positives out of all positive predictions. Recall is the proportion of actual positives that were correctly identified. For regression tasks, metrics such as Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE) are more appropriate. RMSE is the square root of the average squared difference between predicted and actual values, so it penalizes large errors more heavily. MAE is the average absolute difference between predicted and actual values. Together, these metrics show how well the recurrent neural network is performing on the data.
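The definitions above translate directly into a few lines of code. This is a minimal sketch for binary labels and scalar regression targets; returning 0.0 when precision or recall is undefined (no positive predictions, or no actual positives) is one convention among several, and libraries such as scikit-learn provide tested implementations of these metrics.

```python
import math

def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

def regression_metrics(y_true, y_pred):
    """Return (RMSE, MAE)."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    mae = sum(abs(e) for e in errors) / len(errors)
    return rmse, mae

acc, prec, rec = classification_metrics([1, 0, 1, 1, 0], [1, 1, 0, 1, 0])
rmse, mae = regression_metrics([3.0, 5.0, 2.0], [2.0, 5.0, 4.0])
```

In the regression example the single error of 2 pushes RMSE (about 1.29) above MAE (1.0), illustrating how RMSE weights large errors more heavily.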

Avoiding overfitting and bias is essential for training robust recurrent neural network models. Overfitting occurs when the model learns the training data too closely, resulting in poor performance on unseen data. Techniques such as regularization and dropout can help mitigate it. Regularization adds a penalty to the loss function, which discourages overly complex models. Dropout randomly disables neurons during training, which reduces the model’s reliance on any specific neurons. Early stopping prevents the model from training for too long by monitoring its performance on the validation set. The validation set, which is not used to fit the weights, provides a measure of the model’s generalization performance during training. The test set provides the final evaluation of the model after training is complete, and it should never be used for any training decisions.
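Early stopping and dropout are both simple to express. The sketch below assumes a “patience” rule (stop after a fixed number of epochs without improvement in validation loss) and the common “inverted” dropout variant that rescales surviving activations during training; the patience value and the validation-loss curve are made up for illustration. Deep learning frameworks provide their own implementations of both.

```python
import random

def early_stopping(val_losses, patience=3):
    """Return the index of the epoch whose weights should be kept: training
    stops once the validation loss has not improved for `patience` epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

def dropout(values, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during training and
    rescale survivors by 1 / (1 - rate) so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

# Hypothetical validation-loss curve: improves, then rises as the model overfits.
history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
best = early_stopping(history, patience=3)
dropped = dropout([1.0] * 1000, rate=0.5, rng=random.Random(0))
```

Here training halts three epochs after the minimum, and the weights from epoch 3 (the lowest validation loss) are the ones kept.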

Careful use of separate validation and test sets is necessary. The validation set is used to tune hyperparameters and make training decisions; because those decisions are based on it, its scores become a somewhat optimistic estimate of performance. The test set, used only once at the end, provides an independent estimate of the final model’s performance and helps determine whether the model will actually perform well in the real world. Evaluating a recurrent neural network properly in this way helps you build a more reliable model that generalizes well to new data.

Future of Sequential Modeling

The landscape of sequential modeling continues to evolve, with significant advances building on the foundations laid by recurrent neural networks. While recurrent neural networks (RNNs) have been instrumental in processing sequential data, newer approaches such as attention mechanisms and Transformer networks have emerged, offering improved capabilities for capturing long-range dependencies. Transformers in particular have shown remarkable performance in natural language processing and other domains. Even so, the principles behind RNNs, such as carrying sequential information forward in a hidden state, remain helpful for understanding these newer models. Transformers, for instance, dispense with recurrence altogether: they capture relationships between sequence elements through self-attention layers and represent order explicitly, typically with positional encodings, which makes the contrast with the RNN’s step-by-step processing instructive.

Despite the rise of Transformers, recurrent neural networks continue to be valuable in certain scenarios. For relatively short sequences, or where computational efficiency is paramount, RNNs (particularly LSTMs and GRUs) are often a more practical choice. Small recurrent models typically have far fewer parameters than large Transformer models, and at inference time they update a fixed-size hidden state with a constant amount of computation per step, which can lower resource consumption. For these reasons, recurrent architectures are sometimes preferred in embedded systems and edge devices. Applications such as real-time audio processing or sensor data analysis, where the relevant dependencies may not span extremely long ranges, remain well suited to recurrent approaches. Because RNNs process data points one after another, they are especially appropriate for time-series data in which the order of events matters and consecutive observations are correlated.
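The constant per-step cost that suits RNNs to real-time and embedded use can be illustrated with a streaming wrapper: each new reading updates a fixed-size state, and nothing about the history needs to be stored. The one-unit model, its weights, and the `StreamingRNN` class name are hypothetical, chosen only to show the pattern.

```python
import math

class StreamingRNN:
    """Keeps a fixed-size hidden state and updates it one sample at a time,
    so memory use does not grow with the length of the stream."""

    def __init__(self, w_x, w_h, b=0.0):
        self.w_x, self.w_h, self.b = w_x, w_h, b
        self.h = 0.0

    def step(self, x):
        """Consume one new reading and return the updated hidden state."""
        self.h = math.tanh(self.w_x * x + self.w_h * self.h + self.b)
        return self.h

    def reset(self):
        """Forget all history, e.g. at the start of a new stream."""
        self.h = 0.0

# Hypothetical sensor readings arriving one at a time.
model = StreamingRNN(w_x=0.6, w_h=0.4)
outputs = [model.step(reading) for reading in [0.1, 0.4, -0.2, 0.9]]
```

By contrast, a standard Transformer attends over a context that grows with the sequence, so its per-step cost is not constant in the same way.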

Ongoing research will continue to shape the future of sequential modeling, but understanding recurrent neural networks remains a crucial skill for professionals working with sequential data. Even when more advanced architectures are used, a solid grasp of RNNs provides valuable insight into the mechanisms of sequence processing, since the principles of how information is passed through time and how long-term dependencies can be captured remain fundamental. As researchers explore new techniques, including hybrid models that combine aspects of RNNs and Transformers, a solid understanding of recurrent neural network dynamics will be a real advantage. Recurrent neural networks have had a major impact on artificial intelligence, and many of the ideas they introduced still underpin current machine learning systems.