Understanding Readiness Probes: Their Purpose and Function

Readiness probes are essential components of modern application deployments. They act as health checks, ensuring a system is fully operational before accepting traffic. These probes continuously monitor the application’s readiness to handle requests. A readiness probe’s primary function is to prevent unhealthy instances from receiving traffic, ensuring high availability and a seamless user experience. Failure to implement effective readiness probes can lead to degraded performance or application outages.

Several types of probes exist, each suited to different application needs. HTTP probes send an HTTP request to a specific endpoint and inspect the response status. TCP probes verify that the application accepts a TCP connection on a given port. Command (exec) probes run a check inside the instance and use its exit code. ICMP (ping) checks, offered by some load balancers and monitoring tools, confirm only network reachability and say little about the application itself; container orchestrators such as Kubernetes do not provide them as a probe type. The choice of probe depends on the application’s architecture and the nature of the health check required. For instance, a web application benefits from an HTTP probe, while a simple network service might only require a TCP readiness probe.

Effective readiness probe implementation matters most during deployments, scaling, and failovers. During a deployment, probes prevent partially functional instances from being exposed to users. During scaling, they ensure that only replicas that have finished starting receive traffic. Failovers likewise rely on accurate readiness probes to route requests to functional instances.
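As a rough illustration of what the HTTP and TCP checks above amount to, the following Python sketch performs each check once using only the standard library. The function names `http_check` and `tcp_check` are made up for this example, and treating any 2xx or 3xx response as success is an assumption borrowed from how Kubernetes interprets HTTP probes. Real probers are built into the orchestrator or load balancer.

```python
import socket
import urllib.error
import urllib.request


def http_check(url: str, timeout: float = 1.0) -> bool:
    """Return True if a GET to `url` ends in a 2xx or 3xx response."""
    try:
        # urlopen follows redirects and raises HTTPError for 4xx/5xx.
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, error status, or malformed URL.
        return False


def tcp_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A call such as `tcp_check("127.0.0.1", 5432)` only shows that something is listening on the port, whereas `http_check` also exercises the application’s request handling, which is why the HTTP variant is usually the better fit for web applications.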

Readiness probes are therefore integral to system stability: they keep instances that cannot serve requests out of rotation during deployments, scaling, and failovers. A missing or misconfigured probe can cause downtime and impaired performance, so the probe type should be chosen to match the application and the health indicators that actually reflect its ability to serve requests.

How to Effectively Implement Readiness Probes in Kubernetes

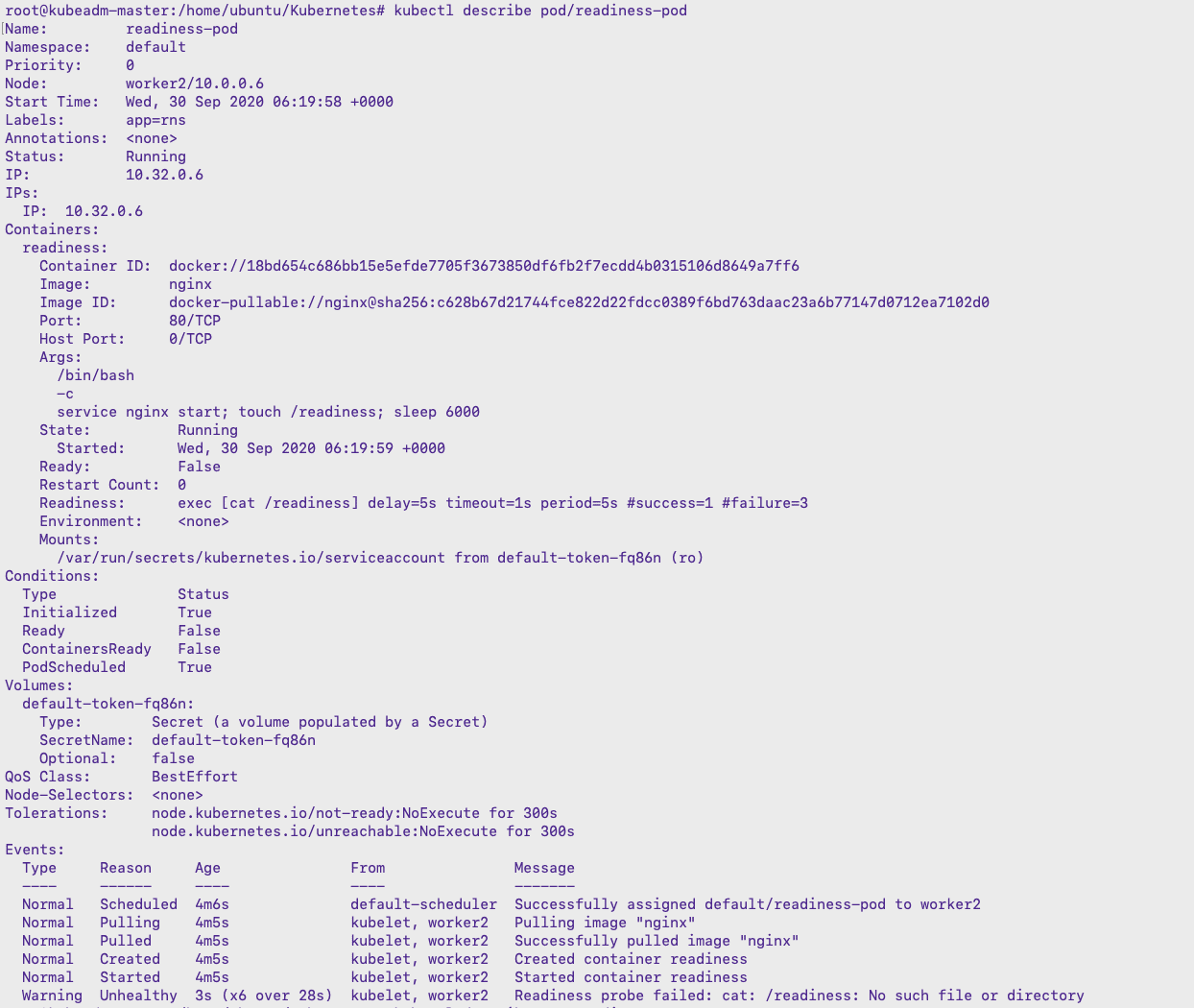

Kubernetes uses readiness probes to determine whether a container is ready to accept traffic. While a container’s readiness probe is failing, its pod is removed from the endpoints of the Services that select it, so no traffic is routed to it; the container is not restarted. A readiness probe is configured on the container in the pod specification, typically in a YAML manifest, with parameters including `initialDelaySeconds`, `periodSeconds`, `timeoutSeconds`, and `failureThreshold`. `initialDelaySeconds` is the delay after the container starts before the first probe runs (default 0). `periodSeconds` sets the interval between probes (default 10). `timeoutSeconds` is the time after which a single probe attempt counts as failed (default 1). Finally, `failureThreshold` is the number of consecutive failed probes after which the container is marked as not ready (default 3).
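To make the field names concrete, here is a small Python sketch that assembles a `readinessProbe` object and prints it as JSON, a format Kubernetes accepts alongside YAML. The helper `http_readiness_probe`, the container name, the image, the port, and the `/healthz` path are all hypothetical; the HTTP check is only a placeholder handler, and the keyword defaults mirror the Kubernetes defaults.

```python
import json


def http_readiness_probe(path: str, port: int, *, initial_delay: int = 0,
                         period: int = 10, timeout: int = 1,
                         failure_threshold: int = 3) -> dict:
    """Build a readinessProbe object using the Kubernetes field names."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay,   # wait before the first probe
        "periodSeconds": period,                # interval between probes
        "timeoutSeconds": timeout,              # limit for a single attempt
        "failureThreshold": failure_threshold,  # consecutive failures tolerated
    }


# Hypothetical container that listens on port 8080.
container = {
    "name": "web",
    "image": "example/web:1.0",
    "readinessProbe": http_readiness_probe("/healthz", 8080, initial_delay=5),
}
print(json.dumps(container, indent=2))
```

The printed object corresponds to one entry under `spec.containers` in a pod specification.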

A common approach uses an HTTP readiness probe (`httpGet`), which sends an HTTP GET request to a path and port on the container. Any status code from 200 up to, but not including, 400 counts as success; anything else, or no response within the timeout, counts as failure. The YAML configuration specifies the path and port (and optionally the scheme and request headers); the expected status code is not configurable. For example, a readiness probe might check `/healthz` and treat a 200 OK response as ready. Alternatively, a TCP readiness probe (`tcpSocket`) simply checks whether a specific port accepts connections. This is often simpler to implement, particularly for services that don’t expose HTTP endpoints, but it only shows that something is listening.

Effective readiness probe implementation hinges on configuring these parameters to match the application’s startup and health-check behavior. Incorrect configuration produces misleading health statuses, which cause unnecessary delays or service disruptions.
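On the application side, the endpoint that an HTTP probe calls can be very small. The sketch below is one possible shape, using Python’s standard `http.server`; the `ready` flag, the `HealthHandler` class, the `serve` helper, and port 8080 are assumptions for illustration, and a real service would normally add the route to its existing web framework instead.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Set once startup work (cache warm-up, migrations, and so on) has finished.
ready = threading.Event()


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/healthz":
            # 200 tells the prober "send me traffic"; 503 means "not yet".
            status = 200 if ready.is_set() else 503
            body = b"ok" if status == 200 else b"starting"
        else:
            status, body = 404, b"not found"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep frequent probe requests out of the access log


def serve(port: int = 8080, host: str = "0.0.0.0") -> ThreadingHTTPServer:
    """Start the health endpoint in a background thread and return the server."""
    server = ThreadingHTTPServer((host, port), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

The application calls `serve()` early and `ready.set()` once initialization is complete, so the probe fails with 503 during startup and succeeds afterwards.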

Consider a containerized application that requires substantial initialization time. Setting `initialDelaySeconds` appropriately avoids health checks before the application has had a chance to start. Similarly, adjusting `periodSeconds` and `failureThreshold` balances how quickly a problem is detected against how much load the health checks add. For instance, a large application with a long startup time might need a longer `initialDelaySeconds` and a larger `periodSeconds` (less frequent probes). Conversely, a simpler application with a rapid startup can use shorter values for both. Consider the application’s specific needs when choosing these values; a well-configured readiness probe is a fundamental component of a robust and scalable Kubernetes deployment.
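The trade-off can be put into rough numbers. The sketch below is a back-of-the-envelope estimate, not a description of the kubelet’s exact scheduling (which also adds jitter): it assumes the failure begins just after a successful probe and that every later attempt runs into the full timeout. Both function names and the example values are illustrative.

```python
def worst_case_detection_seconds(period: int, timeout: int,
                                 failure_threshold: int) -> int:
    """Rough upper bound on how long a broken container keeps receiving traffic."""
    # failure_threshold probes spaced `period` apart, the last one timing out.
    return failure_threshold * period + timeout


def probes_per_hour(period: int) -> int:
    """Health-check requests a single container receives per hour."""
    return 3600 // period


# Slow-starting service: probe rarely, tolerate a few failures.
print(worst_case_detection_seconds(period=30, timeout=5, failure_threshold=3))  # 95
print(probes_per_hour(30))                                                      # 120

# Fast, lightweight service: probe often, react quickly.
print(worst_case_detection_seconds(period=5, timeout=1, failure_threshold=2))   # 11
print(probes_per_hour(5))                                                       # 720
```

Under these assumptions the first configuration adds little load but may leave a failing container in rotation for about a minute and a half, while the second reacts within seconds at six times the probe traffic.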

Readiness Probes in Kubernetes and Docker Swarm: A Comparison

Kubernetes and Docker Swarm, two popular container orchestration platforms, both use health information to decide which containers receive traffic, but their implementations differ. In Kubernetes, readiness probes are defined per container within the pod specification using YAML, separately from liveness and startup probes. This allows granular control over parameters like `initialDelaySeconds`, `periodSeconds`, `timeoutSeconds`, and `failureThreshold`. Docker Swarm has no separate readiness probe. It relies on Docker’s container health check, a single command defined with `HEALTHCHECK` in the Dockerfile or in the service definition and tuned with interval, timeout, retries, and start-period options. Swarm routes traffic only to tasks whose containers are healthy and replaces tasks that become unhealthy, so one check serves both purposes. The choice between Kubernetes and Docker Swarm often depends on the project’s scale and complexity. For simpler deployments, Docker Swarm’s single health check might suffice. For larger, more complex applications, Kubernetes’ separate probe types offer greater flexibility and control.
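Docker’s health check takes a set of options (interval, timeout, retries, start period) that lines up loosely with the Kubernetes probe parameters. The Python sketch below shows one way to picture that correspondence by translating a Kubernetes command probe into a Compose-style `healthcheck` mapping. The function name and the script path are hypothetical, and the mapping is approximate, as the comment on `start_period` notes.

```python
def to_compose_healthcheck(probe: dict) -> dict:
    """Translate a Kubernetes exec probe into a Compose-style healthcheck."""
    command = probe["exec"]["command"]
    return {
        "test": ["CMD", *command],
        "interval": f"{probe.get('periodSeconds', 10)}s",
        "timeout": f"{probe.get('timeoutSeconds', 1)}s",
        "retries": probe.get("failureThreshold", 3),
        # Not an exact match: start_period is a grace window in which failures
        # are ignored, whereas initialDelaySeconds postpones the first probe.
        "start_period": f"{probe.get('initialDelaySeconds', 0)}s",
    }


# Hypothetical probe that runs a script shipped in the image.
k8s_probe = {
    "exec": {"command": ["/app/check_ready.sh"]},
    "initialDelaySeconds": 15,
    "periodSeconds": 20,
    "failureThreshold": 2,
}
print(to_compose_healthcheck(k8s_probe))
```

Only command probes translate directly. An HTTP or TCP probe would first have to be rewritten as a command that runs inside the container.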

A key difference lies in how each platform exposes this information. In Kubernetes, the outcome of readiness probes is reflected in the pod’s `Ready` condition and in the endpoints of its Services, all of which are accessible through the Kubernetes API. This facilitates integration with monitoring tools and simplifies troubleshooting. Docker Swarm offers a less integrated approach: health status is recorded per container, and collecting it across a cluster typically means querying the Docker API (for example with `docker inspect`) on each node or using external monitoring tools. This can be less convenient and may require custom scripting or integration effort. In either environment, properly configured health checks ensure that only healthy containers receive traffic, which improves resilience and reduces downtime.

Another aspect to consider is the type of probes supported. Kubernetes offers HTTP (`httpGet`), TCP (`tcpSocket`), gRPC (`grpc`), and command (`exec`) probes; neither platform provides an ICMP probe. Docker’s health check is always a command run inside the container, so an HTTP or TCP check has to be scripted, for example with `curl`. Custom checks are possible on both platforms through commands, but Kubernetes offers more built-in mechanisms and lets readiness be configured independently of liveness. This flexibility allows developers to tailor readiness probes to the needs of their application and makes Kubernetes suitable for a wider range of applications than Docker Swarm’s more limited options. Whichever platform is used, monitoring probe results provides insight into the health and responsiveness of the application.

Integrating Readiness Probes with Prometheus and Grafana: A Powerful Monitoring Duo

Prometheus, a prominent open-source monitoring and alerting system, excels at collecting metrics and integrates well with Kubernetes. Prometheus does not receive probe results directly. It uses the Kubernetes API to discover scrape targets, and readiness is usually exposed as a metric by an exporter such as kube-state-metrics (for example `kube_pod_status_ready`), which Prometheus then scrapes. This data provides a near-real-time view of application health and can flag issues before they affect many users. Effective monitoring also requires data visualization, which is where Grafana steps in. Grafana, a versatile open-source visualization and analytics platform, turns raw metrics into dashboards. Custom dashboards can display readiness probe status alongside other relevant metrics, providing a comprehensive overview of system health and revealing trends and patterns that would otherwise remain hidden in raw data.

Implementing this integration involves deploying an exporter that publishes readiness status (such as kube-state-metrics) and configuring Prometheus to scrape it. This requires specifying the correct endpoints and authentication details. Once Prometheus collects the data, Grafana can be configured with Prometheus as a data source. Creating a Grafana dashboard involves choosing appropriate panels to display readiness probe status, such as the number or share of ready pods over time. Adding other relevant metrics, such as CPU utilization or memory usage, provides valuable context. This allows operators to correlate readiness probe failures with resource consumption and pinpoint the root cause of issues more efficiently. A well-designed Grafana dashboard improves operational efficiency and supports a faster response to incidents.

Advanced users can use Prometheus’s query language, PromQL, to create alerts based on readiness probe data. This allows for automated notifications when critical failures are detected. These alerts can be integrated into existing incident management systems, streamlining the response process. For example, an alert can trigger when the share of ready pods drops below a defined threshold. This proactive approach minimizes downtime and limits user disruption. Properly configured readiness probes, coupled with monitoring and alerting, form the cornerstone of robust application deployment and operation.
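The threshold idea can be sketched in Python against the Prometheus HTTP API. In practice this logic would normally live in a Prometheus alerting rule, so the code below only illustrates the calculation. It assumes kube-state-metrics is installed (it provides `kube_pod_status_ready`); the namespace `shop`, the 0.8 threshold, the helper names, and the sample response are invented for the example.

```python
import json
import urllib.parse
import urllib.request

# One series per pod; the value is 1 when the pod is Ready, otherwise 0.
QUERY = 'kube_pod_status_ready{condition="true", namespace="shop"}'


def query_url(base: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def fetch(base: str, promql: str) -> dict:
    """Run the query against a live Prometheus server (not exercised here)."""
    with urllib.request.urlopen(query_url(base, promql), timeout=5) as resp:
        return json.load(resp)


def ready_ratio(response: dict) -> float:
    """Fraction of pods reporting Ready in an instant-vector response."""
    samples = response["data"]["result"]
    if not samples:
        return 0.0
    ready = sum(1 for s in samples if float(s["value"][1]) == 1.0)
    return ready / len(samples)


def should_alert(response: dict, threshold: float = 0.8) -> bool:
    return ready_ratio(response) < threshold


# Hand-written response in the shape the API returns for an instant vector.
sample = {"status": "success", "data": {"resultType": "vector", "result": [
    {"metric": {"pod": "web-a"}, "value": [1700000000, "1"]},
    {"metric": {"pod": "web-b"}, "value": [1700000000, "1"]},
    {"metric": {"pod": "web-c"}, "value": [1700000000, "0"]},
]}}
print(should_alert(sample))  # True: only 2 of 3 pods are ready
```

Against a real server, `should_alert(fetch("http://prometheus:9090", QUERY))` would perform the same check, where the base URL is again an assumption about the deployment.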

Advanced Techniques: Custom Readiness Probes and Health Checks

Beyond the standard HTTP and TCP readiness probes, complex applications often demand custom solutions. A custom readiness probe allows for highly specific health checks tailored to the application’s unique logic and dependencies. This approach ensures a more accurate reflection of the application’s operational status. For instance, a database-dependent application might require a custom readiness probe that verifies database connection and query execution before declaring itself ready. This goes beyond simple port checks, offering granular control over the health assessment. Implementing a custom readiness probe might involve Python or shell scripts that execute application-specific checks. The exit code of the script then determines the readiness status reported to the orchestrator: zero means ready, non-zero means not ready.

Developing such custom solutions requires careful consideration. The script should be lightweight and efficient to avoid impacting application performance. Error handling and robust logging are crucial for effective troubleshooting and monitoring. The custom readiness probe should also be designed to handle potential failures gracefully and avoid cascading issues. It should provide meaningful status updates, so operators can quickly pinpoint the source of any problems. For example, a custom readiness probe might check the status of internal queues, the health of dependent microservices, or the availability of specific data files. The key is to align the custom readiness probe’s functionality directly with the application’s critical success factors. Integration with monitoring systems will further enhance the value of this tailored approach.
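A minimal sketch of such a script in Python follows, under several assumptions: `sqlite3` stands in for the application’s real database driver, and the check names and helper functions are hypothetical. It runs each check, treats exceptions as failures instead of crashing, logs which checks failed, and maps the result to an exit code.

```python
import logging
import sqlite3
from typing import Callable, Dict, List

log = logging.getLogger("readiness")


def database_ok() -> bool:
    """Open a connection and run a trivial query (sqlite3 as a stand-in)."""
    conn = sqlite3.connect(":memory:", timeout=1)
    try:
        return conn.execute("SELECT 1").fetchone() == (1,)
    finally:
        conn.close()


def failed_checks(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run every check; an exception counts as a failure, never a crash."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception as exc:
            log.warning("check %s raised %r", name, exc)
            ok = False
        if not ok:
            failed.append(name)
    return failed


def main() -> int:
    logging.basicConfig(level=logging.INFO)
    failed = failed_checks({"database": database_ok})
    if failed:
        log.error("not ready, failing checks: %s", ", ".join(failed))
        return 1  # non-zero exit: the probe counts as failed
    return 0      # zero exit: ready
```

Used as a probe command, the script would end with `raise SystemExit(main())` so that the return value becomes the process exit code. Further checks, such as queue depth or the health of a dependent service, can be added as extra entries in the dictionary passed to `failed_checks`.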

Consider scenarios where a simple port check is insufficient. Applications may have multiple internal components or external dependencies that are crucial to their functionality but aren’t reflected in a basic readiness probe. A robust, custom readiness probe offers a more comprehensive view of system health, allowing for better operational decision-making. In Kubernetes, this usually means shipping the check script in the container image and invoking it with an `exec` probe, or exposing the same logic through an HTTP endpoint. In Docker Swarm, the script is wired in as the container’s health check command. Custom readiness probes therefore provide increased control and precision in readiness monitoring. Because the check itself runs frequently, keeping it cheap and reliable is paramount.

Troubleshooting Common Readiness Probe Issues: Best Practices and Solutions

Implementing readiness probes effectively requires careful attention to detail. Misconfigurations can lead to inaccurate health assessments, impacting application availability and potentially causing service disruptions. One common issue is false alarms, where a readiness probe reports a healthy system as not ready. This often stems from overly sensitive probe settings or temporary network glitches. To mitigate this, adjust `initialDelaySeconds` to allow sufficient time for application initialization, and raise `timeoutSeconds` or `failureThreshold` to ride out transient network fluctuations. Regularly review probe failures, which Kubernetes records as pod events, to identify patterns and address underlying issues promptly. Consider using more sophisticated health checks or implementing custom probes tailored to your application’s specific needs. Always test your readiness probe configurations thoroughly in a staging environment before deploying to production.
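The effect of probe sensitivity on false alarms can be seen by replaying a sequence of probe results. The Python sketch below models the consecutive-failure and consecutive-success counting that Kubernetes applies through `failureThreshold` and `successThreshold`; the function name and the sample sequence are illustrative, and timing is left out entirely.

```python
from typing import Iterable, List


def readiness_timeline(results: Iterable[bool], failure_threshold: int = 3,
                       success_threshold: int = 1) -> List[bool]:
    """Replay probe results and return the Ready state after each one."""
    ready = False  # a container starts out not ready
    successes = failures = 0
    timeline = []
    for ok in results:
        if ok:
            successes, failures = successes + 1, 0
            if successes >= success_threshold:
                ready = True
        else:
            failures, successes = failures + 1, 0
            if failures >= failure_threshold:
                ready = False
        timeline.append(ready)
    return timeline


# One dropped probe in an otherwise healthy run.
glitch = [True, True, False, True, True]
print(readiness_timeline(glitch, failure_threshold=1))
# [True, True, False, True, True]: the pod is briefly pulled from rotation
print(readiness_timeline(glitch, failure_threshold=3))
# [True, True, True, True, True]: the glitch is absorbed
```

A higher threshold absorbs isolated failures at the cost of slower detection of real outages, so the value is a trade-off and not something to maximize.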

Slow response times from readiness probes can also indicate underlying performance bottlenecks. This might be due to resource constraints within the application, slow database queries, or network latency. Employ performance monitoring tools to identify the root cause of the slowness. Optimize application code, database queries, and network configurations to improve responsiveness. Ensure sufficient resources are allocated to the application containers. If the application relies on external services, monitor the health and performance of those services as well. Address slow responses promptly so that probes do not exceed the `timeoutSeconds` setting: repeated timeouts mark the container as not ready and remove it from service, and if a liveness probe shares the same slow endpoint, they also trigger unnecessary restarts. Effective troubleshooting involves a methodical approach to diagnosing the cause of slow readiness probe responses.

Another challenge is misconfiguration of readiness probe parameters. Incorrectly setting values like `initialDelaySeconds`, `periodSeconds`, `timeoutSeconds`, or `failureThreshold` can lead to erratic behavior. Thoroughly review the documentation for your chosen container orchestration platform (like Kubernetes or Docker Swarm) to understand the implications of each parameter. Use a structured approach when defining your readiness probe configuration, and employ version control to track changes. Always validate your configuration before deploying it to production, using tools and techniques that simulate real-world conditions. Systematic testing and well-defined configuration management practices reduce the likelihood of readiness probe misconfiguration and improve the reliability and maintainability of your system.

Real-World Examples: Case Studies of Effective Readiness Probe Implementation

A major e-commerce platform leveraged readiness probes to ensure seamless application deployments. Their readiness probe strategy incorporated HTTP checks targeting key application endpoints. This allowed for automatic rollback if the application failed to reach a predefined level of operational readiness after deployment. The system’s uptime significantly improved, resulting in enhanced customer experience and reduced revenue loss. This successful implementation highlights the importance of comprehensive readiness probe strategies in high-traffic environments.

In the financial services industry, a large bank integrated readiness probes into its core banking system. The bank used a combination of TCP and custom health checks to monitor the availability of critical services. The readiness probe system provided real-time insights into the health of the system, enabling proactive intervention and minimizing downtime during peak transaction periods. This proactive approach supported regulatory compliance and helped prevent significant financial losses from system outages. The implementation indicates that proactive monitoring using readiness probes can substantially reduce risk and improve operational efficiency within a high-stakes environment.

A global logistics company integrated readiness probes into its order management system. They employed a multi-layered approach, using a combination of HTTP readiness probes, custom scripts for database connectivity, and a message queue health check. This thorough strategy enabled the company to quickly identify and address issues impacting order processing. The improved system stability reduced delivery delays and enhanced customer satisfaction. This example demonstrates how a well-designed readiness probe system can provide a holistic view of application health, improving efficiency and resilience in a complex, distributed system. The resulting improvement in uptime and order processing efficiency translated into improved customer satisfaction and profitability. This case study suggests that strategically implemented readiness probes can yield substantial benefits across diverse industries.

Future Trends in System Readiness and Probe Technology

The landscape of system readiness monitoring is constantly evolving. Advancements in artificial intelligence (AI) and machine learning (ML) promise to revolutionize how readiness probes function. AI-powered predictive analytics can analyze historical data from readiness probes, identifying patterns and predicting potential failures before they occur. This proactive approach allows for preventative maintenance and minimizes downtime. Expect to see more sophisticated algorithms integrated into readiness probe systems, enabling smarter, more efficient health checks. The integration of AI will improve the accuracy and responsiveness of readiness probes, reducing false positives and providing more reliable insights into system health. This evolution will significantly impact operational efficiency and system reliability.

Another significant trend is the increasing emphasis on proactive and automated remediation. Future readiness probes will not only detect problems but also trigger automated responses to resolve issues. This might involve automatically scaling resources, restarting failing containers, or routing traffic to healthy instances. This level of automation will greatly reduce the manual intervention required to maintain system health, leading to faster recovery times and improved operational efficiency. The use of serverless technologies and cloud-native architectures will also influence the design and implementation of readiness probes. Expect to see a greater emphasis on lightweight, scalable probes that can easily adapt to the dynamic nature of cloud-based environments. These probes will need to be designed to minimize overhead and resource consumption while providing comprehensive health information.

The development of standardized readiness probe formats and APIs will also facilitate greater interoperability between different monitoring tools and platforms. This standardization will simplify the integration of readiness probes into existing infrastructure, making them more accessible to a wider range of users. Moreover, the convergence of various monitoring approaches, including readiness probes, metrics, and logs, will offer a more holistic view of system health. This integrated approach will provide deeper insights and enable more effective troubleshooting. The continued development and refinement of readiness probe technology is critical for ensuring the reliability and availability of modern, complex systems. The focus on AI-driven predictions, automated remediation, and standardized protocols ensures that readiness probes will play an increasingly important role in maintaining optimal system performance.