Understanding the Basics: What Are Pods and Nodes?

In the realm of container orchestration, particularly within Kubernetes, the terms “pods” and “nodes” frequently appear. These two fundamental concepts play a crucial role in managing and scaling containerized applications. Here’s a clear definition of each:

Pods: A pod is the smallest deployable unit in the Kubernetes object model. A pod represents one instance of a running workload on your cluster and contains one or more containers. Containers in the same pod share a network namespace, so they share one IP address and can reach each other’s ports on localhost; they can also share storage volumes.

Nodes: A node is a worker machine in Kubernetes, which can be a virtual or physical machine. Each node runs the services needed to host pods and is managed by the control plane (called the “master components” in older documentation). The services on a node include the container runtime, kubelet, and kube-proxy.

In summary, pods are the atomic units of deployment, while nodes are the machines that run and manage those pods. Understanding this relationship is essential for effectively working with Kubernetes and container orchestration.
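To make the pod definition concrete, here is a minimal sketch in Python that builds a two-container Pod manifest as a plain dictionary. The pod name, container names, image tags, and ports are illustrative placeholders, not values from any real deployment.

```python
import json


def make_pod_manifest(name, containers):
    """Build a minimal Pod manifest as a plain dict.

    `containers` is a list of (container_name, image, port) tuples.
    All names and images used below are illustrative placeholders.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": cname,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
                for cname, image, port in containers
            ]
        },
    }


# Two containers in one pod: they share a network namespace, so the
# sidecar could reach the web server at localhost:80.
pod = make_pod_manifest(
    "web",
    [("nginx", "nginx:1.27", 80), ("log-sidecar", "busybox:1.36", 9000)],
)

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`. The node that ends up running the pod is chosen by the scheduler, not named in the manifest.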

Key Differences: Pods vs. Nodes

While pods and nodes are interconnected components in container orchestration systems like Kubernetes, they have distinct roles and characteristics. Here are the primary differences between pods and nodes:

- Scalability: Pods scale horizontally: you run more replicas of the same pod, usually through a controller such as a Deployment. Nodes have a finite capacity for pods, set by their CPU, memory, and other resources. Once the existing nodes are full, scaling an application further means adding nodes to the cluster.

- Resource Allocation: Pods draw on the CPU, memory, and storage of the node they run on. The scheduler uses each pod’s resource requests to pick a node with enough free capacity, and the kubelet and container runtime on that node enforce resource limits. Together, requests and limits keep one pod from starving the others on the same node.

- Fault Tolerance: Pod-level fault tolerance comes from running several replicas of a pod, ideally on different nodes, so that a controller can replace any replica that fails. Adding more containers to a single pod does not help, because all containers in a pod run on the same node and fail with it. Nodes, as physical or virtual machines, may rely on hardware-level protections such as RAID arrays for storage and redundant power supplies. Even so, node failures typically require more extensive recovery than pod failures.

For example, consider a Kubernetes cluster running a web application. The application may consist of multiple pods, each containing one or more containers for the frontend, backend, and database services. These pods run on nodes, which provide the CPU, memory, and networking they need. If a node fails, the pods running on it are lost. For pods managed by a controller such as a Deployment, the control plane automatically creates replacements on other available nodes; a pod created on its own, without a controller, is not recreated.
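The failure scenario in this example can be sketched as a toy simulation. This is not how the Kubernetes scheduler is implemented: the `Node` class, the "most free CPU" placement rule, and the node and pod names are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_millicores: int                       # allocatable CPU on this node
    pods: dict = field(default_factory=dict)  # pod name -> CPU request

    def free_cpu(self):
        return self.cpu_millicores - sum(self.pods.values())


def schedule(pod_name, cpu_request, nodes):
    """Place the pod on the node with the most free CPU that can fit it."""
    candidates = [n for n in nodes if n.free_cpu() >= cpu_request]
    if not candidates:
        return None  # a real pod would stay Pending
    target = max(candidates, key=lambda n: n.free_cpu())
    target.pods[pod_name] = cpu_request
    return target.name


def fail_node(failed, nodes):
    """Drop a failed node and try to re-place its pods on the survivors."""
    survivors = [n for n in nodes if n.name != failed.name]
    placements = {
        pod_name: schedule(pod_name, cpu, survivors)
        for pod_name, cpu in failed.pods.items()
    }
    return survivors, placements


if __name__ == "__main__":
    cluster = [Node("node-a", 2000), Node("node-b", 2000), Node("node-c", 2000)]
    for i in range(3):
        schedule(f"frontend-{i}", 500, cluster)
    cluster, moved = fail_node(cluster[0], cluster)
    print(moved)
```

In a real cluster the replacement pods are new objects with new names, created by the controller that owns them. The simulation reuses the names only to keep the bookkeeping short.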

Architectural Overview: How Pods and Nodes Interact

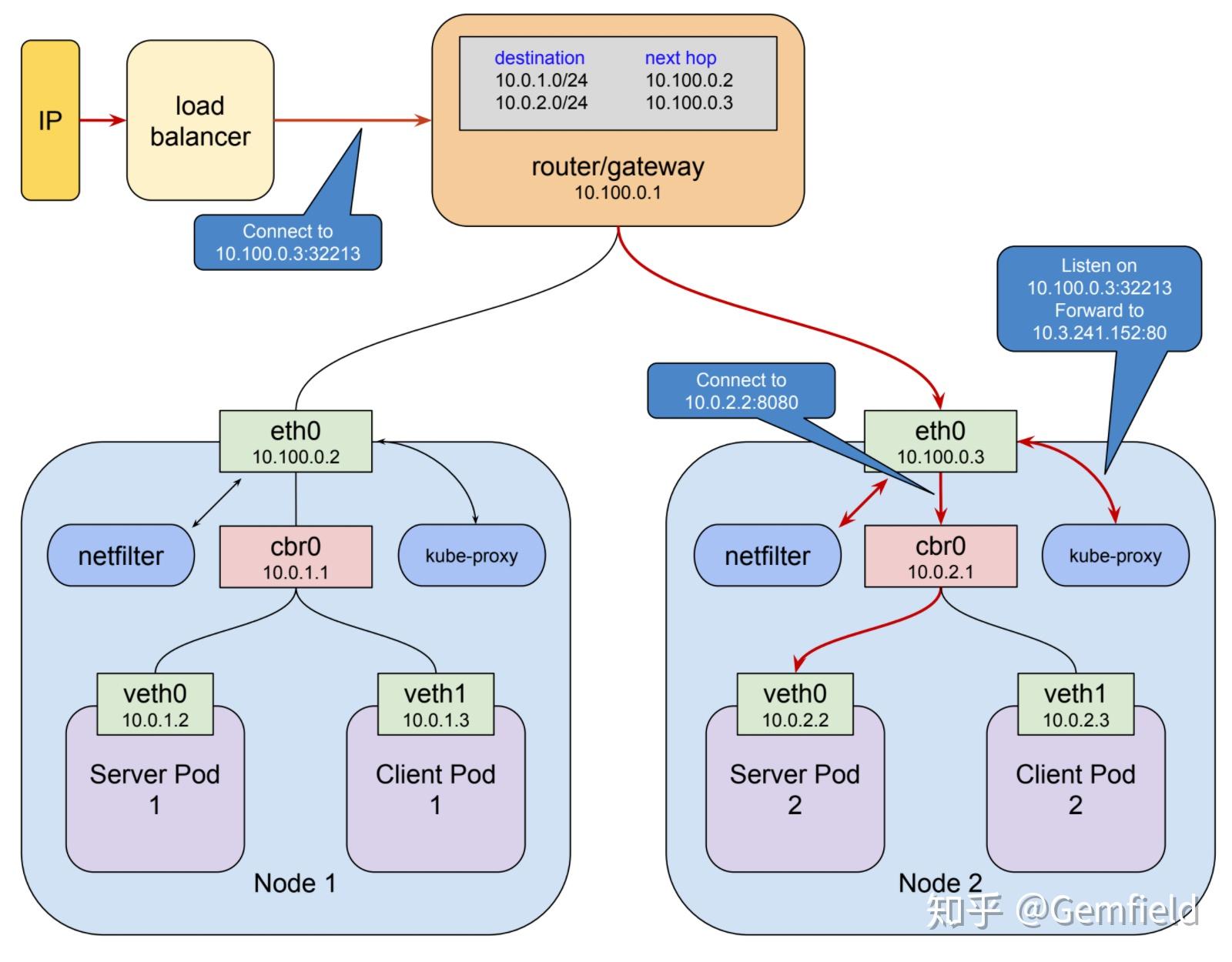

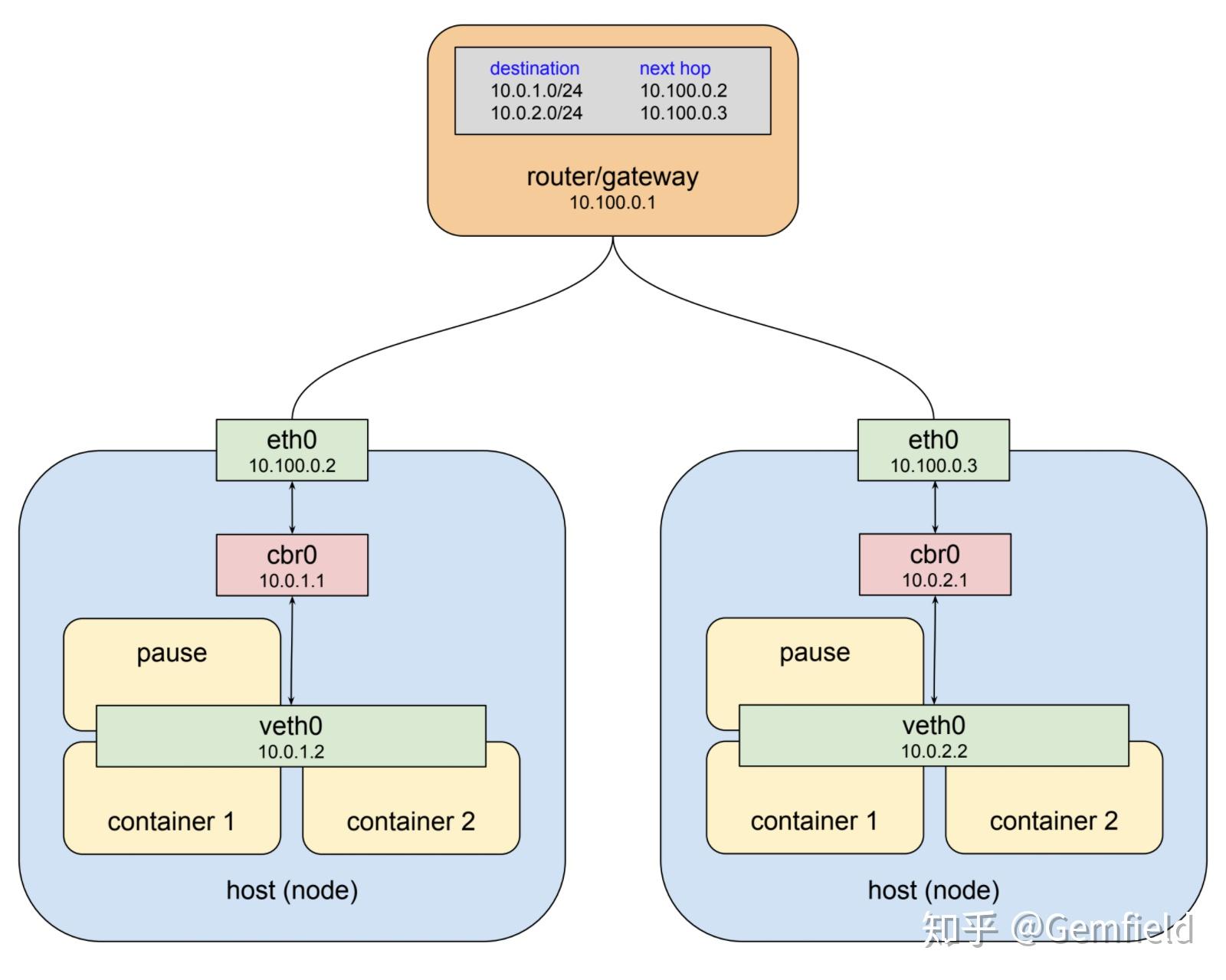

Pods and nodes interact within a Kubernetes cluster to provide a robust and scalable infrastructure for containerized applications. The diagrams in this section illustrate the relationship between pods and nodes.

In this architecture:

- Pods: Contain one or more application containers that share the same network namespace. Pods are ephemeral and can be created, destroyed, or rescheduled as needed.

- Nodes: Are the physical or virtual machines that run and manage pods. Each node contains the necessary services, such as the container runtime, kubelet, and kube-proxy, to maintain the lifecycle of pods and facilitate communication between them.

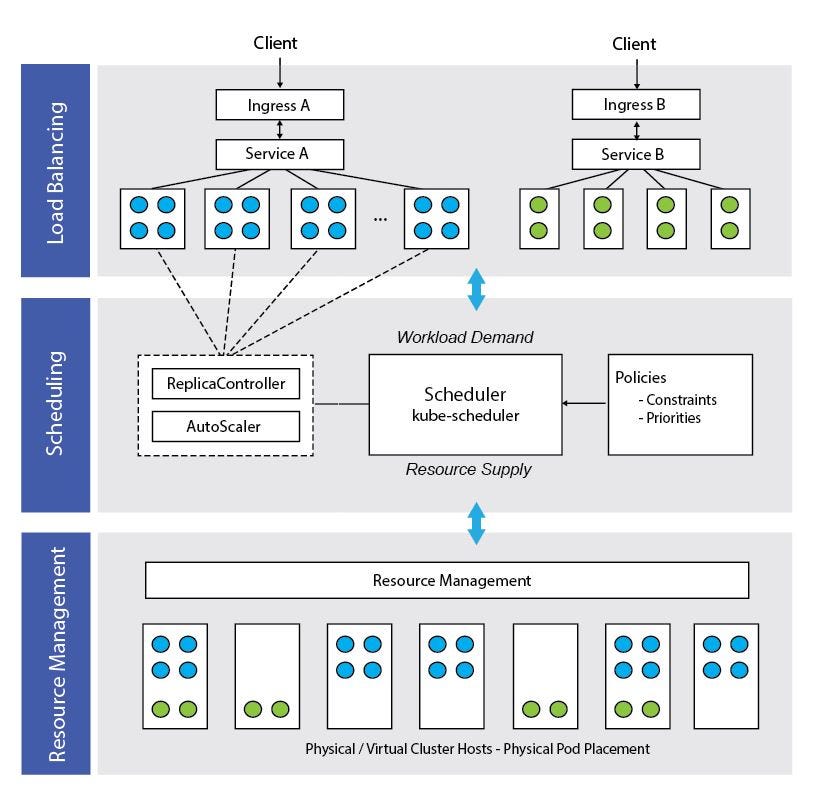

- Control Plane: Manages the cluster’s shared resources and ensures that the desired state is maintained. The control plane includes components like the API server, etcd, the scheduler, and the controller manager.

Pods and nodes are coordinated through the Kubernetes API: the control plane records the desired state there, and the kubelet on each node watches it. The kubelet registers the node with the API server and ensures that the pods assigned to the node are running in the desired state. The kube-proxy service, also running on each node, maintains the network rules that route traffic addressed to a Service to one of its backing pods. Direct pod-to-pod connectivity is provided by the cluster’s network plugin.
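The idea of driving observed state toward desired state can be sketched as a small reconciliation function. This is a toy model under simplifying assumptions, not kubelet code: state is reduced to a mapping from pod name to image, and the pod names and images are hypothetical.

```python
def reconcile(desired, actual):
    """Return the actions needed to move `actual` toward `desired`.

    Both arguments map a pod name to the container image it should run
    or is running. This is a toy control loop, not kubelet code.
    """
    actions = []
    for name, image in sorted(desired.items()):
        if name not in actual:
            actions.append(("start", name, image))
        elif actual[name] != image:
            actions.append(("restart", name, image))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("stop", name, None))
    return actions


def apply_actions(actions, actual):
    """Apply the actions to a copy of the observed state."""
    state = dict(actual)
    for verb, name, image in actions:
        if verb == "stop":
            del state[name]
        else:
            state[name] = image
    return state


if __name__ == "__main__":
    desired = {"api": "api:v2", "web": "web:v1"}
    actual = {"api": "api:v1", "old-job": "job:v1"}
    plan = reconcile(desired, actual)
    print(plan)
    print(reconcile(desired, apply_actions(plan, actual)))  # nothing left to do
```

Running the loop again after the actions have been applied yields an empty plan, which is the property that lets a control loop run repeatedly without side effects.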

Benefits and Drawbacks: Weighing the Pros and Cons

When comparing pods and nodes in Kubernetes or other container orchestration platforms, it’s essential to understand the advantages and disadvantages of each. Here are some factors to consider:

Pods

- Pros: Pods offer a high degree of flexibility and scalability, allowing users to create and manage multiple instances of an application or service with ease. They also promote efficient resource utilization by sharing resources among containers within the same pod.

- Cons: Pods are ephemeral and can be terminated or rescheduled at any time, so stateful applications need extra care, for example persistent volumes and StatefulSets. Additionally, managing many pods can become complex, requiring careful resource allocation and orchestration.

Nodes

- Pros: Nodes provide a stable foundation for running and managing pods, offering built-in fault tolerance mechanisms and dedicated resources. They also offer a centralized management point for monitoring, troubleshooting, and securing the applications running on them.

- Cons: A node is a single point of failure for the pods running on it unless replicas also run on other nodes, and its capacity is limited by the available resources. Adding or removing nodes from a cluster can also be time-consuming and may require careful planning to maintain the desired state.

When planning how many nodes to run and how densely to pack pods onto them, consider performance, maintenance, and cost. For example, a high-availability, resource-intensive application benefits from several nodes, with multiple pod replicas of each service spread across them. For a smaller project or a tight budget, fewer nodes with more pods per node can be more cost-effective, at the price of losing more pods when a single node fails.
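The trade-off between node count and pod density can be roughed out with simple arithmetic. The sketch below is an estimate under stated assumptions: every pod has identical requests, and system overhead, DaemonSets, and fragmentation are ignored. The function name and the sample figures are hypothetical. The default of 110 pods per node matches the kubelet default, though a cluster may be configured differently.

```python
import math


def nodes_needed(replicas, cpu_request_m, mem_request_mib,
                 node_cpu_m, node_mem_mib, max_pods_per_node=110):
    """Estimate how many identical nodes a workload needs.

    Returns (node_count, pods_per_node). Treat node_count as a lower
    bound: overhead and uneven packing are deliberately ignored.
    """
    per_node = min(
        node_cpu_m // cpu_request_m,       # pods that fit by CPU
        node_mem_mib // mem_request_mib,   # pods that fit by memory
        max_pods_per_node,                 # configured pod cap
    )
    if per_node == 0:
        raise ValueError("a single pod does not fit on this node size")
    return math.ceil(replicas / per_node), per_node


if __name__ == "__main__":
    # 40 replicas, each requesting 250m CPU and 512 MiB of memory.
    print(nodes_needed(40, 250, 512, node_cpu_m=4000, node_mem_mib=16384))
    print(nodes_needed(40, 250, 512, node_cpu_m=8000, node_mem_mib=32768))
```

With these sample figures, the smaller node size needs three nodes at 16 pods each, while the larger size needs two nodes at 32 pods each. The denser layout uses fewer machines but loses more pods per node failure.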

How to Choose: Selecting the Right Approach

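Pods and nodes are not alternatives: every pod runs on a node. The practical decision is how many nodes to provision and how to distribute pods across them. For your specific use case, consider the following factors: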

- Project requirements: Determine the needs of your project, such as resource requirements, scalability, and fault tolerance. Applications that need high availability or run resource-intensive tasks generally call for multiple nodes with several pod replicas per service. Smaller projects can start with fewer nodes and a higher pod density.

- Budget: Cost is driven mainly by nodes, since you pay for the machines whether or not their capacity is used. Weigh the cost of running and maintaining nodes against the savings from packing pods efficiently onto them.

- Team expertise: Evaluate your team’s familiarity with container orchestration platforms and their ability to manage and maintain the chosen approach. If your team is new to containerization, starting with a simpler setup using fewer nodes and more pods per node might be more manageable.

In any Kubernetes environment, pods and nodes work together; the balance of flexibility, scalability, and fault tolerance you get depends on how you size nodes and distribute pods across them. By understanding the key differences, benefits, and drawbacks of each, you can make informed decisions about the optimal setup for your specific use case.

Implementation Strategies: Best Practices for Pods and Nodes

Implementing and managing pods and nodes effectively requires careful planning and adherence to best practices. Here are some tips to help you get started:

Resource Allocation

Properly allocate resources for your pods and nodes to ensure optimal performance and prevent resource contention. Use Kubernetes resource requests and limits: a request is the amount of CPU or memory the scheduler reserves for a container when placing its pod, and a limit is the maximum the container is allowed to use. This helps maintain a stable environment and prevents individual pods from consuming all available resources on a node.
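As a sketch of how requests and limits look and how their quantities compare, the snippet below defines a container spec as a Python dictionary and checks that each request does not exceed its limit. The container name, image, and values are placeholders, and the parsers handle only the common `m`, `Ki`, `Mi`, and `Gi` forms rather than the full Kubernetes quantity syntax.

```python
def parse_cpu(quantity):
    """Convert a CPU quantity such as "500m" or "2" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


_MEM_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_memory(quantity):
    """Convert a memory quantity such as "256Mi" to bytes.

    Only Ki/Mi/Gi suffixes and plain byte counts are handled here.
    """
    for suffix, factor in _MEM_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


# Placeholder container spec; the field layout follows the Pod API.
container = {
    "name": "api",
    "image": "example/api:1.0",
    "resources": {
        "requests": {"cpu": "250m", "memory": "256Mi"},
        "limits": {"cpu": "500m", "memory": "512Mi"},
    },
}


def requests_within_limits(resources):
    """Check that each request does not exceed the matching limit."""
    req, lim = resources["requests"], resources["limits"]
    return (
        parse_cpu(req["cpu"]) <= parse_cpu(lim["cpu"])
        and parse_memory(req["memory"]) <= parse_memory(lim["memory"])
    )


if __name__ == "__main__":
    print(requests_within_limits(container["resources"]))
```

Setting requests below limits, as here, lets a container burst above its reserved share when the node has spare capacity. Setting them equal gives more predictable performance at the cost of lower packing density.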

Monitoring and Troubleshooting

Monitor the health and performance of your pods and nodes regularly to identify and address issues promptly. Use tools like Prometheus, Grafana, or commercial monitoring solutions to collect and visualize metrics. For troubleshooting, consider using command-line tools like kubectl or platform-specific UIs to diagnose and resolve problems.

Security

Implement security best practices to protect your pods and nodes from potential threats. Use network policies to control traffic between pods, store sensitive data such as passwords and tokens in Secrets (ConfigMaps are intended for non-sensitive configuration), and leverage role-based access control (RBAC) to define user permissions. Additionally, consider using container runtime security features, such as seccomp and AppArmor, to restrict what containers can do and reduce the attack surface.
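To illustrate the network policy idea, here is a sketch of a NetworkPolicy manifest written as a Python dictionary, together with a toy evaluator for it. The policy name, labels, and port are hypothetical, and the evaluator is a simplification: it looks at one policy, pod selectors in the same namespace, and exact port matches only.

```python
import json

# Allow ingress to "backend" pods only from "frontend" pods on TCP 8080.
network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "backend-allow-frontend", "namespace": "default"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "backend"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }
        ],
    },
}


def matches(selector, labels):
    """True if every key/value in matchLabels is present in `labels`."""
    wanted = selector.get("matchLabels", {})
    return all(labels.get(k) == v for k, v in wanted.items())


def ingress_allowed(policy, source_labels, port):
    """Toy check of one policy for traffic within the same namespace."""
    for rule in policy["spec"]["ingress"]:
        from_ok = any(
            matches(peer["podSelector"], source_labels) for peer in rule["from"]
        )
        port_ok = any(p["port"] == port for p in rule["ports"])
        if from_ok and port_ok:
            return True
    return False


if __name__ == "__main__":
    print(json.dumps(network_policy, indent=2))
    print(ingress_allowed(network_policy, {"app": "frontend"}, 8080))
```

Network policies only take effect when the cluster’s network plugin supports them, so applying a manifest like this on a cluster without such a plugin has no effect on traffic.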

Continuous Integration and Continuous Deployment (CI/CD)

Adopt CI/CD practices to streamline the deployment of your workloads and cluster configuration. Use tools like Jenkins, GitLab, or GitHub Actions to automate the build, test, and deployment processes. This helps ensure consistent and reliable deployments, reduces human error, and accelerates the development lifecycle.

Scaling and Resilience

Plan for scaling and resilience by implementing autoscaling and self-healing strategies. Use the Kubernetes Horizontal Pod Autoscaler (HPA) to adjust the number of pod replicas based on observed metrics such as CPU utilization, and the Cluster Autoscaler to add nodes when pods cannot be scheduled and remove nodes that are underused. Additionally, configure pod disruption budgets (PDBs) to ensure a minimum level of availability during voluntary disruptions, such as node drains for maintenance or cluster upgrades.
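The scaling rule documented for the Horizontal Pod Autoscaler is, in essence, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, skipped when the ratio is within a small tolerance of 1. The sketch below implements that rule plus a simple disruption-budget check. The function names, the replica bounds, and the sample numbers are assumptions for illustration, and details such as stabilization windows and unready pods are left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do nothing
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


def allowed_disruptions(healthy_pods, min_available):
    """How many pods a PDB with `minAvailable` lets you evict right now."""
    return max(0, healthy_pods - min_available)


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # 5 healthy pods with minAvailable set to 4.
    print(allowed_disruptions(5, 4))
```

With these inputs the autoscaler would move from 4 to 6 replicas, and a node drain could evict one pod at a time while the budget is respected.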

Real-World Applications: Case Studies and Success Stories

Pods and nodes have been successfully implemented in various real-world scenarios, demonstrating their versatility and effectiveness in container orchestration. Here are a few examples:

Example 1: Scaling Microservices with Pods and Nodes

A leading e-commerce company leveraged pods and nodes to scale their microservices-based architecture. By deploying multiple pods for each microservice, they achieved high availability and fault tolerance. Nodes were added or removed based on resource utilization and demand, ensuring optimal performance and cost efficiency.

Example 2: Containerizing Legacy Applications with Pods and Nodes

A financial institution with a large monolithic application decided to modernize their infrastructure using pods and nodes. By containerizing individual components and orchestrating them with Kubernetes, they improved scalability, resource utilization, and deployment speed. This modernization strategy allowed them to maintain their existing application while benefiting from the advantages of containerization.

Example 3: Disaster Recovery and High Availability with Pods and Nodes

A healthcare provider implemented a disaster recovery and high availability strategy using pods and nodes. By replicating pods across multiple nodes and regions, they ensured that their critical applications remained available even in the event of a catastrophic failure. This approach not only improved system resilience but also reduced recovery time objectives (RTOs) and recovery point objectives (RPOs).

Testimonials

“By using pods and nodes, we were able to significantly reduce our deployment times and improve our application’s scalability. The flexibility and ease of management have been a game-changer for our team.” – Lead Developer, E-commerce Company

“Containerizing our legacy application with pods and nodes has been a smooth and rewarding process. We’ve seen improvements in resource utilization, scalability, and deployment speed.” – IT Manager, Financial Institution

“Our disaster recovery and high availability strategy, based on pods and nodes, has given us peace of mind. We know our critical applications will remain available, even in the face of unexpected failures.” – CTO, Healthcare Provider

Future Trends: The Evolution of Pods and Nodes

As container orchestration technologies like Kubernetes continue to mature, pods and nodes are expected to evolve and adapt to new challenges and opportunities. Here are some potential future developments and their implications for the industry:

Serverless Architectures and Functions as a Service (FaaS)

Serverless architectures and FaaS platforms, such as AWS Lambda and Google Cloud Functions, are gaining popularity due to their ease of use, low maintenance, and cost-effective scaling. While not directly related to pods and nodes, these technologies may influence the way container orchestration tools manage resources and deploy applications. Projects such as Knative already layer serverless workloads on top of Kubernetes, and future versions of Kubernetes might integrate such capabilities more closely, allowing users to leverage the benefits of both containerization and FaaS.

Multi-Cloud and Hybrid Cloud Orchestration

As organizations adopt multi-cloud and hybrid cloud strategies, managing pods and nodes across different environments becomes increasingly complex. Future developments in pod and node technology might focus on improving interoperability, standardization, and automation across various cloud providers and on-premises infrastructures. This would enable organizations to build more resilient, flexible, and cost-efficient systems that can adapt to changing business needs and market conditions.

Artificial Intelligence (AI) and Machine Learning (ML) Integration

AI and ML technologies are becoming essential components of modern software applications. Future versions of pod and node management tools might incorporate AI and ML capabilities to optimize resource allocation, predict and prevent failures, and automate complex tasks. This would lead to more intelligent, self-healing, and self-organizing systems that can adapt to changing workloads and application requirements in real-time.

Security and Compliance Enhancements

Security and compliance continue to be critical concerns for organizations adopting container orchestration technologies. Future developments in pod and node management tools might focus on enhancing security features, such as network segmentation, role-based access control, and encryption, to meet the evolving needs of enterprises and comply with industry regulations. This would help organizations build more secure and compliant systems while maintaining the flexibility and agility offered by containerization.

Continuous Improvement and Standardization

As the container orchestration landscape matures, we can expect continued improvements in pod and node management tools, focusing on standardization, usability, and performance. This would lead to more robust, scalable, and maintainable systems that can better support the needs of modern enterprises and help them stay competitive in an ever-changing technological landscape.