What are Pods in Kubernetes?

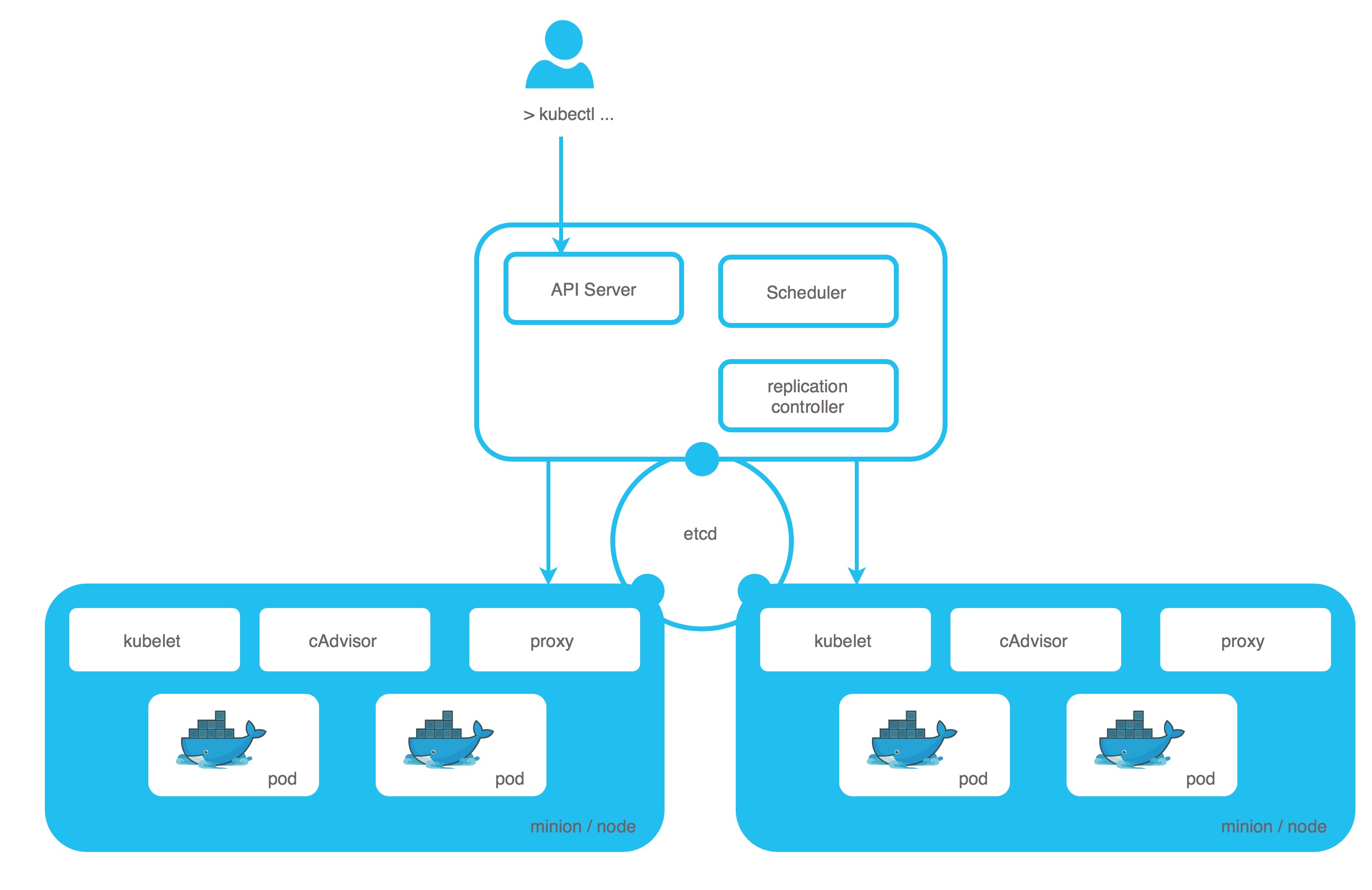

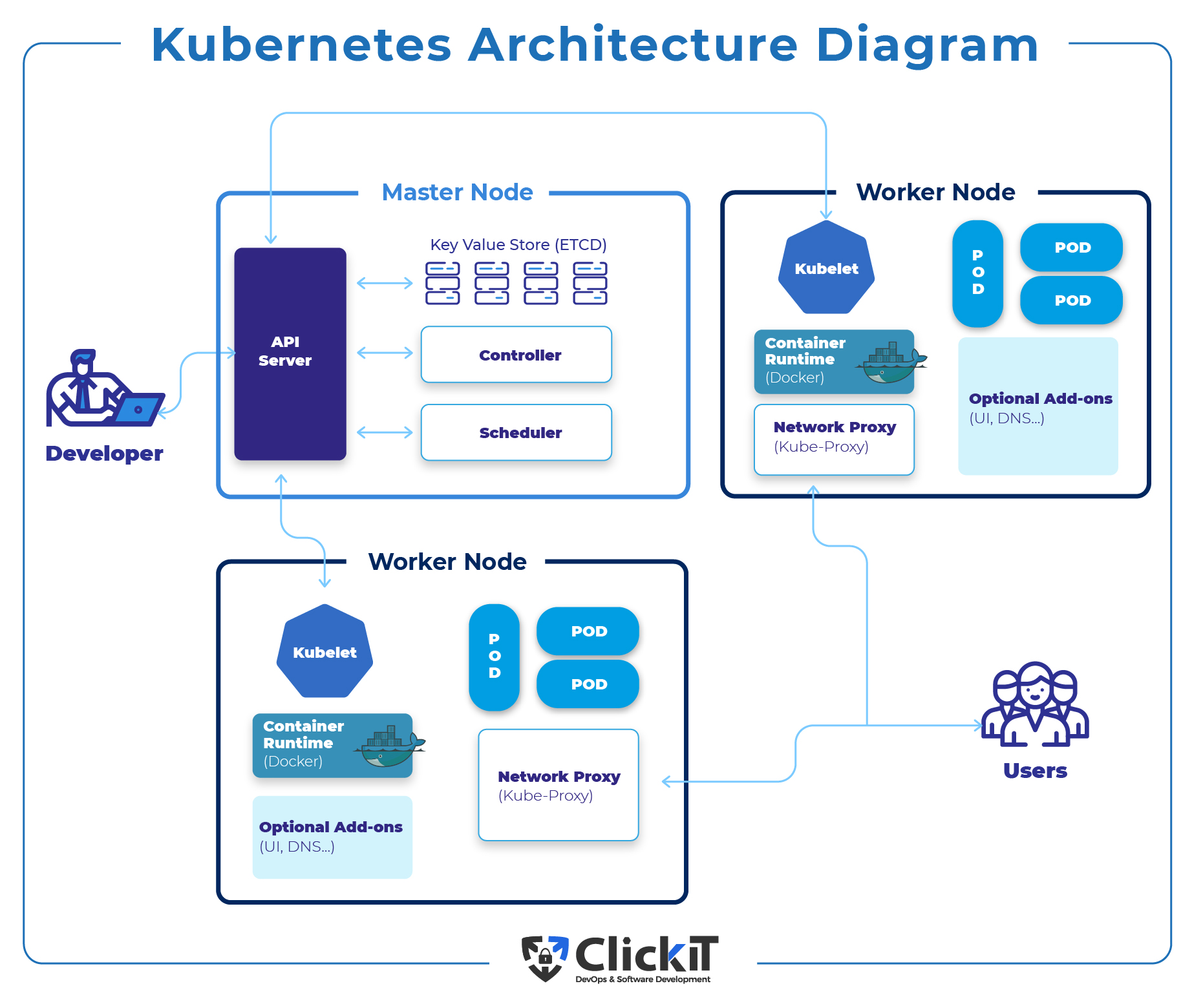

In Kubernetes, a Pod is the smallest deployable unit in the Kubernetes object model. A Pod represents a running process on your cluster and contains one or more containers. Containers in a Pod share the same network namespace, so they share one IP address and can reach each other’s ports on localhost. They can also mount the same volumes, which makes it easy to exchange data between containers. A Pod is scheduled as a single unit: all of its containers run on the same node and are started and stopped together.

Key Features and Benefits of Pods in Kubernetes

Pods in Kubernetes offer several key features and benefits that make them an essential component of container orchestration. One of the primary benefits of Pods is resource sharing. Containers in a Pod can share resources such as storage and network, making it easier to manage and coordinate data between containers. This co-location of containers also improves overall cluster efficiency by reducing the overhead associated with managing multiple containers separately.

Another significant advantage of Pods is communication between containers. Containers in a Pod can communicate with each other using localhost, making it easy to set up and manage communication between containers. This feature is particularly useful when deploying applications that require multiple containers to work together to provide a complete service.
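As a minimal sketch of this sharing, the Python snippet below builds a two-container Pod manifest as a dictionary and prints it as JSON, a format kubectl accepts. The Pod name, container names, images, and mount paths are illustrative assumptions, not taken from a real deployment.

```python
import json


def shared_pod(name):
    """Build a two-container Pod manifest with one shared emptyDir volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # An emptyDir volume exists as long as the Pod does and is
            # visible to every container that mounts it.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",  # hypothetical container name
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [
                        {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                    ],
                },
                {
                    "name": "content-writer",  # hypothetical helper container
                    "image": "busybox:1.36",
                    "command": [
                        "sh",
                        "-c",
                        "while true; do date > /data/index.html; sleep 5; done",
                    ],
                    # Same volume, mounted at a different path in this container.
                    "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
                },
            ],
        },
    }


manifest = shared_pod("shared-pod")
print(json.dumps(manifest, indent=2))
```

Both containers mount the same volume, and because they also share the Pod's network namespace, the helper could reach the web server at localhost:80.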

Pods also simplify the management of related containers. By grouping related containers into a single Pod, you can manage and deploy them as a single entity. This approach reduces the complexity of managing multiple containers and makes it easier to ensure that related containers are deployed and managed together.

In summary, Pods in Kubernetes offer several key features and benefits, including resource sharing, co-location, and communication between containers. These features simplify the management of related containers and improve overall cluster efficiency, making Pods an essential component of container orchestration.

How to Create and Deploy Pods in Kubernetes

To create and deploy Pods in Kubernetes, you can use YAML or JSON files to define the Pod specifications. A Pod definition includes information such as the container image, resource requests, and network ports. Here is an example of a simple Pod definition:

    {
      "kind": "Pod",
      "apiVersion": "v1",
      "metadata": {
        "name": "my-pod"
      },
      "spec": {
        "containers": [
          {
            "name": "my-container",
            "image": "my-image:latest",
            "ports": [
              { "containerPort": 80 }
            ]
          }
        ]
      }
    }

To deploy the Pod, you can use the kubectl command-line tool. Here is an example of how to create and deploy a Pod using kubectl:

    $ kubectl create -f my-pod.yaml
    pod/my-pod created

To view the status of the Pod, you can use the following command:

    $ kubectl get pods
    NAME     READY   STATUS    RESTARTS   AGE
    my-pod   1/1     Running   0          10s

To delete the Pod, you can use the following command:

    $ kubectl delete -f my-pod.yaml
    pod "my-pod" deleted

In summary, to create and deploy Pods in Kubernetes, you can use YAML or JSON files to define the Pod specifications and the kubectl command-line tool to manage Pods. By following these steps, you can easily create and manage Pods in your Kubernetes cluster.

Pod Lifecycle and Management in Kubernetes

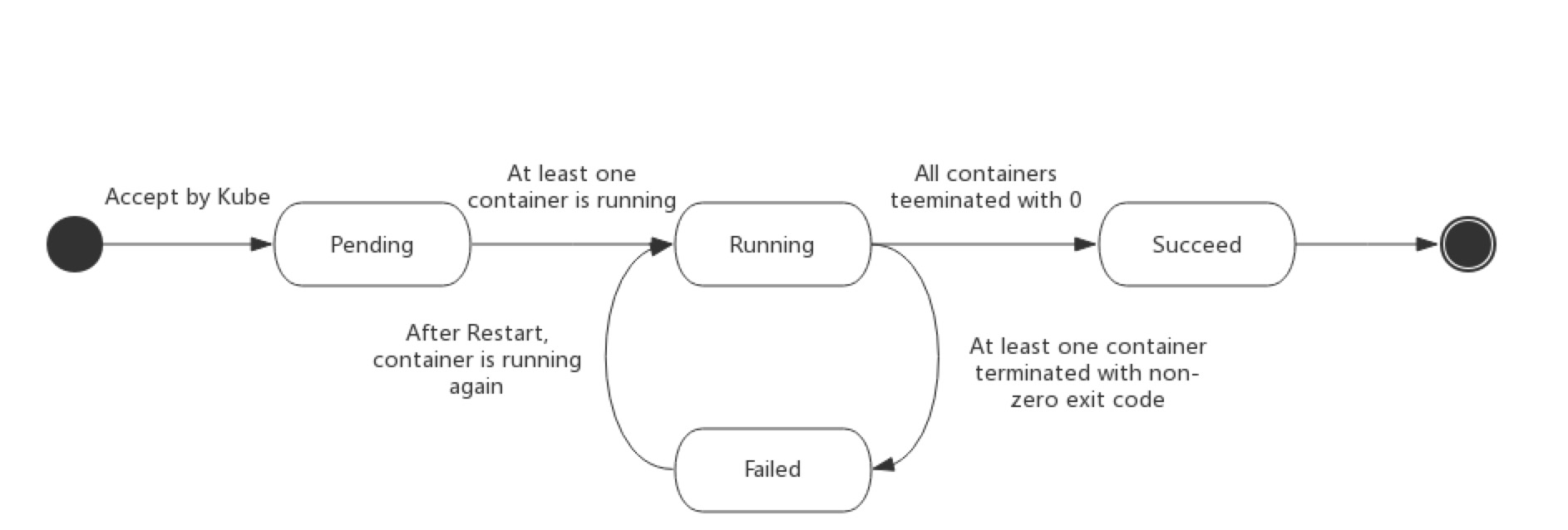

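Pods in Kubernetes have a well-defined lifecycle. A Pod is created, moves through the Pending and Running phases, ends in Succeeded or Failed, and is eventually deleted. Workloads built from Pods can also be scaled along the way. Understanding the lifecycle of Pods is essential for managing and troubleshooting applications running in a Kubernetes cluster.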

Creation

Pods can be created manually using YAML or JSON files or programmatically using the Kubernetes API. When a Pod is created, it starts in the Pending phase while Kubernetes schedules it on a node in the cluster based on resource availability and other constraints. Once the Pod is scheduled, its containers are started and the Pod enters the Running phase. It becomes ready to receive traffic when its containers pass their readiness checks.
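The difference between a running Pod and a ready Pod can be checked from the Pod's status. The sketch below assumes you already have a Pod object as a dictionary, for example parsed from the output of kubectl get pod my-pod -o json. The two sample Pods are made-up data for illustration.

```python
def is_ready(pod):
    """Return True if the Pod is in the Running phase and its Ready condition is True."""
    status = pod.get("status", {})
    if status.get("phase") != "Running":
        return False
    return any(
        cond.get("type") == "Ready" and cond.get("status") == "True"
        for cond in status.get("conditions", [])
    )


# Hypothetical status snippets, trimmed to the fields used above.
pending_pod = {"status": {"phase": "Pending"}}
running_pod = {
    "status": {
        "phase": "Running",
        "conditions": [
            {"type": "PodScheduled", "status": "True"},
            {"type": "Ready", "status": "True"},
        ],
    }
}

print(is_ready(pending_pod))  # False
print(is_ready(running_pod))  # True
```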

Scaling

Individual Pods are not scaled directly. Instead, a workload controller such as a Deployment or ReplicaSet runs several identical replicas of a Pod template, and you change the replica count manually or automatically. Manual scaling can be done using the kubectl command-line tool (for example, kubectl scale) or the Kubernetes dashboard. Automatic scaling can be achieved using the Kubernetes Horizontal Pod Autoscaler (HPA), which adjusts the number of replicas based on CPU utilization or other metrics.
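To make the autoscaling decision concrete, here is a small sketch of the scaling rule described in the Kubernetes documentation: the desired replica count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The function name, the 10% tolerance default, and the sample numbers are assumptions for illustration. The real controller also handles missing metrics, unready Pods, and stabilization windows, which are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU with a 60% target -> scale out.
print(desired_replicas(4, 90, 60, min_replicas=1, max_replicas=10))   # 6
# 63% versus a 60% target is within tolerance -> no change.
print(desired_replicas(4, 63, 60, min_replicas=1, max_replicas=10))   # 4
# A large spike is capped at max_replicas.
print(desired_replicas(4, 300, 60, min_replicas=1, max_replicas=10))  # 10
```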

Deletion

Pods can be deleted manually using the kubectl command-line tool or programmatically using the Kubernetes API. When a Pod is deleted, Kubernetes asks its containers to shut down gracefully, stops them once the termination grace period expires, and then removes the Pod from the cluster. If the Pod is managed by a controller such as a ReplicaSet or Deployment, the controller creates a replacement Pod to maintain the desired number of replicas.

Monitoring and Troubleshooting

Monitoring and troubleshooting Pods in Kubernetes can be done using built-in tools such as kubectl describe and kubectl logs. These tools provide detailed information about the status and logs of Pods and containers. Third-party plugins and tools can also be used to monitor and troubleshoot Pods in Kubernetes clusters.
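As a rough sketch, the helpers below assemble the kubectl describe and kubectl logs invocations you would typically run while troubleshooting. The function names are hypothetical, the Pod and container names reuse the earlier my-pod example, and actually running the commands requires kubectl and access to a cluster.

```python
import shlex


def describe_cmd(pod, namespace="default"):
    """Command that shows a Pod's events, conditions, and container states."""
    return ["kubectl", "describe", "pod", pod, "-n", namespace]


def logs_cmd(pod, container=None, previous=False, namespace="default"):
    """Command that prints container logs for a Pod."""
    cmd = ["kubectl", "logs", pod, "-n", namespace]
    if container:
        # Needed when the Pod has more than one container.
        cmd += ["-c", container]
    if previous:
        # Logs of the previous container instance, useful after a crash.
        cmd.append("--previous")
    return cmd


for cmd in (
    describe_cmd("my-pod"),
    logs_cmd("my-pod", container="my-container"),
    logs_cmd("my-pod", container="my-container", previous=True),
):
    print(shlex.join(cmd))
```

The lists can be passed to subprocess.run if you want to execute them from a script.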

In summary, Pods in Kubernetes have a well-defined lifecycle, which includes creation, scaling, and deletion. Understanding the lifecycle of Pods is essential for managing and troubleshooting applications running in a Kubernetes cluster. Kubernetes provides built-in tools for monitoring and troubleshooting Pods, and third-party plugins and tools can also be used to enhance the monitoring and troubleshooting capabilities of Kubernetes clusters.

Advanced Pod Concepts in Kubernetes

While the basic concepts of Pods in Kubernetes are relatively straightforward, there are several advanced concepts that can help improve the reliability and performance of Kubernetes clusters. In this section, we will explore some of these advanced concepts, including Pod Disruption Budgets, Pod Anti-Affinity, and Pod Priorities.

Pod Disruption Budgets

Pod Disruption Budgets (PDBs) allow you to define the minimum number of Pods that must be available at any given time. A PDB selects a set of Pods by label and limits how many of them can be down at once because of voluntary disruptions, such as draining a node for planned maintenance. If an eviction would drop the number of available Pods below the budget, Kubernetes refuses it until enough Pods are healthy again. PDBs do not protect against involuntary disruptions such as a node hardware failure, although Pods lost that way still count against the budget.
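The following sketch builds a PDB manifest and shows the arithmetic behind it. The PDB name, the app label, and the replica counts are illustrative assumptions, and disruptions_allowed is a simplified stand-in for the value Kubernetes reports in the PDB status.

```python
import json


def pod_disruption_budget(name, app_label, min_available):
    """Build a PodDisruptionBudget that applies to Pods labeled app=<app_label>."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name},
        "spec": {
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


def disruptions_allowed(healthy_pods, min_available):
    """How many Pods may be evicted voluntarily right now (never negative)."""
    return max(0, healthy_pods - min_available)


pdb = pod_disruption_budget("my-app-pdb", "my-app", min_available=2)
print(json.dumps(pdb, indent=2))
print(disruptions_allowed(healthy_pods=3, min_available=2))  # 1
print(disruptions_allowed(healthy_pods=2, min_available=2))  # 0
```

With three healthy replicas and a minimum of two, a node drain may evict one Pod at a time and has to wait for its replacement before evicting the next.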

Pod Anti-Affinity

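Pod Anti-Affinity allows you to specify rules that keep a Pod off any node (or other topology domain, such as a zone) that already runs Pods with matching labels. It is typically used to spread the replicas of one application across multiple nodes, improving the overall reliability and availability of the application. A rule can be required, in which case the scheduler will not place the Pod if the rule cannot be satisfied, or preferred, in which case the scheduler tries to honor it but may fall back. By using Pod Anti-Affinity, you can avoid scenarios where a single node failure takes down multiple Pods and impacts the availability of the application.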
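Here is a minimal sketch of a required anti-affinity rule, again expressed as a Python dictionary that serializes to a JSON manifest. The Pod name, the app=web label, and the image are assumptions chosen for illustration.

```python
import json


def spread_across_nodes(app_label):
    """Affinity block: never share a node with another Pod labeled app=<app_label>."""
    return {
        "podAntiAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {"matchLabels": {"app": app_label}},
                    # One Pod per node; a zone label here would spread across zones.
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-1", "labels": {"app": "web"}},  # hypothetical names
    "spec": {
        "affinity": spread_across_nodes("web"),
        "containers": [{"name": "web", "image": "nginx:1.25"}],
    },
}

print(json.dumps(pod, indent=2))
```

Because the rule is required, a replica stays Pending when every node already runs a matching Pod. Using preferredDuringSchedulingIgnoredDuringExecution instead would relax that.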

Pod Priorities

Pod Priorities allow you to assign a priority level to Pods through a PriorityClass, which helps Kubernetes make better scheduling decisions. Pending Pods with a higher priority are placed ahead of lower-priority Pods in the scheduling queue. If no node has enough free resources for a high-priority Pod, the scheduler can preempt (evict) lower-priority Pods to make room.
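A short sketch of how the two objects fit together: a PriorityClass defines the numeric priority, and a Pod refers to it by name. The class name critical-apps, the value, and the Pod details are assumptions for illustration.

```python
import json

# Cluster-wide object that maps a name to a numeric priority.
priority_class = {
    "apiVersion": "scheduling.k8s.io/v1",
    "kind": "PriorityClass",
    "metadata": {"name": "critical-apps"},  # hypothetical class name
    "value": 1000000,  # higher numbers mean higher priority
    "globalDefault": False,
    "description": "For Pods that must be scheduled before best-effort work.",
}

# A Pod opts in by naming the class in its spec.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "my-pod"},
    "spec": {
        "priorityClassName": priority_class["metadata"]["name"],
        "containers": [{"name": "my-container", "image": "my-image:latest"}],
    },
}

for manifest in (priority_class, pod):
    print(json.dumps(manifest, indent=2))
```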

In summary, advanced Pod concepts in Kubernetes, such as Pod Disruption Budgets, Pod Anti-Affinity, and Pod Priorities, can help improve the reliability and performance of Kubernetes clusters. By using these advanced concepts, you can keep critical applications available during planned maintenance, distribute Pods across multiple nodes, and ensure that critical applications are given priority when scheduling Pods.

Real-World Examples of Pods in Kubernetes

Pods are a fundamental building block in Kubernetes, and they are used extensively in real-world applications. In this section, we will explore some real-world examples of how Pods are used in Kubernetes clusters to deploy and manage applications.

Example 1: Deploying a Multi-Container Application

Pods can contain one or more containers, which makes them a natural fit for tightly coupled multi-container applications. For example, a web application Pod might consist of a main container running a web server and a helper (sidecar) container that ships its logs or refreshes its content. By deploying these containers as a single Pod, you ensure that they are co-located on the same node and can communicate with each other using localhost. Components that need to scale or fail independently, such as a frontend and its database, are usually better placed in separate Pods.

Example 2: Deploying a Stateful Application

Pods can also be used to deploy stateful applications, such as databases. By using a StatefulSet controller in Kubernetes, you can ensure that each Pod is assigned a unique hostname and persistent storage. This makes it possible to deploy stateful applications, such as MySQL or PostgreSQL, in a Kubernetes cluster.
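The stable identities follow a predictable naming scheme, which the sketch below reproduces. It assumes a StatefulSet named mysql, a headless Service also named mysql, a volume claim template named data, and the default cluster domain cluster.local. All of these names are illustrative.

```python
def statefulset_identities(statefulset, service, replicas,
                           claim_template="data", namespace="default"):
    """List (pod name, DNS name, PVC name) for each replica of a StatefulSet."""
    identities = []
    for ordinal in range(replicas):
        pod_name = f"{statefulset}-{ordinal}"
        # Stable DNS name provided by the headless Service.
        dns_name = f"{pod_name}.{service}.{namespace}.svc.cluster.local"
        # Each Pod gets its own PersistentVolumeClaim from the template.
        pvc_name = f"{claim_template}-{pod_name}"
        identities.append((pod_name, dns_name, pvc_name))
    return identities


for pod_name, dns_name, pvc_name in statefulset_identities("mysql", "mysql", 3):
    print(pod_name, dns_name, pvc_name)
```

If mysql-1 is rescheduled to another node, it comes back with the same name and is reattached to the same claim, data-mysql-1.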

Example 3: Deploying a Horizontal Pod Autoscaler

Pods can be scaled horizontally to handle increased traffic or workloads. By using a Horizontal Pod Autoscaler (HPA) in Kubernetes, you can automatically scale the number of Pod replicas based on CPU utilization or other metrics. This makes it possible to ensure that applications can handle increased traffic or workloads without manual intervention.
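As an illustration, the sketch below builds an HPA manifest that targets a Deployment and scales on average CPU utilization. The Deployment name my-app, the replica bounds, and the 60% target are assumptions, not recommendations.

```python
import json


def cpu_autoscaler(deployment, min_replicas, max_replicas, target_cpu_percent):
    """Build an autoscaling/v2 HorizontalPodAutoscaler for a Deployment."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            # The workload whose replica count is adjusted.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": target_cpu_percent,
                        },
                    },
                }
            ],
        },
    }


hpa = cpu_autoscaler("my-app", min_replicas=2, max_replicas=10, target_cpu_percent=60)
print(json.dumps(hpa, indent=2))
```

Utilization is measured relative to each container's CPU request, so the target Pods need resource requests for this metric to work.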

Example 4: Deploying a Canary Release

Pods can also be used to deploy canary releases, which involve gradually rolling out new versions of an application to a subset of users. By deploying the new version of the application as a new Pod, you can gradually roll out the new version to a small subset of users and monitor the results before rolling it out to all users.
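One simple way to approximate this, sketched below, is to run the stable and canary versions as two Deployments that share an app label, so a Service selecting that label spreads traffic roughly in proportion to the replica counts. The label values, image tags, and the helper names are assumptions. Precise traffic percentages usually need an ingress controller or service mesh, which is outside this sketch.

```python
import json
import math


def split_replicas(total, canary_percent):
    """Return (stable, canary) replica counts, with at least one canary Pod."""
    canary = min(total, max(1, math.ceil(total * canary_percent / 100)))
    return total - canary, canary


def deployment(name, track, image, replicas):
    """Minimal Deployment whose Pods carry a shared app label and a track label."""
    labels = {"app": "my-app", "track": track}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": "my-container", "image": image}]},
            },
        },
    }


stable_count, canary_count = split_replicas(total=10, canary_percent=10)
stable = deployment("my-app-stable", "stable", "my-image:1.0", stable_count)
canary = deployment("my-app-canary", "canary", "my-image:2.0", canary_count)

print(stable_count, canary_count)  # 9 1
print(json.dumps(canary, indent=2))
```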

In summary, Pods are used extensively in real-world applications to deploy and manage containerized applications. By using Pods, you can co-locate related containers, ensure communication between containers, deploy stateful applications, scale applications horizontally, and deploy canary releases. These examples demonstrate the power and flexibility of Pods in Kubernetes and highlight their importance in deploying and managing containerized applications.

Best Practices for Pod Design and Deployment in Kubernetes

Pods are a powerful tool for deploying and managing containerized applications in Kubernetes. To ensure that your Pods are reliable, maintainable, and efficient, it’s important to follow best practices for Pod design and deployment. In this section, we will summarize some of the most important best practices for Pod design and deployment in Kubernetes.

Use Meaningful Names

When creating Pods, it’s important to use meaningful names that accurately reflect the purpose and function of the Pod. This makes it easier to manage and troubleshoot Pods, especially in large clusters with many Pods.

Limit Resource Requests

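When creating Pods, it’s important to set resource requests and limits for each container. Requests tell the scheduler how much CPU and memory the container needs, so the Pod is placed on a node with sufficient free resources. Limits cap what the container may actually use, which keeps one Pod from starving others on the same node. Keeping requests close to real usage also helps the cluster to be used efficiently and leaves enough resources available for other Pods.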
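The sketch below shows where requests and limits sit in a container definition, plus a tiny helper that converts CPU quantities so the two can be compared. The concrete values are placeholders, and cpu_to_cores only handles plain numbers and the millicore suffix m.

```python
import json


def cpu_to_cores(quantity):
    """Convert a CPU quantity such as '250m' or '2' to a number of cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


container = {
    "name": "my-container",
    "image": "my-image:latest",
    "resources": {
        # Used by the scheduler to pick a node.
        "requests": {"cpu": "250m", "memory": "64Mi"},
        # Upper bound enforced while the container runs.
        "limits": {"cpu": "500m", "memory": "128Mi"},
    },
}

requested = cpu_to_cores(container["resources"]["requests"]["cpu"])
limit = cpu_to_cores(container["resources"]["limits"]["cpu"])
print(requested, limit)  # 0.25 0.5
print(json.dumps(container, indent=2))
```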

Enable Liveness and Readiness Probes

Liveness and readiness probes are built-in features in Kubernetes that allow you to monitor the health and status of the containers in a Pod. When a liveness probe keeps failing, Kubernetes restarts the container. When a readiness probe fails, the Pod is removed from Service endpoints so that it receives no traffic until it is ready again.
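A minimal sketch of a container definition with both probes follows. The /healthz and /ready paths, the port, and the timing values are assumptions, and your application has to actually serve those endpoints.

```python
import json


def http_probe(path, port, initial_delay, period):
    """Build an HTTP GET probe definition."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay,  # wait before the first check
        "periodSeconds": period,               # time between checks
    }


container = {
    "name": "my-container",
    "image": "my-image:latest",
    "ports": [{"containerPort": 80}],
    # Repeated failures here cause the container to be restarted.
    "livenessProbe": http_probe("/healthz", 80, initial_delay=10, period=10),
    # Failures here take the Pod out of Service endpoints.
    "readinessProbe": http_probe("/ready", 80, initial_delay=5, period=5),
}

print(json.dumps(container, indent=2))
```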

Use Labels and Annotations

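Labels and annotations are metadata that you can attach to Pods to provide additional context and information. Labels are key-value pairs that you can use to filter and select Pods (for example, with kubectl get pods -l app=web), and Services and controllers use them to find the Pods they manage. Annotations hold non-identifying information, such as build details or tool configuration, and cannot be used in selectors.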
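To show what selecting by label means, here is a small simulation of an equality-based selector in plain Python. It does not talk to a cluster, and the Pod names and labels are invented for the example.

```python
def matches(selector, labels):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical Pods, reduced to name and labels.
pods = [
    {"name": "web-1", "labels": {"app": "web", "env": "prod"}},
    {"name": "web-2", "labels": {"app": "web", "env": "staging"}},
    {"name": "db-1", "labels": {"app": "db", "env": "prod"}},
]

selector = {"app": "web", "env": "prod"}
selected = [pod["name"] for pod in pods if matches(selector, pod["labels"])]
print(selected)  # ['web-1']
```

This mirrors the behavior of a selector such as app=web,env=prod, where a Pod must carry all listed labels to match.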

Use Namespaces

Namespaces are virtual clusters within a physical Kubernetes cluster. By using namespaces, you can divide a large cluster into smaller, more manageable units. This makes it easier to manage and secure Pods, especially in large clusters with many teams and applications.

Use Helm Charts

Helm is a package manager for Kubernetes that allows you to package and deploy applications as reusable charts. By using Helm charts, you can simplify the deployment and management of Pods, especially in complex, multi-container applications.

In summary, following best practices for Pod design and deployment in Kubernetes can help ensure that your Pods are reliable, maintainable, and efficient. By using meaningful names, limiting resource requests, enabling liveness and readiness probes, using labels and annotations, using namespaces, and using Helm charts, you can improve the reliability and maintainability of your Kubernetes clusters and simplify the management of Pods.

Conclusion: The Power of Pods in Kubernetes

Pods are a fundamental building block in Kubernetes, providing a simple and efficient way to manage containerized applications. By defining Pods in YAML or JSON files and using the kubectl command-line tool, you can easily create, deploy, and manage Pods in your Kubernetes cluster.

Pods offer many benefits, including resource sharing, co-location, and communication between containers. By using Pods, you can simplify the management of related containers and improve overall cluster efficiency. Advanced Pod concepts, such as Pod Disruption Budgets, Pod Anti-Affinity, and Pod Priorities, can further improve the reliability and performance of Kubernetes clusters.

Real-world examples of Pods in Kubernetes demonstrate the power and flexibility of this technology. Companies such as Netflix, eBay, and Robinhood have successfully adopted Kubernetes as their container orchestration platform, using Pods to deploy and manage complex, distributed applications.

To ensure the reliability and maintainability of your Kubernetes clusters, it’s important to follow best practices for Pod design and deployment. This includes using meaningful names, limiting resource requests, and enabling liveness and readiness probes. By following these best practices, you can improve the performance and stability of your Kubernetes clusters and ensure that your containerized applications are running smoothly.

In conclusion, Pods are a powerful tool for deploying and managing containerized applications in Kubernetes. By understanding the key features and benefits of Pods, learning how to create and deploy Pods, and following best practices for Pod design and deployment, you can simplify the management of containerized applications and take full advantage of the power and flexibility of Kubernetes.