The Role of Container Orchestration in Modern Deployments

Container orchestration has become an indispensable part of modern application deployment, changing how software is developed, deployed, and managed. Applications today are often composed of many microservices, each packaged as a container. Managing these containers by hand makes scaling, load balancing, and high availability hard to get right. Orchestration tools such as Kubernetes step in to automate these processes. Container orchestration provides a framework for managing the complete life cycle of containerized applications, enabling teams to achieve scalability, resilience, and efficient resource utilization. Without it, deploying and maintaining applications would be cumbersome, error-prone, and far less efficient, leading to higher operational costs and reduced agility. This is why robust container orchestration is essential for any organization that wants optimized, reliable application deployment.

Managing containers manually tends to slow down development and operations alike. Tasks such as scheduling containers across servers, monitoring their health, and balancing load are complex, time-consuming, and error-prone, especially at scale. Orchestration platforms like Kubernetes automate these tasks. They handle the deployment, scaling, and management of containers, so developers can focus on building software rather than on operational details. This automation extends to rolling updates and rollbacks, which provide a safety net for deployments. By adopting container orchestration with Kubernetes, organizations can reduce the potential for human error, which leads to greater operational stability and faster deployment cycles. This shift to automated management is a significant step toward agile, scalable software delivery.

Understanding Kubernetes: A Deep Dive into its Core Concepts

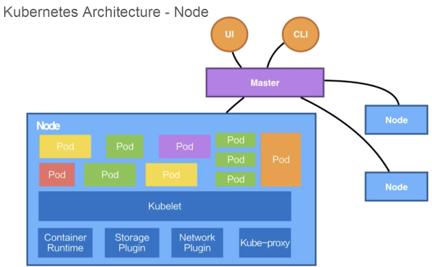

Kubernetes, a powerful system for container orchestration, is built on several core concepts that work together to manage and scale applications. At the heart of Kubernetes are Pods, the smallest deployable units, each of which encapsulates one or more containers. Containers in the same Pod share a network namespace (and therefore an IP address) and can share storage volumes. Deployments provide a mechanism for declaring the desired state of an application. A Deployment creates and manages Pods through ReplicaSets, ensuring that the specified number of replicas is running. If a Pod fails, it is automatically replaced, which improves application resilience. Services enable network access to Pods, acting as an abstraction layer that decouples clients from the lifecycle of individual Pods. Instead of referencing a Pod’s IP address directly, applications connect to a Service, which routes traffic to the available Pods. Namespaces logically partition resources within a cluster. By using Namespaces, teams can separate their workloads and avoid naming conflicts, which supports multi-tenant environments. Kubernetes also uses labels, key-value pairs attached to objects such as Pods, to group and select objects for operations; a Service, for example, finds its Pods through a label selector. This level of abstraction is what makes complex microservice deployments manageable.
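As a minimal sketch of how these objects fit together, the manifest below declares a Namespace, a Deployment with three replicas, and a Service that selects the Deployment's Pods by label. The names `demo` and `web`, the `app: web` label, the `nginx:1.27` image, and the resource figures are placeholders chosen for illustration, not values taken from any particular setup.

```yaml
# Hypothetical example: every name, label, image, and number here is illustrative.
apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  namespace: demo
  labels:
    app: web
spec:
  replicas: 3                  # desired number of Pod replicas
  selector:
    matchLabels:
      app: web                 # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27    # placeholder image
          ports:
            - containerPort: 80
          resources:
            requests:          # what the scheduler reserves for the container
              cpu: 100m
              memory: 128Mi
            limits:            # upper bound the container may consume
              cpu: 500m
              memory: 256Mi
---
apiVersion: v1
kind: Service
metadata:
  name: web
  namespace: demo
spec:
  selector:
    app: web                   # routes to any ready Pod carrying this label
  ports:
    - port: 80
      targetPort: 80
```

If this were saved under a hypothetical filename such as `web.yaml`, `kubectl apply -f web.yaml` would create or update all three objects. Because the Service selects Pods by label rather than by IP address, replaced Pods are picked up without any change to clients.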

On the operational side, the control plane manages the cluster’s overall state. The API server exposes the Kubernetes API, through which users and components interact with the cluster. The scheduler decides which node each Pod should run on, based on resource availability and constraints. The controller manager runs control loops that continuously drive the cluster toward its desired state by creating, updating, and deleting resources as needed. The kubelet, which runs on every node, ensures that the containers described in each Pod assigned to that node are running and healthy, and reports node status. ConfigMaps and Secrets hold application configuration and sensitive information respectively, keeping both out of container images. PersistentVolumes and PersistentVolumeClaims support stateful applications by providing storage that outlives the Pod lifecycle. Together, these components let Kubernetes orchestrate containers in a robust, scalable way, largely independent of the underlying infrastructure.
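The configuration and storage objects mentioned above can be sketched as follows. This is an illustrative example only: the object names, the keys `LOG_LEVEL` and `DB_PASSWORD`, the mount path, and the image are all hypothetical, and the claim assumes the cluster has a default StorageClass that can satisfy it.

```yaml
# Hypothetical example: names, keys, values, and paths are placeholders.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  LOG_LEVEL: "info"            # non-sensitive configuration
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret
type: Opaque
stringData:
  DB_PASSWORD: "change-me"     # placeholder; never commit real secrets
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi             # assumes a default StorageClass exists
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: nginx:1.27        # placeholder image
      envFrom:
        - configMapRef:
            name: app-config   # exposes LOG_LEVEL as an environment variable
        - secretRef:
            name: app-secret   # exposes DB_PASSWORD as an environment variable
      volumeMounts:
        - name: data
          mountPath: /var/lib/app
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: app-data    # data here survives Pod restarts and replacement
```

Keeping configuration in a ConfigMap and credentials in a Secret means the same image can run unchanged across environments, with only these objects differing.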

How to Streamline Deployments with Kubernetes Orchestration

This section provides a practical guide to deploying applications with Kubernetes. The process begins with creating deployment files, typically in YAML format, which describe the desired state of the application, including the number of replicas, container images, and resource requirements. For example, a simple deployment file might specify a container image for a web application, request a specific amount of CPU and memory, and indicate that three instances of the application should run. The `kubectl apply -f

After deploying and exposing the application, monitoring its health and performance is essential. Kubernetes provides built-in health checks for this purpose: liveness probes, which restart containers that have stopped responding, and readiness probes, which keep traffic away from Pods that are not ready to serve. Tools such as Prometheus and Grafana can be integrated to provide detailed metrics and visualizations of the application’s resource consumption, performance, and errors. This data is invaluable for identifying bottlenecks, optimizing resource usage, and addressing issues before they affect users. You should also use the `kubectl get deployments` command to observe the status of the application and `kubectl describe pod

Kubernetes vs Alternatives: When to Choose Kubernetes

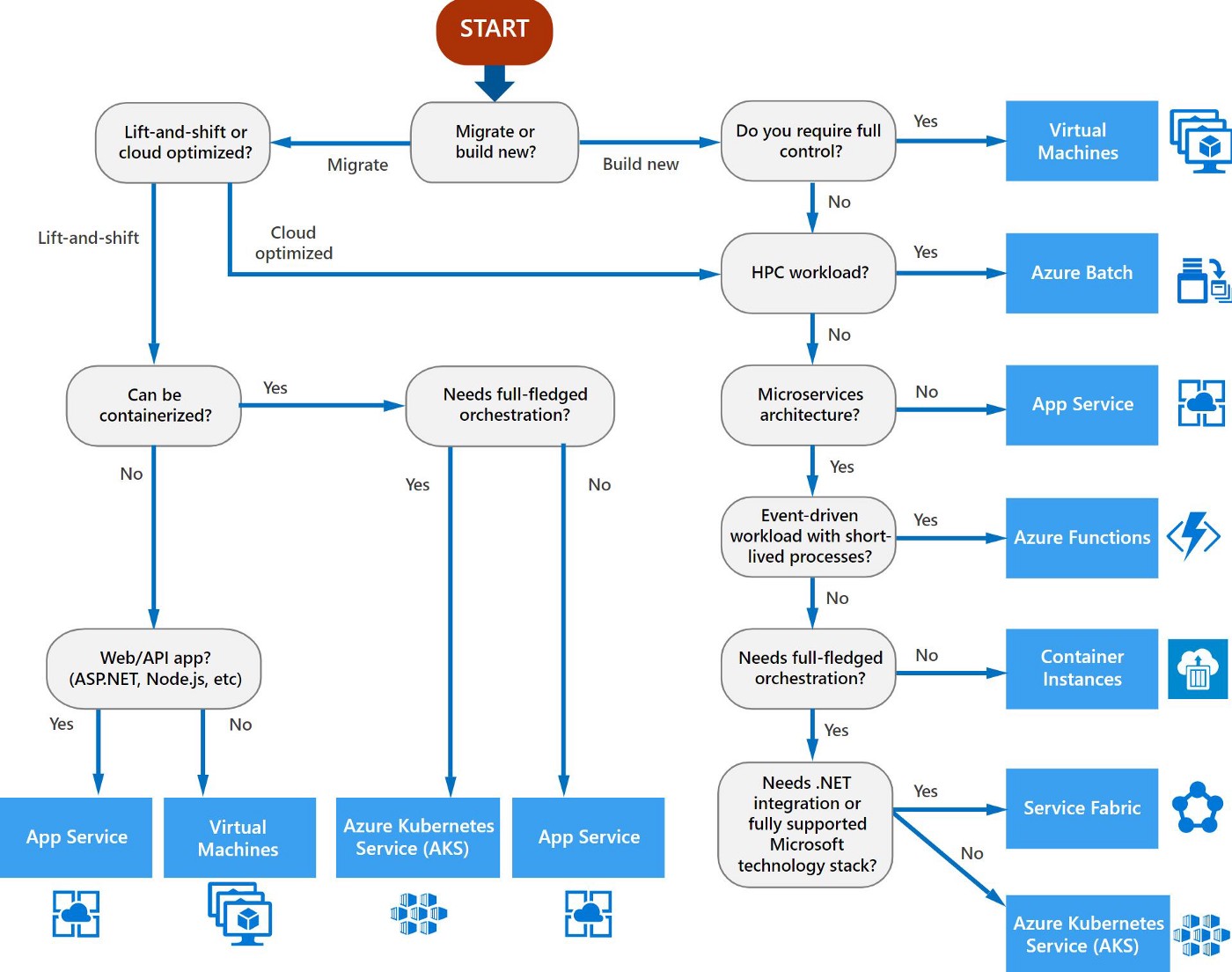

The landscape of container orchestration extends beyond Kubernetes, with tools like Docker Swarm and HashiCorp Nomad presenting viable alternatives. The choice largely hinges on the specific requirements of a project, the existing infrastructure, and team expertise. Docker Swarm is built into the Docker ecosystem and offers simplicity and ease of setup, making it an attractive option for smaller deployments or teams already heavily invested in Docker. Its learning curve is generally gentler than that of Kubernetes, which makes it a quick route to container orchestration. HashiCorp Nomad distinguishes itself with its flexibility and its ability to orchestrate both containerized and non-containerized workloads, which can suit more diverse operational landscapes. However, it may require more manual configuration and setup. Scalability is a key factor when evaluating options. Kubernetes excels at managing highly scalable applications with intricate architectures, but its complexity can be overkill for smaller projects or less experienced teams. Much of the appeal of Kubernetes comes from its ability to handle large-scale deployments.

Kubernetes’ strength lies in its advanced features, robust community support, and extensive ecosystem. It offers auto-scaling, load balancing, and sophisticated deployment strategies, making it a strong choice for projects that demand these capabilities, including complex production environments. However, the steep learning curve and initial complexity of Kubernetes can pose a challenge for smaller teams. Docker Swarm, with its ease of use, may be preferable for teams focused on simplicity and rapid deployment, especially when scaling needs are modest. Nomad’s flexibility may be preferred when diverse types of workloads need to be managed, including non-containerized applications or those that depend on legacy systems. The benefits of Kubernetes come at the price of a more complex management layer that requires real expertise to operate. The decision to use it should therefore be weighed against the trade-offs of each alternative, and an in-depth assessment of team skills, project needs, and available infrastructure is essential to choosing the right orchestration solution.

Scaling Applications Efficiently using Kubernetes

Kubernetes offers powerful mechanisms for scaling applications to meet varying demand. It supports both horizontal scaling, which adds more instances of an application, and vertical scaling, which allocates more resources to existing instances. Horizontal scaling is achieved through Deployments or ReplicaSets, which let you specify the desired number of replicas; Services distribute traffic among them. The Horizontal Pod Autoscaler (HPA) automates this: it monitors metrics such as CPU utilization or custom metrics and scales the number of Pods up or down to hold a target value. For vertical scaling, core Kubernetes does not adjust CPU or memory settings automatically (the separate Vertical Pod Autoscaler add-on exists for that purpose), but you can define resource requests and limits for each container. Requests are the amount of resources the scheduler reserves for a container and uses to choose a node with sufficient capacity, while limits cap what the container may consume. Setting both keeps Pods from consuming excessive resources. In some cases you may need to raise these values manually so that a Pod can handle more load, for example when horizontal scaling is not possible or not sufficient. Efficient management of resource utilization is a critical part of running Kubernetes well.
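A minimal sketch of the HPA described above might look like the following. It assumes a Deployment named `web` already exists (a hypothetical name), that its containers declare CPU requests, and that a metrics source such as metrics-server is installed in the cluster; the replica bounds and the 70% target are arbitrary illustrative values.

```yaml
# Hypothetical example: target name, replica bounds, and threshold are placeholders.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
spec:
  scaleTargetRef:              # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the containers' CPU *requests*
```

Because utilization is measured relative to the CPU request, the HPA cannot compute it for containers that have no request set, which is one more reason to define requests explicitly.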

Effective scaling within Kubernetes also depends on monitoring and alerting. Kubernetes integrates well with many monitoring tools, allowing real-time observation of application metrics. Based on these metrics, users can configure alerts that notify operators when applications are nearing resource limits, experiencing performance degradation, or in need of horizontal scaling. For example, if average CPU utilization stays above the configured target, the HPA adds replicas. Alerts can likewise tell administrators that resource utilization is persistently high, which may indicate that requests and limits need adjustment. This proactive approach helps applications absorb spikes in demand, preventing outages and maintaining a good user experience. To optimize resource utilization further, consider node capacity when scaling Pods, and use pod affinity and anti-affinity rules to guide how Pods are spread across nodes. This helps balance resource consumption against application availability. Proper configuration and monitoring of resource usage is paramount for the reliability and availability of applications deployed on Kubernetes, and it is what lets them adapt to changing load while using cluster resources efficiently.
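To illustrate the anti-affinity idea, the sketch below asks the scheduler to prefer placing replicas of the same application on different nodes. The `web` name, the `app: web` label, and the image are hypothetical; the rule is written as a soft preference, so Pods can still be scheduled if the cluster has fewer nodes than replicas.

```yaml
# Hypothetical example: names, labels, and image are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      affinity:
        podAntiAffinity:
          # Soft rule: prefer nodes that do not already run an app=web Pod.
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchLabels:
                    app: web
                topologyKey: kubernetes.io/hostname   # "different node"
      containers:
        - name: web
          image: nginx:1.27    # placeholder image
```

Switching to `requiredDuringSchedulingIgnoredDuringExecution` would make the rule strict, at the cost of leaving Pods unschedulable when no suitable node is free.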

Enhancing Application Reliability with Kubernetes High Availability

Kubernetes supports application reliability and high availability through several mechanisms. Replication, a core feature, involves running multiple instances of an application across different nodes. This redundancy means that if one instance fails, the others continue serving traffic, preventing service disruption. Rolling updates allow smooth application upgrades by gradually replacing old instances with new ones, without downtime. Health checks, implemented through probes, continuously monitor application instances: when a liveness probe fails, Kubernetes restarts the affected container, and when a readiness probe fails, the Pod is removed from Service traffic until it recovers. These mechanisms form a robust foundation for continuous operation. Setting up a high-availability cluster for production requires careful planning and configuration. It typically calls for multiple control plane nodes to avoid a single point of failure, and multiple worker nodes spread across availability zones for resilience against infrastructure failures. Proper configuration of network policies and of resource requests and limits also contributes to overall stability.
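The rolling-update and probe behavior described above can be sketched in a single Deployment. The names, image, probe paths, and timing values below are illustrative assumptions; a real application would normally expose dedicated health endpoints rather than `/`.

```yaml
# Hypothetical example: names, image, paths, and timings are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0        # never drop below the desired replica count
      maxSurge: 1              # add at most one extra Pod during the rollout
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27    # placeholder image
          ports:
            - containerPort: 80
          readinessProbe:      # failing: Pod is removed from Service endpoints
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:       # failing repeatedly: container is restarted
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 15
            periodSeconds: 20
            failureThreshold: 3
```

With `maxUnavailable: 0`, a new Pod must pass its readiness probe before an old one is removed, which is what keeps a rollout from reducing serving capacity.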

Monitoring and logging are essential components of a highly available Kubernetes setup. Comprehensive monitoring makes it possible to track the health and performance of both the application and the underlying infrastructure. Tools like Prometheus and Grafana can be used to gather metrics and visualize them. Logging ensures that issues are captured and can be diagnosed efficiently. Centralized logging, using tools like Elasticsearch, Fluentd, and Kibana (the EFK stack), provides aggregated, searchable logs, which makes troubleshooting easier. Alerting is equally important. Alerts for critical conditions, such as high resource consumption, Pod failures, or service unavailability, allow teams to respond to incidents early. Together, monitoring, logging, and alerting are necessary to maintain high availability and to mitigate issues quickly when they arise.
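As one possible illustration of alerting on Pod failures, the Prometheus rule file below fires when a container restarts repeatedly. It assumes kube-state-metrics is deployed, since that component exports the `kube_pod_container_status_restarts_total` metric; the group name, alert name, threshold, and time windows are hypothetical choices.

```yaml
# Hypothetical example: alert name, threshold, and windows are placeholders.
# Assumes kube-state-metrics is scraped by Prometheus.
groups:
  - name: kubernetes-pods
    rules:
      - alert: PodRestartingFrequently
        # More than 3 container restarts within the last 15 minutes.
        expr: increase(kube_pod_container_status_restarts_total[15m]) > 3
        for: 5m                # condition must hold for 5 minutes before firing
        labels:
          severity: warning
        annotations:
          summary: "Container {{ $labels.container }} in {{ $labels.namespace }}/{{ $labels.pod }} is restarting frequently"
```

The `for` clause filters out brief blips, so the alert reaches operators only when restarts persist.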

Resource management with requests and limits is also critical to achieving high availability. Kubernetes lets you configure how much CPU and memory each container requires and is allowed to use. Setting these values carefully allocates resources efficiently, avoids resource starvation, and improves stability. Network policies play a vital role in securing a highly available cluster by controlling communication between Pods, preventing unauthorized access and limiting the impact of potential security breaches. Combined with sound operational practices, these mechanisms keep applications managed by Kubernetes stable and performant. A well-orchestrated Kubernetes deployment that is designed for high availability reduces downtime and supports business continuity.
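A brief sketch of both ideas follows: a Pod with explicit requests and limits, and a NetworkPolicy that admits traffic to it only from selected Pods. The names, the `app: web` and `role: frontend` labels, the image, and the resource figures are hypothetical, and the policy only takes effect if the cluster's network plugin enforces NetworkPolicy.

```yaml
# Hypothetical example: names, labels, image, and numbers are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: web
  labels:
    app: web
spec:
  containers:
    - name: web
      image: nginx:1.27        # placeholder image
      resources:
        requests:              # reserved by the scheduler
          cpu: 250m
          memory: 256Mi
        limits:                # hard ceiling for the container
          cpu: "1"
          memory: 512Mi
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: web-allow-frontend
spec:
  podSelector:
    matchLabels:
      app: web                 # the Pods this policy protects
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend   # only these Pods may connect
      ports:
        - protocol: TCP
          port: 80
```

Once a Pod is selected by an ingress policy, any inbound traffic not explicitly allowed is dropped, so a compromised Pod elsewhere in the namespace cannot reach it.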

Implementing Best Practices for Kubernetes Orchestration

Running Kubernetes effectively demands adherence to best practices that ensure security, stability, and resource efficiency. Security hardening is paramount: limit access with Role-Based Access Control (RBAC), and use Network Policies to restrict communication between Pods and namespaces. Scan images regularly to identify vulnerabilities, and manage secrets with Kubernetes Secrets or a dedicated secrets manager. Resource management requires careful planning. Set resource requests and limits for each container to prevent resource starvation or over-consumption. Use namespaces to logically separate environments (e.g., development, staging, production), and consider resource quotas to limit usage per namespace. Naming conventions matter for clarity: use consistent labels and annotations so that Kubernetes objects are easy to filter and manage, and name Deployments, Services, and other resources in a structured way to avoid conflicts and make them easy to identify. Monitoring and alerting are fundamental for detecting issues early. Set up tools like Prometheus and Grafana to track key metrics such as CPU usage, memory usage, and latency, and implement alerts for critical issues, with specific escalation workflows. Logging is equally fundamental: ensure that Pod logs are sent to a central logging system for analysis and troubleshooting.
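Two of these practices, least-privilege RBAC and per-namespace quotas, can be sketched as follows. The `staging` namespace, the user `jane`, the role names, and the quota figures are all hypothetical values used only for illustration.

```yaml
# Hypothetical example: namespace, user, names, and quota values are placeholders.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: staging
rules:
  - apiGroups: [""]            # "" is the core API group
    resources: ["pods", "pods/log"]
    verbs: ["get", "list", "watch"]   # read-only access
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: staging
subjects:
  - kind: User
    name: jane                 # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: staging-quota
  namespace: staging
spec:
  hard:                        # totals across all Pods in the namespace
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "20"
```

Note that once a quota covers CPU or memory requests and limits, Pods in that namespace must declare those values or they will be rejected, which reinforces the practice of setting them on every container.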

Another important best practice is applying infrastructure-as-code principles. Use tools like Helm charts or Kustomize to automate deployment configurations, and store your configuration files in version control to track all changes and enable easy rollbacks. Make changes to cluster configuration through a GitOps process so that only authorized, reviewed modifications reach the cluster, and avoid manual configuration whenever possible. Regularly update Kubernetes and its components to patch security vulnerabilities and benefit from performance enhancements, and test every update in a non-production environment before applying it to production. To minimize downtime and risk when upgrading applications, use rolling updates and canary deployments. Also develop a backup strategy for the cluster configuration so that you can recover quickly from errors and catastrophic failures.
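As a small illustration of the Kustomize approach, the overlay below reuses a shared base and changes only what differs in production. The directory layout (`../../base`), the `production` namespace, the `web` Deployment name, and the image tag are assumptions made for this sketch; the file would be named `kustomization.yaml` and kept in version control alongside the base.

```yaml
# Hypothetical example: paths, names, tag, and counts are placeholders.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: production          # applied to every resource in the overlay
resources:
  - ../../base                 # shared manifests (Deployment, Service, ...)
images:
  - name: nginx
    newTag: "1.27"             # pin the image tag for this environment
replicas:
  - name: web
    count: 5                   # override the base replica count
```

An overlay like this can be applied with `kubectl apply -k` pointed at its directory, and because each environment is a small reviewed diff against the base, rollbacks reduce to reverting a commit.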

Finally, make sure your team is knowledgeable about Kubernetes best practices, and encourage training and continuous learning. Promote a DevOps culture to foster collaboration between development and operations. Create thorough documentation for your Kubernetes infrastructure so that others can work with it efficiently. Develop runbooks for maintenance tasks and common troubleshooting scenarios. Conduct regular reviews of your Kubernetes setup to find areas for improvement and performance optimization. Consistent monitoring, logging, and automation, coupled with a strong security framework, are what produce a robust Kubernetes environment.

The Future of Container Orchestration and Kubernetes

The container orchestration landscape is constantly evolving, with trends pointing toward greater automation, enhanced security, and closer integration with emerging technologies. Edge computing is a significant area of growth, where Kubernetes is extending its reach beyond traditional data centers to manage applications closer to the data source. This shift is driven by the increasing demands of IoT devices and real-time applications that require minimal latency. The move toward serverless containers is another important trend, one that lets developers focus on application logic without managing the underlying infrastructure. This abstraction is expected to simplify development workflows and improve scalability and efficiency in containerized environments. The integration of artificial intelligence (AI) and machine learning (ML) into container orchestration systems may also allow smarter resource allocation and predictive scaling, and could help automate routine tasks like troubleshooting and security monitoring.

Innovation around Kubernetes is also focused on making complex deployments simpler to manage. Platform-as-a-service (PaaS) offerings built on top of Kubernetes will further abstract the underlying infrastructure, letting developers focus on code while the platform manages orchestration. Projects such as service meshes are improving how containers communicate with each other and how traffic is managed, which leads to more robust and resilient architectures. Kubernetes will also continue to expand into hybrid cloud and multi-cloud settings. Managing the application lifecycle consistently across cloud providers and on-premises environments increases agility and reduces vendor lock-in. Another area to watch is the continued development of Kubernetes operators, which automate the management of complex stateful applications by codifying operational knowledge. This shift toward operator-based management should increase reliability and reduce the need for manual intervention.

In the near future, the Kubernetes ecosystem is likely to become more user-friendly, with improved interfaces and tools for handling growing complexity. The focus will shift further toward developer experience, allowing developers to harness container orchestration without being experts in the low-level details of Kubernetes itself. As Kubernetes matures, it is expected to become an even more integral part of standard IT infrastructure, with more robust and secure solutions for modern applications at every scale. It is a dynamic field, and new capabilities will keep emerging to meet the needs of new technologies. This continuous evolution reaffirms the importance of container orchestration and Kubernetes in modern application management.