Understanding the Crucial Role of Model Evaluation in Machine Learning

Effective ML model evaluation is central to the machine learning lifecycle. It directly affects model selection, deployment, and the overall success of a project. Rigorous evaluation checks that the chosen model captures the patterns in the underlying data and performs reliably in real-world scenarios. Poorly evaluated models lead to inaccurate predictions, wasted resources, and flawed business decisions, with consequences that range from minor inconveniences to significant financial losses and reputational damage. A thorough evaluation process uses metrics tailored to the type of model: classification, regression, and clustering metrics each provide different insights into model performance.

The importance of ml model evaluation cannot be overstated. A robust evaluation strategy minimizes risks and maximizes the return on investment in machine learning projects. It ensures that models are reliable, accurate, and aligned with business objectives. This involves selecting appropriate evaluation metrics, implementing rigorous testing procedures, and interpreting the results accurately. Furthermore, effective ml model evaluation facilitates the identification and mitigation of biases, ultimately enhancing fairness and trustworthiness. Careful consideration of these factors distinguishes successful projects from those that fall short of expectations.

Different machine learning models require different evaluation strategies. Classification models, for instance, might utilize metrics such as accuracy, precision, recall, and F1-score. Regression models often rely on metrics like mean squared error (MSE), root mean squared error (RMSE), and R-squared. Clustering models utilize metrics such as silhouette score and Davies-Bouldin index. The choice of appropriate metrics depends on the specific problem being addressed and the relative importance of different aspects of model performance. For example, in a fraud detection system, a high recall rate might be prioritized over a high precision rate, even if it means accepting a higher rate of false positives. The selection of metrics is highly contextual and necessitates a deep understanding of the problem and the business requirements. Choosing the right metrics is critical for effective ml model evaluation.

Choosing the Right Evaluation Metrics for Your ML Model

Effective ml model evaluation hinges on selecting appropriate metrics. The choice depends heavily on the type of machine learning model and the specific business problem. For classification models, common metrics include accuracy, precision, recall, the F1-score, and the AUC-ROC. Accuracy represents the overall correctness of predictions. Precision measures the proportion of correctly predicted positive instances among all predicted positive instances. Recall focuses on the proportion of correctly predicted positive instances among all actual positive instances. The F1-score balances precision and recall, providing a single metric. The AUC-ROC (Area Under the Receiver Operating Characteristic curve) summarizes the model’s ability to distinguish between classes across different thresholds. The selection of the most important metric depends on the specific application. For example, in medical diagnosis, high recall is crucial to minimize false negatives, even if it means accepting a lower precision. In spam detection, high precision might be prioritized to reduce false positives, even if it sacrifices some recall.
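As a rough illustration of the classification metrics described above, the following sketch computes each of them with scikit-learn. The labels, predictions, and scores are made-up values for a binary problem, not results from any real model.

```python
from sklearn.metrics import (
    accuracy_score,
    f1_score,
    precision_score,
    recall_score,
    roc_auc_score,
)

# Hypothetical ground truth, hard predictions, and predicted probabilities
# for a binary problem (1 = positive class).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
y_score = [0.9, 0.2, 0.4, 0.8, 0.1, 0.6, 0.7, 0.3]

accuracy = accuracy_score(y_true, y_pred)    # correct predictions / all predictions
precision = precision_score(y_true, y_pred)  # TP / (TP + FP)
recall = recall_score(y_true, y_pred)        # TP / (TP + FN)
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall
auc = roc_auc_score(y_true, y_score)         # uses scores, not hard predictions

print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f} auc={auc:.4f}")
```

Note that AUC-ROC is computed from the continuous scores, while the other four metrics depend on the threshold used to turn scores into hard predictions.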

Regression models, on the other hand, use different metrics to assess performance. Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and Mean Absolute Error (MAE) quantify the difference between predicted and actual values. MSE and RMSE penalize larger errors more heavily than MAE. R-squared measures the proportion of variance in the dependent variable explained by the model. The choice between these metrics depends on the sensitivity to outliers and the interpretability desired. RMSE, for instance, is easier to interpret than MSE because it’s in the same units as the dependent variable. R-squared provides a measure of overall model fit, but doesn’t directly reflect the magnitude of prediction errors. Careful consideration of the business context is vital. For example, in financial forecasting, small prediction errors can have substantial financial implications, making RMSE a more relevant metric than R-squared.
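The regression metrics above can be sketched in a few lines. The target and prediction values below are invented for illustration; RMSE is taken as the square root of MSE so that it is expressed in the units of the target.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Hypothetical actual and predicted values.
y_true = np.array([3.0, 5.0, 2.5, 7.0])
y_pred = np.array([2.5, 5.0, 4.0, 8.0])

mse = mean_squared_error(y_true, y_pred)   # mean of squared errors
rmse = np.sqrt(mse)                        # same units as the target
mae = mean_absolute_error(y_true, y_pred)  # mean of absolute errors
r2 = r2_score(y_true, y_pred)              # share of variance explained

print(f"MSE={mse:.3f} RMSE={rmse:.3f} MAE={mae:.3f} R2={r2:.3f}")
```

In this toy data the single error of 1.5 pulls RMSE above MAE, which is the outlier sensitivity the paragraph describes.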

Clustering models require different evaluation metrics. The Silhouette score measures how similar a data point is to its own cluster compared to other clusters. A high Silhouette score indicates well-separated clusters. The Davies-Bouldin index measures the average similarity between each cluster and its most similar cluster. A lower Davies-Bouldin index suggests better-defined clusters. The choice between these metrics depends on the desired properties of the clusters. The goal of ml model evaluation is to choose metrics that align with the specific goals of the project. Understanding the strengths and weaknesses of each metric ensures a robust and informative evaluation process. Selecting the right metrics for ml model evaluation is crucial for making informed decisions about model selection, deployment, and overall project success.
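A minimal sketch of both clustering metrics, assuming synthetic data from `make_blobs` and a k-means model with three clusters (both are illustrative choices, not something the text prescribes):

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import davies_bouldin_score, silhouette_score

# Synthetic, well-separated data; the true labels are ignored on purpose
# because clustering metrics work without ground truth.
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=42)

labels = KMeans(n_clusters=3, n_init=10, random_state=42).fit_predict(X)

sil = silhouette_score(X, labels)      # in [-1, 1]; higher is better
dbi = davies_bouldin_score(X, labels)  # >= 0; lower is better

print(f"silhouette={sil:.3f} davies_bouldin={dbi:.3f}")
```

Comparing these two scores across several values of `n_clusters` is one common way to choose the number of clusters.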

How to Implement Model Evaluation Techniques Effectively

Effective ML model evaluation is crucial for ensuring a machine learning model performs as expected in real-world applications. This section provides a practical, step-by-step guide to implementing model evaluation techniques using Python and the scikit-learn library. The process begins with data splitting: divide the dataset into training and testing sets. Holding out a test set does not prevent overfitting, but it exposes it, because an overfit model performs well on the training data and poorly on the unseen test data. Scikit-learn’s train_test_split function simplifies this task. Then, choose appropriate evaluation metrics based on the problem type (classification, regression, or clustering). For example, accuracy is a common starting point for classification problems, while mean squared error (MSE) is commonly used for regression problems. Scikit-learn provides functions to calculate these metrics directly.

Next, train your chosen model using the training data. After training, use the testing data to evaluate the model’s performance. This involves applying the trained model to the test data and comparing its predictions to the actual values. Scikit-learn’s predict and score methods are helpful here. Calculate the selected evaluation metrics to quantify model performance. For instance, if using a classification model and accuracy as the metric, the accuracy_score function computes the accuracy. A high accuracy score suggests a good model, indicating a high percentage of correct predictions. Remember that interpreting evaluation metrics always depends on the context of your project. A seemingly high accuracy might not be meaningful if the model makes serious errors on a small, crucial subset of the data.

To illustrate, consider a simple example using the Iris dataset for classification. First, load the dataset and split it into training and testing sets. Then, train a logistic regression model. Finally, use accuracy_score to evaluate the model’s performance on the test set. The code might look like this:

```python
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

# Load the Iris dataset
...

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a logistic regression model
model = LogisticRegression()
model.fit(X_train, y_train)

# Make predictions on the test set
y_pred = model.predict(X_test)

# Evaluate the model's accuracy
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy}")
```

This example showcases a basic ML model evaluation workflow. More complex models and datasets might require more sophisticated techniques, but the fundamental principles remain the same. Effective ML model evaluation is an iterative process, often requiring adjustments to the model, features, or evaluation metrics. The key is to consider the business context and choose evaluation methods that show whether the model meets your project objectives. Thorough evaluation is not a single step but a continuous process throughout your machine learning project’s lifecycle.
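The listing above leaves the loading step elided. As a sketch of one way to run the workflow end to end, the block below assumes scikit-learn’s bundled `load_iris` loader supplies `X` and `y`. The `stratify=y` and `max_iter=1000` arguments and the per-class `classification_report` are additions for illustration, not part of the original listing.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, classification_report
from sklearn.model_selection import train_test_split

# Assumption: use the copy of Iris that ships with scikit-learn.
X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows; stratify keeps class proportions equal in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# max_iter is raised so the solver has room to converge on unscaled features.
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)

print(f"Accuracy: {accuracy:.3f}")
# Per-class precision, recall, and F1 show errors that overall accuracy can hide.
print(classification_report(y_test, y_pred))
```

For a classifier, `model.score(X_test, y_test)` returns the same accuracy value as `accuracy_score` here.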

Bias Detection and Mitigation in Your ML Model Evaluation

Bias in machine learning models is a critical concern. It can significantly affect the fairness and accuracy of predictions, and a biased model can produce unfair or discriminatory outcomes. These biases often reflect existing societal inequalities present in the training data. For example, a model trained on biased data may unfairly disadvantage certain demographic groups. Effective ML model evaluation must address this issue proactively.

Identifying bias requires careful examination of the evaluation metrics. Disparities in performance across different subgroups can indicate bias. For instance, if a loan application model shows significantly lower approval rates for a specific demographic group, despite similar creditworthiness, bias is likely present. Techniques like fairness-aware metrics, such as equal opportunity or demographic parity, help quantify and assess bias directly. These metrics offer more nuanced insights compared to standard accuracy measures alone. They help determine if the model’s performance is equitable across various groups.
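To make the two fairness metrics concrete, here is a small sketch that computes them by hand with NumPy. The labels, predictions, group names, and helper functions are all hypothetical; demographic parity is taken here as the gap in positive-prediction rates between groups, and equal opportunity as the gap in true positive rates.

```python
import numpy as np

# Hypothetical outcomes (1 = approved) for two groups, "a" and "b".
y_true = np.array([1, 0, 1, 1, 0, 1, 0, 0, 1, 1])
y_pred = np.array([1, 0, 1, 1, 0, 1, 0, 0, 0, 0])
group = np.array(["a"] * 5 + ["b"] * 5)


def selection_rate(pred, mask):
    """Share of positive predictions within the masked group."""
    return pred[mask].mean()


def true_positive_rate(true, pred, mask):
    """Share of actual positives in the masked group that were predicted positive."""
    positives = mask & (true == 1)
    return pred[positives].mean()


in_a, in_b = group == "a", group == "b"

# Demographic parity difference: gap in selection rates.
dp_diff = selection_rate(y_pred, in_a) - selection_rate(y_pred, in_b)

# Equal opportunity difference: gap in true positive rates.
eo_diff = true_positive_rate(y_true, y_pred, in_a) - true_positive_rate(y_true, y_pred, in_b)

print(f"demographic parity difference: {dp_diff:.3f}")
print(f"equal opportunity difference:  {eo_diff:.3f}")
```

A value near zero on either metric indicates similar treatment of the two groups on that criterion. The two metrics can disagree, so which one matters depends on the application.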

Mitigating bias involves a multi-faceted approach. Data preprocessing techniques, such as re-weighting samples or data augmentation, can help balance the representation of different groups in the training data. Careful feature engineering is crucial; features that are correlated with protected attributes (like race or gender) should be removed or transformed to reduce their discriminatory impact. Algorithm selection also plays a role. Some algorithms are inherently more susceptible to bias than others. Furthermore, ongoing monitoring and auditing of the deployed model are vital. Regular checks can detect emerging biases and ensure fairness over time. Addressing bias is an iterative process and integral to responsible ml model evaluation.
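Re-weighting can be sketched as follows. The data and the group column are synthetic, and reusing scikit-learn’s `compute_sample_weight` with group membership in place of class labels is an illustrative shortcut, not a dedicated fairness API.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.utils.class_weight import compute_sample_weight

rng = np.random.default_rng(0)

# Synthetic features and labels; group 1 is under-represented (20 of 100 rows).
X = rng.normal(size=(100, 3))
y = (X[:, 0] > 0).astype(int)
group = np.array([0] * 80 + [1] * 20)

# "balanced" gives each row a weight of n_samples / (n_groups * group_size),
# so both groups contribute the same total weight during training.
weights = compute_sample_weight(class_weight="balanced", y=group)

model = LogisticRegression().fit(X, y, sample_weight=weights)

print("weight per majority row:", weights[0])
print("weight per minority row:", weights[-1])
```

Re-weighting changes how much each group influences the fit. Whether it actually reduces a measured disparity still has to be checked with fairness metrics on held-out data.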

Cross-Validation: Enhancing the Robustness of Your ML Model Evaluations

Cross-validation is a crucial technique in ML model evaluation. It addresses the main limitation of a single train/test split: the performance estimate depends on which rows happen to land in the test set. By dividing the data into multiple subsets and training and testing the model repeatedly, cross-validation provides a more reliable estimate of a model’s performance on unseen data. The most common types include k-fold cross-validation, stratified k-fold cross-validation, and leave-one-out cross-validation. Each method has advantages and disadvantages, so the choice depends on the specific dataset and model.

K-fold cross-validation divides the dataset into k folds of roughly equal size. The model is trained on k-1 folds and tested on the remaining fold. This process is repeated k times, with each fold serving as the test set once. Stratified k-fold cross-validation refines this process by ensuring that each fold preserves the original class distribution of the dataset. This is particularly important for imbalanced datasets, where a fold with few or no minority-class examples would distort the performance estimate. Leave-one-out cross-validation (LOOCV) is the extreme case of k-fold cross-validation where k equals the number of data points. LOOCV gives a nearly unbiased estimate of performance, but the estimate can have high variance, and fitting one model per data point is computationally expensive for large datasets.
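The three schemes can be compared side by side with scikit-learn’s splitter classes and `cross_val_score`. This is a sketch on the Iris dataset with a logistic regression model; the choices of k=5 and the random seed are arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import (
    KFold,
    LeaveOneOut,
    StratifiedKFold,
    cross_val_score,
)

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Plain k-fold: shuffle first, because the Iris rows are sorted by class.
kfold = KFold(n_splits=5, shuffle=True, random_state=42)
kfold_scores = cross_val_score(model, X, y, cv=kfold)

# Stratified k-fold: every fold keeps the original class proportions.
skfold = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
skfold_scores = cross_val_score(model, X, y, cv=skfold)

# Leave-one-out: one fit per sample, so each score is either 0.0 or 1.0.
loo_scores = cross_val_score(model, X, y, cv=LeaveOneOut())

print(f"k-fold:            {kfold_scores.mean():.3f} +/- {kfold_scores.std():.3f}")
print(f"stratified k-fold: {skfold_scores.mean():.3f} +/- {skfold_scores.std():.3f}")
print(f"leave-one-out:     {loo_scores.mean():.3f} over {len(loo_scores)} fits")
```

Reporting the spread across folds alongside the mean gives a sense of how stable the estimate is.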

Scikit-learn, a popular Python library for machine learning, provides straightforward tools for implementing various cross-validation techniques. These tools streamline the process, enabling efficient evaluation and comparison of different ml models. Proper implementation of cross-validation significantly improves the reliability of ml model evaluation, leading to better model selection and deployment. Understanding and utilizing these methods are essential for building robust and generalizable machine learning models. The choice of cross-validation method significantly impacts the accuracy and reliability of your ml model evaluation. Careful consideration of the dataset characteristics and computational resources is necessary for optimal selection.

Visualizing Model Performance: Charts and Graphs for Clear Insights

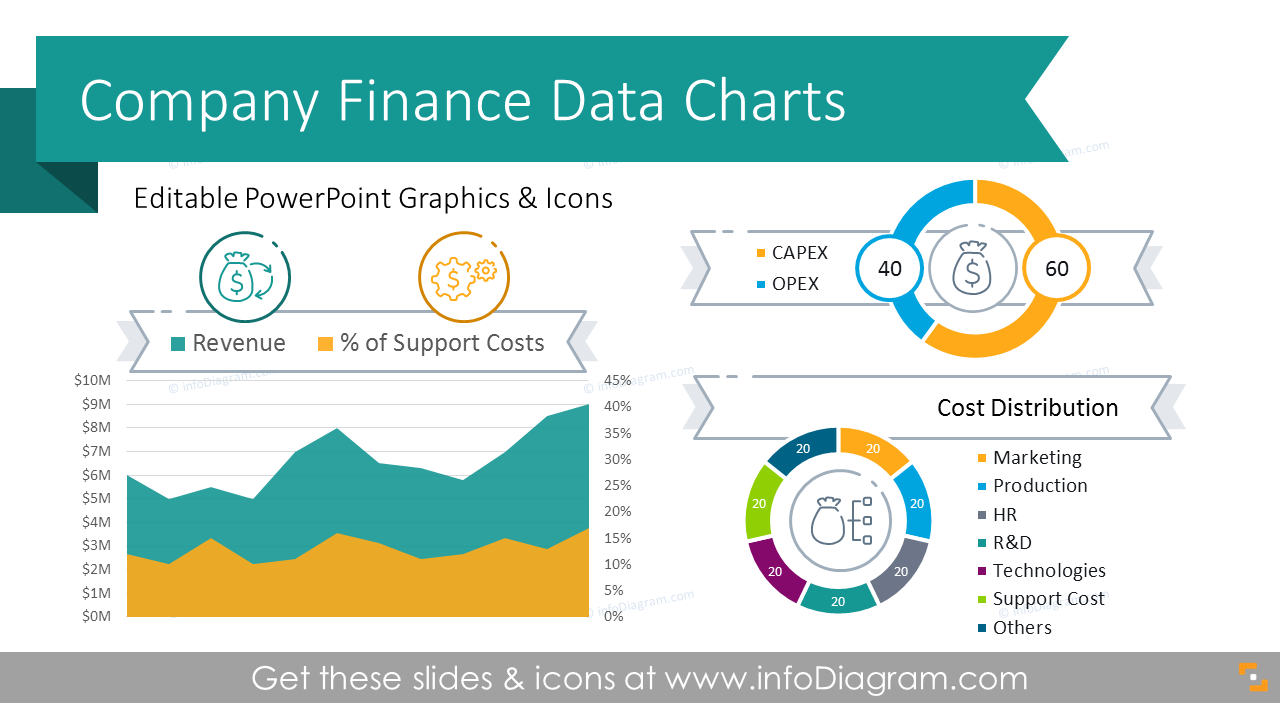

Effective ml model evaluation extends beyond numerical metrics. Visualizations offer crucial insights into model performance, revealing patterns and weaknesses that numbers alone might miss. A confusion matrix, for example, provides a clear picture of the model’s predictions, highlighting true positives, true negatives, false positives, and false negatives. This visual representation is particularly useful for classification tasks, enabling a quick assessment of the model’s accuracy across different classes. The creation of this matrix, using libraries like Matplotlib and Seaborn, enhances the understanding of ml model evaluation significantly.
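A confusion matrix can be computed before it is plotted. The sketch below uses invented labels for a three-class problem and prints the raw counts; rows are actual classes and columns are predicted classes, in the order given by `labels`.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical actual and predicted classes.
y_true = ["cat", "dog", "cat", "bird", "dog", "cat", "bird", "dog"]
y_pred = ["cat", "dog", "dog", "bird", "dog", "cat", "cat", "dog"]
labels = ["bird", "cat", "dog"]

# cm[i, j] counts samples whose actual class is labels[i]
# and whose predicted class is labels[j].
cm = confusion_matrix(y_true, y_pred, labels=labels)

print(labels)
print(cm)
```

The resulting array can then be drawn as a heatmap, for example with scikit-learn’s `ConfusionMatrixDisplay` or Seaborn’s `heatmap`, both of which require Matplotlib.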

Furthermore, the Receiver Operating Characteristic (ROC) curve and the Precision-Recall curve are powerful tools for visualizing trade-offs: the ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 minus specificity), and the Precision-Recall curve plots precision against recall. These curves are especially valuable when dealing with imbalanced datasets, where relying on accuracy alone can be misleading; the Precision-Recall curve in particular focuses on the positive class. By plotting these curves, one can see how the model performs across different thresholds and make an informed decision about the operating point. These visualizations are critical aspects of comprehensive ML model evaluation, adding context to the numerical scores.
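The points that make up both curves can be obtained from scikit-learn without plotting anything. In this sketch the labels and scores are made up, and picking the threshold that maximizes TPR minus FPR (Youden’s J statistic) is just one possible rule for choosing an operating point.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve, roc_curve

# Hypothetical labels and predicted probabilities for the positive class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_score = [0.9, 0.2, 0.4, 0.8, 0.1, 0.6, 0.7, 0.3]

# ROC curve: false positive rate vs. true positive rate per threshold.
fpr, tpr, roc_thresholds = roc_curve(y_true, y_score)

# Precision-recall curve: precision and recall have one more entry
# than pr_thresholds (the final point has no threshold).
precision, recall, pr_thresholds = precision_recall_curve(y_true, y_score)

# One illustrative operating-point rule: maximize TPR - FPR.
best = int(np.argmax(tpr - fpr))
best_threshold = roc_thresholds[best]

print(f"chosen threshold: {best_threshold}, TPR={tpr[best]:.2f}, FPR={fpr[best]:.2f}")
```

Passing `fpr`/`tpr` or `recall`/`precision` to a line plot reproduces the usual curve figures.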

Beyond these standard visualizations, more sophisticated techniques can illuminate nuanced aspects of model behavior. For instance, visualizing the decision boundaries of a model can reveal areas where it struggles to accurately classify data points. Similarly, visualizing feature importance can highlight the key factors influencing the model’s predictions, providing valuable insights for feature engineering and model improvement. The effective use of these visualization tools is crucial for a thorough and insightful ml model evaluation, moving beyond basic metrics to a deeper understanding of model strengths and weaknesses.
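Feature importance values need to be computed before they can be visualized. One way to obtain them, shown here as a sketch, is permutation importance: shuffle one feature at a time on held-out data and measure how much the score drops. The random forest model and the Iris dataset are illustrative choices.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

data = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, random_state=42, stratify=data.target
)

model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Shuffle each feature 10 times on the test set and record the accuracy drop.
result = permutation_importance(
    model, X_test, y_test, n_repeats=10, random_state=42
)

# Pair feature names with their mean importance, largest first.
ranked = sorted(
    zip(data.feature_names, result.importances_mean),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, importance in ranked:
    print(f"{name:20s} {importance:.3f}")
```

The ranked pairs map directly onto a horizontal bar chart, which is a common way to present feature importance.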

Hyperparameter Tuning and Model Selection using Evaluation Metrics

Effective ml model evaluation is crucial for hyperparameter tuning and model selection. These processes aim to optimize model performance. They rely heavily on the chosen evaluation metrics. For instance, in a classification problem, maximizing the F1-score might guide hyperparameter tuning. This ensures a balance between precision and recall. Similarly, minimizing the root mean squared error (RMSE) might be the objective during regression model optimization. The choice of metric directly impacts the final model’s performance and suitability for its intended application.

Techniques like grid search and randomized search systematically explore different hyperparameter combinations, and evaluation metrics assess the performance of each combination. Grid search exhaustively tests every combination in a predefined grid. Randomized search samples a fixed number of combinations from the hyperparameter space, which is often more efficient for high-dimensional search spaces. In both cases, the chosen evaluation metric determines which configuration is selected as the best: the selected model is the best only with respect to that metric. Careful consideration of the metric is therefore vital, so that the selected model addresses the specific needs and goals of the machine learning project.
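Both search strategies are available in scikit-learn as `GridSearchCV` and `RandomizedSearchCV`. The sketch below tunes only the regularization strength `C` of a logistic regression on Iris and uses macro-averaged F1 as the metric; the candidate values, the log-uniform range, and the number of iterations are arbitrary illustrative choices.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Grid search: every value in the grid is evaluated with 5-fold CV.
grid = GridSearchCV(
    model,
    param_grid={"C": [0.01, 0.1, 1, 10]},
    scoring="f1_macro",
    cv=5,
)
grid.fit(X, y)

# Randomized search: 8 values of C are sampled from a log-uniform range.
rand = RandomizedSearchCV(
    model,
    param_distributions={"C": loguniform(1e-3, 1e2)},
    n_iter=8,
    scoring="f1_macro",
    cv=5,
    random_state=42,
)
rand.fit(X, y)

print("grid search best:  ", grid.best_params_, f"{grid.best_score_:.3f}")
print("random search best:", rand.best_params_, f"{rand.best_score_:.3f}")
```

Changing the `scoring` argument changes which configuration wins, which is why the metric has to be chosen before the search is run.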

Beyond grid and randomized search, more advanced techniques exist for hyperparameter optimization. Bayesian optimization uses probabilistic models to guide the search. This focuses on promising areas of the hyperparameter space. Evolutionary algorithms mimic natural selection to iteratively improve model performance. The underlying principle remains consistent: ml model evaluation metrics provide the feedback mechanism. This determines which hyperparameter configurations and models are superior. This iterative process refines the model until it achieves the desired level of performance. The selection of appropriate evaluation metrics is therefore paramount for successful hyperparameter tuning and model selection. It directly impacts the quality and effectiveness of the final machine learning model. A thorough understanding of ml model evaluation is therefore essential for building high-performing models.

Advanced Model Evaluation Techniques: Beyond the Basics

Beyond the fundamental ML model evaluation methods, several advanced techniques offer refined insights into model performance. Calibration curves, for instance, visually represent the reliability of a model’s predicted probabilities. They highlight discrepancies between predicted probabilities and observed frequencies, revealing where the model is over- or under-confident in its predictions. Understanding calibration is crucial for making informed decisions based on a model’s output. For a well-calibrated model, the event occurs in roughly 80% of the cases that receive a predicted probability of 0.8. This is especially important in applications where the costs of false positives and false negatives differ significantly.
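The data behind a calibration curve can be computed with scikit-learn’s `calibration_curve`, which bins the predicted probabilities and returns the observed positive rate in each bin. The synthetic dataset, the logistic regression model, and the choice of five bins below are assumptions made for illustration.

```python
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic binary classification data.
X, y = make_classification(n_samples=2000, n_features=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
prob_pos = model.predict_proba(X_test)[:, 1]  # probability of the positive class

# For each probability bin: observed fraction of positives and mean prediction.
frac_pos, mean_pred = calibration_curve(y_test, prob_pos, n_bins=5)

for predicted, observed in zip(mean_pred, frac_pos):
    print(f"predicted {predicted:.2f} -> observed {observed:.2f}")
```

Plotting `mean_pred` against `frac_pos` gives the calibration curve; points close to the diagonal indicate well-calibrated probabilities.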

Another area of advanced ML model evaluation involves quantifying uncertainty in performance estimates. Traditional metrics provide point estimates of performance, but they don’t capture the inherent variability in the evaluation process. Confidence intervals offer a more nuanced view, indicating a range of plausible values for the true performance of the model. This acknowledges that the observed performance on a particular dataset may not perfectly represent the model’s true capability on unseen data. Calculating and interpreting confidence intervals is vital for making reliable generalizations about a model’s effectiveness. This approach helps avoid reading too much into small differences between models and makes conclusions more trustworthy.
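The text does not name a method for building the interval. One common option, used here purely as an illustration, is the percentile bootstrap: resample the test-set results with replacement many times and take the middle 95% of the resampled accuracies. The 200 test outcomes below are invented.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical per-example results on a 200-row test set:
# 1 = prediction correct, 0 = prediction wrong (85% accuracy).
correct = np.array([1] * 170 + [0] * 30)

# Resample the test set with replacement and recompute accuracy each time.
n_resamples = 2000
boot_acc = np.array([
    rng.choice(correct, size=correct.size, replace=True).mean()
    for _ in range(n_resamples)
])

# Percentile bootstrap: the middle 95% of the resampled accuracies.
lower, upper = np.percentile(boot_acc, [2.5, 97.5])

print(f"accuracy = {correct.mean():.3f}, 95% CI = [{lower:.3f}, {upper:.3f}]")
```

If two models have overlapping intervals on the same test set, the observed difference between them may not be meaningful.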

Finally, evaluating specialized models necessitates tailored techniques. Time-series models, for example, require evaluation metrics that account for temporal dependencies in the data. Metrics like mean absolute percentage error (MAPE) and root mean squared percentage error (RMSPE) are often employed. Reinforcement learning agents present unique challenges, demanding evaluation methods that assess cumulative rewards, learning curves, and robustness across different environments. The choice of metric heavily depends on the problem’s specifics, and a deep understanding of the nuances of advanced ml model evaluation is essential for rigorous assessment of these sophisticated models.
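As a small worked example of the two percentage-based metrics, the sketch below computes MAPE and RMSPE with NumPy on invented values. It assumes the actual values are never zero, since both metrics divide by them.

```python
import numpy as np

# Hypothetical actual and forecast values for four time steps.
y_true = np.array([100.0, 200.0, 400.0, 500.0])
y_pred = np.array([110.0, 180.0, 400.0, 450.0])

# Error as a fraction of the actual value (requires y_true != 0).
pct_err = (y_true - y_pred) / y_true

mape = np.mean(np.abs(pct_err)) * 100        # mean absolute percentage error
rmspe = np.sqrt(np.mean(pct_err ** 2)) * 100  # root mean squared percentage error

print(f"MAPE = {mape:.2f}%  RMSPE = {rmspe:.2f}%")
```

As with MAE and RMSE, RMSPE weights large percentage errors more heavily than MAPE does.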