Understanding the Core Principles of a Medallion Architecture

The medallion architecture, a cornerstone of modern data lakehouse design, structures data processing into distinct layers, each with a specific purpose, so that data quality and business readiness improve as data moves through them. This tiered approach categorizes data into three primary zones: Bronze, Silver, and Gold. The Bronze layer acts as the initial landing zone for raw data ingested from various sources. Data in this layer is stored in its original format, preserving all attributes without modification, which guarantees a complete record of ingested information for audit and reprocessing needs. The main goal of the Bronze layer is reliable and secure storage of data in the lakehouse without any transformations. In the Silver layer, data from the Bronze zone goes through cleaning, standardization, and basic transformations. This process involves data type conversions, null handling, and the removal of inconsistencies, all of which protect data integrity. This layer focuses on refining and consolidating data, making it suitable for analytical workloads. Finally, the Gold layer houses data that’s fully transformed and ready for business consumption. Here the data is structured and modeled for specific business use cases and applications, often involving aggregations, calculations, and the creation of business metrics. Applied on Databricks, the medallion architecture is a methodology for preserving data integrity throughout the entire processing lifecycle.

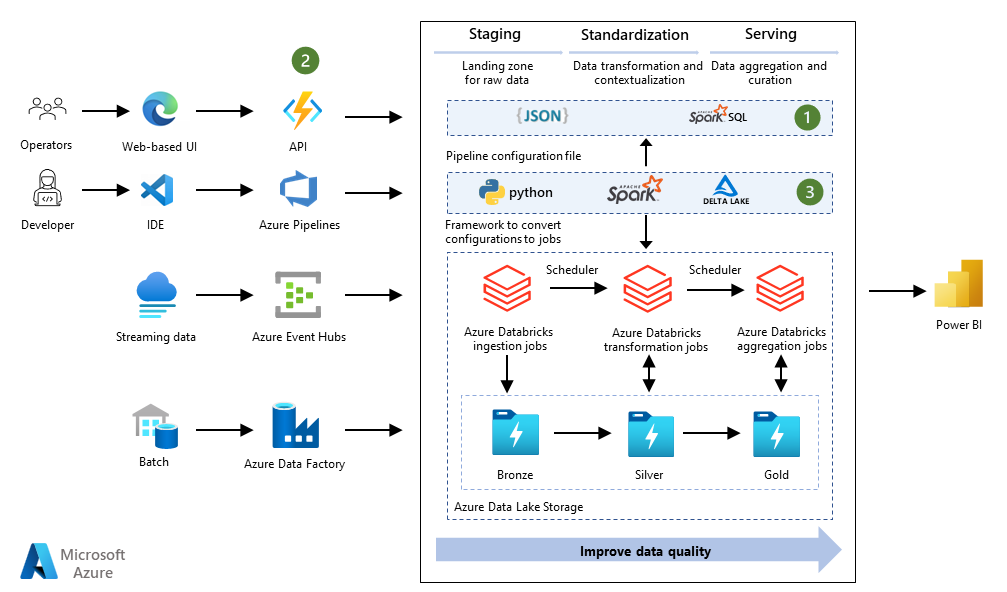

The effective implementation of a medallion architecture depends heavily on robust tools for data processing and storage. Databricks offers a unified platform that aligns well with these requirements. It provides a scalable environment with Apache Spark at its core, enabling efficient data transformations at any layer of the medallion architecture. Delta Lake, a key component of Databricks, adds crucial reliability to data storage. It provides ACID transactions, schema enforcement, and versioning capabilities, which are vital for handling data within the Bronze, Silver, and Gold layers. Databricks supports data pipelines that move data from one zone to the next with the appropriate transformations. The platform simplifies setting up ingestion from multiple data sources, running batch or streaming processing, and generating actionable insights. Furthermore, it provides collaborative tools that help data teams work effectively, improve data quality, and shorten time to value. Databricks thus gives organizations the means to build a resilient data lakehouse on the medallion pattern.

The medallion architecture on Databricks is not just about data storage; it’s about carefully managing the data life cycle and ensuring that each transformation step is aligned with business requirements. Organizing data into Bronze, Silver, and Gold zones makes data governance easier and improves efficiency by keeping data clean, processed, and easily accessible for business consumption. Databricks, through its features and capabilities, makes it easier to develop, manage, and scale data operations in a lakehouse environment. By combining the medallion design principles with Databricks, organizations can establish robust, scalable, and reliable data platforms.

How to Implement a Robust Data Pipeline with Databricks Medallion

Implementing a robust data pipeline using the medallion architecture within a Databricks environment involves a series of well-defined stages, each crucial for ensuring data quality and business readiness. The process begins with data ingestion, where data from various sources, such as databases, APIs, or streaming platforms, is brought into the Databricks environment. This raw, unprocessed data lands in the Bronze layer, typically stored as Delta Lake tables. The Bronze layer acts as an immutable record of the ingested data, preserving its original state. Next, the data moves to the Silver layer, where cleaning and standardization take place. This involves tasks like data cleansing, deduplication, data type conversions, and schema enforcement. For example, imagine raw customer data with inconsistent address formats; in the Silver layer, this would be standardized to a consistent format. This stage uses Spark’s processing capabilities within the Databricks environment. The following pseudocode exemplifies a simplified transformation from Bronze to Silver using Spark SQL, where normalize_address stands for a user-defined function that would have to be registered separately because it is not a Spark built-in: CREATE OR REPLACE TEMP VIEW bronze_table AS SELECT * FROM delta.`/mnt/bronze/customer_data`; CREATE OR REPLACE TABLE silver_table AS SELECT customer_id, upper(trim(customer_name)) AS customer_name, normalize_address(address) AS address FROM bronze_table WHERE customer_id IS NOT NULL. These statements show basic cleaning and standardization: rows without a customer_id are dropped, and names and addresses are normalized. This cleaned data, now in the Silver layer, becomes the foundation for the final transformation. The Silver layer thus provides a reliable intermediary stage between raw and business-ready data.
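The Silver-layer rules described above (drop records without a key, deduplicate, standardize names and addresses) can also be sketched over plain Python dictionaries so that the logic can be run without a cluster. This is a minimal illustration, not the Spark implementation: the function name `to_silver`, the sample records, and the whitespace-collapsing address rule are all assumptions made for the example.

```python
def to_silver(bronze_rows):
    """Clean raw Bronze records into Silver records (plain-Python sketch)."""
    seen_ids = set()
    silver_rows = []
    for row in bronze_rows:
        customer_id = row.get("customer_id")
        # Drop records without a key, like WHERE customer_id IS NOT NULL.
        if customer_id is None:
            continue
        # Deduplicate: keep only the first record seen for each customer_id.
        if customer_id in seen_ids:
            continue
        seen_ids.add(customer_id)
        silver_rows.append({
            "customer_id": customer_id,
            # Same idea as upper(trim(customer_name)); missing names become "".
            "customer_name": (row.get("customer_name") or "").strip().upper(),
            # Hypothetical address rule: collapse repeated whitespace.
            "address": " ".join((row.get("address") or "").split()),
        })
    return silver_rows


# Hypothetical sample data: one duplicate and one record without a key.
bronze = [
    {"customer_id": 1, "customer_name": "  ada lovelace ", "address": "12  High   St"},
    {"customer_id": 1, "customer_name": "Ada Lovelace", "address": "12 High St"},
    {"customer_id": None, "customer_name": "unknown", "address": ""},
]
silver = to_silver(bronze)
print(silver)
```

On Databricks the same rules would normally be expressed in Spark SQL or over DataFrames; the value of a small pure function like this is that the cleaning rules can be unit tested in isolation.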

Finally, data is transformed into business-ready information in the Gold layer. This involves aggregations, data modeling, and creation of business metrics, aligning with specific reporting or analytical needs. Examples include a summarized view of customer purchase history grouped by region or a monthly sales report. This final stage often relies on SQL-based transformations or Spark operations optimized for analytical workloads within the Databricks environment. A pseudocode example using PySpark to process Silver data to Gold, assuming the Silver data carries region and total_purchase columns, could look like: from pyspark.sql import functions as F; silver_df = spark.read.format("delta").load("/mnt/silver/customer_data"); gold_df = silver_df.groupBy("region").agg(F.sum("total_purchase").alias("total_sales")); gold_df.write.format("delta").mode("overwrite").save("/mnt/gold/sales_summary"). The aggregate is written as F.sum because a bare sum would resolve to Python’s built-in function unless it is imported from pyspark.sql.functions. This code demonstrates how to group and aggregate to create a summarized dataset for the Gold layer. The medallion architecture thus provides a clear flow of data from raw ingestion to business-ready insights. Throughout this pipeline, it is critical to validate data and handle errors, typically by using Databricks features to monitor data quality at each step. Each layer, Bronze, Silver, and Gold, plays a distinct role in keeping the data lakehouse reliable and well structured.

Leveraging Databricks Features for Enhanced Medallion Implementation

Databricks provides a suite of features that strengthen the implementation and effectiveness of the medallion architecture. At the core of this is Delta Lake, which offers reliable data storage and management. Delta Lake brings ACID (Atomicity, Consistency, Isolation, Durability) properties to data lakes, ensuring data integrity as data moves through the Bronze, Silver, and Gold layers. This means that data transformations are more dependable, and data quality is easier to maintain across the different stages of data processing. For instance, Delta Lake supports schema evolution, allowing controlled changes in data structure without causing failures downstream. Moreover, its time travel capabilities make it possible to query or restore earlier versions of a table, which is invaluable for auditing and recovering from errors. The integration of Delta Lake with Apache Spark within Databricks is a significant advantage. Spark’s processing engine, coupled with Delta Lake’s storage layer, enables efficient and scalable data transformations, speeding up the movement of data through the medallion layers. Complex ETL jobs can therefore be executed quickly and reliably, making it feasible to process large data sets.

Furthermore, the Unity Catalog feature in Databricks is pivotal for data governance within a medallion implementation. Unity Catalog provides a centralized data catalog that simplifies the discovery, management, and auditing of data. It allows organizations to manage data access controls and helps them keep data compliant with regulatory requirements. This improves collaboration across different teams, protects data privacy, and supports data democratization. The catalog’s fine-grained access controls ensure that users only have access to the data they need, which is essential for maintaining data security and preventing accidental or malicious misuse. With Unity Catalog, data assets within the medallion architecture are easier to find, understand, and trust. Additionally, Databricks SQL provides an easy-to-use interface for querying and analyzing data in the Gold layer, allowing business users to quickly gain insights. By using these features, organizations can reduce the complexity of managing their data, improve data quality, and shorten the time to value. Together, Delta Lake, Spark, and Unity Catalog create a robust and reliable environment for deploying the medallion architecture.

Optimizing Data Quality within the Medallion Architecture on Databricks

Ensuring data quality at each layer of the medallion architecture on Databricks is paramount for reliable analytics and informed decision-making. This involves implementing robust validation checks, schema enforcement, and effective error handling mechanisms. Data validation should occur at every stage, from the initial Bronze layer where raw data is ingested, to the Silver layer where data is cleaned and standardized, and finally to the Gold layer, which contains business-ready data. For instance, the Bronze layer should check whether the incoming data matches expected data types and formats; the Silver layer might incorporate checks for completeness, remove duplicate records, or standardize naming conventions. The Gold layer needs to validate consistency with business rules and requirements. Within Databricks, data quality monitoring can use Delta Lake’s schema enforcement to prevent inconsistent data from corrupting tables, and dedicated data quality tools are available for more complex analysis. Alerting is also crucial: data engineers and analysts should be notified promptly of any detected anomalies so that they can take timely corrective action.
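The layer-specific checks described above can be sketched as small validation functions. The example below is a plain-Python illustration under stated assumptions: the schema in `EXPECTED_TYPES`, the field names, and the non-negative-revenue business rule are hypothetical, and a real pipeline would usually run equivalent checks over Spark DataFrames.

```python
# Hypothetical expected schema for incoming Bronze records.
EXPECTED_TYPES = {"order_id": int, "amount": float, "order_date": str}


def bronze_problems(record, expected_types=EXPECTED_TYPES):
    """Bronze check: report missing fields and unexpected value types."""
    problems = []
    for field, expected in expected_types.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"wrong type for {field}: {type(record[field]).__name__}")
    return problems


def gold_problems(summary_row):
    """Gold check: a hypothetical business rule that revenue is never negative."""
    if summary_row["total_sales"] < 0:
        return [f"negative total_sales for region {summary_row['region']}"]
    return []


print(bronze_problems({"order_id": "1", "amount": 9.5}))
print(gold_problems({"region": "north", "total_sales": -10.0}))
```

Returning a list of problems rather than raising on the first one lets the caller decide whether to reject the record, quarantine it, or only log a warning.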

Schema enforcement plays a critical role in maintaining data integrity within the medallion architecture. Delta Lake’s ability to enforce a table’s schema on write, and to evolve that schema in a controlled way, simplifies data governance and reduces the risk of breaking downstream processes. By specifying schemas at each layer, the system ensures that only data conforming to the expected structure is processed. Any data that fails schema validation can be either rejected or quarantined for further investigation, depending on the chosen strategy. Furthermore, error handling should be designed to deal gracefully with unexpected data issues. This involves implementing clear strategies for logging errors, rerouting or correcting invalid data, and notifying responsible teams. Proper error handling prevents the propagation of bad data through the pipeline, so that only validated and correct data reaches downstream consumers. These processes are a key part of what keeps a medallion pipeline reliable. Databricks provides native capabilities to set up data quality tests, run them as part of pipelines, and monitor the results.
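The quarantine strategy mentioned above can be illustrated with a short sketch that splits a batch into valid records and quarantined records, each tagged with a reason. It is a minimal plain-Python example; the function name, the required fields, and the sample batch are assumptions for illustration only.

```python
def split_valid_and_quarantined(records, required_fields):
    """Separate records that satisfy the required fields from those that do not."""
    valid, quarantined = [], []
    for record in records:
        # A field counts as missing when it is absent or explicitly None.
        missing = [field for field in required_fields if record.get(field) is None]
        if missing:
            quarantined.append({"record": record, "reason": f"missing: {', '.join(missing)}"})
        else:
            valid.append(record)
    return valid, quarantined


# Hypothetical batch: the second record lacks an email address.
batch = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
]
valid, quarantined = split_valid_and_quarantined(batch, ["customer_id", "email"])
print(len(valid), len(quarantined))
```

Keeping the original record next to the reason makes it possible to correct and replay quarantined data later instead of discarding it.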

A comprehensive data quality strategy in a medallion implementation also involves consistent monitoring. This can include setting up alerts based on data quality metrics such as completeness, uniqueness, and validity, and using Databricks’ monitoring tools to keep track of these metrics. The medallion architecture promotes a systematic approach to data quality, where issues are caught and addressed at the earliest possible stage in the pipeline. This greatly reduces the impact of data errors on business-critical operations and supports consistently high-quality reporting. Data quality monitoring, validation, and alerting are all part of the lifecycle of data operations, and Databricks offers tools for each of them. A successful setup requires that teams keep an ongoing focus on data quality at each stage of data processing.
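To make the metrics above concrete, the following sketch computes completeness and uniqueness for a batch and compares them against minimum thresholds. The metric definitions used here (share of rows with all required fields present, and share of distinct key values) are one reasonable choice rather than a standard, and the thresholds and sample rows are hypothetical.

```python
def quality_metrics(rows, key, required):
    """Compute simple completeness and uniqueness ratios for a batch of rows."""
    total = len(rows)
    if total == 0:
        return {"completeness": 0.0, "uniqueness": 0.0}
    # Completeness: share of rows in which every required field is present.
    complete = sum(1 for row in rows if all(row.get(f) is not None for f in required))
    # Uniqueness: share of distinct key values among all rows.
    distinct_keys = len({row.get(key) for row in rows})
    return {"completeness": complete / total, "uniqueness": distinct_keys / total}


def breached(metrics, thresholds):
    """Return the names of metrics that fall below their minimum threshold."""
    return sorted(name for name, minimum in thresholds.items() if metrics[name] < minimum)


# Hypothetical batch: one missing email and one duplicated id.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": "c@example.com"},
    {"id": 3, "email": "c@example.com"},
]
metrics = quality_metrics(rows, key="id", required=["id", "email"])
print(metrics, breached(metrics, {"completeness": 0.9, "uniqueness": 0.7}))
```

The list returned by `breached` is what an alerting step would act on, for example by notifying the owning team when it is not empty.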

The Benefits of Using Databricks Medallion for Data Management

The adoption of a medallion architecture within Databricks offers several advantages for organizations seeking to improve their data management practices. Primarily, it enhances data reliability through its tiered approach, where data moves progressively from raw ingestion to refined, business-ready formats. The Bronze layer retains the original data, providing a reliable source for future analysis and audits. As data moves to the Silver layer, transformations, cleansing, and standardization occur, which significantly reduces the data quality issues often associated with raw data. Finally, the Gold layer delivers curated, high-quality data suited to specific business use cases, supporting reliable reporting and advanced analytics. This clear separation of processing stages means that each tier can be held to its own quality benchmarks, which improves the trustworthiness of data-driven insights and minimizes the risk of errors cascading through the system. Moreover, a medallion implementation makes data governance much easier. The clear delineation of responsibilities across the layers makes it easier to implement data access controls and enforce data policies. This is especially beneficial for larger teams working with complex data ecosystems, because it becomes easier to track data lineage and understand how data changes over time.

Another critical benefit of the medallion architecture on Databricks is the acceleration of business intelligence. By providing ready-to-use datasets in the Gold layer, organizations greatly reduce the time required to perform analytical tasks. This enables faster decision-making and supports rapid iteration, which is crucial in competitive markets. The layered approach streamlines data preparation, freeing up resources to focus on deriving actionable insights rather than on data wrangling. Furthermore, this approach promotes scalability of data operations. Databricks, equipped with tools such as Delta Lake and Spark, makes it easier to handle increasing data volumes and growing analytical demands, so that optimized pipelines can keep pace as data grows. This capacity to scale efficiently helps organizations continue to extract value from their data assets without running into bottlenecks in processing or management. The combined benefits of improved data reliability, streamlined data governance, faster business intelligence, and enhanced scalability make a strong case for adopting a medallion architecture within Databricks. Ultimately, this architecture not only improves the daily operations of data teams but also helps organizations get more value from their data.

Real World Examples of Data Transformation using Medallion on Databricks

The medallion architecture, when implemented on Databricks, is applicable across various industries and data types. Consider a large e-commerce platform that uses a medallion approach to manage its massive transaction data. Raw transaction records, including customer details, products purchased, and timestamps, land in the Bronze layer, providing a historical archive of all activities. This initial layer ensures that no data is lost and that everything remains available for auditing and recovery. The Silver layer then cleans and standardizes this data: inconsistencies in addresses are resolved, product codes are validated, and missing fields are handled through imputation strategies. Finally, the Gold layer transforms the Silver data into business-ready information. This includes calculating metrics like average order value, customer lifetime value, and popular product categories. The company uses this data in dashboards that support informed business decisions and targeted marketing campaigns.
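As an illustration of a Gold-layer metric from the e-commerce scenario, the sketch below computes average order value, taken here as total revenue divided by the number of distinct orders. The record layout (`order_id`, `line_total`) and the sample values are assumptions for the example, not details from the scenario.

```python
from collections import defaultdict


def average_order_value(order_lines):
    """Average order value: total revenue divided by the number of distinct orders."""
    totals = defaultdict(float)
    for line in order_lines:
        # Sum the line totals belonging to the same order.
        totals[line["order_id"]] += line["line_total"]
    return sum(totals.values()) / len(totals) if totals else 0.0


# Hypothetical Silver rows: order A has two lines, order B has one.
lines = [
    {"order_id": "A", "line_total": 20.0},
    {"order_id": "A", "line_total": 10.0},
    {"order_id": "B", "line_total": 50.0},
]
aov = average_order_value(lines)
print(aov)
```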

Another use case involves a healthcare organization that applies the medallion architecture on Databricks to patient health records. In the Bronze layer, unstructured data such as scanned medical forms, doctor’s notes, and lab results is ingested. This data, often coming from various sources with diverse formats, is stored as is, creating an immutable audit trail. In the Silver layer, natural language processing (NLP) techniques are applied to extract relevant information from the unstructured data. Key findings, including diagnoses, medications, and test results, are structured and standardized using a controlled vocabulary. Furthermore, the data is validated to ensure accuracy and consistency. The Gold layer then transforms this into patient-centric views, which allow doctors, researchers, and administrators to quickly access consolidated patient information and reports, leading to better patient care and more efficient healthcare management. With this approach, the organization improved data quality and reduced the complexity of data access. The use case illustrates how the medallion architecture on Databricks can manage highly sensitive and diverse data sets.

Furthermore, a financial institution uses the medallion architecture on Databricks to manage complex financial transactions from multiple sources. Raw transaction data, along with associated metadata such as timestamps and user IDs, is ingested into the Bronze layer of the data lakehouse. In the Silver layer, this raw data is cleansed, standardized, and transformed. This involves currency conversion, data validation, and reconciliation to ensure consistency across the various data feeds. Finally, in the Gold layer, the Silver data is aggregated and transformed for reporting. The data is structured to facilitate financial analysis, compliance reporting, and fraud detection. This demonstrates how a medallion architecture on Databricks provides a structured approach to managing data from diverse financial systems, increasing trust and transparency in financial reporting. Together, these examples show that the architecture applies to a range of industries and data scenarios.
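The Silver-layer currency conversion and reconciliation steps in the financial scenario might look like the following sketch. It uses `Decimal` to avoid binary floating-point rounding on monetary amounts; the exchange rates, the two-decimal rounding, and the one-cent tolerance are illustrative assumptions, not values from any real feed.

```python
from decimal import Decimal

# Hypothetical, illustrative exchange rates into USD.
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10")}


def to_usd(amount, currency, rates=RATES_TO_USD):
    """Convert an amount (given as a string or Decimal) to USD, rounded to cents."""
    if currency not in rates:
        raise ValueError(f"no rate for {currency}")
    return (Decimal(amount) * rates[currency]).quantize(Decimal("0.01"))


def reconciles(feed_a_total, feed_b_total, tolerance=Decimal("0.01")):
    """Two feeds reconcile when their totals differ by at most the tolerance."""
    return abs(feed_a_total - feed_b_total) <= tolerance


converted = to_usd("100.00", "EUR")
print(converted, reconciles(converted, Decimal("110.00")))
```

Raising on an unknown currency, rather than silently passing the amount through, keeps unconverted values from reaching the Gold layer.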

Troubleshooting Common Issues with Medallion Architecture in Databricks

Implementing a medallion architecture within Databricks, while powerful, is not without its potential pitfalls. Performance bottlenecks are a frequent concern, often arising from inefficient Spark configurations or suboptimal data partitioning strategies. For example, if data is not properly partitioned before it is written to Delta Lake in the Bronze layer, subsequent read operations, especially in the Silver and Gold layers, can suffer from slow performance. Resolving these bottlenecks requires careful analysis of Spark query plans and potentially adjusting the number of partitions, the storage layout, or even the cluster configuration. Another common issue revolves around data quality. Even with the tiered approach of the medallion architecture, errors can propagate through the layers. Data validation failures or schema mismatches during transformation steps in the Silver layer can cause significant problems downstream in the Gold layer, where business analytics happens. Rigorous schema enforcement, Delta Lake constraints, and data quality validation steps within your data pipelines are crucial to mitigate such problems. Careful logging and monitoring of data quality metrics are also essential to catch issues proactively. Furthermore, inefficient workflows are common, particularly if pipelines lack proper dependency management and error handling. This can lead to pipelines failing intermittently or producing incorrect results, which in turn damages the overall reliability of the implementation. Proper use of workflow management tools like Databricks Workflows, combined with robust error handling using try-except blocks and alerts, is essential for building resilient pipelines.
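The combination of try-except blocks and alerts mentioned above can be sketched as a small retry wrapper. This is a generic Python pattern, not a Databricks API: the function name `run_with_retries`, the `alert` callback, and the flaky example step are all hypothetical.

```python
import logging
import time

logger = logging.getLogger("pipeline")


def run_with_retries(step, max_attempts=3, delay_seconds=0.0, alert=None):
    """Run a pipeline step, retrying on failure and alerting once retries run out."""
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, max_attempts, exc)
            if attempt == max_attempts:
                # Final failure: notify (if a callback was given) and re-raise.
                if alert is not None:
                    alert(f"step failed after {max_attempts} attempts: {exc}")
                raise
            time.sleep(delay_seconds)


# Hypothetical step that fails twice with a transient error, then succeeds.
calls = {"count": 0}


def flaky_step():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("transient failure")
    return "ok"


result = run_with_retries(flaky_step)
print(result, calls["count"])
```

Re-raising after the last attempt matters: it lets the surrounding orchestrator mark the task as failed instead of silently continuing with missing data.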

Debugging issues within a medallion environment on Databricks often requires a multi-faceted approach. When faced with performance issues, start by examining the Spark UI for detailed information about running jobs. Tasks that take an unusually long time to execute, or stages where data is shuffled excessively, can pinpoint the source of the bottleneck. Analyzing the cluster metrics within the Databricks environment can also offer insights into resource utilization and whether the existing configuration is adequate for the workloads. For data quality issues, thorough data profiling and validation checks within the Bronze and Silver layers are essential to ensure data is clean and standardized before it is used for further transformations. Use Delta Lake features to manage schema changes and keep schemas consistent across your medallion layers. To address workflow-related challenges, implement logging that traces the flow of data and tracks the status of each pipeline execution. Capturing error messages, data processing details, and timestamps greatly simplifies debugging. Keeping notebooks and pipelines under version control makes it easier to trace when an error was introduced. In addition, consider incorporating automated alerting based on predefined thresholds for data quality metrics, pipeline duration, or failure rates. These proactive measures help address issues before they escalate and affect downstream analysis. The medallion architecture is designed for scalability, but it requires diligent maintenance to avoid such issues.
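The logging practice described above (capturing status, row counts, timestamps, and error messages per step) can be sketched as a wrapper that emits one structured JSON entry per execution. The field names and the `emit` callback are assumptions chosen for illustration; in practice the entries would go to whatever log sink the team uses.

```python
import json
import time
from datetime import datetime, timezone


def run_logged(step_name, step, rows_in, emit=print):
    """Run one pipeline step and emit a structured log entry describing the run."""
    started = time.perf_counter()
    entry = {
        "step": step_name,
        "started_at": datetime.now(timezone.utc).isoformat(),
        "rows_in": len(rows_in),
    }
    try:
        rows_out = step(rows_in)
        entry.update(status="succeeded", rows_out=len(rows_out))
        return rows_out
    except Exception as exc:
        entry.update(status="failed", error=str(exc))
        raise
    finally:
        # Always record the duration and emit the entry, even on failure.
        entry["duration_seconds"] = round(time.perf_counter() - started, 3)
        emit(json.dumps(entry))


# Hypothetical step: drop empty values on the way from Bronze to Silver.
emitted = []
cleaned = run_logged("bronze_to_silver", lambda rows: [r for r in rows if r is not None],
                     [1, None, 2], emit=emitted.append)
print(emitted[0])
```

A sudden change in the ratio of `rows_out` to `rows_in` between runs is often the first visible sign of an upstream data problem.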

Scaling Your Databricks Medallion Data Lakehouse Architecture

Scaling a medallion architecture on Databricks effectively requires careful planning and execution, especially as data volumes and processing demands increase. To maintain performance, consider a multi-faceted approach that encompasses resource management, performance optimization, and automation. Start by rigorously monitoring resource utilization within your Databricks environment, identifying potential bottlenecks in compute and storage. Use Databricks’ autoscaling capabilities to dynamically adjust cluster sizes based on workload demands, preventing over-provisioning and unnecessary costs. For data storage, explore partitioning strategies within Delta Lake to improve query performance, focusing on the columns most often used in filters and using time-based partitions for time-series data. Employ data skipping to reduce the amount of data scanned during queries, and use caching where applicable, especially for hot data sets that are read frequently. This approach is crucial for managing the Bronze, Silver, and Gold layers so that each layer scales appropriately.
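The idea behind data skipping can be shown with a conceptual sketch: if each data file carries minimum and maximum values for a column, a query with a range filter only needs to read files whose range overlaps the filter. The code below illustrates that principle only; it is not Delta Lake’s implementation, and the file names and statistics are hypothetical.

```python
def files_to_scan(file_stats, lower, upper):
    """Return the files whose [min, max] range overlaps the query range [lower, upper]."""
    return [
        stats["path"]
        for stats in file_stats
        if stats["max"] >= lower and stats["min"] <= upper
    ]


# Hypothetical per-file statistics for an event_date column (ISO dates sort as strings).
stats = [
    {"path": "part-000", "min": "2024-01-01", "max": "2024-01-31"},
    {"path": "part-001", "min": "2024-02-01", "max": "2024-02-29"},
    {"path": "part-002", "min": "2024-03-01", "max": "2024-03-31"},
]
selected = files_to_scan(stats, "2024-02-10", "2024-03-05")
print(selected)
```

The sketch also shows why layout matters: skipping is only effective when rows with similar values are stored together, so that each file covers a narrow range.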

Implementing an automated end-to-end pipeline with Databricks Workflows is critical for scaling. It promotes consistent workflows, reduces manual intervention, and improves the reliability of data transformations across all medallion layers. Automating data quality checks, schema evolution, and dependency management is vital for maintaining high data quality as your system scales. Use Databricks’ monitoring tools and alerts to proactively identify potential issues and optimize pipeline execution. This involves regularly profiling data to detect schema drift, applying appropriate schema enforcement, and ensuring that error handling mechanisms are in place at each stage. Also, consider adopting a metadata-driven approach using Databricks Unity Catalog to manage data assets across different layers, improving discoverability and governance. It is also important to implement robust data lineage tracking, which helps identify downstream dependencies and simplifies debugging during scaling activities.
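Dependency management and lineage tracking both rest on the same structure: a graph of which task depends on which. The sketch below uses Python’s standard `graphlib` module to derive a valid execution order and a small traversal to find everything downstream of a task. The task names are hypothetical, and an orchestrator such as Databricks Workflows would manage this graph for you; the code only illustrates the underlying idea.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "bronze_orders": set(),
    "bronze_customers": set(),
    "silver_orders": {"bronze_orders"},
    "silver_customers": {"bronze_customers"},
    "gold_sales": {"silver_orders", "silver_customers"},
}


def execution_order(dependencies):
    """Return one valid order in which every task runs after its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


def downstream_of(task, dependencies):
    """Return every task that directly or indirectly depends on the given task."""
    affected = set()
    frontier = [task]
    while frontier:
        current = frontier.pop()
        for name, upstream in dependencies.items():
            if current in upstream and name not in affected:
                affected.add(name)
                frontier.append(name)
    return affected


order = execution_order(DEPENDENCIES)
print(order)
print(downstream_of("bronze_orders", DEPENDENCIES))
```

`downstream_of` answers the question that matters during an incident: if a Bronze table is wrong, which Silver and Gold tables need to be rebuilt.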

Furthermore, adopting a modular approach to your data transformations enhances scalability and maintainability. Break down complex transformation logic into smaller, reusable components, which not only makes it easier to scale specific parts of your pipeline but also improves code maintainability and reuse across different projects. Employ robust testing strategies, including unit and integration testing, to validate transformation logic before deploying to production. Focus on incremental processing whenever possible to avoid reprocessing large volumes of data from scratch. Use Databricks’ libraries and APIs to implement efficient data loading and processing techniques. These strategies collectively contribute to a robust and scalable medallion architecture that can grow with data volumes while maintaining high data quality and performance. By paying close attention to resource management, automation, and modularity, you can ensure the longevity and effectiveness of your medallion implementation on Databricks.
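Two of the ideas above, small reusable transformation steps and incremental processing, can be sketched together in plain Python. The watermark approach shown here (process only rows newer than the last processed timestamp) is one common way to implement incremental loads; the function names, the `updated_at` column, and the sample rows are assumptions for illustration.

```python
from functools import reduce


def compose(*steps):
    """Chain small transformation steps into a single reusable pipeline function."""
    return lambda rows: reduce(lambda acc, step: step(acc), steps, rows)


def drop_missing_ids(rows):
    return [row for row in rows if row.get("id") is not None]


def trim_names(rows):
    return [{**row, "name": row["name"].strip()} for row in rows]


def incremental_batch(rows, last_watermark):
    """Return the rows newer than the watermark, plus the updated watermark."""
    new_rows = [row for row in rows if row["updated_at"] > last_watermark]
    new_watermark = max((row["updated_at"] for row in new_rows), default=last_watermark)
    return new_rows, new_watermark


# Hypothetical source rows with ISO timestamps, which sort correctly as strings.
source = [
    {"id": 1, "name": " Ada ", "updated_at": "2024-05-01T10:00:00"},
    {"id": None, "name": "ghost", "updated_at": "2024-05-02T09:30:00"},
    {"id": 3, "name": "Grace ", "updated_at": "2024-05-03T08:00:00"},
]
to_silver = compose(drop_missing_ids, trim_names)
batch, watermark = incremental_batch(source, "2024-05-01T12:00:00")
print(to_silver(batch), watermark)
```

Because each step is a pure function over rows, it can be unit tested on a handful of records, which is the testing strategy the paragraph above recommends.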