Understanding Container Orchestration: A Crucial Component in Modern IT Infrastructure

Container orchestration plays a vital role in managing containers at scale within modern IT infrastructures. As containerization platforms such as Docker and orchestration systems such as Kubernetes see wider adoption, understanding what container orchestration is and why it matters is becoming increasingly important. Orchestration tools such as Kubernetes (and Docker’s built-in Swarm mode) automate the deployment, scaling, and management of containerized applications, helping to ensure high availability, efficient resource utilization, and smoother maintenance.

Docker: A Brief Overview of the Popular Containerization Platform

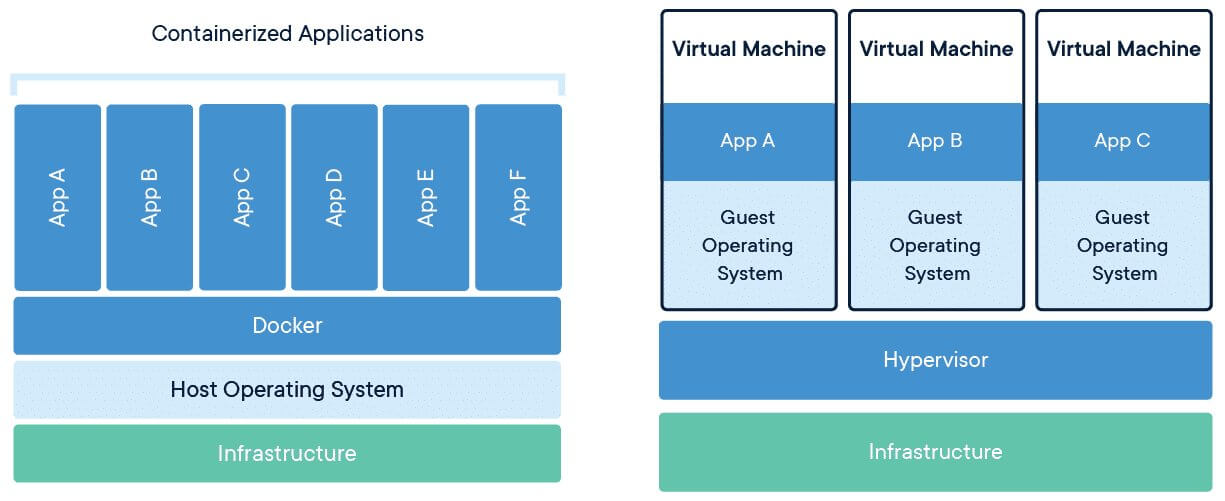

Docker is an open-source containerization platform that enables the creation, deployment, and management of applications inside containers. Containers are lightweight, portable, and self-contained units that include all the necessary dependencies, libraries, and configurations required to run an application. Docker’s containerization technology simplifies application development, deployment, and management by isolating applications and their dependencies from the underlying infrastructure.

Docker offers several key features, including a powerful container runtime, a robust image management system, and a comprehensive set of tools for building, testing, and shipping applications. Docker’s modular architecture and extensive ecosystem make it a popular choice for organizations looking to streamline their application development and deployment processes.
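To make the idea of image management more concrete, here is a minimal Python sketch of content addressing, the scheme Docker and OCI images use to identify layers by a digest of the form `sha256:<hex>`. It is a simplification: the `LayerStore` class is hypothetical, and real registries hash the compressed layer archive rather than arbitrary bytes.

```python
from __future__ import annotations

import hashlib


class LayerStore:
    """Hypothetical toy content-addressable store keyed by SHA-256 digest."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        # The key is derived from the content itself, so identical layers
        # always map to the same digest and are stored only once.
        digest = "sha256:" + hashlib.sha256(data).hexdigest()
        self._blobs.setdefault(digest, data)
        return digest

    def get(self, digest: str) -> bytes:
        return self._blobs[digest]

    def __len__(self) -> int:
        return len(self._blobs)


if __name__ == "__main__":
    store = LayerStore()
    base = store.put(b"base operating system files")
    image_a = [base, store.put(b"application A code")]
    image_b = [base, store.put(b"application B code")]
    print(len(store))  # 3: the shared base layer is stored once for both images
```

Because two images built on the same base produce the same digest for that layer, only one copy is kept, which is also why Docker does not re-download layers it already has.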

Docker’s use cases span many industries and domains, from web development and DevOps to big data and machine learning. Its versatility and flexibility let organizations create consistent, reproducible environments for their applications, reducing the time and effort required for testing, deployment, and scaling.

Kubernetes: A Powerful Container Orchestration System

Kubernetes, also known as K8s, is an open-source container orchestration system designed to automate the deployment, scaling, and management of containerized applications. Developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes has gained significant popularity in recent years due to its robust architecture, extensive capabilities, and active community support.

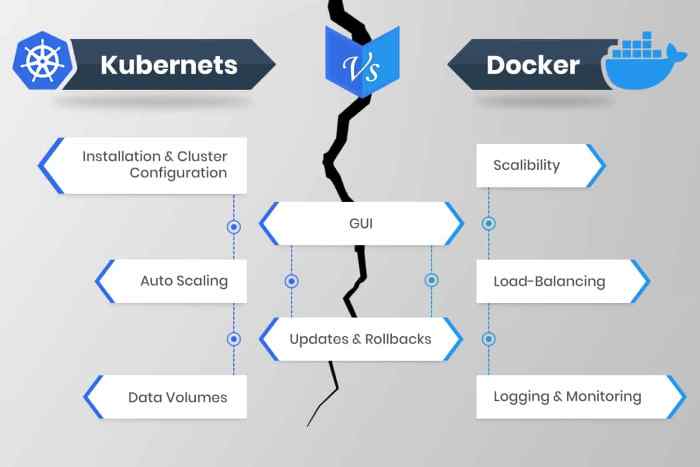

Kubernetes simplifies the management of containerized applications at scale by providing features such as automated rollouts, self-healing, service discovery, and load balancing. Its modular architecture enables organizations to create customized solutions tailored to their specific needs, while its extensive ecosystem offers a wide range of plugins, tools, and integrations for enhanced functionality and flexibility.
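Automated rollouts are a good example of what these features do under the hood. The following Python sketch mimics how a Deployment’s rolling update replaces old pods with new ones while respecting `maxSurge` (extra pods allowed above the desired count, rounded up from a percentage) and `maxUnavailable` (pods allowed to be unavailable, rounded down). The function names are hypothetical, and the sketch assumes new pods become ready instantly, which a real cluster does not guarantee.

```python
from __future__ import annotations

import math


def rollout_bounds(replicas: int, max_surge: float = 0.25,
                   max_unavailable: float = 0.25) -> tuple[int, int]:
    """Convert fractional settings to pod counts: surge rounds up, unavailable rounds down."""
    return math.ceil(replicas * max_surge), math.floor(replicas * max_unavailable)


def simulate_rolling_update(replicas: int, max_surge: float = 0.25,
                            max_unavailable: float = 0.25) -> list[tuple[int, int]]:
    """Return the sequence of (old_pods, new_pods) counts during a rollout.

    Assumption: every new pod is ready as soon as it is created.
    """
    surge, unavailable = rollout_bounds(replicas, max_surge, max_unavailable)
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be zero")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: total pods may not exceed replicas + surge.
        new += max(0, min(replicas - new, replicas + surge - (old + new)))
        # Scale down: keep at least replicas - unavailable pods available.
        removable = max(0, (old + new) - (replicas - unavailable))
        old -= min(old, removable)
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in simulate_rolling_update(4):
        print(f"old={old} new={new}")
```

Raising the surge speeds the rollout at the cost of temporary extra capacity, while raising the unavailable budget speeds it at the cost of reduced serving capacity during the update.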

A Kubernetes cluster consists of a control plane (historically called the master node) and a set of worker nodes. The control plane, made up of the API server, scheduler, controller manager, and the etcd datastore, maintains the desired state of the cluster, while each worker node runs a kubelet that starts the containers assigned to it and reports their status back to the API server. Separating cluster management from workload execution in this way lets Kubernetes scale to large, complex containerized applications.
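Maintaining a desired state is usually implemented as a reconciliation loop: a controller repeatedly compares what should be running with what is running and acts on the difference. The Python sketch below is a deliberately simplified, hypothetical version of that idea (`ClusterState` and `reconcile` are illustrative names, not Kubernetes APIs); it shows how a missing pod gets recreated, which is the essence of self-healing.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ClusterState:
    desired: dict[str, int]                                       # app name -> desired replica count
    running: dict[str, list[str]] = field(default_factory=dict)   # app name -> running pod names
    next_id: int = 1                                               # counter used to name new pods


def reconcile(state: ClusterState) -> list[str]:
    """Make the running pods match the desired counts; return the actions taken."""
    actions: list[str] = []
    for app, want in state.desired.items():
        pods = state.running.setdefault(app, [])
        while len(pods) < want:  # too few pods: create replacements
            name = f"{app}-{state.next_id}"
            state.next_id += 1
            pods.append(name)
            actions.append(f"create {name}")
        while len(pods) > want:  # too many pods: delete the extras
            actions.append(f"delete {pods.pop()}")
    return actions


if __name__ == "__main__":
    cluster = ClusterState(desired={"web": 3})
    print(reconcile(cluster))               # initial rollout creates three pods
    cluster.running["web"].remove("web-2")  # simulate a failure that kills one pod
    print(reconcile(cluster))               # the loop notices and creates a replacement
    print(reconcile(cluster))               # nothing to do once actual matches desired
```

Because each pass only acts on the difference between desired and actual state, running the loop repeatedly is safe, which is what allows the real controllers to run continuously.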

Kubernetes vs Docker: Key Differences and Similarities

While Kubernetes and Docker serve different purposes in the container ecosystem, they complement each other in many ways. Understanding their key differences and similarities is essential for choosing the right tool, or combination of tools, for your needs.

Docker is a containerization platform that enables the creation, deployment, and management of applications inside containers. Kubernetes, on the other hand, is a container orchestration system designed to automate the deployment, scaling, and management of containerized applications across clusters of hosts.

One of the main differences between Kubernetes and Docker is their scope. Docker focuses on containerization, while Kubernetes focuses on container orchestration. Docker provides the runtime environment and tools for creating and managing containers, while Kubernetes offers features for deploying, scaling, and managing containerized applications across a distributed infrastructure.
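To illustrate what managing containers across a distributed infrastructure involves, the Python sketch below imitates the two phases the Kubernetes scheduler goes through when placing a pod: filtering out nodes that cannot satisfy the pod’s resource requests, then scoring the remaining ones. The `Node` class, the `schedule` function, and the scoring rule (prefer the node with the most resources left over) are simplifying assumptions; the real scheduler uses many pluggable filters and scoring functions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores
    free_mem_mib: int  # free memory in MiB


def schedule(cpu_m: int, mem_mib: int, nodes: list[Node]) -> str | None:
    """Pick a node for a pod requesting cpu_m millicores and mem_mib MiB, or None if none fits."""
    # Filtering: drop nodes that cannot satisfy the resource requests.
    feasible = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mib >= mem_mib]
    if not feasible:
        return None  # in Kubernetes the pod would remain Pending
    # Scoring: prefer the node with the most resources left after placement.
    best = max(feasible, key=lambda n: (n.free_cpu_m - cpu_m, n.free_mem_mib - mem_mib))
    # Binding: reserve the requested resources on the chosen node.
    best.free_cpu_m -= cpu_m
    best.free_mem_mib -= mem_mib
    return best.name


if __name__ == "__main__":
    cluster = [Node("node-a", 1000, 2048), Node("node-b", 2000, 1024), Node("node-c", 500, 4096)]
    for _ in range(3):
        print(schedule(600, 512, cluster))
```

A single Docker host has no equivalent decision to make; this placement step is one of the things an orchestrator adds.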

In terms of similarities, both Kubernetes and Docker are open-source projects with large, active communities. They both support containerization, enabling the creation of portable, lightweight, and self-contained application environments. Additionally, they both offer APIs, command-line interfaces, and extensive documentation, making it easy for developers to get started with their respective platforms.

When to Use Kubernetes and When to Use Docker

Choosing between them depends on your specific use cases and requirements. Here are some guidelines on when Docker alone is sufficient and when Kubernetes is the better fit:

- Use Docker when:
  - You need a simple, lightweight containerization solution for development and testing.
  - You want to create and manage containers on a single host or a small number of hosts.
  - You want a straightforward platform with a gentle learning curve.

- Use Kubernetes when:
  - You need to manage and orchestrate containerized applications at scale across multiple hosts.
  - You require advanced features such as automated rollouts, self-healing, service discovery, and load balancing.
  - You want to build customized solutions tailored to your specific needs using Kubernetes’ modular architecture and extensive ecosystem.

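In many cases, organizations use Docker and Kubernetes together: Docker is used to build and package applications as container images, and Kubernetes schedules and runs those images across a cluster. Note that Kubernetes no longer talks to Docker Engine directly (its built-in Docker integration, dockershim, was removed in v1.24); nodes instead use a CRI-compatible runtime such as containerd or CRI-O, which runs the same OCI-compliant images that Docker builds.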

How to Implement Kubernetes and Docker in Your IT Infrastructure

Implementing Kubernetes and Docker in your IT infrastructure involves several steps. Here are some best practices and potential challenges to consider during the implementation process:

- Step 1: Assess your IT infrastructure and requirements.

Evaluate your current IT infrastructure and identify the resources, workloads, and applications that can benefit from containerization and container orchestration. Determine the scale, complexity, and security requirements of your implementation.

- Step 2: Choose the right Kubernetes and Docker distributions and tools.

Select the appropriate Kubernetes and Docker distributions, tools, and plugins based on your requirements and budget. Consider factors such as ease of use, compatibility, scalability, and community support.

- Step 3: Design and configure your container orchestration environment.

Design and configure your Kubernetes and Docker environment, including the control plane and worker nodes, networking, storage, and security settings. Ensure that your environment meets your performance, scalability, and availability requirements.

- Step 4: Containerize your applications and deploy them to Kubernetes.

Containerize your applications using Docker, push the resulting images to a container registry that your cluster can pull from, and deploy them to your Kubernetes cluster. Use Kubernetes’ declarative configuration and automation features to manage and orchestrate your containerized applications.
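As an illustration of Kubernetes’ declarative configuration, the Python sketch below builds a minimal Deployment manifest as a plain dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The helper name `deployment_manifest`, the app name `web`, the image reference `registry.example.com/web:1.0`, and the port are placeholders; substitute the image you built with Docker.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state of one app."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "registry.example.com/web:1.0"), indent=2))
```

Assuming `kubectl` is configured for your cluster, piping this script’s output into `kubectl apply -f -` asks Kubernetes to converge on three replicas of that image. Because the manifest describes the desired state rather than the steps to reach it, applying it again unchanged is harmless.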

- Step 5: Monitor, optimize, and maintain your container orchestration environment.

Monitor your Kubernetes and Docker environment using tools such as Prometheus, Grafana, and Elasticsearch. Optimize your environment for performance, scalability, and cost using Kubernetes’ autoscaling, load balancing, and resource management features. Maintain your environment by applying updates, patches, and security fixes as needed.
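The autoscaling mentioned in this step can be made concrete with the formula documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a simplified sketch of that calculation; it ignores details such as stabilization windows and pods that are not yet ready, and its `min_replicas`/`max_replicas` defaults are arbitrary placeholders.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 50% target: ceil(4 * 1.8) = 8 pods
    print(desired_replicas(4, current_metric=90, target_metric=50))
```

The tolerance band keeps small metric fluctuations from triggering constant scale-up and scale-down events.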

Potential challenges during the implementation process include managing complexity, ensuring security, maintaining compatibility, and optimizing performance. Address these challenges by following best practices, using proven tools and techniques, and seeking advice and guidance from the Kubernetes and Docker communities.

Real-World Use Cases: Kubernetes and Docker in Action

Organizations across various industries have successfully implemented Kubernetes and Docker for container orchestration, reaping benefits such as increased efficiency, scalability, and reliability. Here are some real-world use cases:

Use Case 1: Netflix

Netflix, a leading entertainment streaming service, runs its microservices-based architecture in containers, managed largely through Titus, its in-house container management platform. By adopting container orchestration, Netflix has achieved improved resource utilization, faster deployment times, and better fault tolerance.

Use Case 2: Airbnb

Airbnb, a global online marketplace for lodging, uses Docker to containerize its applications and Kubernetes to manage and orchestrate its containerized workloads. By implementing container orchestration, Airbnb has gained increased efficiency, portability, and consistency across its development and production environments.

Use Case 3: eBay

eBay, a multinational e-commerce corporation, uses Kubernetes and Docker to manage its containerized applications and services. By adopting container orchestration, eBay has achieved improved scalability, resiliency, and faster time-to-market for its software releases.

Use Case 4: Zalando

Zalando, a European online fashion platform, uses Kubernetes and Docker to manage its containerized workloads and microservices architecture. By implementing container orchestration, Zalando has gained increased efficiency, flexibility, and faster deployment times for its applications and services.

These real-world use cases demonstrate the potential benefits and outcomes of implementing Kubernetes and Docker for container orchestration. By adopting these tools, organizations can achieve improved efficiency, scalability, and reliability for their containerized applications and services.

Future Trends: The Evolution of Container Orchestration Tools

As containerization and container orchestration continue to gain traction in modern IT infrastructure, Kubernetes and Docker are evolving to meet the changing needs of organizations. Here are some future trends and developments to keep an eye on:

Trend 1: Serverless Computing and FaaS Integration

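Serverless computing and Function-as-a-Service (FaaS) platforms are becoming increasingly popular for event-driven and microservices-based architectures. Kubernetes in particular has become a common foundation for them: open-source projects such as Knative and OpenFaaS run functions on Kubernetes clusters (Knative can scale idle services down to zero), while container images built with tools like Docker remain the usual way to package those functions. This lets organizations build more scalable, efficient, and cost-effective applications.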

Trend 2: Multi-Cloud and Hybrid Cloud Orchestration

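As organizations adopt multi-cloud and hybrid cloud strategies, the need for consistent container orchestration across cloud providers and on-premises environments is becoming more critical. Because Kubernetes runs on-premises and is offered as a managed service by all major cloud providers (for example Amazon EKS, Google Kubernetes Engine, and Azure Kubernetes Service), it provides a largely consistent API across environments, and container images built with Docker are portable to any environment with a compatible runtime and CPU architecture. Tooling for managing multiple clusters continues to mature, giving organizations greater flexibility, portability, and potential cost savings.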

Trend 3: Artificial Intelligence and Machine Learning Integration

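Artificial Intelligence (AI) and Machine Learning (ML) are becoming essential components of modern IT infrastructure. Kubernetes is increasingly used to run ML training and inference workloads, for example through projects such as Kubeflow, and Docker images make it easier to package models together with their framework dependencies in reproducible environments. Together, they help organizations build more intelligent and automated applications and services.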

Trend 4: Enhanced Security and Compliance Features

Security and compliance are critical concerns for organizations implementing container orchestration. Both Kubernetes and Docker are continuously improving their security and compliance features, including network segmentation, role-based access control, and encryption, to help organizations meet their security and compliance requirements.
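Role-based access control in Kubernetes grants permissions through rules that list API groups, resources, and verbs, and those permissions are purely additive: a request succeeds only if some rule allows it. The Python sketch below models that matching logic in a simplified, hypothetical form (`PolicyRule` and `is_allowed` are not Kubernetes client APIs, and details such as subresources and resource names are omitted), including the `*` wildcard and the empty string that denotes the core API group.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple[str, ...]  # "" is the core API group (pods, services, ...)
    resources: tuple[str, ...]
    verbs: tuple[str, ...]


def _matches(allowed: tuple[str, ...], value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: list[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    # RBAC has no deny rules: a request is allowed if any rule grants it.
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


# Hypothetical read-only role for pods.
pod_reader = [PolicyRule(api_groups=("",), resources=("pods",), verbs=("get", "list", "watch"))]
```

With this role, reading pods is permitted, but deleting them, or reading Deployments in the `apps` group, is denied by default because no rule grants it.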

Trend 5: Simplified User Experience and Automation

As container orchestration becomes more complex, the need for simplified user experiences and automation is becoming more critical. Both Kubernetes and Docker are focusing on improving their user interfaces, automation tools, and documentation to help organizations reduce the learning curve and operational overhead of container orchestration.

By staying up-to-date with these future trends and developments, organizations can leverage the full potential of Kubernetes and Docker for container orchestration and stay ahead of the competition in the rapidly evolving IT landscape.