Understanding Container Orchestration and the Role of Kubernetes

Container orchestration automates the deployment, scaling, and management of containerized applications, work that quickly becomes unmanageable by hand once an application spans many containers and machines. As containerization has spread, orchestration platforms like Kubernetes have become essential tools for DevOps professionals. Kubernetes, also known as K8s, is an open-source container orchestration platform. It was originally designed by Google and is now maintained under the Cloud Native Computing Foundation (CNCF). It has gained widespread adoption due to its flexibility, scalability, and robustness.

Kubernetes provides a range of features that make it a strong choice for container orchestration. These include self-healing (restarting failed containers and replacing failed pods), automatic scaling, load balancing, and rolling updates. Its declarative configuration approach lets users define the desired state of their applications, and the platform continuously works to bring the actual state of the cluster in line with that definition.

A Kubernetes cluster is assembled from a handful of core building blocks, including nodes, pods, services, and volumes. Nodes are the physical or virtual machines that run the applications, while pods are the smallest deployable units in Kubernetes. Services provide a stable IP address and DNS name for a set of pods, while volumes give the containers in a pod shared storage and, when backed by persistent storage, keep data beyond the life of a single pod.

Kubernetes’ popularity is due in part to its extensive ecosystem, which includes a wide range of tools and integrations. These tools simplify the deployment, management, and monitoring of Kubernetes clusters, making it easier for users to focus on building and deploying their applications.

In summary, Kubernetes is a powerful container orchestration platform that simplifies the deployment, scaling, and management of containerized applications. Its modular architecture, robust features, and extensive ecosystem make it an ideal choice for DevOps professionals looking to manage their containerized applications effectively.

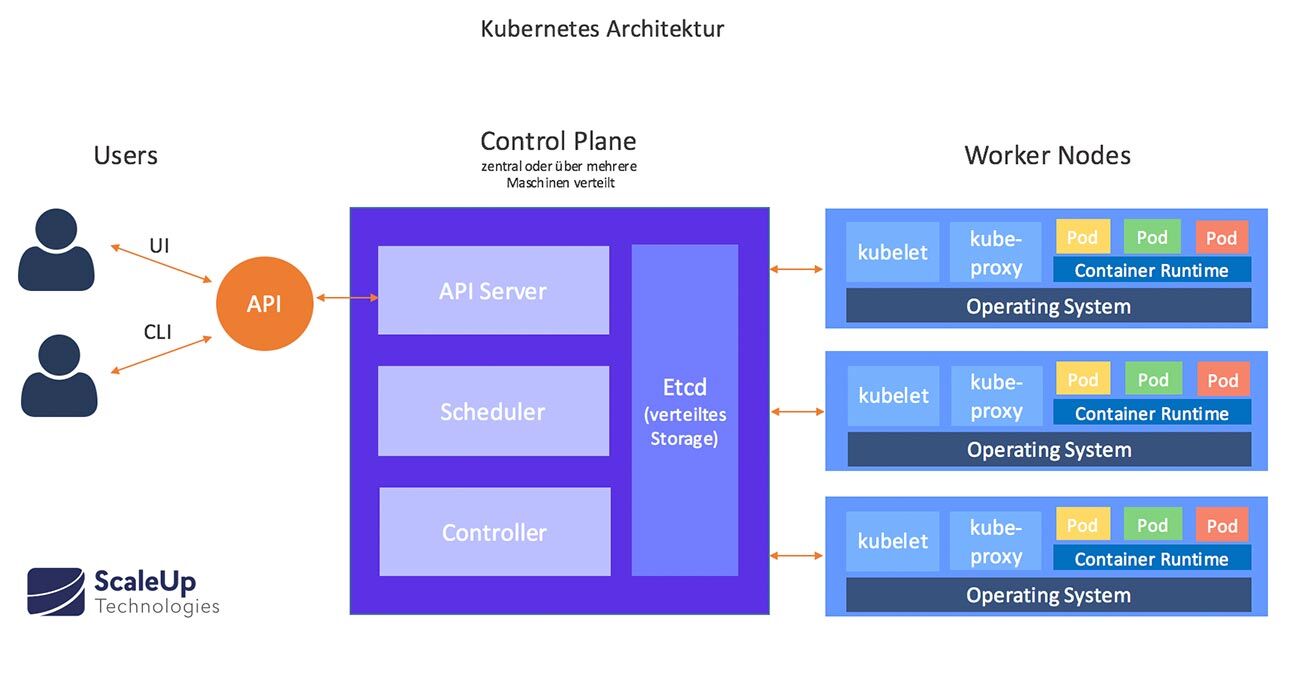

Key Components and Architecture of Kubernetes

Kubernetes has a modular architecture in which each building block has a specific role in managing containerized applications. The main ones are nodes, pods, services, and volumes. Nodes are the physical or virtual machines that run the applications. Each node runs a container runtime, the kubelet, and usually kube-proxy. The container runtime runs and manages the containers. The kubelet is the node agent: it watches the Kubernetes API server for pods assigned to its node and makes sure their containers are running and healthy. The kube-proxy maintains the network rules on each node that route traffic addressed to a service to the pods behind it.

Pods are the smallest deployable units in Kubernetes. A pod wraps one or more containers that share the same network namespace and can share storage volumes. Pods are ephemeral and can be created, deleted, and recreated as needed. Each pod gets its own IP address within the cluster, but that address changes whenever the pod is replaced, so clients normally reach pods through a service rather than by pod address.
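To make the idea of containers sharing one pod concrete, here is a minimal sketch that builds a Pod manifest as a Python dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The pod name, container names, image tags, and mount path are illustrative assumptions, not values from this article.

```python
import json


def pod_manifest(name: str) -> dict:
    """Build a Pod whose two containers share one emptyDir volume."""
    # Both containers mount the same volume at the same (hypothetical) path.
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # emptyDir lives as long as the pod and is removed with it.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.27",  # illustrative image tag
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "content-writer",
                    "image": "busybox:1.36",  # illustrative image tag
                    "command": [
                        "sh",
                        "-c",
                        "while true; do date > /data/index.html; sleep 5; done",
                    ],
                    "volumeMounts": [shared_mount],
                },
            ],
        },
    }


manifest = pod_manifest("demo-pod")
print(json.dumps(manifest, indent=2))
```

Because both containers live in the same pod, they also share one IP address and can talk to each other over localhost.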

Services provide a stable IP address and DNS name for a set of pods, which the service identifies by label selector. They act as a load balancer, distributing network traffic across those pods. Depending on its type, a service is reachable only from inside the cluster or also from the outside world.
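The stable DNS name follows a predictable pattern in clusters that run the standard cluster DNS add-on. The helper below is a small sketch of that pattern; it assumes the common default cluster domain `cluster.local`, which a cluster administrator can change, and the service and namespace names are made up.

```python
def service_dns_name(
    service: str,
    namespace: str = "default",
    cluster_domain: str = "cluster.local",  # assumed default; configurable per cluster
) -> str:
    """Return the fully qualified in-cluster DNS name of a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Hypothetical service "web" in namespace "shop".
print(service_dns_name("web", "shop"))
```

Pods in the same namespace can usually use the short name (`web`) and rely on the DNS search path to expand it.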

Volumes enable data sharing and persistence. A volume is a directory, backed by some storage medium, that is mounted into the containers of a pod so they can share files. Ephemeral volumes last only as long as the pod, while persistent volumes keep their data even if the pod is deleted or recreated.
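For data that has to outlive a pod, the usual route is a PersistentVolumeClaim that a pod then mounts. The sketch below builds both pieces as dictionaries; the claim name, size, and volume name are hypothetical, and it assumes the cluster has a default storage class able to satisfy the claim.

```python
import json


def pvc_manifest(name: str, size: str = "1Gi") -> dict:
    """Build a PersistentVolumeClaim requesting `size` of storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: mountable read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_volume_for_claim(volume_name: str, claim_name: str) -> dict:
    """Build the entry for a pod's spec.volumes list that references the claim."""
    return {
        "name": volume_name,
        "persistentVolumeClaim": {"claimName": claim_name},
    }


claim = pvc_manifest("orders-data", "5Gi")
volume = pod_volume_for_claim("data", claim["metadata"]["name"])
print(json.dumps(claim, indent=2))
print(json.dumps(volume, indent=2))
```

If the pod is deleted and recreated with the same claim reference, it sees the same data again.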

Kubernetes’ declarative configuration approach enables users to define the desired state of their applications, and the platform continuously works to bring the cluster’s actual state in line with it. Users describe the desired state in YAML or JSON files and submit them to the Kubernetes API, most commonly with the kubectl command-line tool.
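The declarative approach boils down to a loop that compares desired state with observed state and acts on the difference. The following is a toy illustration of that idea, not Kubernetes code: the object names and specs are invented, and real controllers work against the API server rather than in-memory dictionaries.

```python
def reconcile(desired: dict, observed: dict) -> list:
    """Return the (verb, name) actions needed to move observed toward desired."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in observed:
            actions.append(("create", name))
        elif observed[name] != spec:
            actions.append(("update", name))
    for name in sorted(observed):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply_actions(desired: dict, observed: dict, actions: list) -> dict:
    """Apply the actions to a copy of the observed state and return it."""
    new_state = dict(observed)
    for verb, name in actions:
        if verb == "delete":
            del new_state[name]
        else:  # "create" and "update" both copy the desired spec
            new_state[name] = desired[name]
    return new_state


# Hypothetical desired and observed states.
desired = {"web": {"image": "nginx:1.27"}, "api": {"image": "api:2"}}
observed = {"web": {"image": "nginx:1.26"}, "old-job": {"image": "job:1"}}

actions = reconcile(desired, observed)
print(actions)
converged = apply_actions(desired, observed, actions)
print(reconcile(desired, converged))  # nothing left to do once states match
```

The user only ever edits `desired`; working out which actions to take is the platform's job.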

In summary, a Kubernetes cluster is built from nodes, pods, services, and volumes. Nodes run the pods, services give changing sets of pods a stable address, and volumes provide shared and persistent storage. The declarative configuration approach ties these pieces together: users describe the state they want, and Kubernetes handles the deployment, scaling, and recovery needed to reach it.

How to Deploy Applications in Kubernetes

Kubernetes provides a declarative configuration approach for deploying and managing containerized applications. Users define the desired state of their applications, and Kubernetes works to achieve that state. To deploy a typical application, users create a deployment and a service; the deployment in turn creates the pods, so pods are rarely created by hand. Pods represent one or more containers that share the same network namespace and storage resources. Services provide a stable IP address and DNS name for a set of pods, enabling other workloads, and optionally external clients, to reach them. Deployments manage the rollout and scaling of pods, working to keep the desired number of replicas running.

Here’s a step-by-step guide on how to deploy an application in Kubernetes:

Create a deployment: A deployment defines the desired state of the application, including the number of replicas, the container image, and the configuration options. Users can create a deployment using a YAML or JSON file, which specifies the deployment’s properties.
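As a sketch of what such a file can contain, the function below builds a Deployment manifest and prints it as JSON. The application name, image tag, and port are placeholders chosen for illustration.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 80) -> dict:
    """Build an apps/v1 Deployment that runs `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical application "web" using an illustrative image tag.
deployment = deployment_manifest("web", "nginx:1.27", replicas=3)
print(json.dumps(deployment, indent=2))
```

Saving the printed JSON to a file gives a manifest that can be submitted to the cluster; the same structure can be written in YAML.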

Create a service: A service provides a stable IP address and DNS name for a set of pods. Users can create a service using a YAML or JSON file, which specifies the service’s properties, including the label selector that picks the pods, the ports, and the service type. The cluster IP address is normally assigned automatically.
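A matching Service manifest can be sketched the same way. The names and ports below are assumptions for illustration; the one firm requirement is that the selector match the labels on the pods the service should front.

```python
import json


def service_manifest(name: str, selector: dict, port: int = 80, target_port: int = 80) -> dict:
    """Build a ClusterIP Service that forwards `port` to `target_port` on matching pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",  # reachable only from inside the cluster
            "selector": selector,
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


# Hypothetical service in front of pods labeled app=web.
service = service_manifest("web", {"app": "web"}, port=80, target_port=80)
print(json.dumps(service, indent=2))
```

No IP address appears in the manifest: the cluster assigns one when the service is created.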

Apply the configuration: Once the deployment and service configurations are ready, users can apply them to the cluster, typically with kubectl apply, which submits them to the Kubernetes API. The API server records the desired state, and the cluster’s controllers then create and manage the underlying resources needed to reach it.

Verify the deployment: Users can verify the deployment by checking the status of the deployment and the service. kubectl offers commands for this, such as kubectl get, kubectl describe, kubectl rollout status, and kubectl logs, so users can confirm that the application is running as expected.
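The apply and verify steps above can be sketched as a short script that assembles the kubectl commands. The file names, the deployment and service name `web`, and the namespace are hypothetical; the script only prints the commands by default, and the `run` helper skips execution when kubectl is not installed.

```python
import shlex
import shutil
import subprocess


def apply_cmd(path: str) -> list:
    """Command that submits a manifest file to the cluster."""
    return ["kubectl", "apply", "-f", path]


def rollout_status_cmd(deployment: str, namespace: str = "default") -> list:
    """Command that waits for a deployment rollout to finish."""
    return ["kubectl", "rollout", "status", f"deployment/{deployment}",
            "--namespace", namespace]


def get_cmd(kind: str, name: str, namespace: str = "default") -> list:
    """Command that shows the current state of one resource."""
    return ["kubectl", "get", kind, name, "--namespace", namespace]


def run(cmd: list):
    """Run a command if its executable is available; return the exit code or None."""
    if shutil.which(cmd[0]) is None:
        print("skipping, kubectl not found:", shlex.join(cmd))
        return None
    return subprocess.run(cmd, check=False).returncode


# Hypothetical file and resource names.
steps = [
    apply_cmd("deployment.json"),
    apply_cmd("service.json"),
    rollout_status_cmd("web"),
    get_cmd("service", "web"),
]
for step in steps:
    print(shlex.join(step))  # replace print with run(step) against a real cluster
```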

In summary, deploying an application in Kubernetes comes down to describing a deployment and a service, applying them to the cluster, and verifying the result. Once the desired state is defined, Kubernetes creates the pods and keeps them running, and the same commands and tools used for verification serve for day-to-day management.

Scaling and Load Balancing in Kubernetes

Scaling and load balancing are critical aspects of managing containerized applications. Kubernetes simplifies the process of scaling and load balancing applications, ensuring high availability and performance. Kubernetes provides various mechanisms for scaling and load balancing applications, including horizontal scaling, replica sets, and load balancers.

Horizontal scaling involves adding or removing replicas of a pod to handle changes in traffic. Replica counts can be changed through the Kubernetes API, the kubectl command-line interface (CLI), or the Kubernetes Dashboard add-on. Users define the desired number of replicas, and Kubernetes starts or stops pods to match. Scaling can also be automated: a HorizontalPodAutoscaler adjusts the replica count based on observed metrics such as CPU utilization.
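When horizontal scaling is automated, the Kubernetes documentation describes the core calculation as desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The function below is a simplified sketch of that formula; the replica bounds and sample numbers are illustrative, and the real autoscaler also handles missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified horizontal autoscaling calculation."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Average CPU at twice the target: the replica count doubles.
print(desired_replicas(4, current_metric=200, target_metric=100))
# Average CPU at half the target: the replica count halves.
print(desired_replicas(4, current_metric=50, target_metric=100))
```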

ReplicaSets ensure that a specified number of replicas of a pod are running at any given time. A ReplicaSet identifies its pods by label selector and creates or deletes pods until the actual count matches the desired count. ReplicaSets suit stateless applications, where each replica is identical and can handle requests independently. In practice, users rarely create ReplicaSets directly; a deployment creates and manages them.
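The counting logic behind a replica set can be illustrated with a few lines of code. This is a toy model with invented pod names and labels, covering only equality-based label matching.

```python
def matches(selector: dict, labels: dict) -> bool:
    """True if every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def replica_delta(selector: dict, desired: int, pods: list) -> int:
    """Positive: pods to create. Negative: pods to delete. Zero: nothing to do."""
    matching = [pod for pod in pods if matches(selector, pod["labels"])]
    return desired - len(matching)


# Hypothetical pods currently running in the cluster.
pods = [
    {"name": "web-1", "labels": {"app": "web"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db"}},
]

print(replica_delta({"app": "web"}, desired=3, pods=pods))  # one pod short
print(replica_delta({"app": "web"}, desired=1, pods=pods))  # one pod too many
```

If a matching pod crashes and disappears from the list, the delta becomes positive again and a replacement is created, which is how self-healing falls out of the same rule.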

Load balancers distribute network traffic across a set of pods so that no single pod is overwhelmed. In a Kubernetes cluster, load balancing happens at several layers: the service, the ingress controller, and optionally a service mesh. The service provides a stable IP address and DNS name for a set of pods and spreads connections across them. An ingress controller, which has to be installed in the cluster, manages external HTTP and HTTPS access, including load balancing and SSL/TLS termination. A service mesh is a separate project layered on top of Kubernetes that manages communication between pods, adding service discovery, finer-grained load balancing, and security features.

In summary, scaling and load balancing are critical aspects of managing containerized applications. Kubernetes simplifies the process of scaling and load balancing applications, ensuring high availability and performance. Horizontal scaling, replica sets, and load balancers are some of the mechanisms that Kubernetes provides for managing scaling and load balancing. By defining the desired state of the application, Kubernetes automatically manages the underlying infrastructure, enabling users to focus on building and deploying their applications.

Monitoring and Logging in Kubernetes

Monitoring and logging are critical aspects of managing containerized applications, and Kubernetes and its ecosystem offer several mechanisms for both. Monitoring involves collecting and analyzing metrics to gain insights into the performance and health of the applications. Common options include the Kubernetes Metrics Server, the Kubernetes Dashboard, and third-party monitoring tools. The Metrics Server is a cluster add-on that collects current CPU and memory usage for nodes and pods; it feeds commands such as kubectl top and the Horizontal Pod Autoscaler, and is not meant as a long-term monitoring store. The Kubernetes Dashboard, also an add-on, provides a web user interface for viewing and managing cluster resources, including logs and basic metrics. Third-party tools such as Prometheus and Grafana provide more advanced capabilities, including long-term metric storage, alerting, and visualization.
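Tools like Prometheus collect metrics by scraping a plain-text page that each application exposes. The sketch below renders one gauge in that text exposition format using only the standard library; the metric name, help text, and labels are invented, and label values are assumed to contain no quotes, backslashes, or newlines (which would need escaping). Real applications would normally use an official client library instead.

```python
def render_gauge(name: str, help_text: str, samples: list) -> str:
    """Render one gauge metric in the Prometheus text exposition format.

    `samples` is a list of (labels_dict, value) pairs.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        # Assumption: label values need no escaping.
        label_str = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metric: queue depth per pod.
text = render_gauge(
    "app_queue_depth",
    "Items waiting in the work queue",
    [({"pod": "web-1"}, 3), ({"pod": "web-2"}, 7)],
)
print(text, end="")
```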

Logging involves collecting and aggregating logs from the applications and the infrastructure. Kubernetes itself handles only the node-level part: containers write to standard output and standard error, the container runtime stores those streams on the node, and kubectl logs reads them back. Kubernetes does not ship a built-in solution for cluster-wide log storage, so clusters usually run a logging agent on each node that forwards logs to a central backend. Third-party tools such as Fluentd and the ELK Stack (Elasticsearch, Logstash, Kibana) fill this role and add filtering, parsing, and analysis. Third-party logging operators can manage that infrastructure, including the configuration of log forwarding and retention.
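On the application side, the most cluster-friendly habit is to write logs to standard output, ideally as one structured record per line so that downstream tools can parse them. The following is a minimal sketch using Python's standard logging module; the logger names and field names are arbitrary choices, not a Kubernetes requirement.

```python
import io
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps(
            {
                "level": record.levelname,
                "logger": record.name,
                "message": record.getMessage(),
            }
        )


def build_logger(name: str, stream=None) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream` (stdout by default)."""
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


# In a container this line ends up in the stream that `kubectl logs` shows.
build_logger("checkout").info("order %s accepted", 42)

# Capture a record in memory to show that it parses back cleanly.
buffer = io.StringIO()
build_logger("checkout-demo", buffer).warning("payment retry %d", 2)
record = json.loads(buffer.getvalue())
```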

In summary, monitoring and logging are critical aspects of managing containerized applications. Kubernetes provides various mechanisms for monitoring and logging applications, enabling users to ensure the stability and reliability of their applications. Monitoring involves collecting and analyzing metrics, while logging involves collecting and aggregating logs. By monitoring and logging their applications, users can gain insights into the performance and health of their applications, enabling them to detect and resolve issues quickly.

Networking in Kubernetes

Networking is a critical aspect of managing containerized applications in Kubernetes. Kubernetes provides a networking model that enables communication between pods and services. The model is flat: every pod gets its own IP address and its own network namespace, shared by the containers inside it, and any pod can reach any other pod in the cluster directly, without network address translation.

Services provide a way to expose applications running in pods. Kubernetes offers several service types, including ClusterIP, NodePort, and LoadBalancer. ClusterIP, the default, provides a stable IP address and DNS name that is reachable only from within the cluster. NodePort additionally opens the same port on every node in the cluster, so external clients can reach the service through any node’s address. LoadBalancer asks the underlying infrastructure, typically a cloud provider, to provision an external load balancer that forwards traffic to the service.

Network policies provide a way to secure and manage network traffic in Kubernetes. By default, all pods can talk to each other; network policies let users define rules for which traffic may flow to and from selected pods, so that only authorized traffic is allowed. Network policies are enforced by the cluster’s network plugin, so they take effect only if the installed plugin supports them.
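A network policy is itself just another declarative object. The sketch below builds one that allows inbound traffic to a set of pods only from pods carrying a given label; the policy name, labels, and port are hypothetical, and the policy has an effect only on clusters whose network plugin enforces NetworkPolicy objects.

```python
import json


def allow_ingress_policy(name: str, target_labels: dict, source_labels: dict, port: int) -> dict:
    """Allow TCP traffic on `port` to target pods only from source pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods with these labels may connect.
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical rule: only frontend pods may reach the database on port 5432.
policy = allow_ingress_policy("db-from-frontend", {"app": "db"}, {"app": "frontend"}, 5432)
print(json.dumps(policy, indent=2))
```

Once a pod is selected by an ingress policy, inbound traffic that no policy allows is dropped.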

Ingress rules provide a way to manage external HTTP and HTTPS access to the cluster. They route incoming requests to specific services based on the host name and the URL path; matching on HTTP headers or other criteria is not part of the standard Ingress resource and depends on the ingress controller in use. Ingress rules do nothing on their own: an ingress controller must be running in the cluster to read them and handle the traffic.
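Host and path routing can be sketched as an Ingress manifest. The host name, paths, and backend service names below are made up for illustration, and the manifest assumes an ingress controller is already installed in the cluster.

```python
import json


def ingress_manifest(name: str, host: str, routes: list) -> dict:
    """Build an Ingress that routes (path, service, port) tuples for one host."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",  # match the path and everything below it
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, service, port in routes
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": [{"host": host, "http": {"paths": paths}}]},
    }


# Hypothetical routing: /api goes to one service, everything else to another.
ingress = ingress_manifest(
    "shop",
    "shop.example.com",
    [("/api", "api", 8080), ("/", "web", 80)],
)
print(json.dumps(ingress, indent=2))
```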

Service meshes provide a way to manage service-to-service communication between pods. They are not part of Kubernetes itself but are installed on top of it, and they add features such as service discovery, load balancing, traffic encryption, and fine-grained traffic control.

In summary, networking is a critical aspect of managing containerized applications in Kubernetes. The flat pod network lets every pod reach every other pod, services give groups of pods a stable address, network policies restrict which traffic is allowed, ingress rules handle external HTTP access, and service meshes add finer control where it is needed. Together these mechanisms let users deploy, scale, and manage containerized applications effectively.

Security Best Practices in Kubernetes

Security is a critical aspect of managing containerized applications in Kubernetes. Kubernetes provides various mechanisms for securing applications and infrastructure, helping users protect the confidentiality, integrity, and availability of their applications. Role-based access control (RBAC) manages access to Kubernetes resources based on roles. A role lists the actions permitted on specific resources, and a binding grants that role to users, groups, or service accounts. With RBAC, only authorized identities can read or modify specific resources.
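Roles and bindings are ordinary API objects too. As a rough sketch, the code below builds a Role that permits read-only access to pods in one namespace and a RoleBinding that grants it to a user; the namespace, role name, and user name are hypothetical.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str, name: str = "pod-reader") -> dict:
    """Build a Role that allows reading pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where pods live
                "resources": ["pods"],
                "verbs": ["get", "watch", "list"],
            }
        ],
    }


def bind_role_to_user(namespace: str, role_name: str, user: str) -> dict:
    """Build a RoleBinding that grants the role to one user."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-{user}"},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


# Hypothetical user "jane" gets read-only access to pods in namespace "shop".
role = pod_reader_role("shop")
binding = bind_role_to_user("shop", role["metadata"]["name"], "jane")
print(json.dumps([role, binding], indent=2))
```

Because the role lists only read verbs, the user can inspect pods but cannot create, change, or delete them.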

Network policies provide a way to secure and manage network traffic in Kubernetes. Network policies enable users to define rules for traffic flow between pods and services, ensuring that only authorized traffic is allowed. By using network policies, users can prevent unauthorized access to their applications and infrastructure, ensuring the confidentiality and integrity of their data.

Secrets management provides a way to handle sensitive data, such as passwords, tokens, and certificates, in Kubernetes. Secrets keep this data out of container images and pod definitions and deliver it to the pods that need it as files or environment variables. Secrets are not automatically secure, however: by default their values are only base64-encoded and are stored unencrypted in the cluster’s data store, so users should enable encryption at rest and use RBAC to limit who can read them.
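The sketch below builds a Secret manifest and shows why base64 should not be mistaken for protection: anyone who can read the object can decode it in one call. The secret name, key, and value are placeholders.

```python
import base64
import json


def secret_manifest(name: str, values: dict) -> dict:
    """Build an Opaque Secret; values are base64-encoded as the API expects."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


# Placeholder credentials for illustration only.
secret = secret_manifest("db-credentials", {"password": "s3cr3t"})
print(json.dumps(secret, indent=2))

# base64 is an encoding, not encryption: decoding needs no key.
recovered = base64.b64decode(secret["data"]["password"]).decode("utf-8")
print(recovered)
```

This is why access control and encryption at rest matter: the manifest alone offers no confidentiality.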

In summary, security is a critical aspect of managing containerized applications in Kubernetes. Kubernetes provides various mechanisms for securing applications and infrastructure, enabling users to ensure the confidentiality, integrity, and availability of their applications. Role-based access control (RBAC), network policies, and secrets management are some of the mechanisms that Kubernetes provides for managing security. By implementing security best practices in Kubernetes, users can ensure the confidentiality, integrity, and availability of their applications, enabling them to deploy, scale, and manage containerized applications effectively and securely.

Real-World Use Cases of Kubernetes

Kubernetes has become a popular platform for container orchestration, enabling organizations to deploy, scale, and manage containerized applications effectively. In this section, we will explore real-world use cases of Kubernetes in different industries and applications, describing how Kubernetes simplifies the deployment, scaling, and management of containerized applications in these scenarios.

Use Case 1: Cloud Migration

Cloud migration is a common use case for Kubernetes. Organizations can use Kubernetes to migrate their applications to the cloud, enabling them to take advantage of the scalability, reliability, and cost-effectiveness of cloud infrastructure. Kubernetes provides a consistent platform for managing applications across different cloud environments, enabling organizations to deploy and manage their applications seamlessly.

Use Case 2: Microservices Architecture

Microservices architecture is another common use case for Kubernetes. Microservices enable organizations to break down monolithic applications into smaller, independent components, enabling them to develop, deploy, and scale their applications more efficiently. Kubernetes provides a platform for managing microservices, enabling organizations to deploy, scale, and manage their microservices seamlessly.

Use Case 3: DevOps and CI/CD

DevOps and CI/CD are also common use cases for Kubernetes. Kubernetes provides a platform for automating the deployment, scaling, and management of applications, enabling organizations to implement DevOps and CI/CD practices more efficiently. Kubernetes enables organizations to deploy their applications quickly and reliably, reducing the time and effort required for testing, deployment, and release.

Use Case 4: Big Data and Analytics

Big data and analytics workloads are another use case for Kubernetes. Data processing jobs run as containers that Kubernetes schedules across the cluster, scales out as data volumes grow, and restarts when they fail, so teams can process large volumes of data without managing individual machines.

Use Case 5: IoT and Edge Computing

IoT and edge computing are emerging use cases for Kubernetes. Running clusters close to where data is produced lets organizations process and analyze that data near the source instead of sending everything to a central data center, while the same declarative configuration keeps many edge locations consistent.

Use Case 6: Machine Learning and AI

Machine learning and AI workloads also run on Kubernetes. Training jobs and model-serving applications can be packaged as containers, scheduled onto nodes with the resources they need, and scaled with demand, which reduces the effort of moving a model from experimentation to production.

Use Case 7: High-Performance Computing

High-performance computing is another use case for Kubernetes. Compute-intensive jobs can be split into many containers that Kubernetes schedules in parallel across the nodes of a cluster, and pieces that fail are restarted automatically.

Use Case 8: Gaming and Media Streaming

Gaming and media streaming are also use cases for Kubernetes. These workloads see sharp swings in traffic, and horizontal scaling and load balancing let organizations add capacity during peaks and release it afterwards without redeploying the application.

Use Case 9: E-commerce and Retail

E-commerce and retail are also use cases for Kubernetes. Online stores face seasonal and promotional traffic peaks, which horizontal scaling absorbs, and rolling updates let teams release changes without taking the store offline.

Use Case 10: Healthcare and Life Sciences

Healthcare and life sciences are also use cases for Kubernetes. These organizations handle sensitive data, so the security mechanisms described earlier (RBAC, network policies, and secrets management) matter as much as scaling, because they help restrict who and what can reach patient and research data.