Understanding the Kubernetes Scheduler: Orchestrating Containerized Applications

The Kubernetes scheduler (kube-scheduler) is the control-plane component that assigns newly created pods to nodes in a cluster. For each unscheduled pod, it weighs the pod’s resource requests against each node’s allocatable capacity, along with placement constraints such as node selectors, affinity rules, and taints. Good placement keeps resource utilization efficient and applications available, so understanding the scheduler is fundamental to effective cluster management. The scheduler watches for pods that have no node assigned and places each of them once; it does not move pods that are already running. Pods are placed again only when they are recreated, for example after an eviction, a node failure, or a rollout. Efficient scheduling is essential for scaling applications and maintaining high availability.

For each pod, the scheduler works in two phases. Filtering removes the nodes that cannot run the pod, and scoring ranks the remaining nodes so that the pod can be bound to the best candidate. Resource constraints are a primary filter: a node is feasible only if its unreserved CPU, memory, and ephemeral storage cover the pod’s requests. Application requirements, such as specific hardware, are expressed through node labels and are also factored in. Node affinity steers pods toward particular nodes, while pod affinity and anti-affinity place pods relative to one another based on dependencies or isolation needs. The scheduler’s capabilities are further extended by pod priority and preemption.
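The filter-then-score idea can be sketched in a few lines of Python. This is a toy model, not the scheduler's actual code: the `Node` and `PodRequest` types, the node names, and the scoring rule (prefer the node left with the largest free fraction) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # unreserved CPU in millicores (hypothetical figure)
    free_mem_mi: int  # unreserved memory in MiB (hypothetical figure)


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int


def feasible(node, pod):
    """Filtering: keep a node only if the pod's requests fit."""
    return node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi


def score(node, pod):
    """Scoring (toy rule): average fraction of free resources left after placement."""
    cpu_left = (node.free_cpu_m - pod.cpu_m) / max(node.free_cpu_m, 1)
    mem_left = (node.free_mem_mi - pod.mem_mi) / max(node.free_mem_mi, 1)
    return (cpu_left + mem_left) / 2


def pick_node(nodes, pod):
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None  # no feasible node: the pod would stay Pending
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("node-c", 4000, 2048),
]
pod = PodRequest(cpu_m=1000, mem_mi=2048)
print(pick_node(nodes, pod))  # node-b
```

Here `node-a` is filtered out because it lacks CPU, and `node-b` outscores `node-c` because placing the pod on `node-c` would use all of its free memory.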

Effective use of the scheduler directly affects application performance and cluster stability. Administrators who understand its inputs can achieve better resource utilization, which leads to cost savings and improved application responsiveness. Its constraint mechanisms give fine-grained control over placement, so deployment strategies can be tailored to the needs of each application, even in large-scale and complex deployments.

How to Optimize Your Kubernetes Deployment Strategy Using the Scheduler

Efficiently managing Kubernetes deployments hinges on giving the scheduler accurate information. Resource requests and limits are foundational. A request is the amount of a resource the pod is guaranteed, and it is what the scheduler reserves on a node. A limit is the maximum a container may consume; it is enforced on the node at runtime and protects overall cluster stability. The scheduler bases placement decisions on requests, not limits: a pod fits on a node only if its requests fit within the node’s remaining allocatable resources. Defining requests properly makes pod behavior predictable and prevents resource starvation. Omitting them, or setting them far from real usage, leads to overcommitted nodes, unpredictable performance, and pods that cannot be scheduled.
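To make the distinction concrete, the sketch below adds up the requests in a pod specification, since requests are what count for placement. The pod, its container names, and its images are made up, and the parser is deliberately simplified: it handles only millicore CPU values and the `Ki`, `Mi`, and `Gi` memory suffixes, and it ignores init containers and pod overhead.

```python
def parse_cpu(quantity):
    """Convert a CPU quantity such as "500m" or "2" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


_MEM_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_memory(quantity):
    """Convert a memory quantity such as "256Mi" to bytes (binary suffixes only)."""
    for suffix, factor in _MEM_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


# Hypothetical pod spec, written as the dict that the YAML manifest would parse to.
pod = {
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "example.com/app:1.0",  # placeholder image
                "resources": {
                    "requests": {"cpu": "250m", "memory": "256Mi"},
                    "limits": {"cpu": "500m", "memory": "512Mi"},
                },
            },
            {
                "name": "sidecar",
                "image": "example.com/sidecar:1.0",  # placeholder image
                "resources": {
                    "requests": {"cpu": "100m", "memory": "64Mi"},
                    "limits": {"memory": "128Mi"},
                },
            },
        ]
    }
}


def total_requests(pod):
    """Sum container requests; limits play no part in this total."""
    cpu = mem = 0
    for container in pod["spec"]["containers"]:
        requests = container.get("resources", {}).get("requests", {})
        cpu += parse_cpu(requests.get("cpu", "0"))
        mem += parse_memory(requests.get("memory", "0"))
    return cpu, mem


cpu_m, mem_bytes = total_requests(pod)
print(cpu_m, mem_bytes // 2**20)  # 350 320
```

The pod needs 350 millicores and 320 MiB to be placed, even though its containers may burst up to their limits once running.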

Affinity and anti-affinity offer granular control over pod placement. Node affinity constrains scheduling to nodes with specific labels, which is useful for stateful applications or those requiring specific hardware. For example, a database pod might require a node labeled `disktype=ssd`. Pod anti-affinity, conversely, keeps pods away from nodes (or zones) that already run pods with matching labels. This is crucial for high-availability deployments, where spreading replicas across nodes improves resilience. Both kinds of rule come in a required form, which the scheduler must satisfy, and a preferred form, which it only tries to satisfy. An overly strict required rule can leave pods stuck in Pending, so test and validate these rules before relying on them.

Beyond affinity and anti-affinity, taints and tolerations provide another layer of control. A taint marks a node so that it repels pods, while a toleration on a pod allows it to be scheduled despite a matching taint. This mechanism lets you dedicate nodes to specific purposes, such as sensitive workloads or nodes running a particular operating system. Pods without a matching toleration are not scheduled onto nodes tainted with the `NoSchedule` effect, and the `NoExecute` effect additionally evicts pods that are already running there. A toleration only permits placement; to also attract pods to the dedicated nodes, combine it with node affinity. Pod priority and preemption, in turn, ensure critical applications receive resources even under pressure: the scheduler orders pending pods by the value of their priority class, and a high-priority pod that cannot be placed can preempt lower-priority ones.
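The matching rule between taints and tolerations is easy to get wrong, so a small model may help. The sketch below mirrors the documented semantics as I understand them (an omitted operator means `Equal`, an empty effect on a toleration matches every effect, and `Exists` with no key tolerates everything); the `dedicated=gpu` taint is a hypothetical example.

```python
def tolerates(toleration, taint):
    """Return True if a single toleration matches a single taint."""
    effect = toleration.get("effect")
    if effect and effect != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")
    if operator == "Exists":
        # An empty key with Exists tolerates every taint.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    return (
        toleration.get("key") == taint["key"]
        and toleration.get("value", "") == taint.get("value", "")
    )


def schedulable(pod_tolerations, node_taints):
    """A node is ruled out if any hard taint on it is not tolerated."""
    for taint in node_taints:
        if taint["effect"] == "PreferNoSchedule":
            continue  # soft preference, not a hard filter
        if not any(tolerates(t, taint) for t in pod_tolerations):
            return False
    return True


# Hypothetical taint reserving a node for GPU workloads.
gpu_taint = {"key": "dedicated", "value": "gpu", "effect": "NoSchedule"}
gpu_toleration = {
    "key": "dedicated",
    "operator": "Equal",
    "value": "gpu",
    "effect": "NoSchedule",
}

print(schedulable([], [gpu_taint]))                # False
print(schedulable([gpu_toleration], [gpu_taint]))  # True
```

A pod carrying `gpu_toleration` may land on the tainted node, but nothing here forces it to; that is the job of node affinity.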

Exploring Advanced Kubernetes Scheduler Features and Configurations

Several related features fine-tune resource allocation and scheduling. Resource quotas let administrators cap the total resources that a namespace may request, preventing one application from hogging capacity and ensuring fair distribution across the cluster. Quotas are enforced by the API server when objects are created, not by the scheduler, but they bound the demand that the scheduler has to place. This is particularly important in multi-tenant environments where different teams share the same cluster.

Priority classes provide another layer of control. A PriorityClass maps a name to an integer value, and pods reference it by name. The scheduler orders its queue of pending pods by that value, so higher-priority pods are scheduled first and may preempt lower-priority pods when resources are limited. This feature is vital for business-critical applications that require consistent uptime. Configure priority classes with care: guaranteeing capacity for high-priority applications comes at the cost of evicting less critical workloads, and if every workload is marked critical, priority stops being meaningful.
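As an illustration, the following sketch builds a PriorityClass and a pod that references it, then prints both as JSON, which `kubectl` accepts as well as YAML. The class name, priority value, pod name, and image are placeholders chosen for this example, not recommendations.

```python
import json

# Hypothetical priority class; the name and value are illustrative only.
priority_class = {
    "apiVersion": "scheduling.k8s.io/v1",
    "kind": "PriorityClass",
    "metadata": {"name": "business-critical"},
    "value": 100000,
    "globalDefault": False,
    "description": "For workloads that must keep running under resource pressure.",
}

# A pod opts in by naming the class in spec.priorityClassName.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "orders-db"},
    "spec": {
        "priorityClassName": priority_class["metadata"]["name"],
        "containers": [{"name": "db", "image": "example.com/db:1.0"}],
    },
}

manifest = json.dumps(
    {"apiVersion": "v1", "kind": "List", "items": [priority_class, pod]},
    indent=2,
)
print(manifest)
```

Generating manifests from code like this is one option among several; many teams write the equivalent YAML by hand or with a templating tool.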

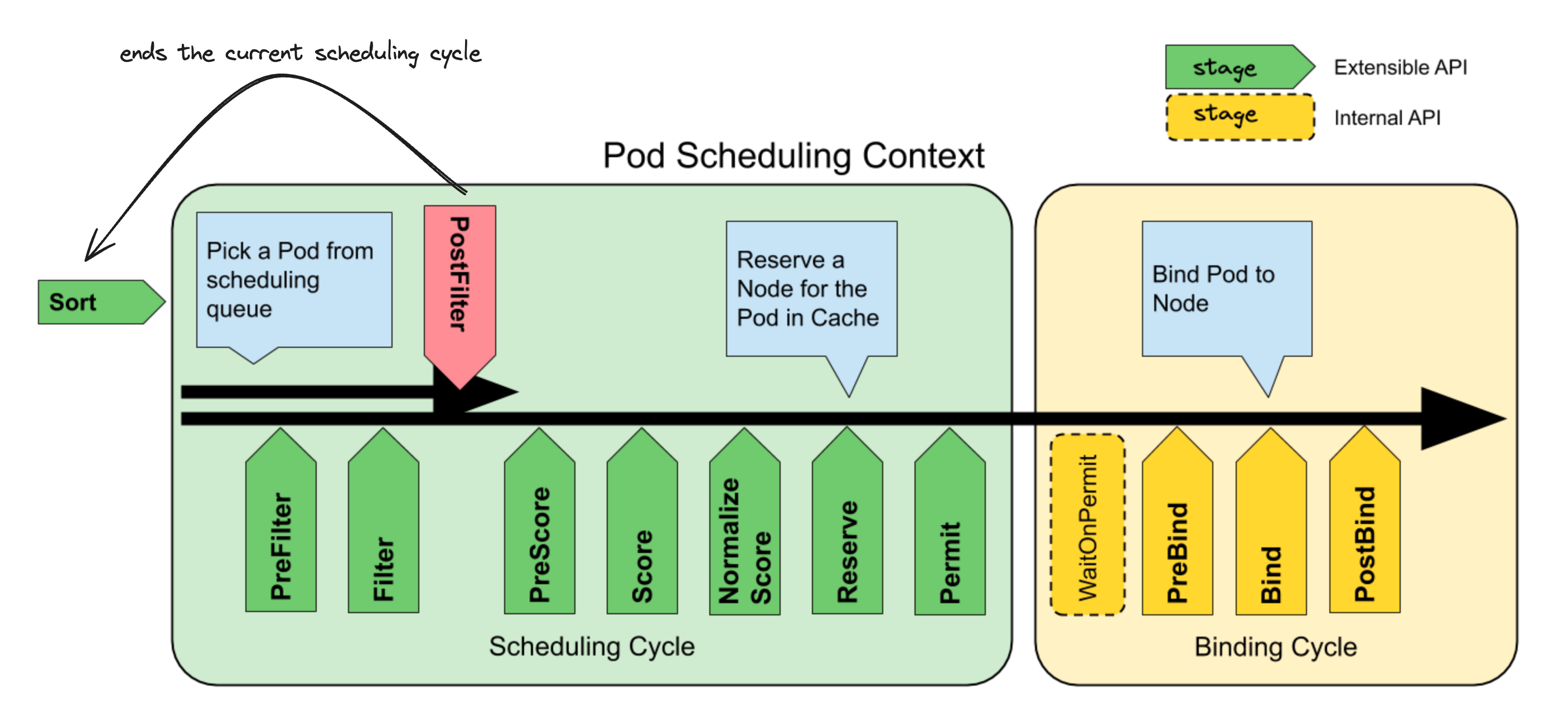

The scheduler can also be extended or replaced. While the default scheduler is robust, specialized workloads may call for tailored scheduling logic. Options include writing plugins for the scheduling framework, building custom controllers, or running a third-party scheduler alongside the default one. For example, a custom scoring plugin could rank nodes by custom metrics or external signals, allowing more application-aware decisions. Implementing such solutions requires advanced Kubernetes expertise and a solid understanding of the underlying architecture. External schedulers can provide functionality that the default scheduler does not support natively. Whether to adopt these options hinges on a cost-benefit analysis: the added complexity must be justified by the improvement in application deployment and management. Monitoring and log analysis are critical for diagnosing issues that arise from these configurations.

Analyzing Kubernetes Scheduler Performance and Identifying Bottlenecks

Monitoring the scheduler’s performance is crucial for maintaining a healthy and efficient cluster, because it lets administrators address bottlenecks before they affect application availability. Three metrics matter most. Scheduling latency is the time the scheduler takes to assign a pod to a node; sustained high latency warrants investigation. Resource utilization, covering CPU, memory, and storage on each node, shows where contention is building, and large imbalances between nodes hurt both scheduling efficiency and application performance. The placement success rate is the share of pods that end up bound to a suitable node; a low rate points to oversized resource requests, overly strict node constraints, or insufficient capacity in the cluster.
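Two of these metrics can be approximated from pod objects alone. The sketch below assumes pod data shaped like the API's JSON output, using the `metadata.creationTimestamp` field and the `PodScheduled` condition; the two sample pods are invented. Measuring from creation to the `PodScheduled` transition includes time spent waiting in the queue, so treat the result as an approximation rather than the scheduler's own latency metric.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # timestamp layout used in the sample data


def scheduling_latency_seconds(pod):
    """Seconds from pod creation to PodScheduled=True, or None if unscheduled."""
    created = datetime.strptime(pod["metadata"]["creationTimestamp"], TIME_FORMAT)
    for condition in pod.get("status", {}).get("conditions", []):
        if condition["type"] == "PodScheduled" and condition["status"] == "True":
            scheduled = datetime.strptime(condition["lastTransitionTime"], TIME_FORMAT)
            return (scheduled - created).total_seconds()
    return None


def placement_success_rate(pods):
    """Fraction of pods that have been bound to a node."""
    if not pods:
        return None
    placed = sum(1 for p in pods if scheduling_latency_seconds(p) is not None)
    return placed / len(pods)


# Invented sample data for illustration.
pods = [
    {
        "metadata": {"name": "web-1", "creationTimestamp": "2024-05-01T10:00:00Z"},
        "status": {"conditions": [
            {"type": "PodScheduled", "status": "True",
             "lastTransitionTime": "2024-05-01T10:00:02Z"},
        ]},
    },
    {
        "metadata": {"name": "web-2", "creationTimestamp": "2024-05-01T10:00:00Z"},
        "status": {"conditions": [
            {"type": "PodScheduled", "status": "False",
             "lastTransitionTime": "2024-05-01T10:00:01Z"},
        ]},
    },
]

print(scheduling_latency_seconds(pods[0]))  # 2.0
print(placement_success_rate(pods))         # 0.5
```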

Common scheduling bottlenecks stem from resource contention. When nodes have too little unreserved capacity, pods are delayed or fail to schedule. Inflated resource requests in pod definitions make this worse, because the scheduler reserves the requested amount whether or not the pod uses it. Poorly chosen placement rules, such as missing affinity or anti-affinity constraints, can concentrate pods on a few nodes and leave others idle. Insufficient node capacity is another frequent problem; adding nodes or upgrading existing nodes relieves the scarcity. Reviewing pod specifications so that requests track real usage, and configuring taints and tolerations so that workloads land on the intended nodes, improves node utilization and overall cluster performance.

Troubleshooting scheduler issues combines monitoring tools with log analysis. The Kubernetes dashboard, `kubectl`, and cloud provider monitoring solutions provide insight into scheduler behavior and resource utilization, and the scheduler’s logs help pinpoint specific errors or delays. Strategies for improvement include right-sizing resource requests and limits in pod definitions, refining affinity and anti-affinity rules, and using taints and tolerations to reserve nodes for particular workloads. Regular reviews of utilization metrics, coupled with periodic analysis of scheduler logs, catch performance degradation early.

Deep Dive into Pod Prioritization and Preemption in Kubernetes

Kubernetes uses pod priority and preemption to manage resource allocation in resource-constrained environments, so that critical applications keep running when resources are scarce. A pod’s priority is an integer taken from the PriorityClass it references; pods that reference none get the cluster default, which is zero unless a global default class is defined. Quality of service (QoS) classes are a separate mechanism: they are derived from requests and limits and relate to how pods are treated when a node itself runs short of resources, not to the scheduler’s preemption decisions. Higher-priority pods are placed ahead of lower-priority ones in the scheduling queue, and if necessary the scheduler can preempt lower-priority pods to free up resources for them.

Preemption reclaims resources from lower-priority pods to make room for a higher-priority one. When a higher-priority pod is pending and no node can fit it, the scheduler looks for a node where removing one or more lower-priority pods would make it fit. Those pods are then evicted, and the pending pod is scheduled once the resources are free. Evicted pods are not restarted by the scheduler itself; if they belong to a controller such as a Deployment, the controller creates replacements, which wait in Pending until capacity is available. Understanding priority classes and the preemption policy is therefore crucial for mission-critical applications that demand guaranteed resource availability.
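Victim selection can be illustrated with a deliberately simplified model. The sketch below considers a single node and CPU only, and it evicts the lowest-priority pods first until the pending pod fits. The real scheduler weighs more factors (for example PodDisruptionBudgets and graceful termination), so this is an assumption-laden toy, and the pod names and numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class RunningPod:
    name: str
    priority: int
    cpu_m: int  # CPU request in millicores


def pick_victims(node_cpu_m, running, pending_priority, pending_cpu_m):
    """Return names of pods to evict so the pending pod fits, or None if impossible."""
    free = node_cpu_m - sum(p.cpu_m for p in running)
    victims = []
    # Only pods with strictly lower priority may be preempted; try the lowest first.
    candidates = sorted(
        (p for p in running if p.priority < pending_priority),
        key=lambda p: p.priority,
    )
    for pod in candidates:
        if free >= pending_cpu_m:
            break
        victims.append(pod.name)
        free += pod.cpu_m
    return victims if free >= pending_cpu_m else None


running = [
    RunningPod("web", priority=100, cpu_m=1500),
    RunningPod("batch", priority=10, cpu_m=1500),
    RunningPod("cache", priority=1000, cpu_m=500),
]

# A 4000m node with 500m free; a priority-1000 pod asks for 2000m.
print(pick_victims(4000, running, pending_priority=1000, pending_cpu_m=2000))  # ['batch']
```

Evicting `batch` alone frees enough CPU, so `web` keeps running, and `cache` is never a candidate because its priority is not lower than the pending pod's.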

Consider a database pod that requires significant resources and a web server pod. Giving the database a higher priority class means it is considered first, even if the web server is already running. If no node has room for the database, the scheduler may preempt the web server to accommodate it. Provided the web server is managed by a controller, a replacement pod is created and scheduled when resources are available. This shows how priority and preemption protect the availability of the most important workloads at the expense of less important ones, which is what makes them useful for managing complex deployments.

Understanding Node Affinity and Anti-Affinity: Optimizing Resource Allocation in the Kubernetes Scheduler

Node affinity and pod anti-affinity give precise control over pod placement by letting administrators define rules about where pods should or should not be scheduled. Node affinity directs pods toward nodes with specific characteristics. For instance, a database pod might be required to run on nodes labeled as having high storage capacity, which keeps it away from nodes where it would perform poorly.

Pod anti-affinity, conversely, keeps pods from being co-located. A rule names a label selector and a topology key, such as the node hostname, and tells the scheduler to avoid any topology domain that already runs a matching pod. This is particularly useful for applications that need high availability and fault tolerance. Consider a stateless application with several replicas: anti-affinity prevents all of them from landing on a single node, so if one node fails, the remaining replicas keep the application operational. The scheduler uses these rules to distribute pods and reduce the risk of a single point of failure. To keep pods off particular nodes, rather than away from other pods, use node affinity with the `NotIn` or `DoesNotExist` operators, or use taints.
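The effect of the topology key is easiest to see in a small model. In the sketch below, a node is ruled out when its topology domain already hosts a pod matching the selector. The node inventory is invented, and only required anti-affinity with simple label equality is modeled; the two label keys are the well-known hostname and zone labels.

```python
HOSTNAME = "kubernetes.io/hostname"
ZONE = "topology.kubernetes.io/zone"


def matches(selector, labels):
    """matchLabels semantics: every selector pair must be present on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def allowed_nodes(nodes, selector, topology_key=HOSTNAME):
    """Names of nodes whose topology domain hosts no pod matching the selector."""
    blocked = {
        node["labels"][topology_key]
        for node in nodes
        if any(matches(selector, pod_labels) for pod_labels in node["pods"])
    }
    return [n["name"] for n in nodes if n["labels"][topology_key] not in blocked]


# Invented cluster state: each node lists the labels of the pods it runs.
nodes = [
    {"name": "node-1", "labels": {HOSTNAME: "node-1", ZONE: "zone-a"},
     "pods": [{"app": "web"}]},
    {"name": "node-2", "labels": {HOSTNAME: "node-2", ZONE: "zone-a"},
     "pods": [{"app": "db"}]},
    {"name": "node-3", "labels": {HOSTNAME: "node-3", ZONE: "zone-b"},
     "pods": []},
]

print(allowed_nodes(nodes, {"app": "web"}))                     # ['node-2', 'node-3']
print(allowed_nodes(nodes, {"app": "web"}, topology_key=ZONE))  # ['node-3']
```

With the hostname key, a new `web` replica only avoids `node-1`; with the zone key, it avoids all of `zone-a`.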

Both features are built on labels and selectors. Nodes are labeled with key-value pairs describing their attributes, and pod specifications carry selectors that target those labels, which allows fine-grained placement rules. For instance, a pod can be restricted to nodes carrying the label `disktype=ssd`. Similarly, pod anti-affinity rules prevent pods with matching labels from being scheduled into the same topology domain. The scheduler evaluates these rules each time it places a pod.
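How a node affinity rule is evaluated against node labels can be sketched as follows. The model covers the `In`, `NotIn`, `Exists`, and `DoesNotExist` operators (the numeric `Gt` and `Lt` operators are left out), with expressions inside a term combined by AND and separate terms combined by OR. The `spot` label is a hypothetical example.

```python
def expression_matches(expression, labels):
    """Evaluate one matchExpressions entry against a node's labels."""
    key = expression["key"]
    operator = expression["operator"]
    values = expression.get("values", [])
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # A node without the key also satisfies NotIn.
        return labels.get(key) not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not modeled in this sketch: {operator}")


def node_matches(terms, labels):
    """Terms are ORed; expressions within a term are ANDed."""
    return any(
        all(expression_matches(e, labels) for e in term["matchExpressions"])
        for term in terms
    )


# Require SSD nodes that do not carry the hypothetical "spot" label.
terms = [
    {
        "matchExpressions": [
            {"key": "disktype", "operator": "In", "values": ["ssd"]},
            {"key": "spot", "operator": "DoesNotExist"},
        ]
    }
]

print(node_matches(terms, {"disktype": "ssd"}))                  # True
print(node_matches(terms, {"disktype": "hdd"}))                  # False
print(node_matches(terms, {"disktype": "ssd", "spot": "true"}))  # False
```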

Integrating External Schedulers and Custom Controllers for Advanced Orchestration

Extending the scheduler beyond its built-in functionality can help with complex deployments. An external scheduler lets an organization apply specialized algorithms or tailor scheduling decisions to unique application requirements. This is particularly valuable when the default scheduler cannot fully express highly specific resource constraints or complex application dependencies. For example, an organization might run a custom scheduler that optimizes for particular hardware resources or network topologies.
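Pods are routed to a particular scheduler through the `spec.schedulerName` field, which defaults to `default-scheduler`. The sketch below builds such a pod and shows the check a scheduler would make to decide whether a pod is its responsibility. The scheduler name `topology-aware-scheduler`, the pod name, and the image are hypothetical.

```python
DEFAULT_SCHEDULER = "default-scheduler"


def pod_for_scheduler(name, image, scheduler_name=DEFAULT_SCHEDULER):
    """Build a minimal pod manifest that names the scheduler responsible for it."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "schedulerName": scheduler_name,
            "containers": [{"name": name, "image": image}],
        },
    }


def is_responsible(pod, scheduler_name):
    """A scheduler handles pods that name it and have no node assigned yet."""
    spec = pod["spec"]
    named = spec.get("schedulerName", DEFAULT_SCHEDULER)
    return named == scheduler_name and not spec.get("nodeName")


# Hypothetical custom scheduler and workload.
pod = pod_for_scheduler(
    "trainer", "example.com/trainer:1.0", scheduler_name="topology-aware-scheduler"
)

print(is_responsible(pod, "topology-aware-scheduler"))  # True
print(is_responsible(pod, DEFAULT_SCHEDULER))           # False
```

If no running scheduler matches the name in the pod, nothing places it and the pod stays Pending, which is a common surprise when a custom scheduler is down or misnamed.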

Developing custom scheduling logic is another way to extend the scheduler. Custom controllers or scheduler plugins can implement placement logic based on factors the default scheduler does not consider. This flexibility matters for applications with stringent requirements, such as specific hardware features or strict data locality constraints. Well-designed extensions can improve cluster efficiency and application performance, but each one is additional code that has to be tested and maintained.

Integrating an external scheduler or developing custom scheduling logic requires a strong understanding of the Kubernetes API and the scheduler’s internals, along with careful planning and testing to ensure stability and reliability. Where the default scheduler falls short, the investment can pay off in increased control, better resource utilization, and improved application performance.

Troubleshooting Common Kubernetes Scheduler Issues and Best Practices

Troubleshooting a misbehaving scheduler often begins with key metrics: resource utilization, scheduling latency, and placement success rate. High scheduling latency may indicate resource contention or an overloaded scheduler. A low placement success rate suggests insufficient resources or misconfigured node selectors. Tools such as kube-state-metrics and Prometheus can collect and chart these metrics, making it possible to spot problems before they affect applications.

Common issues include pods failing to schedule because of insufficient resources, conflicting node affinity rules, or missing tolerations. Scheduling delays can stem from resource starvation, complex scheduling constraints, or scheduler configuration issues. Allocation problems often result from inaccurate resource requests and limits, which lead to inefficient utilization and potential pod evictions. To address these, start with the events on the pending pod (`kubectl describe pod` lists them), which state why no node was suitable. Then review the requests and limits of each pod, check that node taints and pod tolerations match, and verify that affinity and anti-affinity rules can actually be satisfied by the nodes in the cluster. Finally, review the scheduler’s own configuration; proper tuning can significantly improve performance.

Keeping the scheduler healthy requires proactive monitoring, accurate resource allocation, and effective troubleshooting. Review cluster resource usage regularly to identify bottlenecks before they affect application performance. Set up alerts for critical scheduler events, such as pods that remain unschedulable. Audit the scheduler configuration periodically so that it keeps pace with the cluster’s evolving needs. Following these practices improves the performance and reliability of scheduling and, with it, of container orchestration as a whole.