What is a Reverse Proxy in Kubernetes?

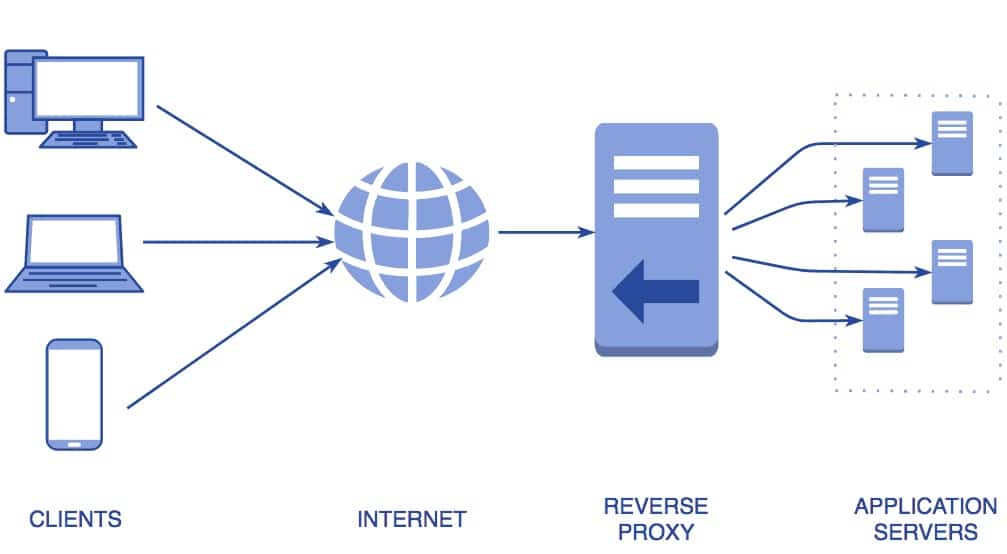

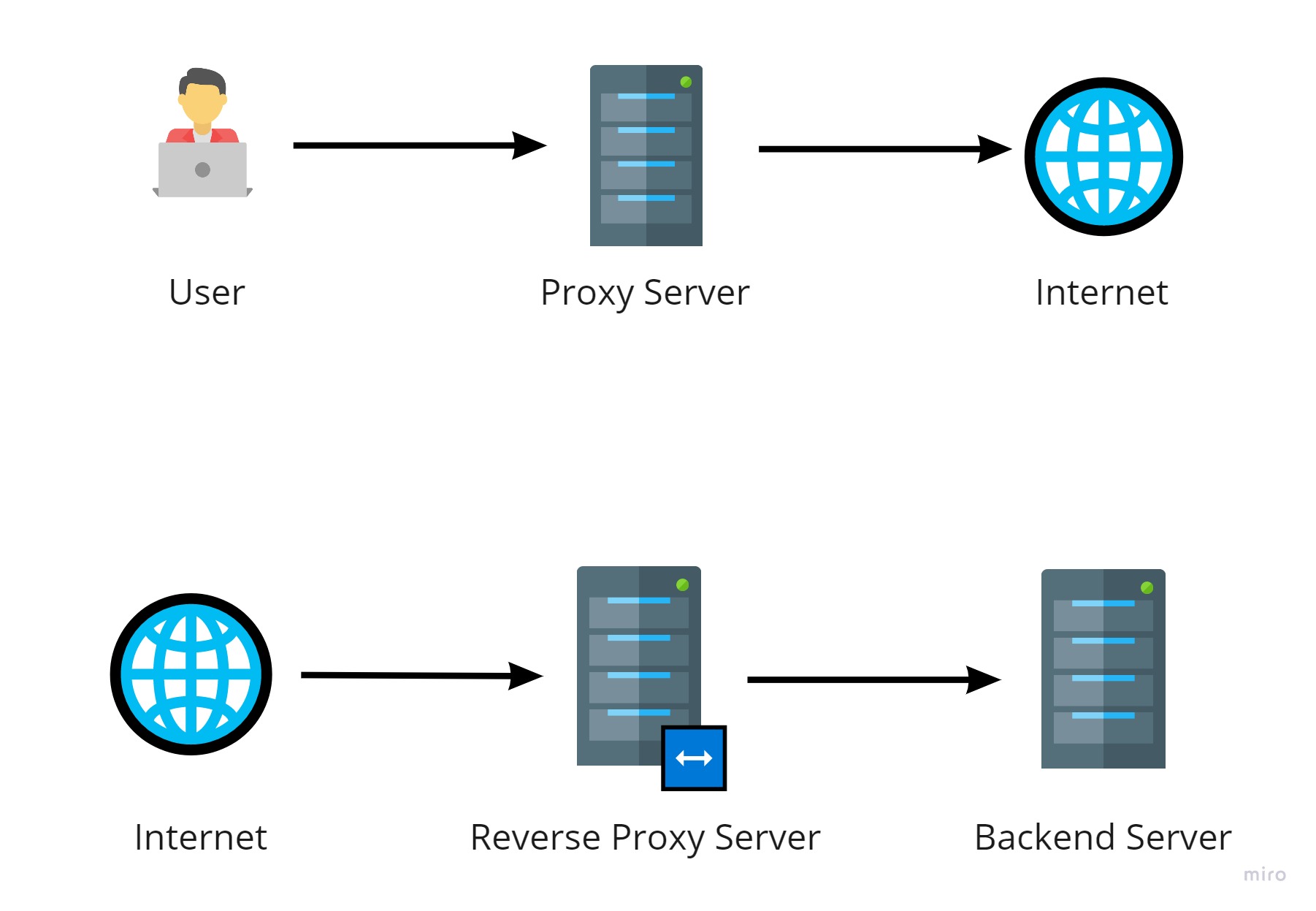

A reverse proxy in Kubernetes is a server that sits between clients and backend applications. It receives incoming requests from clients, forwards them to the appropriate backend, and returns the responses to the clients. This process is often called request routing or request forwarding.

In the context of Kubernetes, a reverse proxy is often used to manage and direct traffic to various services and pods running within a cluster. By doing so, it provides numerous benefits, such as load balancing, security, and simplified service discovery. These advantages make a reverse proxy an essential component of many Kubernetes-based architectures.

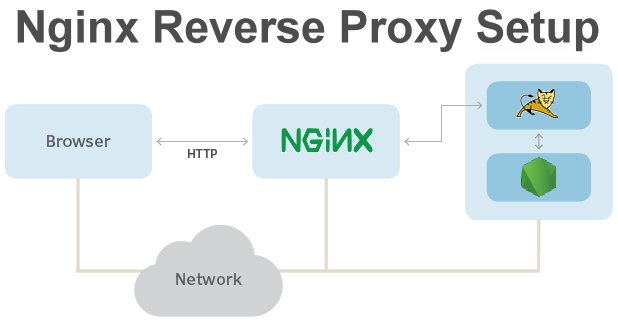

A reverse proxy in Kubernetes is typically implemented by an Ingress controller. The controller reads Ingress resources, which declare the rules for routing traffic to different services based on the request’s hostname and URL path; some controllers extend this to other request attributes. Popular reverse proxies with Kubernetes Ingress controllers include NGINX, Traefik, and HAProxy, each offering its own features for different use cases and requirements.
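The host and path matching described above can be sketched in a few lines of Python. This is a simplified model, not how any particular controller is implemented: the `Rule` class, the `route` function, and the example hostnames and service names are all hypothetical. It assumes exact host matching and element-wise path prefix matching, with the longest matching path winning.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    host: str     # exact hostname to match (hypothetical example values below)
    path: str     # path prefix, e.g. "/api"
    service: str  # name of the backend Service that should receive the request


def _path_matches(prefix: str, request_path: str) -> bool:
    """Element-wise prefix match: "/api" matches "/api" and "/api/v1", not "/apix"."""
    prefix_parts = [p for p in prefix.split("/") if p]
    request_parts = [p for p in request_path.split("/") if p]
    return request_parts[: len(prefix_parts)] == prefix_parts


def route(rules: list[Rule], host: str, path: str) -> Optional[str]:
    """Return the service for the longest matching path on the given host."""
    candidates = [r for r in rules if r.host == host and _path_matches(r.path, path)]
    if not candidates:
        return None  # no rule applies; a real proxy would return a default backend or 404
    return max(candidates, key=lambda r: len(r.path)).service


# Hypothetical routing table: two hostnames sharing one proxy.
RULES = [
    Rule("shop.example.com", "/", "storefront"),
    Rule("shop.example.com", "/api", "orders-api"),
    Rule("blog.example.com", "/", "blog"),
]
```

With this table, a request for `shop.example.com/api/v1/orders` resolves to `orders-api`, while any other path on the same host falls through to `storefront`.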

How Kubernetes Reverse Proxy Differs from Traditional Reverse Proxies

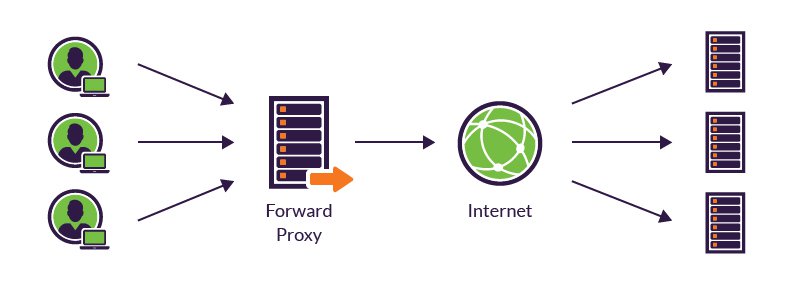

A Kubernetes reverse proxy differs from a traditional reverse proxy in several significant ways that make it well suited to modern, dynamic, containerized environments. These include:

- Dynamic nature: Traditional reverse proxies typically require manual configuration and management, while a Kubernetes reverse proxy watches the cluster and adapts automatically to changes such as the creation, deletion, or scaling of services and pods. This self-configuring ability reduces the administrative burden and helps keep routing correct as the cluster changes.

- Auto-scaling capabilities: Because the reverse proxy itself runs as pods, it can be scaled up or down automatically with the workload, for example by a Horizontal Pod Autoscaler, which helps maintain performance and resource utilization. In contrast, traditional reverse proxies often require manual scaling, which can lead to under- or over-provisioning of resources.

- Integration with containerized environments: Kubernetes reverse proxy is designed to work seamlessly with containerized applications, enabling easy deployment, management, and scaling of microservices architectures. Traditional reverse proxies may not offer the same level of integration and compatibility with container runtimes and orchestration tools.

- Ingress resource: A Kubernetes reverse proxy uses Ingress resources to define the rules for routing traffic to different services. Because these rules are declared through the Kubernetes API alongside the applications they serve, configuration is simpler to manage than the hand-edited configuration files common with traditional reverse proxies.

By leveraging these unique features, Kubernetes reverse proxy offers a more flexible, scalable, and efficient solution for managing and directing client requests in modern containerized environments.
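The self-configuring behaviour described in the list above can be pictured as a reconcile step: the controller compares the backends it currently routes to with the endpoints it observes in the cluster and applies the difference. The sketch below is a simplified illustration under that assumption; the `reconcile` function and the addresses are hypothetical, and real controllers react to change notifications from the Kubernetes API rather than comparing plain dictionaries.

```python
def reconcile(
    current: dict[str, set[str]],
    observed: dict[str, set[str]],
) -> dict[str, dict[str, set[str]]]:
    """Compute the per-service backend changes needed to move `current` to `observed`."""
    changes: dict[str, dict[str, set[str]]] = {}
    for service in current.keys() | observed.keys():
        have = current.get(service, set())
        want = observed.get(service, set())
        if have != want:
            # Backends to start routing to, and backends to stop routing to.
            changes[service] = {"add": want - have, "remove": have - want}
    return changes


# What the proxy currently routes to (hypothetical pod addresses).
current_upstreams = {"web": {"10.0.0.1:8080", "10.0.0.2:8080"}}

# What the cluster reports after one "web" pod was replaced and "api" was deployed.
observed_endpoints = {
    "web": {"10.0.0.2:8080", "10.0.0.3:8080"},
    "api": {"10.0.1.1:9000"},
}

changes = reconcile(current_upstreams, observed_endpoints)
```

Here `changes` tells the proxy to add `10.0.0.3:8080` and drop `10.0.0.1:8080` for `web`, and to start routing to the new `api` backend, with no manual edits to the proxy configuration.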

Benefits of Using a Reverse Proxy in Kubernetes

A reverse proxy in Kubernetes offers several advantages that make it an essential component of many containerized environments. Some of these benefits include:

- Load balancing: A Kubernetes reverse proxy can distribute incoming traffic across multiple backend pods so that no single pod is overwhelmed, which improves availability and resilience.

- Security: By acting as an intermediary between clients and backend servers, a reverse proxy can provide an additional layer of security. It can terminate SSL/TLS connections, offload encryption and decryption tasks, and implement access control policies, web application firewalls, and other security measures.

- Simplified service discovery: Kubernetes reverse proxy can automatically discover and route traffic to the appropriate services and pods based on the Ingress resource configuration. This feature simplifies service discovery and reduces the need for manual configuration and management.

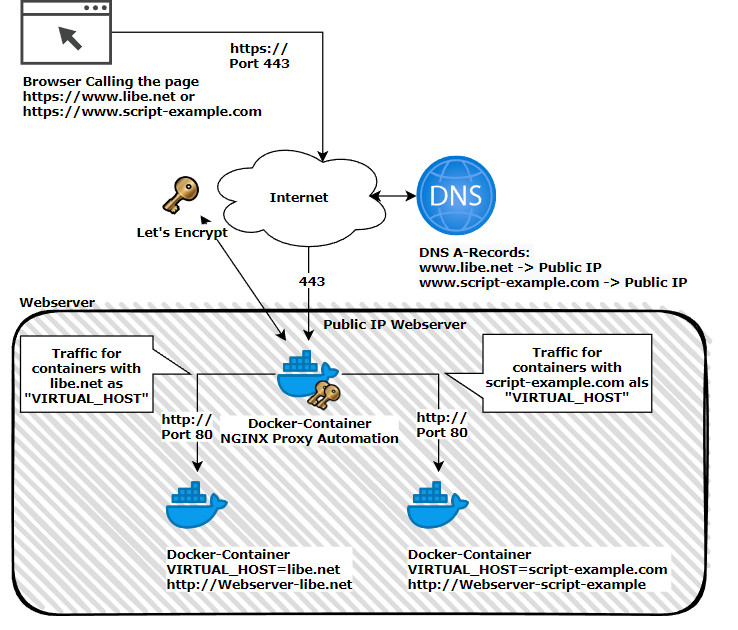

- Name-based virtual hosting: Kubernetes reverse proxy supports name-based virtual hosting, enabling the deployment of multiple websites or applications on a single IP address. This capability is particularly useful in shared hosting environments or when deploying microservices architectures.

- Request routing and transformation: Depending on the controller, a Kubernetes reverse proxy can route and transform requests based on criteria such as URL paths, headers, or query parameters. This feature enables advanced use cases, such as A/B testing, canary releases, and blue/green deployments.

By leveraging these benefits, a reverse proxy in Kubernetes can help organizations improve the performance, security, and reliability of their containerized applications and services.
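To make the load balancing benefit concrete, here is a minimal round-robin sketch. It is one of several strategies a proxy may use and is not taken from any specific controller; the `RoundRobinBalancer` class and the pod names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in a fixed rotation so each receives an equal share."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        # cycle() repeats the backend list endlessly in the same order.
        self._cycle = itertools.cycle(backends)

    def pick(self) -> str:
        """Return the backend that should handle the next request."""
        return next(self._cycle)
```

A balancer created with three hypothetical pods, `RoundRobinBalancer(["pod-a", "pod-b", "pod-c"])`, returns `pod-a`, `pod-b`, `pod-c`, and then starts over at `pod-a` on successive calls to `pick()`.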

Popular Kubernetes Reverse Proxy Solutions

Several reverse proxy solutions are widely used in Kubernetes environments, each offering unique features and capabilities. Some of the most popular solutions include:

- NGINX: NGINX is a widely adopted reverse proxy known for its high performance, stability, and scalability. It supports both HTTP and TCP traffic, enabling it to handle various workloads, such as web applications, APIs, and microservices. The NGINX Ingress Controller is a popular choice for Kubernetes environments, providing advanced features like session persistence, request and response buffering, and customizable logging.

- Traefik: Traefik is a modern and dynamic reverse proxy solution designed for cloud-native environments. It offers automatic service discovery, seamless integration with Kubernetes and other container orchestration tools, and support for various load balancing strategies. Traefik also provides a user-friendly dashboard for monitoring and managing reverse proxy configurations and can be easily extended with middleware plugins for added functionality.

- HAProxy: HAProxy is a high-performance and reliable reverse proxy known for its low resource consumption and robust feature set. It supports various load balancing algorithms, protocols, and encryption methods, making it a versatile choice for Kubernetes environments. An HAProxy Ingress Controller is available for Kubernetes, providing integration with the Kubernetes API and support for advanced features like TLS termination and connection pooling.

These popular Kubernetes reverse proxy solutions cater to various use cases and requirements, enabling organizations to choose the one that best fits their needs and preferences.

How to Configure a Reverse Proxy in Kubernetes

Configuring a reverse proxy in Kubernetes involves several steps, including creating a Deployment, a Service, and an Ingress resource. Here’s a brief overview of the process:

- Create a Deployment: A Deployment defines the desired state for a set of pod replicas, including the container images, resources, and environment variables. To run a reverse proxy, specify the proxy’s container image, such as NGINX, Traefik, or HAProxy, along with any configuration files or environment variables it needs. In practice, Ingress controllers are usually installed from the manifests or Helm charts their projects publish, which create this Deployment for you.

- Create a Service: A Service provides a stable IP address and DNS name for a set of pods, so clients can reach them even as individual pods come and go. For the reverse proxy, specify a selector that matches the pods created by the Deployment and a Service type: ClusterIP for access from inside the cluster only, or NodePort or LoadBalancer to expose the proxy outside the cluster.

- Create an Ingress resource: An Ingress resource defines the rules for routing external traffic to internal services based on the request’s hostname and URL path. Specify the Ingress class that selects the controller (the `ingressClassName` field), the rules that map hosts and paths to backend Services, and any TLS settings. Features such as rate limiting or logging are typically configured through controller-specific annotations.

By following these steps, you can configure a reverse proxy in Kubernetes that provides load balancing, security, and simplified service discovery for your containerized applications and services.
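As a rough illustration of how the three resources fit together, the sketch below builds them as Python dictionaries and prints them as a JSON list, a format `kubectl` accepts alongside YAML. Everything specific here is an assumption made for the example: a hypothetical backend application named `web`, the placeholder image `example.com/web:1.0`, the hostname `web.example.com`, and an Ingress controller already installed under the class name `nginx`.

```python
import json

APP = "web"               # hypothetical application name
HOST = "web.example.com"  # hypothetical hostname served through the proxy
CONTAINER_PORT = 8080     # port the application container listens on (assumed)
SERVICE_PORT = 80         # port the Service exposes inside the cluster

labels = {"app": APP}

# Deployment: desired state for two replicas of the backend pod.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": labels},
        "template": {
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {
                        "name": APP,
                        "image": "example.com/web:1.0",  # placeholder image
                        "ports": [{"containerPort": CONTAINER_PORT}],
                    }
                ]
            },
        },
    },
}

# Service: stable name and IP in front of the pods selected by label.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": APP},
    "spec": {
        "type": "ClusterIP",
        "selector": labels,
        "ports": [{"port": SERVICE_PORT, "targetPort": CONTAINER_PORT}],
    },
}

# Ingress: route every path on HOST to the Service above.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": APP},
    "spec": {
        "ingressClassName": "nginx",  # assumes a controller with this class exists
        "rules": [
            {
                "host": HOST,
                "http": {
                    "paths": [
                        {
                            "path": "/",
                            "pathType": "Prefix",
                            "backend": {
                                "service": {"name": APP, "port": {"number": SERVICE_PORT}}
                            },
                        }
                    ]
                },
            }
        ],
    },
}


def render() -> str:
    """Serialize all three resources as a single JSON List object."""
    manifests = {"apiVersion": "v1", "kind": "List", "items": [deployment, service, ingress]}
    return json.dumps(manifests, indent=2)


if __name__ == "__main__":
    print(render())
```

If the script were saved as `manifests.py` (a hypothetical filename), its output could be piped to `kubectl apply -f -`. The labels are shared between the Deployment and the Service on purpose: the Service only finds the pods if its selector matches the pod template’s labels.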

Best Practices for Implementing a Reverse Proxy in Kubernetes

Implementing a reverse proxy in Kubernetes can provide numerous benefits, but it’s essential to follow best practices to optimize performance, security, and reliability. Here are some recommendations:

- Use TLS termination: Terminating SSL/TLS connections at the reverse proxy can offload encryption and decryption tasks from the backend servers, reducing their resource consumption and improving their performance. TLS termination also enables centralized management of SSL/TLS certificates and configurations.

- Enable rate limiting: Implementing rate limiting at the reverse proxy can prevent abuse, reduce the risk of denial-of-service (DoS) attacks, and ensure fair resource allocation among clients. Limits are usually keyed on the client IP address or another client identifier and can cap request rates, concurrent connections, or bandwidth.

- Monitor logs: Regularly monitoring the logs of the reverse proxy can provide valuable insights into the traffic patterns, performance metrics, and security events. Analyzing logs can help identify issues, detect anomalies, and troubleshoot problems, enabling proactive maintenance and incident response.

- Implement access control policies: Configuring access control policies at the reverse proxy can restrict access to specific resources, services, or applications based on various criteria, such as IP addresses, authentication methods, or authorization levels. Access control policies can help prevent unauthorized access, reduce the attack surface, and improve the overall security posture.

- Use health checks: Health checks ensure that only healthy and responsive backends are used for load balancing and request forwarding. In Kubernetes, readiness probes on the backend pods determine which pods are listed as Service endpoints, and some proxies add their own checks based on HTTP response codes, response times, or custom health probes.

- Optimize caching: Caching frequently accessed resources, such as static files or API responses, at the reverse proxy can reduce the latency, improve the throughput, and reduce the resource consumption of the backend servers. Caching can be based on various criteria, such as content types, expiration times, or cache headers.

By following these best practices, organizations can ensure that their Kubernetes reverse proxy is optimized for performance, security, and reliability, providing a solid foundation for their containerized applications and services.
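Rate limiting is a good example of what happens behind such a setting. The sketch below shows a token bucket keyed on client IP, a common approach but not necessarily the algorithm any given proxy uses. The `TokenBucket` and `PerClientLimiter` classes are hypothetical, and the clock is injectable so the behaviour can be checked without waiting in real time.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)  # start with a full bucket
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill tokens for the elapsed time, capped at the bucket capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1  # spend one token on this request
            return True
        return False  # bucket empty: the proxy would typically answer 429 or 503


class PerClientLimiter:
    """Keep one independent bucket per client IP address."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self._rate = rate
        self._capacity = capacity
        self._clock = clock
        self._buckets: dict[str, TokenBucket] = {}

    def allow(self, client_ip: str) -> bool:
        if client_ip not in self._buckets:
            self._buckets[client_ip] = TokenBucket(self._rate, self._capacity, self._clock)
        return self._buckets[client_ip].allow()
```

With `rate=1.0` and `capacity=2`, a client can send two requests back to back, is rejected on the third, and is allowed again after roughly one second. Other clients are unaffected because each IP address has its own bucket.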

Real-World Use Cases of Kubernetes Reverse Proxy

Kubernetes reverse proxy is used in various production environments to provide load balancing, security, and simplified service discovery for containerized applications and services. Here are some examples of real-world use cases:

- Microservices architectures: In microservices architectures, Kubernetes reverse proxy can be used to route traffic between different microservices, ensuring high availability, resilience, and scalability. By implementing service discovery, load balancing, and health checks, Kubernetes reverse proxy can help organizations build and operate complex microservices applications with ease.

- Hybrid cloud setups: In hybrid cloud setups, Kubernetes reverse proxy can be used to route traffic between on-premises and cloud-based resources, enabling seamless integration and migration of applications and services. By implementing TLS termination, access control policies, and rate limiting, Kubernetes reverse proxy can help organizations ensure secure and efficient communication between different environments.

- Multi-tenant clusters: In multi-tenant clusters, a Kubernetes reverse proxy can route each tenant’s traffic to that tenant’s services, for example by giving each tenant its own hostname and keeping its Ingress resources in a dedicated namespace. Combined with network policies and resource quotas, which are enforced by Kubernetes itself rather than by the proxy, this helps organizations keep tenants isolated and operate multi-tenant clusters with confidence.

- API gateways: In API gateways, Kubernetes reverse proxy can be used to provide a unified entry point for multiple APIs, enabling centralized management, monitoring, and security. By implementing request routing, transformation, and aggregation, Kubernetes reverse proxy can help organizations build and operate scalable and resilient API gateways.

- Service meshes: In a service mesh, proxies provide a dedicated infrastructure layer for service-to-service communication, enabling advanced features such as service discovery, load balancing, and observability. The mesh typically injects a sidecar proxy next to each application container, and these data plane proxies are configured through control plane APIs.

These are just a few examples of how reverse proxies are used in production Kubernetes environments. By combining dynamic configuration, automatic scaling, and tight integration with containerized environments, a Kubernetes reverse proxy can help organizations build and operate modern, scalable, and resilient applications and services.

Challenges and Limitations of Kubernetes Reverse Proxy

While Kubernetes reverse proxy offers numerous benefits, it also comes with some challenges and limitations that organizations should be aware of. Here are some of the common issues and limitations:

- Complexity: Configuring and managing a reverse proxy in Kubernetes can be complex, requiring a deep understanding of various concepts, such as Deployments, Services, Ingress resources, and annotations. Organizations may need to invest in training, documentation, and support to ensure successful implementation and operation.

- Resource consumption: Running a reverse proxy in Kubernetes can consume significant resources, such as CPU, memory, and network bandwidth. Organizations should monitor the resource usage and adjust the configurations, such as the number of replicas, the resource requests and limits, and the caching policies, to optimize the performance and cost.

- Potential single points of failure: A reverse proxy in Kubernetes can become a potential single point of failure if not properly designed, implemented, and operated. Organizations should implement redundancy, high availability, and disaster recovery strategies, such as multiple replicas, load balancers, and backup and restore procedures, to ensure business continuity and resilience.

- Security risks: Implementing a reverse proxy in Kubernetes can introduce security risks, such as misconfigurations, vulnerabilities, and attacks. Organizations should follow best practices, such as using TLS termination, enabling rate limiting, implementing access control policies, and monitoring logs, to mitigate the risks and ensure the security and compliance of their applications and services.

By understanding these challenges and limitations, organizations can take proactive measures to address them and ensure the successful implementation and operation of Kubernetes reverse proxy in their production environments. With the right approach, Kubernetes reverse proxy can provide significant benefits, such as load balancing, security, and simplified service discovery, for containerized applications and services.