Docker Compose: A Powerful Tool for Local Development

Containerization has changed the way developers build, deploy, and manage applications. As its popularity has grown, so has the need to manage and orchestrate containers. This article compares two prominent options, Kubernetes Pods and Docker Compose, looking at their features, use cases, and performance to help you make an informed decision between them.

Docker Compose is a powerful tool designed for local development, enabling users to define and run multi-container applications with ease. Its lightweight nature and simple YAML syntax make it an ideal choice for small-scale projects and rapid prototyping. Docker Compose allows developers to create a .yml file that describes the services, networks, and volumes required for their application, simplifying the process of managing multiple containers.
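As a rough illustration of the services, networks, and volumes described above, here is a minimal sketch of a Compose file. The service names (`web`, `db`), image tags, port mapping, and password are assumptions chosen for the example, not values from any particular project.

```yaml
# docker-compose.yml: hypothetical two-service application
services:
  web:
    image: nginx:1.27          # example image; substitute your own
    ports:
      - "8080:80"              # host port 8080 -> container port 80
    networks:
      - backend
    depends_on:
      - db                     # start db before web
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example   # placeholder only; do not use in real setups
    volumes:
      - db-data:/var/lib/postgresql/data   # named volume for persistent data
    networks:
      - backend

networks:
  backend:                     # user-defined network shared by both services

volumes:
  db-data:                     # named volume managed by Docker
```

Both services join the same user-defined network, so `web` can reach the database at the hostname `db`.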

One of the primary advantages of Docker Compose is its simplicity. It is an excellent choice for developers who are new to containerization or working on projects with limited scope. Docker Compose’s straightforward configuration and ease of use make it an ideal tool for creating isolated development environments, testing, and debugging applications. Additionally, Docker Compose integrates seamlessly with Docker’s other tools, such as Docker Swarm and Docker Engine, providing a smooth transition as projects grow and evolve.

However, Docker Compose is not without its limitations. It is primarily designed for local development and may not be suitable for production environments. Although it offers basic scaling and container restart policies, it lacks orchestration features such as automatic rescheduling across hosts, auto-scaling, and rolling updates, which are essential for managing applications in large-scale, dynamic environments. For these reasons, Docker Compose may not be the best choice for complex, enterprise-level applications, and it is important to weigh the trade-offs when deciding between Kubernetes Pods and Docker Compose.

Kubernetes Pods: A Robust Solution for Production Environments


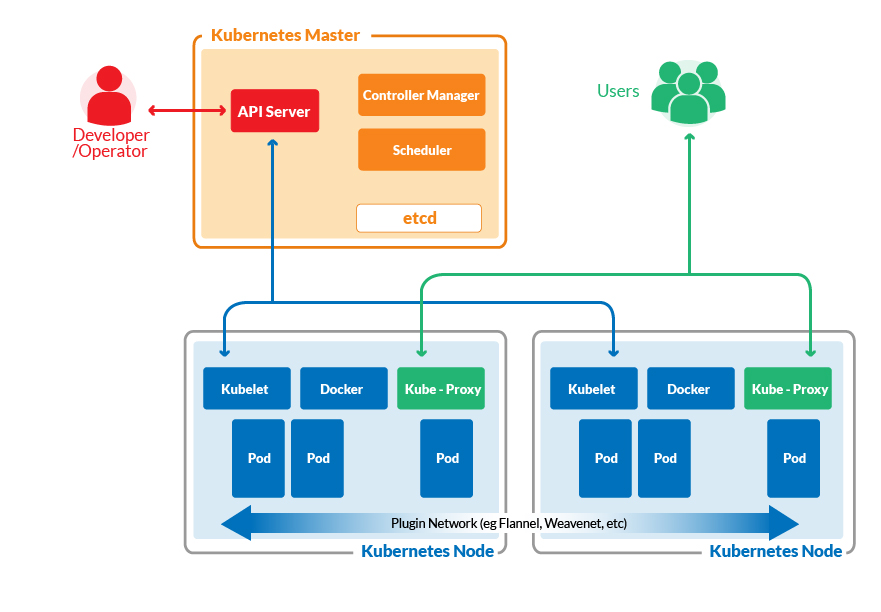

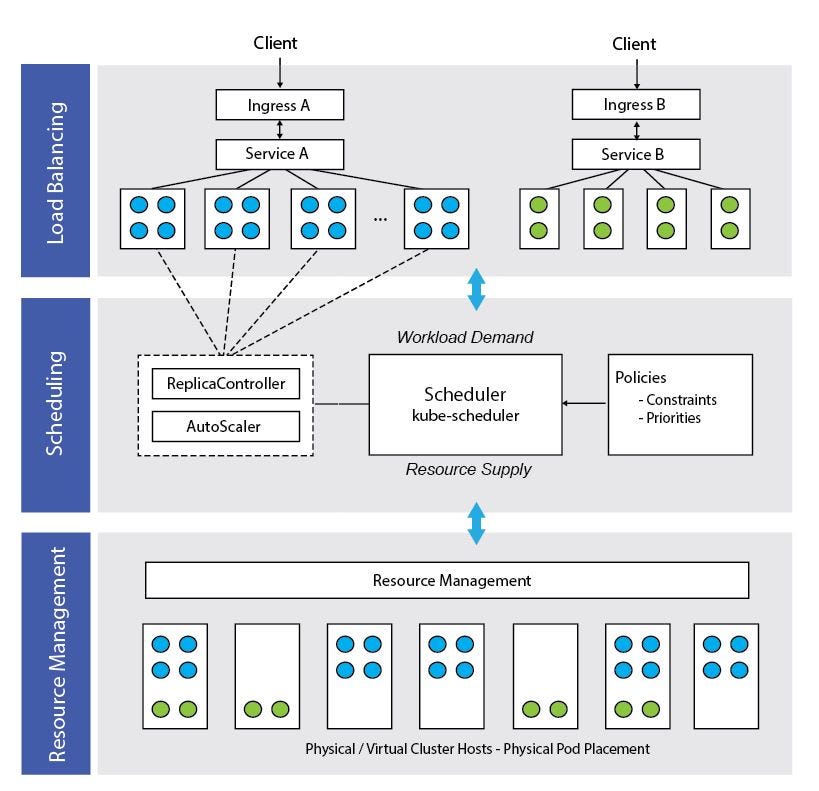

Kubernetes Pods are the smallest deployable unit in the Kubernetes container orchestration platform, which is designed for production environments. A Pod groups one or more containers that share networking and storage, so they can be defined and managed as a single unit. The advanced features Kubernetes is known for, such as self-healing, auto-scaling, and rolling updates, come from the controllers that manage Pods (for example Deployments, ReplicaSets, and the Horizontal Pod Autoscaler) rather than from Pods themselves. Together they make Kubernetes a robust and flexible choice for complex, enterprise-level applications.
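To make the idea of several containers managed as a single unit more concrete, the following is a minimal sketch of a two-container Pod. The Pod name, container names, images, and paths are hypothetical and chosen only for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar       # hypothetical name
spec:
  volumes:
    - name: shared-logs
      emptyDir: {}             # scratch volume that lives as long as the Pod
  containers:
    - name: web
      image: nginx:1.27
      volumeMounts:
        - name: shared-logs
          mountPath: /var/log/nginx   # nginx writes its logs here
    - name: log-tailer         # sidecar that reads what the web container writes
      image: busybox:1.36
      command: ["sh", "-c", "tail -F /logs/access.log"]
      volumeMounts:
        - name: shared-logs
          mountPath: /logs
```

The two containers are scheduled together on the same node, share the `shared-logs` volume, and can reach each other over `localhost`.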

One of the primary advantages of Kubernetes Pods is their ability to support complex, large-scale applications. Each container in a Pod can declare resource requests and limits, Deployments automate rollouts, and Services provide service discovery, which helps applications run smoothly and efficiently in dynamic environments. Kubernetes also supports advanced networking capabilities, such as network policies, that let users create and manage complex network topologies.

However, Kubernetes Pods are not without their limitations. The surrounding architecture is complex, and the steep learning curve can be overwhelming for beginners and small-scale projects. Using Pods effectively requires a solid understanding of related concepts and components, such as Services, Deployments, and ReplicaSets. Moreover, Kubernetes may be overkill for small-scale projects or projects that do not need advanced features such as self-healing and auto-scaling. Weigh these factors carefully when deciding between Kubernetes Pods and Docker Compose.

Head-to-Head Comparison: Kubernetes Pods vs. Docker Compose


In this section, we will directly compare Kubernetes Pods and Docker Compose, revealing their strengths and weaknesses in various aspects, such as scalability, ease of use, and community support. This comparison will provide a clear understanding of the best use cases for each tool, enabling you to make an informed decision based on your project’s requirements.

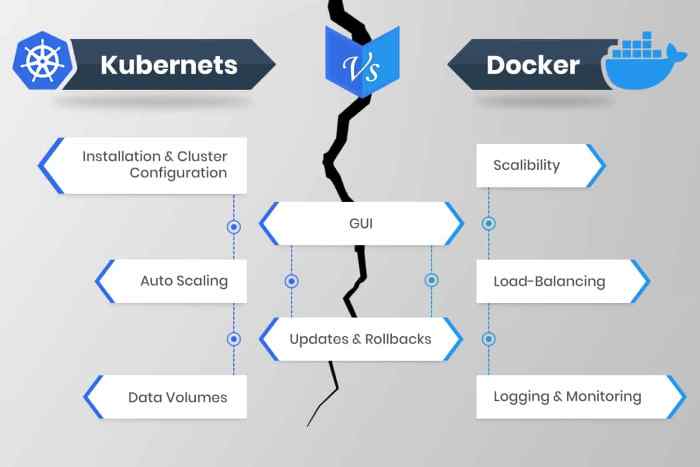

Scalability

Kubernetes is designed for large-scale, dynamic environments. When Pods are managed by controllers such as Deployments, they gain self-healing, auto-scaling, and rolling updates, which makes Kubernetes Pods well suited to complex, enterprise-level applications. Docker Compose, on the other hand, is better suited to small-scale projects and rapid prototyping because of its simplicity and lightweight nature. Compose can run several replicas of a service, but its scaling is manual and tied to a single Docker host, so it is not as robust or flexible as Kubernetes for large-scale applications.
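As a sketch of how replicas and rolling updates are typically declared on the Kubernetes side, the manifest below wraps a Pod template in a Deployment. The name `web`, the label `app: web`, the replica count, and the image are assumptions made for the example.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                    # hypothetical name
spec:
  replicas: 3                  # desired number of identical Pods
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1        # at most one Pod down during an update
      maxSurge: 1              # at most one extra Pod created during an update
  selector:
    matchLabels:
      app: web
  template:                    # Pod template used for every replica
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27
          ports:
            - containerPort: 80
```

If a Pod managed by this Deployment fails, the controller creates a replacement to get back to three replicas. The closest Compose equivalent is a manual command such as `docker compose up --scale web=3`, which starts replicas but does not reschedule them across machines.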

Ease of Use

Docker Compose is known for its simplicity and ease of use. Its lightweight nature and simple YAML syntax make it an ideal choice for small-scale projects and rapid prototyping. In contrast, Kubernetes Pods have a steeper learning curve due to their complex architecture and numerous components. Users must have a deep understanding of Kubernetes concepts and components, such as services, deployments, and replica sets, to use Kubernetes Pods effectively.

Community Support

Both Kubernetes Pods and Docker Compose have strong and active communities. However, Kubernetes has a larger community due to its widespread adoption in production environments. This larger community means that Kubernetes users have access to a wealth of resources, tutorials, and best practices, making it easier to find solutions to common problems and challenges. Docker Compose, while having a smaller community, still offers robust support and resources for users.

Integration and Compatibility

Docker Compose integrates seamlessly with Docker’s other tools, such as Docker Swarm and Docker Engine, providing a smooth transition as projects grow and evolve. Kubernetes Pods, on the other hand, are designed to work within the Kubernetes ecosystem and integrate with other Kubernetes components and services. Both tools are therefore strong choices within their own ecosystems, so consider the long-term goals and ecosystem of your project when deciding between Kubernetes Pods and Docker Compose.

Choosing the Right Tool for Your Project: Factors to Consider

When deciding between Kubernetes Pods and Docker Compose, it is crucial to consider various factors to make the best choice for your specific use case. Here is a checklist to help guide your decision-making process:

Project Size and Complexity

For small-scale projects and rapid prototyping, Docker Compose is an ideal choice due to its simplicity and ease of use. For large-scale, complex applications that need self-healing, auto-scaling, and rolling updates, Kubernetes Pods are more suitable. Let the size and complexity of your project guide the choice.

Long-Term Goals

Assess your project’s long-term goals and determine whether you will require advanced features such as auto-scaling and self-healing. If your project is expected to grow and evolve, Kubernetes Pods might be a better choice due to their robust feature set and seamless integration with other Kubernetes components. On the other hand, if your project has limited scope and is not expected to grow significantly, Docker Compose might be a more lightweight and straightforward option.

Learning Curve

Kubernetes Pods have a steeper learning curve due to the complex architecture and numerous components around them. Users need a solid understanding of Kubernetes concepts and components, such as Services, Deployments, and ReplicaSets, to use Pods effectively. Docker Compose, on the other hand, is known for its simplicity and ease of use. Consider the learning curve and the expertise of your team when deciding between Kubernetes Pods and Docker Compose.


Integration and Compatibility

Consider the long-term goals and ecosystem of your project when deciding between Kubernetes Pods and Docker Compose. Docker Compose integrates seamlessly with Docker’s other tools, such as Docker Swarm and Docker Engine, providing a smooth transition as projects grow and evolve. Kubernetes Pods, on the other hand, are designed to work within the Kubernetes ecosystem, offering seamless integration with other Kubernetes components and services.

Real-World Use Cases: Success Stories and Lessons Learned

Understanding how organizations and developers have successfully implemented Kubernetes Pods and Docker Compose in real-world projects can provide valuable insights and experiences to guide your decision-making process. Here are some success stories and lessons learned from implementing these container orchestration tools:

Case Study 1: E-commerce Platform

An e-commerce platform with a large user base and high traffic implemented Kubernetes Pods to manage their microservices architecture. By using Kubernetes Pods, they were able to achieve advanced features such as self-healing, auto-scaling, and rolling updates, ensuring high availability and reliability for their users. The platform was able to handle sudden traffic spikes and maintain optimal performance levels, even during peak usage times.

Case Study 2: Social Media Application

A social media application with a growing user base implemented Docker Compose for their local development and testing environments. By using Docker Compose, the development team was able to define and run multi-container applications with ease, reducing the time and effort required for testing and debugging. The lightweight nature of Docker Compose made it an ideal choice for their small-scale projects, enabling rapid prototyping and development cycles.

Lessons Learned

These case studies highlight the importance of choosing the right container orchestration tool based on your project’s requirements. Kubernetes Pods are better suited for large-scale, complex applications requiring advanced features such as self-healing, auto-scaling, and rolling updates. In contrast, Docker Compose is an ideal choice for small-scale projects and rapid prototyping due to its simplicity and ease of use. Understanding the strengths and weaknesses of both tools lets you make an informed decision between Kubernetes Pods and Docker Compose.

Getting Started with Kubernetes Pods and Docker Compose

Once you have decided between Kubernetes Pods and Docker Compose based on your project’s requirements, it’s time to set up and configure these tools for your projects. Here are some step-by-step tutorials and best practices to ensure a smooth and efficient workflow:

Getting Started with Docker Compose

To get started with Docker Compose, follow these steps:

- Install Docker on your local machine.

- Create a `docker-compose.yml` file in the root directory of your project.

- Define your services, networks, and volumes in the `docker-compose.yml` file using YAML syntax.

- Run the `docker-compose up` command to start your multi-container application.

For best practices, define environment variables for each service and use volumes for persistent data storage. Note that the top-level `version` key, once used to select a Compose file format, is obsolete in the current Compose Specification, so new files can omit it. Additionally, consider using Docker Compose’s built-in features such as health checks and dependency management (`depends_on`) to optimize your workflow.
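The practices above might look like the following in a Compose file. This is a sketch under stated assumptions: the `api` image name, the `app` database user and name, and the `DB_PASSWORD` variable (expected in the shell environment or a `.env` file) are all hypothetical.

```yaml
# docker-compose.yml: hypothetical API backed by PostgreSQL
services:
  api:
    image: example/api:1.0     # hypothetical image name
    environment:
      DATABASE_URL: postgres://app:${DB_PASSWORD}@db:5432/app
    depends_on:
      db:
        condition: service_healthy   # wait until the db health check passes
  db:
    image: postgres:16
    environment:
      POSTGRES_USER: app
      POSTGRES_PASSWORD: ${DB_PASSWORD}   # read from the environment or .env
      POSTGRES_DB: app
    volumes:
      - db-data:/var/lib/postgresql/data  # persistent data survives restarts
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U app -d app"]
      interval: 10s
      timeout: 5s
      retries: 5

volumes:
  db-data:
```

Running `docker-compose up` (or `docker compose up` with Compose V2) starts `db` first and holds back `api` until the database reports healthy.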

Getting Started with Kubernetes Pods

To get started with Kubernetes Pods, follow these steps:

- Install Kubernetes on your local machine or a cloud provider.

- Create a Kubernetes manifest file in YAML or JSON format.

- Define your Pod’s containers, volumes, and other resources in the manifest file.

- Use the `kubectl apply -f` command to create the Pod and its resources.

For best practices, ensure that you use labels and annotations for resource discovery, define resource requests and limits for each container, and use persistent volumes for data storage. Additionally, consider using Kubernetes’ advanced features such as Deployments, Services, and ConfigMaps to optimize your workflow.
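The manifest below is a minimal sketch of a Pod that follows the labeling and resource practices above. The file name `pod.yaml`, the Pod name, the labels, the image, and the resource figures are assumptions for illustration only.

```yaml
# pod.yaml: hypothetical single-container Pod
apiVersion: v1
kind: Pod
metadata:
  name: api
  labels:                      # labels let Services and selectors find this Pod
    app: api
    tier: backend
spec:
  containers:
    - name: api
      image: example/api:1.0   # hypothetical image name
      resources:
        requests:              # what the scheduler reserves for the container
          cpu: 250m
          memory: 128Mi
        limits:                # hard ceiling enforced at runtime
          cpu: 500m
          memory: 256Mi
```

With the file saved, `kubectl apply -f pod.yaml` creates the Pod, and `kubectl get pods -l app=api` lists it by label.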

Best Practices for Managing and Monitoring Containers

Once you have chosen your container orchestration tool, it’s essential to follow best practices for managing and monitoring your containers to optimize their performance and stability. Here are some tips and strategies to help you get started:

Resource Management

Properly managing your container resources is crucial for ensuring optimal performance and preventing resource starvation. Here are some best practices for resource management:

- Define resource requests and limits for each container to ensure fair resource allocation.

- Monitor resource usage regularly and adjust resource limits as needed.

- Use horizontal scaling to add or remove containers based on resource usage and application demand.
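On Kubernetes, horizontal scaling based on resource usage is commonly expressed as a HorizontalPodAutoscaler. The sketch below assumes a hypothetical Deployment named `web` whose containers declare CPU requests, and a cluster with a metrics source such as metrics-server installed. The replica bounds and the 70% target are illustrative values.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
spec:
  scaleTargetRef:              # the workload to scale (assumed to exist)
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the containers' CPU requests
```

Utilization is measured relative to each container's CPU request, which is one reason to define requests before enabling auto-scaling.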

Health Checks and Self-Healing

Health checks and self-healing mechanisms are essential for maintaining container availability and reliability. Here are some best practices for health checks and self-healing:

- Define liveness and readiness probes for each container to monitor their health and availability.

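- Rely on Kubernetes’ self-healing mechanisms, such as restarting containers that fail their liveness probes and replacing failed Pods through ReplicaSets, to recover from failures automatically, and use rolling updates to maintain availability during deployments.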

- Implement retry and failover strategies to ensure that failed containers are automatically replaced and that traffic is redirected to healthy containers.
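As a sketch of the liveness and readiness probes mentioned above, the Pod below checks two HTTP endpoints. The image name, the port, and the `/healthz` and `/ready` paths are hypothetical and would need to match what your application actually exposes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: api
spec:
  containers:
    - name: api
      image: example/api:1.0   # hypothetical image name
      ports:
        - containerPort: 8080
      livenessProbe:           # repeated failure makes the kubelet restart the container
        httpGet:
          path: /healthz       # hypothetical endpoint
          port: 8080
        initialDelaySeconds: 10
        periodSeconds: 10
      readinessProbe:          # failure removes the Pod from Service endpoints
        httpGet:
          path: /ready         # hypothetical endpoint
          port: 8080
        periodSeconds: 5
```

The liveness probe drives restarts, while the readiness probe only controls whether traffic is routed to the Pod, so the two usually check different conditions.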

Logging and Monitoring

Logging and monitoring are essential for diagnosing issues, identifying trends, and optimizing performance. Here are some best practices for logging and monitoring:

- Use centralized logging solutions, such as ELK Stack or Fluentd, to collect and analyze container logs.

- Implement monitoring solutions, such as Prometheus or Nagios, to monitor container metrics and identify issues before they become critical.

- Configure alerts and notifications to proactively notify you of issues and potential problems.
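For teams using Prometheus, an alert can be declared in a rule file. The following is a sketch only: it assumes kube-state-metrics is deployed so that the `kube_pod_container_status_restarts_total` metric is available, and the group name, threshold, and severity label are illustrative choices.

```yaml
# alert-rules.yml: hypothetical Prometheus alerting rule
groups:
  - name: container-alerts
    rules:
      - alert: ContainerRestartingFrequently
        # more than 3 restarts within the last 15 minutes
        expr: increase(kube_pod_container_status_restarts_total[15m]) > 3
        for: 5m                # condition must hold for 5 minutes before firing
        labels:
          severity: warning
        annotations:
          summary: "Container {{ $labels.container }} in Pod {{ $labels.pod }} is restarting frequently"
```

Delivering the alert to email or chat is handled separately, typically by Alertmanager.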

By following these best practices, you can ensure that your containerized applications are properly managed, monitored, and optimized for performance and stability. Whether you choose Kubernetes Pods or Docker Compose, these best practices will help you get the most out of your container orchestration tool.

Future Trends in Container Orchestration: What to Expect

Container orchestration is an ever-evolving field, with new technologies and techniques emerging regularly. Here are some future trends in container orchestration that you should keep an eye on:

Serverless Containers

Serverless computing is a popular trend in cloud computing, and serverless containers apply the same model to containerized workloads. They allow developers to run containerized applications without managing the underlying infrastructure, which can reduce operational costs and improve scalability. Managed offerings such as AWS Fargate and Google Cloud Run already work this way, and the approach has the potential to reshape container orchestration in the future.

GitOps

GitOps is an operational approach that uses Git as the source of truth for infrastructure and application configuration. With GitOps, developers manage that configuration through Git workflows, and automated tooling applies the changes, covering deployment, scaling, and management tasks. GitOps can help improve collaboration, reduce errors, and accelerate development cycles. This approach is gaining popularity in the Kubernetes community and is expected to become a standard practice in container orchestration.

Multi-Cloud Orchestration

As organizations adopt multi-cloud strategies, there is a growing need for multi-cloud container orchestration. Multi-cloud orchestration enables developers to manage containers across multiple cloud providers, providing greater flexibility and reducing vendor lock-in. Kubernetes is well-positioned to become the standard for multi-cloud orchestration, with its extensive ecosystem and support for multiple cloud providers.

Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) are becoming increasingly important in container orchestration. AI and ML can help automate complex tasks, such as resource allocation, load balancing, and fault tolerance. These technologies may also help improve security, compliance, and governance. Tooling around Kubernetes has begun to explore these techniques, and this trend is expected to continue in the future.

Conclusion

Container orchestration is a critical component of modern microservices architecture, and choosing the right tool for your project is essential. Whether you choose Kubernetes Pods or Docker Compose, it’s essential to follow best practices for managing and monitoring your containers. By staying up-to-date with the latest trends and innovations in container orchestration, you can ensure that your containerized applications are optimized for performance, scalability, and stability.