What is a Pod in Kubernetes? Understanding the Building Block

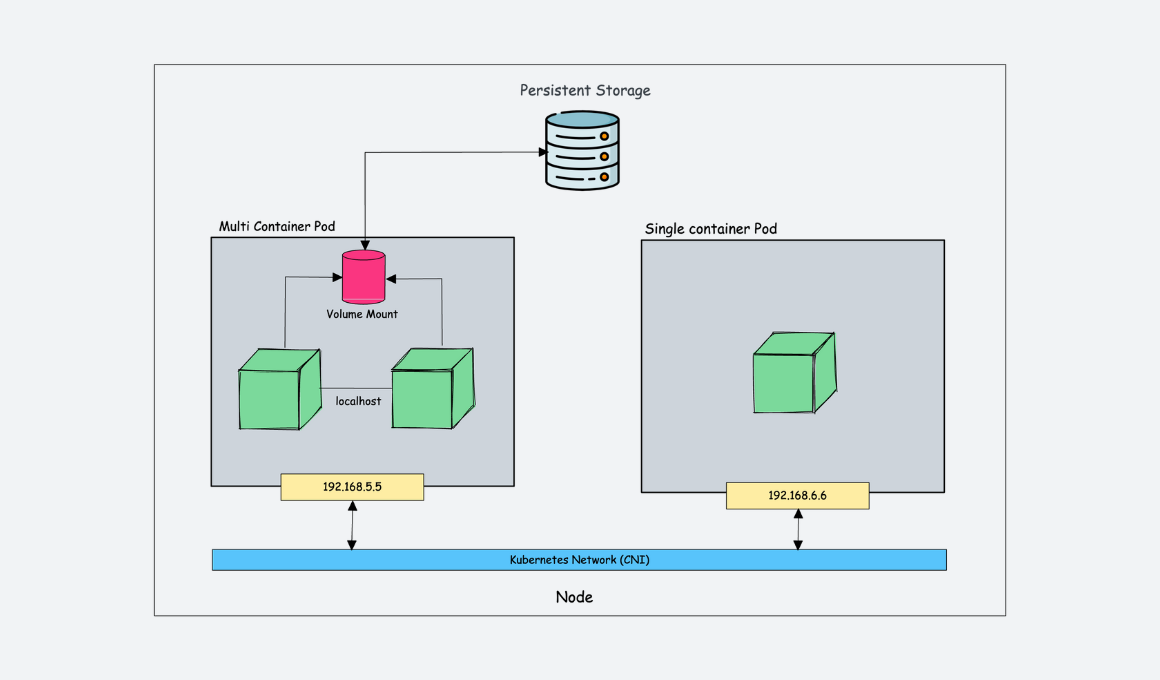

A Pod is the fundamental building block of Kubernetes: the smallest deployable unit that can be created and managed. A Pod encapsulates one or more containers that share network and storage resources and are scheduled together as a single unit. Understanding the Pod concept is crucial for effectively deploying and managing applications on Kubernetes.

Pods have a few key characteristics. They are ephemeral, meaning they are designed to be transient and replaceable. When a Pod managed by a controller such as a Deployment fails or needs to be updated, Kubernetes terminates it and creates a new one; a bare Pod that is deleted, or whose node fails, is not recreated. Because a replacement Pod gets a new IP address, Services are used to provide a stable endpoint for applications running in Pods. Containers in the same Pod share a network namespace, so they can communicate with each other over `localhost`. They can also share storage volumes, which enables data sharing between containers.

Containerization, using technologies like Docker, has changed application deployment by providing a consistent and isolated environment for applications to run. Kubernetes extends this concept by introducing Pods. While containers provide the packaging and isolation, Pods provide the context for running and managing those containers within a cluster. Pods enable co-location of tightly coupled processes, shared resources, and management of related containers as one unit. Without Pods, coordinating individual containers in a distributed environment would be significantly more complex, which is why the Pod abstraction underpins application deployment and management on Kubernetes.

Crafting a Simple Pod Definition: YAML Essentials

Creating a Kubernetes Pod involves defining its configuration, usually in YAML (YAML Ain’t Markup Language). This declarative approach lets you specify the desired state of your Pod; the Kubernetes control plane then works to achieve and maintain that state. A YAML file for a Pod outlines everything Kubernetes needs to know to run your containerized application.

The basic structure of a Pod definition YAML file includes several key fields. The `apiVersion` field specifies the Kubernetes API version being used (for Pods, `v1`). The `kind` field indicates the type of resource being defined, which in this case is `Pod`. The `metadata` section contains information about the Pod, such as its `name` and `labels`. The `name` must be unique among Pods in the same namespace. The `labels` are key-value pairs used to organize Pods and to select them, for example from a Service.

The most important section is `spec`, which defines the desired state of the Pod. Within `spec`, the `containers` field is a list of container definitions that will run within the Pod. Each container definition includes the `image` to use (e.g., `nginx:latest` from Docker Hub), resource requests and limits, ports to expose, and environment variables. A minimal example Pod configuration might look like this:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-first-pod
      labels:
        app: my-app
    spec:
      containers:
        - name: my-container
          image: nginx:latest

This simple example defines a Pod named “my-first-pod” with the label “app: my-app”. It contains a single container named “my-container” that runs the Nginx image tagged `latest`. If the definition is saved as `pod-definition.yaml`, running `kubectl apply -f pod-definition.yaml` deploys the Pod to your cluster.

Defining Containers Within a Pod: Specifying Images and Resources

The `containers` section of a Pod definition specifies the containers that will run inside the Pod, including the image, resources, and configuration of each one. The most important element is the `image` field, which defines the container image to use. Images are typically pulled from a container registry such as Docker Hub. For example, `image: nginx:latest` pulls the Nginx image tagged `latest`. It is best practice to use a specific image tag instead of `latest`, so that every deployment runs the same, reproducible version.

Resource requests and limits are also defined in the `containers` section. These settings control the amount of CPU and memory each container can use. `resources.requests` specifies the amount of each resource the scheduler reserves for the container when placing the Pod on a node. `resources.limits` sets the maximum the container may consume: CPU use above the limit is throttled, and a container that exceeds its memory limit can be terminated. Setting these values helps Kubernetes schedule Pods effectively and prevents a single container from monopolizing node resources. Another key aspect is declaring ports. The `ports` section lists the ports the container listens on. The list is primarily informational: it documents the port and lets Services refer to it by name, but a port that is not listed is still reachable on the Pod’s IP address. Environment variables can be set in the `env` section. These variables provide configuration data to the containerized application, such as a database host name; sensitive values such as API keys are better kept in Secrets than written into the Pod definition.
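
As a rough illustration of the fields just described, the sketch below combines `resources`, `ports`, and `env` in one container definition. The Pod name, the pinned image tag, and the environment variable names and values are assumptions chosen for the example, not values the article prescribes.

```yaml
# Illustrative sketch: names, tag, and variable values are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: web-pod                  # hypothetical Pod name
  labels:
    app: web
spec:
  containers:
    - name: web
      image: nginx:1.27          # a pinned tag instead of latest (assumed tag)
      resources:
        requests:                # reserved by the scheduler when placing the Pod
          cpu: 100m
          memory: 128Mi
        limits:                  # upper bound enforced at runtime
          cpu: 200m
          memory: 256Mi
      ports:
        - name: http             # a name lets a Service reference the port
          containerPort: 80
      env:
        - name: APP_ENV          # hypothetical variable read by the application
          value: "production"
        - name: DATABASE_HOST    # hypothetical database host name
          value: "db.example.internal"
```

Quoting the `env` values keeps YAML from interpreting them as numbers or booleans, since environment variable values must be strings.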

The `command` and `args` fields let you override the image’s default entrypoint and arguments, which is useful for customizing the container’s behavior. To run multiple containers within a single Pod, define multiple entries in the `containers` array. Each container runs as its own process with its own filesystem, but all of them share the Pod’s network namespace and any volumes they mount in common. A typical multi-container Pod pairs a web server container with a logging sidecar container. Resource requests and limits should be set for each container individually, because the scheduler sums the requests of all containers to decide whether the Pod fits on a node.
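
The web server plus logging sidecar pattern could look roughly like the sketch below. It assumes that mounting an `emptyDir` volume over `/var/log/nginx` makes Nginx write its access log to a regular file the sidecar can read; the Pod name, container names, volume name, and image tags are all hypothetical.

```yaml
# Illustrative sketch: a web server and a log-tailing sidecar sharing a volume.
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar         # hypothetical Pod name
spec:
  volumes:
    - name: logs                 # scratch volume shared by both containers
      emptyDir: {}
  containers:
    - name: web
      image: nginx:1.27          # assumed tag
      volumeMounts:
        - name: logs
          mountPath: /var/log/nginx
    - name: log-tailer
      image: busybox:1.36        # assumed tag
      # command and args override the image's default entrypoint and arguments
      command: ["sh", "-c"]
      args: ["tail -n+1 -F /logs/access.log"]
      resources:
        requests:
          cpu: 10m
          memory: 16Mi
      volumeMounts:
        - name: logs
          mountPath: /logs
          readOnly: true         # the sidecar only reads the log files
```

With this layout, `kubectl logs web-with-sidecar -c log-tailer` would show the access log lines, because the sidecar echoes them to its own standard output.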

Exposing Pods: Services and Networking Considerations

Kubernetes Pods, by their nature, are ephemeral. This means they can be created, destroyed, and rescheduled frequently. Therefore, directly accessing Pods using their IP addresses is not a reliable solution. Kubernetes Services provide a stable abstraction layer, enabling access to Pods from within the cluster and externally. A Service assigns a consistent IP address and DNS name to a set of Pods, ensuring that applications can communicate with them reliably, regardless of Pod restarts or rescheduling. This is critical for creating robust and scalable applications within Kubernetes.

Different Service types cater to different access requirements. `ClusterIP` is the default Service type and exposes the Service on a cluster-internal IP address, so only clients inside the cluster can reach it. `NodePort` exposes the Service on a static port on every Node’s IP address, which allows external access through any Node’s IP address and that port. `LoadBalancer` provisions an external load balancer in cloud environments (such as AWS, Azure, or GCP) to distribute traffic to the Service. Which type to choose depends on who needs to reach the application: in-cluster clients only, external clients on a known node port, or external clients through a managed load balancer.
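
A minimal sketch of a `ClusterIP` Service follows. It assumes the target Pods carry the label `app: nginx` and listen on port 80; the Service name is hypothetical.

```yaml
# Illustrative sketch: a cluster-internal Service in front of Pods labeled app: nginx.
apiVersion: v1
kind: Service
metadata:
  name: nginx-service            # hypothetical Service name
spec:
  type: ClusterIP                # the default; NodePort or LoadBalancer open external access
  selector:
    app: nginx                   # traffic goes to Pods carrying this label
  ports:
    - name: http
      port: 80                   # port the Service listens on
      targetPort: 80             # port on the selected Pods
```

Changing `type` to `NodePort` or `LoadBalancer` keeps the same selector and port mapping and only changes how the Service is reachable.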

For exposing HTTP and HTTPS applications to the outside world, Ingress offers a more flexible solution than `NodePort`. An Ingress controller acts as a reverse proxy and load balancer, routing external traffic to different Services based on hostnames or paths. Ingress provides a centralized point of entry for external traffic, which simplifies routing and adds features such as SSL/TLS termination and virtual hosting. The implementation details vary with the Kubernetes environment and the chosen controller, but the general concept remains the same: a flexible, scalable way to manage external access to Services running in the cluster.
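
Host- and path-based routing can be sketched as an Ingress resource like the one below. It assumes an ingress controller is installed and registered under the class name `nginx`, and that a Service named `nginx-service` listening on port 80 exists in the same namespace; the class name, host name, and Service name are all hypothetical.

```yaml
# Illustrative sketch: route HTTP traffic for one host to one Service.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress            # hypothetical Ingress name
spec:
  ingressClassName: nginx        # assumes a controller registered under this class
  rules:
    - host: example.local        # hypothetical host name
      http:
        paths:
          - path: /
            pathType: Prefix     # matches / and everything beneath it
            backend:
              service:
                name: nginx-service   # hypothetical Service in the same namespace
                port:
                  number: 80
```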

Managing Pod Lifecycles: Restart Policies and Liveness Probes

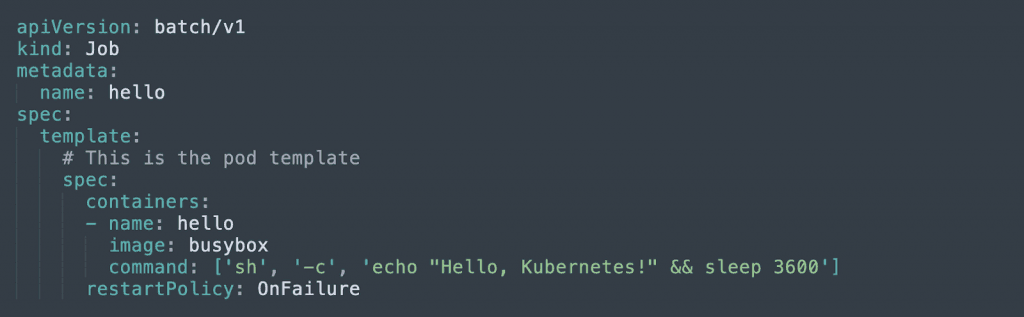

Kubernetes manages the lifecycle of Pods to keep applications healthy and available. The restart policy dictates how Kubernetes responds when a container in a Pod terminates. The `restartPolicy` field, defined in the Pod specification, accepts three values: `Always`, `OnFailure`, and `Never`. `Always`, the default, restarts a container whenever it terminates, regardless of the exit code; this suits applications designed to run continuously. `OnFailure` restarts the container only if it exits with a non-zero exit code, indicating a failure; this is appropriate for batch jobs or tasks that should be retried only after an error. `Never` leaves terminated containers stopped, so manual intervention or an external controller is required to run the workload again. Restarts happen in place on the same node, with an increasing back-off delay between attempts. A simple misconfiguration, such as a long-running policy applied to a command that exits immediately, can leave a Pod restarting constantly (reported as `CrashLoopBackOff`) and wasting resources.
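
As a small sketch of a non-default restart policy, the Pod below runs a one-off task that should be retried only if it fails. The Pod name, image tag, and command are hypothetical.

```yaml
# Illustrative sketch: a task-style Pod that is retried only on failure.
apiVersion: v1
kind: Pod
metadata:
  name: one-off-task             # hypothetical Pod name
spec:
  restartPolicy: OnFailure       # applies to every container in the Pod
  containers:
    - name: task
      image: busybox:1.36        # assumed tag
      # Exits with code 0, so the container is not restarted.
      command: ["sh", "-c", "echo processing && exit 0"]
```

Note that `restartPolicy` sits at the `spec` level rather than inside a container entry, so it cannot be set differently for individual containers this way.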

Liveness probes are another vital mechanism for managing Pod health. A liveness probe is a periodic health check that the kubelet performs to determine whether a container is still healthy. If the probe fails repeatedly, Kubernetes restarts the container, subject to the Pod’s restart policy. This helps recover from problems such as deadlocks, which do not crash the container but leave it unresponsive. Liveness probes can be configured with different handlers: `exec` (runs a command inside the container), `httpGet` (performs an HTTP GET request against the container), `tcpSocket` (attempts to open a TCP connection to the container), and, in recent Kubernetes versions, `grpc`. The choice of handler depends on the application and how it reports its health. Properly configured liveness probes contribute significantly to the stability and availability of applications running on Kubernetes.
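
An `exec` liveness probe might be sketched as follows. The container creates a marker file at startup and the probe checks that the file still exists; the Pod name, file path, image tag, and timing values are assumptions for illustration.

```yaml
# Illustrative sketch: an exec liveness probe that checks for a marker file.
apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-demo       # hypothetical Pod name
spec:
  containers:
    - name: app
      image: busybox:1.36        # assumed tag
      command: ["sh", "-c", "touch /tmp/healthy && sleep 3600"]
      livenessProbe:
        exec:
          command: ["cat", "/tmp/healthy"]   # a non-zero exit code counts as a failure
        initialDelaySeconds: 5   # wait before the first check
        periodSeconds: 10        # check interval
        failureThreshold: 3      # consecutive failures before a restart
      # For a plain TCP service, the handler could instead be:
      #   tcpSocket:
      #     port: 5432
```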

Readiness probes complement liveness probes by determining when a container is ready to accept traffic. A container might be running but not yet fully initialized, for instance while it is still loading data or establishing database connections. Readiness probes prevent traffic from being routed to a container that is not yet ready, so users only reach fully functional application instances. Like liveness probes, readiness probes can be configured using `exec`, `httpGet`, or `tcpSocket`. If a readiness probe fails, the Pod is removed from the Service endpoints, which takes it out of the load-balancing rotation until the probe succeeds again; the container is not restarted. Using both liveness and readiness probes in Pod definitions is a best practice for building robust, self-healing applications on Kubernetes.
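
The two probe types can be combined on one container, as in the sketch below. The Pod name, image tag, and timing values are hypothetical; in a real application the readiness path would usually be a dedicated health endpoint rather than `/`.

```yaml
# Illustrative sketch: readiness gates traffic, liveness triggers restarts.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-ready-demo         # hypothetical Pod name
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.27          # assumed tag
      ports:
        - containerPort: 80
      readinessProbe:            # failing: Pod is removed from Service endpoints
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 2
        periodSeconds: 5
      livenessProbe:             # failing: container is restarted
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 10
        periodSeconds: 10
```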

Example Pod Deployment: Running a Basic Nginx Server

This section walks through a complete, practical example: deploying a Pod that runs a basic Nginx web server. It is a common use case and brings together the concepts discussed in the previous sections. The configuration specifies resource requests and limits so the Pod has adequate resources, declares the port the Nginx server listens on, and adds a liveness probe to keep the Pod healthy. The full YAML configuration for the Nginx Pod is:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 200m
              memory: 256Mi
          ports:
            - containerPort: 80
          livenessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 3
            periodSeconds: 3

The `apiVersion` and `kind` fields specify that this is a Pod definition. The `metadata` section includes the name of the Pod (`nginx-pod`) and a label (`app: nginx`) for identification and selection. The `spec` section defines the container that will run within the Pod. In this example, we are using the `nginx:latest` image from Docker Hub. The resource requests reserve 100 millicores of CPU and 128 MiB of memory for the container, and the limits cap it at 200 millicores and 256 MiB. The `containerPort` field declares that the container listens on port 80. The liveness probe checks the health of the Nginx server by sending an HTTP GET request to the root path (`/`) on port 80. The first probe runs 3 seconds after the container starts (`initialDelaySeconds`), and the probe then repeats every 3 seconds (`periodSeconds`).

To deploy this Pod, save the YAML configuration to a file (e.g., `nginx-pod.yaml`) and use the `kubectl apply` command: `kubectl apply -f nginx-pod.yaml`. After running this command, Kubernetes will create the Pod. To verify the status of the Pod, use the command: `kubectl get pod nginx-pod`. This command displays summary information about the Pod, including its status (e.g., Running, Pending, Succeeded, Failed). To further inspect the Pod and troubleshoot any issues, use the `kubectl describe pod nginx-pod` command. It provides detailed information about the Pod, including recent events, the configured resource requests and limits, and the state of each container. The same pattern of writing a manifest, applying it, and inspecting the result carries over to deploying other applications.

Advanced Pod Configurations: Volumes and ConfigMaps

Kubernetes Pods offer advanced configuration options to enhance application functionality and manageability. Two key features are Volumes and ConfigMaps. Volumes provide storage that containers in a Pod can mount and share, and some volume types persist data beyond the life of the Pod. ConfigMaps inject configuration data, decoupling configuration from the application image and improving flexibility and maintainability.

Volumes in Kubernetes allow containers within a Pod to share storage. Several volume types are available, each serving a different purpose. `emptyDir` provides temporary storage that lasts for the lifetime of the Pod. `hostPath` mounts a file or directory from the host node’s filesystem into the Pod. `persistentVolumeClaim` references a PersistentVolumeClaim, which requests storage from a PersistentVolume and so provides storage that is independent of the Pod’s lifecycle. To use a volume, first define it in the Pod’s `spec.volumes` section. Then mount it into each container that needs it using the `volumeMounts` section of the container definition, where `mountPath` specifies the path inside the container at which the volume appears. For data that must survive Pod replacement, a PersistentVolumeClaim is the appropriate choice.
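
The define-then-mount steps could be sketched as below, using a PersistentVolumeClaim. The example assumes the cluster has a default StorageClass that can provision the claim; the claim name, Pod name, requested size, and mount path are hypothetical.

```yaml
# Illustrative sketch: a claim for storage and a Pod that mounts it.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: web-data                 # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce              # mountable read-write by a single node
  resources:
    requests:
      storage: 1Gi               # assumed size
---
apiVersion: v1
kind: Pod
metadata:
  name: web-with-storage         # hypothetical Pod name
spec:
  volumes:
    - name: data                 # step 1: define the volume at Pod level
      persistentVolumeClaim:
        claimName: web-data
  containers:
    - name: web
      image: nginx:1.27          # assumed tag
      volumeMounts:
        - name: data             # step 2: mount it into the container
          mountPath: /usr/share/nginx/html
```

The `name` under `volumeMounts` must match the `name` under `spec.volumes`; that pairing is what connects the container to the claim.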

ConfigMaps allow you to decouple configuration artifacts from image content to keep applications portable. A ConfigMap is an API object used to store non-confidential data in key-value pairs. Containers in a Pod can consume ConfigMaps as environment variables, command-line arguments, or configuration files. To use a ConfigMap, first create the ConfigMap containing your configuration data. Then, in the Pod definition, reference the ConfigMap in the `envFrom` section of the container, which injects the ConfigMap’s key-value pairs into the container as environment variables. Alternatively, you can mount a ConfigMap as a volume, making each key available as a file within the container. ConfigMaps provide a centralized way to manage application configuration, simplifying updates and reducing the need to rebuild container images. Keeping configuration separate also means the same image can run unchanged across deployment environments, which improves portability.
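
Both ways of consuming a ConfigMap are sketched below: `envFrom` for environment variables and a volume mount for files. The ConfigMap name, its keys and values, the Pod name, and the mount path are hypothetical, and the container simply prints what it received.

```yaml
# Illustrative sketch: one ConfigMap consumed as env vars and as files.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config               # hypothetical ConfigMap name
data:
  APP_MODE: "production"         # hypothetical keys and values
  LOG_LEVEL: "info"
---
apiVersion: v1
kind: Pod
metadata:
  name: configured-pod           # hypothetical Pod name
spec:
  restartPolicy: Never           # the demo command runs once and exits
  volumes:
    - name: config-files
      configMap:
        name: app-config         # each key becomes a file in the mount
  containers:
    - name: app
      image: busybox:1.36        # assumed tag
      command: ["sh", "-c", "env | grep -E 'APP_MODE|LOG_LEVEL'; ls /etc/app-config"]
      envFrom:
        - configMapRef:
            name: app-config     # every key becomes an environment variable
      volumeMounts:
        - name: config-files
          mountPath: /etc/app-config
          readOnly: true
```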

Troubleshooting Pod Issues: Inspecting Logs and Events

When deploying Kubernetes Pods, encountering issues is a common part of the process. Effective troubleshooting is crucial for ensuring applications run smoothly. One of the first steps in diagnosing problems is inspecting the Pod’s logs. The `kubectl logs` command allows users to view the output from the containers within a Pod. For example, `kubectl logs

In addition to logs, Kubernetes events provide valuable information about the lifecycle of a Pod. Events record significant occurrences, such as Pod creation, scheduling, container starts, and failures. The `kubectl describe pod

Several common issues can arise during Pod deployment. Image pull failures occur if the image name is incorrect, the image is not available in the specified registry, or the cluster does not have the credentials needed to pull the image. Resource problems take two forms: if the Pod requests more CPU or memory than any node has available, it cannot be scheduled and stays in `Pending`, and if a container exceeds its memory limit, it is terminated. Container crashes can be caused by application errors, configuration issues, or unmet dependencies. When troubleshooting, verify the container image name and tag, check that resource requests and limits are appropriate for the application, examine the application’s configuration files for errors, and confirm that the nodes have sufficient resources available. By systematically inspecting logs, events, and configurations, most Pod-related issues can be diagnosed and resolved.