The Rise of Microservices and the Need for Kubernetes

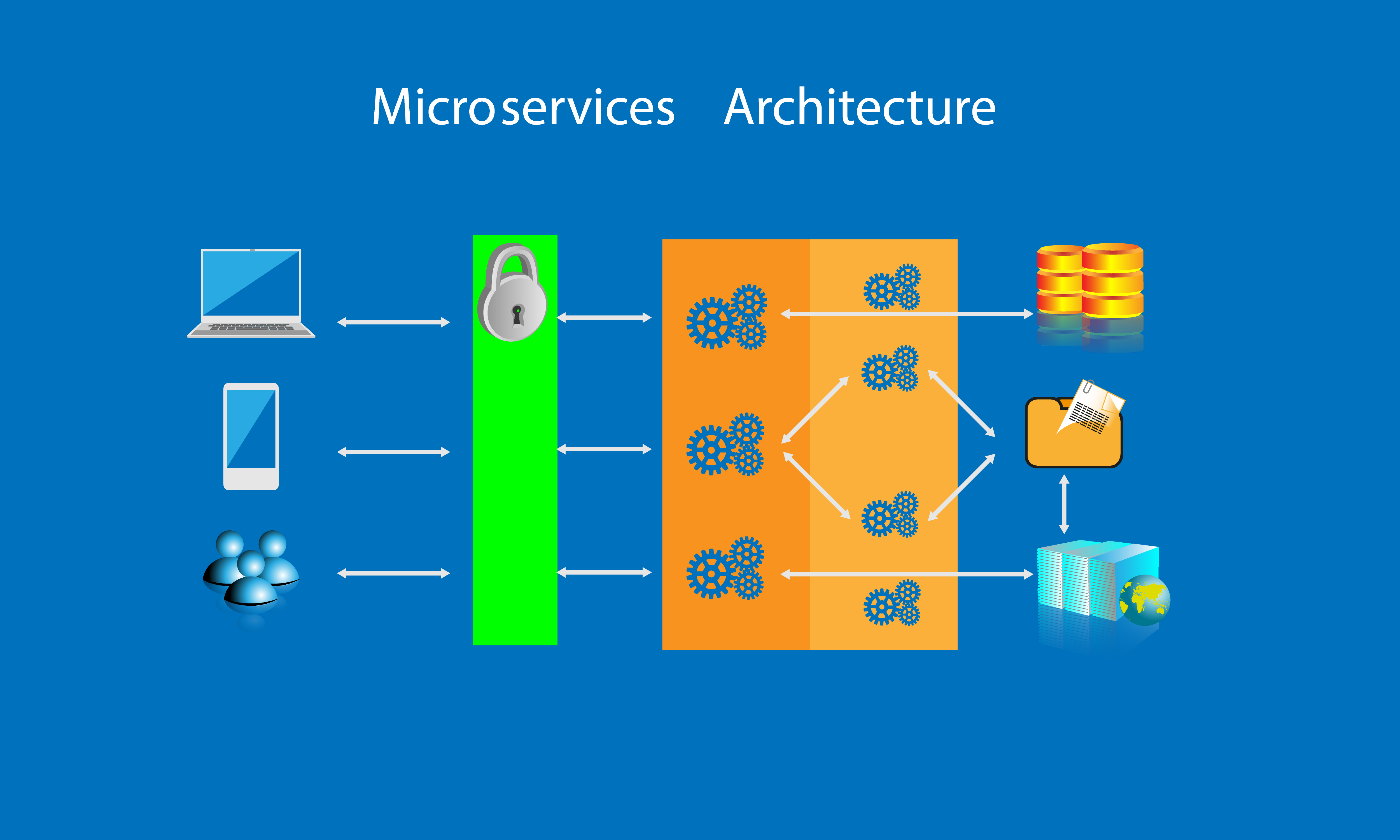

The rapid growth of microservices architecture has transformed the way modern applications are designed and deployed. By breaking down monolithic applications into smaller, independent services, organizations can achieve greater agility, scalability, and flexibility. However, this shift has also introduced new challenges in terms of managing, scaling, and orchestrating these distributed microservices. Kubernetes, the open-source container orchestration platform, has emerged as a powerful solution to address the complexities of managing microservices. As the adoption of microservices architecture continues to rise, Kubernetes has become an essential tool for organizations looking to streamline the deployment, scaling, and management of their distributed applications.

Kubernetes provides a comprehensive set of features that enable the effective management of microservices. Its ability to automate the deployment, scaling, and management of containerized applications makes it an ideal choice for organizations seeking to optimize the performance and reliability of their microservices-based infrastructure. By leveraging Kubernetes, teams can focus on developing and delivering high-quality microservices, while the platform handles the underlying complexities of orchestration and resource management.

Furthermore, Kubernetes’ support for self-healing, load balancing, and autoscaling helps keep microservices resilient and highly available when infrastructure fails or traffic spikes unexpectedly. This level of reliability and scalability is crucial for modern, cloud-native applications that need to adapt to changing user demands and market conditions.

Understanding the Fundamentals of Kubernetes

Kubernetes is an open-source container orchestration platform that has become the de facto standard for running and scaling microservices-based applications. A small set of core resource types work together to deploy and manage containerized applications.

Pods are the fundamental building blocks of Kubernetes. A Pod is a group of one or more containers that share a network namespace (a single IP address and port space) and can share storage volumes. Pods provide a level of abstraction above individual containers: Kubernetes schedules, starts, and stops the containers in a Pod as a single unit.

Services in Kubernetes act as the entry point for accessing microservices. A Service selects a set of Pods by label and gives them a stable virtual IP address and DNS name, so clients do not need to track individual Pod addresses, which change as Pods are replaced. Requests sent to the Service are distributed across the Pods that are ready to receive traffic.
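As a minimal sketch of the Service abstraction described above, the following Python snippet builds a Service manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name `orders`, the `app` label, and the port numbers are hypothetical values chosen for illustration.

```python
import json


def service_manifest(name: str, port: int, target_port: int) -> dict:
    """Build a ClusterIP Service that routes to Pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            # The selector decides which Pods receive traffic (hypothetical label).
            "selector": {"app": name},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


# Hypothetical example: expose port 80 and forward to container port 8080.
orders_service = service_manifest("orders", port=80, target_port=8080)
print(json.dumps(orders_service, indent=2))
```

Clients inside the cluster would then connect to the Service on port 80, and the Service forwards each connection to port 8080 on one of the matching Pods.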

Deployments are Kubernetes resources that manage the lifecycle of stateless applications, such as microservices. They define the desired state of an application, including the number of replicas, the container image to use, and various configuration settings. Kubernetes then ensures that the actual state of the application matches the desired state defined in the Deployment.
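The desired state described above can be sketched as a Deployment manifest. This is an illustrative example rather than a production configuration: the name `orders`, the image `registry.example.com/orders:1.0.0`, and the container port are assumptions made for the sketch.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment that keeps `replicas` copies of one container running."""
    labels = {"app": name}  # hypothetical label shared by selector and template
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical image reference; replace with a real registry path.
orders_deployment = deployment_manifest(
    "orders", "registry.example.com/orders:1.0.0", replicas=3
)
print(json.dumps(orders_deployment, indent=2))
```

Changing `replicas` or `image` and re-applying the manifest is how the desired state is updated; the Deployment controller then works to bring the running Pods in line with it.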

By understanding these fundamental Kubernetes components and how they work together, developers and operators can effectively manage and orchestrate their microservices-based applications. Kubernetes provides a robust and scalable platform for deploying, scaling, and managing microservices, ensuring high availability, fault tolerance, and efficient resource utilization.

As organizations continue to embrace microservices architecture, the importance of Kubernetes in managing the complexity of these distributed systems becomes increasingly evident. Mastering the fundamentals of Kubernetes is a crucial step in successfully implementing and maintaining a microservices-based infrastructure.

Designing Microservices for Kubernetes

Microservices that are designed with Kubernetes in mind are easier to deploy and manage on the platform. Following a few best practices helps ensure that each service scales well and runs reliably.

One of the key considerations is containerization. Kubernetes runs containerized applications, so each microservice must be packaged as a container image. Docker is one common way to build such images, but Kubernetes can run any OCI-compliant image through a container runtime such as containerd. Containerization simplifies the deployment and scaling of microservices and gives each service a consistent, predictable runtime environment.

Another important aspect of designing microservices for Kubernetes is service discovery. In a distributed microservices architecture, services need to be able to locate and communicate with each other. Kubernetes provides built-in service discovery through the Service abstraction: each Service receives a stable virtual IP address and a DNS name from the cluster DNS, so microservices can connect to each other by name rather than by Pod address.
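To make the discovery mechanism concrete, the sketch below builds the DNS name that cluster DNS assigns to a Service, which follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The default cluster domain is `cluster.local`; the service name `orders` and namespace `shop` are hypothetical.

```python
def service_dns_name(
    service: str, namespace: str = "default", cluster_domain: str = "cluster.local"
) -> str:
    """Return the fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service: str, port: int, namespace: str = "default") -> str:
    """Return an HTTP base URL that another microservice could call."""
    return f"http://{service_dns_name(service, namespace)}:{port}"


# Hypothetical service and namespace names.
print(service_dns_name("orders", "shop"))
print(service_url("orders", 80))
```

Within the same namespace, the short name (`orders`) also resolves, so the fully qualified form is mainly needed for calls across namespaces.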

Additionally, the communication between microservices should be designed with Kubernetes in mind. Microservices should be designed to be loosely coupled, with clear and well-defined boundaries, to facilitate independent deployment and scaling. This can be achieved through the use of asynchronous communication patterns, such as message queues or event-driven architectures, which align well with the Kubernetes model of managing and orchestrating distributed applications.

Furthermore, the design of microservices should take the Kubernetes resource model into account, including Pods, Deployments, and Services. Where possible, microservices should be stateless and self-contained, with persistent state kept in an external data store. Because any replica can then be stopped and replaced without data loss, Kubernetes can manage the service lifecycle freely and keep the service available and scalable.

By following these best practices in designing microservices for Kubernetes, organizations can leverage the full power of the Kubernetes platform to manage and orchestrate their distributed applications effectively. This, in turn, can lead to improved agility, resilience, and overall performance of the microservices-based infrastructure.

Deploying and Managing Microservices with Kubernetes

Deploying and managing microservices on the Kubernetes platform involves a structured and comprehensive approach to ensure the efficient and reliable operation of the distributed application. Kubernetes provides a range of tools and strategies to streamline the deployment and management of microservices, enabling organizations to leverage the full potential of the platform.

One of the primary methods for deploying microservices on Kubernetes is through the use of Kubernetes manifests, which are YAML files that define the desired state of the application. These manifests describe the various Kubernetes resources, such as Deployments, Services, and Ingress, that are required to run the microservices. By defining the application’s infrastructure as code, Kubernetes manifests enable consistent, repeatable, and automated deployments, ensuring that the microservices are deployed in a predictable and reliable manner.

In addition to Kubernetes manifests, organizations can also leverage Helm, a popular package manager for Kubernetes, to simplify the deployment and management of microservices. Helm charts provide a templated approach to packaging and deploying complex Kubernetes applications, making it easier to manage the dependencies and configurations of multiple microservices.

Once the microservices are deployed, Kubernetes provides a range of management capabilities to keep them healthy and performant. These include scaling microservices up or down based on demand, performing rolling updates to deploy new versions of the services, and exposing the state of the application through the API, health probes, events, and container logs.

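Scaling microservices in Kubernetes can be manual or automatic. Operators can change the replica count of a Deployment directly, or they can create a HorizontalPodAutoscaler that adjusts the number of replicas based on observed metrics. CPU and memory utilization are supported through the resource metrics API, which is typically provided by the metrics-server add-on, while metrics such as request volume require a custom or external metrics adapter. This allows the microservices to absorb fluctuations in user traffic while maintaining performance.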
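A minimal sketch of automatic scaling on CPU utilization, expressed as an `autoscaling/v2` HorizontalPodAutoscaler manifest. The Deployment name `orders`, the replica bounds, and the 70% target are hypothetical values; the sketch also assumes that a metrics source such as metrics-server is installed and that the target containers declare CPU requests, since utilization is measured relative to the request.

```python
import json


def hpa_manifest(
    deployment: str, min_replicas: int, max_replicas: int, cpu_target_percent: int
) -> dict:
    """Build a HorizontalPodAutoscaler that scales a Deployment on average CPU."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            # The workload whose replica count the autoscaler adjusts.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_target_percent,
                        },
                    },
                }
            ],
        },
    }


# Hypothetical values: keep between 2 and 10 replicas, aiming for 70% CPU.
orders_hpa = hpa_manifest("orders", min_replicas=2, max_replicas=10, cpu_target_percent=70)
print(json.dumps(orders_hpa, indent=2))
```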

Similarly, Kubernetes simplifies the process of rolling out updates to microservices. When the Pod template of a Deployment changes, Kubernetes replaces old Pods with new ones incrementally, bounded by the Deployment's `maxSurge` and `maxUnavailable` settings, so that the application remains available and the impact on end-users is minimized.
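The pace of a rolling update is bounded by the Deployment's `maxSurge` and `maxUnavailable` settings, both of which default to 25%. When given as percentages, `maxSurge` is rounded up and `maxUnavailable` is rounded down. The following sketch works through that arithmetic; the function name and return shape are illustrative, not part of any Kubernetes API.

```python
def rolling_update_bounds(
    replicas: int, max_surge_pct: int = 25, max_unavailable_pct: int = 25
) -> dict:
    """Compute how many Pods may exist or be missing during a rolling update."""
    # maxSurge rounds up (ceiling division on integers avoids float error).
    surge = -(-replicas * max_surge_pct // 100)
    # maxUnavailable rounds down.
    unavailable = replicas * max_unavailable_pct // 100
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


# With 10 replicas and the defaults: up to 13 Pods in total, at least 8 available.
print(rolling_update_bounds(10))
```

A smaller `maxUnavailable` makes the rollout more conservative at the cost of speed, while a larger `maxSurge` speeds it up at the cost of temporary extra capacity.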

Monitoring and troubleshooting Kubernetes microservices is also a crucial aspect of the management process. Kubernetes itself exposes container logs, events, and basic resource metrics, and it is commonly combined with external logging, metrics, and tracing tools that give developers and operators deeper visibility into the health and performance of the microservices. This allows for proactive identification and resolution of issues, supporting the overall reliability and stability of the Kubernetes-based microservices infrastructure.

Ensuring Resilience and High Availability with Kubernetes

Resilience and high availability are critical concerns for distributed microservices, and Kubernetes provides several features for building highly available, fault-tolerant systems.

One of the key features is self-healing. Kubernetes controllers continuously compare the actual state of the cluster with the desired state and take corrective action when the two differ. For example, if a container crashes or fails its liveness probe, the kubelet restarts it. If a Pod managed by a Deployment is lost, for instance because its node fails, the controller creates a replacement Pod, which the scheduler places on a healthy node so that the service remains available to end-users.
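The self-healing behavior described above follows a reconciliation pattern: compare the desired state with the observed state and act on the difference. The following toy simulation illustrates that idea only. It is not how Kubernetes controllers are implemented, and the class and Pod names are hypothetical.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ToyReplicaController:
    """Toy model of a controller that keeps `desired` Pods running."""

    desired: int
    pods: set = field(default_factory=set)
    _ids: itertools.count = field(
        default_factory=lambda: itertools.count(1), repr=False
    )

    def reconcile(self) -> list:
        """Create or remove Pods until the observed count matches `desired`."""
        created = []
        while len(self.pods) < self.desired:
            name = f"orders-{next(self._ids)}"  # hypothetical Pod name
            self.pods.add(name)
            created.append(name)
        while len(self.pods) > self.desired:
            self.pods.remove(sorted(self.pods)[-1])
        return created


controller = ToyReplicaController(desired=3)
controller.reconcile()                  # initial rollout creates three Pods
controller.pods.discard("orders-2")     # simulate a Pod failure
replacements = controller.reconcile()   # the loop notices the gap and fills it
print(replacements, sorted(controller.pods))
```

Note that the failed Pod is not revived: a new Pod with a new identity takes its place, which is one reason stateless services are easier to run on Kubernetes.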

Load balancing is another crucial aspect of ensuring high availability in a Kubernetes environment. Kubernetes Service resources abstract the network access to microservices, providing a stable and load-balanced entry point. This allows Kubernetes to distribute incoming traffic across multiple instances of a microservice, ensuring that the application can handle increased workloads without compromising performance or availability.

Kubernetes also helps microservices tolerate infrastructure failures and unexpected spikes in traffic. Running several replicas of a service across multiple nodes means that the loss of one node does not take the service down, and horizontal pod autoscaling can adjust the number of replicas based on metrics such as CPU utilization or, with a custom metrics adapter, request volume. Together these features help the application handle increased demand without downtime or degraded performance.

Furthermore, rolling updates enable organizations to roll out new versions of their microservices safely and incrementally. Canary deployments are not a built-in Deployment strategy, but they can be approximated by running a second, smaller Deployment behind the same Service, or implemented with additional tooling such as a service mesh or a progressive delivery controller. Both approaches reduce the risk of disruption and give end-users a smoother transition between versions.

By leveraging Kubernetes’ resilience and high availability features, organizations can build microservices-based applications that are highly reliable, scalable, and fault-tolerant. This, in turn, helps to ensure a consistent and positive user experience, even in the face of unexpected challenges or spikes in demand.

Optimizing Kubernetes for Microservices Performance

Once microservices run on Kubernetes, their performance depends heavily on how the platform is configured. Kubernetes provides several features that can be tuned for this purpose.

One of the key aspects of performance optimization is effective resource management. Kubernetes allows CPU and memory allocations to be set for each container through resource requests and limits. Requests tell the scheduler how much capacity to reserve when placing a Pod on a node. Limits cap what the container may use at runtime: a container that exceeds its CPU limit is throttled, and one that exceeds its memory limit is terminated. Setting appropriate values ensures that each microservice receives the resources it needs while preventing one workload from starving others on the same node.
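A short sketch of resource requests and limits on a container, together with a helper that adds up the CPU the scheduler would reserve across replicas. The container name, image, and quantities are hypothetical, and the helper handles only the two common CPU notations (millicores such as `250m` and whole or fractional cores such as `1` or `0.5`).

```python
def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '0.5' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


# Hypothetical container spec fragment with requests and limits.
orders_container = {
    "name": "orders",
    "image": "registry.example.com/orders:1.0.0",
    "resources": {
        "requests": {"cpu": "250m", "memory": "256Mi"},  # reserved at scheduling time
        "limits": {"cpu": "500m", "memory": "512Mi"},    # enforced at runtime
    },
}


def total_requested_cpu(container: dict, replicas: int) -> int:
    """Total CPU (in millicores) reserved across all replicas."""
    per_pod = cpu_to_millicores(container["resources"]["requests"]["cpu"])
    return per_pod * replicas


print(total_requested_cpu(orders_container, replicas=3))  # 750
```

Summing requests in this way gives a rough lower bound on the cluster capacity a service needs before any autoscaling takes place.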

Network optimization is another important factor in maximizing the performance of Kubernetes microservices. Pod networking is supplied by a network plugin (CNI), and the choice of plugin, for example one based on an overlay network versus one that uses native routing, affects throughput and latency. Service load balancing can also be tuned. These choices matter most for microservices that communicate and exchange data frequently with other components of the distributed application.

Scaling is also a crucial aspect of performance optimization. The Horizontal Pod Autoscaler (HPA), which is built into Kubernetes, adjusts the number of replicas of a microservice based on observed metrics such as CPU utilization or, with a custom metrics adapter, request volume. The Vertical Pod Autoscaler (VPA), which is installed separately as an add-on, adjusts the CPU and memory requests of the containers instead. Used appropriately, these autoscalers let microservices handle fluctuations in user demand without compromising performance.
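The horizontal autoscaler's core calculation is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that formula and the min/max clamp. The real controller also handles unready Pods, missing metrics, and stabilization windows, which are omitted here, and the default bounds in the function signature are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified form of the HPA replica calculation."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU against a 60% target: scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))
```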

Additionally, Kubernetes provides advanced scheduling features that can be leveraged to optimize the placement of microservices on the underlying infrastructure. By considering factors like node affinity, taints, and tolerations, organizations can ensure that microservices are deployed on the most suitable nodes, taking into account resource availability, proximity, and other constraints.
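As an illustration of these scheduling controls, the sketch below builds the `affinity` and `tolerations` fields of a Pod spec. The node label `workload-type=latency-sensitive` and the taint `dedicated=orders` are hypothetical; real clusters define their own labels and taints.

```python
import json


def scheduling_constraints(
    node_label_key: str, node_label_value: str, taint_key: str, taint_value: str
) -> dict:
    """Build Pod spec fields that pin a Pod to labeled nodes and tolerate a taint."""
    return {
        "affinity": {
            "nodeAffinity": {
                # Hard requirement: only schedule onto nodes with the given label.
                "requiredDuringSchedulingIgnoredDuringExecution": {
                    "nodeSelectorTerms": [
                        {
                            "matchExpressions": [
                                {
                                    "key": node_label_key,
                                    "operator": "In",
                                    "values": [node_label_value],
                                }
                            ]
                        }
                    ]
                }
            }
        },
        # Allow scheduling onto nodes tainted with key=value:NoSchedule.
        "tolerations": [
            {
                "key": taint_key,
                "operator": "Equal",
                "value": taint_value,
                "effect": "NoSchedule",
            }
        ],
    }


constraints = scheduling_constraints(
    "workload-type", "latency-sensitive", "dedicated", "orders"
)
print(json.dumps(constraints, indent=2))
```

Affinity attracts Pods to particular nodes, while taints repel Pods that lack a matching toleration, so the two are often combined to dedicate nodes to a workload.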

Furthermore, Kubernetes offers a range of tools and integrations that can be used to monitor and analyze the performance of microservices. This includes the use of metrics, logging, and tracing solutions, which provide valuable insights into the resource utilization, latency, and overall health of the microservices. By leveraging these observability features, organizations can identify and address performance bottlenecks, ensuring the optimal operation of their Kubernetes-based microservices infrastructure.

By implementing these performance optimization strategies, organizations can unlock the full potential of Kubernetes in managing their microservices-based applications, leading to improved scalability, responsiveness, and overall user experience.

Securing Kubernetes Microservices

Security is a critical concern for distributed systems running on Kubernetes. The platform provides a range of features and best practices that can be used to protect microservices.

One of the fundamental security considerations in a Kubernetes environment is access control. Kubernetes offers a role-based access control (RBAC) system that allows administrators to define granular permissions on resources such as Pods, Deployments, and Services. A Role lists the allowed verbs on specific resources, and a RoleBinding grants that Role to users, groups, or service accounts. By implementing RBAC policies, organizations can ensure that only authorized users and processes can interact with the microservices.
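A minimal RBAC sketch: a namespaced Role that allows read-only access to Pods and their logs, bound to a service account. The namespace `shop`, the role name `pod-reader`, and the service account `orders-debugger` are hypothetical names used for illustration.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def read_only_pod_role(namespace: str, role_name: str = "pod-reader") -> dict:
    """Build a Role that permits reading Pods and Pod logs in one namespace."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where Pods live
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role_to_service_account(role: dict, service_account: str) -> dict:
    """Build a RoleBinding that grants `role` to a service account."""
    name = role["metadata"]["name"]
    namespace = role["metadata"]["namespace"]
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{name}-{service_account}", "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {"apiGroup": RBAC_API_GROUP, "kind": "Role", "name": name},
    }


pod_reader = read_only_pod_role("shop")
binding = bind_role_to_service_account(pod_reader, "orders-debugger")
print(json.dumps([pod_reader, binding], indent=2))
```

Because the rule contains no write verbs, a process running under this service account can inspect Pods but cannot create, modify, or delete them.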

Network security is another crucial aspect of securing Kubernetes microservices. By default, all Pods in a cluster can communicate with each other. Network policies define rules that restrict traffic between microservices, provided the cluster's network plugin enforces them. This allows organizations to move toward a zero-trust network model, in which communication is limited to the connections each microservice actually needs, reducing the attack surface and the risk of unauthorized access.
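The following sketch shows a NetworkPolicy that admits ingress traffic to one microservice only from a single named client. The labels `app: orders` and `app: frontend` and port 8080 are hypothetical, and the policy has an effect only if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def allow_ingress_from(target_app: str, client_app: str, port: int) -> dict:
    """Build a NetworkPolicy letting only `client_app` Pods reach `target_app`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{client_app}"},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only Pods with this label (same namespace) may connect.
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


orders_policy = allow_ingress_from("orders", "frontend", 8080)
print(json.dumps(orders_policy, indent=2))
```

Once any ingress policy selects a Pod, traffic that no policy allows is dropped, so this single rule is enough to block other clients from reaching the `orders` Pods.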

Container security is also a key consideration when running microservices on Kubernetes. Each Pod and container can declare a security context that controls settings such as the user the process runs as, whether privilege escalation is allowed, which Linux capabilities are granted, and which SELinux or seccomp profile applies. By applying these controls, organizations can harden the containers that run their microservices and limit the damage a compromised container can do.
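A hedged example of a restrictive container security context. The field names are standard `securityContext` fields, while the specific combination, the container name, and the image are assumptions for illustration; some applications need a writable filesystem or specific capabilities and would have to relax these settings.

```python
import json


def hardened_security_context() -> dict:
    """Build a restrictive container-level securityContext."""
    return {
        "runAsNonRoot": True,               # refuse to start as UID 0
        "allowPrivilegeEscalation": False,  # block setuid-style escalation
        "readOnlyRootFilesystem": True,     # container cannot modify its image
        "capabilities": {"drop": ["ALL"]},  # remove all Linux capabilities
        "seccompProfile": {"type": "RuntimeDefault"},
    }


# Hypothetical container definition using the hardened context.
orders_container = {
    "name": "orders",
    "image": "registry.example.com/orders:1.0.0",
    "securityContext": hardened_security_context(),
}
print(json.dumps(orders_container, indent=2))
```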

Additionally, Kubernetes supports the integration of external security tools and solutions, such as vulnerability scanners, image registries, and security monitoring platforms. These integrations enable organizations to extend the security capabilities of the Kubernetes platform, allowing for comprehensive vulnerability management, image scanning, and real-time security monitoring of the microservices-based infrastructure.

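Furthermore, Kubernetes supports encryption both at rest and in transit, although not all of it is enabled by default. Control plane components communicate over TLS, but traffic between microservices is encrypted only if the applications themselves use TLS (for example, HTTPS) or a service mesh adds mutual TLS. Secrets are stored base64-encoded rather than encrypted unless encryption at rest is configured for the API server, so access to them should also be restricted through RBAC.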
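The distinction between encoding and encryption is easy to demonstrate. The sketch below builds a Secret manifest and shows that anyone who can read the manifest can recover the value; the secret name `orders-db` and the password are made-up examples.

```python
import base64


def secret_manifest(name: str, values: dict) -> dict:
    """Build an Opaque Secret; values are base64-encoded as the API expects."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


# Hypothetical secret; never commit real credentials to a manifest.
db_secret = secret_manifest("orders-db", {"password": "s3cr3t"})
encoded = db_secret["data"]["password"]
decoded = base64.b64decode(encoded).decode("utf-8")
print(encoded, "->", decoded)  # czNjcjN0 -> s3cr3t
```

Since decoding requires no key, protecting Secrets depends on restricting who can read them and on enabling encryption at rest for the cluster's data store.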

By leveraging the security features and best practices offered by Kubernetes, organizations can build a robust and secure microservices-based infrastructure, mitigating the risks associated with distributed applications and ensuring the overall protection of their critical business assets.

Monitoring and Troubleshooting Kubernetes Microservices

Effective monitoring and troubleshooting are essential for microservices running on Kubernetes, and the platform works with a range of tools for this purpose.

One of the key aspects of monitoring Kubernetes microservices is logging. Kubernetes captures what each container writes to standard output and standard error, and a log collector such as Fluentd can forward those logs to a central backend such as Elasticsearch or Splunk. Centralized logs let developers and operators diagnose issues quickly by searching for error messages, signs of performance bottlenecks, and other relevant information across all services.

In addition to logging, metrics are central to monitoring. Kubernetes components expose metrics in the Prometheus text format, and Prometheus is commonly deployed in clusters to collect and analyze metrics such as CPU and memory usage, network traffic, and application-specific measurements. By leveraging these metrics, organizations can gain valuable insights into the performance and resource utilization of their Kubernetes microservices, enabling them to make informed decisions about scaling, resource allocation, and optimization.

Tracing is another important aspect of monitoring Kubernetes microservices. Distributed tracing solutions, such as Jaeger or Zipkin, can be integrated with Kubernetes to provide end-to-end visibility into the flow of requests across the microservices. This allows developers to identify performance bottlenecks, understand the dependencies between microservices, and troubleshoot complex issues that may arise in the distributed application.

When it comes to troubleshooting Kubernetes microservices, the `kubectl` command-line tool covers most day-to-day investigation. Operators can inspect the status of Pods, Deployments, and Services with `kubectl get` and `kubectl describe`, read container output with `kubectl logs`, and run commands inside a running container with `kubectl exec`. Additionally, Kubernetes can roll a Deployment back to a previous revision, which is invaluable when an issue is traced to a recent release.

Furthermore, Kubernetes works with third-party service meshes such as Istio and Linkerd. A service mesh manages service-to-service communication and can provide additional monitoring and troubleshooting capabilities, such as per-request metrics and traces, that are specific to the microservices architecture.

By leveraging the monitoring and troubleshooting features offered by Kubernetes, organizations can ensure the overall health and performance of their microservices-based applications, enabling them to quickly identify and resolve issues, optimize resource utilization, and deliver a reliable and responsive user experience.