Understanding Microservices Architecture

Microservices are an approach to software architecture in which developers build a suite of small, independent services instead of a single monolithic application. Each service focuses on a specific business function, whereas the components of a monolith are tightly coupled. The advantages are substantial. Individual services can be scaled independently based on demand. Independent deployments reduce the risk of widespread outages. Fault isolation helps keep a single service failure from cascading through the entire system. Teams can also choose the technology best suited to each service. However, managing a system of interconnected services presents challenges. The added complexity calls for capable monitoring and deployment tooling, distributed tracing becomes crucial for debugging and performance analysis, and inter-service communication needs a deliberate strategy. Kubernetes addresses many of these challenges by orchestrating and managing these decentralized components. The architecture is demanding, but it can deliver significant long-term benefits in flexibility and maintainability.

Adopting a microservices architecture offers significant benefits for modern applications. Deploying services independently allows faster release cycles and improved agility, which matters when business needs and market demands change quickly. Scaling services independently helps with variable workloads and resource utilization: a monolith often has to be scaled as a whole even when only one component is under heavy load, while a microservice can be scaled on its own. Fault isolation is another key advantage. A failing microservice does not necessarily bring down the entire application, which improves availability and resilience, especially in mission-critical systems and as the number of services grows. The flexibility of microservices, combined with the management capabilities of Kubernetes, forms a strong foundation for building scalable and resilient applications, and the resulting operational efficiency can reduce downtime and long-term costs.

The transition to microservices requires careful consideration of inter-service communication, because the application only works if its independent services can talk to each other efficiently. Communication strategies must balance performance, reliability, and complexity. Both synchronous and asynchronous patterns exist, each with its own strengths and weaknesses, and the right choice depends on the needs of the individual services and the overall application architecture. In either case, communication relies on well-defined interfaces and robust error handling. Tools like service meshes can improve communication reliability, resilience, and security by providing traffic management, observability, and security policies, which simplifies managing communication in a complex microservices architecture. A well-planned communication strategy supports the efficiency, reliability, and scalability of the entire system.

Kubernetes Fundamentals: Containers and Orchestration

Kubernetes simplifies the deployment and management of containerized applications. Containers, often built with Docker, package an application together with its dependencies, which keeps execution consistent across environments. Kubernetes orchestrates these containers, automating deployment, scaling, and management. Understanding this orchestration is crucial for deploying microservices effectively. Kubernetes handles much of the complexity of running a distributed system, allowing developers to focus on application logic rather than infrastructure.

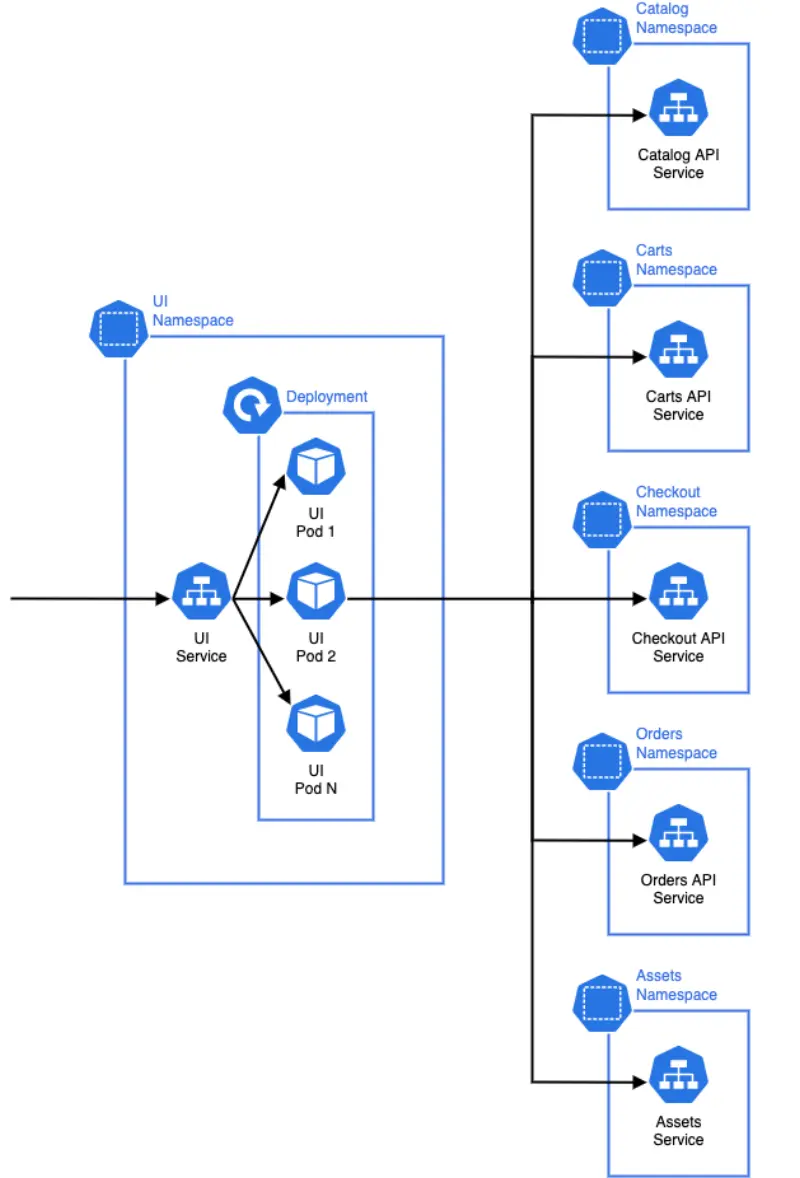

Key Kubernetes concepts include Pods, the smallest deployable units, each containing one or more containers. Deployments manage Pod replicas, keeping the desired number running for high availability. Services provide stable network access to Pods. Namespaces isolate resources, which helps organize different projects or teams within a Kubernetes cluster. These components work together to give microservice applications a robust and scalable foundation, and the combination of containers and Kubernetes offers a great deal of flexibility and control over how modern applications are deployed and managed.

Consider a microservice that experiences increased traffic. With autoscaling configured, Kubernetes increases the number of Pods running the service to handle the load. This horizontal scaling keeps the service responsive during peak demand. Resources can also be adjusted vertically by increasing the CPU and memory assigned to individual Pods. Kubernetes monitors resource usage and adjusts scaling according to the policies you define, which optimizes resource utilization. This dynamic scaling is a key advantage of using Kubernetes to manage applications, especially microservices and other high-traffic workloads that need to be resilient and adaptable.

Deploying Your First Kubernetes Microservice: A Practical Guide

This section walks through deploying a simple “hello world” microservice to a Kubernetes cluster. The tutorial uses Minikube for local development, which keeps things straightforward for beginners. First, ensure Minikube is installed and running. Then create a simple Dockerfile for your application; it builds a container image containing your application code. Push the image to a container registry (like Docker Hub) so that your Kubernetes cluster can pull it. Next, create a Kubernetes Deployment YAML file. This file defines how the application runs in the cluster, including the number of replicas and other deployment parameters, and it tells Kubernetes to create and manage the Pods that run the container image. Apply the file to your Minikube cluster with the kubectl apply command, and monitor the Deployment's progress with kubectl get pods. Once the Pods are running, access the application through a Kubernetes Service, and remember to expose that Service appropriately if you need external access.
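As a rough illustration of what such a Deployment file contains, the sketch below builds the manifest as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The application name, image reference, and port are placeholders invented for this example, not values from the tutorial.

```python
import json

# Hypothetical values: replace with your own application name and image.
APP_NAME = "hello-world"
IMAGE = "example/hello-world:1.0"


def build_deployment(name: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Return an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


deployment = build_deployment(APP_NAME, IMAGE)
manifest_json = json.dumps(deployment, indent=2)

if __name__ == "__main__":
    # Save the output as deployment.json, then run: kubectl apply -f deployment.json
    print(manifest_json)
```

After applying the file, `kubectl get pods` should list one Pod per replica. One possible way to reach the application on Minikube, assuming the placeholder name above, is `kubectl expose deployment hello-world --type=NodePort --port=8080` followed by `minikube service hello-world`.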

Now consider a slightly more complex example. Suppose your application needs environment variables or other configuration. You can keep these settings in ConfigMaps and reference them from the Deployment, which keeps configuration separate from the container image and easy to change. Kubernetes also makes the Deployment itself easy to modify and scale. When you update the Deployment configuration, Kubernetes handles the rollout automatically, which minimizes downtime and gives a smooth upgrade experience. You can scale the microservice horizontally by increasing the number of replicas in the Deployment. Traffic sent through a Service is then distributed across the instances, improving availability and efficiency.
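A minimal sketch of the ConfigMap idea follows, again using Python dictionaries in place of YAML. The ConfigMap name, its keys, and the image are hypothetical. The `envFrom` field with a `configMapRef` exposes every key of the ConfigMap to the container as an environment variable.

```python
import json

# Hypothetical ConfigMap: the name and keys are illustrative only.
# ConfigMap data values must be strings.
config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "hello-config"},
    "data": {"GREETING": "hello", "LOG_LEVEL": "info"},
}


def container_with_config(name: str, image: str, config_map_name: str) -> dict:
    """Return a container spec that loads all ConfigMap keys as environment variables."""
    return {
        "name": name,
        "image": image,
        "envFrom": [{"configMapRef": {"name": config_map_name}}],
    }


container = container_with_config(
    "hello-world",
    "example/hello-world:1.0",  # assumed image reference
    config_map["metadata"]["name"],
)

if __name__ == "__main__":
    print(json.dumps(config_map, indent=2))
    print(json.dumps(container, indent=2))
```

The container dictionary would take the place of the entry in the Deployment's `containers` list. Note that Pods read environment variables at startup, so a changed ConfigMap value generally only takes effect once the Pods are restarted or rolled out again.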

Monitoring your microservices is crucial. Use kubectl top nodes and kubectl top pods to check resource consumption; both commands need a metrics source such as Metrics Server running in the cluster. Tools such as Prometheus and Grafana offer more detailed insight into application performance, which helps you make informed decisions about resource allocation and scaling. For more sophisticated scenarios, consider a managed Kubernetes service from a cloud platform, such as Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS). These managed services often streamline deployment, security, and management, freeing up time and resources while supporting scaling and high availability.

Scaling and Managing Kubernetes Microservices

Kubernetes provides robust mechanisms for scaling microservices to handle fluctuating demand. Horizontal scaling adds more replicas of your application Pods, distributing the workload across multiple instances, which supports high availability and responsiveness under heavy load. Vertical scaling, on the other hand, increases the resources (CPU, memory) allocated to existing Pods. Kubernetes supports both approaches, so you can adapt as needs change.

The Horizontal Pod Autoscaler (HPA) is a Kubernetes feature that automates horizontal scaling. The HPA monitors a metric such as CPU or memory utilization across your Pods and compares it with a target you define. If utilization rises above the target, the HPA adds replicas. Conversely, if utilization drops below the target, it scales down, reducing resource consumption and costs. Custom metrics can also be integrated for more granular control over scaling based on specific application needs. This automated approach helps ensure your microservices have the resources they need. Monitoring resource usage with tools integrated into your cluster remains essential for fine-tuning scaling strategies.
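The scaling decision can be sketched with the formula given in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). The function below is a simplified model of that calculation, not the controller's actual code. The replica bounds are example parameters, and the 0.1 tolerance mirrors the documented default, within which no scaling happens.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA calculation: scale in proportion to metric / target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target.
    print(desired_replicas(4, 90, 60))
    # 4 replicas at 30% average CPU with a 60% target.
    print(desired_replicas(4, 30, 60))
```

The real autoscaler also accounts for Pods that are not yet ready, missing metrics, and stabilization windows, all of which this sketch leaves out.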

Efficient scaling is a cornerstone of a successful microservice deployment on Kubernetes. Understanding the trade-offs between horizontal and vertical scaling, and using the HPA where it fits, leads to better resource utilization and cost efficiency. Continuous monitoring reveals resource consumption patterns, which informs scaling decisions and helps prevent performance bottlenecks. A well-scaled architecture is resilient and adaptable: it can absorb unexpected surges in demand while maintaining a high level of service availability.

Ensuring Microservice Reliability with Kubernetes

High availability and fault tolerance are critical for any production-ready microservice architecture, and Kubernetes provides several mechanisms to achieve them. ReplicaSets, which Deployments create and manage for you, ensure that a specified number of Pod replicas are always running. If a Pod fails, Kubernetes automatically creates a new one to replace it, which maintains service availability. StatefulSets are a separate workload type for stateful applications. They give each Pod a stable, ordered identity and its own persistent storage, so a replacement Pod reattaches to the same data after a failure or upgrade. These features are essential for building reliable microservices.
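The replacement behavior can be pictured as a control loop that compares the observed Pods with the desired count and corrects the difference. The following is a toy model of one such pass. The `Pod` class, the `reconcile` function, and the naming scheme are all invented for illustration and do not reflect the real controller's implementation.

```python
from dataclasses import dataclass
from itertools import count
from typing import Iterator, List


@dataclass
class Pod:
    name: str
    healthy: bool = True


def reconcile(pods: List[Pod], desired: int, ids: Iterator[int]) -> List[Pod]:
    """One pass of a simplified control loop.

    Failed Pods are dropped, new Pods are created until the desired count
    is reached, and surplus Pods are removed.
    """
    running = [p for p in pods if p.healthy]
    while len(running) < desired:
        running.append(Pod(name=f"web-{next(ids)}"))
    return running[:desired]


if __name__ == "__main__":
    ids = count(4)  # next free suffix for new Pod names
    observed = [Pod("web-1"), Pod("web-2", healthy=False), Pod("web-3")]
    for pod in reconcile(observed, desired=3, ids=ids):
        print(pod.name)
```

Here the failed `web-2` is dropped and a fresh Pod is created in its place, which is the essence of what the text describes: the desired state is declared once, and the system keeps working toward it.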

Rolling updates are a cornerstone of reliable deployments. Kubernetes updates your application gradually rather than replacing all Pods simultaneously: it updates them one by one or in batches, which minimizes disruption and allows a quick rollback if issues arise. Health checks are vital for identifying failing Pods and triggering automatic restarts or replacements. Kubernetes supports liveness, readiness, and startup probes. A failing liveness probe causes the container to be restarted, and a Pod that fails its readiness probe stops receiving traffic from Services, so only healthy Pods serve requests. Properly configured health checks are a crucial part of building resilient microservices.
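To make this concrete, here is a hedged sketch of the Deployment fields involved, written as Python dictionaries. The probe paths (`/ready`, `/healthz`), the port, and the image tag are assumptions for illustration; the application has to serve whatever endpoints the probes point at.

```python
import json

HEALTH_PORT = 8080  # assumed container port

# Update one Pod at a time without dropping below the desired replica count.
rolling_update_strategy = {
    "type": "RollingUpdate",
    "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
}


def http_probe(path: str, port: int = HEALTH_PORT, initial_delay_seconds: int = 0,
               period_seconds: int = 10, failure_threshold: int = 3) -> dict:
    """Return an HTTP GET probe definition."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay_seconds,
        "periodSeconds": period_seconds,
        "failureThreshold": failure_threshold,
    }


container = {
    "name": "hello-world",
    "image": "example/hello-world:1.1",  # hypothetical new version
    # Readiness gates traffic; liveness triggers container restarts.
    "readinessProbe": http_probe("/ready"),
    "livenessProbe": http_probe("/healthz", initial_delay_seconds=10),
}

if __name__ == "__main__":
    print(json.dumps({"strategy": rolling_update_strategy, "container": container}, indent=2))
```

The strategy belongs under the Deployment's `spec.strategy`, and the container goes in the Pod template. During a rollout, `kubectl rollout status deployment/hello-world` reports progress and `kubectl rollout undo deployment/hello-world` reverts to the previous revision, assuming the placeholder Deployment name used here.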

Strategies for handling failures go beyond simple Pod restarts. Consider implementing circuit breakers to prevent cascading failures. A circuit breaker stops sending requests to a service once it has failed repeatedly, then lets traffic through again after a pause, which protects the rest of your system. Backoff strategies introduce increasing delays between retry attempts, reducing the load on a potentially overloaded service. Proper error handling and logging across your microservices are vital for monitoring and debugging. Effective monitoring tools provide insight into application health, resource utilization, and failure rates, and they are indispensable for identifying and resolving potential issues early.
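Both patterns live in application code or a library rather than in Kubernetes itself. The sketch below shows one simple way to implement them; the class and function names, thresholds, and timings are illustrative choices, and production systems usually rely on an established resilience library or a service mesh instead.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is rejected untried."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call rejected")
            # Timeout elapsed: half-open, so one trial call is let through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # Open on reaching the threshold, or re-open after a failed trial.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


def backoff_delays(retries, base=0.5, cap=30.0, jitter=True, rng=random.random):
    """Exponential backoff delays in seconds, capped, with optional full jitter."""
    delays = []
    for attempt in range(retries):
        delay = min(cap, base * 2 ** attempt)
        delays.append(delay * rng() if jitter else delay)
    return delays


if __name__ == "__main__":
    print(backoff_delays(5, jitter=False))
```

A caller would wrap each outbound request in `breaker.call(...)` and sleep for the next backoff delay between retries. Jitter spreads retries from many clients over time so they do not all hit a recovering service at once.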

Networking and Inter-service Communication in Kubernetes Microservices

Effective inter-service communication is crucial for any microservice architecture, and Kubernetes provides several mechanisms to facilitate it. Kubernetes Services act as an abstraction layer, providing a stable IP address and DNS name for a set of Pods. Microservices can therefore communicate without knowing the IP addresses of individual Pods, which simplifies management and improves resilience, because Pods can be scaled or restarted without breaking communication. The choice between synchronous and asynchronous communication depends on the needs of your application. Synchronous communication, often using REST APIs or gRPC, suits calls that need an immediate response. Asynchronous communication through a message broker (like Kafka or RabbitMQ) is better suited to tasks that do not require an immediate response and can tolerate eventual consistency. Careful selection is key to performance and reliability.
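As a small illustration, the sketch below builds a ClusterIP Service manifest and the DNS name other Pods would use to reach it. The service and namespace names are hypothetical, and `cluster.local` is the default cluster domain, which an individual cluster may override.

```python
def build_service(name: str, port: int, target_port: int, namespace: str = "default") -> dict:
    """Return a v1 Service manifest that routes to Pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": name},
            # Clients connect to `port`; traffic is forwarded to `targetPort` on the Pods.
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(name: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the in-cluster DNS name of a Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    service = build_service("orders", port=80, target_port=8080, namespace="shop")
    print(service["spec"])
    print("http://" + service_dns_name("orders", "shop"))
```

A caller in the same namespace can usually use the short name (`orders`) on its own; the fully qualified form is mainly needed across namespaces.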

Ingress controllers extend Kubernetes networking to the outside world. They act as a reverse proxy and load balancer, routing external traffic to the appropriate Services within the cluster, and they offer features like TLS termination, path-based routing, and advanced load balancing strategies. They are a common way to expose microservices to the internet securely and efficiently. For complex communication patterns and advanced traffic management, a service mesh like Istio or Linkerd can be beneficial. These tools add traffic routing, observability, and security policies, which improve the reliability and manageability of your infrastructure. They offer centralized control over the communication flow between microservices and provide insight into the performance and health of the distributed system, which helps with troubleshooting and optimization. They are particularly helpful for managing microservices at scale.
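Path-based routing with TLS termination can be sketched as an Ingress manifest, shown here as a Python dictionary. The host name, service names, ports, and TLS secret name are placeholders, and the manifest only has an effect in a cluster where an Ingress controller is installed.

```python
import json
from typing import Dict, Optional, Tuple


def build_ingress(name: str, host: str, routes: Dict[str, Tuple[str, int]],
                  tls_secret: Optional[str] = None) -> dict:
    """Return a networking.k8s.io/v1 Ingress mapping URL prefixes to Services."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in routes.items()
    ]
    spec = {"rules": [{"host": host, "http": {"paths": paths}}]}
    if tls_secret:
        # The named Secret is expected to hold the TLS certificate and key.
        spec["tls"] = [{"hosts": [host], "secretName": tls_secret}]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": spec,
    }


ingress = build_ingress(
    "shop-ingress",
    "shop.example.com",  # hypothetical host
    {"/orders": ("orders", 80), "/users": ("users", 80)},
    tls_secret="shop-tls",
)

if __name__ == "__main__":
    print(json.dumps(ingress, indent=2))
```

With this configuration, requests to `/orders` and `/users` on the same host reach two different Services, so each microservice can be deployed and scaled separately behind one external entry point.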

Understanding these networking concepts is essential for designing robust and scalable microservice architectures on Kubernetes. The communication pattern you choose and the tools you select (Kubernetes Services, Ingress controllers, and potentially a service mesh) determine how efficient and reliable inter-service communication will be. The right choices improve scalability, resilience, and security, and they have a significant effect on the overall performance and maintainability of the application.

Security Best Practices for Kubernetes Microservices

Securing microservices on Kubernetes requires a multi-layered approach. Network policies control traffic flow between Pods, preventing unauthorized access within the cluster. These policies define which Pods can communicate with each other, and they take effect only when the cluster's network plugin supports them. Implementing robust Role-Based Access Control (RBAC) is also crucial. RBAC grants specific permissions to users and service accounts, limiting access to sensitive resources and preventing unauthorized actions. This principle of least privilege is essential for securing your architecture. Finally, scan container images for vulnerabilities regularly, because insecure images can introduce significant risks. Automated vulnerability scanning tools can identify security flaws so that you can remediate them before deployment.
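The two controls can be illustrated with a pair of manifests, sketched here as Python dictionaries. All names, labels, the namespace, and the port are hypothetical. The NetworkPolicy admits traffic to the `orders` Pods only from Pods labeled `app: frontend`, and the Role grants read-only access to Pods in one namespace.

```python
import json

NAMESPACE = "shop"  # hypothetical namespace

network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "orders-allow-frontend", "namespace": NAMESPACE},
    "spec": {
        # The policy applies to Pods carrying this label.
        "podSelector": {"matchLabels": {"app": "orders"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }
        ],
    },
}

# Least privilege: read-only access to Pods, nothing else.
pod_reader_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": NAMESPACE},
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}],
}

# Bind the Role to a service account so that workloads using it gain the permissions.
pod_reader_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "orders-pod-reader", "namespace": NAMESPACE},
    "subjects": [{"kind": "ServiceAccount", "name": "orders", "namespace": NAMESPACE}],
    "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": "pod-reader"},
}

if __name__ == "__main__":
    for manifest in (network_policy, pod_reader_role, pod_reader_binding):
        print(json.dumps(manifest, indent=2))
```

Once a Pod is selected by any ingress NetworkPolicy, traffic not explicitly allowed by a policy is dropped, so it is worth testing policies in a non-production namespace first.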

Protecting the Kubernetes control plane itself is paramount, because it is the central component that manages the cluster. Secure access to the control plane with strong authentication and authorization mechanisms, and update Kubernetes components regularly to patch known vulnerabilities. Staying current with security updates is critical for mitigating emerging threats and maintaining the integrity of your deployments. Consider adding security monitoring tools that detect suspicious activity so that you can respond promptly to incidents. Real-time monitoring allows threats to be detected and mitigated early, which supports the continuous security of your infrastructure.

For additional protection, explore a service mesh like Istio or Linkerd. These tools provide advanced security features such as mutual TLS authentication, traffic encryption, and policy enforcement, and they simplify securing communication between your microservices. Properly configured, a service mesh significantly improves the overall security posture of the architecture. Remember that security is an ongoing process. Regular security audits and penetration testing are essential for identifying and addressing weaknesses, and continuously evaluating and improving your practices is vital for long-term security.

Choosing the Right Kubernetes Distribution for Microservices

Selecting the appropriate Kubernetes distribution is crucial for successful microservice deployments. The choice balances scalability needs, security requirements, and budget constraints. Managed Kubernetes services from cloud providers, such as Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS), provide significant advantages. They handle infrastructure management, leaving you to focus on application deployment and scaling. They often incorporate robust security features and automate many operational tasks, which simplifies managing your architecture. However, managed services typically incur higher costs than self-managed options, and the difference can become substantial as the scale and complexity of your environment grow.

Self-managed Kubernetes clusters offer greater control and customization. You can tailor the infrastructure to specific needs and tune resource utilization, which can make the solution more cost-effective. However, self-management requires dedicated expertise in system administration, networking, and security. Teams must handle cluster provisioning, maintenance, updates, and security patching. This operational overhead demands skilled personnel and can slow time-to-market for new features or updates. Proper planning is essential to mitigate operational risks and keep the self-managed environment stable over the long term. Careful consideration must also be given to the underlying infrastructure, whether on-premises or in a private cloud.

Ultimately, the optimal Kubernetes distribution depends on a careful evaluation of your organization's resources, technical expertise, and specific requirements. Factors to consider include the anticipated scale of the microservice architecture, the level of security required, budget limitations, and the availability of in-house expertise for managing Kubernetes. A thorough assessment of these factors will guide you toward a distribution that supports smooth and efficient deployment and operation. A managed service often simplifies the process, while self-management offers greater flexibility at a potential cost in operational complexity.