What Are Kubernetes Init Containers and Why Do They Matter?

Kubernetes init containers are specialized containers that run before the primary application containers in a Pod. They handle essential setup tasks, such as resolving dependencies or preparing configuration, so that the main application starts correctly. The core purpose of an init container is to prepare the environment before the main application begins, which makes deployments more consistent and startups more reliable. This is particularly important in modern microservices environments, where each service often has its own requirements. Init containers give every service a standard place for that setup work, which reduces startup errors and helps the application behave as expected from the start.

Init containers also help maintain order in the deployment process. They are responsible for pre-flight checks and setup operations, such as fetching data or running database migrations. Because every init container must run to completion before the application containers start, they act as a checkpoint: the application launches only once the environment is as needed. Without this step, startup can be unpredictable, with failures caused by missing configuration or unmet dependencies. A well-defined init container strategy therefore improves the stability and reliability of Kubernetes deployments, especially for complex applications.

Using init containers also promotes a clearer separation of concerns. The main application container deals only with its core functionality, while the init containers perform the necessary preparation. This separation makes the application easier to manage and troubleshoot, and it keeps the main container from becoming cluttered with setup logic or taking on multiple roles. Each init container can be responsible for a single task, which makes the deployment more organized and easier to understand. Over time, this improves the maintainability of the Kubernetes environment, reducing errors and improving uptime.

How to Implement a Kubernetes Init Container: A Practical Guide

Implementing a Kubernetes init container involves defining it within the Pod specification. The `initContainers` field is an array in which you specify one or more init containers. Each init container is defined much like a regular container. The `name` field identifies the init container, the `image` field specifies the container image to use, and the `command` or `args` fields specify what it runs. Resource requests and limits, such as CPU and memory, can be defined too. A simple init container might be used to clone data, for example. To do so, the `image` could be a `git` image, and its `command` could be `git clone

Another example of an init container configuration is a database migration. In this scenario, the init container uses an image equipped with a database migration tool, and its command invokes the migration process. For example, if you use Flyway as your migration tool, the init container could use an image that contains Flyway, and its command could be `flyway migrate`. This applies the schema changes before the main application starts, so the application finds the database schema it needs. Resource settings for init containers also matter. Allocate enough resources for them to complete their tasks, but avoid over-provisioning, which wastes capacity. Select images carefully as well: choose images that are as small as possible and contain only the tooling required for the initialization task. This helps keep Pod startup times to a minimum.
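The migration scenario above could be sketched roughly as follows. This is an illustration under assumptions, not a definitive setup: the Pod name, application image, database URL, and Secret name are hypothetical, the resource values are placeholders, and it assumes the migration scripts are already available to Flyway (for example through a derived image) and that the image's entrypoint is the `flyway` CLI.

```yaml
# Sketch only: names, URL, Secret, and resource values are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: app-with-migration
spec:
  initContainers:
    - name: db-migrate
      image: flyway/flyway          # pin a specific version tag in practice
      args: ["migrate"]             # assumes the entrypoint is the flyway CLI
      env:
        - name: FLYWAY_URL
          value: jdbc:postgresql://db.example.internal:5432/appdb
        - name: FLYWAY_USER
          valueFrom:
            secretKeyRef:
              name: db-credentials  # hypothetical Secret
              key: username
        - name: FLYWAY_PASSWORD
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: password
      resources:
        requests:
          cpu: 100m
          memory: 256Mi
        limits:
          cpu: 500m
          memory: 512Mi
  containers:
    - name: app
      image: example.com/my-app:1.0 # hypothetical application image
```

Passing credentials through a Secret rather than literal values keeps them out of the Pod manifest.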

Configuring init containers requires thinking about the task each container should perform. When a Pod has several init containers, they execute sequentially: the next init container starts only after the previous one has completed successfully. This allows for multi-step initialization in which each step depends on the one before it. The order of execution is the order in which the containers are listed in the `initContainers` array. Understanding how to define an init container and configure its fields lets you build more complex Kubernetes deployments and perform pre-startup tasks with a higher level of safety and reliability, so that your application starts with the context it needs to function.
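A minimal sketch of sequential execution, assuming hypothetical container names and a scratch `emptyDir` volume: the second init container only succeeds if the first one has already written its file, and the application container starts only after both have exited successfully.

```yaml
# Sketch only: all names and paths are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: ordered-init-demo
spec:
  volumes:
    - name: workdir
      emptyDir: {}
  initContainers:
    # Runs first because it is listed first.
    - name: step-1-write
      image: busybox
      command: ['sh', '-c', 'echo "prepared by step 1" > /work/ready.txt']
      volumeMounts:
        - name: workdir
          mountPath: /work
    # Starts only after step-1-write exits with status 0.
    # Exits non-zero if the file from step 1 is missing.
    - name: step-2-verify
      image: busybox
      command: ['sh', '-c', 'test -f /work/ready.txt && cat /work/ready.txt']
      volumeMounts:
        - name: workdir
          mountPath: /work
  containers:
    - name: app
      image: busybox
      command: ['sh', '-c', 'cat /work/ready.txt && sleep 3600']
      volumeMounts:
        - name: workdir
          mountPath: /work
```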

Common Use Cases for Init Containers in Kubernetes

Kubernetes init containers offer a versatile solution for various pre-startup tasks. One common use is preparing volume mounts and permissions, so that application containers have the access rights they need to storage volumes. Another frequent use case is database schema migration: an init container can execute migration scripts before the main application starts, so the database is ready when the application needs it. Init containers can also retrieve external configuration or secrets before the main application starts, which avoids hardcoding sensitive information into the application. A related task is retrieving TLS certificates, so that the application can use secure channels from the beginning. Init containers can run security-related checks too, verifying system integrity before the primary application runs and helping detect problems early in the deployment process. In each case, init containers simplify complex deployment scenarios by ensuring that the application starts with all required dependencies and configuration in place.
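As an illustration of the volume-permissions use case, here is a hedged sketch. The PVC name, UID/GID `1000`, and application image are assumptions made for the example, and it presumes the cluster's security policy allows the init container to run as root.

```yaml
# Sketch only: PVC name, UID/GID, and app image are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: volume-permissions-demo
spec:
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: app-data         # hypothetical PVC
  initContainers:
    - name: fix-permissions
      image: busybox
      # Hand ownership of the volume to the UID/GID the app runs as.
      command: ['sh', '-c', 'chown -R 1000:1000 /data']
      securityContext:
        runAsUser: 0                # root is needed to change ownership
      volumeMounts:
        - name: data
          mountPath: /data
  containers:
    - name: app
      image: example.com/my-app:1.0
      securityContext:
        runAsUser: 1000
      volumeMounts:
        - name: data
          mountPath: /data
```

For volume types that support it, setting `fsGroup` in the Pod-level `securityContext` can achieve a similar result without a privileged init container.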

Furthermore, consider an application that depends on external services. An init container can check that these services are running and reachable before the application starts, which reduces the risk of failures caused by missing dependencies. Init containers are also useful in multi-stage startup flows, where an early stage fetches data and prepares it for the next step. This approach provides isolation and clarity, making the stages easy to manage. They are used as well in environments that need network configuration applied dynamically: an init container can make the necessary networking adjustments so that the main container functions correctly in a complex network setup. By isolating these initial tasks in init containers, developers keep the main application lean and focused on its core functionality, which results in cleaner, more maintainable applications.
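A common way to wait for a dependency is a small polling loop, similar to the pattern shown in the Kubernetes documentation. In this sketch the Service name `db-service` and the application image are hypothetical. Note that a successful DNS lookup only shows that the Service exists, not that its backends are healthy, so a stricter check may be needed in practice.

```yaml
# Sketch only: db-service and the app image are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: wait-for-dependency-demo
spec:
  initContainers:
    - name: wait-for-db
      image: busybox
      # Poll until the Service name resolves in cluster DNS.
      command:
        - sh
        - -c
        - |
          until nslookup db-service; do
            echo "waiting for db-service"
            sleep 2
          done
  containers:
    - name: app
      image: example.com/my-app:1.0
```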

In addition to these examples, think about applications that require specialized tools or libraries at startup. An init container can install these requirements into a shared volume, since init containers and application containers do not otherwise share a filesystem. This gives the main container a consistent environment to run in. Consider also an application that requires an initial data load. An init container can load the data from a database or a file, so the main application starts with the correct datasets. This simplifies the main container’s logic, because the application no longer has to handle initial data loading itself. Init containers are valuable for complex deployment flows: they offer reliable, repeatable setup and handle many pre-startup tasks without adding overhead to the core application container.

Advanced Init Container Patterns and Best Practices

Exploring advanced patterns with Kubernetes init containers unlocks more sophisticated deployment strategies. Data sharing between init containers and application containers is usually achieved through shared volumes: an init container prepares data or configuration in the volume, and the application container then reads it. Another technique is to use multiple init containers sequentially, which lets you break complex setup into manageable steps. For instance, one init container might fetch secrets, and another could then perform database migrations. It’s crucial to handle errors effectively within these init containers. Implement retry mechanisms for transient failures to make Pod startup more reliable, and use backoff strategies so that repeated failed attempts do not overload the system.
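One way to combine a shared volume with retries and exponential backoff is a small shell loop inside the init container. This is a sketch under assumptions: the configuration URL, file paths, attempt limit, and application image are all hypothetical.

```yaml
# Sketch only: the URL, paths, and attempt limit are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: retry-backoff-demo
spec:
  volumes:
    - name: workdir
      emptyDir: {}
  initContainers:
    - name: fetch-config
      image: busybox
      command:
        - sh
        - -c
        - |
          delay=1
          attempt=1
          max_attempts=5
          # Retry the download, doubling the wait after each failure.
          until wget -q -O /work/config.json http://config.example.internal/config.json; do
            if [ "$attempt" -ge "$max_attempts" ]; then
              echo "giving up after $attempt attempts"
              exit 1
            fi
            echo "attempt $attempt failed, retrying in ${delay}s"
            sleep "$delay"
            attempt=$((attempt + 1))
            delay=$((delay * 2))
          done
      volumeMounts:
        - name: workdir
          mountPath: /work
  containers:
    - name: app
      image: example.com/my-app:1.0
      volumeMounts:
        - name: workdir
          mountPath: /work
```

Exiting non-zero after the last attempt hands control back to Kubernetes, which then applies the Pod's own restart behavior.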

When working with init containers, image size and focus are crucial. Keep init container images small, and include only the tools necessary for the specific task. This reduces Pod startup time and the network traffic needed to pull the images; a large, complex init container can slow down the entire deployment. Keep the init container focused on its job as well, which is to prepare for the main application, not to run application functionality itself. Debugging a failing init container usually starts with its logs, which you can read with `kubectl logs` by naming the init container with the `-c` flag. The `kubectl describe pod` command also gives valuable insight into each container’s status. Resource allocation issues are common too, so make sure init containers have adequate CPU and memory to run.

Troubleshooting init containers can present unique challenges, because a problem in an init container prevents the main application containers from starting at all. If an init container fails, Kubernetes restarts it according to the Pod’s `restartPolicy`, and the application containers do not start until every init container has succeeded. Careful error handling inside the init container reduces the chance of the Pod getting stuck this way. A good logging strategy matters as well, since clear log output combined with the right inspection commands lets you identify the failing step quickly. Finally, do not overuse init containers. Because they run one after another, each one adds to the Pod startup time, so keep their number to the minimum you need.

Comparing Init Containers to Other Kubernetes Startup Mechanisms

Kubernetes offers several ways to manage application startup dependencies and configuration. One common alternative to an init container is a sidecar container. Sidecar containers run alongside the main application container within the same Pod and are useful for tasks that need to run continuously. This contrasts with init containers, which run to completion before the application starts. Another option is to put startup scripts directly in the main application container’s image. While simpler for basic tasks, this method lacks isolation and makes the application image less reusable. Choosing the right approach depends on the specific needs of the deployment.

An init container provides better isolation and separation of concerns than startup scripts inside the application image. Each init container runs from its own image with its own filesystem, and it runs to completion before the application containers start, so setup tasks cannot conflict with the main application process. Sidecar containers, although useful for continuous operations, are not ideal for one-time initialization, where they add unnecessary overhead and complexity. A dedicated init container therefore allows more reliable and predictable execution of pre-flight operations, which improves the robustness of the deployment process. Too many init containers, however, increase Pod startup time and slow the overall deployment, so weigh the specific requirements carefully to avoid unnecessary complexity and delays.
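The two roles can sit side by side in one Pod. The sketch below assumes a reasonably recent cluster: native sidecar support, where an entry in `initContainers` is given `restartPolicy: Always`, was introduced in Kubernetes 1.28 and is enabled by default from 1.29. On older clusters a sidecar is simply listed under `containers`. All names and images here are hypothetical.

```yaml
# Sketch only: names and images are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: init-vs-sidecar-demo
spec:
  initContainers:
    # One-time setup: must exit successfully before anything else starts.
    - name: one-time-setup
      image: busybox
      command: ['sh', '-c', 'echo "setup done"']
    # Native sidecar: starts in init order but keeps running
    # alongside the application containers.
    - name: log-forwarder
      image: example.com/log-forwarder:1.0
      restartPolicy: Always
  containers:
    - name: app
      image: example.com/my-app:1.0
```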

The case for an init container over other approaches rests on a few key aspects. Sequential execution lets you express clear dependencies between setup steps and keeps those steps controlled. The application container can be left unmodified after initialization, since setup happens outside it. Each init container can focus on a single, well-defined task, which improves maintainability and makes debugging easier. The trade-off is startup time: init containers run one after another, so each additional one adds to the time taken to start the application, and too many can become a bottleneck. Thoughtful planning around specific use cases lets you get the benefits of init containers while avoiding the drawbacks of overusing them.

Example: Using an Init Container to Retrieve Configuration from ConfigMap

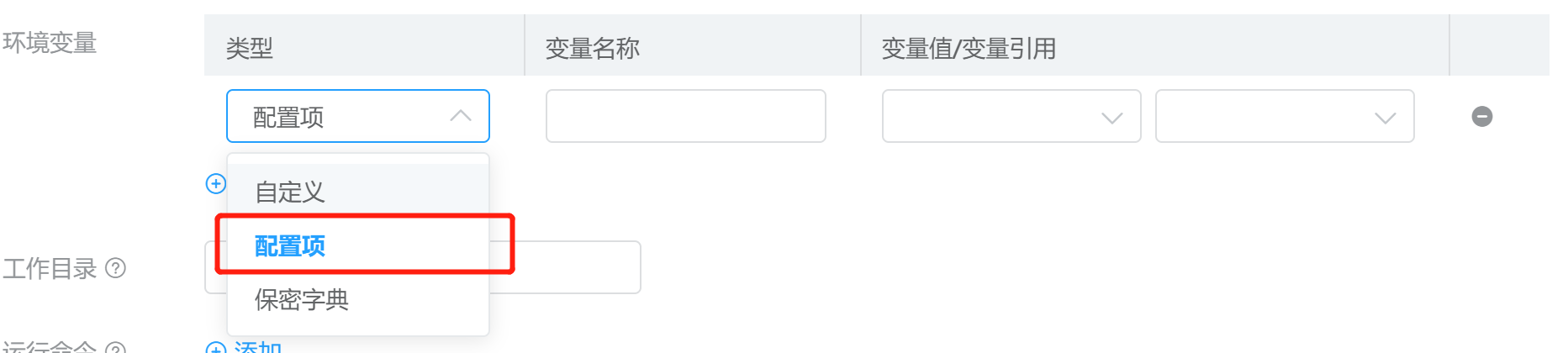

Let’s explore a practical scenario in which a Kubernetes init container makes configuration data from a ConfigMap available to the main application container. First, define a ConfigMap with the desired configuration. For instance, a ConfigMap named `app-config` might include settings such as database connection strings or feature flags. Next, create a Pod definition that includes both the main application container and the init container. The init container uses a simple image, such as `busybox`, and runs a command that copies the configuration data from the mounted ConfigMap to a shared volume. The init container mounts both the ConfigMap volume and the shared volume, and the main application container mounts the shared volume to read the configuration. The `initContainers` and `volumes` sections of the Pod specification would look something like this:

    initContainers:
    - name: config-init
      image: busybox
      command: ['sh', '-c', 'cp /config/* /shared-data']
      volumeMounts:
      - name: shared-volume
        mountPath: /shared-data
      - name: config-volume
        mountPath: /config
    volumes:
    - name: shared-volume
      emptyDir: {}
    - name: config-volume
      configMap:
        name: app-config

In this example, the `config-init` init container copies files from the `/config` directory, where the ConfigMap is mounted, to `/shared-data`, a location within the shared volume. The main application container then mounts `shared-volume` at a path of its choosing (e.g. `/app/config`), and the application loads its settings from the files in that directory. This example highlights the benefit of using an init container to ensure configuration is available before the application runs. It reduces the complexity of managing configuration within the application logic, keeps a clean separation of concerns, and lets you change configuration without rebuilding the application container image. Because the init container finishes before the main container starts, the application has all of its required configuration available at startup, resulting in a more consistent and reliable deployment.
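For completeness, a fuller manifest might look like the sketch below. The ConfigMap keys and values, the Pod name, and the application image are hypothetical. The `initContainers` and `volumes` sections match the fragment above, and the `containers` section shows the main container mounting the shared volume at `/app/config`.

```yaml
# Sketch only: ConfigMap contents, Pod name, and app image are hypothetical.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  database.properties: |
    db.host=db.example.internal
    db.port=5432
  features.properties: |
    feature.new-ui=true
---
apiVersion: v1
kind: Pod
metadata:
  name: config-init-demo
spec:
  initContainers:
    - name: config-init
      image: busybox
      # Each ConfigMap key appears as a file under /config.
      command: ['sh', '-c', 'cp /config/* /shared-data']
      volumeMounts:
        - name: shared-volume
          mountPath: /shared-data
        - name: config-volume
          mountPath: /config
  containers:
    - name: app
      image: example.com/my-app:1.0
      volumeMounts:
        - name: shared-volume
          mountPath: /app/config   # the app reads its settings from here
  volumes:
    - name: shared-volume
      emptyDir: {}
    - name: config-volume
      configMap:
        name: app-config
```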

Troubleshooting Common Issues with Kubernetes Init Containers

Working with init containers can sometimes present challenges. One common issue is an image pull failure, which occurs when Kubernetes cannot retrieve the specified container image. This could be due to an incorrect image name, network connectivity problems, or issues with the container registry. Another frequent problem is resource allocation. If no node can satisfy an init container’s resource requests, the Pod cannot be scheduled and remains in the Pending state; if its memory limit is too low, the container may be killed before it completes. Make sure resource requests and limits are set appropriately. These are common reasons an init container fails to start or finish.
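When sizing requests, it helps to know how the scheduler treats init containers: for each resource, the Pod's effective request is the higher of the largest init container request and the sum of the application containers' requests. The values and names in this sketch are placeholders chosen to show the effect.

```yaml
# Sketch only: names and resource values are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: init-resources-demo
spec:
  initContainers:
    - name: heavy-setup
      image: busybox
      command: ['sh', '-c', 'echo "preparing"']
      resources:
        requests:
          cpu: 100m
          memory: 512Mi   # larger than the app's request
        limits:
          cpu: 500m
          memory: 512Mi
  containers:
    - name: app
      image: example.com/my-app:1.0
      resources:
        requests:
          cpu: 200m
          memory: 256Mi
# Effective Pod requests used for scheduling:
#   cpu:    max(100m, 200m)   = 200m
#   memory: max(512Mi, 256Mi) = 512Mi
```

An oversized init container request can therefore leave a Pod Pending even when the application itself would fit on a node.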

Container execution errors are also frequent. They can be caused by incorrect commands or scripts in the init container, or by conditions the container needs that are not met at run time. To diagnose these problems, start by checking the Kubernetes events for the Pod. Use the command `kubectl describe pod

Another key to troubleshooting init container issues is monitoring resource usage. The `kubectl top pod` command shows current resource consumption. A container that uses far more than it requested can run into trouble, for example being killed when it exceeds its memory limit. Debugging interactions between multiple init containers can be tricky. If they communicate through shared volumes, verify file permissions and check that each step writes what the next one expects. If an init container waits for a specific condition before completing, have it log its progress between sleep intervals so the wait is easier to debug. Retries of failed init containers are handled by Kubernetes according to the Pod’s `restartPolicy`. Note that `backoffLimit` is a field of the Job specification rather than the Pod specification, so a retry cap of that kind is available only when the Pod is created by a Job. Applying these debugging techniques should help resolve problems with init containers.
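Since `backoffLimit` belongs to the Job spec, a capped number of retries can be sketched with a Job wrapping the Pod template. With `restartPolicy: Never`, a failing init container fails the Pod, and the Job controller creates replacement Pods until the limit is reached. The names, images, and the limit of 3 are assumptions for illustration.

```yaml
# Sketch only: names, images, and the limit are hypothetical.
apiVersion: batch/v1
kind: Job
metadata:
  name: init-retry-demo
spec:
  backoffLimit: 3            # stop retrying after 3 failed Pods
  template:
    spec:
      restartPolicy: Never   # a failed init container fails the whole Pod
      initContainers:
        - name: preflight
          image: busybox
          # Log progress so a failing run is easy to diagnose.
          command: ['sh', '-c', 'echo "running preflight check"; test -d /tmp']
      containers:
        - name: task
          image: busybox
          command: ['sh', '-c', 'echo "main task running"']
```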

Future Trends and Development of Kubernetes Init Containers

The landscape of Kubernetes is continuously evolving, and with it, so are the capabilities of the Kubernetes init container. Future developments aim to enhance the flexibility and efficiency of these critical components. One area of focus is on improving the lifecycle management of init containers. This could involve more granular control over their execution order and dependencies. Expect to see better integration with Kubernetes’ advanced scheduling features. This will allow for more optimized resource usage during the pod startup process. Further advancements might include more sophisticated error handling and retry mechanisms for init containers. This is vital for guaranteeing the reliability of application deployments. This would allow better handling of transient issues that can occur during the initialization phase. Another important development area is the improved visibility and monitoring of Kubernetes init container operations. Future tooling could provide more detailed insights into their execution times, resource consumption, and any potential bottlenecks they might introduce.

Emerging trends like GitOps are also influencing how init containers are used. GitOps promotes a declarative approach to infrastructure and application management. In this context, init containers can help ensure that applications begin with the correct configuration and settings, for example by fetching and applying configuration stored in Git repositories before the application starts. This aligns with the idea of infrastructure as code and can lead to more auditable and repeatable deployment processes. As GitOps practices become more widespread, this integration is likely to drive more strategic use of init containers in cloud native setups. The Kubernetes community also continues to look at ways to make init containers easier to define and use.

Furthermore, the ongoing refinement of container image technologies will also affect how init containers are used. Lighter and more specialized images can reduce the startup time of init containers, which contributes to quicker application deployment cycles. Future versions of Kubernetes may offer enhancements to image management that make images simpler to maintain and update. There may also be a push for improved security around init containers, through better access controls and protection against malicious code injection. With the continued growth of the Kubernetes ecosystem, the future of init containers looks promising. Expect a greater emphasis on simplification, automation, and security, which should help developers use init containers more effectively.