Understanding Google’s Kubernetes Engine

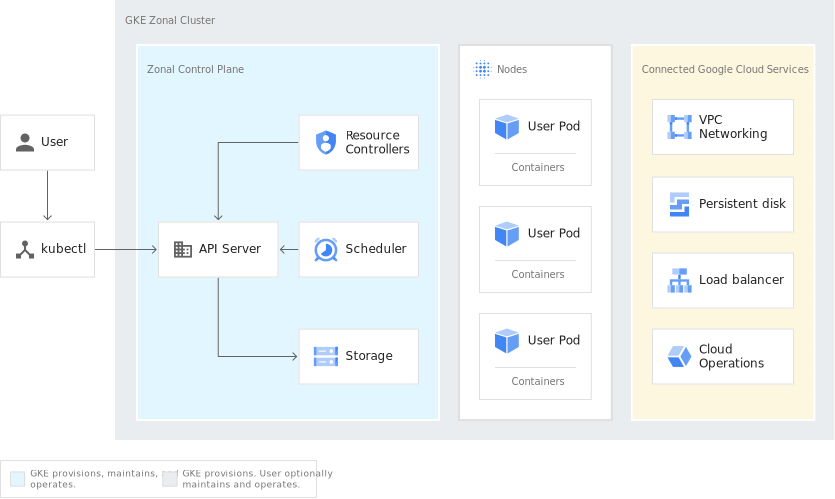

Google Kubernetes Engine (GKE) is a managed Kubernetes service designed to simplify the deployment, scaling, and management of containerized applications. Kubernetes, at its core, is an open-source system for automating these tasks. Think of it as a conductor for an orchestra of containers: it deploys new containers, scales them based on demand, and keeps them healthy and running. This orchestration is vital in modern application development, where agility and scalability are paramount. Without a system like Kubernetes, managing container deployments, especially at scale, would be complex and error-prone. GKE removes much of the operational overhead of running the Kubernetes infrastructure itself. Instead of installing, configuring, and maintaining your own cluster, you let Google handle those operations. This reduces the need for specialized Kubernetes expertise and lets organizations focus on building and deploying applications rather than on infrastructure maintenance. Compared with a self-managed cluster, a managed service such as GKE offers benefits such as high availability, automatic updates, and improved security. For these reasons, this article focuses on how to use the managed Kubernetes service provided by Google Cloud.

With GKE, organizations can rapidly deploy, scale, and manage containerized applications, drawing on the strengths of Kubernetes without getting bogged down in infrastructure management. The move toward managed services is a strategic one: GKE provides not only ease of use but also the assurance of Google’s infrastructure and security standards. Organizations choose managed Kubernetes for the agility it offers, which lets them release updates more frequently and adapt to market needs faster. Because the underlying infrastructure is maintained for them, development teams spend less time on configuration and maintenance and more time on application development. Ease of access and a reduced risk of human error in infrastructure configuration also make GKE a popular choice. The platform is designed for high availability, so applications can remain accessible during infrastructure updates or hardware failures, which is very challenging to achieve on your own. The result is a smoother operational experience on a stable and reliable Kubernetes environment.

Ultimately, GKE simplifies Kubernetes by taking on a significant portion of the complexity of cluster management. Development teams can focus on innovation while relying on Google’s expertise to run the infrastructure that powers their applications. This managed approach contrasts sharply with self-managed clusters, which require deep technical expertise and considerable time to keep running smoothly. GKE also applies security best practices by default and offers a variety of compliance certifications, which helps organizations of all sizes. Integration with other Google Cloud services, such as monitoring and logging, provides insight into the performance and stability of your applications. Together, these features make for a more efficient deployment workflow and make Kubernetes more accessible and practical, so users can get the benefits of containerization without getting caught in the complexity of the underlying infrastructure.

Setting Up Your First Kubernetes Cluster on Google Cloud

Creating a Kubernetes cluster on Google Cloud is the first step in using its container orchestration capabilities. Google Kubernetes Engine (GKE) offers a streamlined approach to cluster creation, available through the Google Cloud Console or the command-line interface (CLI) using `gcloud`. In the console, open the Kubernetes Engine section and click “Create Cluster.” First choose a location for the cluster, based on factors such as proximity to your users or to other cloud services you use. Next, select the machine type for the nodes, which determines their processing power and memory capacity, and specify the cluster size, that is, the number of worker nodes. These choices affect both performance and cost, so pick specifications that meet your application’s resource needs without overspending. For a basic setup, start with a small number of nodes and a modest machine type; you can scale both later as your application grows. A minimal cluster is adequate for initial testing and deployments and lets you get familiar with the platform before tackling complex scenarios. The console guides you through these steps and lets you review the configuration before the cluster is created.

Alternatively, for those who prefer the command line, the `gcloud` CLI provides a flexible way to create a GKE cluster. The command `gcloud container clusters create [CLUSTER_NAME] --zone=[ZONE] --machine-type=[MACHINE_TYPE] --num-nodes=[NUMBER_OF_NODES]` spins up a cluster quickly. Replace `[CLUSTER_NAME]` with your chosen name, `[ZONE]` with the desired geographical zone (like `us-central1-a`), `[MACHINE_TYPE]` with the required instance type (such as `n1-standard-2`), and `[NUMBER_OF_NODES]` with the number of worker nodes. For instance, a cluster creation command might look like `gcloud container clusters create my-first-cluster --zone=us-central1-a --machine-type=n1-standard-2 --num-nodes=3`. This method has the advantage that cluster creation can be automated and incorporated into scripts. Regardless of the chosen method, verify the cluster’s health and readiness once provisioning is complete, either by checking the cluster’s status in the Google Cloud Console or by running `kubectl get nodes` to view the status of each node. Successfully completing these steps results in a functional Kubernetes cluster, ready to host your applications.
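To show how the command above might be scripted, here is a minimal Python sketch that assembles the same `gcloud container clusters create` invocation as an argument list. The helper name `build_create_cluster_command` is hypothetical, and the sketch only builds and prints the command; it does not run it.

```python
import shlex


def build_create_cluster_command(name, zone, machine_type, num_nodes):
    """Return the argument list for `gcloud container clusters create`."""
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    return [
        "gcloud", "container", "clusters", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--num-nodes={num_nodes}",
    ]


# Same values as the example command in the text.
command = build_create_cluster_command(
    "my-first-cluster", "us-central1-a", "n1-standard-2", 3
)
print(shlex.join(command))
```

On a machine where the `gcloud` CLI is installed and authenticated, the list could be passed to `subprocess.run(command, check=True)` to actually create the cluster.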

How to Deploy Applications on Kubernetes Using GKE

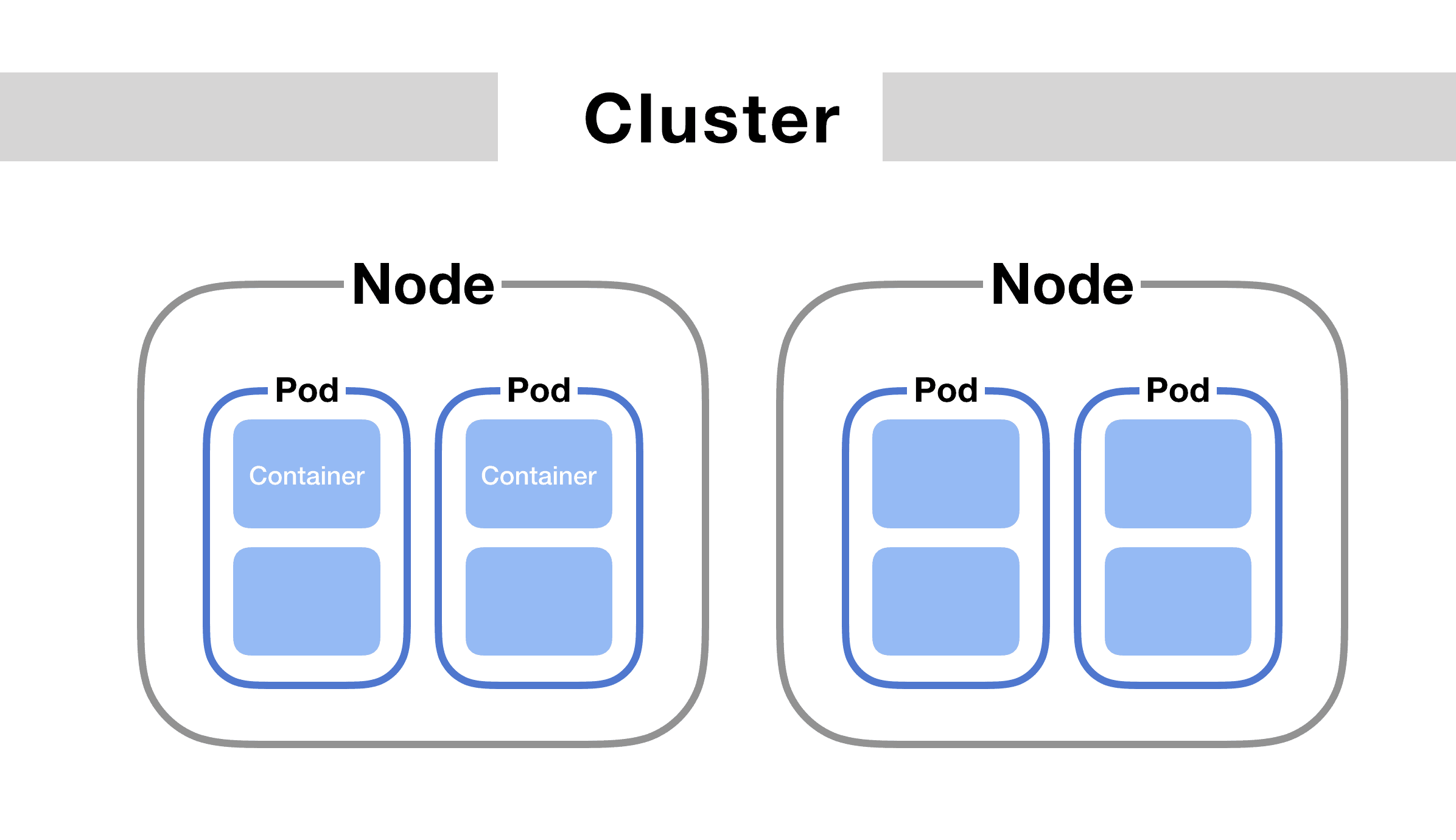

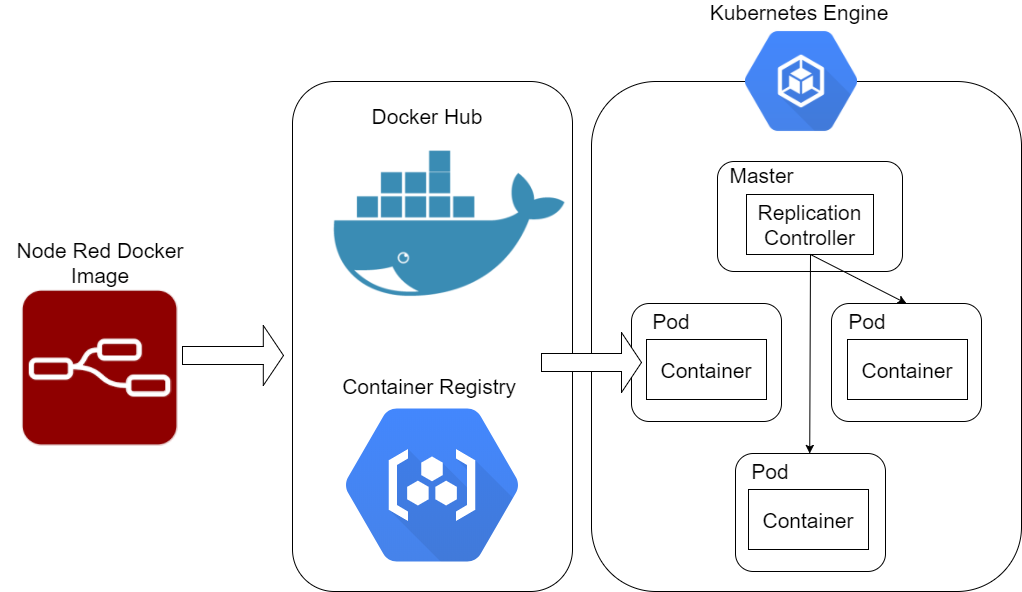

Deploying applications on a Kubernetes cluster managed by Google Kubernetes Engine (GKE) involves several key concepts, and understanding deployments, pods, and services is fundamental. A deployment, in Kubernetes terms, represents a desired state for your application. It defines the number of identical pods (a pod is the smallest deployable unit and contains one or more containers) that should be running, ensuring that your application remains available and scales as needed. When deploying to GKE, you define your deployment in a YAML configuration file. This file typically specifies the container image to use, the resources required, and the labels used for selecting pods. For instance, a simple deployment might run a web server from a Docker image. Once defined, this YAML file can be applied using the `kubectl apply -f

After you apply the deployment, Kubernetes launches pods to match the specification. Each pod runs one or more containers that are instances of the application. Services then expose the application running in these pods. A service is an abstraction layer that enables access to the application from outside the cluster or internally between pods. It provides a stable IP address and DNS name that clients can use to reach the application regardless of which pod instance actually handles the request. For example, if your application is a web service, a service of type `LoadBalancer` can expose it to the internet via a load balancer. A service is also defined in a YAML file, which specifies how the underlying pods are discovered using labels. To deploy a real-world application, you’ll need to: first, build your container images and push them to a container registry such as Artifact Registry (which supersedes the older Google Container Registry); second, prepare your deployment and service YAML definitions; and finally, apply these definitions using `kubectl` to deploy the application to the GKE cluster. You can then monitor the application’s status using `kubectl` commands and verify the successful deployment.
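The deployment and service definitions described above can be sketched as follows. This is an illustrative example rather than a prescribed layout: it builds the two manifests as Python dictionaries and prints them as JSON, which `kubectl` accepts in place of YAML. The application name `hello-web`, the `nginx:stable` image, and the port numbers are placeholder assumptions.

```python
import json


def deployment_manifest(name, image, replicas, port):
    """Build an apps/v1 Deployment that runs `replicas` identical pods."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


def service_manifest(name, port, target_port):
    """Build a LoadBalancer Service that finds pods by their `app` label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Bundle both objects in a List so they can be applied from one file.
manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        deployment_manifest("hello-web", "nginx:stable", 3, 80),
        service_manifest("hello-web", 80, 80),
    ],
}
print(json.dumps(manifests, indent=2))
```

Saving the printed JSON to a file (for example a hypothetical `hello-web.json`) and applying that file with `kubectl` would create both objects. Note that the service finds its pods purely through the shared `app` label.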

Scaling Your Application With Google Kubernetes Engine

Scaling applications effectively is crucial for maintaining performance and availability, and Google Kubernetes Engine (GKE) provides robust mechanisms to achieve this. GKE allows for scaling based on demand, so applications can handle varying traffic loads without interruption. One fundamental technique is adjusting the number of replicas. By modifying the deployment configuration, you can create more instances of a pod and distribute the load across them. This approach is particularly useful when you anticipate an increase in user activity or during peak hours. Furthermore, manual scaling through `kubectl scale deployment

Horizontal Pod Autoscaling (HPA) automates application scaling in Google Kubernetes Engine, optimizing resource utilization and supporting high availability. To implement HPA, you define a target metric, such as CPU or memory usage, along with minimum and maximum replica counts. When the observed metric exceeds the target, HPA adds pods; when usage drops, it removes them, always staying within the configured bounds. This not only protects performance but also controls infrastructure spending. The HPA configuration can be fine-tuned to match application scaling to demand and avoid over-provisioning. HPA reads resource usage data from the Kubernetes metrics server, so scaling decisions are based on near-real-time data. The number of pods is adjusted dynamically to accommodate actual load, which leads to more efficient use of resources and better overall performance.
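As a rough illustration of the scaling rule, the sketch below builds an `autoscaling/v2` HorizontalPodAutoscaler manifest and applies the basic formula documented for the autoscaler: desired replicas = ceil(current replicas × current metric / target metric), clamped to the configured bounds. The deployment name and numbers are assumptions, and the real controller also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import json
import math


def hpa_manifest(deployment_name, min_replicas, max_replicas, target_cpu_percent):
    """Build an autoscaling/v2 HPA that targets average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment_name}-hpa"},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment_name,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": target_cpu_percent,
                        },
                    },
                }
            ],
        },
    }


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Simplified HPA rule: scale proportionally, then clamp to the bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


print(json.dumps(hpa_manifest("hello-web", 2, 10, 60), indent=2))
# Four pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))
```

With four pods at 90% average CPU and a 60% target, the rule asks for six pods; at 20% it would ask for two, which is also the configured minimum.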

Optimizing resource utilization in Google Kubernetes Engine involves more than scaling replicas. Resource requests and limits can be specified for each container in a pod to define, respectively, the minimum resources it needs and the maximum it may consume. By setting requests and limits carefully, you ensure each pod has the resources it needs while preventing resource starvation across the cluster. It is also essential to monitor resource usage patterns to identify bottlenecks or inefficiencies. Google Cloud’s monitoring tools integrate with GKE and provide insight into CPU usage, memory consumption, and network throughput. You can use this data to adjust pod configurations and autoscaling policies, resulting in an environment tuned for diverse operational loads. These approaches help keep applications on GKE performant, highly available, and cost-effective.
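To make requests and limits concrete, here is a small sketch that builds the `resources` section of a container spec and checks that each request does not exceed its limit. The parsers handle only a simplified subset of Kubernetes quantity syntax (millicores or plain cores for CPU; `Ki`, `Mi`, and `Gi` for memory), and the values shown are illustrative assumptions.

```python
MEMORY_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def parse_cpu_millicores(quantity):
    """Convert a CPU quantity such as "250m" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def parse_memory_bytes(quantity):
    """Convert a memory quantity such as "256Mi" to bytes (Ki/Mi/Gi only)."""
    suffix = quantity[-2:]
    if suffix not in MEMORY_UNITS:
        raise ValueError(f"unsupported memory unit in {quantity!r}")
    return int(quantity[:-2]) * MEMORY_UNITS[suffix]


def container_resources(cpu_request, cpu_limit, memory_request, memory_limit):
    """Return a container `resources` section after sanity-checking it."""
    if parse_cpu_millicores(cpu_request) > parse_cpu_millicores(cpu_limit):
        raise ValueError("CPU request exceeds CPU limit")
    if parse_memory_bytes(memory_request) > parse_memory_bytes(memory_limit):
        raise ValueError("memory request exceeds memory limit")
    return {
        "requests": {"cpu": cpu_request, "memory": memory_request},
        "limits": {"cpu": cpu_limit, "memory": memory_limit},
    }


resources = container_resources("250m", "500m", "256Mi", "512Mi")
print(resources)
```

The returned dictionary would sit under `resources` in a container entry of a pod template. Requests are what the scheduler reserves for the container, and limits cap what it may consume.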

Monitoring and Logging Your GKE Deployments

Effective monitoring and logging are critical for maintaining the health and performance of applications deployed on Kubernetes using Google Kubernetes Engine. Google Cloud provides a suite of tools for gaining insight into your deployments. Cloud Monitoring tracks key metrics such as CPU and memory utilization, network traffic, and request latency. With dashboards and charts, users can visualize trends and identify potential issues early. Alerts based on specific thresholds flag critical problems quickly, enabling rapid responses and minimizing downtime. For GKE deployments, these monitoring capabilities give a clear picture of resource consumption and application behavior. This is not just about identifying problems after they occur; it’s about proactive optimization and a smooth operational experience. The integration between Cloud Monitoring and GKE provides near-real-time insight, leading to better decision-making.
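The idea behind a threshold alert can be illustrated with a few lines of Python. This is a conceptual sketch only, not the Cloud Monitoring API: it assumes metric samples arrive as a simple list of utilization values and fires when enough consecutive samples exceed the threshold, which avoids alerting on a single short spike.

```python
def breaches_threshold(samples, threshold, min_consecutive):
    """Return True when `min_consecutive` samples in a row exceed `threshold`."""
    if min_consecutive < 1:
        raise ValueError("min_consecutive must be at least 1")
    streak = 0
    for value in samples:
        # Extend the streak on a breach, reset it otherwise.
        streak = streak + 1 if value > threshold else 0
        if streak >= min_consecutive:
            return True
    return False


# Hypothetical CPU utilization samples, one per minute.
cpu_samples = [0.50, 0.90, 0.95, 0.92, 0.40]
print(breaches_threshold(cpu_samples, threshold=0.80, min_consecutive=3))
```

Requiring several consecutive breaches plays a role similar to the duration window that alerting systems typically let you configure.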

Google Cloud Logging provides a centralized platform for aggregating and analyzing logs from your GKE environment. This includes application logs, system logs, and audit logs, all accessible through a unified interface. Filtering and querying logs lets you pinpoint specific events or errors, which makes troubleshooting easier and faster. You can also generate log-based metrics, which feed back into monitoring. Logs provide invaluable context for understanding application behavior and identifying the root causes of issues, and they are essential for compliance and auditability. By analyzing log data, users gain a deeper understanding of their applications and can identify bottlenecks and potential security concerns. Combining monitoring and logging helps keep GKE deployments running optimally and securely, leading to higher service availability and customer satisfaction.
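As an example of the filtering described above, the following sketch composes a Cloud Logging query string for container logs from one cluster and namespace. The helper name and the cluster and namespace values are assumptions; the field names follow the `k8s_container` monitored-resource labels.

```python
def gke_log_filter(cluster_name, namespace, min_severity="ERROR"):
    """Compose a Logging query for container logs at or above a severity."""
    clauses = [
        'resource.type="k8s_container"',
        f'resource.labels.cluster_name="{cluster_name}"',
        f'resource.labels.namespace_name="{namespace}"',
        f"severity>={min_severity}",
    ]
    return " AND ".join(clauses)


log_filter = gke_log_filter("my-first-cluster", "default")
print(log_filter)
```

The resulting string can be pasted into the Logs Explorer query box or passed as the filter argument to `gcloud logging read`.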

To get the most from monitoring and logging, establish clear objectives and define specific metrics that reflect your application’s performance requirements. This is critical for understanding what “normal” looks like and what constitutes an anomaly. Take time to create informative dashboards that give a comprehensive overview of your infrastructure and application health, and review logs and metrics regularly to identify trends and address issues early. Integrating these practices into your workflows lets you take full advantage of Google Cloud’s monitoring and logging capabilities and maintain a stable, high-performing environment on GKE.

Securing Your Kubernetes Environment on Google Cloud

Securing a Kubernetes cluster running on Google Cloud is paramount for protecting sensitive information and maintaining the integrity of your applications. A robust security strategy involves multiple layers, starting with Identity and Access Management (IAM). IAM allows granular control over who can access and manage your Kubernetes resources within Google Cloud. By applying the principle of least privilege, you ensure that users and service accounts have only the permissions they need for their designated tasks, which limits the potential impact of compromised credentials. Effective IAM configuration is the foundation of a secure GKE environment and is essential for production-grade systems.
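A least-privilege grant might look like the sketch below, which builds a `gcloud projects add-iam-policy-binding` command giving one user the read-only Kubernetes Engine Viewer role (`roles/container.viewer`). The project ID and email address are placeholders, and the helper only prints the command rather than running it.

```python
import shlex


def grant_role_command(project_id, member, role):
    """Return the argument list that binds `role` to `member` on a project."""
    return [
        "gcloud", "projects", "add-iam-policy-binding", project_id,
        f"--member={member}",
        f"--role={role}",
    ]


# Placeholder project and user; viewer access is read-only.
binding = grant_role_command(
    "my-project-id", "user:dev@example.com", "roles/container.viewer"
)
print(shlex.join(binding))
```

Starting with a read-only role and widening access only when a task requires it keeps the impact of a leaked credential small.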

Network policies are another crucial component of securing Kubernetes deployments on Google Cloud. These policies let you isolate workloads within the cluster by controlling the flow of traffic between pods and namespaces. By defining network policies, you can restrict communication between applications and prevent lateral movement in the event of a security breach, which significantly reduces the attack surface and contains potential damage. Communication between services inside the cluster can be further secured with methods such as TLS encryption, which protects data in transit from eavesdropping and tampering. Finally, secrets, meaning sensitive data such as API keys and passwords, need secure handling. Kubernetes provides built-in secrets management, and GKE can integrate with Google Cloud’s Secret Manager to strengthen your security posture.
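The workload isolation described above can be sketched as a NetworkPolicy that admits traffic to one application only from another. The application names, namespace, and port are illustrative assumptions, and the policy takes effect only on clusters where network policy enforcement is enabled.

```python
import json


def allow_ingress_policy(name, namespace, target_app, allowed_app, port):
    """Build a NetworkPolicy letting only `allowed_app` pods reach `target_app`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods carrying this label may connect.
                    "from": [
                        {"podSelector": {"matchLabels": {"app": allowed_app}}}
                    ],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


policy = allow_ingress_policy(
    "api-from-frontend", "default", "api", "frontend", 8080
)
print(json.dumps(policy, indent=2))
```

Once a pod is selected by an ingress policy, any inbound traffic not matched by a rule is dropped, so a compromised pod elsewhere in the namespace could not reach `api` directly.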

Finally, it’s essential to continuously monitor your GKE environment for security vulnerabilities. Regularly scan container images for known weaknesses, keep your Kubernetes versions up to date, and promptly patch any vulnerabilities you discover. By integrating security checks into your continuous integration and deployment pipeline, you can identify and address security concerns before they can be exploited. Audit logging and regular log review should also be part of your security practice. Together, these strategies make your GKE environment more resilient against security threats and help protect the confidentiality, integrity, and availability of your applications and infrastructure.

Understanding the Cost Implications of Google Kubernetes Engine

Understanding the cost structure of Google Kubernetes Engine (GKE) is crucial for effective budget management. GKE expenses are driven by several factors, starting with the compute resources consumed by your cluster’s nodes: the size of the virtual machines (VMs) used for each node, the number of nodes, and how long they run. Larger machine types with more processing power and memory cost more, and the total number of nodes directly affects your overall expense. Storage also plays a significant role in the final bill, whether it is used for persistent volumes that hold application data or for container images. Storage costs vary with the type of storage and the amount of space used. Networking charges are another component and can include data transfer fees for ingress and egress traffic as well as charges for load balancing and other network services.
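The cost factors above combine in simple arithmetic, sketched below. Every price in this example is a made-up placeholder rather than an actual Google Cloud rate, and the model ignores other charges and discounts (such as cluster management fees or committed-use discounts), so treat it as a way to reason about the bill and not as a quote.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation for one month


@dataclass
class ClusterUsage:
    node_count: int
    node_hourly_price: float           # hypothetical price per node-hour
    storage_gb: float
    storage_price_per_gb_month: float  # hypothetical price per GB-month
    egress_gb: float
    egress_price_per_gb: float         # hypothetical price per GB


def estimate_monthly_cost(usage):
    """Split a rough monthly estimate into compute, storage, and network."""
    compute = usage.node_count * usage.node_hourly_price * HOURS_PER_MONTH
    storage = usage.storage_gb * usage.storage_price_per_gb_month
    network = usage.egress_gb * usage.egress_price_per_gb
    return {
        "compute": round(compute, 2),
        "storage": round(storage, 2),
        "network": round(network, 2),
        "total": round(compute + storage + network, 2),
    }


usage = ClusterUsage(
    node_count=3, node_hourly_price=0.10,
    storage_gb=100, storage_price_per_gb_month=0.04,
    egress_gb=50, egress_price_per_gb=0.12,
)
print(estimate_monthly_cost(usage))
```

With these placeholder numbers, compute dominates the total, which is typical of why node count and machine type are usually the first things to review.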

To manage GKE costs effectively, it’s essential to understand and use the tools Google Cloud provides for monitoring expenses. The Google Cloud Cost Management tools let you track spending across resources and show how your GKE costs change over time. They help you identify areas where spending is higher than expected and resources that are not being used efficiently, which points to opportunities for optimizing your deployments. Optimizing GKE expenses involves a range of techniques, such as rightsizing nodes to match application needs and using autoscaling to adjust resources to variable workload demands. Preemptible VMs, which offer significant savings at the expense of potential interruptions, are worth considering for non-critical workloads. It is also important to remove unused resources and to use managed services where possible, which can both lower costs and simplify resource management.

Further optimization techniques include careful planning of the number of replicas and appropriate resource limits for pods. For example, avoiding over-provisioning and using node pools strategically can reduce cost. Resource quotas, which cap the CPU and memory your applications may use, prevent individual applications from consuming excessive resources. Regular evaluation and optimization of your GKE usage should be part of your operations. This proactive approach to cost management helps you get the most out of Google Kubernetes Engine without exceeding your budget, while keeping the cluster healthy.
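A resource quota of the kind mentioned above might be defined as in the following sketch, which caps the total CPU, memory, and pod count for one namespace. The namespace name and the numbers are assumptions chosen for illustration.

```python
import json


def resource_quota(name, namespace, cpu_requests, memory_requests,
                   cpu_limits, memory_limits, max_pods):
    """Build a ResourceQuota capping aggregate usage within a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu_requests,
                "requests.memory": memory_requests,
                "limits.cpu": cpu_limits,
                "limits.memory": memory_limits,
                "pods": str(max_pods),
            }
        },
    }


quota = resource_quota("team-a-quota", "team-a", "4", "8Gi", "8", "16Gi", 20)
print(json.dumps(quota, indent=2))
```

Once such a quota is in place, a new pod in that namespace is rejected if admitting it would push the namespace total past any of the `hard` values.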

Exploring Advanced Features of Google Kubernetes Engine

Google Kubernetes Engine (GKE) extends beyond basic container orchestration, offering advanced features designed to streamline complex deployments and enhance application management. Node auto-provisioning, for instance, lets the cluster automatically create, resize, and remove node pools based on resource demands, optimizing both performance and cost. This dynamic scaling helps ensure that applications have the resources they need to operate efficiently. Serverless containers, powered by Knative, offer another way to deploy and run applications on GKE. They support event-driven and serverless workloads, simplifying deployment and reducing the operational overhead of managing servers. These capabilities give developers tools to build more resilient and responsive applications.

The integration of Istio with GKE adds a robust service mesh layer. Istio provides advanced traffic management, security enhancements, and observability for microservices deployed within the cluster. Its capabilities for routing, rate limiting, and fault injection give fine-grained control over inter-service communication. With Istio, developers can focus more on application logic and less on the intricacies of networking. A service mesh is a key component for organizations building sophisticated microservices architectures on GKE, and it helps them manage increasingly complex deployments with confidence.
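As one example of Istio’s routing control, the sketch below builds a VirtualService that splits traffic between two backing services by weight, a common pattern for gradual rollouts. The service names and the 90/10 split are assumptions, and the example presumes Istio is installed in the cluster and that both destination services exist.

```python
import json


def weighted_virtual_service(name, host, destinations):
    """Build a VirtualService routing `host` across destinations by weight.

    `destinations` maps a destination service host to an integer weight;
    the weights must add up to 100.
    """
    if sum(destinations.values()) != 100:
        raise ValueError("weights must add up to 100")
    routes = [
        {"destination": {"host": dest}, "weight": weight}
        for dest, weight in destinations.items()
    ]
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name},
        "spec": {"hosts": [host], "http": [{"route": routes}]},
    }


virtual_service = weighted_virtual_service(
    "checkout", "checkout", {"checkout-v1": 90, "checkout-v2": 10}
)
print(json.dumps(virtual_service, indent=2))
```

Shifting the weights step by step, for instance from 90/10 to 50/50 to 0/100, moves traffic to the new version without changing application code.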

Exploring these advanced features lets users move beyond the basics of GKE and gain more control and adaptability. Node auto-provisioning simplifies resource management by allocating resources efficiently without manual intervention, which helps optimize both cost and application availability. Serverless containers reduce the operational burden and free teams to focus on application development and functionality. Service mesh technologies like Istio refine the way microservices interact, improving the security and manageability of complex systems. As users deepen their knowledge of GKE, these features offer pathways for expanding capabilities and building sophisticated applications, making GKE a strong choice for deployments both simple and complex.