The Role of Kubernetes and Docker in Containerized Deployments

Containerization has transformed the way businesses and organizations deploy and manage applications, offering a more efficient and consistent approach to software development and maintenance. Two key tools in this space are Docker, which packages and runs containers, and Kubernetes, which orchestrates containers across clusters of machines. This article explores the individual and combined benefits of using Kubernetes and Docker for internal operations.

Docker is a popular open-source containerization platform that allows developers to package applications and their dependencies into isolated containers. These containers can then be deployed and run consistently across various environments, from local development machines to large-scale production servers. Docker simplifies the distribution and scaling of applications, reducing the risk of compatibility issues and conflicts between different software stacks.

Kubernetes, on the other hand, is an open-source container orchestration system designed to automate the deployment, scaling, and management of containerized applications. It provides a robust platform for managing and coordinating containerized workloads, ensuring high availability, efficient resource utilization, and seamless scaling. By integrating Kubernetes with Docker, businesses can leverage the strengths of both platforms to create a powerful, flexible, and scalable containerized environment.

The combination of Kubernetes and Docker offers several benefits for internal operations. First, it lets organizations deploy and scale containerized applications with far less manual effort. Second, it improves resource utilization: the Kubernetes scheduler places containers on nodes according to their declared CPU and memory requests, packing workloads onto the available machines instead of leaving capacity idle. Third, Kubernetes provides advanced features such as automated rollouts, self-healing, and service discovery, further streamlining internal operations and improving overall system resilience.

In summary, Kubernetes and Docker are powerful tools for containerized deployments and management. By integrating these platforms, businesses can create a robust, scalable, and efficient containerized environment that simplifies application deployment, scaling, and maintenance. In the following sections, we will delve deeper into the key differences and similarities between Kubernetes and Docker, as well as provide a step-by-step guide to implementing Kubernetes with Docker for internal operations.

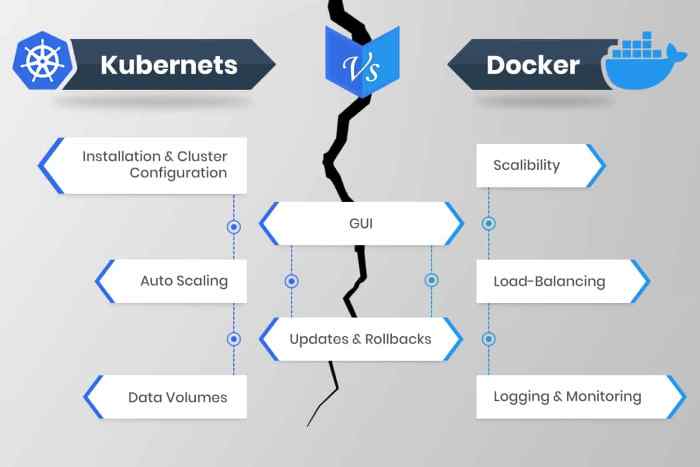

Kubernetes vs. Docker: Key Differences and Similarities

Although Kubernetes and Docker are both central to containerized environments, they play distinct roles and offer different features, which makes them complementary tools for internal operations. Understanding these differences and similarities is crucial for businesses and organizations looking to leverage the full potential of Kubernetes and Docker integration.

One of the primary differences between Kubernetes and Docker lies in their core functionalities. Docker is a containerization platform that focuses on packaging applications and their dependencies into isolated containers. In contrast, Kubernetes is a container orchestration system designed to automate the deployment, scaling, and management of containerized applications across clusters of hosts.

Despite their differences, Kubernetes and Docker share several traits that make them a powerful combination for containerized deployments. Both Kubernetes and Docker Engine are open source, so developers and organizations can customize and extend them to meet specific needs. Both also build on the Open Container Initiative (OCI) standards, so an image built with Docker runs the same way on a developer's laptop, in a Kubernetes test cluster, or on large-scale production servers.

In terms of features, Docker provides a rich set of tools for building, packaging, and distributing containerized applications. It includes a lightweight runtime engine, a comprehensive set of command-line tools, and an API for managing and interacting with containers. Docker also provides networks and volumes for connecting containers and persisting their data, and Docker Compose lets developers define a multi-container application in a single file.
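The following script gives a rough illustration of Docker's build-and-package step. It writes a minimal Dockerfile that packages a single static page into an image based on the official nginx image. The directory name my-app, the page content, and the nginx:1.27-alpine base tag are illustrative assumptions, not part of any particular project.

```shell
#!/bin/sh
# Sketch: write a Dockerfile that packages a static page on top of nginx.
# Directory name, page content, and base image tag are illustrative.
set -eu

mkdir -p my-app

# The "application": a single static HTML page.
cat > my-app/index.html <<'EOF'
<!doctype html>
<title>my-app</title>
<p>Hello from a container.</p>
EOF

# Dockerfile: pinned base image, app file copied in, documented port.
cat > my-app/Dockerfile <<'EOF'
FROM nginx:1.27-alpine
COPY index.html /usr/share/nginx/html/index.html
EXPOSE 80
EOF

echo "build context ready in my-app/"
```

If Docker is installed, you could then build and publish the image with `docker build -t registry.example.com/my-app:1.0 my-app` and `docker push registry.example.com/my-app:1.0`. The registry address in those commands is also a hypothetical placeholder.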

Kubernetes, on the other hand, offers advanced features for container orchestration and management. It supports automated deployment, scaling, and rolling updates, so businesses can scale their applications with demand. Kubernetes also provides self-healing to keep applications highly available. It restarts containers that fail or do not respond to their health checks, withholds traffic from containers until they are ready, and replaces pods lost on failed nodes by creating new ones on healthy nodes.
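A Deployment's RollingUpdate strategy controls automated rollouts through two settings, maxSurge and maxUnavailable, both of which default to 25%. When these are given as percentages, Kubernetes rounds maxSurge up and maxUnavailable down. The sketch below reproduces that arithmetic for an example replica count. The value 10 is an arbitrary assumption. The output shows how many pods can exist, and how many must stay available, during an update.

```shell
#!/bin/sh
# Rolling-update bounds for a Deployment using the default 25% / 25% strategy.
# replicas=10 is an arbitrary example value.
set -eu

replicas=10
surge_pct=25      # maxSurge default
unavail_pct=25    # maxUnavailable default

# Percentages for maxSurge are rounded up (integer ceiling division).
max_surge=$(( (replicas * surge_pct + 99) / 100 ))
# Percentages for maxUnavailable are rounded down (plain integer division).
max_unavailable=$(( replicas * unavail_pct / 100 ))

echo "maxSurge=$max_surge maxUnavailable=$max_unavailable"
echo "at most $(( replicas + max_surge )) pods exist during the rollout"
echo "at least $(( replicas - max_unavailable )) pods stay available"
```

For 10 replicas, the rollout may run up to 13 pods while keeping at least 8 available. Raising maxSurge speeds up a rollout at the cost of temporary extra capacity. Lowering maxUnavailable makes it more conservative.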

By integrating Kubernetes and Docker, businesses can leverage the strengths of both platforms to create a robust, flexible, and scalable containerized environment. Kubernetes schedules and coordinates containers built from Docker images across a cluster, handling resource allocation, scaling, and failover. Meanwhile, Docker provides the foundation for building and packaging containerized applications, enabling businesses to streamline their development and deployment processes.

In the following sections, we will provide a step-by-step guide on implementing Kubernetes with Docker, sharing best practices for optimizing performance, highlighting real-world success stories, and discussing future trends in Kubernetes and Docker integration.

How to Implement Kubernetes with Docker: A Step-by-Step Guide

Integrating Kubernetes with Docker can provide significant benefits for businesses and organizations looking to streamline their containerized deployments and improve internal operations. This step-by-step guide will walk you through the prerequisites, installation, configuration, and management steps for implementing Kubernetes with Docker.

Prerequisites

Before integrating Kubernetes with Docker, ensure that you have the following prerequisites in place:

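- A supported Linux distribution (e.g., a current Ubuntu LTS release or a RHEL-compatible distribution such as Rocky Linux)

- A CRI-compatible container runtime on every node: containerd, CRI-O, or Docker Engine together with the cri-dockerd adapter. Kubernetes removed its built-in Docker Engine integration (dockershim) in v1.24, but images built with Docker run unchanged on any of these runtimes.

- At least 2 GB of RAM for each Kubernetes node and at least 2 CPUs for the control-plane node, with swap disabled unless you have configured the kubelet to support it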

- A network plugin for container networking (e.g., Flannel or Calico)
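Before running kubeadm, a quick check along these lines can catch obvious gaps in the prerequisites. This is an informal sketch, not a replacement for the preflight checks that `kubeadm init` and `kubeadm join` perform themselves. It reads /proc/meminfo, counts CPUs, looks for active swap, and probes the default socket paths that the kubeadm documentation lists for containerd, CRI-O, and cri-dockerd.

```shell
#!/bin/sh
# Informal node check before kubeadm (kubeadm runs its own preflight checks).
# Socket paths are the defaults listed in the kubeadm installation docs.
# Linux only: reads /proc.

min_mem_kb=$((2 * 1024 * 1024))   # 2 GiB in KiB, the unit /proc/meminfo uses
mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
if [ "$mem_kb" -ge "$min_mem_kb" ]; then
  echo "OK   memory: ${mem_kb} KiB"
else
  echo "WARN memory: ${mem_kb} KiB is below 2 GiB"
fi

cpus=$(nproc)
if [ "$cpus" -ge 2 ]; then
  echo "OK   CPUs: $cpus"
else
  echo "WARN CPUs: $cpus (a control-plane node needs at least 2)"
fi

# /proc/swaps always has a header line; every further line is an active swap device.
swap_devices=$(( $(wc -l < /proc/swaps) - 1 ))
if [ "$swap_devices" -eq 0 ]; then
  echo "OK   swap: off"
else
  echo "WARN swap: $swap_devices device(s) active"
fi

# Look for a CRI runtime socket (containerd, CRI-O, cri-dockerd).
runtime_socket=none
for sock in /var/run/containerd/containerd.sock /var/run/crio/crio.sock /var/run/cri-dockerd.sock; do
  if [ -S "$sock" ]; then
    runtime_socket=$sock
    break
  fi
done
echo "INFO CRI socket: $runtime_socket"
```

The script treats the 2 GB threshold as 2 GiB. A machine advertised with 2 GB may report slightly less usable memory and trigger a warning, so treat the output as a hint rather than a verdict.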

Installation

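On Debian or Ubuntu, install kubeadm, kubelet, and kubectl from the community-owned package repository at pkgs.k8s.io. The older apt.kubernetes.io and packages.cloud.google.com repositories are deprecated and frozen. Each pkgs.k8s.io repository tracks a single Kubernetes minor version, so replace v1.30 below with the version you want. RHEL-compatible distributions use an equivalent yum/dnf repository.

- Add the Kubernetes signing key to your system:

```shell
sudo apt-get update
sudo apt-get install -y ca-certificates curl gpg
sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
```

- Add the Kubernetes repository to your system:

```shell
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
```

- Update your package list, install the Kubernetes packages, and hold them at their installed version so routine upgrades do not change them unexpectedly:

```shell
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl
```

Configuration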

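After installing the packages, initialize the control-plane node (formerly called the master node). The --pod-network-cidr value must match the network plugin you plan to install; 10.244.0.0/16 is Flannel's default:

```shell
sudo kubeadm init --pod-network-cidr=10.244.0.0/16
```

Once the control-plane node is initialized, configure kubectl, the Kubernetes command-line tool, to use the new cluster's admin credentials:

```shell
mkdir -p "$HOME/.kube"
sudo cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
```

Next, install a network plugin for container networking (e.g., Flannel or Calico) by following the plugin's specific installation instructions. Nodes report NotReady until a network plugin is running. Finally, run the kubeadm join command printed by kubeadm init on each worker node to add it to the cluster.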
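It helps to see what the pod network range in the kubeadm init example actually provides. When kubeadm is given a pod network CIDR, Kubernetes assigns each node its own slice of that range, a /24 by default for IPv4. Flannel uses the same per-node size by default. The sketch below only does the arithmetic for a /16 cluster range. It assumes the default node mask, which a cluster can override.

```shell
#!/bin/sh
# How a /16 pod network is split into per-node /24 ranges
# (the default per-node pod CIDR size for IPv4).
set -eu

cluster_prefix=16   # from --pod-network-cidr=10.244.0.0/16
node_prefix=24      # default per-node pod CIDR mask size

max_nodes=$(( 1 << (node_prefix - cluster_prefix) ))
addrs_per_node=$(( 1 << (32 - node_prefix) ))

echo "node pod ranges: $max_nodes (10.244.0.0/24 ... 10.244.255.0/24)"
echo "addresses per node range: $addrs_per_node"
```

A /16 therefore supports up to 256 nodes, each with 256 pod addresses. That is comfortably above the kubelet's default limit of 110 pods per node. For larger clusters, choose a wider CIDR before running kubeadm init, because changing it afterwards is disruptive.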

Management

After configuring Kubernetes and your chosen network plugin, you can start deploying and managing containerized applications. To run an image you built with Docker, first push it to a container registry that your nodes can pull from. Then create a deployment manifest file (e.g., deployment.yaml) that specifies the container image, resource requirements, and other configuration details, and use the kubectl command-line tool to create and manage the deployment:

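```shell
# Create the Deployment described in the manifest (re-running it applies updates)
kubectl apply -f deployment.yaml
# List Deployments with their ready and up-to-date replica counts
kubectl get deployments
# Scale to three replicas; replace <name> with the Deployment's metadata.name
kubectl scale deployment <name> --replicas=3
```

By following these steps, you can integrate Kubernetes with Docker-built images and begin managing and scaling your containerized applications.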
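A minimal deployment.yaml for the commands above might look like the following. The script only writes the manifest to disk. The Deployment name my-app, its labels, the image registry.example.com/my-app:1.0, and the resource values are all hypothetical placeholders to replace with your own.

```shell
#!/bin/sh
# Write a minimal Deployment manifest to deployment.yaml.
# Hypothetical values: name "my-app", its labels, the image, and the resources.
set -eu

cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app          # must match spec.selector.matchLabels
    spec:
      containers:
        - name: web
          image: registry.example.com/my-app:1.0
          ports:
            - containerPort: 80
          resources:
            requests:        # what the scheduler reserves on a node
              cpu: 100m
              memory: 128Mi
            limits:          # hard caps enforced while the container runs
              cpu: 500m
              memory: 256Mi
          readinessProbe:    # send traffic only once port 80 answers
            httpGet:
              path: /
              port: 80
EOF

echo "wrote deployment.yaml"
```

Running `kubectl apply --dry-run=server -f deployment.yaml` asks the API server to validate the manifest without persisting it. With the sample name, the scale command becomes `kubectl scale deployment my-app --replicas=3`.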

Optimizing Performance: Best Practices for Kubernetes and Docker Integration

To maximize the performance of your containerized applications, it’s essential to follow best practices for integrating Kubernetes and Docker. This section will discuss resource allocation, networking, and storage configurations to help you optimize your Kubernetes and Docker environment.

Resource Allocation

Proper resource allocation is crucial for ensuring the smooth operation of your containerized applications. Kubernetes allows you to specify resource requests and limits for each container, ensuring that your applications receive the resources they need to function correctly without impacting other applications running on the same node.
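The scheduler works from requests, not limits or live usage. A pod fits on a node only if its requests, added to the requests of the pods already there, stay within the node's allocatable capacity. The sketch below reproduces that check for CPU using hypothetical numbers: a node with 3500m allocatable CPU, 1 CPU already requested, and pods requesting 500m each. Its helper only understands whole-CPU and millicore quantities such as 2 or 500m.

```shell
#!/bin/sh
# Does another pod fit? The scheduler compares the sum of CPU *requests*
# with the node's allocatable CPU (memory is checked the same way).
# All quantities here are hypothetical.
set -eu

# Convert a CPU quantity to millicores. Handles whole CPUs ("2") and
# millicores ("500m") only; fractional forms such as "0.5" are not supported.
to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;
    *)  echo $(( $1 * 1000 )) ;;
  esac
}

allocatable=$(to_millicores 3500m)   # node's allocatable CPU
requested=$(to_millicores 1)         # sum of requests of pods already on the node
per_pod=$(to_millicores 500m)        # CPU request of each new pod

free=$(( allocatable - requested ))
fits=$(( free / per_pod ))
echo "unrequested CPU: ${free}m; additional 500m pods that fit: $fits"
```

Requests that are set too high therefore waste capacity even when the containers sit idle. Requests that are set too low let the scheduler overcommit a node.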

To optimize resource allocation, consider the following best practices:

- Set resource requests and limits for each container based on expected usage patterns and performance requirements.

- Monitor resource utilization regularly and adjust resource requests and limits as needed to maintain optimal performance.

- Use Kubernetes’ horizontal pod autoscaling feature to automatically scale the number of replicas based on resource utilization and performance metrics.
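The sketch below illustrates the autoscaling bullet. It writes a HorizontalPodAutoscaler (autoscaling/v2) that targets 70% average CPU utilization for a hypothetical Deployment named my-app. It also reproduces the core formula from the Kubernetes documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Utilization is measured relative to the containers' CPU requests, so requests must be set. The cluster also needs a resource-metrics source such as the Metrics Server add-on. The real controller additionally applies a tolerance (0.1 by default) and clamps the result between minReplicas and maxReplicas; the function here ignores both.

```shell
#!/bin/sh
# Sketch: an autoscaling/v2 HPA for a hypothetical Deployment "my-app",
# plus the documented replica formula (no tolerance, no min/max clamping).
set -eu

cat > hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the pods' CPU requests
EOF

# desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric),
# computed with integer ceiling division.
desired_replicas() {  # args: current_replicas current_metric target_metric
  echo $(( ($1 * $2 + $3 - 1) / $3 ))
}

# 4 pods averaging 90% CPU against a 70% target: ceil(360 / 70) = 6
desired_replicas 4 90 70
```

You can create roughly the same object imperatively with `kubectl autoscale deployment my-app --cpu-percent=70 --min=2 --max=10`.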

Networking

Kubernetes provides a powerful networking model that enables secure, efficient communication between containers and services. To optimize networking performance, consider the following best practices:

- Install a CNI network plugin, which Kubernetes requires for pod-to-pod communication. Flannel provides a simple overlay network, while Calico also enforces network policies.

- Use Kubernetes Services to give a set of pods a stable virtual IP and DNS name. Use the ClusterIP type for traffic within the cluster, and NodePort or LoadBalancer to expose a Service outside it.

- Use Kubernetes ingress resources to expose your applications to external clients, using ingress controllers such as NGINX or Traefik to manage external access.
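The manifests below sketch the two exposure layers described above. A ClusterIP Service is paired with an Ingress that routes external HTTP traffic for one host to that Service. The names, the app: my-app selector, the host my-app.example.com, and the ingress class nginx are assumptions. The nginx class is the name the ingress-nginx controller installs by default. An Ingress has no effect until an ingress controller is running in the cluster.

```shell
#!/bin/sh
# Sketch: a ClusterIP Service plus an Ingress for a hypothetical app "my-app".
set -eu

cat > service-ingress.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: ClusterIP          # reachable only from inside the cluster
  selector:
    app: my-app            # must match the pods' labels
  ports:
    - port: 80             # port the Service listens on
      targetPort: 80       # container port traffic is forwarded to
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app
spec:
  ingressClassName: nginx  # assumes the ingress-nginx controller is installed
  rules:
    - host: my-app.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app
                port:
                  number: 80
EOF

echo "wrote service-ingress.yaml"
```

Applying the file with `kubectl apply -f service-ingress.yaml` creates both objects. Only the ingress controller is exposed outside the cluster, while the Service itself stays internal.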

Storage

Kubernetes provides a flexible storage model that enables you to attach and manage storage resources for your containerized applications. To optimize storage performance, consider the following best practices:

- Use persistent volumes (PVs) and persistent volume claims (PVCs) to manage storage resources for your applications, ensuring that storage is properly provisioned and attached to the correct containers.

- Configure storage classes to enable dynamic provisioning. A persistent volume claim then triggers creation of a matching volume automatically, instead of requiring an administrator to create persistent volumes in advance.

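- Use ConfigMaps (and Secrets for sensitive values) to inject configuration into containers. Use ephemeral volumes such as emptyDir for scratch data that does not need to outlive the pod. None of these resources back up application data: anything that must survive a pod's deletion belongs on a persistent volume with its own backup strategy.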
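The following sketch combines the storage mechanisms from the list. It defines a PersistentVolumeClaim for data that must outlive the pod, a ConfigMap for settings, and an emptyDir volume for scratch space, all used by a demo pod. The storage class name standard, the 5Gi size, and the busybox demo pod are hypothetical. You can check which classes your cluster offers with `kubectl get storageclass`.

```shell
#!/bin/sh
# Sketch: persistent, configuration, and scratch storage for a demo pod.
# The storage class "standard", the sizes, and all names are hypothetical.
set -eu

cat > storage-demo.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-app-data
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: standard   # a class that supports dynamic provisioning
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-app-config
data:
  LOG_LEVEL: info
---
apiVersion: v1
kind: Pod
metadata:
  name: storage-demo
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "echo $LOG_LEVEL > /data/level && sleep 3600"]
      envFrom:
        - configMapRef:
            name: my-app-config  # exposes LOG_LEVEL as an environment variable
      volumeMounts:
        - name: data
          mountPath: /data       # backed by the PVC; survives pod deletion
        - name: scratch
          mountPath: /scratch    # emptyDir; deleted together with the pod
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: my-app-data
    - name: scratch
      emptyDir: {}
EOF

echo "wrote storage-demo.yaml"
```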

By following these best practices for resource allocation, networking, and storage configurations, you can optimize the performance of your Kubernetes and Docker environment and ensure that your containerized applications run smoothly and efficiently.

Real-World Applications: Success Stories of Kubernetes and Docker Integration

Many businesses and organizations have successfully integrated Kubernetes and Docker to streamline their internal operations and improve the efficiency of their containerized applications. This section will highlight some real-world examples and success stories of Kubernetes and Docker integration.

Example 1: Netflix

Netflix, a leading streaming service provider, uses Kubernetes and Docker to manage its containerized microservices. By integrating these tools, Netflix has been able to achieve high levels of scalability, reliability, and fault tolerance for its applications.

Example 2: Airbnb

Airbnb, a popular online marketplace for vacation rentals, has also integrated Kubernetes and Docker to manage its containerized workloads. By using Kubernetes, Airbnb has achieved a high level of automation, enabling the company to deploy and manage its containerized applications quickly.

Example 3: eBay

eBay, a leading online marketplace, has also adopted Kubernetes and Docker to manage its containerized workloads, using Kubernetes to improve the scalability, reliability, and fault tolerance of its applications.

These real-world examples demonstrate the significant benefits of integrating Kubernetes and Docker for containerized deployments. By using these tools together, businesses and organizations can achieve high levels of scalability, reliability, and fault tolerance for their applications, while reducing the time and effort required to manage their containerized workloads.

Navigating Challenges: Common Issues and Troubleshooting Techniques

While integrating Kubernetes and Docker can provide numerous benefits, it can also present several challenges. In this section, we will identify some common issues that may arise when integrating Kubernetes and Docker, and offer practical troubleshooting techniques and solutions.

Challenge 1: Configuration Management

Managing configurations for Kubernetes and Docker can be complex and time-consuming. Configuration drift, where the live state of a cluster gradually diverges from the intended configuration, can lead to errors and downtime. To address this challenge, keep your manifests in version control and use a tool such as Helm or Kustomize. Helm packages manifests as versioned, parameterized charts, while Kustomize layers environment-specific overlays on a shared base. Both make deployments repeatable and reduce the risk of configuration drift.
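A minimal Kustomize layout might look like the sketch below. A base holds the shared Deployment, and a prod overlay changes only the replica count and image tag. The directory names, the Deployment my-app, and the image registry.example.com/my-app with tag 1.1 are hypothetical.

```shell
#!/bin/sh
# Sketch: a Kustomize base plus a "prod" overlay. All names are hypothetical.
set -eu
mkdir -p base overlays/prod

cat > base/deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: web
          image: registry.example.com/my-app:1.0
EOF

cat > base/kustomization.yaml <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - deployment.yaml
EOF

# The overlay reuses the base and declares only what differs in production.
cat > overlays/prod/kustomization.yaml <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
replicas:
  - name: my-app
    count: 5
images:
  - name: registry.example.com/my-app
    newTag: "1.1"
EOF

echo "render with: kubectl kustomize overlays/prod"
```

`kubectl kustomize overlays/prod` prints the rendered manifests, and `kubectl apply -k overlays/prod` applies them. Because each environment's differences live in a small overlay file under version control, drift between environments becomes visible in code review.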

Challenge 2: Resource Allocation

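Properly allocating resources for Kubernetes and Docker is essential for optimal performance, but choosing the right values can be challenging. Start from observed usage: kubectl top pods, which requires the Metrics Server add-on, shows current CPU and memory consumption. Then set resource requests and limits accordingly so that each container receives the resources it needs. Two symptoms point to a mismatch. Pods stuck in Pending with FailedScheduling events are usually requesting more than any node has available. Containers terminated with the reason OOMKilled exceeded their memory limit.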
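Two namespace-level objects help enforce requests and limits across a team. A LimitRange fills in default requests and limits for containers that do not declare them. A ResourceQuota caps the total a namespace can request. The namespace team-a and all numbers in this sketch are hypothetical starting points, not recommendations.

```shell
#!/bin/sh
# Sketch: namespace-wide resource defaults and caps for a hypothetical namespace.
set -eu

cat > team-a-resources.yaml <<'EOF'
apiVersion: v1
kind: LimitRange
metadata:
  name: default-container-resources
  namespace: team-a
spec:
  limits:
    - type: Container
      defaultRequest:      # used when a container declares no requests
        cpu: 100m
        memory: 128Mi
      default:             # used when a container declares no limits
        cpu: 500m
        memory: 256Mi
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:                    # totals across all pods in the namespace
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
EOF

echo "wrote team-a-resources.yaml"
```

Once a quota on requests.cpu or requests.memory exists, the API server rejects pods that do not specify those values. The LimitRange defaults are useful alongside the quota because they supply those values automatically.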

Challenge 3: Networking

Setting up and managing networking for Kubernetes and Docker can be complex, particularly when dealing with multiple containers and services. Kubernetes' built-in service discovery simplifies this: every Service gets a stable DNS name, so containers can reach each other without hard-coded pod IP addresses. When a Service is unreachable, first check whether it has any endpoints (kubectl get endpointslices -l kubernetes.io/service-name=<service>). An empty list usually means the Service's selector does not match the pods' labels.
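Service discovery relies on predictable DNS names of the form <service>.<namespace>.svc.<cluster-domain>. The small helper below builds that name. cluster.local is the default cluster domain, but a cluster can be configured with a different one. The Service my-app in namespace default is a hypothetical example.

```shell
#!/bin/sh
# Build the in-cluster DNS name of a Service:
#   <service>.<namespace>.svc.<cluster-domain>
# cluster.local is the default cluster domain; some clusters use another.
set -eu

service_fqdn() {  # args: service namespace [cluster-domain]
  echo "$1.$2.svc.${3:-cluster.local}"
}

# Hypothetical Service "my-app" in the "default" namespace
service_fqdn my-app default   # prints my-app.default.svc.cluster.local
```

To test resolution from inside the cluster, the Kubernetes DNS debugging guide deploys a dnsutils pod with `kubectl apply -f https://k8s.io/examples/admin/dns/dnsutils.yaml`. You can then run `kubectl exec -i -t dnsutils -- nslookup` followed by the name. If the name resolves but connections still fail, the problem is more likely the Service's endpoints or a network policy than DNS.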

Challenge 4: Security

Securing Kubernetes and Docker environments can be challenging, particularly when dealing with multiple containers and services. To address this challenge, use Kubernetes' built-in security features. Network policies restrict which pods can communicate with each other, and role-based access control (RBAC) restricts what users and service accounts can do through the Kubernetes API. Network policies are enforced only if the network plugin supports them: Calico does, while Flannel on its own does not.
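The following sketch shows both controls for a hypothetical namespace team-a. It contains a default-deny NetworkPolicy and a second policy that re-allows traffic on port 80 from pods labeled app: frontend to pods labeled app: my-app. It also defines a read-only RBAC Role bound to a placeholder user, jane@example.com. All names and labels are illustrative.

```shell
#!/bin/sh
# Sketch: default-deny networking plus least-privilege RBAC in a hypothetical namespace.
set -eu

cat > team-a-security.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: team-a
spec:
  podSelector: {}          # selects every pod in the namespace
  policyTypes:
    - Ingress              # no ingress rules listed, so all inbound traffic is denied
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-my-app
  namespace: team-a
spec:
  podSelector:
    matchLabels:
      app: my-app
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 80
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: team-a
rules:
  - apiGroups: [""]        # "" is the core API group
    resources: ["pods", "pods/log"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: team-a
subjects:
  - kind: User
    name: jane@example.com
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
EOF

echo "wrote team-a-security.yaml"
```

Starting from a default-deny policy and adding narrow allow rules keeps the set of permitted connections explicit. After applying the RBAC objects, you can check the binding with `kubectl auth can-i list pods --namespace team-a --as jane@example.com`.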

By understanding these common challenges and implementing the appropriate troubleshooting techniques and solutions, businesses and organizations can effectively navigate the complexities of integrating Kubernetes and Docker and ensure that their containerized applications run smoothly and securely.

Staying Updated: Keeping Your Kubernetes and Docker Skills Current

Staying updated on the latest developments and updates for Kubernetes and Docker is essential for ensuring that you can effectively manage and optimize your containerized deployments. In this section, we will discuss the importance of staying current and offer resources and strategies for continuous learning and improvement.

Why Stay Updated?

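Kubernetes and Docker evolve quickly, with new features, updates, and bug fixes released regularly. Kubernetes, for example, ships a new minor release roughly three times a year and provides patches only for the three most recent minor versions, so a cluster that is not upgraded periodically soon falls out of support. Staying current on these developments helps you take advantage of new functionality, improve performance, and reduce the risk of security vulnerabilities. Additionally, staying updated can help you stay competitive in your industry and demonstrate your expertise to clients and colleagues.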

Resources for Staying Updated

There are numerous resources available for staying updated on Kubernetes and Docker, including:

- Official documentation: Both Kubernetes and Docker maintain comprehensive documentation that is regularly updated with the latest information.

- Blogs and newsletters: Many organizations and individuals maintain blogs and newsletters that cover the latest developments in Kubernetes and Docker. Consider subscribing to a few of your favorites to stay up-to-date.

- Online courses and training: Online platforms such as Udemy, Coursera, and edX offer a wide range of courses and training programs on Kubernetes and Docker, many of which are regularly updated with the latest information.

- Community events and conferences: Kubernetes and Docker have active communities that host regular events and conferences, both online and in-person. Attending these events can be a great way to learn from experts, network with peers, and stay up-to-date on the latest developments.

Strategies for Continuous Learning

To stay current on Kubernetes and Docker, consider implementing the following strategies:

- Set aside dedicated time for learning: Set aside a specific amount of time each week or month for learning about Kubernetes and Docker. This can help you stay consistent and ensure that you are making progress over time.

- Experiment with new features and updates: Try out new features and releases in a test cluster before adopting them in production. This gives you hands-on experience and lets you catch compatibility issues before they affect your users.

- Join online communities: Join online communities such as forums, Slack channels, and social media groups. These communities can be a great source of information, support, and inspiration.

- Collaborate with peers: Collaborate with peers who are also interested in Kubernetes and Docker. Sharing knowledge and experiences can help you learn faster and more effectively.

By staying updated on the latest developments and updates for Kubernetes and Docker, you can ensure that you have the skills and knowledge needed to effectively manage and optimize your containerized deployments. Additionally, by implementing strategies for continuous learning and improvement, you can stay ahead of the curve and demonstrate your expertise to clients and colleagues.

Future Trends: The Evolution of Kubernetes and Docker Integration

As Kubernetes and Docker continue to evolve and gain widespread adoption, businesses and organizations can expect to see new trends and developments in their integration. In this section, we will explore some of these future trends and discuss their implications for businesses and organizations in various industries.

Trend 1: Increased Adoption of Kubernetes and Docker in Edge Computing

Edge computing, which involves processing data closer to the source, is becoming increasingly important as businesses and organizations seek to reduce latency, improve performance, and reduce bandwidth costs. Kubernetes and Docker are well-suited for edge computing environments, and we can expect to see increased adoption of these tools in this space.

Trend 2: Integration with Serverless Architectures

Serverless architectures, which let developers run code without managing the underlying infrastructure, are gaining popularity. Projects such as Knative add serverless capabilities, including scale-to-zero and event-driven workloads, on top of Kubernetes. This lets businesses and organizations run serverless functions and traditional containerized services on the same platform.

Trend 3: Improved Security Features

Security is a top concern for businesses and organizations implementing Kubernetes and Docker. As these tools continue to evolve, we can expect to see improved security features, such as enhanced network segmentation, better access controls, and more robust encryption.

Trend 4: Integration with AI and Machine Learning Tools

AI and machine learning are becoming increasingly important in industries from healthcare to finance. Kubernetes can schedule GPU-accelerated containers through device plugins, and projects such as Kubeflow build machine learning pipelines on top of it. Together, these tools enable businesses and organizations to build and deploy intelligent applications more efficiently.

Trend 5: Greater Emphasis on Multi-Cloud Strategies

As businesses and organizations seek to reduce vendor lock-in and improve flexibility, we can expect to see a greater emphasis on multi-cloud strategies. Kubernetes and Docker can be used to manage containerized workloads across multiple clouds, enabling businesses and organizations to take advantage of the benefits of multiple cloud providers.

By staying up-to-date on the latest trends and developments in Kubernetes and Docker integration, businesses and organizations can ensure that they are taking full advantage of these powerful tools and positioning themselves for success in an ever-evolving technological landscape.