What are Kubernetes Deployments?

Kubernetes deployments are a core component of the Kubernetes platform, designed to manage containerized applications. A Deployment provides a declarative way to define the desired state of an application, including the container image, the number of replicas, and the update strategy. The Deployment controller then continuously works to make the cluster's actual state match that desired state. By automating the rollout, scaling, and replacement of application Pods, Kubernetes deployments help ensure high availability, seamless updates, and minimal downtime.

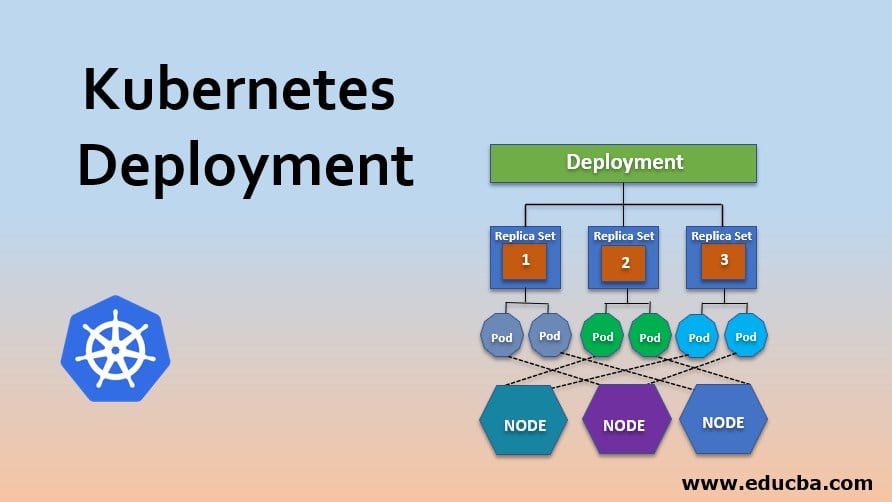

Key Components of Kubernetes Deployments

Kubernetes deployments build on several components that work together to keep applications available and updates smooth: ReplicaSets, Pods, and deployment strategies. A Deployment manages one or more ReplicaSets, and each ReplicaSet maintains a specified number of identical Pods, replacing any that fail so that the desired number of replicas keeps running. Pods, the smallest deployable units in Kubernetes, each represent a single instance of a running process in the cluster. Deployment strategies define how Kubernetes moves Pods from one application version to the next: the default, RollingUpdate, replaces Pods gradually, while Recreate terminates all existing Pods before creating new ones.

Creating a Kubernetes Deployment

To create a Kubernetes deployment, you can either apply a YAML manifest with kubectl or use imperative kubectl commands. The following steps demonstrate the manifest-based approach:

- Create a YAML file defining the desired state and configuration of your application. Here’s an example:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3                # number of identical Pods to keep running
  selector:
    matchLabels:
      app: my-app            # which Pods this Deployment manages
  template:
    metadata:
      labels:
        app: my-app          # must match spec.selector.matchLabels
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:1.0.0
          ports:
            - containerPort: 8080
```

- Apply the YAML file using the kubectl apply command:

```shell
kubectl apply -f my-app-deployment.yaml
```

Alternatively, you can create a deployment using kubectl commands directly:

```shell
kubectl create deployment my-app --image=my-app-image:1.0.0 --replicas=3
```

When creating a Kubernetes deployment, follow these best practices:

- Define the desired state and configuration using a YAML file for better maintainability and version control.

- Set resource requests and limits to ensure efficient resource utilization.

- Implement proper labeling and annotation strategies for easier management and discovery of your deployments.

- Use declarative configuration to manage your deployments, allowing Kubernetes to automatically reconcile the actual state with the desired state.
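To make the resource practice concrete, here is a sketch of the my-app manifest from above with resource requests and limits added. The CPU and memory figures and the tier label are illustrative assumptions, not recommendations; size them from your application's observed usage.

```yaml
# Sketch: the my-app Deployment with requests and limits set.
# The tier label and all resource figures are illustrative placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  labels:
    app: my-app
    tier: backend              # hypothetical label for grouping
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app            # must match spec.selector.matchLabels
        tier: backend
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:1.0.0
          ports:
            - containerPort: 8080
          resources:
            requests:          # reserved by the scheduler when placing the Pod
              cpu: 250m
              memory: 256Mi
            limits:            # hard caps enforced while the container runs
              cpu: 500m
              memory: 512Mi
```

Requests are what the scheduler reserves when choosing a node. A container that exceeds its CPU limit is throttled, while one that exceeds its memory limit is terminated (OOMKilled).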

Rolling Updates and Rollbacks in Kubernetes Deployments

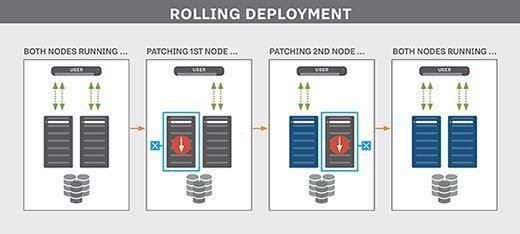

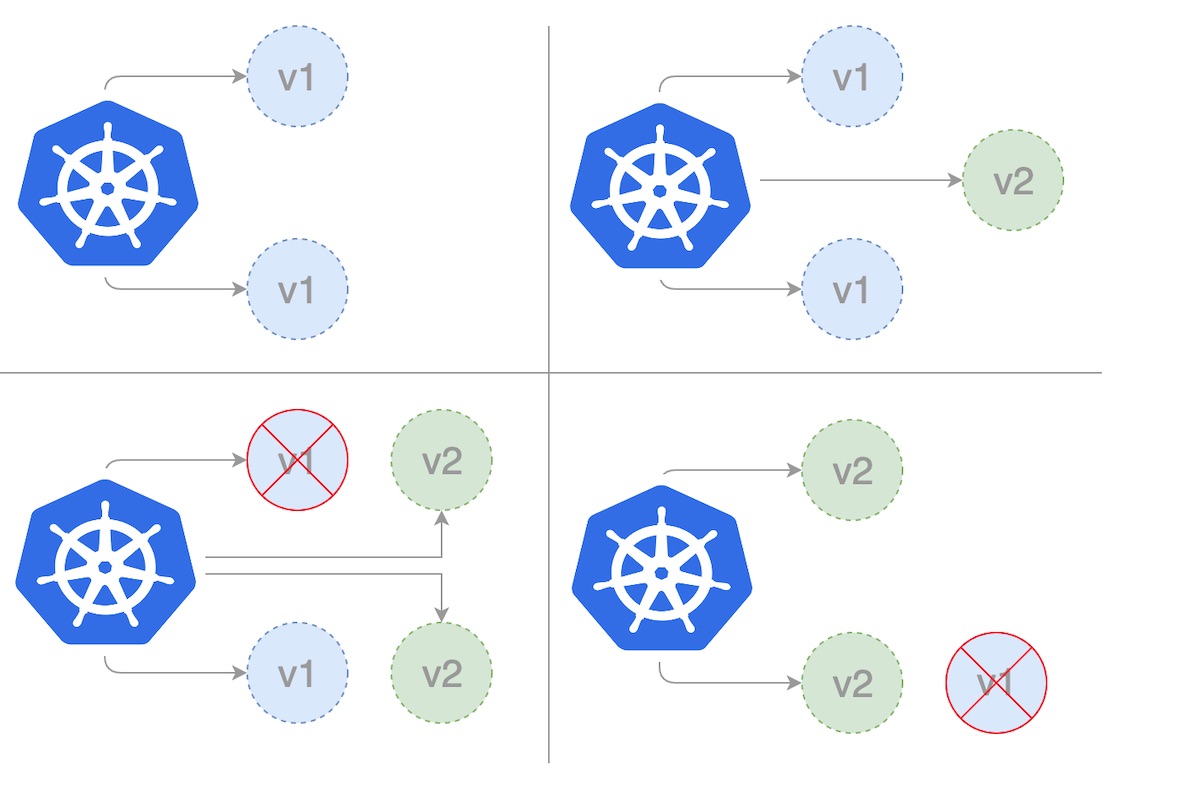

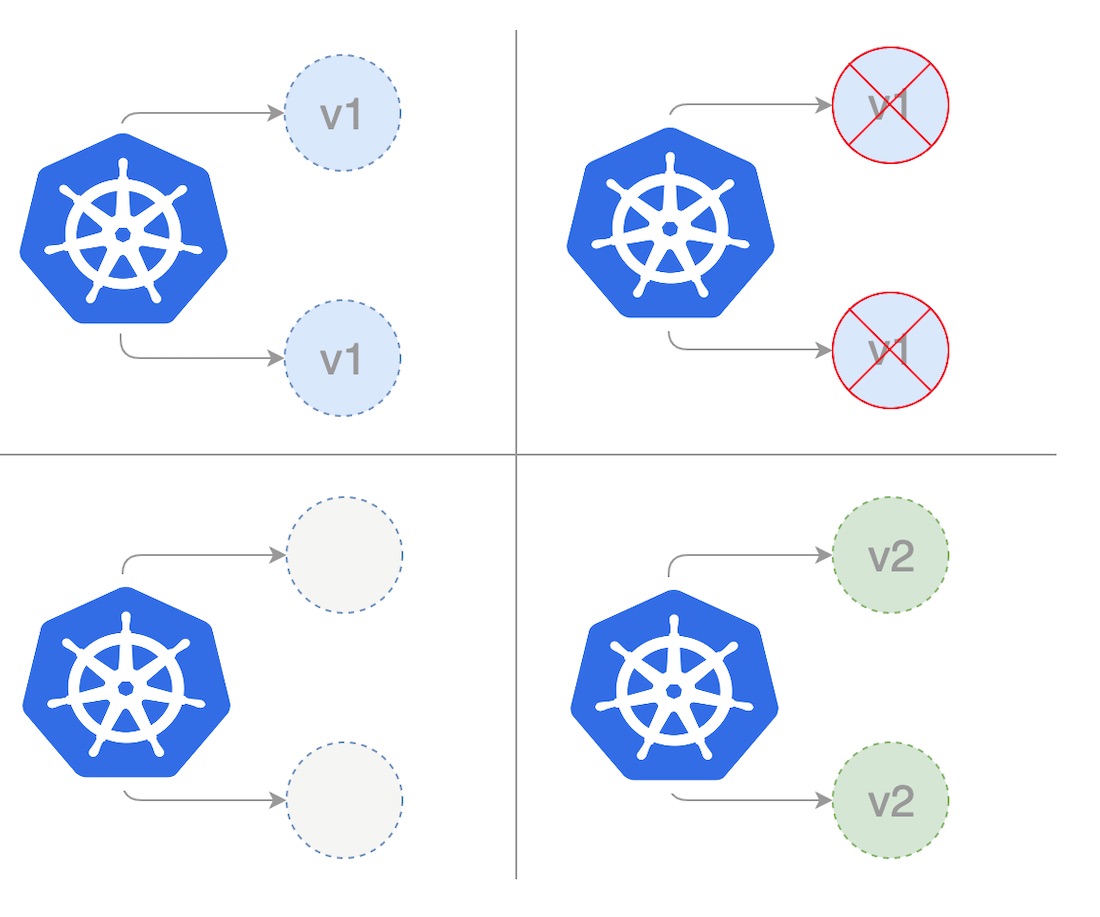

Rolling updates and rollbacks are essential features of Kubernetes deployments that help maintain high availability and ensure seamless application updates. Rolling updates let you move to a new application version with little or no downtime, while rollbacks let you revert to a previous version if something goes wrong.

Performing Rolling Updates

To perform a rolling update, change the image tag (or any other field in the Pod template) in your deployment’s YAML file and apply the change with kubectl apply. Kubernetes then creates a new ReplicaSet for the updated Pod template and gradually shifts Pods from the old ReplicaSet to the new one, keeping the number of available Pods within configurable bounds (the maxSurge and maxUnavailable settings, both 25% by default). Because only part of the capacity is replaced at a time, this process minimizes downtime and limits the impact of a faulty release.
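The pace of a rolling update is set in the Deployment’s strategy field. The fragment below is a minimal sketch, assuming the my-app Deployment shown earlier and a hypothetical new image tag 1.1.0; merge it into the full manifest rather than applying it on its own.

```yaml
# Fragment of the my-app Deployment spec (merge into the full manifest).
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1              # at most 1 Pod above the desired count during the update
      maxUnavailable: 0        # never drop below 3 available Pods
  template:
    spec:
      containers:
        - name: my-app-container
          # Hypothetical new version; changing the Pod template triggers the rollout.
          image: my-app-image:1.1.0
```

With maxUnavailable: 0 and maxSurge: 1, Kubernetes starts one new Pod, waits for it to become available, and only then removes an old Pod, so capacity never drops below three replicas. This depends on meaningful readiness: without a readiness probe, a Pod counts as ready as soon as its containers start. After applying the change, kubectl rollout status deployment/my-app reports progress until the rollout completes.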

Performing Rollbacks

If you need to roll back to a previous version of your application, run kubectl rollout undo followed by the deployment name, for example kubectl rollout undo deployment/my-app. Kubernetes reverts the Pod template to the previous revision and scales that revision’s ReplicaSet back up while scaling the newer one down, using the same gradual process as an update. This keeps downtime minimal and lets you recover quickly from failed updates or unanticipated issues.
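Rollbacks depend on the old ReplicaSets that a Deployment keeps around. In the sketch below, which assumes the my-app Deployment and an illustrative change message, revisionHistoryLimit sets how many old revisions are retained (the default is 10), and the kubernetes.io/change-cause annotation records a human-readable reason for the revision.

```yaml
# Fragment of the my-app Deployment manifest relevant to rollbacks.
metadata:
  name: my-app
  annotations:
    # Shown in the CHANGE-CAUSE column of `kubectl rollout history`.
    # The message text is illustrative.
    kubernetes.io/change-cause: "Upgrade my-app-image to 1.1.0"
spec:
  # Keep the 5 most recent old ReplicaSets available for rollback.
  revisionHistoryLimit: 5
```

kubectl rollout history deployment/my-app lists the retained revisions with their change causes, and kubectl rollout undo deployment/my-app --to-revision=2 returns to a specific one (revision 2 is only an example). Setting revisionHistoryLimit to 0 discards all history, which makes rollbacks impossible.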

Best Practices for Rolling Updates and Rollbacks

- Test updates in a staging environment before applying them to production deployments.

- Use canary releases or blue-green deployments for more controlled update strategies.

- Monitor resource utilization, application performance, and system logs during updates and rollbacks to ensure smooth deployment operations.

- Implement proper labeling and annotation strategies to facilitate easy management and discovery of your deployments.
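Kubernetes has no built-in canary strategy, but a common pattern runs a second, smaller Deployment behind the same Service. This is a sketch under several assumptions: the stable Deployment’s selector and Pod labels include track: stable (so the two Deployments’ selectors do not overlap), the name my-app-canary and the 1.1.0 tag are hypothetical, and the Service forwards port 80 to the container’s port 8080.

```yaml
# Service selecting only `app: my-app`, so it matches stable and canary Pods alike.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app
  ports:
    - port: 80
      targetPort: 8080
---
# Hypothetical canary Deployment running the candidate version.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-canary
spec:
  replicas: 1                  # 1 canary next to 3 stable Pods
  selector:
    matchLabels:
      app: my-app
      track: canary            # distinguishes canary Pods from track: stable
  template:
    metadata:
      labels:
        app: my-app
        track: canary
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:1.1.0
          ports:
            - containerPort: 8080
```

Because the Service spreads requests across all four matching Pods, the canary receives roughly a quarter of the traffic. If the canary behaves well, update the stable Deployment’s image and delete my-app-canary; if it does not, deleting the canary Deployment removes it from rotation.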

Scaling Kubernetes Deployments

Scaling Kubernetes deployments is the process of adjusting the number of Pods or resources allocated to a deployment to accommodate changes in demand or resource requirements. Kubernetes supports two scaling methods: horizontal scaling and vertical scaling. Both methods have their benefits and use cases, depending on the specific requirements of your application.

Horizontal Scaling

Horizontal scaling adds Pod replicas to, or removes them from, a deployment. This method is useful when you need to react quickly to changes in traffic or resource demand. It also improves resilience to hardware failures, because replicas can be scheduled on different nodes in the cluster. To scale a deployment horizontally, use the kubectl scale command with the deployment name and the desired number of replicas:

```shell
kubectl scale deployment my-app --replicas=5
```

Vertical Scaling

Vertical scaling increases or decreases the resources (CPU, memory, or storage) allocated to each Pod. This method is useful for resource-intensive workloads or applications with predictable resource requirements. However, vertical scaling has limits: a Pod cannot be given more resources than a single node can provide. To scale a deployment vertically, update the resource requests and limits in the deployment’s Pod template and apply the changes with kubectl. Because this changes the Pod template, Kubernetes replaces the existing Pods through a rolling update.
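As a sketch, vertically scaling the my-app Deployment means editing the resources block in its Pod template. The values below are illustrative assumptions, and the fragment shows only the fields that change; the container name is kept so the change targets the right container.

```yaml
# Fragment of the my-app Deployment: larger per-Pod resources (illustrative values).
spec:
  template:
    spec:
      containers:
        - name: my-app-container
          resources:
            requests:
              cpu: "1"         # one full CPU reserved per Pod
              memory: 1Gi
            limits:
              cpu: "2"
              memory: 2Gi
```

Applying the updated manifest triggers a rolling update, so the Pods are recreated with the new sizes. If no node has enough unreserved capacity for the larger requests, the new Pods stay Pending, which is the single-node limit described above showing up in practice.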

Best Practices for Scaling Kubernetes Deployments

- Monitor resource utilization and application performance to determine the optimal scaling strategy for your application.

- Implement proper labeling and annotation strategies to facilitate easy management and discovery of your deployments.

- Use autoscaling features, such as the Kubernetes Horizontal Pod Autoscaler (HPA) to adjust replica counts based on observed metrics, or the Cluster Autoscaler to add or remove nodes when Pods cannot be scheduled or nodes sit underused.

- Test scaling strategies in a staging environment before applying them to production deployments.
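A minimal HorizontalPodAutoscaler for the my-app Deployment might look like the sketch below. It assumes the Metrics API is available in the cluster (for example via metrics-server) and that the containers declare CPU requests, since utilization is measured as a percentage of the request. The replica bounds and the 70% target are illustrative.

```yaml
# HPA scaling the my-app Deployment on average CPU utilization.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app
spec:
  scaleTargetRef:              # the workload this HPA resizes
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # target average CPU, as % of requests
```

The HPA computes roughly desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization) and clamps the result between minReplicas and maxReplicas. For example, 4 replicas averaging 105% CPU against a 70% target scale to ceil(4 × 105 / 70) = 6. When an HPA manages a Deployment, consider removing spec.replicas from the Deployment manifest so that re-applying it does not reset the replica count.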

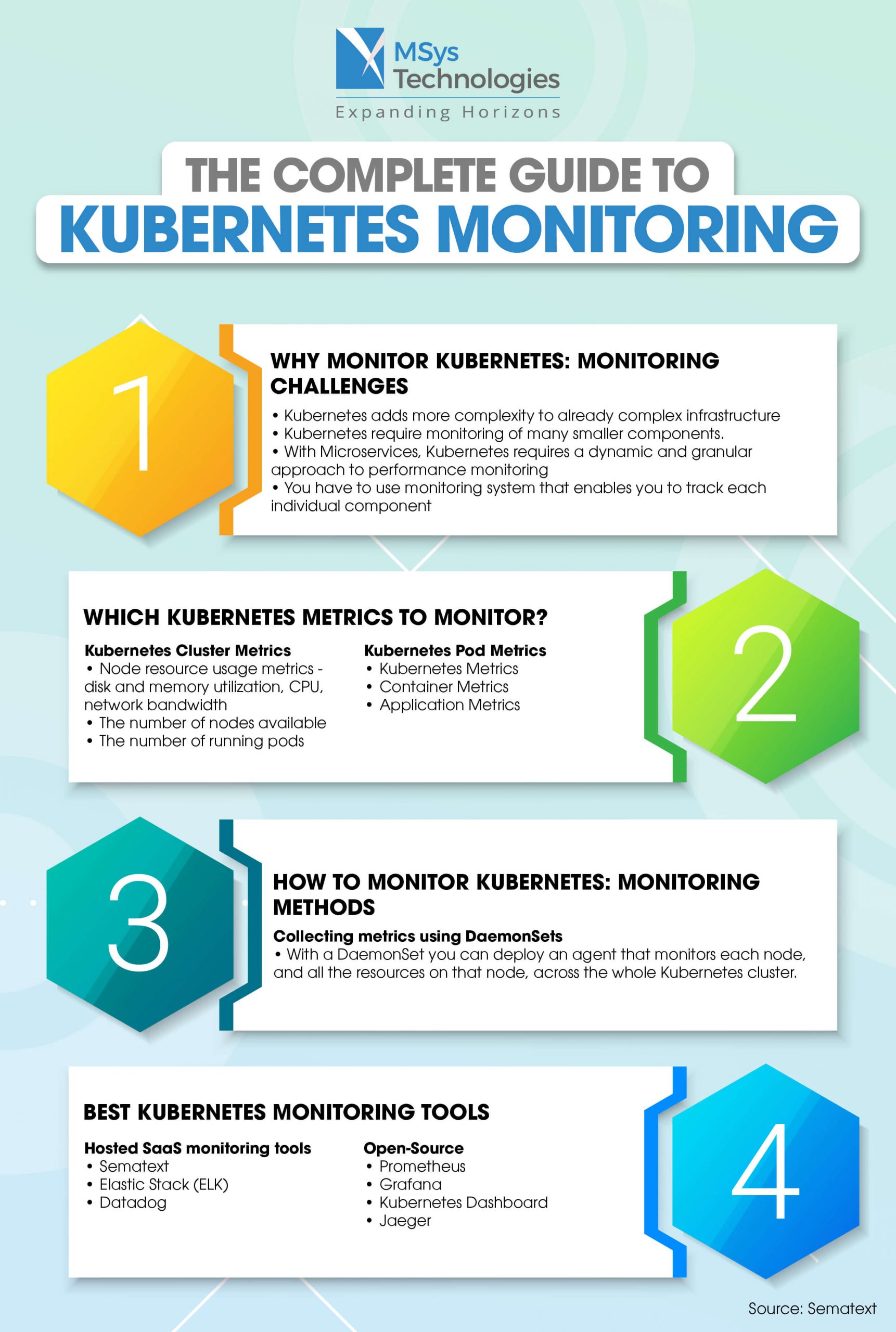

Monitoring and Troubleshooting Kubernetes Deployments

Monitoring and troubleshooting Kubernetes deployments are crucial for ensuring smooth deployment operations and maintaining high availability. Various tools and techniques are available to monitor resource utilization, application performance, and system logs. By effectively monitoring your deployments, you can quickly identify and resolve issues, ensuring optimal performance and reliability.

Monitoring Resource Utilization

Monitoring resource utilization involves tracking the CPU, memory, and storage usage of your deployments. The Kubernetes Metrics API, typically served by the metrics-server add-on, exposes current CPU and memory usage for nodes and Pods, which you can view with kubectl top or the Kubernetes Dashboard. For historical data and alerting, tools like Prometheus collect cluster metrics and Grafana visualizes them, allowing you to chart resource usage, set alerts for specific thresholds, and identify potential performance bottlenecks.

Monitoring Application Performance

Monitoring application performance involves tracking the availability, response time, and error rates of your applications. Tools like Jaeger, Zipkin, or OpenTelemetry can be used to trace requests across multiple services and identify performance issues. Additionally, synthetic monitoring solutions, such as LoadImpact or Uptrends, can simulate user interactions and measure the overall responsiveness and reliability of your applications.

Monitoring System Logs

Monitoring system logs involves collecting, aggregating, and analyzing logs from your Kubernetes components, applications, and infrastructure. Tools like Fluentd, Logstash, or Elasticsearch can be used to centralize logs and provide real-time insights into system events, errors, and warnings. By analyzing logs, you can quickly identify and resolve issues, ensuring optimal performance and reliability.

Best Practices for Monitoring and Troubleshooting Kubernetes Deployments

- Implement proper labeling and annotation strategies to facilitate easy management and discovery of your deployments.

- Configure alerts and notifications for critical resource usage, application performance, and system log events.

- Regularly review monitoring data and logs to identify trends, patterns, and potential issues.

- Test monitoring and troubleshooting strategies in a staging environment before applying them to production deployments.
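As one example of configuring alerts, here is a sketch of a Prometheus alerting rule for the my-app Deployment. It assumes Prometheus scrapes kube-state-metrics, which exports the kube_deployment_spec_replicas and kube_deployment_status_replicas_available series; the alert name, 10-minute window, and severity label are illustrative.

```yaml
# Prometheus rule file: alert when my-app runs below its desired replica count.
groups:
  - name: my-app-deployment
    rules:
      - alert: MyAppReplicasUnavailable          # hypothetical alert name
        expr: |
          kube_deployment_status_replicas_available{deployment="my-app"}
            < kube_deployment_spec_replicas{deployment="my-app"}
        for: 10m                                 # shortfall must persist this long
        labels:
          severity: warning
        annotations:
          summary: "my-app has fewer available replicas than desired"
```

The for: 10m clause keeps a normal rolling update, which can briefly reduce the number of available replicas, from triggering the alert; it fires only if the shortfall persists.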

Best Practices for Kubernetes Deployments

Adhering to best practices for Kubernetes deployments can help ensure high availability, seamless updates, and efficient resource utilization. By following these guidelines, you can build and maintain reliable, scalable, and manageable Kubernetes deployments that meet the needs of your containerized applications.

Use Declarative Configuration

Declarative configuration involves defining the desired state of your Kubernetes resources in a YAML or JSON file. This approach simplifies deployment management, as you can easily track changes, version control your configurations, and apply updates using tools like kubectl or Helm. By using declarative configuration, you can maintain a consistent and predictable environment for your applications.

Set Resource Requests and Limits

Setting resource requests and limits for your containers helps Kubernetes schedule and manage resources efficiently across the cluster. Requests tell the scheduler how much CPU and memory to reserve for a container when choosing a node, while limits cap what the container may consume at runtime. Specifying both in your deployment configuration prevents resource contention, improves cluster stability, and ensures that your applications receive the resources they need to operate effectively.
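Requests and limits can also be enforced for a whole namespace with a LimitRange. The sketch below assumes a hypothetical namespace my-app-namespace and illustrative values: containers that omit their own requests or limits receive the defaults, and a container whose limits exceed max is rejected when its Pod is created.

```yaml
# Namespace-wide container defaults and caps (hypothetical namespace and values).
apiVersion: v1
kind: LimitRange
metadata:
  name: default-container-resources
  namespace: my-app-namespace
spec:
  limits:
    - type: Container
      defaultRequest:          # applied when a container sets no request
        cpu: 250m
        memory: 256Mi
      default:                 # applied when a container sets no limit
        cpu: 500m
        memory: 512Mi
      max:                     # upper bound any container may declare
        cpu: "2"
        memory: 2Gi
```

A LimitRange is checked only when Pods are admitted, so existing Pods keep their current settings until they are recreated.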

Implement Proper Labeling and Annotation Strategies

Proper labeling and annotation strategies enable you to organize, filter, and select Kubernetes resources based on specific criteria. By using consistent and descriptive labels and annotations, you can simplify deployment management, improve resource discovery, and facilitate operations like scaling, rolling updates, and troubleshooting.
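Kubernetes documents a set of recommended label keys under the app.kubernetes.io/ prefix that many tools understand. Here is a sketch of object metadata using them; the label values and the example.com annotation key are hypothetical.

```yaml
# Object metadata using the recommended app.kubernetes.io/* label keys.
metadata:
  labels:
    app.kubernetes.io/name: my-app
    app.kubernetes.io/instance: my-app-prod
    app.kubernetes.io/version: "1.0.0"
    app.kubernetes.io/component: backend
    app.kubernetes.io/part-of: shop
    app.kubernetes.io/managed-by: helm
  annotations:
    # Annotations carry non-identifying metadata; this key is hypothetical.
    example.com/on-call: "team-payments@example.com"
```

With consistent labels, kubectl get deployments,pods -l app.kubernetes.io/part-of=shop selects every object in the application at once. Keep frequently changing labels such as app.kubernetes.io/version out of a Deployment’s spec.selector, because the selector cannot be changed after the Deployment is created.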

Monitor and Audit Your Deployments

Monitoring and auditing your Kubernetes deployments involve tracking resource utilization, application performance, and system logs to ensure smooth deployment operations. By regularly reviewing monitoring data and audit logs, you can identify trends, patterns, and potential issues, allowing you to proactively address problems and maintain a high-performing and reliable environment for your applications.

Test and Validate Changes in a Staging Environment

Testing and validating changes in a staging environment before applying them to production deployments can help prevent issues, reduce downtime, and ensure a smooth deployment process. By simulating real-world scenarios and testing updates in a controlled environment, you can identify and resolve potential problems before impacting your production workloads.

Continuously Learn and Adapt

Kubernetes is a rapidly evolving platform, and new features, best practices, and tools are constantly emerging. To stay current and maintain a high-performing environment, it’s essential to continuously learn, adapt, and refine your Kubernetes deployment strategies. Engage with the Kubernetes community, attend events, and participate in online forums to stay informed and up-to-date on the latest developments in the Kubernetes ecosystem.

Popular Kubernetes Deployment Tools and Solutions

When working with Kubernetes deployments, various tools and solutions can help simplify the deployment process, streamline management tasks, and enhance the overall developer experience. This section reviews three popular Kubernetes deployment tools and solutions: Helm, Kustomize, and Jenkins X. We’ll discuss their features, benefits, and limitations, providing guidance on selecting the right tool for your use case.

Helm

Helm is a package manager for Kubernetes that simplifies the deployment and management of applications. With Helm, you package your Kubernetes manifests into reusable charts, making it easy to install, upgrade, and roll back applications. A chart combines templates with a values file, so you can customize and configure a deployment for your specific requirements by overriding values rather than editing the manifests themselves.

- Features: Charts, releases, hooks, values, and templates

- Benefits: Simplified deployment, versioning, and rollbacks

- Limitations: Additional complexity, learning curve, and potential security concerns
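A minimal sketch of how a chart’s values feed its templates, assuming a hypothetical chart for my-app. The file layout follows Helm’s conventions (values.yaml at the chart root, manifests under templates/), but the names and values are illustrative, and the template part is not valid YAML until Helm renders it.

```yaml
# values.yaml (hypothetical chart defaults)
replicaCount: 3
image:
  repository: my-app-image
  tag: "1.0.0"

# templates/deployment.yaml (excerpt). Helm fills in the {{ }} expressions
# from values.yaml plus any overrides given at install or upgrade time.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
        - name: my-app-container
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          ports:
            - containerPort: 8080
```

With this layout, helm install my-app ./my-app-chart --set replicaCount=5 renders the templates with an overridden replica count, helm upgrade applies later changes as a new release revision, and helm rollback my-app 1 returns the release to revision 1.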

Kustomize

Kustomize is a tool for customizing Kubernetes objects through a kustomization file; it is available as a standalone binary and is also built into kubectl. It enables you to define a base configuration and apply customizations, such as patches, overlays, and generated resources, without modifying the original YAML files. Kustomize simplifies the management of complex deployments and promotes reusability and consistency across environments.

- Features: Patches, overlays, generators, and transformers

- Benefits: Customization, reusability, and consistency

- Limitations: Limited support for advanced features, such as hooks and versioning
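A sketch of the base-and-overlay layout, assuming a hypothetical directory structure in which the my-app Deployment manifest lives in base/deployment.yaml. The block shows two separate kustomization.yaml files; the production tag and replica count are illustrative.

```yaml
# base/kustomization.yaml
resources:
  - deployment.yaml            # the my-app Deployment manifest

# overlays/production/kustomization.yaml
resources:
  - ../../base                 # start from the shared base
images:
  - name: my-app-image         # rewrite this image's tag in every container using it
    newTag: "1.1.0"
replicas:
  - name: my-app               # override the Deployment's replica count
    count: 5
```

kustomize build overlays/production prints the customized manifests without touching the base files, and kubectl apply -k overlays/production applies them directly.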

Jenkins X

Jenkins X is a project that simplifies the process of developing, building, and deploying cloud-native applications on Kubernetes. It includes tools for continuous integration (CI), continuous delivery (CD), and GitOps, enabling you to automate the entire software development lifecycle. Jenkins X supports various CI/CD tools, such as Jenkins, Tekton, and GitHub Actions, and integrates with popular Kubernetes platforms like Google Kubernetes Engine (GKE) and Amazon Elastic Kubernetes Service (EKS).

- Features: CI/CD pipelines, GitOps, and automated deployments

- Benefits: Streamlined development, simplified deployments, and automated workflows

- Limitations: Additional complexity, learning curve, and potential security concerns

Selecting the Right Tool for Your Use Case

When selecting a Kubernetes deployment tool or solution, consider the following factors:

- Complexity: Choose a tool that aligns with your team’s expertise and the complexity of your deployments.

- Customization: Select a tool that supports the level of customization required for your applications and environments.

- Integration: Ensure that the tool integrates with your existing infrastructure, tools, and workflows.

- Scalability: Choose a tool that can scale with your deployments and handle increasing demands.

- Security: Evaluate the security features and potential vulnerabilities of each tool before making a decision.