Kubernetes Control Plane: The Brains of the Operation

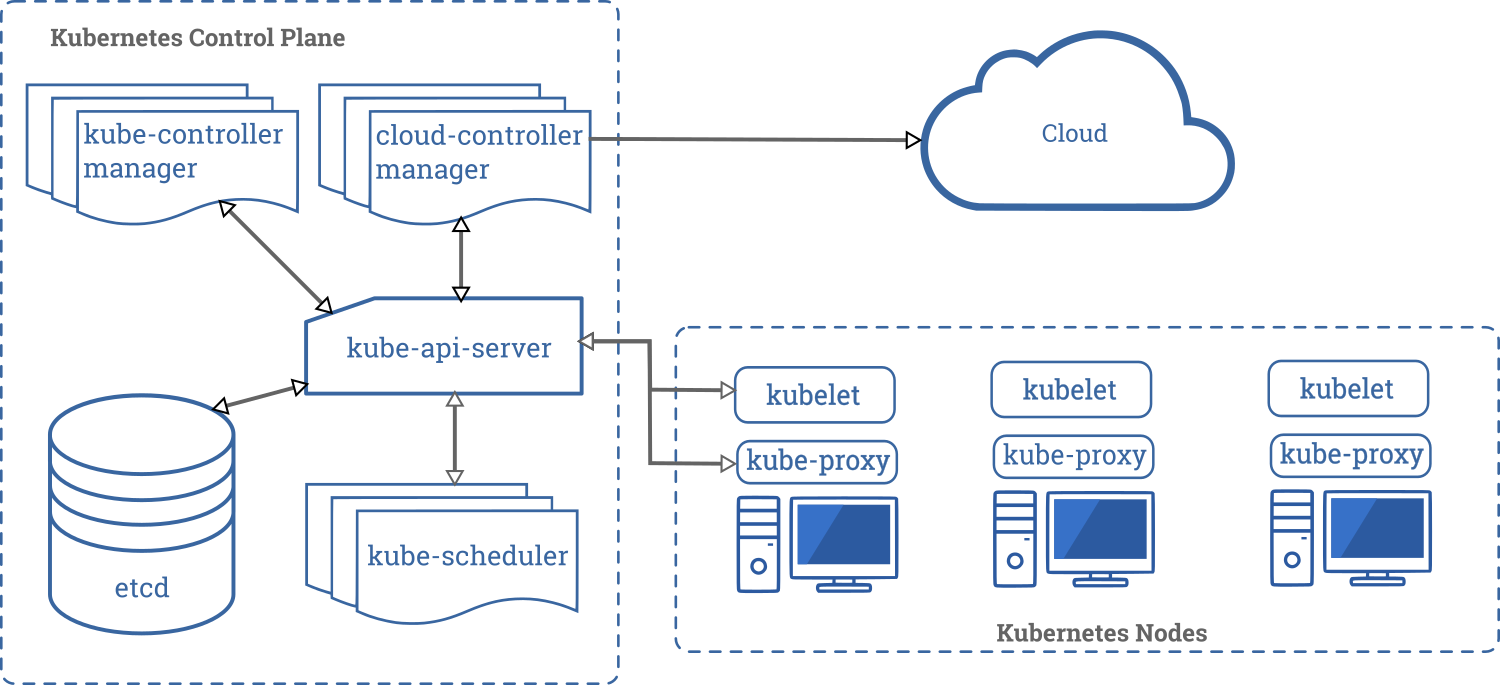

The Kubernetes control plane is the orchestrator for the entire cluster. It makes cluster-wide decisions, such as where workloads run, and it detects and responds to changes in the cluster. Think of it as air traffic control for your applications, directing everything that happens within the cluster. Its core components are etcd, the kube-apiserver, the kube-scheduler, and the kube-controller-manager. Each plays a distinct role in maintaining the cluster’s health and functionality.

etcd, a consistent and highly available key-value store, serves as the persistent memory of the cluster. It stores the complete cluster state, including the desired state of applications and the observed state of resources. This gives the other components a shared picture of what should be happening and what is actually happening. The kube-apiserver is the front end of the control plane. It exposes the Kubernetes API, validates requests, and is the only component that reads from and writes to etcd directly. Every request to manage or monitor the cluster passes through it, whether it comes from `kubectl`, from other control plane components, or from the kubelets on the worker nodes.

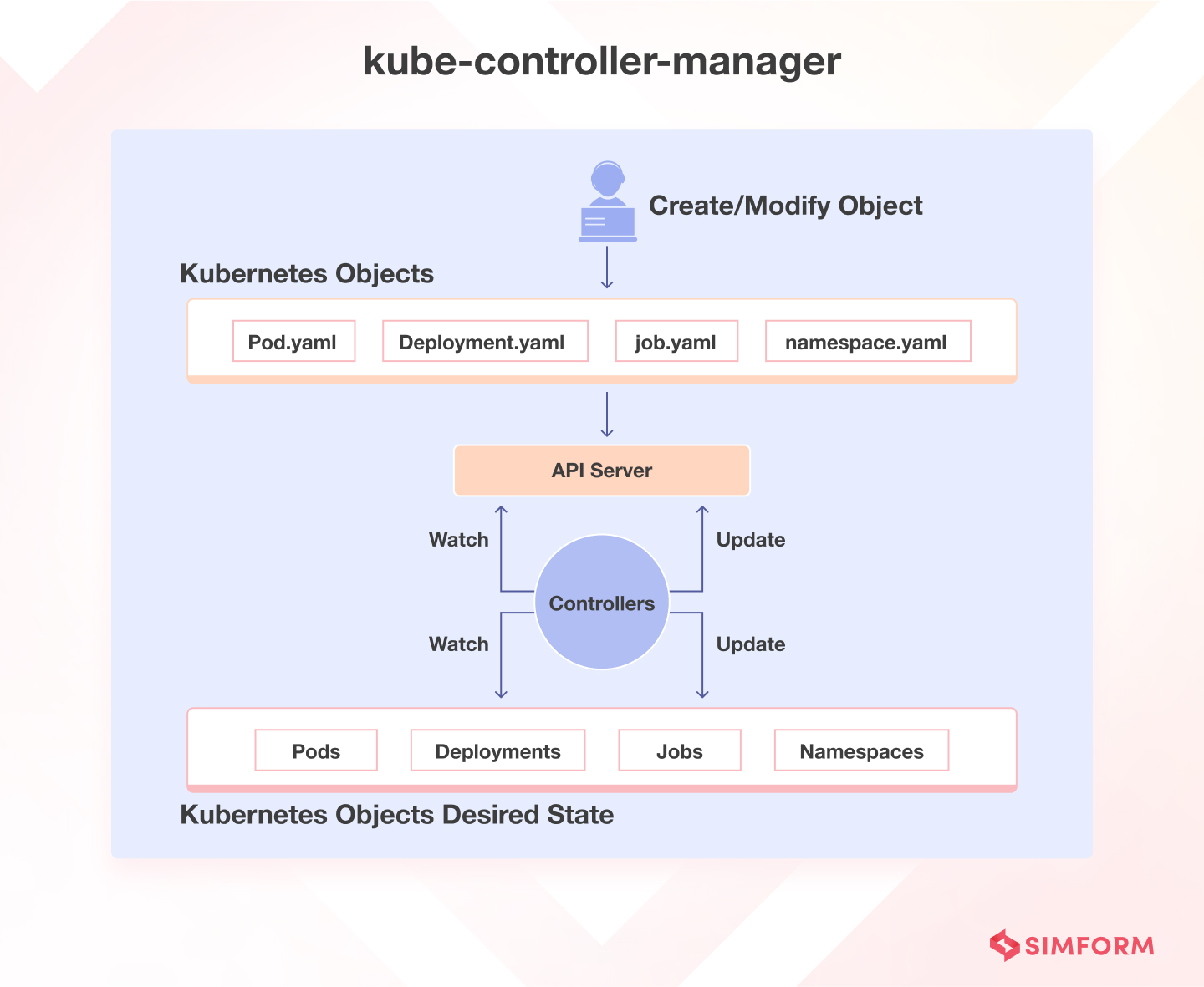

The kube-scheduler assigns newly created pods to worker nodes. For each pod it first filters out nodes that cannot run it (for example, because they lack free CPU or memory, or because they violate the pod’s constraints) and then scores the remaining nodes to pick the best fit. The goal is to use cluster resources well while respecting each pod’s requirements. Finally, the kube-controller-manager runs the controllers that keep the cluster in its desired state. Each controller watches the cluster through the API server, compares the actual state with the desired state, and acts to close any gap. None of these components works in isolation: the scheduler and controllers read and write state through the API server, which persists it in etcd. This interdependency is what keeps a Kubernetes cluster stable and efficient.
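The filter-then-score idea behind scheduling can be sketched in a few lines of Python. This is a toy model, not the kube-scheduler’s actual algorithm: the `Node` and `PodRequest` classes, the node names, and the scoring rule (prefer the node with the most headroom left) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_m: int
    mem_mi: int


def schedule(pod, nodes):
    """Return the name of the chosen node, or None if no node fits."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending

    # Score: crude rule that prefers the node with the most headroom
    # left after placement (hypothetical; it mixes units for brevity).
    def score(n):
        return (n.free_cpu_m - pod.cpu_m) + (n.free_mem_mi - pod.mem_mi)

    return max(feasible, key=score).name


nodes = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("node-c", 250, 8192),
]
print(schedule(PodRequest("web", 300, 512), nodes))
print(schedule(PodRequest("huge", 4000, 512), nodes))
```

In this sketch `node-c` is filtered out for the `web` pod because it lacks CPU, and the `huge` pod fits nowhere, which mirrors how an unschedulable pod waits until capacity appears.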

Understanding the Kubernetes Worker Nodes: Where the Magic Happens

Kubernetes worker nodes form the operational backbone of a Kubernetes cluster. These nodes run the containers that make up your applications. Each worker node runs three key components. The kubelet is the primary node agent: it receives pod specifications from the control plane and ensures that the containers they describe are running and healthy. The kube-proxy maintains network rules on the node so that traffic addressed to a Service reaches the right pods. A container runtime, such as containerd or CRI-O, executes the containers themselves. (Docker Engine was common in older clusters, but the built-in dockershim integration was removed in Kubernetes 1.24.) Understanding these roles is essential for managing your Kubernetes deployments effectively.

The relationship between the control plane and worker nodes is fundamentally about distributed control and execution. The control plane components, as detailed previously, orchestrate the tasks, assigning them to worker nodes based on resource availability and other criteria. The worker nodes, in turn, act as the executors, ensuring that the containers run efficiently and effectively. A critical aspect of this relationship is the constant communication between control plane and nodes. The control plane continuously monitors the status of each worker node, tracking resource usage, container health, and overall system performance. This monitoring enables the system to proactively adjust deployments, restart failing containers, and scale resources as needed. Effective utilization of these Kubernetes components is key to maintaining a robust and scalable infrastructure.

Visualizing this interaction is helpful. Imagine the control plane as a central command center, issuing instructions and monitoring progress. The worker nodes are the field operatives, receiving those instructions and carrying them out. Each worker node diligently manages its assigned tasks, reporting back to the central command center on its status. This constant feedback loop enables the system to react dynamically to changes in demand, ensuring optimal performance and high availability. The efficient interaction of these core kubernetes components is crucial for managing application deployments and achieving optimal cluster performance. Detailed diagrams can illuminate these relationships further, providing a deeper understanding of the underlying architecture.

Pods: The Fundamental Building Blocks of Kubernetes

Pods are the smallest deployable units in Kubernetes. They are ephemeral, meaning the system can create and destroy them as needed. A pod encapsulates one or more containers, which are the actual runtime environments for applications. Containers in the same pod share a network namespace, so they can reach each other over `localhost`, and they can share storage through volumes defined on the pod. Each pod is assigned its own IP address within the cluster, enabling pod-to-pod communication. Think of a pod as a single unit that groups closely related containers that must run together.

The relationship between pods and containers is essential to grasp. Containers provide the application runtime environment, while pods provide the operational context. A single pod might house a main application container and supporting containers, such as a log shipper or a sidecar proxy. Components that scale or fail independently, such as a database, normally belong in their own pods. Storage volumes defined on a pod can be mounted by any of its containers, which makes it simple for them to exchange files. Kubernetes also handles pod creation, deletion, and restarts, which supports application availability and fault tolerance.
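As a minimal sketch of a multi-container pod, the snippet below builds a Pod manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The pod name, container names, image tags, and mount paths are hypothetical; the point is that both containers mount the same `emptyDir` volume.

```python
import json

# Hypothetical pod: an nginx container plus a sidecar that tails its log.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        # An emptyDir volume lives as long as the pod and is shared
        # by every container that mounts it.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.27",
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/var/log/nginx"}
                ],
            },
            {
                "name": "log-tailer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/logs"}
                ],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```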

Kubernetes automates most of the pod lifecycle. If a container in a pod crashes, the kubelet restarts it according to the pod’s restart policy. If the pod itself is lost, for example because its node fails, a controller such as a Deployment creates a replacement pod elsewhere. This automation simplifies deployment and improves resilience. Understanding pod specifications is equally important. A specification declares the resources each container needs, including CPU and memory, as well as any storage volumes. Accurate specifications let the scheduler place pods sensibly and help prevent resource contention within the cluster. Configuring and managing pods properly is the foundation of a robust and scalable application architecture.

Deployments: Managing and Scaling Your Applications in Kubernetes

Deployments are the standard way to run and scale stateless applications in Kubernetes. They automate the process of creating and updating application instances, supporting high availability and graceful updates. A Deployment manages ReplicaSets, and each ReplicaSet keeps the desired number of pods running. (ReplicaSets replaced the older ReplicationController for this purpose.) If a pod fails, the ReplicaSet creates a replacement, so the specified number of replicas is maintained. Deployments are declarative: you specify the desired state, and Kubernetes does the work of reaching and maintaining it.
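The idea of maintaining a desired number of replicas can be illustrated with a toy control loop. This is only a sketch of the reconcile pattern, not the real controller code; the `reconcile` function and the `web-N` pod names are made up for the example.

```python
import itertools

_ids = itertools.count(1)  # source of unique, hypothetical pod names


def reconcile(desired_replicas, running_pods):
    """Compare actual state with desired state and close the gap."""
    pods = list(running_pods)
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_ids)}")  # create a missing pod
    while len(pods) > desired_replicas:
        pods.pop()                        # remove a surplus pod
    return pods


pods = reconcile(3, [])      # initial rollout: three pods are created
pods.remove(pods[1])         # simulate one pod failing
pods = reconcile(3, pods)    # the loop notices and adds a replacement
print(pods)
```

Real controllers run this comparison continuously in response to watch events from the API server rather than on demand.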

Deployments support rolling updates, which replace pods gradually when you change the application version. This minimizes downtime and allows an easy rollback to a previous revision if issues arise. During a rolling update, new pods are created with the updated image and old pods are terminated step by step, so the application remains available throughout. Two settings control the pace: `maxSurge` limits how many extra pods may exist above the desired count, and `maxUnavailable` limits how many pods may be unavailable at once. This capability is essential for updating production systems without significant disruption. Resource requests and limits are set in the pod template of a Deployment, which is vital for managing resource consumption and preventing resource starvation.
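To make the gradual replacement concrete, here is a simplified simulation of a rolling update. It assumes new pods become ready instantly and uses the two limits Kubernetes exposes for this purpose, `maxSurge` and `maxUnavailable`, as plain integers; the real Deployment controller is more involved, so treat the function as an illustration only.

```python
def rolling_update(desired, max_surge, max_unavailable):
    """Return the (old_pods, new_pods) counts after each step."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = desired, 0
    steps = [(old, new)]
    while old > 0 or new < desired:
        # Scale up: total pods may not exceed desired + max_surge.
        room = desired + max_surge - (old + new)
        new += min(room, desired - new)
        # Scale down: total pods may not drop below
        # desired - max_unavailable.
        removable = (old + new) - (desired - max_unavailable)
        old -= min(old, max(removable, 0))
        steps.append((old, new))
    return steps


for old, new in rolling_update(desired=4, max_surge=1, max_unavailable=1):
    print(f"old={old} new={new} total={old + new}")
```

With four replicas and both limits set to 1, the total pod count in this model stays between 3 and 5 at every recorded step.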

Setting resource requests and limits in your Deployments is essential for effective resource allocation across the cluster. Both are set per container. A request is the amount of CPU or memory the scheduler reserves for the container when it places the pod. A limit is the maximum the container may use: a container that exceeds its memory limit is terminated, while one that exceeds its CPU limit is throttled. By setting these values carefully, you can prevent resource contention and ensure that your applications get the resources they need. Proper configuration also prevents resource exhaustion, a common cause of application instability. Combined with the replica count, it makes scaling an application to meet fluctuating demand far more predictable.
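A Deployment that sets requests and limits might look like the following sketch, written as a Python dictionary and printed as JSON for `kubectl apply -f`. The name `web`, the image tag, and the specific CPU and memory figures are placeholder assumptions, not recommendations.

```python
import json

labels = {"app": "web"}  # hypothetical label shared by selector and pods

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        # The selector must match the labels in the pod template.
        "selector": {"matchLabels": labels},
        "template": {
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "nginx:1.27",
                        "resources": {
                            # Reserved by the scheduler at placement time.
                            "requests": {"cpu": "250m", "memory": "128Mi"},
                            # Upper bound enforced at runtime.
                            "limits": {"cpu": "500m", "memory": "256Mi"},
                        },
                    }
                ]
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```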

Services: Exposing Your Applications in Kubernetes

Services provide a stable IP address and DNS name for accessing your applications. A pod keeps its IP address for as long as it lives, but pods are ephemeral: when one is replaced, the new pod gets a different address. A Service acts as an abstraction layer, selecting pods by label and providing a consistent endpoint no matter how the set of pods behind it changes. This is essential for maintaining application availability and for allowing external access. Several Service types cater to different needs.
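The way a Service keeps a stable front while the pods behind it change can be modelled with a small label-matching function. This is a conceptual sketch: the pod records, IP addresses, and the `endpoints` helper are invented for the example and do not call any Kubernetes API.

```python
def matches(selector, labels):
    """True if every key/value in the selector appears in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def endpoints(selector, pods):
    """IPs of ready pods whose labels match the Service selector."""
    return sorted(
        p["ip"] for p in pods
        if p["ready"] and matches(selector, p["labels"])
    )


selector = {"app": "web"}
pods = [
    {"name": "web-1", "ip": "10.0.1.5", "ready": True, "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.2.7", "ready": True, "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.3.9", "ready": True, "labels": {"app": "db"}},
]
before = endpoints(selector, pods)

# web-2 is replaced by a new pod with a new IP; the selector is unchanged.
pods[1] = {"name": "web-3", "ip": "10.0.2.42", "ready": True, "labels": {"app": "web"}}
after = endpoints(selector, pods)

print(before)
print(after)
```

Clients keep using the same Service name throughout; only the list of backing addresses changes.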

The default and most common Service type is ClusterIP, which makes the Service reachable only from within the cluster. NodePort exposes the Service on a static port on every node’s IP, making it reachable from outside the cluster. LoadBalancer, typically used in cloud environments, provisions an external load balancer that distributes traffic across your application’s pods. Ingress is not a Service type but a separate resource that sits in front of Services: an Ingress controller routes external HTTP and HTTPS traffic to them and adds features such as TLS termination and host-based or path-based routing. Choosing the right option depends on your requirements and your infrastructure. For example, if you need simple external access, NodePort or LoadBalancer is suitable. For more complex routing scenarios, an Ingress is the better option.

Consider a scenario where you deploy a web application. Initially, you might use a ClusterIP Service for internal testing. Once the application is ready for public access, you could change the Service type to LoadBalancer in a cloud environment. The cloud provider then creates a load balancer that distributes traffic across your application’s pods. Because the Service selects pods by label, it keeps working as a Deployment scales the number of pods up or down. This interplay between Services and Deployments is what makes a Kubernetes application both stable to reach and easy to scale.
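The switch from internal to public access described above amounts to changing one field. The helper below is a hypothetical convenience function, not part of any Kubernetes client library, and the port numbers are assumptions; it generates a Service manifest as JSON for a given type.

```python
import json

# Types that select pods by label; ExternalName is left out on purpose
# because it maps to a DNS name instead of selecting pods.
SELECTOR_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def service_manifest(name, app_label, service_type="ClusterIP"):
    """Build a Service manifest that routes port 80 to pod port 8080."""
    if service_type not in SELECTOR_TYPES:
        raise ValueError(f"unsupported Service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": app_label},
            "ports": [{"port": 80, "targetPort": 8080, "protocol": "TCP"}],
        },
    }


internal = service_manifest("web", "web")                  # for testing
public = service_manifest("web", "web", "LoadBalancer")    # for launch
print(json.dumps(public, indent=2))
```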

Namespaces: Organizing Your Kubernetes Clusters

Namespaces offer a mechanism for logically partitioning a Kubernetes cluster. They scope the names of resources such as pods, Services, and Deployments within a shared environment. This is crucial for multi-tenant clusters, where multiple teams or projects use the same Kubernetes infrastructure. Namespaces prevent naming conflicts and let teams operate independently without interfering with each other. Note that a namespace is an organizational boundary, not a hard isolation boundary by itself: pods in different namespaces can still reach each other over the network unless network policies restrict it.

Consider a scenario with multiple development teams. Each team can be assigned its own namespace, giving it a separate set of resources and reducing the risk of accidentally modifying another team’s applications. Namespaces also support security. Access control (RBAC) rules can be scoped to a namespace, limiting users and applications to the resources within their assigned namespaces. Resource quotas can be set per namespace as well, so that one team cannot exhaust capacity that others depend on. Using namespaces well is essential for managing complex Kubernetes deployments as they grow.

Creating and managing namespaces is straightforward using the `kubectl` command-line tool. Administrators can create new namespaces, assign resource quotas, and manage access control policies. Monitoring resource usage within each namespace allows for proactive capacity planning. By using namespaces, organizations can improve the security and maintainability of their Kubernetes clusters and keep a shared environment organized. Understanding namespaces is a vital skill for anyone working with Kubernetes.
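As a sketch of the per-team setup, the snippet below generates a Namespace and a ResourceQuota for it and prints them as a single JSON list that could be passed to `kubectl apply -f`. The team name and quota figures are hypothetical; a namespace alone can also be created with `kubectl create namespace <name>`.

```python
import json


def team_namespace(team, cpu, memory, max_pods):
    """Return a v1 List holding a Namespace and its ResourceQuota."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": team},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            # Caps on the sum of requests across all pods in the namespace.
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "pods": str(max_pods),
            }
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota]}


# Hypothetical team and limits.
manifests = team_namespace("team-a", cpu="4", memory="8Gi", max_pods=20)
print(json.dumps(manifests, indent=2))
```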

Troubleshooting Common Kubernetes Issues: A Practical Guide

Kubernetes has many moving parts, and things can go wrong. Understanding common issues and how to troubleshoot them is crucial for smooth operation. One frequent problem is pod failure. Pods might fail because of insufficient resources, bugs in the application code, or image pull errors. Debugging starts with checking the pod’s status and recent events using `kubectl describe pod`.

Network connectivity problems are another common hurdle in Kubernetes. Pods might fail to communicate with each other or with external services because of misconfigured network policies or problems in the underlying infrastructure. Use `kubectl get pods -o wide` to check each pod’s IP address and node assignment. Then examine the networking components, including kube-proxy and the Service configuration. Tools like `tcpdump` or `ping` can help diagnose connectivity between pods.

Resource exhaustion, especially of memory and CPU, frequently affects application performance and stability. The `kubectl top nodes` and `kubectl top pods` commands show current resource usage for nodes and pods; they rely on the Metrics Server being installed in the cluster. This helps identify resource-intensive pods or nodes under heavy load. Vertical scaling (giving individual pods more resources) or horizontal scaling (adding more pods) can resolve these issues.

Successful troubleshooting hinges on a handful of `kubectl` commands. `kubectl describe` provides detailed information about a resource, including recent events. `kubectl logs` displays logs from a pod’s containers. `kubectl get events` lists cluster events, which helps reconstruct the sequence that led to a problem. These commands, together with careful examination of resource usage and application logs, let administrators diagnose and resolve most common problems. Mastering them is vital for maintaining the health and stability of any Kubernetes cluster.
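Some of this inspection can be scripted. The sketch below reads pod JSON in the shape produced by `kubectl get pod <name> -o json` and pulls out the container states that most often explain a failure. The `diagnose` function and the sample data are illustrative assumptions; the field names (`containerStatuses`, `state.waiting.reason`, `lastState.terminated.reason`) follow the Pod status schema.

```python
import json


def diagnose(pod):
    """Return (phase, findings) from a pod object parsed from JSON."""
    status = pod.get("status", {})
    phase = status.get("phase", "Unknown")
    findings = []
    for cs in status.get("containerStatuses", []):
        # A waiting container usually carries the most useful reason,
        # e.g. ImagePullBackOff or CrashLoopBackOff.
        waiting = cs.get("state", {}).get("waiting")
        if waiting:
            findings.append((cs["name"], waiting.get("reason", "Waiting")))
        # The previous termination explains why it restarted.
        terminated = cs.get("lastState", {}).get("terminated")
        if terminated:
            reason = terminated.get("reason", "Unknown")
            findings.append((cs["name"], f"last exit: {reason}"))
    return phase, findings


# Hand-written sample in place of real kubectl output.
sample = json.loads("""
{
  "status": {
    "phase": "Running",
    "containerStatuses": [
      {
        "name": "app",
        "restartCount": 5,
        "state": {"waiting": {"reason": "CrashLoopBackOff"}},
        "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}}
      }
    ]
  }
}
""")

print(diagnose(sample))
```

In this sample the combination of `CrashLoopBackOff` and a previous `OOMKilled` exit would point toward raising the container’s memory limit or reducing its memory use.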

Kubernetes Persistent Volumes (PVs) and Persistent Volume Claims (PVCs): Managing Persistent Storage

Stateful applications require persistent storage to retain data beyond the lifespan of a pod. Kubernetes models this with PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs). A PV represents a piece of storage in the cluster, independent of any particular pod. Think of PVs as the storage units available to the cluster, like a pool of hard drives. A PVC, on the other hand, is a request for storage: it specifies the required capacity and access modes, and pods use the storage by referencing the claim. The control plane then binds the claim to a suitable PV. This binding is done by a volume controller, not by the kube-scheduler. If no existing PV matches and the claim names a storage class, a new volume can be provisioned dynamically. Because the claim outlives any single pod, a stateful application keeps access to its data even when its pods are rescheduled or deleted.
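The matching of a claim to a volume can be pictured with a short sketch. It is a simplified model under stated assumptions: capacities are plain GiB integers, the dictionaries and names are invented, and the rule of preferring the smallest volume that fits is an assumption of this sketch rather than a statement about the real binder.

```python
def find_volume(claim, volumes):
    """Return the name of a PV that can satisfy the claim, or None."""
    candidates = [
        pv for pv in volumes
        if pv["phase"] == "Available"
        and pv["storage_class"] == claim["storage_class"]
        and pv["capacity_gi"] >= claim["request_gi"]
        # Every access mode the claim asks for must be offered by the PV.
        and set(claim["access_modes"]) <= set(pv["access_modes"])
    ]
    if not candidates:
        return None  # the claim would stay Pending
    # Assumption: prefer the smallest volume that fits, to limit waste.
    return min(candidates, key=lambda pv: pv["capacity_gi"])["name"]


volumes = [
    {"name": "pv-small", "capacity_gi": 5, "storage_class": "standard",
     "access_modes": ["ReadWriteOnce"], "phase": "Available"},
    {"name": "pv-large", "capacity_gi": 100, "storage_class": "standard",
     "access_modes": ["ReadWriteOnce", "ReadOnlyMany"], "phase": "Available"},
    {"name": "pv-taken", "capacity_gi": 20, "storage_class": "standard",
     "access_modes": ["ReadWriteOnce"], "phase": "Bound"},
]

claim = {"request_gi": 10, "storage_class": "standard",
         "access_modes": ["ReadWriteOnce"]}
print(find_volume(claim, volumes))
```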

The interaction between PVs and PVCs is central to managing persistent data in a Kubernetes cluster. When an application needs persistent storage, its pod specification references a PVC by name. (A StatefulSet can instead generate one claim per replica from `volumeClaimTemplates`.) Kubernetes binds the claim to a matching PV, and once bound, the volume is mounted into the container at a path you choose. This decoupling of storage from pods improves resilience. If a pod fails and is rescheduled on a different node, its data remains intact because the volume exists independently of the pod. Node-local storage is the exception, since it ties the pod to the node that holds the data. Choosing the right type of PV (e.g., local, NFS, cloud storage) depends on the specific storage needs and the cluster’s infrastructure. The access mode (ReadWriteOnce, ReadOnlyMany, ReadWriteMany) also matters, because it determines how many nodes can mount the volume and whether they can write to it.
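A minimal sketch of a claim and a pod that mounts it is shown below, again as Python dictionaries printed as JSON. The claim name `data-claim`, the storage class `standard`, the image, and the mount path are assumptions for illustration.

```python
import json

claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data-claim"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "standard",  # hypothetical class name
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "db"},
    "spec": {
        # The pod refers to the claim by name, never to the PV directly.
        "volumes": [
            {"name": "data",
             "persistentVolumeClaim": {"claimName": "data-claim"}}
        ],
        "containers": [
            {
                "name": "db",
                "image": "postgres:16",
                "volumeMounts": [
                    {"name": "data", "mountPath": "/var/lib/postgresql/data"}
                ],
            }
        ],
    },
}

print(json.dumps({"apiVersion": "v1", "kind": "List",
                  "items": [claim, pod]}, indent=2))
```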

Provisioning persistent storage means either creating PVs that represent existing storage resources or provisioning new ones dynamically through storage classes. A storage class defines how volumes are provisioned: which provisioner to use, provisioner-specific parameters such as disk type, and the reclaim policy that applies when a claim is deleted. The size and access modes come from the PVC itself. Kubernetes handles the binding of PVCs to PVs, which simplifies storage management for application developers. They only need to state their storage requirements in a PVC, and the cluster takes care of the rest. Understanding the lifecycle of PVs and PVCs, including creation, binding, and deletion, is essential for deploying and operating stateful applications.
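For dynamic provisioning, an administrator defines a storage class once and claims refer to it by name. The sketch below shows the general shape of such an object. The class name is made up, and the provisioner and `type` parameter are one example (the AWS EBS CSI driver); substitute whatever your cluster actually runs.

```python
import json

storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "fast-ssd"},      # hypothetical class name
    # Example provisioner; this depends entirely on the cluster.
    "provisioner": "ebs.csi.aws.com",
    "parameters": {"type": "gp3"},         # provisioner-specific
    # What happens to the volume when its claim is deleted.
    "reclaimPolicy": "Delete",
    # Delay provisioning until a pod using the claim is scheduled.
    "volumeBindingMode": "WaitForFirstConsumer",
}

print(json.dumps(storage_class, indent=2))
```

A claim would then request this class by setting `storageClassName` to `fast-ssd`.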