What is a Kubernetes Cluster and Why Set One Up?

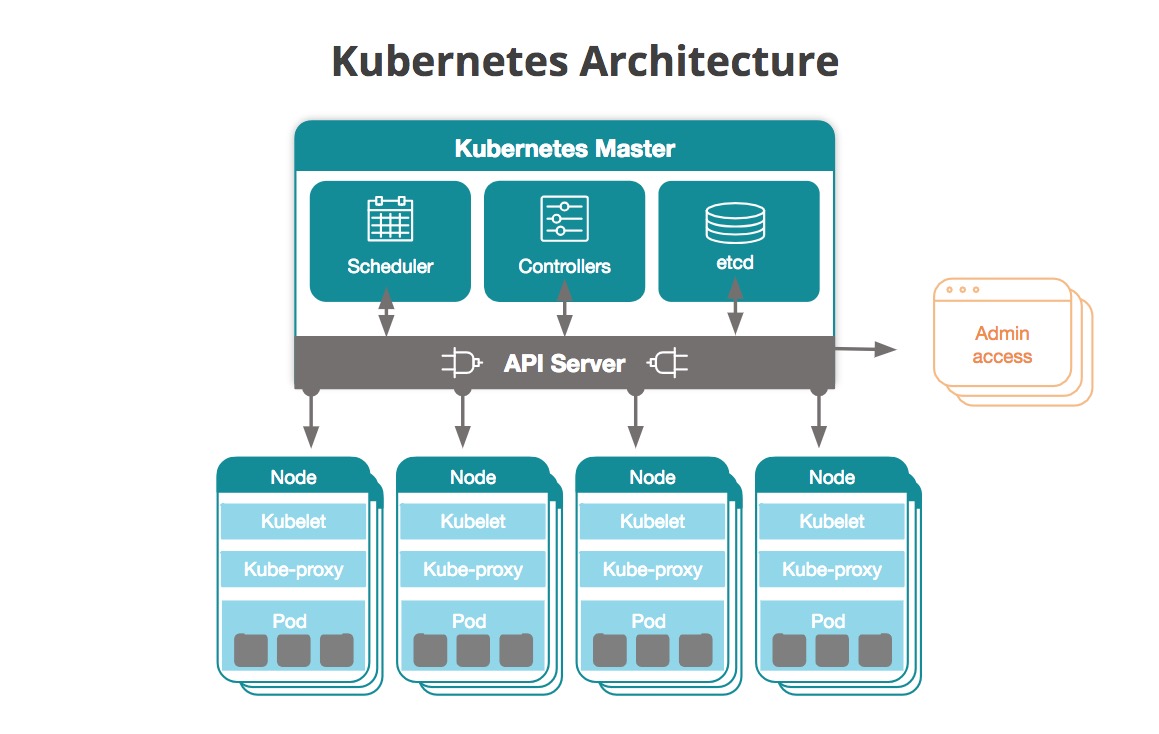

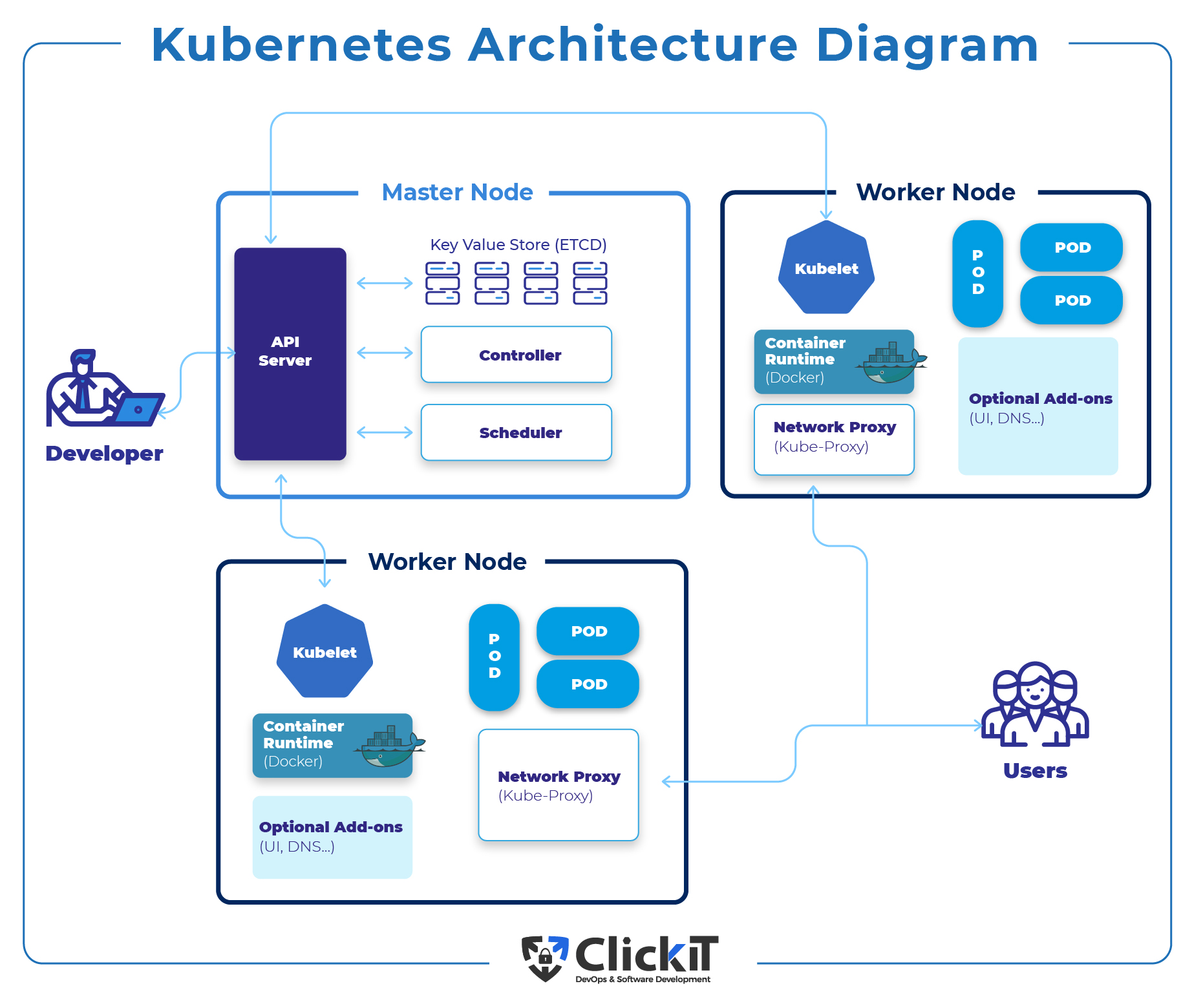

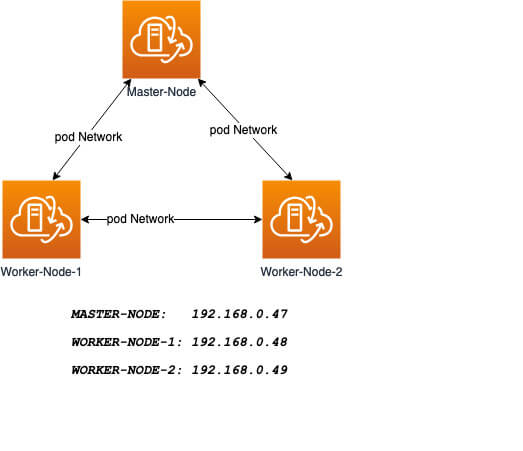

Kubernetes is an open-source platform that automates the deployment, scaling, and management of containerized applications. A Kubernetes cluster is the set of machines (nodes) that Kubernetes manages as a single unit. Running your workloads on a cluster lets you take advantage of containerization: efficient resource use, portability, and fast deployments. A cluster consists of one or more control plane nodes (historically called master nodes), which run the control plane components, and worker nodes, which host the applications and their containers. The control plane maintains the desired state of the cluster, while the worker nodes run the workloads.

Setting up a Kubernetes cluster offers several advantages, including:

- Scalability: Kubernetes clusters can easily scale up or down to accommodate changes in application workload, ensuring optimal performance and resource utilization.

- Fault-tolerance: Kubernetes restarts failed containers and reschedules pods away from failed nodes, which helps keep your applications available through hardware failures and application crashes.

- Resource efficiency: Kubernetes clusters enable fine-grained resource allocation and sharing, ensuring that each application receives the resources it needs to operate efficiently.

- Consistency: Kubernetes clusters enforce consistent deployment and management practices across different environments, reducing the risk of configuration errors and inconsistencies.

In summary, a Kubernetes cluster is an essential tool for managing containerized applications, offering scalability, fault-tolerance, resource efficiency, and consistency. By setting up a Kubernetes cluster, you can unlock the full potential of containerization and streamline your application deployment and management processes.

Prerequisites: System Requirements and Tools

Before setting up a Kubernetes cluster, it is essential to ensure that your system meets the necessary requirements and that you have the appropriate tools installed. This section outlines the prerequisites for a successful Kubernetes cluster setup.

Hardware and Operating System Requirements

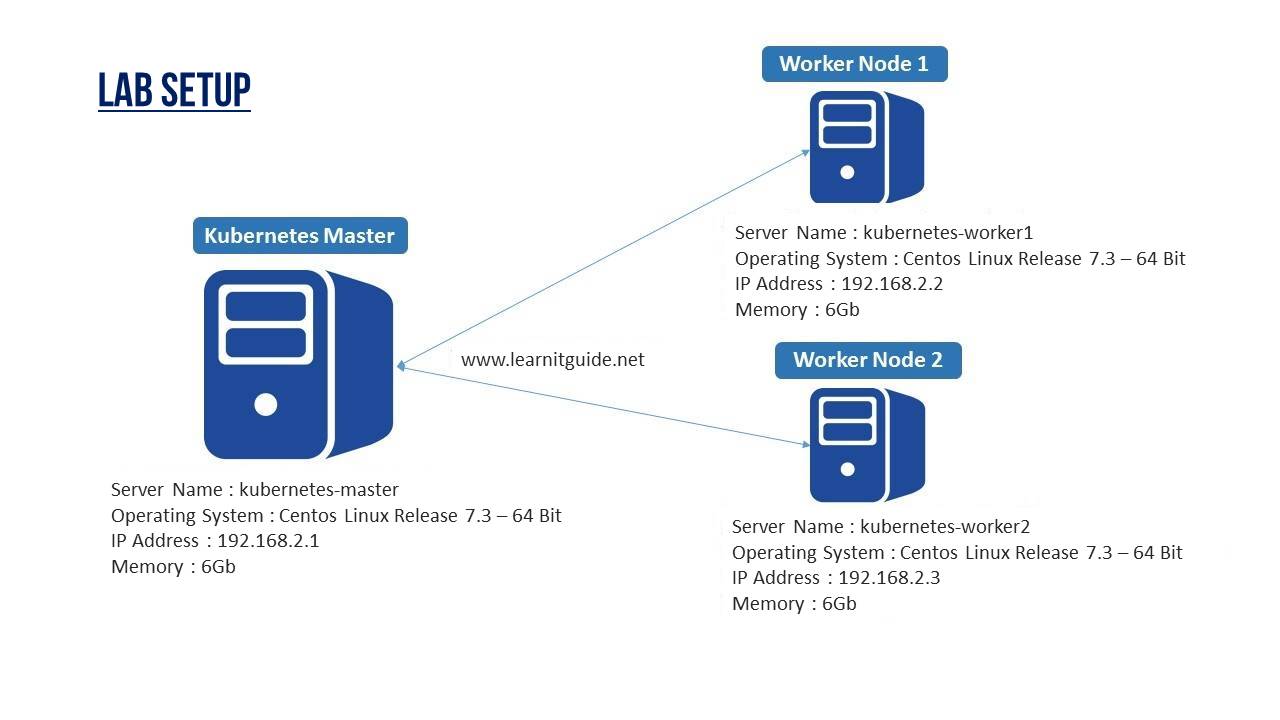

Kubernetes clusters can run on a variety of hardware and operating systems. For self-managed clusters, a currently supported Linux distribution is recommended for both the control plane and worker nodes; Ubuntu 18.04 and CentOS 7, which older guides often cite, are past their standard support windows. The minimum hardware requirements for a single-node Kubernetes cluster include:

- 2 CPUs

- 2 GB of RAM

- 10 GB of free disk space

For multi-node clusters, it is recommended to allocate more resources to each node to ensure optimal performance and resource utilization.
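As a rough illustration, the minimums listed above can be checked with a few lines of Python. This is a sketch rather than an official preflight check: the names `MINIMUMS`, `check_minimums`, and `probe_local` are hypothetical, and `probe_local` assumes a Linux host because it reads physical memory through `os.sysconf`.

```python
import os
import shutil

# Minimums taken from the list above (single-node cluster).
MINIMUMS = {"cpus": 2, "ram_gb": 2.0, "disk_gb": 10.0}


def check_minimums(cpus, ram_gb, disk_gb, minimums=MINIMUMS):
    """Return a list of human-readable shortfalls; empty means all checks pass."""
    found = {"cpus": cpus, "ram_gb": ram_gb, "disk_gb": disk_gb}
    return [
        f"{name}: have {found[name]}, need at least {required}"
        for name, required in minimums.items()
        if found[name] < required
    ]


def probe_local(path="/"):
    """Measure this machine (Linux only: uses os.sysconf for physical memory)."""
    ram_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    disk_free = shutil.disk_usage(path).free
    return os.cpu_count() or 0, ram_bytes / 2**30, disk_free / 2**30


print(check_minimums(1, 4.0, 50.0))
```

On a candidate node, `check_minimums(*probe_local())` returns an empty list when all three minimums are met. A machine with nominally 2 GB of RAM may report slightly less to the operating system, so treat a narrow memory shortfall as a prompt to look closer rather than a hard failure.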

Software Prerequisites

To set up a Kubernetes cluster, you will need to install the following software components:

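- Container runtime: Kubernetes runs containers through a runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O. Docker Engine can still be used, but since Kubernetes 1.24 removed the built-in dockershim it requires the separate cri-dockerd adapter. If you use Docker Engine, version 19.03 or later is recommended.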

- kubeadm: kubeadm is a Kubernetes tool used to bootstrap the cluster creation process.

- kubelet: kubelet is the agent that runs on every node in the cluster and starts and monitors the pods and containers scheduled to that node.

- kubectl: kubectl is the Kubernetes command-line tool used to interact with the cluster.

These components can be installed using the package manager of your chosen Linux distribution; kubeadm, kubelet, and kubectl first require adding the Kubernetes package repository.

Recommended Text Editors and Command-Line Tools

For editing Kubernetes configuration files, it is recommended to use a powerful text editor, such as Visual Studio Code or Vim. Additionally, it is helpful to have a command-line tool, such as curl or wget, installed to facilitate the download and installation of various components.

In summary, setting up a Kubernetes cluster requires a supported Linux distribution, sufficient hardware resources, and the installation of a container runtime, kubeadm, kubelet, and kubectl. With these prerequisites in place, you can proceed to create the cluster.

Choosing the Right Kubernetes Cluster Architecture

Selecting the appropriate Kubernetes cluster architecture is crucial for ensuring optimal performance, resource utilization, and cost management. This section outlines the different Kubernetes cluster architectures and provides guidelines for selecting the most suitable architecture based on specific use cases and resource availability.

Single-Node Kubernetes Cluster

A single-node Kubernetes cluster consists of a single machine running both the control plane and worker node components. This architecture is ideal for development, testing, and learning purposes, as it is quick to set up and requires minimal resources. However, single-node clusters are not recommended for production workloads, as they lack the fault-tolerance and scalability required for mission-critical applications.

Multi-Node Kubernetes Cluster

A multi-node Kubernetes cluster runs the control plane and the workloads on separate machines: control plane nodes run the control plane components, and worker nodes host the applications and their containers. This architecture provides fault-tolerance and scalability, making it suitable for production workloads. For a highly available control plane, run more than one control plane node. Advantages of multi-node clusters include:

- Fault-tolerance: If a worker node fails, the control plane can automatically reschedule the affected applications to other healthy nodes, ensuring high availability.

- Scalability: Multi-node clusters can easily scale up or down to accommodate changes in application workload, ensuring optimal performance and resource utilization.

- Resource isolation: By separating the control plane and worker nodes, you can allocate resources more efficiently, ensuring that each component receives the resources it needs to operate effectively.

However, multi-node clusters require more resources and are more complex to set up and manage compared to single-node clusters.

Managed Kubernetes Services

Managed Kubernetes services, such as Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS), provide a hosted Kubernetes environment in which the provider operates the control plane, removing much of the cluster administration and maintenance burden. You remain responsible for your workloads and their configuration. These services offer built-in fault-tolerance, scalability, and security features, making them an attractive option for organizations looking to deploy Kubernetes clusters quickly and efficiently. Advantages of managed Kubernetes services include:

- Reduced administrative overhead: Managed Kubernetes services handle control plane operation and much of the routine maintenance, freeing up resources for other tasks.

- Enhanced security: Managed Kubernetes services provide built-in security features, such as network policies and role-based access control, to protect your cluster and applications.

- Easy scalability: Managed Kubernetes services enable you to scale your cluster up or down with just a few clicks, ensuring optimal performance and resource utilization.

However, managed Kubernetes services can be more expensive than self-managed clusters and may limit your control over certain aspects of the cluster configuration.

In summary, selecting the appropriate Kubernetes cluster architecture depends on your specific use case, resource availability, and cost constraints. By understanding the advantages and disadvantages of single-node, multi-node, and managed Kubernetes services, you can make an informed decision and set up a cluster that meets your needs.

Step-by-Step Guide: Setting Up a Kubernetes Cluster

This section provides a detailed, step-by-step guide on setting up a Kubernetes cluster. We will cover essential tasks, such as installing the Kubernetes control plane, configuring the worker nodes, and deploying a sample application.

Step 1: Install the Kubernetes Control Plane

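Begin by installing the Kubernetes control plane on the master (control plane) node, following the official kubeadm installation guide. This process involves setting up a container runtime, installing kubeadm, kubelet, and kubectl, and initializing the node with kubeadm init. After initialization, install a pod network add-on (a CNI plugin); nodes remain in the NotReady state until one is running.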

Step 2: Configure the Worker Nodes

Next, join each worker node to the cluster by running on it the kubeadm join command that kubeadm init prints on the master node. Before joining, each worker node needs a container runtime, kubeadm, and kubelet installed; kubectl is optional on worker nodes.

Step 3: Verify the Cluster Setup

Use the kubectl command-line tool to verify that the cluster is running correctly. Execute the following command to view the nodes in the cluster:

    kubectl get nodes

Ensure that all nodes, including the master and worker nodes, are in the Ready state.
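For clusters with many nodes, the same check can be scripted against the JSON form of the command's output. The sketch below assumes the text comes from `kubectl get nodes -o json`; the function name `not_ready_nodes` and the sample data are hypothetical and heavily trimmed.

```python
import json


def not_ready_nodes(nodes_json):
    """Return names of nodes whose Ready condition is not "True".

    `nodes_json` is the text printed by `kubectl get nodes -o json`.
    """
    names = []
    for node in json.loads(nodes_json)["items"]:
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            names.append(node["metadata"]["name"])
    return names


# Hypothetical sample standing in for real command output.
sample = json.dumps({
    "items": [
        {"metadata": {"name": "cp-1"},
         "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
        {"metadata": {"name": "worker-1"},
         "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
    ]
})
print(not_ready_nodes(sample))  # ['worker-1']
```

An empty list means every node reports Ready. A node with no Ready condition at all is also reported, since that usually means the kubelet has not yet posted its status.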

Step 4: Deploy a Sample Application

Deploy a sample application, such as the official guestbook application, to the cluster to verify that it is functioning correctly. This process involves creating a deployment, exposing the deployment as a service, and verifying that the application is accessible.
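The two objects involved, a Deployment and a Service that exposes it, can be sketched as plain Python dictionaries and printed as JSON, which kubectl accepts alongside YAML. This is an illustration, not the guestbook application itself: the name `hello-web`, the `nginx:stable` image, and the helper functions are assumptions chosen for brevity.

```python
import json


def deployment(name, image, replicas=2, port=80):
    """Build an apps/v1 Deployment whose pods carry the label app=<name>."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }]},
            },
        },
    }


def service(name, port=80, service_type="ClusterIP"):
    """Build a Service that routes to the pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [deployment("hello-web", "nginx:stable"), service("hello-web")],
}
print(json.dumps(manifest, indent=2))
```

If the script is saved as, say, `make_app.py`, its output can be piped into the cluster with `python make_app.py | kubectl apply -f -`. The key detail is that the Service selector matches the pod labels set by the Deployment; if they differ, the Service has no endpoints and the application is unreachable.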

Step 5: Secure the Cluster

After setting up the cluster, it is essential to secure it by implementing network policies, role-based access control, and secrets management. Refer to the Securing Your Kubernetes Cluster section for best practices and guidelines.

Step 6: Monitor and Troubleshoot the Cluster

Regularly monitor and troubleshoot the cluster to ensure optimal performance and resource utilization. Refer to the Monitoring and Troubleshooting Your Kubernetes Cluster section for guidelines on identifying and resolving common issues.

In summary, setting up a Kubernetes cluster involves installing the control plane, configuring the worker nodes, verifying the cluster setup, deploying a sample application, securing the cluster, and monitoring and troubleshooting the cluster. By following these steps, you can create a robust and reliable Kubernetes cluster that meets your needs.

Securing Your Kubernetes Cluster

Securing your Kubernetes cluster is crucial to protect your applications and data from unauthorized access, tampering, and theft. In this section, we will discuss best practices for securing your Kubernetes cluster, including network policies, role-based access control, secrets management, and regular updates and patches.

Implement Network Policies

Network policies are a built-in Kubernetes feature that lets you control the flow of traffic between pods in your cluster. By default, all pods can talk to each other; network policies restrict traffic to only the necessary connections, reducing the attack surface of your cluster. Policies are enforced by the pod network add-on, so they only take effect if your CNI plugin supports them.
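A common starting point is a default-deny policy plus narrow allow rules. The sketch below builds both as dictionaries and prints them as JSON for `kubectl apply -f -`. The namespace `shop`, the `app` labels, the port, and the helper names are hypothetical.

```python
import json


def default_deny_ingress(namespace):
    """Select every pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods; no ingress rules means deny.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace, to_app, from_app, port):
    """Allow pods labelled app=<from_app> to reach app=<to_app> on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{to_app}",
                     "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": to_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


policies = [default_deny_ingress("shop"),
            allow_ingress("shop", "api", "web", 8080)]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies},
                 indent=2))
```

Network policies are additive: once the default-deny policy selects a pod, only traffic matched by some allow rule reaches it.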

Configure Role-Based Access Control

Role-based access control (RBAC) is a security feature that enables you to control who can access your cluster and what actions they can perform. By configuring RBAC, you can ensure that only authorized users and applications can interact with your cluster, reducing the risk of unauthorized access.
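In practice, RBAC is expressed as a Role (what may be done) and a RoleBinding (who may do it). The following sketch builds a read-only Role for pods and binds it to one user. The namespace `dev`, the user `jane`, and the helper names are hypothetical.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def read_only_role(namespace, name="pod-reader"):
    """Role that permits reading pods in one namespace and nothing else."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group, where pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_user(role, user):
    """RoleBinding that grants `role` to a single user in the role's namespace."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-{user}",
                     "namespace": meta["namespace"]},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_API},
    }


role = read_only_role("dev")
binding = bind_user(role, "jane")
print(json.dumps({"apiVersion": "v1", "kind": "List",
                  "items": [role, binding]}, indent=2))
```

After applying the output, `kubectl auth can-i list pods --as jane --namespace dev` is a quick way to confirm the grant, and the same command with `delete` should be refused.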

Manage Secrets Securely

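Secrets, such as passwords, API keys, and certificates, are essential for accessing and managing your applications and data. It is crucial to manage secrets securely to prevent unauthorized access and tampering. Kubernetes provides the Secret object for this purpose, which pods can consume as mounted volumes or environment variables. Do not store sensitive values in ConfigMaps, which are intended for non-confidential configuration. Note that Secret values are only base64-encoded by default, so you should also enable encryption at rest for etcd and restrict access to Secrets with RBAC.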
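It is worth seeing why a Secret manifest must be protected like the secret itself: values under `data` are base64-encoded, which is an encoding and not encryption. The sketch below uses a hypothetical Secret name and password to show the round trip.

```python
import base64
import json


def secret_manifest(name, values):
    """Build an Opaque Secret; values under `data` must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


secret = secret_manifest("db-credentials", {"password": "s3cr3t"})
encoded = secret["data"]["password"]
decoded = base64.b64decode(encoded).decode("utf-8")

print(json.dumps(secret, indent=2))
print("stored value:   ", encoded)
print("recovered value:", decoded)  # anyone who can read the manifest gets this
```

Because the original value is recovered without any key, Secret manifests should stay out of version control unless they are encrypted by a separate tool, and read access to Secrets in the cluster should be limited.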

Regularly Update and Patch Your Cluster

Regularly updating and patching your Kubernetes cluster is essential to ensure that you have the latest security fixes and features. Kubernetes provides regular updates and patches that address security vulnerabilities and improve the stability and performance of your cluster.

Additional Security Measures

Additional security measures, such as network segmentation, intrusion detection and prevention, and log analysis, can further enhance the security of your Kubernetes cluster. By implementing these measures, you can detect and respond to security threats quickly, reducing the risk of data breaches and unauthorized access.

In summary, securing your Kubernetes cluster is essential to protect your applications and data from unauthorized access, tampering, and theft. By implementing network policies, configuring role-based access control, managing secrets securely, and regularly updating and patching your cluster, you can ensure that your cluster is secure and reliable. Additionally, consider implementing additional security measures, such as network segmentation, intrusion detection and prevention, and log analysis, to further enhance the security of your cluster.

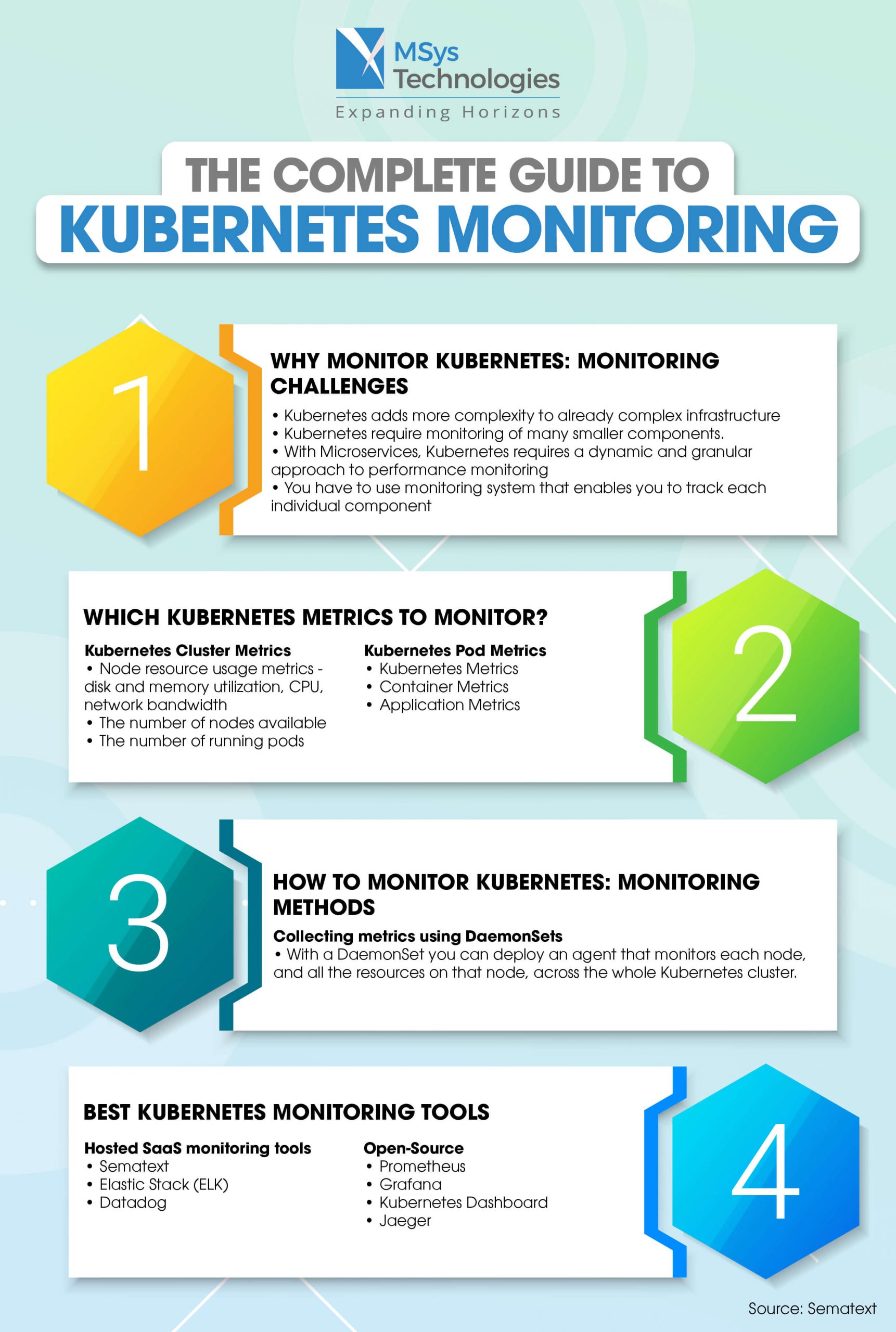

Monitoring and Troubleshooting Your Kubernetes Cluster

Monitoring and troubleshooting your Kubernetes cluster is essential to ensure optimal performance, reliability, and security. In this section, we will discuss the basic monitoring tools from the Kubernetes project, kubectl and the metrics-server add-on, and recommend third-party monitoring solutions. We will also provide guidelines for identifying and resolving common issues, such as resource contention, network connectivity problems, and misconfigured applications.

Built-in Monitoring Tools

Kubernetes offers basic monitoring through kubectl and metrics-server. kubectl is the command-line tool for managing and inspecting your cluster (for example, kubectl get, kubectl describe, and kubectl logs), while metrics-server is an add-on that collects CPU and memory usage from the nodes and pods in your cluster and powers kubectl top. Note that metrics-server is not installed by kubeadm and must be deployed separately.

Third-Party Monitoring Solutions

Third-party monitoring solutions, such as Prometheus, Grafana, and Datadog, provide advanced monitoring and visualization capabilities for Kubernetes clusters. These solutions enable you to monitor the health and performance of your cluster in real-time, set up alerts and notifications, and perform root cause analysis for issues and incidents.

Identifying and Resolving Common Issues

Identifying and resolving common issues, such as resource contention, network connectivity problems, and misconfigured applications, is essential to ensure the reliability and security of your Kubernetes cluster. Here are some guidelines for identifying and resolving these issues:

- Resource Contention: Monitor the resource usage of your nodes and pods using tools such as kubectl, metrics-server, or third-party monitoring solutions. Identify any resource bottlenecks or contention and adjust the resource requests and limits of your pods accordingly.

- Network Connectivity Problems: Check that Services have endpoints and that DNS resolves inside the cluster, using kubectl (for example, kubectl get endpoints, or kubectl exec to test from inside a pod) or a third-party monitoring solution. metrics-server reports only CPU and memory usage, not network metrics. Identify any network issues, such as packet loss, latency, or network congestion, and take appropriate action, such as adjusting the network policies or scaling the cluster.

- Misconfigured Applications: Monitor the health and status of your applications using tools such as kubectl, metrics-server, or third-party monitoring solutions. Identify any misconfigured applications or issues with the application deployment or configuration and take appropriate action, such as adjusting the application configuration or rolling back to a previous version.
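As one way to act on the guidelines above, the pod status that kubectl reports can be scanned for common symptoms of misconfiguration. The sketch assumes input from `kubectl get pods -o json`; the function name, the restart threshold, and the sample data are hypothetical.

```python
import json

# Waiting reasons that usually point at a misconfigured workload.
SUSPECT_REASONS = {"CrashLoopBackOff", "ImagePullBackOff", "ErrImagePull",
                   "CreateContainerConfigError"}


def problem_pods(pods_json, max_restarts=5):
    """Yield (pod name, finding) pairs from `kubectl get pods -o json` output."""
    for pod in json.loads(pods_json)["items"]:
        name = pod["metadata"]["name"]
        status = pod.get("status", {})
        if status.get("phase") == "Pending":
            yield name, "Pending (unschedulable or still pulling images?)"
        for cs in status.get("containerStatuses", []):
            reason = cs.get("state", {}).get("waiting", {}).get("reason")
            if reason in SUSPECT_REASONS:
                yield name, f"container {cs['name']} waiting: {reason}"
            if cs.get("restartCount", 0) > max_restarts:
                yield name, (f"container {cs['name']} restarted "
                             f"{cs['restartCount']} times")


# Hypothetical, trimmed sample standing in for real command output.
sample = json.dumps({"items": [
    {"metadata": {"name": "web-1"},
     "status": {"phase": "Running", "containerStatuses": [
         {"name": "web", "restartCount": 0, "state": {"running": {}}}]}},
    {"metadata": {"name": "api-1"},
     "status": {"phase": "Running", "containerStatuses": [
         {"name": "api", "restartCount": 12,
          "state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
]})
for pod_name, finding in problem_pods(sample):
    print(f"{pod_name}: {finding}")
```

For any pod this flags, `kubectl describe pod <name>` and `kubectl logs <name> --previous` usually show the underlying cause, such as a failing probe, a missing ConfigMap, or an out-of-memory kill.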

In summary, monitoring and troubleshooting your Kubernetes cluster is essential to ensure optimal performance, reliability, and security. With kubectl, the metrics-server add-on, and third-party monitoring solutions, you can track the health and performance of your cluster in real time. By following the guidelines for resource contention, network connectivity problems, and misconfigured applications, you can resolve the most common issues before they affect reliability.

Optimizing Performance in Your Kubernetes Cluster

Optimizing performance in your Kubernetes cluster is essential to ensure that your applications run smoothly and efficiently. In this section, we will offer tips on resource allocation, load balancing, and caching. We will also explain how to use Kubernetes features, such as horizontal pod autoscaling and resource quotas, to improve application performance and reduce costs.

Resource Allocation

Proper resource allocation is crucial to ensure that your applications have the necessary resources to run efficiently. Kubernetes allows you to specify resource requests and limits for your pods, ensuring that they have access to the required CPU and memory resources. By monitoring the resource usage of your nodes and pods, you can adjust the resource requests and limits to optimize performance and reduce costs.
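Requests and limits are written as quantities such as `250m` (a quarter of a CPU core) or `512Mi`. The scheduler places pods based on their requests, so a quick sanity check is to sum the requests and compare them with a node's allocatable resources. The sketch below handles only the common suffixes; the function names and the example figures are hypothetical.

```python
# Binary (Ki, Mi, Gi) and decimal (k, M, G) memory suffixes; a subset of
# what Kubernetes accepts, which is enough for this illustration.
MEMORY_FACTORS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
                  "k": 10**3, "M": 10**6, "G": 10**9}


def cpu_millicores(quantity):
    """Convert a CPU quantity to millicores: "250m" -> 250, "0.5" -> 500."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity):
    """Convert a memory quantity to bytes: "512Mi" -> 536870912."""
    for suffix, factor in MEMORY_FACTORS.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)


def fits(requests, allocatable):
    """Check whether the summed pod requests fit a node's allocatable resources."""
    cpu = sum(cpu_millicores(r["cpu"]) for r in requests)
    mem = sum(memory_bytes(r["memory"]) for r in requests)
    return (cpu <= cpu_millicores(allocatable["cpu"])
            and mem <= memory_bytes(allocatable["memory"]))


pod = {"cpu": "500m", "memory": "256Mi"}
node = {"cpu": "2", "memory": "1Gi"}
print(fits([pod] * 3, node))
print(fits([pod] * 5, node))
```

Three such pods request 1500 millicores and 768 MiB, which fits the example node; five request 2500 millicores, which does not, so the scheduler would leave some of them Pending. Limits are enforced at runtime and play no part in this placement arithmetic.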

Load Balancing

Load balancing is the process of distributing network traffic across multiple nodes or pods to ensure that no single node or pod is overwhelmed. Kubernetes provides built-in load balancing features, such as the Kubernetes service, which enables you to expose your applications as a load-balanced service. By using load balancing, you can ensure that your applications are highly available and can handle increased traffic.

Caching

Caching is the process of storing frequently accessed data in memory to reduce the time and resources required to access the data. Kubernetes does not provide a caching layer of its own; instead, you run a cache such as Redis or Memcached as a workload in the cluster, or cache inside the application. For pod-local scratch data, an emptyDir volume with medium: Memory is backed by RAM. By using caching, you can improve the performance and responsiveness of your applications.

Kubernetes Features for Performance Optimization

Kubernetes provides several features that enable you to optimize the performance of your applications and reduce costs. Here are some of the key features:

- Horizontal Pod Autoscaling: Horizontal pod autoscaling is a Kubernetes feature that enables you to automatically scale the number of pods in a deployment based on CPU utilization or other metrics. By using horizontal pod autoscaling, you can ensure that your applications have the necessary resources to handle increased traffic and reduce the risk of resource contention.

- Resource Quotas: Resource quotas are a Kubernetes feature that enables you to limit the amount of CPU, memory, and other resources that a namespace can consume. By using resource quotas, you can ensure that your applications have access to the necessary resources while preventing resource starvation and contention.
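The core of horizontal pod autoscaling is a simple ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The sketch below implements that documented formula with a tolerance band and min/max clamping. It is a simplification: the real controller also accounts for pod readiness, missing metrics, and stabilization windows, and the function name and defaults here are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA calculation.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
# 3 pods averaging 50% CPU against a 100% target.
print(desired_replicas(3, 50, 100))
```

In the first call the ratio is 1.5, so the deployment grows from 4 to 6 pods; in the second the ratio is 0.5, so it shrinks from 3 to 2. The tolerance band prevents constant small adjustments when usage hovers near the target.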

In summary, optimizing performance in your Kubernetes cluster is essential to ensure that your applications run smoothly and efficiently. By using proper resource allocation, load balancing, and caching mechanisms, you can improve the performance and responsiveness of your applications. Additionally, by using Kubernetes features, such as horizontal pod autoscaling and resource quotas, you can improve application performance and reduce costs.

Scaling and Upgrading Your Kubernetes Cluster

Scaling and upgrading your Kubernetes cluster is essential to ensure that it can handle increased traffic and stay up-to-date with the latest features and security patches. In this section, we will explain the process of adding or removing nodes, upgrading Kubernetes versions, and migrating to new infrastructure. We will also provide guidelines for minimizing downtime and maintaining data integrity during these operations.

Adding or Removing Nodes

Adding or removing nodes is a common operation when scaling a Kubernetes cluster. On a kubeadm-based cluster, you add a node by running kubeadm join on the new machine, and remove one by draining it with kubectl drain and then deleting it with kubectl delete node. Projects such as Cluster API and the Cluster Autoscaler can automate node provisioning. Adjusting the node count this way lets your cluster handle increased traffic and reduces the risk of resource contention.

Upgrading Kubernetes Versions

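Upgrading Kubernetes versions is essential to ensure that your cluster is up-to-date with the latest features and security patches. On a kubeadm-based cluster, the upgrade is driven by kubeadm upgrade: run kubeadm upgrade plan and kubeadm upgrade apply on the control plane first, then drain, upgrade, and uncordon the worker nodes one at a time so that workloads keep running on the remaining nodes. Upgrade one minor version at a time; skipping minor versions is not supported. Rolling updates and canary deployments are strategies for releasing application changes rather than cluster upgrade mechanisms, but the same one-node-at-a-time approach keeps cluster downtime minimal.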
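With kubeadm, upgrades proceed one minor version at a time, and skipping a minor version is not supported. A small guard like the following can catch an invalid target before any node is touched. The function names are hypothetical, the version strings are examples only, and the check covers the minor-version rule alone, not component version skew.

```python
def parse_version(text):
    """Split a version string: "v1.29.3" -> (1, 29, 3)."""
    major, minor, patch = text.lstrip("v").split(".")
    return int(major), int(minor), int(patch)


def upgrade_path_ok(current, target):
    """True if `target` is a newer patch of the same minor, or the next minor."""
    cur, tgt = parse_version(current), parse_version(target)
    if tgt <= cur or tgt[0] != cur[0]:
        return False  # downgrade, no-op, or a different major version
    return tgt[1] - cur[1] <= 1


print(upgrade_path_ok("v1.29.3", "v1.30.1"))
print(upgrade_path_ok("v1.28.5", "v1.30.0"))
```

The first call is a valid step to the next minor version. The second would skip a minor version, so the cluster should first be upgraded to a 1.29 release and then to 1.30.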

Migrating to New Infrastructure

Migrating to new infrastructure is a complex operation that requires careful planning and execution. Common building blocks include etcd snapshots, which back up and restore the cluster state, and re-applying your manifests to a new cluster, optionally with a backup tool such as Velero. Persistent volume data is not stored in etcd and has to be migrated separately. Planned this way, your data can be migrated safely and your cluster brought up on the new infrastructure.

Guidelines for Minimizing Downtime and Maintaining Data Integrity

Minimizing downtime and maintaining data integrity are essential when scaling and upgrading a Kubernetes cluster. Here are some guidelines for minimizing downtime and maintaining data integrity:

- Back up your data: Before performing any scaling or upgrading operations, ensure that you have a backup of your data, including an etcd snapshot and any persistent volumes. This will enable you to recover in case of any issues during the scaling or upgrading process.

- Test in a staging environment: Before performing any scaling or upgrading operations in your production environment, test them in a staging environment. This will enable you to identify and resolve any issues before they affect your production environment.

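- Upgrade incrementally: When upgrading Kubernetes versions, upgrade the control plane first and then the worker nodes one at a time, draining each node before you upgrade it. For application changes, use rolling updates and canary deployments. Both approaches keep part of the capacity serving traffic, which minimizes downtime.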

- Monitor your cluster: During and after the scaling or upgrading process, monitor your cluster to ensure that it is running smoothly and that there are no issues. Use kubectl, the metrics-server add-on, and third-party monitoring solutions to monitor your cluster.

In summary, scaling and upgrading your Kubernetes cluster is essential to ensure that it can handle increased traffic and stay up-to-date with the latest features and security patches. By using proper mechanisms for adding or removing nodes, upgrading Kubernetes versions, and migrating to new infrastructure, you can ensure that your cluster is scaled and upgraded smoothly and with minimal downtime. Additionally, by following guidelines for minimizing downtime and maintaining data integrity, you can ensure that your cluster is running smoothly and securely.