What is Container Orchestration and Why is it Important?

Containerization has revolutionized software deployment. It packages applications and their dependencies into isolated units called containers, which behave consistently across different environments. This solves the “it works on my machine” problem, simplifies deployment, and improves portability. However, managing large numbers of containers by hand quickly becomes complex. This is where container orchestration comes in.

Container orchestration automates the deployment, scaling, and management of containers, helping applications stay highly available and resilient. Kubernetes is a leading container orchestration platform. It automates operational tasks such as rolling updates and self-healing, and its benefits address several critical challenges: scalability improves, so applications can handle increased traffic; resilience improves, so applications remain available despite failures; and more efficient resource utilization reduces infrastructure costs. Understanding container orchestration is therefore important for modern application development and deployment. Kubernetes’ architecture is built around this goal: it allocates resources and scales workloads dynamically based on each application’s requirements.

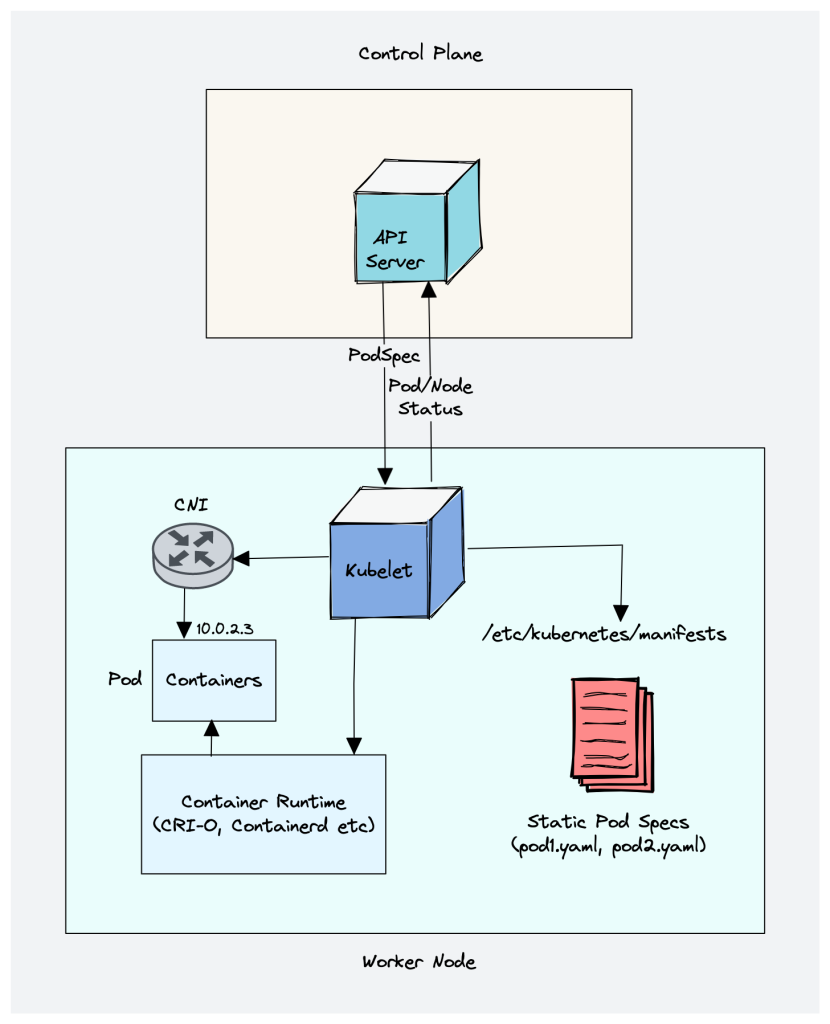

Kubernetes provides a robust framework for managing containerized applications at scale. It offers automated deployment, scaling, and self-healing, which together make infrastructure more efficient and reliable. Its architecture consists of two groups of components that work together: the control plane, which manages the cluster, and the worker nodes, which run the applications. Kubernetes is a powerful tool for organizations adopting cloud-native technologies, because it streamlines operations and speeds up software delivery. Its open-source license and large community have made it a popular choice for modern application deployment.

Understanding the Control Plane: The Brains of Your Kubernetes Cluster

The Kubernetes control plane is central to managing a Kubernetes cluster. It acts as the brain, making decisions about scheduling and resource allocation and maintaining the desired state of the system. Several components work together within the control plane, and understanding them is the foundation for understanding Kubernetes architecture.

The API server (kube-apiserver) is the front end of the control plane. It exposes the Kubernetes API, through which users, other components, and external systems interact with the cluster. The API server authenticates, authorizes, and validates each request before persisting the resulting changes.

etcd is a consistent, distributed key-value store that serves as Kubernetes’ backing store for all cluster data, including configuration, state, and metadata. Because every other component depends on this data, etcd’s consistency and reliability are essential to the cluster.

The scheduler (kube-scheduler) assigns newly created pods to nodes. When making scheduling decisions, it considers resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.

The controller manager (kube-controller-manager) runs a set of controllers that regulate the state of the cluster. Each controller continuously compares the actual state with the desired state and acts to close the gap. For example, the ReplicaSet controller keeps the desired number of pod replicas running for each ReplicaSet, and therefore for each Deployment.

The cloud controller manager integrates the cluster with the underlying cloud provider’s infrastructure. It manages cloud-specific resources such as load balancers, network routes, and node lifecycle information.

These components communicate continuously, but not directly with one another: they watch and update cluster state through the API server, which is the only component that talks to etcd. Each component has a specific responsibility, and all of them are needed for the cluster to operate as intended.
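To make the scheduler’s inputs concrete, here is a minimal sketch of a Pod manifest that declares several of them. The pod name `scheduling-demo`, the `nginx:1.25` image, and the specific CPU and memory values are illustrative assumptions; the fields themselves (`resources.requests`, `nodeAffinity`, `podAntiAffinity`) are standard parts of the Pod spec.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo          # hypothetical name
  labels:
    app: scheduling-demo
spec:
  containers:
    - name: app
      image: nginx:1.25          # illustrative image
      resources:
        requests:                # the scheduler only picks nodes with this much unallocated capacity
          cpu: "250m"
          memory: "128Mi"
        limits:                  # enforced at runtime; scheduling decisions use the requests
          cpu: "500m"
          memory: "256Mi"
  affinity:
    nodeAffinity:
      # Hard constraint: only nodes labelled as Linux are eligible
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values: ["linux"]
    podAntiAffinity:
      # Soft preference: avoid nodes already running a pod with the same label
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100
          podAffinityTerm:
            labelSelector:
              matchLabels:
                app: scheduling-demo
            topologyKey: kubernetes.io/hostname
```

If no node satisfies the hard constraints, the pod stays `Pending`; the anti-affinity rule, by contrast, is only a preference the scheduler tries to honor when scoring nodes.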

In essence, the control plane components collaboratively manage the cluster’s resources and workloads. The API server handles requests, etcd stores data, the scheduler assigns pods, the controller manager regulates state, and the cloud controller manager interacts with the cloud provider. Together, they underpin the availability, scalability, and resilience of the workloads running on the cluster. Understanding their roles is therefore foundational to understanding Kubernetes architecture and to managing Kubernetes deployments effectively, because a healthy cluster depends on a well-functioning control plane.

Nodes and Pods: The Building Blocks of Kubernetes Workloads

In Kubernetes, nodes and pods are fundamental building blocks, and understanding their roles is critical to understanding the architecture as a whole. A node is a worker machine, either a physical server or a virtual machine. Nodes form the backbone of the cluster and provide the compute resources needed to run applications. Each node runs a kubelet, the agent that receives pod assignments from the control plane and starts their containers through a container runtime.

Pods, on the other hand, are the smallest deployable units in Kubernetes. A pod encapsulates one or more containers that share network and storage resources. The containers in a pod are co-located and co-scheduled, meaning they always run together on the same node. This design supports tight coupling and efficient communication between related containers. Each pod is also assigned its own IP address within the cluster, which other pods can use to reach it.
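A minimal sketch of a two-container pod sharing storage: both containers mount the same `emptyDir` volume, so a file written by one is immediately visible to the other. The names `shared-volume-demo`, `writer`, and `reader`, and the `busybox:1.36` image, are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo       # hypothetical name
spec:
  volumes:
    - name: shared-data
      emptyDir: {}               # scratch volume that lives as long as the pod
  containers:
    - name: writer
      image: busybox:1.36
      # Appends a timestamp to the shared file every 5 seconds
      command: ["sh", "-c", "while true; do date >> /data/log.txt; sleep 5; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
    - name: reader
      image: busybox:1.36
      # Follows the same file, written by the other container
      command: ["sh", "-c", "touch /data/log.txt; tail -f /data/log.txt"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
```

Because an `emptyDir` volume is deleted together with its pod, this pattern suits closely coupled helper containers rather than durable storage.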

The Kubernetes scheduler assigns pods to nodes. It weighs factors such as resource requirements, node availability, and placement constraints to pick a suitable node for each pod, which keeps resource utilization efficient and spreads workloads across the cluster. Pods can communicate with each other through services, which provide a stable endpoint regardless of where pods run or how often they are replaced. This communication model is a cornerstone of Kubernetes architecture: applications remain reachable even as the underlying pods and nodes change. Managing nodes and pods properly is key to harnessing the power and flexibility of Kubernetes.

How to Deploy Your First Application on Kubernetes: A Step-by-Step Guide

Start your Kubernetes journey by deploying a simple application. This step-by-step guide provides an easy-to-follow example that shows how the components described above work together in practice. The first step is to write a Deployment YAML file. This file defines the desired state of your application, including the container image to use, the number of replicas, and other configuration.
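A basic Deployment manifest, saved as `deployment.yaml`, might look like the following sketch. The name `hello-web`, the `app: hello-web` label, and the `nginx:1.25` image are illustrative assumptions; substitute your own application image and port.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-web              # hypothetical name
spec:
  replicas: 3                  # desired number of pod replicas
  selector:
    matchLabels:
      app: hello-web           # must match the pod template labels below
  template:
    metadata:
      labels:
        app: hello-web
    spec:
      containers:
        - name: web
          image: nginx:1.25    # illustrative image serving HTTP on port 80
          ports:
            - containerPort: 80
```

The `selector.matchLabels` field must match the labels in the pod template; this is how the Deployment, through the ReplicaSet it creates, identifies the pods it owns.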

Next, apply the YAML file to your cluster with the `kubectl apply -f deployment.yaml` command. This command uses kubectl, the command-line tool for interacting with a Kubernetes cluster, to ask the API server to create the resources defined in your file. From there, the control plane takes over: the Deployment controller creates a ReplicaSet, and the scheduler assigns the resulting pods to available nodes. To expose your application, you then create a service. A service provides a stable IP address and DNS name for accessing your application, and it can be one of several types, such as ClusterIP, NodePort, or LoadBalancer.

For this example, use a NodePort service, which makes your application reachable on a specific port on every node in the cluster. Create a service YAML file and apply it with `kubectl apply -f service.yaml`. Once the service exists, you can open the application in a web browser at the node’s IP address and the assigned NodePort, provided your network allows traffic to that port. This exercise demonstrates the basic workflow for deploying and exposing an application on Kubernetes and gives you a foundation for exploring more complex deployments.
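A NodePort service, saved as `service.yaml`, might look like the sketch below. It assumes the Deployment’s pods carry the label `app: hello-web` and listen on port 80; the `nodePort` value 30080 is an arbitrary choice within the default range of 30000-32767.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-web              # hypothetical name
spec:
  type: NodePort
  selector:
    app: hello-web             # routes traffic to pods carrying this label
  ports:
    - port: 80                 # port on the service's cluster IP
      targetPort: 80           # containerPort on the selected pods
      nodePort: 30080          # static port opened on every node
```

If you omit `nodePort`, Kubernetes allocates a free port from the range. With this manifest applied, the application would be reachable at `http://<node-ip>:30080`.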

Services: Exposing Your Applications to the Outside World

Kubernetes services define how applications running in pods are accessed. A service provides a stable network endpoint that hides individual pod IP addresses, which change as pods are created, destroyed, or rescheduled across nodes. Kubernetes relies on services to keep applications consistently reachable despite this churn, including during updates, failures, and scaling events. Services also enable service discovery within the cluster, so different components of an application can locate and communicate with each other without tracking individual pod IPs.
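As a sketch of in-cluster service discovery, the manifest below defines a ClusterIP service for a hypothetical `backend` application whose pods are labelled `app: backend` and listen on port 8080 (all assumptions). Assuming the cluster DNS add-on is installed with the default `cluster.local` domain, pods in the same namespace can reach it simply as `backend`, and pods in any namespace as `backend.<namespace>.svc.cluster.local`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend                # also becomes the service's DNS name
spec:
  # type is omitted, so it defaults to ClusterIP (reachable only inside the cluster)
  selector:
    app: backend               # traffic goes to ready pods carrying this label
  ports:
    - port: 80                 # stable port on the service's cluster IP
      targetPort: 8080         # port the backend containers listen on
```

Clients only ever use the service name and port; when backend pods are replaced, the service’s endpoints are updated automatically and the clients need no changes.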

There are several types of Kubernetes services, each designed for specific use cases. `ClusterIP`, the default, assigns an internal IP address that is reachable only from within the cluster. `NodePort` exposes the service on a static port on each node’s IP address, making it reachable from outside the cluster at the node’s IP and that port. `LoadBalancer` provisions an external load balancer through the cloud provider (where one is supported) and exposes the service at the load balancer’s IP address. The right type depends on how widely the service should be exposed and on the underlying infrastructure; in every case, the service keeps its network endpoint stable.
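For comparison, exposing pods through a `LoadBalancer` service mostly comes down to the `type` field. This sketch assumes pods labelled `app: hello-web` listening on port 80, and a cloud environment with a load-balancer integration; elsewhere, the external IP may remain `<pending>`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-web-public       # hypothetical name
spec:
  type: LoadBalancer           # on cloud providers, an external load balancer is provisioned
  selector:
    app: hello-web
  ports:
    - port: 80                 # port exposed by the load balancer
      targetPort: 80           # containerPort on the selected pods
```

Once provisioning completes, `kubectl get service hello-web-public` shows the assigned address in the `EXTERNAL-IP` column.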

Services decouple applications from the underlying pods. This abstraction allows applications to be scaled, updated, and managed without disrupting users. Because the endpoint stays stable, developers can focus on application logic rather than the complexities of pod networking, and the different parts of an application can discover and interact with each other seamlessly. Understanding the service types and when to use each is essential for deploying and managing applications on Kubernetes.

Understanding Deployments and ReplicaSets: Ensuring High Availability

Deployments and ReplicaSets are fundamental Kubernetes resources for managing applications and ensuring high availability. A Deployment manages ReplicaSets, and each ReplicaSet in turn manages pods. This layered approach provides a robust mechanism for maintaining the desired state of your applications within the cluster.

A ReplicaSet ensures that a specified number of pod replicas is running at any given time. If a pod fails, the ReplicaSet automatically creates a replacement; this self-healing capability is a core benefit of Kubernetes. Deployments build on this foundation by providing declarative updates. When you change a Deployment’s pod template, the Deployment creates a new ReplicaSet with the updated pod definition, then gradually scales the new ReplicaSet up while scaling the old one down. This process, known as a rolling update, minimizes downtime and provides a smooth transition to the new version of your application.
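The pace of a rolling update is configurable on the Deployment itself. The excerpt below is a sketch of the relevant fields; the values shown are illustrative, not recommendations, and the rest of the Deployment spec is omitted.

```yaml
# Excerpt of a Deployment spec (selector and template omitted)
spec:
  replicas: 4
  revisionHistoryLimit: 5      # old ReplicaSets kept for rollbacks (default is 10)
  strategy:
    type: RollingUpdate        # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1              # at most 1 pod above the desired count during the update
      maxUnavailable: 0        # never drop below the desired count of available pods
```

With `maxUnavailable: 0`, an old pod is removed only after a new one becomes available, trading a slightly slower rollout for no loss of capacity. You can follow progress with `kubectl rollout status deployment/<name>`.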

Consider a scenario where you need to update your application. Instead of manually deleting and recreating pods, you update the Deployment’s YAML file and apply it again. The Deployment controller handles the rest: it creates a new ReplicaSet, gradually introduces the new pods, and removes the old ones. This automated process reduces the risk of human error and simplifies application management. Deployments also let you roll back to a previous revision, for example with `kubectl rollout undo deployment/<name>`, which is an important safety net when a new version introduces unexpected issues. Understanding how Deployments and ReplicaSets work together is essential for building resilient, scalable applications on Kubernetes and for keeping day-to-day operations simple.

Namespaces: Organizing Your Kubernetes Resources

Kubernetes namespaces provide a way to divide cluster resources among multiple users or teams. They act as virtual clusters within a single physical cluster. This logical separation simplifies resource management for complex deployments and, combined with per-namespace access control and quotas, helps improve security. Namespaces are a key part of keeping a Kubernetes environment well organized and manageable.

Namespaces are particularly useful when multiple teams share a single Kubernetes cluster. For instance, a development team can work in a “dev” namespace, a staging team in a “staging” namespace, and the production environment can reside in a “prod” namespace. This separation prevents naming conflicts between resources and lets each team manage its applications independently, improving collaboration and reducing the risk of accidental interference between environments.
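Creating the three namespaces from this example takes only a few lines of YAML; the sketch below defines them as separate documents in a single file. The names simply mirror the example.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: dev
---
apiVersion: v1
kind: Namespace
metadata:
  name: staging
---
apiVersion: v1
kind: Namespace
metadata:
  name: prod
```

Resources are then placed in a namespace through their `metadata.namespace` field or kubectl’s `-n` flag, for example `kubectl apply -f deployment.yaml -n dev`.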

Namespaces also simplify resource quotas and access control. Administrators can define a resource quota for each namespace, preventing any one team from consuming excessive resources and affecting other teams. Role-Based Access Control (RBAC) can be applied at the namespace level, granting specific permissions to users or service accounts within a particular namespace. This logical separation makes namespaces a fundamental building block for organizing and securing applications, enabling efficient resource utilization and streamlined management across diverse teams and projects.
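A sketch of per-namespace quotas and RBAC, assuming a `dev` namespace already exists and a hypothetical group named `dev-team` is provided by your cluster’s authentication setup. The quota values and the list of permitted verbs are illustrative.

```yaml
# Caps the total resources that workloads in "dev" may request
apiVersion: v1
kind: ResourceQuota
metadata:
  name: dev-quota
  namespace: dev
spec:
  hard:
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "20"
---
# Grants read/write access to common workload resources, only inside "dev"
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: dev-editor
  namespace: dev
rules:
  - apiGroups: ["", "apps"]
    resources: ["pods", "services", "deployments"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# Binds the Role to the hypothetical dev-team group
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-team-editors
  namespace: dev
subjects:
  - kind: Group
    name: dev-team
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: dev-editor
  apiGroup: rbac.authorization.k8s.io
```

Note that once a quota covers CPU and memory, pods in that namespace must declare requests and limits for those resources (or receive defaults from a LimitRange); otherwise their creation may be rejected.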

Kubernetes Network Model: Container to Container Communication

The Kubernetes networking model enables communication between containers and pods within a cluster, and understanding it is essential for deploying and managing applications effectively. Within a pod, containers share the same network namespace, so they can reach each other via localhost. They can address each other directly, without any routing configuration, as long as they listen on different ports.
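A minimal sketch of localhost communication inside a pod: a hypothetical `poller` container fetches the page served by an `nginx` container in the same pod by addressing `localhost`, with no service or pod IP involved. The pod name, container names, and images are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: localhost-demo         # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:1.25        # serves HTTP on port 80
      ports:
        - containerPort: 80
    - name: poller
      image: busybox:1.36
      # busybox wget reaches nginx through the pod's shared network namespace
      command: ["sh", "-c", "while true; do wget -q -O- http://localhost:80 >/dev/null && echo ok; sleep 10; done"]
```

Once nginx is serving, `kubectl logs localhost-demo -c poller` should show `ok` roughly every ten seconds.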

Across pods, including pods on different nodes, Kubernetes gives each pod its own unique IP address, and pods communicate directly using these addresses regardless of which node they run on. Because this flat network requires no network address translation (NAT) between pods, networking configuration stays simple. Kubernetes delegates the implementation of this model to Container Network Interface (CNI) plugins, which configure networking for each pod, including assigning IP addresses and setting up routing rules. Several CNI plugins are available, such as Calico, Flannel, and Cilium, each with its own strengths and weaknesses, and the right choice depends on the cluster’s networking requirements. Properly configured networking is a cornerstone of running distributed applications on Kubernetes.

CNI plugins implement the Kubernetes network model by creating a virtual network that spans all nodes in the cluster, allowing pods to communicate as if they were on the same physical network. Plugins achieve this with different technologies, such as overlay networks or routing protocols. While the details of each implementation can be complex, the goal is the same: a simple, consistent networking experience for applications running on Kubernetes. This consistency lets developers focus on application logic rather than low-level networking details, and understanding how these components interact leads to better management and optimization of Kubernetes deployments.