Understanding Kubernetes Architecture Through Visualizations

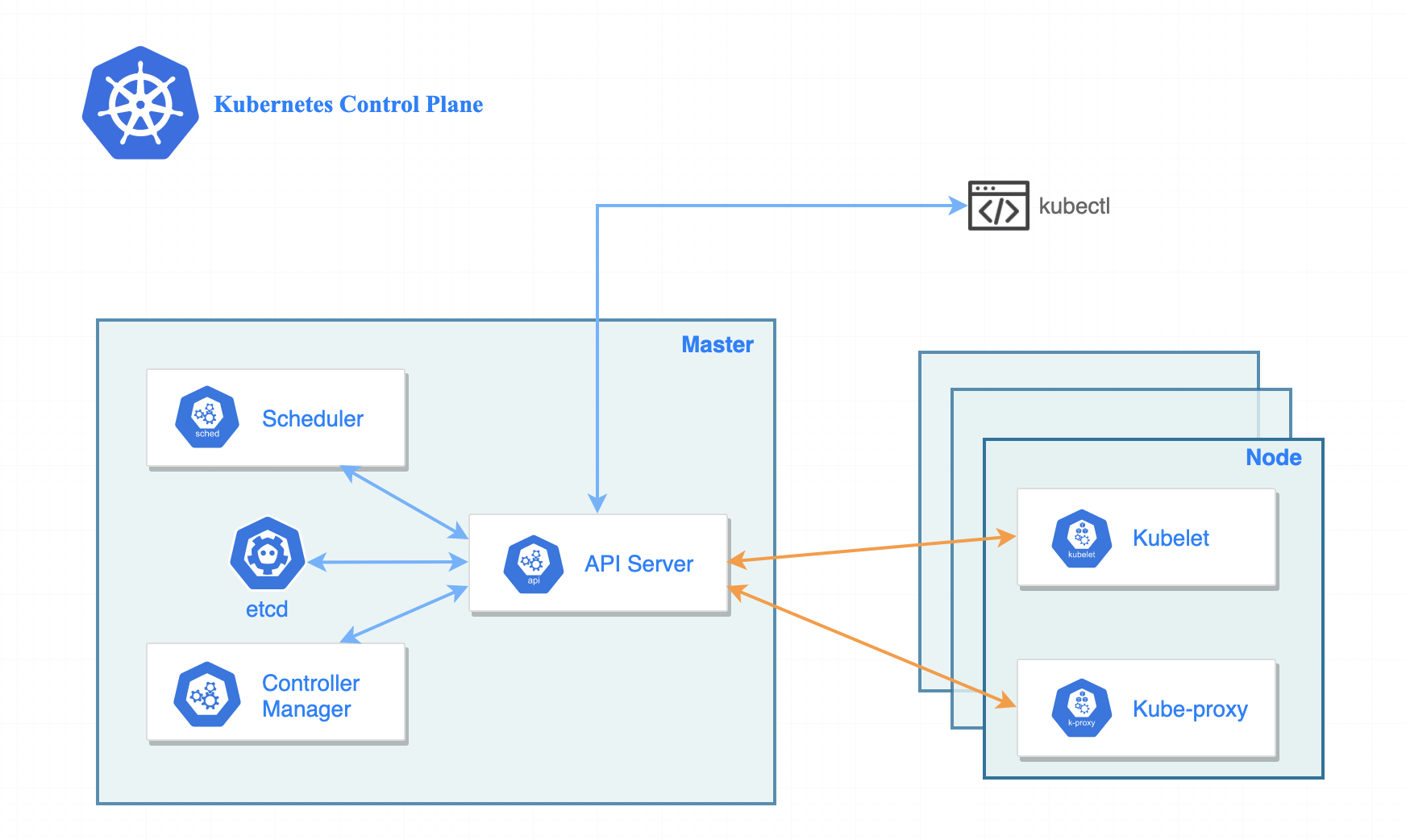

The world of Kubernetes can often feel like navigating a complex maze, with numerous components working together. At its core, a Kubernetes cluster is an orchestration system that manages containerized applications across a fleet of machines. Its architecture has two parts:

- the control plane, which manages and oversees the cluster’s operations;
- the worker nodes, where your applications run.

This architecture is powerful, but it can be hard to grasp without a clear visual representation, which is why a Kubernetes architecture diagram is so useful. A diagram shows how each component connects to the others and what it does within the larger system. It also makes the relationship between the control plane and the worker nodes easy to see. That makes it a good starting point for a complete picture of a cluster. Without one, understanding complex interactions and troubleshooting issues becomes significantly harder.

The key components you will see in a Kubernetes architecture diagram include:

- the API server;
- the scheduler;
- the controller manager;
- etcd;
- the kubelet;
- kube-proxy;
- the container runtime.

Each plays a critical role, and understanding how they work together is essential for managing Kubernetes effectively. The sections below explore each of them in detail.

A Kubernetes architecture diagram is not just a pretty picture. It is a practical tool that makes the abstract concepts of the Kubernetes ecosystem tangible. A well-crafted diagram simplifies the learning process in several ways:

- It identifies the major components of a cluster at a high level, including the control plane and worker nodes.
- It illustrates the data flow and relationships between those components.
- It explains the role of each component, how the components interact, and how those interactions affect the cluster’s overall behavior.

Seeing how the parts of the system are interconnected makes it easier to troubleshoot issues, plan deployments, and scale applications efficiently. A good diagram also shows how applications are deployed and managed through Pods, Deployments, and Services, which reveals how workloads are distributed across the cluster.

Exploring the Kubernetes Control Plane

The control plane is the central management unit of a Kubernetes cluster and orchestrates all activity within it. Think of it as the brain of the cluster, where decisions are made and instructions are issued. It comprises several components, each with a specific role in maintaining the cluster’s desired state:

- **API Server (kube-apiserver).** The front end of the control plane. It exposes the Kubernetes API, through which users and the other components read and change cluster state.
- **Scheduler (kube-scheduler).** Assigns newly created pods to worker nodes. It compares each pod’s resource requests and constraints with what the nodes have available, then picks the most suitable node.
- **Controller Manager (kube-controller-manager).** Runs a set of controllers. Each controller continually compares the cluster’s current state with the desired state and acts to close the gap. For example, it ensures that the desired number of pods for a Deployment is always running.
- **etcd.** A consistent, highly available key-value store in which the cluster’s configuration and state are persisted.

Together, these components manage the cluster. A solid grasp of them is necessary to read any Kubernetes architecture diagram and to understand how the rest of the ecosystem operates.
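To make the Controller Manager’s job concrete, here is a minimal sketch of one reconciliation pass in Python. It is a toy model, not Kubernetes code:

- A plain list of pod names stands in for state that would really live in etcd and be read through the API Server.
- The `reconcile` function and the `web` naming scheme are hypothetical.
- The real ReplicaSet controller ranks pods before deleting any. This sketch simply drops the newest ones.

```python
from __future__ import annotations

import itertools

# Hypothetical suffix generator for new pod names (real names use random suffixes).
_suffixes = itertools.count()


def reconcile(desired_replicas: int, running: list[str], app: str) -> list[str]:
    """One pass of a toy controller: compare observed pods to the desired count and act."""
    pods = list(running)  # observe current state (copy so the input is not mutated)
    # Too few pods: create the missing ones.
    while len(pods) < desired_replicas:
        pods.append(f"{app}-{next(_suffixes)}")
    # Too many pods: delete the surplus, newest first (a simplification).
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


# A pod crashed, leaving one of three replicas; one pass restores the count.
state = reconcile(3, ["web-a"], "web")
print(len(state))  # 3
```

Each pass only compares observed and desired state. Running it again on an already-converged list therefore changes nothing, which is what lets a controller run in an endless loop safely.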

To better grasp the control plane’s function, consider an analogy: a city with a central control center managing all operations.

- The API Server is the city’s communication hub, where citizens and organizations request services and send reports.
- The Scheduler is the planning department, allocating resources such as transportation to districts based on demand and availability.
- The Controller Manager is a team of city supervisors, making sure the city keeps operating according to its rules and plans.
- etcd is the city’s records office, where all important information is stored safely.

Just as a city map does, a Kubernetes architecture diagram shows how these parts of the control plane work together and abstracts away much of the complexity. In essence, the control plane dictates how resources are allocated and how workloads are managed. That keeps application execution smooth, reliable, and consistent across the cluster.

When reading a Kubernetes architecture diagram, remember that every control plane component relies on the cluster state stored in etcd. That includes the API Server that handles incoming requests, the Scheduler that distributes workloads, and the Controller Manager that keeps the cluster healthy. Only the API Server talks to etcd directly. The Scheduler and Controller Manager read and write that state through the API Server.

Understanding this relationship is crucial for troubleshooting and optimizing Kubernetes deployments, because these components together orchestrate every operation in the cluster. Knowledge of the control plane is therefore foundational to every other aspect of Kubernetes.

Understanding the Kubernetes Worker Nodes

Moving on from the control plane, the focus now shifts to the worker nodes. These are the workhorses of the cluster, where applications actually run. Each worker node is a machine, physical or virtual, that runs the workloads assigned to it by the control plane. Several components on every node handle the execution and management of containerized applications.

The kubelet is the primary agent on each node and acts as the intermediary between the control plane and the node. It watches the API Server for pods that have been scheduled to its node and makes sure their containers are running as specified. To do so, it talks to the container runtime, such as containerd or CRI-O, through the Container Runtime Interface (CRI) to manage the lifecycle of each pod’s containers.

The other key component is kube-proxy. This network proxy maintains network rules on the node so that traffic addressed to a Service is routed to the appropriate pods.

This process is a central part of any Kubernetes architecture diagram, because it shows how the control plane’s decisions become concrete operations on the worker nodes.
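The kubelet’s core job can be pictured as a diff between two sets: what the API Server says should run on its node, and what the container runtime reports is running. The sketch below models only that comparison. The `sync_node` function, the pod names and the node names are hypothetical. A real kubelet also handles probes, volumes, restarts and status reporting.

```python
from __future__ import annotations


def sync_node(node: str, assigned: dict[str, str], running: set[str]) -> tuple[set[str], set[str]]:
    """Return (to_start, to_stop) for one node.

    assigned: pod name -> node name, as bound by the Scheduler via the API Server.
    running:  pods the container runtime currently reports on this node.
    """
    desired = {pod for pod, bound_node in assigned.items() if bound_node == node}
    to_start = desired - running  # scheduled here but not yet running
    to_stop = running - desired   # running here but no longer assigned
    return to_start, to_stop


assigned = {"web-1": "node-a", "web-2": "node-b", "db-0": "node-a"}
print(sync_node("node-a", assigned, {"web-1", "old-0"}))
# ({'db-0'}, {'old-0'})
```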

The control plane continuously tracks the health and resource availability of worker nodes. This lets it schedule workloads according to each node’s capacity and current state. To support this, the kubelet on every node regularly reports the node’s status back to the API Server. If these heartbeats stop, the control plane marks the node as unhealthy. This two-way communication is essential for the cluster’s overall health and stability.

The container runtime (containerd, CRI-O, or another CRI-compatible runtime) provides the environment in which containers actually run. The containers themselves package the application that Kubernetes deploys and manages. The interplay between the kubelet, kube-proxy and the container runtime on each worker node shows where applications run and how they communicate with the rest of the system. When worker nodes operate smoothly, applications execute correctly and remain accessible to users.
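In real clusters, the kubelet renews a Lease object and updates its Node status on a regular interval. The node lifecycle controller in the Controller Manager watches for missed heartbeats. If none arrive within a configurable grace period, it sets the node’s Ready condition to Unknown.

The sketch below models only that timeout logic. The `NodeHeartbeat` type, the timestamps and the 40-second grace period are illustrative assumptions rather than values read from a cluster.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeHeartbeat:
    name: str
    last_heartbeat: float  # seconds, measured on the same clock as `now`


def classify_nodes(nodes: list[NodeHeartbeat], now: float, grace_period: float) -> tuple[list[str], list[str]]:
    """Split nodes into (ready, unknown) by how long ago each last reported in."""
    ready, unknown = [], []
    for node in nodes:
        if now - node.last_heartbeat <= grace_period:
            ready.append(node.name)
        else:
            unknown.append(node.name)  # silent for too long: stop trusting this node
    return ready, unknown


nodes = [NodeHeartbeat("node-a", 95.0), NodeHeartbeat("node-b", 30.0)]
print(classify_nodes(nodes, now=100.0, grace_period=40.0))
# (['node-a'], ['node-b'])
```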

Understanding worker nodes completes the picture of how a Kubernetes cluster operates. Knowing the components of both the control plane and the worker nodes, you can now follow how applications are deployed and managed on the cluster. This sets the stage for the higher-level objects that describe workloads: Pods, Deployments and Services. They complete the end-to-end view of the architecture.

How Pods, Deployments, and Services Fit Into the Picture

Applying the architecture diagram in practice means understanding how Pods, Deployments and Services function within the cluster.

- **Pods.** The smallest deployable unit in Kubernetes. A pod represents a single instance of a running application and can contain one or more containers, such as an application container plus supporting helper containers. These are scheduled together and managed as a single entity.
- **Deployments.** A declarative way to manage pods. You state how many replicas should run, and Kubernetes keeps that many pods running through the ReplicaSets a Deployment manages, automatically replacing failed ones. Changing the replica count scales the application up or down. This automation is critical for application availability and scaling.
- **Services.** The final piece of the puzzle: a stable network identity for a set of pods. A Service acts as an abstraction layer that decouples clients from individual pods. The application stays reachable no matter which nodes its pods run on or how often they are replaced.
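A Service finds its pods through a label selector. The sketch below models equality-based selection in Python under simplifying assumptions:

- The `Pod` class and `service_backends` function are hypothetical.
- Only ready pods count as backends.
- A Service with no selector gets no automatically managed endpoints, matching how Kubernetes treats selector-less Services.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    labels: dict[str, str] = field(default_factory=dict)
    ready: bool = True


def service_backends(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """Names of ready pods whose labels contain every key/value pair in the selector."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return sorted(
        p.name
        for p in pods
        if p.ready and all(p.labels.get(k) == v for k, v in selector.items())
    )


pods = [
    Pod("web-1", {"app": "web", "tier": "frontend"}),
    Pod("web-2", {"app": "web"}, ready=False),
    Pod("db-0", {"app": "db"}),
]
print(service_backends({"app": "web"}, pods))  # ['web-1']
```

Replacing `web-1` with a new pod that carries the same labels changes nothing for clients. That is the decoupling Services provide.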

The components discussed earlier each play a part in making Pods, Deployments and Services work:

- The API Server handles requests to create, update and delete these resources.
- The Scheduler decides which worker node each pod should run on, based on resource availability and constraints.
- The Controller Manager maintains the desired state of Deployments, creating or deleting pods as needed.
- The kubelet on each worker node manages the pods assigned to that node. Through the container runtime, it pulls container images from registries and starts containers. It then monitors them and reports their status to the control plane, so the application runs as the user specified.
- kube-proxy implements Services on each node, routing traffic sent to a Service to one of its pods. Combined with Service types such as NodePort or LoadBalancer, this also lets applications be reached from outside the cluster.

A detailed Kubernetes architecture diagram shows all of these interactions.
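The Scheduler’s decision can be sketched in two phases:

1. Filter out nodes that cannot fit the pod’s resource requests.
2. Score the remaining nodes and pick the best one.

The real kube-scheduler runs many filter and score plugins. The scoring below prefers the node left with the largest share of free CPU and memory. It is only a rough analogue of the scheduler’s least-allocated strategy. The `Node` fields and the `schedule` function are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    alloc_cpu_m: int      # allocatable CPU, millicores
    alloc_mem_mi: int     # allocatable memory, MiB
    used_cpu_m: int = 0   # sum of CPU requests of pods already on the node
    used_mem_mi: int = 0  # sum of memory requests of pods already on the node


def schedule(cpu_m: int, mem_mi: int, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node name, or None if no node fits (the pod stays Pending)."""
    # Filter: keep nodes whose remaining allocatable capacity covers the requests.
    feasible = [
        n for n in nodes
        if n.used_cpu_m + cpu_m <= n.alloc_cpu_m and n.used_mem_mi + mem_mi <= n.alloc_mem_mi
    ]
    if not feasible:
        return None

    # Score: average fraction of CPU and memory still free after placing the pod.
    def score(n: Node) -> float:
        cpu_free = (n.alloc_cpu_m - n.used_cpu_m - cpu_m) / n.alloc_cpu_m
        mem_free = (n.alloc_mem_mi - n.used_mem_mi - mem_mi) / n.alloc_mem_mi
        return (cpu_free + mem_free) / 2

    return max(feasible, key=score).name


nodes = [
    Node("node-a", 4000, 8192, used_cpu_m=3500, used_mem_mi=1000),
    Node("node-b", 4000, 8192, used_cpu_m=1000, used_mem_mi=4000),
    Node("node-c", 2000, 4096),
]
print(schedule(1000, 1024, nodes))  # node-c
```

Here `node-a` is filtered out because 3500m plus 1000m exceeds its 4000m of CPU. `node-c` then wins on score because it keeps more of its memory free.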

Together, these elements make orchestrating containerized applications straightforward. To deploy an application, a user submits a Deployment that specifies the number of desired replicas, the container images and the resource requirements. From there:

1. The API Server validates the request and stores the Deployment in etcd.
2. The Controller Manager notices the new Deployment and, through a ReplicaSet, creates the requested number of Pod objects.
3. The Scheduler assigns each new pod to a worker node.
4. The kubelet on each assigned node starts the containers defined in the pod specification.
5. A Service provides a single access point for the application, regardless of which nodes its pods are running on.

This example shows how the abstract concepts in a Kubernetes architecture diagram translate into deploying and managing real applications. The result is the flexibility, fault tolerance and scalability that modern applications require.

Illustrating the Data Flow Within a Kubernetes System

Understanding the end-to-end data flow within a Kubernetes system is crucial for grasping how it operates. A typical request follows this path:

1. A user sends a request, perhaps from a web browser or an API client.
2. The request is routed to a Kubernetes Service, which acts as a single point of access for a group of pods.
3. The Service distributes requests across its available pods and forwards this one to a backend pod, where the application logic resides.
4. The pod processes the request.
5. The response travels back to the user.

On each worker node, kube-proxy makes the routing in step 3 work. It programs the node’s network rules so that traffic sent to the Service reaches a suitable pod. This flow demonstrates the interplay of several components.
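The Service-to-pod step can be modeled as a load balancer choosing among the Service’s ready endpoints. kube-proxy’s iptables mode actually picks a backend at random, while its IPVS mode supports schedulers such as round-robin. The hypothetical `RoundRobinBalancer` below uses round-robin only because its behavior is easy to follow. The pod addresses are made up.

```python
from __future__ import annotations

import itertools


class RoundRobinBalancer:
    """Toy stand-in for Service load balancing: cycle through backend pod addresses."""

    def __init__(self, endpoints: list[str]):
        if not endpoints:
            # A Service with no ready endpoints has nowhere to send traffic.
            raise ValueError("service has no ready endpoints")
        self._cycle = itertools.cycle(list(endpoints))

    def pick(self) -> str:
        """Return the next backend for an incoming request."""
        return next(self._cycle)


lb = RoundRobinBalancer(["10.244.1.5:8080", "10.244.2.7:8080"])
print([lb.pick() for _ in range(3)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.1.5:8080']
```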

The control plane ensures that this whole process runs as intended:

- When a Deployment is first created, the Scheduler places its pods onto worker nodes.
- The API Server is the communication point for all operations.
- The Controller Manager continually checks that the cluster’s current state matches the desired state.
- The kubelet on each worker node makes sure the containers in each pod start and run correctly. It works with the container runtime (such as containerd) to keep the pods healthy and running according to their specification.
- etcd stores the cluster’s state, enabling consistent operations throughout the application’s lifecycle.

Visualizing these interactions gives a deeper understanding of how user requests are served by the applications the cluster runs and manages.
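Control plane components coordinate by watching the API Server for changes rather than calling each other directly. A watch delivers events such as `ADDED` and `MODIFIED` as objects change. The in-memory `ToyAPIServer` below is a hypothetical stand-in that shows only this notification pattern. It does not model persistence in etcd, resource versions or reconnection. The two handler functions are illustrative.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Watcher = Callable[[str, dict], None]


class ToyAPIServer:
    """Stores objects by (kind, name) and notifies watchers of that kind."""

    def __init__(self) -> None:
        self._objects: dict[tuple[str, str], dict] = {}
        self._watchers: dict[str, list[Watcher]] = defaultdict(list)

    def watch(self, kind: str, callback: Watcher) -> None:
        self._watchers[kind].append(callback)

    def apply(self, kind: str, name: str, obj: dict) -> None:
        event = "MODIFIED" if (kind, name) in self._objects else "ADDED"
        self._objects[(kind, name)] = obj
        for callback in self._watchers[kind]:
            callback(event, obj)


def scheduler_handler(event: str, pod: dict) -> None:
    # Hypothetical scheduler: only cares about pods that have no node yet.
    if "node" not in pod:
        print("scheduler saw", event, pod["name"])


def kubelet_handler(event: str, pod: dict) -> None:
    # Hypothetical kubelet on node-a: only cares about pods bound to its node.
    if pod.get("node") == "node-a":
        print("kubelet on node-a saw", event, pod["name"])


api = ToyAPIServer()
api.watch("Pod", scheduler_handler)
api.watch("Pod", kubelet_handler)
api.apply("Pod", "web-1", {"name": "web-1"})                    # scheduler saw ADDED web-1
api.apply("Pod", "web-1", {"name": "web-1", "node": "node-a"})  # kubelet on node-a saw MODIFIED web-1
```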

A Kubernetes architecture diagram can capture this entire process. It shows how a user request enters the cluster, how it is routed and how the response is delivered. It can also depict load balancing through Services and the internal communication between the control plane and worker nodes. Following the flow from the user request to the application running inside the pods shows how all the components work together as one system. Understanding these interactions reveals both the complexity and the elegance of Kubernetes in managing demanding workloads.

Practical Ways to Visualize Your Kubernetes Setup

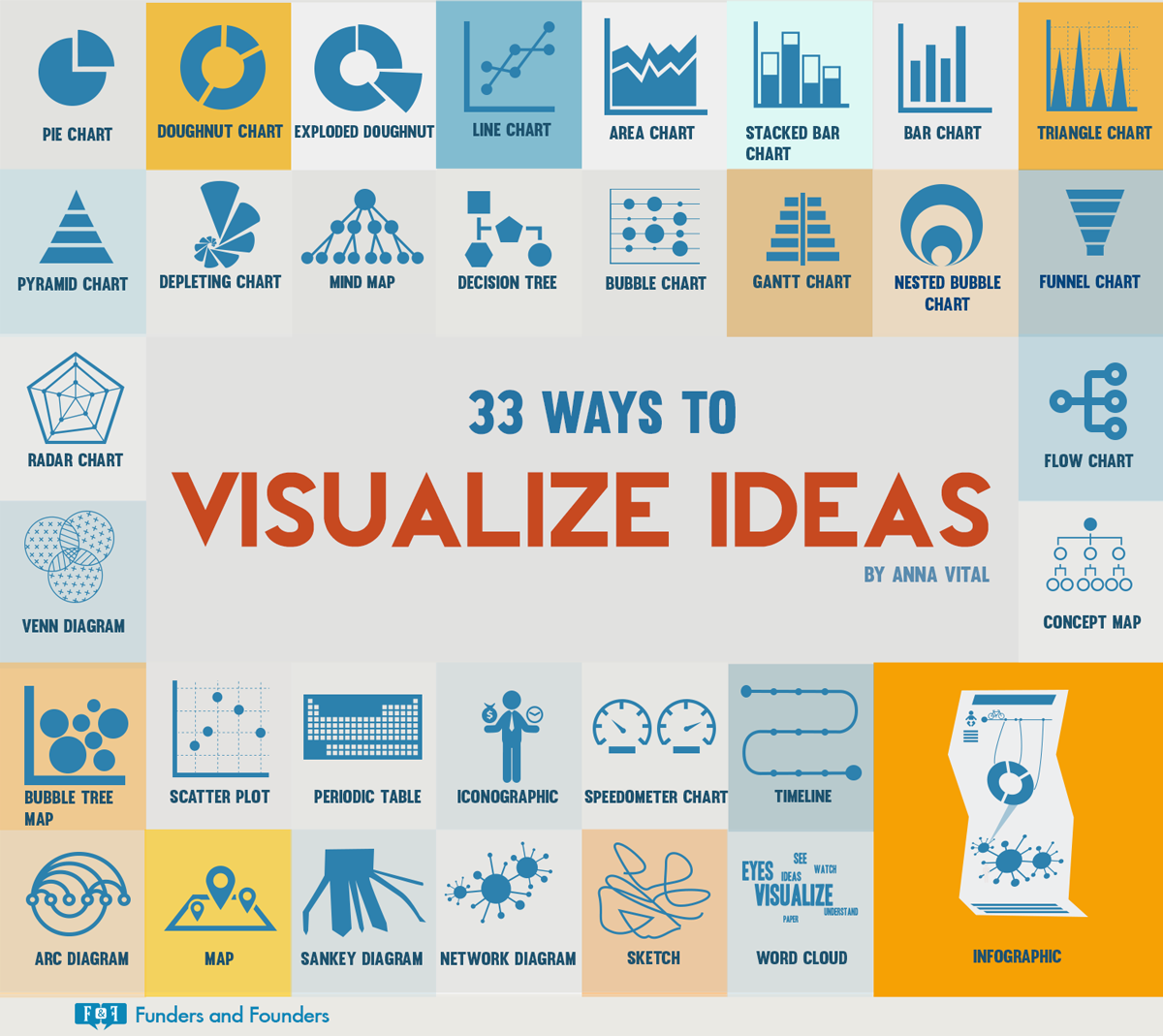

Visualizing a Kubernetes environment can significantly improve both understanding and troubleshooting, and a clear architecture diagram is often the first step. Several tools and methods are available for different needs and levels of expertise. For a hands-on, interactive experience, consider tools such as Lens or K9s:

- **Lens** provides a graphical interface for managing and monitoring Kubernetes resources, with a live visual representation of your Deployments, pods and Services.
- **K9s** is an open-source, terminal-based UI that offers a real-time, navigable view of cluster resources. It suits command-line users.

Both make it easier to trace relationships between components and see the current state of a cluster. That speeds up debugging and day-to-day management.

Cloud providers offer their own visualization tools, tailored to their managed Kubernetes services:

- **Google Kubernetes Engine (GKE)** has Kubernetes Engine pages in the Google Cloud console that show cluster health, resource utilization and workloads.
- **Amazon Elastic Kubernetes Service (EKS)** offers cluster and workload views in the AWS Management Console.
- **Azure Kubernetes Service (AKS)** provides comparable features in the Azure portal.

These consoles often integrate logging and monitoring as well, giving a broad view that complements an architecture diagram. Their main advantage is direct integration with the underlying infrastructure, which surfaces provider-specific resources and configurations.

Another approach is to use `kubectl` to inspect resources and build your own picture of how they are connected. It is not a visual tool, but two commands help:

- `kubectl get all --show-labels` lists common resource types (such as pods, Services, Deployments and ReplicaSets, though not every resource type) together with their labels. The labels reveal how resources relate to each other.
- `kubectl describe` shows the detailed configuration and recent events of a resource.
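If you prefer building that picture from `kubectl` output, a short script can group resources by label. The sketch below assumes its input is the output of `kubectl get pods -o json`. That output is a List object whose `items` carry `metadata.name` and `metadata.labels`. The `group_pods_by_label` helper and the `app` label key are illustrative choices, not part of kubectl.

```python
from __future__ import annotations

import json
from collections import defaultdict


def group_pods_by_label(kubectl_json: str, label: str) -> dict[str, list[str]]:
    """Group pod names by the value of one label; unlabeled pods go under '<none>'."""
    groups: dict[str, list[str]] = defaultdict(list)
    for item in json.loads(kubectl_json).get("items", []):
        meta = item.get("metadata", {})
        value = meta.get("labels", {}).get(label, "<none>")
        groups[value].append(meta.get("name", "<unnamed>"))
    return dict(groups)


# Trimmed example of the JSON shape kubectl prints (real output has many more fields).
sample = (
    '{"kind": "List", "items": ['
    '{"metadata": {"name": "web-1", "labels": {"app": "web"}}}, '
    '{"metadata": {"name": "db-0", "labels": {"app": "db"}}}, '
    '{"metadata": {"name": "debug"}}]}'
)
print(group_pods_by_label(sample, "app"))
# {'web': ['web-1'], 'db': ['db-0'], '<none>': ['debug']}
```

To use it on a live cluster, save the output of `kubectl get pods -o json` to a file and pass the file’s contents to the function.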

To visualize more specific aspects, use tools for metrics and logs:

- **Metrics.** Prometheus combined with Grafana is a popular pairing for collecting and displaying metrics. Grafana in particular lets you build detailed dashboards for different areas of a cluster.
- **Logs.** The Elasticsearch, Fluentd and Kibana (EFK) stack, or Loki with Grafana, are common choices for centralized logging and analysis. They let you build visualizations from logs and correlate them with events in the cluster.

The right approach depends on your familiarity with these tools and on the granularity and focus you need. Whatever the tool, being able to visualize your cluster’s architecture is key to understanding and managing a Kubernetes system.

Common Challenges and Troubleshooting Using Architecture Understanding

Kubernetes deployments often present challenges, and a solid grasp of the underlying architecture is essential for troubleshooting them.

One common class of issues involves networking. For example, if a Service is unreachable, there are three good starting points:

- check that the Service’s selector matches ready pods;
- inspect kube-proxy on the worker nodes;
- review the network policies defined in the cluster.

A misconfigured kube-proxy can break communication, and so can an overly restrictive network policy (enforced by the cluster’s network plugin). Knowing how the components connect, as shown in an architecture diagram, allows a targeted investigation instead of guesswork.

Another challenge is resource contention. Pods competing for CPU, memory or storage can degrade performance or even cause application failures. An architecture diagram clarifies the Scheduler’s role in placing pods and the kubelet’s role in managing resources on each node. That helps you identify bottlenecks and optimize resource allocation, improving overall cluster health.

These problems may seem complex, but understanding how the pieces connect makes troubleshooting much easier.
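When a network policy is the suspect, it helps to remember how ingress rules combine:

- A pod that no ingress policy selects accepts all ingress traffic.
- A pod selected by one or more ingress policies accepts only traffic that at least one of those policies allows.

The sketch below models just that rule for pods in a single namespace. The `ingress_allowed` function and the dictionary policy format are hypothetical simplifications. They ignore namespaces, ports, egress and IP blocks.

```python
from __future__ import annotations


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector match; an empty selector matches every pod."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(src: dict[str, str], dst: dict[str, str], policies: list[dict]) -> bool:
    """Would traffic from a pod labeled `src` reach a pod labeled `dst`?

    Each hypothetical policy has 'pod_selector' (which pods it isolates) and
    'allow_from' (a list of selectors for permitted source pods).
    """
    selecting = [p for p in policies if matches(p["pod_selector"], dst)]
    if not selecting:
        return True  # no policy isolates the destination: all ingress allowed
    # Allowed if any rule of any selecting policy matches the source (rules are additive).
    return any(matches(rule, src) for p in selecting for rule in p["allow_from"])


policies = [{"pod_selector": {"app": "db"}, "allow_from": [{"app": "api"}]}]
print(ingress_allowed({"app": "api"}, {"app": "db"}, policies))  # True
print(ingress_allowed({"app": "web"}, {"app": "db"}, policies))  # False
```

A policy with an empty `pod_selector` and no `allow_from` entries isolates every pod in the namespace. That is exactly the kind of overly restrictive rule that makes a Service look unreachable.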

Application deployments can also fail on their own, for example when pods fail to start or crash repeatedly. The cause may be a configuration error in the Deployment manifest or a problem with the container image. Knowing the architecture tells you where to look for the error message that explains the failure:

- the pod’s events, with `kubectl describe pod`;
- its container logs, with `kubectl logs`;
- the kubelet logs on the relevant worker node.

Understanding the control plane helps too. If a Deployment does not update properly, inspecting the Controller Manager’s logs, or using `kubectl` to check the events on the Deployment object, can provide valuable insights.

An architecture diagram is therefore more than a visual aid. It represents the pathways and interactions between components that determine the system’s behavior. Knowing how traffic reaches a pod through a Service, or how the API Server handles a user’s request, can be the difference between a quick fix and prolonged downtime.
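Pods that crash repeatedly end up in the `CrashLoopBackOff` state because the kubelet waits longer before each restart. The Kubernetes Pod lifecycle documentation describes this as an exponential back-off:

- delays of 10s, 20s, 40s and so on;
- capped at five minutes;
- reset after a container runs for ten minutes without problems.

The sketch below only reproduces that delay schedule, with those documented values as defaults. `restart_delays` is a hypothetical helper, and the defaults may change between Kubernetes versions.

```python
from __future__ import annotations


def restart_delays(restarts: int, initial: int = 10, cap: int = 300) -> list[int]:
    """Seconds the kubelet would wait before each of the first `restarts` restarts."""
    delays = []
    delay = initial
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)  # double each time, never beyond the cap
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

Reading the pod’s restart count and events alongside this schedule explains why a crashing pod can sit idle for minutes between attempts.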

Finally, problems in the control plane itself are another area where understanding the architecture is invaluable. If the API Server becomes unresponsive or the Scheduler stops working, cluster management is impaired. Workloads that are already running generally keep running, but changes cannot be applied and new pods cannot be scheduled.

Using the architecture diagram to identify the affected component is a critical first step toward mitigation. For instance, an unresponsive API Server often points to a problem with the API Server itself or with its connectivity to etcd.

Understanding how data flows through the cluster also helps when designing a backup and recovery strategy. Because etcd holds the cluster’s state, it is the central component to back up. With this insight, administrators and operators can address issues efficiently and keep their Kubernetes environments running smoothly.
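The dependency chain in an architecture diagram can be turned into a simple triage rule. Among the components that look unhealthy, start with those whose own dependencies look healthy, because the others may only be failing downstream. The graph below is a deliberately simplified assumption; for instance, it omits the container runtime and the cloud-controller-manager. `likely_root_causes` is a hypothetical helper.

```python
from __future__ import annotations

# Simplified "depends on" edges drawn from the architecture: every component
# reaches cluster state through the API Server, which alone talks to etcd.
DEPENDS_ON: dict[str, list[str]] = {
    "etcd": [],
    "kube-apiserver": ["etcd"],
    "kube-scheduler": ["kube-apiserver"],
    "kube-controller-manager": ["kube-apiserver"],
    "kubelet": ["kube-apiserver"],
    "kube-proxy": ["kube-apiserver"],
}


def likely_root_causes(unhealthy: set[str]) -> list[str]:
    """Unhealthy components none of whose direct dependencies are also unhealthy."""
    return sorted(
        component
        for component in unhealthy
        if not any(dep in unhealthy for dep in DEPENDS_ON.get(component, []))
    )


print(likely_root_causes({"kube-apiserver", "kube-scheduler", "kube-controller-manager"}))
# ['kube-apiserver']
print(likely_root_causes({"etcd", "kube-apiserver", "kube-scheduler"}))
# ['etcd']
```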

Architectural Considerations for Scalable Kubernetes Deployments

When building scalable applications on Kubernetes, several architectural considerations become crucial, and a well-designed architecture diagram helps make them clear.

- **Horizontal scaling.** A fundamental technique: add more instances of your application to handle increased traffic. In Kubernetes, a Deployment (through its ReplicaSet) creates and manages additional pods whenever its replica count is raised. The count can be raised by hand or by an autoscaler such as the HorizontalPodAutoscaler.
- **Load balancing.** The other critical piece: distribute traffic across these instances. Services provide a stable entry point that abstracts away the underlying pods and routes requests among them. Effective load balancing prevents any single instance from becoming overloaded and improves availability. You can rely on the built-in service discovery and load balancing of Services, or use an Ingress controller for more advanced HTTP routing.

A well-planned architecture diagram shows these connections clearly, tracing how requests flow from the user through the load balancer to the individual pods.
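When replicas are adjusted automatically, the HorizontalPodAutoscaler computes the target count with the formula from its documentation:

desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)

It skips scaling when the ratio of current to desired metric value is within a tolerance of 1.0 (0.1 by default). The sketch below implements just that calculation for one metric; `desired_replicas` is a hypothetical name. The real controller also clamps the result to the configured minimum and maximum replicas and applies stabilization windows, which are omitted here.

```python
from __future__ import annotations

import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA formula for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't scale
    return math.ceil(current_replicas * ratio)


# 3 pods averaging 90% CPU against a 60% target: ceil(3 * 1.5) = 5.
print(desired_replicas(3, 90, 60))  # 5
```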

High availability (HA) is also paramount when scaling Kubernetes deployments, which means eliminating single points of failure. That can include:

- running multiple replicas of the control plane components;
- spreading worker nodes across availability zones;
- making sure persistent storage is configured for HA.

For example, an HA setup might run an etcd cluster with an odd number of members (typically three or five) and several API Server instances behind a load balancer.

The architecture must also account for resource management. Kubernetes provides three mechanisms:

- resource requests and limits, set on each container;
- LimitRanges, which apply defaults within a namespace;
- ResourceQuotas, which cap the total resources a namespace can consume.

These mechanisms help prevent resource contention and keep the system responsive during sudden increases in demand. When these considerations are built into your architecture and reflected in your diagram, they provide a solid framework for handling growing demand and keeping applications stable.
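The advice to run an odd number of etcd members follows from quorum arithmetic. etcd needs a majority of its members, floor(n/2) + 1, to agree on writes. A cluster of n members therefore tolerates n minus that majority in failures. The small helper below is hypothetical and for illustration only. It shows that a fourth member adds no fault tolerance over three.

```python
from __future__ import annotations


def etcd_fault_tolerance(members: int) -> tuple[int, int]:
    """Return (quorum size, member failures tolerated) for an etcd cluster of `members`."""
    quorum = members // 2 + 1  # strict majority
    return quorum, members - quorum


for n in (1, 3, 4, 5):
    print(n, etcd_fault_tolerance(n))
# 1 (1, 0)
# 3 (2, 1)
# 4 (3, 1)
# 5 (3, 2)
```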

Network configuration also plays a crucial role in scalability. Understanding how pods, Services and Ingress controllers interact on the network is necessary both for scaling efficiently and for troubleshooting.

The architecture should define network policies that control traffic between pods and namespaces. These policies are enforced by the cluster’s network plugin, so the plugin must support them. It is also important to consider how different network plugins affect pod and Service communication, especially when scaling across subnets or availability zones.

Because networking dictates the connectivity between your microservices, it is a key part of any Kubernetes architecture diagram. Ultimately, a clear architecture diagram makes it easier to plan, scale and monitor complex application deployments on Kubernetes.