Kubernetes Deployments: A Foundation for Reliable Applications

Kubernetes deployments are the cornerstone of managing application state within a Kubernetes cluster. They provide a declarative way to specify the desired state of your application, and the deployment controller automates creating, updating, and scaling your application pods to match it. Instead of managing individual pods by hand, you let the deployment handle these tasks, which is what provides high availability and resilience. A key concept is replicas: the number of identical application instances that should run concurrently, providing redundancy and fault tolerance. Rolling updates replace instances gradually, minimizing downtime and risk. Should problems arise, rollbacks enable quick reversion to a previous revision. Think of it like a sophisticated, automated system for managing a fleet of identical cars (your application instances), ensuring smooth transitions and quick fixes when necessary. Understanding deployments is essential for anyone working with Kubernetes, and the `kubectl get deploy` command is your primary tool for checking on them.
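To make the idea of declared desired state concrete, here is a minimal sketch of a shell helper that prints a Deployment manifest. The function name `render_deployment`, the `app` label, and the example name and image are all illustrative assumptions, not something prescribed by Kubernetes or by this article.

```shell
# Print a minimal Deployment manifest to stdout.
# Arguments (hypothetical examples): deployment name, container image, replica count.
render_deployment() {
  name=$1
  image=$2
  replicas=$3
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: ${replicas}        # desired number of identical pods
  selector:
    matchLabels:
      app: ${name}             # must match the pod template labels below
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
EOF
}
```

With access to a cluster, something like `render_deployment my-app nginx:1.27 3 | kubectl apply -f -` would submit the manifest, after which the deployment controller works to keep three replicas running.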

Deployments simplify complex processes. Imagine managing dozens of individual application instances, each requiring its own attention. Deployments absorb this complexity by automating the scaling, updating, and rolling back of application instances, which makes even very large applications much easier to manage. Consider a scenario where a new version of your application needs to be deployed. Instead of manually shutting down old instances and starting new ones, a deployment handles the transition: it creates new instances, waits for them to become ready (as reported by their readiness probes, if you define any), and then gradually retires old instances. This minimizes disruption to users. The `kubectl get deploy` command offers a clear view into this process, allowing for proactive monitoring and timely intervention if necessary.

The power of deployments extends beyond simple scaling and updates. They also handle failures: if a pod fails or is deleted, the deployment’s underlying ReplicaSet creates a replacement, ensuring continuous operation. Out of the box, a deployment supports two update strategies, `RollingUpdate` (the default) and `Recreate`. More sophisticated release patterns are built on top of deployments rather than into them: canary releases (exposing an update to a small subset of users first) and blue/green releases (running two versions of your application side by side and switching traffic between them) typically combine two deployments with a Service or ingress rule. Mastering deployments is crucial for building robust and reliable applications on Kubernetes, and `kubectl get deploy` provides the essential visibility needed to manage them effectively. Efficient use of this command helps developers respond quickly to problems and head off application outages.

Understanding the `kubectl get deploy` Command

The `kubectl get deploy` command is a fundamental tool for interacting with Kubernetes deployments. It provides a concise overview of the current state of your deployments. The basic syntax is simple: `kubectl get deploy

Running `kubectl get deploy my-app` (where `my-app` is your deployment’s name) returns a one-row table. In current kubectl versions the columns are `NAME`, `READY`, `UP-TO-DATE`, `AVAILABLE`, and `AGE`. `READY` shows ready versus desired replicas (for example `2/3`), `UP-TO-DATE` shows how many replicas match the latest pod template, and `AVAILABLE` shows how many are available to serve traffic. Understanding this output is critical. There is no single status column, and the table never prints “Failed”; instead, a gap between ready and desired replicas that does not close is the signal that something needs attention. The `kubectl get deploy` command provides this feedback quickly, and the underlying reason can then be found with `kubectl describe deploy` or by looking at the pods.

Beyond the basic command, `kubectl get deploy` offers powerful options for customized views. Adding the `-w` (`--watch`) flag streams updates as the deployment’s status changes, which is invaluable during rollouts. Another useful option is `-o wide`, which adds columns for the container names, images, and label selector of each deployment. Other output formats, such as `-o yaml` and `-o json`, expose the full object, including fields like the creation timestamp. Mastering these options makes `kubectl get deploy` considerably more effective for monitoring and troubleshooting, and proactive monitoring helps prevent larger issues.
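For a customized view, kubectl’s `-o custom-columns` output format lets you pick exactly which fields to print. The wrapper below is a sketch under assumptions: the function name `deploy_overview` and the choice of column headers are illustrative, while the field paths are standard Deployment fields.

```shell
# Show desired, ready, updated, and available replica counts side by side.
# Any extra arguments (a deployment name, -n <namespace>, ...) are passed through.
deploy_overview() {
  kubectl get deploy "$@" \
    -o custom-columns='NAME:.metadata.name,DESIRED:.spec.replicas,READY:.status.readyReplicas,UPDATED:.status.updatedReplicas,AVAILABLE:.status.availableReplicas'
}
```

For example, `deploy_overview my-app` prints one row for `my-app`. A status field that is absent from the object (which is typical while its value is zero) may be printed as `<none>`.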

How to Monitor Your Deployments with `kubectl get deploy`

Monitoring the progress of your Kubernetes deployments is crucial for ensuring smooth application updates and identifying potential issues promptly. The `kubectl get deploy` command provides a real-time view into the health and status of your deployments. To track a rollout, simply execute `kubectl get deploy

Identifying potential problems during a rollout starts with `kubectl get deploy`. If a deployment is stuck in a non-ready state, the `READY` column shows it immediately: fewer ready replicas than desired means some pods are failing to start or are failing their readiness checks. Similarly, an `UP-TO-DATE` count that stays below the desired count may signify a stalled rolling update. What the table does not show is the cause. Image pull failures, for example, surface at the pod level: `kubectl get pods` reports statuses such as `ErrImagePull` or `ImagePullBackOff`, and `kubectl describe pod` shows the related events. The same applies to insufficient resources and to mistakes in the deployment configuration. Using `kubectl get deploy` as the first check, and following up at the pod level, helps prevent significant disruptions and simplifies troubleshooting.
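When the deployment table shows missing replicas, the pod list usually names the reason. As a rough sketch, the filter below reads `kubectl get pods` table output and prints every pod whose status is something other than `Running` or `Completed`. The function name `unhealthy_pods` is made up for this example, and it assumes the default single-namespace column layout (`NAME`, `READY`, `STATUS`, ...).

```shell
# Read `kubectl get pods` table output on stdin and print the name and status
# of every pod whose STATUS column is neither Running nor Completed.
unhealthy_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}
```

It could be used as `kubectl get pods -l app=my-app | unhealthy_pods`, where the `app=my-app` label is an assumption about how your pods are labeled. Note that a pod can be `Running` and still not ready, so this catches startup failures rather than every readiness problem.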

Making informed decisions based on `kubectl get deploy` output is essential for effective deployment management. For instance, if you notice a significant delay in the update process, the output provides clues that can guide further diagnostics with additional Kubernetes tools. If only a few pods are failing, you might consider scaling down the deployment temporarily while you address the issues. Conversely, if the entire deployment is failing, you might need to revert to the previous revision using `kubectl rollout undo deployment/my-app` (substituting your deployment’s name). The ability to react promptly to deployment status changes makes `kubectl get deploy` an invaluable tool for monitoring, troubleshooting, and optimizing your Kubernetes deployments.
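The two reactions described above, scaling and rolling back, map onto `kubectl scale` and `kubectl rollout undo`. The thin wrappers below are only a sketch; the function names are hypothetical, and whether to scale or roll back remains a judgment call for the operator.

```shell
# Set the replica count of a deployment, e.g. to reduce load while investigating.
scale_deploy() {
  kubectl scale "deployment/$1" --replicas="$2"
}

# Roll a deployment back to the previous revision, or to a specific one
# when a revision number is given as the second argument.
rollback_deploy() {
  name=$1
  revision=${2:-}
  if [ -n "$revision" ]; then
    kubectl rollout undo "deployment/$name" --to-revision="$revision"
  else
    kubectl rollout undo "deployment/$name"
  fi
}
```

For example, `rollback_deploy my-app` reverts to the previous revision, while `rollback_deploy my-app 2` targets revision 2.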

Interpreting Deployment Status: Understanding the Output of `kubectl get deploy`

The output of `kubectl get deploy` provides a concise summary of your deployment’s health and status. Understanding this information is crucial for effective Kubernetes management. Each column represents a key aspect of the deployment’s lifecycle. The `NAME` column displays the name assigned to the deployment. `READY` shows how many replicas are ready out of how many are desired, in the form `ready/desired` (for example `3/3`). `UP-TO-DATE` shows how many replicas have been updated to match the current pod template, including the latest image. An `UP-TO-DATE` count below the desired count usually means an update is in progress or has encountered issues.

The `AVAILABLE` column shows the number of replicas available to serve requests. A replica counts as available once it has been ready for at least `minReadySeconds` (0 by default), so this number can briefly trail the ready count when that setting is non-zero. `AGE` indicates how long ago the deployment was created. Older kubectl versions printed separate `DESIRED` and `CURRENT` columns; current versions fold the desired count into `READY` as the number after the slash. Discrepancies between the desired count and the other metrics (ready, up-to-date, or available) highlight potential problems that require attention. Observing these columns provides critical insights into the deployment’s progress and performance.

For instance, if `READY` shows `1/3` and `AVAILABLE` shows 1, only one of the three desired replicas is serving, and the deployment is not functioning optimally. Similarly, if `UP-TO-DATE` is lower than the desired count, a rolling update might be incomplete or stalled. By carefully analyzing the output of `kubectl get deploy`, you can monitor your deployments and address issues proactively. Mastering the interpretation of its output is a critical skill for any Kubernetes administrator.
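The comparisons described here can be automated. The following sketch reads the table printed by `kubectl get deploy` and reports deployments whose ready or up-to-date count is below the desired count. It assumes the column layout of current kubectl versions (`NAME`, `READY` as `ready/desired`, `UP-TO-DATE`, `AVAILABLE`, `AGE`) for a single namespace; the function name `lagging_deploys` is made up for illustration.

```shell
# Read `kubectl get deploy` table output on stdin and report deployments
# whose ready or up-to-date replica count is below the desired count.
lagging_deploys() {
  awk 'NR > 1 {
    split($2, r, "/")              # READY column looks like "1/3"
    ready = r[1] + 0; desired = r[2] + 0; updated = $3 + 0
    if (ready < desired)   print $1 ": " ready " of " desired " replicas ready"
    if (updated < desired) print $1 ": " updated " of " desired " replicas up to date"
  }'
}
```

Used as `kubectl get deploy | lagging_deploys`, it prints nothing when every deployment is fully ready and up to date.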

Troubleshooting Common Deployment Issues using `kubectl get deploy`

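Insufficient resources frequently hinder deployments. When the cluster cannot satisfy a pod’s CPU or memory requests, the pod stays in `Pending`, and `kubectl get deploy` reflects this indirectly: the `READY` column shows fewer ready replicas than desired. To confirm, run `kubectl describe pod` on a pending pod and look for `FailedScheduling` events; `kubectl logs` will not help here, because a pod that was never scheduled has no logs. The fix is to lower the resource requests in the deployment YAML to what the application actually needs, or to add node capacity. If instead containers start and are then killed for exceeding their memory limit (`OOMKilled`), raise the limit. Remember to use `kubectl apply -f deployment.yaml` to apply changes.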
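Two queries help confirm a scheduling problem: listing pods stuck in `Pending`, and listing `FailedScheduling` events. The wrappers below are a sketch; the function names are hypothetical, and the label selector passed to `pending_pods` is an assumption about how your pods are labeled.

```shell
# List pods matching a label selector that are still in the Pending phase.
pending_pods() {
  kubectl get pods -l "$1" --field-selector=status.phase=Pending
}

# List events in the current namespace recorded when the scheduler
# could not place a pod.
scheduling_failures() {
  kubectl get events --field-selector=reason=FailedScheduling
}
```

For example, `pending_pods app=my-app` followed by `scheduling_failures` typically shows which pods are waiting and the scheduler’s message explaining why.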

Image pull failures are another common problem. If a container image is unavailable, misnamed, or sits in a registry the cluster cannot authenticate to, pods will fail to start. `kubectl get deploy` shows this only as a stalled rollout, with `READY` stuck below the desired count; the pods themselves report `ErrImagePull` or `ImagePullBackOff` in their `STATUS` column. Verify the image name, tag, and registry accessibility. Correct the image specification in the deployment YAML and redeploy. The command `kubectl get pods -n

Deployment failures can stem from various issues within the application itself. Errors in application code, configuration problems, or issues with dependencies can cause pods to crash, often visible as `CrashLoopBackOff` in the pod status. `kubectl get deploy` reflects such failures as replicas that never become ready. Check the pod logs (`kubectl logs

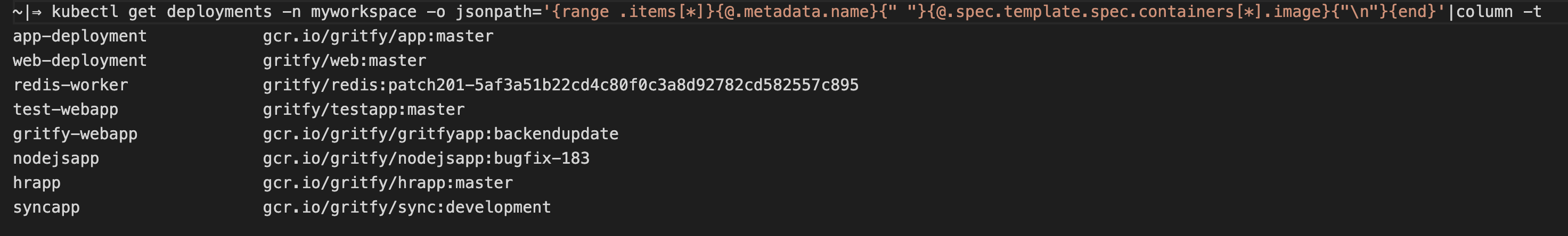

Advanced `kubectl get deploy` Techniques: Filtering and Sorting

Managing numerous Kubernetes deployments can become complex. Fortunately, `kubectl get deploy` offers powerful filtering and sorting capabilities to streamline this process. These advanced techniques significantly improve efficiency when working with many deployments. Users can quickly isolate specific deployments, simplifying monitoring and troubleshooting.

Filtering deployments by name is straightforward, with one caveat: `kubectl get deploy my-app` returns only the deployment named exactly `my-app`; it does not match substrings or wildcards. You can pass several names at once, and for partial matches you can pipe the output through a tool such as `grep`. Label selectors provide a more sophisticated filtering mechanism, filtering on the labels attached to deployments. For instance, to view deployments labeled `environment: production`, use: `kubectl get deploy -l environment=production`. This is particularly useful in large environments, where it keeps attention on a specific subset of deployments.
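Because `kubectl get deploy <name>` expects an exact name, a substring match has to be done on the client side. One possible sketch, using the `-o name` output format and standard text tools (the function name `deploys_matching` is made up for this example):

```shell
# Print the names of deployments in the current namespace that contain
# the given substring.
deploys_matching() {
  kubectl get deploy -o name |   # prints lines such as deployment.apps/my-app
    sed 's|.*/||' |              # keep only the part after the slash
    grep -F -- "$1"              # fixed-string (non-regex) substring match
}
```

For example, `deploys_matching my-app` would list `my-app-web` and `my-app-api` but not `billing`.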

Sorting the output of `kubectl get deploy` enhances readability and analysis. The `--sort-by` flag takes a JSONPath expression and sorts by fields such as creation timestamp or name. For example, `kubectl get deploy --sort-by=.metadata.creationTimestamp` lists deployments in chronological order, oldest first. Combining filtering and sorting further refines the output. Imagine needing to find all deployments in the “staging” environment created after a specific date. `--sort-by` does not filter by date, but combining `-l environment=staging` with `--sort-by=.metadata.creationTimestamp` puts the newest deployments at the bottom of the list, where they are easy to pick out. This refined approach makes complex deployment environments significantly more manageable and reduces troubleshooting time.
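The staging scenario can be sketched in two small pieces: a wrapper that combines a label selector with `--sort-by`, and a filter that keeps only deployments created on or after a given time. The function names are hypothetical. The date filter relies on the fact that Kubernetes creation timestamps are UTC strings in the form `2024-03-01T10:00:00Z`, which sort correctly as plain text.

```shell
# List deployments matching a label selector, oldest first.
deploys_by_age() {
  kubectl get deploy -l "$1" --sort-by=.metadata.creationTimestamp
}

# Read "name<TAB>creationTimestamp" lines on stdin and print the names
# created at or after the given UTC timestamp (string comparison).
created_since() {
  awk -v since="$1" '$2 >= since { print $1 }'
}
```

The input for `created_since` can be produced with a JSONPath template, for example: `kubectl get deploy -l environment=staging -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.creationTimestamp}{"\n"}{end}' | created_since 2024-01-01T00:00:00Z`. The label and the date here are placeholders.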

Integrating `kubectl get deploy` into Your Workflow

Seamlessly integrating `kubectl get deploy` into daily workflows significantly enhances Kubernetes management. Developers should use it routinely during development and testing phases. Regularly checking deployment status using `kubectl get deploy` ensures applications launch and operate as expected. Early identification of issues prevents larger problems later. This proactive approach is crucial for maintaining application stability and minimizing downtime.

Incorporate `kubectl get deploy` into CI/CD pipelines for automated monitoring by running it in the pipeline’s post-deployment phase after each release. Note that the command exits successfully even when replicas are not ready, so the pipeline has to inspect the output (for example the ready and desired replica counts) rather than rely on the exit code. Automated checks of this kind provide immediate feedback on deployment success, and automated alerts can trigger if problems occur, allowing for rapid responses and reduced MTTR (Mean Time To Repair). This is particularly valuable in agile development environments that demand rapid, reliable deployments.
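A post-deployment check in a pipeline might look like the sketch below. It reads three standard Deployment fields through `-o jsonpath` and succeeds only when every desired replica is both updated and ready. The function name `deploy_is_healthy` is an assumption, and a real pipeline would usually call it in a retry loop with a timeout.

```shell
# Exit status 0 only when all desired replicas are updated and ready.
deploy_is_healthy() {
  name=$1
  desired=$(kubectl get deploy "$name" -o jsonpath='{.spec.replicas}') || return 1
  updated=$(kubectl get deploy "$name" -o jsonpath='{.status.updatedReplicas}')
  ready=$(kubectl get deploy "$name" -o jsonpath='{.status.readyReplicas}')
  # Status fields are omitted while their value is zero, so default them to 0.
  [ "${updated:-0}" -eq "$desired" ] && [ "${ready:-0}" -eq "$desired" ]
}
```

A pipeline step could then run `deploy_is_healthy my-app || exit 1`. kubectl also ships a built-in alternative, `kubectl rollout status deployment/my-app --timeout=120s`, which blocks until the rollout finishes or the timeout expires.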

Beyond CI/CD, `kubectl get deploy` proves invaluable for debugging and troubleshooting. When application problems arise, this command offers a quick way to check the deployment’s health. Its output reveals the symptoms, such as replicas that never become ready, while `kubectl describe` and `kubectl logs` reveal causes such as insufficient resources or failed container starts. Used together, these commands greatly accelerate problem resolution and minimize application disruptions.

Beyond `kubectl get deploy`: Exploring Related Commands

While `kubectl get deploy` provides a crucial overview of your deployments, several other commands offer deeper insights and functionalities. `kubectl describe deploy`, for instance, delivers a detailed report on a specific deployment: its pod template (including resource requests and limits), update strategy, conditions, and recent events. This command is invaluable for troubleshooting complex issues that might not be immediately apparent from the output of `kubectl get deploy`, and the detail it provides complements that summary.

For tracking the history of a deployment, `kubectl rollout history deploy` proves indispensable. This command lists the recorded revisions of a deployment, and adding `--revision=N` shows the pod template of a specific revision, allowing you to examine past configurations. This historical perspective is crucial for pinpointing the source of problems and for choosing a rollback target. Combined with the real-time monitoring offered by `kubectl get deploy`, you gain a complete picture of your deployment’s lifecycle.

Finally, `kubectl logs` allows direct access to the logs of the containers within your deployments, which is essential for debugging applications. By correlating log entries with the status reported by `kubectl get deploy`, you can pinpoint errors and resolve issues rapidly. Mastering these commands alongside `kubectl get deploy` builds a robust Kubernetes management skill set and gives you a powerful troubleshooting and monitoring toolkit.
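As a closing sketch, the commands discussed in this section can be chained into a single first-response helper. The function name `diagnose_deploy` and the choice of 50 log lines are arbitrary; each line is an ordinary kubectl invocation.

```shell
# Collect the usual first-look information for one deployment.
diagnose_deploy() {
  name=$1
  kubectl get deploy "$name" -o wide            # summary plus images and selector
  kubectl describe deploy "$name"               # conditions and recent events
  kubectl rollout history "deployment/$name"    # recorded revisions
  kubectl logs "deployment/$name" --tail=50     # last log lines from one of its pods
}
```

Running `diagnose_deploy my-app` prints the four reports in order, moving from the summary view toward the container-level detail.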