Understanding Kubeadm Multiple Masters: Key Concepts and Benefits

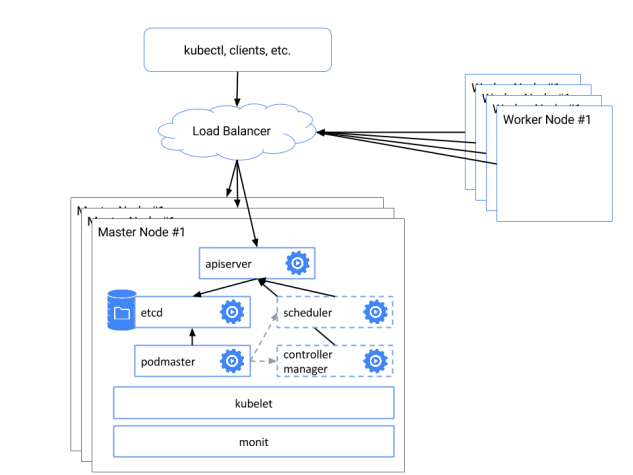

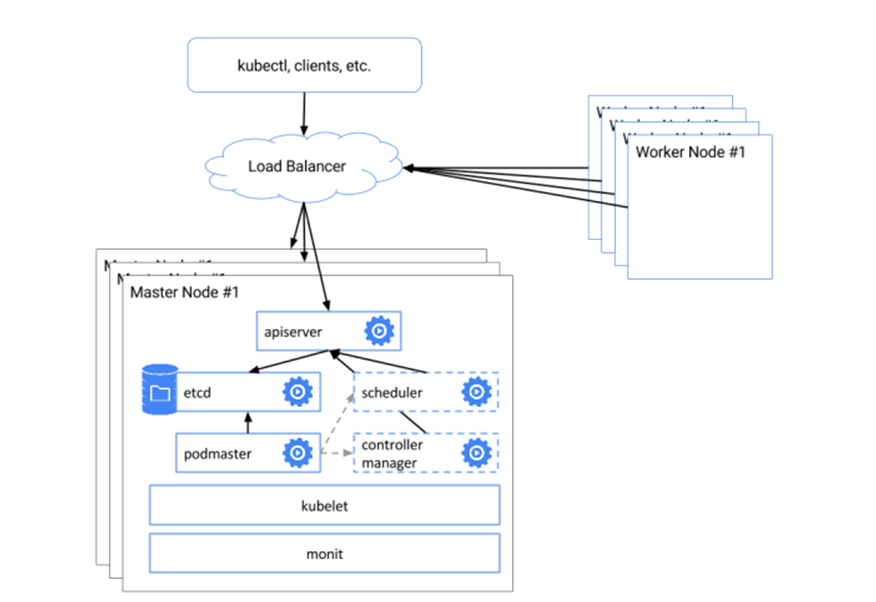

Kubeadm multiple masters refers to a Kubernetes cluster, bootstrapped with kubeadm, that runs several master (control-plane) nodes instead of one, which improves high availability and fault tolerance. With multiple masters, the cluster keeps serving its API and scheduling workloads even in the event of a master failure. This guide covers the deployment and management of Kubeadm multiple masters, using best practices and real-world examples.

Kubernetes clusters typically consist of a master node and one or more worker nodes. The master node is responsible for managing the cluster, while worker nodes execute the workloads. In a single-master setup, the master node is a single point of failure: if it fails, the cluster can no longer be managed, no new pods are scheduled, and failed pods are not replaced, even though workloads that are already running generally continue.

Kubeadm multiple masters addresses this issue by introducing multiple master nodes, ensuring that the cluster can continue to operate even if one master fails. This setup enhances the cluster’s overall reliability and availability, making it an ideal choice for production environments.

In this guide, we’ll explore the process of deploying and managing Kubeadm multiple masters, covering essential topics such as system requirements, installation, configuration, troubleshooting, monitoring, scaling, security, and disaster recovery. By the end of this article, you’ll have a solid understanding of how to deploy and manage Kubeadm multiple masters effectively.

Prerequisites: System Requirements and Dependencies

Before deploying Kubeadm multiple masters, ensure that you meet the following system requirements and dependencies:

Hardware Requirements

- At least three dedicated servers or virtual machines with a minimum of 2 CPUs and 4GB of RAM each.

- A load balancer to distribute API server traffic (TCP port 6443 by default) among master nodes.
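The three-server minimum follows from how etcd reaches agreement. As a small sketch, assuming the default kubeadm layout in which every master also runs an etcd member, the functions below compute the quorum size and the number of master failures a cluster can survive. The function names are illustrative only.

```shell
#!/bin/sh
# Quorum size for an etcd cluster with N members: floor(N / 2) + 1.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while the remaining ones still form a quorum.
fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Compare cluster sizes from 1 to 5 masters.
for n in 1 2 3 4 5; do
  echo "masters=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

Two masters tolerate no failures and four tolerate only one, the same as three, which is why odd member counts such as three or five are the usual choice.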

Software Requirements

- A 64-bit Linux distribution, such as Ubuntu 18.04 or CentOS 7.

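- A container runtime: Docker version 19.03 or later, or containerd. Kubernetes 1.24 and later removed the built-in Docker integration (dockershim), so Docker Engine on those versions also needs the cri-dockerd adapter.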

- Kubernetes version 1.21 or later.

Networking Requirements

- A functional network setup that allows communication between master and worker nodes.

- A pod network, such as Flannel or Calico, for communication between pods.

To ensure a successful deployment, verify that your environment meets these requirements. If any component falls short of the recommended specifications, consider upgrading or scaling out to meet the requirements.

How to Install and Configure Kubeadm Multiple Masters: Step-by-Step Instructions

In this section, we’ll walk you through the process of installing and configuring Kubeadm multiple masters. Follow these steps to set up your Kubernetes cluster:

Step 1: Install Docker and Kubeadm

Begin by installing Docker and Kubeadm on each of your master nodes. For Ubuntu 18.04, use the following commands. The Kubernetes repository lines below use the legacy apt.kubernetes.io repository, which has since been replaced by pkgs.k8s.io, so on a current system take the repository and key lines from the official kubeadm installation guide:

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

sudo apt-get update

sudo apt-get install -y docker-ce

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee -a /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

Step 2: Initialize the
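As a minimal sketch of initializing the first master, assuming a load balancer already forwards TCP port 6443 to the master nodes, kubeadm init is typically given the shared endpoint through --control-plane-endpoint, together with --upload-certs so that later masters can fetch the control-plane certificates. The address k8s-api.example.internal is a hypothetical placeholder, and the script only prints the command so that it can be reviewed before it is run.

```shell
#!/bin/sh
# Assumption: hypothetical DNS name and port of the load balancer that fronts
# the API servers. Replace it with your own endpoint.
CONTROL_PLANE_ENDPOINT="${CONTROL_PLANE_ENDPOINT:-k8s-api.example.internal:6443}"

# Print the init command for the first master node.
# --control-plane-endpoint sets the shared address that all nodes use to reach the API.
# --upload-certs stores the control-plane certificates in a cluster Secret
# so that additional masters can download them when they join.
init_command() {
  echo "sudo kubeadm init --control-plane-endpoint $CONTROL_PLANE_ENDPOINT --upload-certs"
}

init_command
```

When kubeadm init completes, it prints two join commands: one containing --control-plane and --certificate-key for the remaining masters, and a shorter one for worker nodes.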

Troubleshooting Common Issues in Kubeadm Multiple Masters: Tips and Best Practices

Deploying and managing Kubeadm multiple masters can sometimes be challenging. Here are some common issues you may encounter and best practices for resolving them:

Issue 1: Network Connectivity Problems

Ensure that your pod network is correctly configured and that all nodes can communicate with each other. Use the following command to check the status of your pod network pods and the other system pods:

kubectl get pods --all-namespaces

If you encounter issues, double-check your pod network configuration and ensure that all nodes have the necessary CNI plugins installed.
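On a busy cluster the full pod list is long, so it can help to filter it down to the pods that need attention. The sketch below assumes the default column order of kubectl get pods --all-namespaces --no-headers (NAMESPACE, NAME, READY, STATUS, ...). The function name and the sample rows are made up for illustration.

```shell
#!/bin/sh
# Read `kubectl get pods --all-namespaces --no-headers` output on stdin and
# print "namespace/name status" for pods that are neither Running nor Completed.
# The status is the fourth column in the default output.
unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Sample input (invented rows) standing in for real kubectl output.
unhealthy_pods <<'EOF'
kube-system   coredns-558bd4d5db-abcde   1/1   Running            0             5d
kube-system   calico-node-x7k2p          0/1   CrashLoopBackOff   12 (2m ago)   5d
EOF
```

Against a live cluster the same filter would be used as kubectl get pods --all-namespaces --no-headers | unhealthy_pods.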

Issue 2: Certificate Management

Kubernetes clusters use certificates to secure communication between nodes. If you encounter certificate-related issues, you can use the following command to list the cluster's certificate signing requests (CSRs) and their status:

kubectl get csr

If you see any pending CSRs, approve each one by name using the following command:

kubectl certificate approve <csr-name>

Issue 3: Resource Allocation

Ensure that your master nodes have sufficient resources to handle the workload. Use the following command to view each node's capacity, conditions, and the CPU and memory already requested by its pods:

kubectl describe nodes

If you see resource bottlenecks, consider adding more resources to your master nodes or scaling out your cluster.

Best Practice 1: Regularly Update Kubernetes

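Regularly update your Kubernetes cluster to a current version to benefit from new features and security patches. On a kubeadm cluster, upgrading the operating system packages alone does not upgrade the control plane; the cluster is upgraded one minor version at a time with kubeadm upgrade. After installing the target version of the kubeadm package on the first master node, use the following commands:

sudo kubeadm upgrade plan

sudo kubeadm upgrade apply <target-version>

On each remaining master node, run sudo kubeadm upgrade node instead, then upgrade the kubelet and kubectl packages on every node.

Best Practice 2: Implement Role-Based Access Control (RBAC)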

Implement RBAC to control access to your Kubernetes cluster and its resources. Use the following command to bind a cluster role to a user within a namespace:

kubectl create rolebinding <binding-name> --clusterrole=<cluster-role-name> --user=<user-name> --namespace=<namespace>

Best Practice 3: Use Secrets Management

Use secrets management tools, such as HashiCorp Vault or AWS Secrets Manager, to securely store and manage sensitive information, such as passwords and API keys.

Monitoring and Scaling Kubeadm Multiple Masters: Tools and Techniques

Monitoring and scaling are critical aspects of managing Kubeadm multiple masters. In this section, we’ll explore various monitoring and scaling tools and techniques that can help you maintain optimal performance and availability.

Kubernetes Dashboard

Kubernetes Dashboard is a web-based UI for managing Kubernetes clusters. It allows you to monitor cluster resources, deploy applications, and manage workloads. To deploy Kubernetes Dashboard, use the following command:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

Once deployed, you can access Kubernetes Dashboard by starting a local proxy to the API server with the following command:

kubectl proxy

Then navigate to http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/ in your web browser.

Prometheus

Prometheus is an open-source monitoring and alerting toolkit. It allows you to monitor cluster metrics, such as CPU usage, memory usage, and network traffic. One way to deploy it is the kube-prometheus project, whose manifests are applied from a clone of its repository:

git clone https://github.com/prometheus-operator/kube-prometheus.git

cd kube-prometheus

kubectl apply --server-side -f manifests/setup

kubectl apply -f manifests/

Once deployed, you can access Prometheus using the following command:

kubectl --namespace monitoring port-forward svc/prometheus-k8s 9090

Then navigate to http://localhost:9090/ in your web browser.

Horizontal Pod Autoscaling

Horizontal Pod Autoscaling is a Kubernetes feature that automatically scales the number of pod replicas in a workload such as a Deployment, based on CPU utilization or other metrics. It scales application pods rather than master nodes, and it needs a metrics source such as metrics-server running in the cluster. To enable Horizontal Pod Autoscaling for a deployment, use the following command:

kubectl autoscale deployment <deployment-name> --cpu-percent=50 --min=1 --max=10

This command will scale the number of replicas of the specified deployment between 1 and 10, targeting an average CPU utilization of 50%.
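To make the 50% target concrete, the sketch below reproduces the core scaling rule the autoscaler is documented to use: desired replicas = ceil(current replicas × current utilization / target utilization), clamped to the configured minimum and maximum. It is a simplification that ignores the autoscaler's tolerance band and stabilization window, and the function name is illustrative.

```shell
#!/bin/sh
# Arguments: current replicas, current average CPU %, target CPU %, min, max.
desired_replicas() {
  current="$1"; cpu="$2"; target="$3"; min="$4"; max="$5"
  # Integer ceiling of (current * cpu / target).
  desired=$(( (current * cpu + target - 1) / target ))
  # Clamp the result to the [min, max] range given to kubectl autoscale.
  if [ "$desired" -lt "$min" ]; then desired="$min"; fi
  if [ "$desired" -gt "$max" ]; then desired="$max"; fi
  echo "$desired"
}

# 4 replicas averaging 90% CPU against a 50% target: ceil(4 * 90 / 50) = 8.
desired_replicas 4 90 50 1 10
```

With the same settings, 8 replicas at 100% CPU would call for 16 replicas, which the --max=10 limit caps at 10.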

Best Practices for Monitoring and Scaling Kubeadm Multiple Masters

- Use monitoring tools to track cluster performance and identify issues before they become critical.

- Implement autoscaling to automatically adjust the number of replicas based on workload demands.

- Regularly review and optimize resource allocations to ensure efficient use of cluster resources.

- Use load balancing to distribute traffic evenly across multiple master nodes.

- Implement backup and restore strategies to ensure data availability in case of failures or disasters.

Security Best Practices for Kubeadm Multiple Masters: Recommendations and Guidelines

Security is a critical aspect of managing Kubeadm multiple masters. In this section, we’ll discuss security best practices, including network policies, RBAC, and secrets management. We’ll also provide recommendations and guidelines for securing Kubernetes clusters and their workloads, and explain the importance of regular security audits and updates.

Network Policies

Network policies are a way to control traffic between pods in a Kubernetes cluster. By default, all pods can communicate with each other. However, you can use network policies to restrict traffic between pods based on labels and selectors. To create a network policy, save a manifest such as the following to a file and apply it with kubectl apply -f:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all
    spec:
      podSelector: {}
      policyTypes:
      - Ingress
      - Egress

This manifest creates a network policy that denies all ingress and egress traffic for every pod in the namespace where it is applied. Network policies are only enforced if the pod network plugin supports them: Calico does, while Flannel on its own does not.

Role-Based Access Control (RBAC)

Role-Based Access Control (RBAC) is a way to control access to Kubernetes resources based on roles and permissions. Kubeadm enables RBAC by default, so a user has no permissions until a role is bound to them; only the administrator kubeconfig that kubeadm generates has full access. You can use RBAC to grant access to specific resources based on user roles. To create an RBAC role, save a manifest such as the following to a file and apply it with kubectl apply -f:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: pod-reader
    rules:
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["get", "watch", "list"]

This manifest creates a role that allows get, watch, and list operations on pods in the namespace where it is applied. The role takes effect for a user only once a RoleBinding refers to it.
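A Role grants nothing until it is bound to a subject. As a hedged sketch, the function below prints a RoleBinding manifest that attaches the pod-reader role to one user. The user name jane, the binding name, and the default namespace are hypothetical example values, and the Role is assumed to exist in the same namespace.

```shell
#!/bin/sh
# Print a RoleBinding manifest granting the pod-reader Role to a single user.
# Arguments: user name, namespace.
pod_reader_binding() {
  user="$1"
  namespace="$2"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods-${user}
  namespace: ${namespace}
subjects:
- kind: User
  name: ${user}
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
EOF
}

# Hypothetical example values; review the manifest before applying it.
pod_reader_binding jane default
```

The output can be piped to kubectl apply -f -, and kubectl auth can-i list pods --as jane --namespace default then reports whether the binding took effect.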

Secrets Management

Secrets management is the process of securely storing and managing sensitive information, such as passwords, API keys, and certificates. Kubernetes provides the Secret resource for this purpose; ConfigMaps are intended for non-sensitive configuration and should not hold credentials. To create a secret, use the following command:

kubectl create secret generic my-secret --from-literal=password=my-password

This command creates a secret named “my-secret” with a key named “password” and a value of “my-password”. Secret values are stored base64-encoded, not encrypted, unless encryption at rest is configured for the API server.
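The encoding Kubernetes applies to secret values is base64, which anyone can reverse without a key. The short sketch below, using illustrative function names, shows the round trip for the example password.

```shell
#!/bin/sh
# Encode a value the way it appears under .data in a Secret object.
encode_secret_value() {
  printf '%s' "$1" | base64
}

# Decode it again. No key is required, which is the point of the example.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

encoded=$(encode_secret_value 'my-password')
echo "stored:  $encoded"
echo "decoded: $(decode_secret_value "$encoded")"
```

The same decoding works on a live secret, for example kubectl get secret my-secret -o jsonpath='{.data.password}' | base64 --decode, so read access to secrets should be restricted with RBAC.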

Best Practices for Securing Kubeadm Multiple Masters

- Implement network policies to control traffic between pods.

- Use RBAC to restrict access to Kubernetes resources based on user roles.

- Use secrets management to securely store and manage sensitive information.

- Regularly review and update security policies and configurations.

- Implement regular security audits and updates to ensure the security of your Kubernetes clusters and workloads.

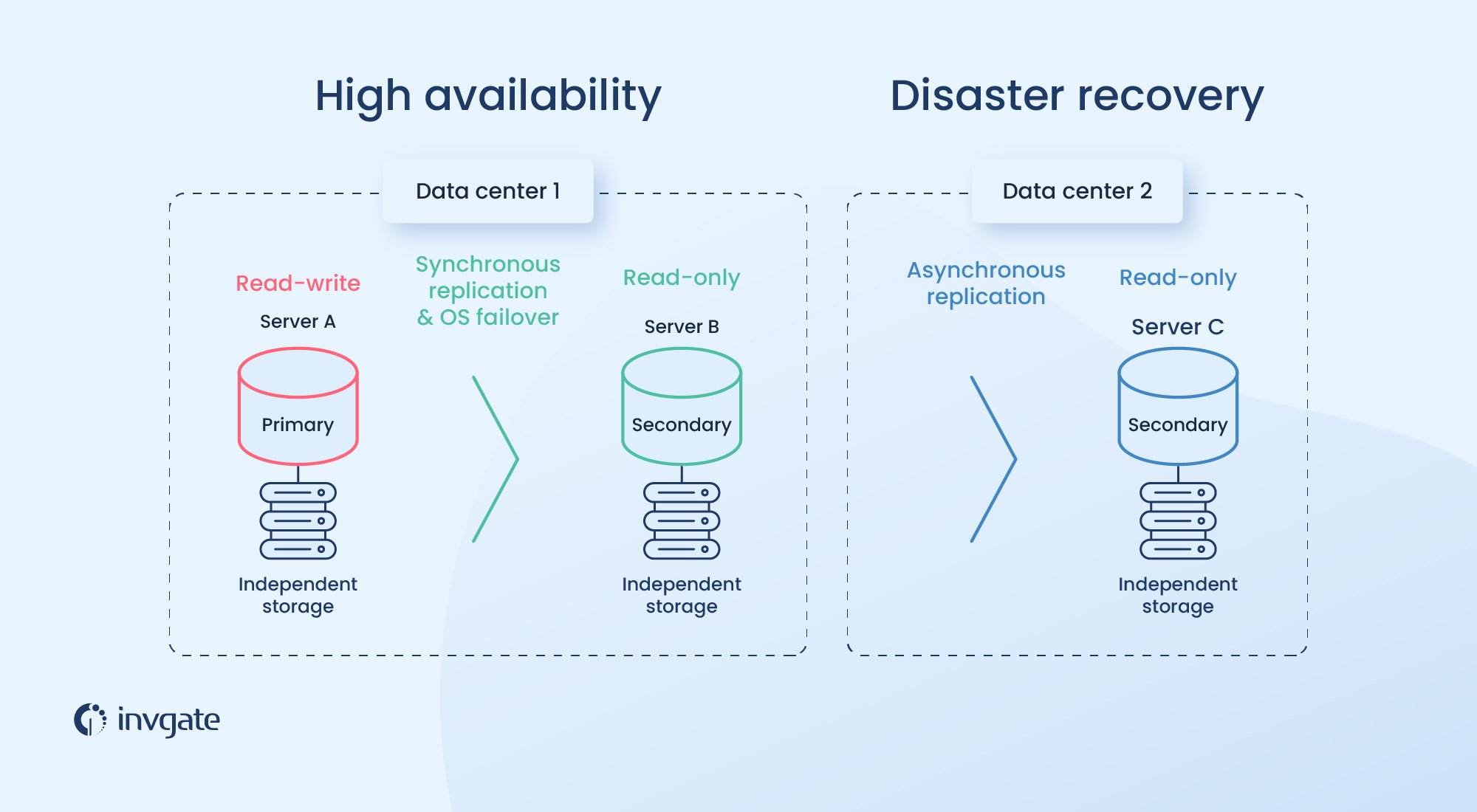

Disaster Recovery and High Availability for Kubeadm Multiple Masters: Strategies and Techniques

Disaster recovery and high availability are critical aspects of managing Kubeadm multiple masters. In this section, we’ll explore disaster recovery and high availability strategies, such as etcd snapshots, backup and restore, and load balancing. We’ll also provide examples and best practices for ensuring business continuity and minimizing downtime in case of failures or disasters.

Etcd Snapshots

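Etcd is a distributed key-value store that stores the Kubernetes cluster state. Taking regular etcd snapshots is essential for disaster recovery. To take an etcd snapshot, use the following command:

ETCDCTL_API=3 etcdctl snapshot save snapshot.db

This command saves the current etcd state to a file named “snapshot.db”. On a kubeadm cluster, etcd requires TLS client certificates, so etcdctl also needs the --endpoints, --cacert, --cert, and --key flags, pointing at the local etcd member and the certificate files that kubeadm places under /etc/kubernetes/pki/etcd by default. You can then use this snapshot to restore the etcd cluster state in case of a failure.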
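Regular snapshots accumulate quickly, so they are usually timestamped and pruned. The following is a minimal sketch of that housekeeping. The function names, the etcd- file prefix, and the /var/backups/etcd directory are assumptions for illustration, not kubeadm or etcd conventions.

```shell
#!/bin/sh
# Build a timestamped snapshot path inside a backup directory.
# UTC timestamps in this format sort chronologically as plain text.
snapshot_path() {
  dir="$1"
  printf '%s/etcd-%s.db\n' "$dir" "$(date -u +%Y%m%dT%H%M%SZ)"
}

# Keep only the newest $2 snapshots in directory $1 and delete the rest.
prune_snapshots() {
  dir="$1"
  keep="$2"
  ls -1 "$dir"/etcd-*.db 2>/dev/null | sort -r | tail -n "+$((keep + 1))" |
    while IFS= read -r old; do
      rm -f -- "$old"
    done
}

# Example: show the file name the next snapshot would get.
snapshot_path /var/backups/etcd
```

A scheduled job could pass "$(snapshot_path /var/backups/etcd)" as the file argument to etcdctl snapshot save and then call prune_snapshots /var/backups/etcd 7 to keep the last seven snapshots.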

Backup and Restore

Backup and restore are critical for disaster recovery. Kubernetes itself does not include a cluster backup tool, so backups are usually handled by a separate tool such as Velero. To back up a Kubernetes cluster using Velero, use the following command:

velero backup create my-backup

This command creates a backup of the cluster's Kubernetes resources and, where configured, its persistent volumes. It complements etcd snapshots rather than replacing them.

Load Balancing

Load balancing is a technique for distributing traffic across multiple nodes to ensure high availability. For application traffic, Kubernetes provides the Service and Ingress resources; this is separate from the load balancer that sits in front of the master nodes' API servers. To create a Kubernetes Service, save a manifest such as the following to a file and apply it with kubectl apply -f:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app: MyApp
      ports:
      - name: http
        port: 80
        targetPort: 9376
      type: LoadBalancer

This manifest creates a Kubernetes Service that listens on port 80 and load balances traffic across pods with the label “app: MyApp” on their port 9376. The LoadBalancer type relies on a cloud provider or an add-on such as MetalLB to provision the external address.

Best Practices for Disaster Recovery and High Availability

- Take regular etcd snapshots to ensure data availability in case of failures or disasters.

- Implement backup and restore strategies to ensure the availability of cluster data.

- Use load balancing to distribute traffic across multiple nodes and ensure high availability.

- Regularly test disaster recovery and high availability strategies to ensure they work as expected.

- Implement regular security audits and updates to ensure the security of your Kubernetes clusters and workloads.

Real-World Use Cases and Success Stories: Case Studies and Examples

Kubeadm multiple masters have been successfully deployed and managed in various industries and domains, providing enhanced scalability, high availability, and fault tolerance. In this section, we’ll share real-world use cases and success stories of Kubeadm multiple masters, highlighting the benefits and challenges of deploying and managing them in production environments. We’ll also provide insights and lessons learned from these experiences.

Use Case 1: E-commerce Platform

An e-commerce platform deployed Kubeadm multiple masters to ensure high availability and fault tolerance for their mission-critical workloads. By using Kubeadm, they were able to automate the deployment and management of their Kubernetes clusters, reducing the time and effort required to manage their infrastructure. They also implemented etcd snapshots and backup and restore strategies to ensure business continuity in case of failures or disasters.

Use Case 2: Media Streaming Service

A media streaming service deployed Kubeadm multiple masters to handle the scalability and high availability requirements of their platform. By using Kubeadm, they were able to easily add and remove nodes from their clusters, ensuring that they had the necessary resources to handle peak traffic. They also implemented load balancing and network policies to ensure high availability and security for their workloads.

Use Case 3: Financial Services Company

A financial services company deployed Kubeadm multiple masters to ensure the security and compliance of their Kubernetes clusters. By using Kubeadm, they were able to implement RBAC and network policies to restrict access to their clusters and workloads. They also implemented secrets management to securely store and manage sensitive information, such as passwords and API keys. Regular security audits and updates were also performed to ensure the security of their clusters and workloads.

Best Practices for Deploying and Managing Kubeadm Multiple Masters

- Automate the deployment and management of Kubernetes clusters using Kubeadm.

- Implement etcd snapshots and backup and restore strategies to ensure business continuity in case of failures or disasters.

- Use load balancing and network policies to ensure high availability and security for workloads.

- Implement RBAC and secrets management to ensure the security and compliance of Kubernetes clusters and workloads.

- Regularly perform security audits and updates to ensure the security of Kubernetes clusters and workloads.