What Is Real-Time Data Streaming and Why Does It Matter?

Real-time data streaming is the continuous capture and processing of data as it is generated, often at high volume and velocity. Instead of collecting events and analyzing them later in batches, a streaming system processes each event within seconds of its arrival. That speed matters in modern applications: reacting to information while it is still fresh lets businesses make informed decisions and act on them promptly, turning raw data into actionable insights almost immediately.

Consider financial services, where real-time streaming underpins fraud detection. Algorithms analyze transaction patterns as they occur and flag suspicious activity immediately, which prevents losses and protects customers. In the Internet of Things (IoT), sensor data enables predictive maintenance: monitoring equipment performance in real time surfaces early signs of failure, preventing costly downtime and improving operational efficiency. E-commerce platforms use real-time behavioral data to personalize recommendations, which can lift sales and improve the customer experience. Amazon Kinesis Data Streams is one of the services commonly used to ingest, process, and analyze the high-volume data streams behind applications like these.

Adoption of real-time data streaming is growing across industries as organizations look to act on immediate insights. Kinesis Data Streams is a managed, scalable service built to handle large data streams. Use cases range from monitoring social media trends to optimizing supply chain logistics, and the goal is the same in each: a more current view of operations and customers that supports data-driven decisions. For organizations that compete on speed, real-time processing has become a necessity rather than a luxury, and Kinesis Data Streams is one way to provide it on AWS.

Demystifying Amazon Kinesis Data Streams: Core Concepts

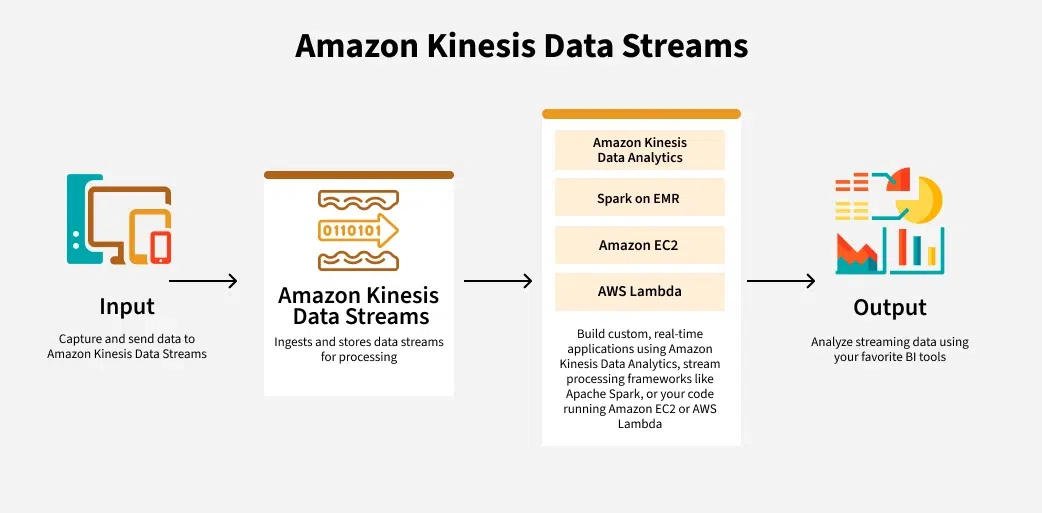

Understanding Amazon Kinesis Data Streams begins with its core components. Think of a Kinesis stream as a river, constantly flowing with data and divided into parallel channels called shards. Shards are the fundamental unit of throughput. In provisioned capacity mode, each shard accepts up to 1 MB per second or 1,000 records per second of writes and serves up to 2 MB per second of reads. The number of shards therefore determines the stream's overall capacity and, in turn, the performance of any application built on it.
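
As a rough sizing aid, the sketch below turns the provisioned-mode per-shard limits (1 MB/s or 1,000 records/s for writes, 2 MB/s for reads shared by polling consumers) into a minimum shard count. It assumes every polling (non-EFO) consumer reads the whole stream, so read load equals write load multiplied by the number of consumers. The function name is hypothetical, and real sizing should leave headroom for bursts.

```python
import math

# Per-shard quotas in provisioned capacity mode.
WRITE_MB_PER_SHARD = 1.0        # ingest: 1 MB per second...
WRITE_RECORDS_PER_SHARD = 1000  # ...or 1,000 records per second
READ_MB_PER_SHARD = 2.0         # egress: 2 MB per second, shared by polling consumers


def estimate_shards(write_mb_per_sec: float, records_per_sec: float,
                    polling_consumers: int) -> int:
    """Minimum shard count that satisfies the write and shared-read limits.

    Assumes each polling consumer reads the entire stream, so total read
    throughput is the write throughput times the number of consumers.
    """
    by_write_bytes = write_mb_per_sec / WRITE_MB_PER_SHARD
    by_write_records = records_per_sec / WRITE_RECORDS_PER_SHARD
    by_read_bytes = write_mb_per_sec * polling_consumers / READ_MB_PER_SHARD
    return max(1, math.ceil(max(by_write_bytes, by_write_records, by_read_bytes)))


# 3 MB/s and 2,500 records/s of writes, read by two polling consumers.
print(estimate_shards(3, 2500, 2))  # 3
# The same stream read by four polling consumers is limited by read capacity.
print(estimate_shards(3, 2500, 4))  # 6
```

In the second call, the extra consumers, not the producers, drive the shard count up. That read-side pressure is what Enhanced Fan-Out is designed to remove.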

Data enters a Kinesis stream through producers. These are applications or devices that send data records to the stream, such as sensors emitting temperature readings or e-commerce sites logging customer activity. Each record carries three things: a data blob (the payload), a partition key chosen by the producer, and a sequence number that Kinesis Data Streams assigns when the record is written. Kinesis hashes the partition key with MD5 to a 128-bit integer and routes the record to the shard whose hash key range contains that value. As a result, records with the same partition key land on the same shard, in order, as long as the stream is not resharded. Choosing partition keys that spread evenly across shards is therefore crucial for parallel processing and full use of the stream's throughput.
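
The routing rule can be sketched in a few lines of Python. This illustrates the rule and is not the service's code. It assumes the partition key is UTF-8 encoded before hashing and that shards split the 128-bit hash key space evenly, as they typically do right after a stream is created. Real applications should read each shard's HashKeyRange (for example, from the ListShards API) instead of using `even_ranges`, which is a hypothetical helper.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def hash_key(partition_key: str) -> int:
    """MD5 of the partition key, read as an unsigned 128-bit big-endian integer."""
    return int.from_bytes(hashlib.md5(partition_key.encode("utf-8")).digest(), "big")


def even_ranges(shard_count: int) -> list[tuple[int, int]]:
    """Hypothetical evenly split hash key ranges, as inclusive (start, end) pairs."""
    step = HASH_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * step
        # The last shard absorbs any remainder so the whole space is covered.
        end = HASH_SPACE - 1 if i == shard_count - 1 else (i + 1) * step - 1
        ranges.append((start, end))
    return ranges


def shard_for_key(partition_key: str, ranges: list[tuple[int, int]]) -> int:
    """Index of the range containing the key's hash."""
    h = hash_key(partition_key)
    for index, (start, end) in enumerate(ranges):
        if start <= h <= end:
            return index
    raise ValueError("hash key not covered by any range")


ranges = even_ranges(4)
for key in ["sensor-1", "sensor-2", "sensor-3"]:
    print(key, "-> shard", shard_for_key(key, ranges))
```

Because the mapping is a pure function of the key, the same key always reaches the same shard. A high-cardinality key (a device ID rather than a device type, say) is what spreads load across shards.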

On the other bank of the river are consumers, the applications that retrieve and process data from the stream. Many consumers are built with the Kinesis Client Library (KCL), which reads from one or more shards on their behalf. Others use AWS Lambda or call the Kinesis Data Streams API directly. Consumers might aggregate data, transform it, or load it into a data warehouse. Data retention is the final core concept. Kinesis Data Streams keeps records for a configurable period, 24 hours by default and extendable up to 365 days, which gives consumers time to process (or reprocess) the data. Shards, producers, consumers, records, and retention are the building blocks of every real-time pipeline on Kinesis Data Streams.
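
To make the consumer side concrete, here is a bare-bones polling reader for a single shard. It is roughly the kind of loop a polling consumer runs, and that KCL wraps with lease management and checkpointing. It assumes `client` behaves like a boto3 Kinesis client (`boto3.client("kinesis")`), whose `get_shard_iterator` and `get_records` methods map to the GetShardIterator and GetRecords APIs. `read_shard` itself is a hypothetical helper.

```python
import time


def read_shard(client, stream_name: str, shard_id: str, max_batches: int = 10,
               limit: int = 100, poll_interval_sec: float = 1.0):
    """Yield (sequence_number, partition_key, data) tuples from one shard.

    Starts at TRIM_HORIZON, the oldest record still inside the retention
    period. There is no checkpointing: a restart begins from the oldest
    record again, which is one of the problems KCL solves.
    """
    iterator = client.get_shard_iterator(
        StreamName=stream_name,
        ShardId=shard_id,
        ShardIteratorType="TRIM_HORIZON",
    )["ShardIterator"]
    for _ in range(max_batches):
        if iterator is None:  # shard closed (e.g. after resharding) and fully read
            break
        response = client.get_records(ShardIterator=iterator, Limit=limit)
        for record in response["Records"]:
            yield record["SequenceNumber"], record["PartitionKey"], record["Data"]
        iterator = response.get("NextShardIterator")
        # Pause between polls: each shard allows only 5 GetRecords calls per second.
        time.sleep(poll_interval_sec)
```

With credentials configured, you might call it as `read_shard(boto3.client("kinesis"), "orders", "shardId-000000000000")`, where the stream name is a placeholder.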

How to Build a Data Pipeline with Amazon Kinesis Streams: A Practical Approach

Setting up a data pipeline with Amazon Kinesis Data Streams involves a few key steps: creating a stream, writing data to it, and consuming that data. Walking through each step shows how Kinesis Data Streams behaves in a real-world scenario.

First, create a stream using the AWS Management Console, the AWS CLI, or an AWS SDK. In provisioned capacity mode you specify the number of shards, which determines the stream's capacity, so base it on your expected peak throughput. In on-demand mode, Kinesis manages shard capacity for you. Once the stream is active, you can start writing data. The Kinesis Producer Library (KPL) helps producers write efficiently by buffering records, aggregating small records, and batching requests. Alternatively, the Kinesis Agent, a standalone Java application, can monitor log files and stream new entries directly into the stream. Both tools handle retries and error handling, which simplifies ingestion. Finally, configure IAM roles and policies so that producers are allowed to write to the stream.
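
A minimal producer-side sketch using the AWS SDK for Python (boto3) might look like the following. It creates a provisioned-mode stream, waits for it with the SDK's `stream_exists` waiter, and sends records through PutRecords in chunks of at most 500, the per-request maximum. The helper names and the `orders` stream are hypothetical. The client is passed in so the code can be tested with a stub, and the 5 MiB per-request size limit is not enforced here.

```python
MAX_RECORDS_PER_CALL = 500  # PutRecords accepts at most 500 records per request


def create_stream(client, stream_name: str, shard_count: int) -> None:
    """Create a provisioned-mode stream and block until it becomes ACTIVE."""
    client.create_stream(StreamName=stream_name, ShardCount=shard_count)
    client.get_waiter("stream_exists").wait(StreamName=stream_name)


def put_batch(client, stream_name: str, events: list[tuple[str, bytes]]) -> int:
    """Send (partition_key, payload) pairs with PutRecords; return how many records failed."""
    failed = 0
    for start in range(0, len(events), MAX_RECORDS_PER_CALL):
        chunk = events[start:start + MAX_RECORDS_PER_CALL]
        response = client.put_records(
            StreamName=stream_name,
            Records=[{"PartitionKey": key, "Data": data} for key, data in chunk],
        )
        # PutRecords can partially succeed: the call returns normally, but
        # FailedRecordCount says how many entries were rejected.
        failed += response["FailedRecordCount"]
    return failed
```

For example, `create_stream(boto3.client("kinesis"), "orders", 2)` followed by `put_batch(...)` with a list of `(key, bytes)` pairs. A non-zero return value means some records were throttled or failed and should be resent.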

Consuming data usually involves the Kinesis Client Library (KCL). KCL handles shard discovery, load balancing across workers, and checkpointing, tracking shard leases and progress in a DynamoDB table. Within a consumer application, KCL assigns each shard to only one worker at a time, which preserves the order of records within each shard. When building your consumer, keep per-record processing efficient. Processed data can then flow to other AWS services such as Amazon S3 or DynamoDB. Alternatively, AWS Lambda can consume the stream directly through an event source mapping. Monitoring and logging are crucial for identifying and resolving issues. Amazon CloudWatch tracks metrics such as throughput, latency, and errors, and you can use them to tune the stream and keep processing reliable.
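
When Lambda is the consumer, the handler receives batches of records whose `data` field is base64-encoded. The sketch below assumes JSON payloads and a hypothetical `process` function. It reports failures using Lambda's partial batch response format, which only takes effect when ReportBatchItemFailures is enabled on the event source mapping.

```python
import base64
import json


def process(payload: dict) -> None:
    """Hypothetical business logic: raise an exception to mark the record as failed."""
    if "order_id" not in payload:
        raise ValueError("missing order_id")


def handler(event, context):
    """Entry point for a Lambda function attached to a Kinesis event source mapping."""
    failures = []
    for record in event["Records"]:
        kinesis = record["kinesis"]
        try:
            # Lambda delivers the record payload base64-encoded.
            payload = json.loads(base64.b64decode(kinesis["data"]))
            process(payload)
        except Exception:
            # Report by sequence number; honoured only with ReportBatchItemFailures enabled.
            failures.append({"itemIdentifier": kinesis["sequenceNumber"]})
    return {"batchItemFailures": failures}
```

Lambda resumes from the lowest failed sequence number it receives, so records after that point can be delivered more than once. Processing should therefore be idempotent.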

Exploring Key Features of Amazon Kinesis Data Streams: Enhanced Fan-Out, Aggregation, and More

Amazon Kinesis Data Streams provides several advanced features for optimizing data processing and consumption. One is Enhanced Fan-Out (EFO), which lets multiple consumers read the same stream in parallel without competing for throughput. By default, consumers poll for data and share each shard's 2 MB/s of read capacity, so adding consumers causes contention and lowers per-consumer throughput. With EFO, each registered consumer gets its own 2 MB/s per shard, and records are pushed to it over HTTP/2 instead of being polled. This gives consistent throughput and lower latency, which is particularly valuable for low-latency, real-time analytics applications that run several consumers against one stream.
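
Registering an EFO consumer takes a single API call, but the consumer must reach the ACTIVE state before it can subscribe to shards. The sketch below uses a boto3-style client. `register_stream_consumer` and `describe_stream_consumer` are real Kinesis API operations, while the helper name and polling parameters are assumptions.

```python
import time


def register_efo_consumer(client, stream_arn: str, consumer_name: str,
                          poll_interval_sec: float = 5.0, max_attempts: int = 60) -> str:
    """Register an enhanced fan-out consumer and return its ARN once it is ACTIVE."""
    consumer_arn = client.register_stream_consumer(
        StreamARN=stream_arn, ConsumerName=consumer_name
    )["Consumer"]["ConsumerARN"]
    for _ in range(max_attempts):
        status = client.describe_stream_consumer(
            ConsumerARN=consumer_arn
        )["ConsumerDescription"]["ConsumerStatus"]
        if status == "ACTIVE":  # CREATING -> ACTIVE
            return consumer_arn
        time.sleep(poll_interval_sec)
    raise TimeoutError(f"consumer {consumer_name} did not become ACTIVE")
```

The returned ARN is what a SubscribeToShard client uses to receive pushed records. KCL 2.x can register its own consumer when fan-out is enabled, so manual registration mainly matters for custom clients.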

Data aggregation and de-aggregation also help optimize throughput. Aggregation happens on the producer side (for example, in the KPL) and packs multiple user records into a single Kinesis Data Streams record, which reduces the number of records sent and the per-record overhead. De-aggregation reverses the process on the consumer side, and KCL does this automatically for KPL-aggregated records. The technique pays off when you have many small records, improving efficiency and lowering cost. Kinesis Data Streams also integrates with other AWS services such as AWS Lambda, Amazon S3, and Amazon DynamoDB. This lets you build complete pipelines in which the stream ingests data, Lambda functions process it in real time, and the results are stored in S3 or DynamoDB for further analysis or application use.
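
The idea behind aggregation is easy to see with a toy format. The sketch below uses a hypothetical length-prefixed framing, with a 4-byte length before each payload. It is not the KPL's aggregation format, which has its own protobuf-based layout that KCL knows how to unpack, so the two should not be mixed. The 1 MiB cap is an assumed per-record size limit, exposed as a parameter.

```python
import struct


def aggregate(payloads: list[bytes], max_bytes: int = 1024 * 1024) -> bytes:
    """Pack payloads into one blob: a 4-byte big-endian length, then the bytes, repeated.

    max_bytes is an assumed per-record size limit; the partition key, which
    also counts toward the real limit, is ignored here.
    """
    blob = b"".join(struct.pack(">I", len(p)) + p for p in payloads)
    if len(blob) > max_bytes:
        raise ValueError(f"aggregated record is {len(blob)} bytes, over the {max_bytes}-byte limit")
    return blob


def deaggregate(blob: bytes) -> list[bytes]:
    """Reverse aggregate(): walk the blob and slice out each payload."""
    payloads, offset = [], 0
    while offset < len(blob):
        (length,) = struct.unpack_from(">I", blob, offset)
        offset += 4
        payloads.append(blob[offset:offset + length])
        offset += length
    return payloads


events = [b'{"t": 21.5}', b'{"t": 21.7}', b'{"t": 21.6}']
blob = aggregate(events)
print(len(blob))                    # 45: three 11-byte payloads plus three 4-byte headers
print(deaggregate(blob) == events)  # True
```

An aggregated record has a single partition key, so every user record packed into it lands on the same shard. Group records that share a key, or accept coarser per-key routing.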

Each feature suits different use cases. Enhanced Fan-Out is ideal for applications that need dedicated throughput and low latency. Aggregation makes large numbers of small records cheaper and faster to transmit. Integration with other AWS services lets you build complex, scalable processing workflows. Choosing features based on your performance and cost requirements is central to designing an efficient, cost-effective streaming solution.

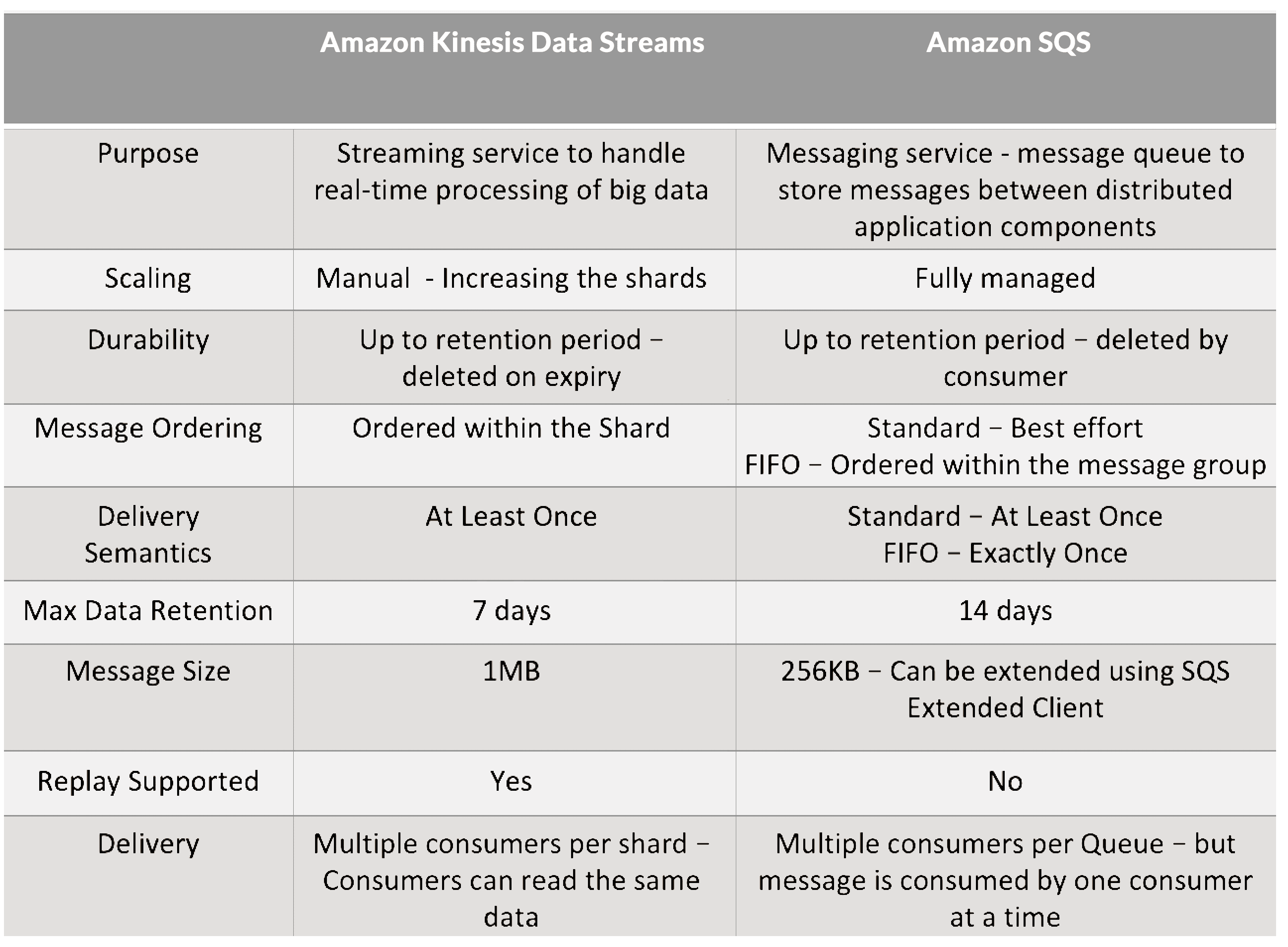

Kinesis Data Streams vs. Other Streaming Solutions: Understanding the Differences

When selecting a real-time data streaming solution, it's important to understand how the platforms differ. Amazon Kinesis Data Streams, Apache Kafka, and Apache Pulsar are popular choices, each with its own strengths and weaknesses, and a careful comparison shows which one best fits a project's requirements and constraints. Kinesis Data Streams is tightly integrated with the AWS ecosystem, which simplifies deployment and management for AWS-centric applications. Kafka, when self-managed, offers greater flexibility and control over the infrastructure, making it suitable for hybrid or multi-cloud environments. Pulsar stands out for its layered architecture and built-in multi-tenancy.

Scalability is a key consideration. Kinesis Data Streams scales by adding shards (or automatically, in on-demand mode), while AWS manages the underlying infrastructure. Kafka scales through partitioning and replication, which requires more manual configuration and management when you run it yourself. Pulsar separates its serving layer (brokers) from its storage layer (Apache BookKeeper), so storage and compute can scale independently.

Cost is another important factor. In provisioned mode, Kinesis Data Streams charges per shard hour and per PUT payload unit (25 KB of ingested data), with additional charges for extended retention and Enhanced Fan-Out. On-demand mode charges per stream hour plus the volume of data written and read. Kafka's cost depends on the infrastructure you run it on, including servers, storage, and networking. Pulsar's cost structure is similar to Kafka's, though its tiered storage can lower the cost of retaining older data.

Ease of use should also be evaluated. Kinesis Data Streams stands out for its simple setup and integration with other AWS services. Kafka requires more expertise to configure and maintain. Pulsar sits between the two: its operational model is often considered simpler than Kafka's, but it still requires a good understanding of distributed systems.

The choice between Kinesis Data Streams, Kafka, and Pulsar depends on your existing infrastructure, scalability requirements, budget, and the level of control you need. If your architecture is heavily invested in AWS, Kinesis Data Streams offers a seamless, managed solution. If you need more flexibility and control, or operate in a hybrid or multi-cloud environment, Kafka or Pulsar may be more appropriate. Evaluate each platform's strengths and weaknesses against your specific use case, and also weigh community support, ecosystem maturity, and long-term maintainability.

Optimizing Performance and Cost for Kinesis Data Streams Deployments

Managing Amazon Kinesis Data Streams efficiently means balancing performance against cost, and several factors affect both. Shard allocation comes first. Each shard has a fixed capacity for ingestion and retrieval. Over-provisioning wastes money, while under-provisioning causes throttling (ProvisionedThroughputExceededException), which leads to data loss if producers do not retry. Monitor shard utilization with CloudWatch metrics and adjust the shard count to match actual traffic patterns. You can automate this by switching the stream to on-demand capacity mode. In provisioned mode, you can instead call the UpdateShardCount API from a scheduled or alarm-driven job.
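
For provisioned mode, the adjustment step can be sketched as follows. The code computes a target from peak ingest and an assumed target utilization of 70% of the 1 MB/s per-shard write limit. It then clamps the result, because a single UpdateShardCount call can at most double the shard count or reduce it to half. Only write bandwidth is considered. The helper names and the 70% figure are assumptions, and `client` is expected to behave like a boto3 Kinesis client.

```python
import math


def plan_shard_count(current: int, peak_write_mb_per_sec: float,
                     target_utilization: float = 0.7) -> int:
    """Shard count that keeps peak ingest near target_utilization of 1 MB/s per shard.

    UpdateShardCount can at most double the current count or halve it in one
    call, so the result is clamped to that range.
    """
    # Round before ceil() so floating-point noise just above an integer does not add a shard.
    desired = max(1, math.ceil(round(peak_write_mb_per_sec / target_utilization, 6)))
    return min(max(desired, math.ceil(current / 2)), current * 2)


def apply_shard_count(client, stream_name: str, current: int,
                      peak_write_mb_per_sec: float) -> int:
    """Call UpdateShardCount only when the planned count differs from the current one."""
    target = plan_shard_count(current, peak_write_mb_per_sec)
    if target != current:
        client.update_shard_count(
            StreamName=stream_name,
            TargetShardCount=target,
            ScalingType="UNIFORM_SCALING",
        )
    return target


print(plan_shard_count(4, 2.0))   # 3: 2.0 / 0.7 = 2.86, rounded up
print(plan_shard_count(4, 10.0))  # 8: wants 15, capped at double the current count
print(plan_shard_count(10, 1.0))  # 5: wants 2, floored at half the current count
```

UpdateShardCount is rate-limited, so a job like this should run on a coarse schedule rather than react to every datapoint.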

The data retention period also affects cost. Retention is 24 hours by default and can be extended up to 365 days, and anything beyond 24 hours is billed as extended or long-term retention. Choose the shortest retention period that meets your processing and replay needs, and archive older data to cheaper storage such as Amazon S3 for long-term analysis. Monitoring is crucial for spotting performance issues and optimizing resource use. Set CloudWatch alarms on signals such as rising latency, throttling, or consumer lag so you can address problems before they affect your applications. When several consumers need the same stream, Enhanced Fan-Out (EFO) can significantly improve performance over shared-throughput polling. However, EFO is billed separately, per consumer-shard hour and per GB retrieved, so weigh that cost too. Finally, analyze how your consumer applications read and tune their polling settings, such as the maximum number of records per GetRecords call and the interval between calls.
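
Alarms like these can be created with a boto3-style CloudWatch client. The sketch below alarms on consumer lag (GetRecords.IteratorAgeMilliseconds) and on read and write throttling. Kinesis Data Streams publishes all three metrics per stream in the AWS/Kinesis namespace. The one-minute lag threshold, the five-period window, and the helper names are illustrative assumptions to tune for your workload.

```python
def stream_alarm(stream_name: str, metric_name: str, statistic: str, threshold: float,
                 evaluation_periods: int = 5, alarm_actions: tuple = ()) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm on one stream-level metric."""
    return {
        "AlarmName": f"{stream_name}-{metric_name}",
        "Namespace": "AWS/Kinesis",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "StreamName", "Value": stream_name}],
        "Statistic": statistic,
        "Period": 60,  # one-minute datapoints
        "EvaluationPeriods": evaluation_periods,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": list(alarm_actions),  # e.g. an SNS topic ARN
    }


def create_default_alarms(cloudwatch, stream_name: str, alarm_actions: tuple = ()) -> int:
    """Create lag and throttling alarms for one stream; return how many were created."""
    specs = [
        ("GetRecords.IteratorAgeMilliseconds", "Maximum", 60_000.0),  # consumers over 1 minute behind
        ("WriteProvisionedThroughputExceeded", "Sum", 0.0),           # any write throttling
        ("ReadProvisionedThroughputExceeded", "Sum", 0.0),            # any read throttling
    ]
    for metric_name, statistic, threshold in specs:
        cloudwatch.put_metric_alarm(
            **stream_alarm(stream_name, metric_name, statistic, threshold,
                           alarm_actions=alarm_actions))
    return len(specs)
```

With credentials configured, `create_default_alarms(boto3.client("cloudwatch"), "orders")` would create the three alarms for a placeholder stream named `orders`.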

Serialization formats and aggregation also play a role in throughput and cost. Compact binary formats such as Protocol Buffers or Apache Avro reduce record size, and smaller records mean fewer PUT payload units, less retained data, and faster transfers. Aggregation combines multiple user records into a single Kinesis record, reducing the number of records transmitted. Because each record is billed in whole 25 KB payload units, packing many small records together can cut ingestion cost substantially. The trade-off is added complexity on the consumer side, which must de-aggregate records (KCL does this automatically for KPL-aggregated data). When integrating Kinesis Data Streams with other AWS services, plan for data transfer costs. For traffic from private subnets, interface VPC endpoints can help avoid NAT gateway data processing charges. Review cost and performance metrics regularly to keep the deployment optimized.
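
A quick calculation shows why aggregation pays off for small records. The calculation assumes each record is billed in whole 25 KB PUT payload units with a minimum of one unit, taking 1 KB as 1,024 bytes. Under that assumption, 10,000 records of 500 bytes cost 10,000 units when sent individually. Packed 50 at a time with a 4-byte length header each (a simple length-prefixed framing), they cost only 200 units.

```python
import math

PAYLOAD_UNIT_BYTES = 25 * 1024  # one PUT payload unit, assuming 1 KB = 1,024 bytes


def payload_units(record_sizes: list[int]) -> int:
    """Total PUT payload units: each record rounds up to whole 25 KB units, minimum one."""
    return sum(max(1, math.ceil(size / PAYLOAD_UNIT_BYTES)) for size in record_sizes)


individual = [500] * 10_000          # 10,000 records of 500 bytes each
aggregated = [50 * (4 + 500)] * 200  # 200 records, each holding 50 framed payloads
print(payload_units(individual))  # 10000
print(payload_units(aggregated))  # 200
```

To compare in currency, multiply by your region's per-unit price. The ratio, not the absolute figure, is the point.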

Securing Your Kinesis Data Streams: Best Practices for Data Protection

Security is paramount when working with Kinesis Data Streams. Protecting data at rest and in transit, controlling access, and securing the network all help maintain data integrity and confidentiality. Encryption is the foundation. Kinesis Data Streams supports server-side encryption at rest using AWS Key Management Service (AWS KMS), with either the AWS managed key or a customer managed key, so records stored in the stream are protected from unauthorized access. For data in transit, use HTTPS (TLS) for all communication with Kinesis Data Streams, which the AWS SDKs do by default. This encrypts data as it moves between producers, consumers, and the service.
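
Turning on server-side encryption for an existing stream is one API call. The sketch below checks the current setting first so it is safe to run repeatedly. It assumes a boto3-style Kinesis client and defaults to the AWS managed key alias `alias/aws/kinesis`. The helper name is hypothetical.

```python
def enable_kms_encryption(client, stream_name: str, key_id: str = "alias/aws/kinesis") -> bool:
    """Start server-side encryption if it is not already on; return True if a change was made.

    "alias/aws/kinesis" is the AWS managed key for Kinesis; pass a customer
    managed key ARN or alias instead for control over key policy and rotation.
    """
    summary = client.describe_stream_summary(StreamName=stream_name)["StreamDescriptionSummary"]
    if summary.get("EncryptionType") == "KMS":
        return False  # already encrypted at rest
    client.start_stream_encryption(StreamName=stream_name, EncryptionType="KMS", KeyId=key_id)
    return True
```

With a customer managed key, producers also need kms:GenerateDataKey and consumers need kms:Decrypt on that key.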

Access control is another critical aspect. Use IAM policies to control who can access your streams and what actions they can perform, and grant each user or role only the privileges it needs. For example, a producer might only need permission to write records, while a consumer only needs permission to read them. Review and update these policies regularly. Network security matters too. Interface VPC endpoints (AWS PrivateLink) let your applications reach Kinesis Data Streams without traversing the public internet, creating a private connection between your VPC and the service. You can restrict that path further with the security groups attached to the endpoint and with a VPC endpoint policy, so that only the necessary connections and actions are allowed.
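
Least-privilege policies for the two roles can be generated instead of hand-written. The sketch below builds IAM policy documents as Python dictionaries. The action lists are a starting point for a plain producer and a polling consumer, not an exhaustive set. The KPL may also need shard-listing permissions, KCL needs DynamoDB and CloudWatch access, and EFO consumers need kinesis:SubscribeToShard and related actions. The stream and key ARNs are placeholders.

```python
import json
from typing import Optional

ACTIONS = {
    "producer": ["kinesis:PutRecord", "kinesis:PutRecords"],
    "consumer": ["kinesis:GetRecords", "kinesis:GetShardIterator",
                 "kinesis:DescribeStreamSummary", "kinesis:ListShards"],
}


def stream_policy(stream_arn: str, role: str, kms_key_arn: Optional[str] = None) -> dict:
    """IAM policy document granting one role the minimum stream actions it needs."""
    statements = [{"Effect": "Allow", "Action": ACTIONS[role], "Resource": stream_arn}]
    if kms_key_arn:
        # With a customer managed key, writers generate data keys and readers decrypt.
        kms_action = "kms:GenerateDataKey" if role == "producer" else "kms:Decrypt"
        statements.append({"Effect": "Allow", "Action": kms_action, "Resource": kms_key_arn})
    return {"Version": "2012-10-17", "Statement": statements}


STREAM_ARN = "arn:aws:kinesis:us-east-1:123456789012:stream/orders"  # placeholder
print(json.dumps(stream_policy(STREAM_ARN, "producer"), indent=2))
```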

Compliance with regulations and standards such as GDPR, HIPAA, and PCI DSS is often a requirement. Kinesis Data Streams provides capabilities that can help you meet these obligations, but you still need to understand the specific requirements that apply to your organization and configure your streams accordingly. Audit your security controls regularly to find and fix vulnerabilities, and use monitoring and logging to detect and respond to incidents promptly. AWS CloudTrail records API calls made to Kinesis Data Streams, providing an audit trail of actions performed on your streams. Together with continuous monitoring of stream configurations, these practices protect the confidentiality, integrity, and availability of your data.
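
For a quick review of recent administrative activity, the CloudTrail LookupEvents API can be filtered to the Kinesis event source. This sketch assumes a boto3-style CloudTrail client. LookupEvents returns management events from the last 90 days (such as CreateStream or UpdateShardCount) and does not return data events. The set of event names treated as sensitive is an illustrative choice, and pagination is omitted.

```python
# Changes that weaken protection or destroy data; an illustrative, not exhaustive, list.
SENSITIVE_EVENTS = {"DeleteStream", "StopStreamEncryption", "DecreaseStreamRetentionPeriod"}


def recent_kinesis_events(cloudtrail, max_results: int = 50) -> list[tuple[str, str, str, bool]]:
    """Return (time, event name, user, is_sensitive) for recent Kinesis management events."""
    response = cloudtrail.lookup_events(
        LookupAttributes=[{"AttributeKey": "EventSource",
                           "AttributeValue": "kinesis.amazonaws.com"}],
        MaxResults=max_results,  # LookupEvents returns at most 50 events per page
    )
    return [
        (str(event["EventTime"]), event["EventName"], event.get("Username", "unknown"),
         event["EventName"] in SENSITIVE_EVENTS)
        for event in response["Events"]
    ]
```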

Troubleshooting Common Issues with Kinesis Data Streams

Issues with Amazon Kinesis Data Streams are a normal part of the development lifecycle. This section covers how to diagnose and resolve the most frequent problems, because addressing them proactively keeps your streams performing well.

One common problem is data loss. It can stem from producer-side errors, network connectivity problems, or insufficient retry logic. Make sure the Kinesis Producer Library (KPL) or Kinesis Agent is configured with appropriate retry settings, and implement robust error handling in your producers to catch exceptions during transmission. Note that PutRecords can partially fail: the call succeeds but reports individual failed records, which must be resent. Monitor the PutRecords success and failure metrics in CloudWatch to spot potential data loss.

Consumer lag, where a consumer falls behind the data arriving in the stream, is another frequent issue. Causes include slow processing, too few consumer instances, or unbalanced shard assignment. Scale consumer instances to match the incoming data rate and optimize the processing logic. If several applications compete for the same shards, Enhanced Fan-Out (EFO) gives each one dedicated read throughput. Watch the GetRecords.IteratorAgeMilliseconds metric to detect lag early: if lag ever exceeds the retention period, records expire before they are processed.

Slow overall throughput usually points to capacity. Verify that the shard count matches the expected throughput and increase it to accommodate higher volumes, and make sure producers are not being throttled for exceeding per-shard limits. Batching records with PutRecords and aggregating small records into larger Kinesis records both reduce per-request overhead compared with sending each record through its own PutRecord call.
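
Handling partial PutRecords failures is where producers most often lose data. The sketch below resends only the entries whose result contains an ErrorCode, using exponential backoff with full jitter between attempts. It assumes a boto3-style Kinesis client and at most 500 records per call. Exceptions that fail the whole request are left to the caller. Because failed records are resent after later ones, strict per-key ordering is not guaranteed. The helper name and delay values are assumptions.

```python
import random
import time


def put_with_retries(client, stream_name: str, records: list[dict],
                     max_attempts: int = 5, base_delay_sec: float = 0.1) -> list[dict]:
    """Send up to 500 {"Data": ..., "PartitionKey": ...} entries, resending only failures.

    Returns the entries still failing after max_attempts (an empty list on success).
    """
    pending = list(records)
    for attempt in range(max_attempts):
        response = client.put_records(StreamName=stream_name, Records=pending)
        if response["FailedRecordCount"] == 0:
            return []
        # Result entries line up with the request; failed ones carry an ErrorCode
        # such as ProvisionedThroughputExceededException or InternalFailure.
        pending = [entry for entry, result in zip(pending, response["Records"])
                   if "ErrorCode" in result]
        if attempt < max_attempts - 1:
            # Full jitter: sleep a random time up to base * 2^attempt.
            time.sleep(random.uniform(0, base_delay_sec * 2 ** attempt))
    return pending
```

Records still returned after the final attempt should be logged or parked (for example, in a dead-letter store) rather than silently dropped.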

Errors such as "ProvisionedThroughputExceededException", or "KMSThrottlingException" when encryption is enabled, indicate insufficient shard capacity or KMS throttling. Enable shard-level (enhanced) monitoring in CloudWatch to identify hot shards that receive a disproportionate share of traffic, and choose a partitioning strategy that distributes data evenly across shards. If you hit KMS throttling, request an increase to your KMS request quotas. Also verify that producers and consumers have the IAM permissions they need to use the KMS key: kms:GenerateDataKey for writing and kms:Decrypt for reading. When debugging, use the logging capabilities of the KPL and KCL. Configure detailed logging to capture information about data production, consumption, and errors, then analyze the logs for patterns and root causes. Addressing these common problems proactively keeps your streams running smoothly and reliably and maximizes the value of your real-time pipelines.
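
Hot-shard analysis needs shard-level metrics, which are off by default and are billed as additional CloudWatch metrics. The sketch below enables a few of them with a boto3-style Kinesis client and flags shards whose traffic exceeds twice the mean, an arbitrary threshold. It also shows key salting, one common way to spread a single hot key across several shards at the cost of ordering across the salted variants. The helper names, the input dictionary, and the `#` separator are hypothetical.

```python
import random


def enable_shard_metrics(client, stream_name: str) -> None:
    """Turn on the per-shard CloudWatch metrics needed to spot hot shards."""
    client.enable_enhanced_monitoring(
        StreamName=stream_name,
        ShardLevelMetrics=["IncomingBytes", "IncomingRecords",
                           "WriteProvisionedThroughputExceeded"],
    )


def find_hot_shards(bytes_by_shard: dict[str, float], factor: float = 2.0) -> list[str]:
    """Shard IDs whose IncomingBytes exceed `factor` times the mean across shards."""
    if not bytes_by_shard:
        return []
    mean = sum(bytes_by_shard.values()) / len(bytes_by_shard)
    return sorted(shard for shard, value in bytes_by_shard.items() if value > factor * mean)


def salted_key(base_key: str, buckets: int) -> str:
    """Append a random bucket so one logical key hashes to up to `buckets` shards."""
    return f"{base_key}#{random.randrange(buckets)}"


print(find_hot_shards({"shardId-000000000000": 100.0,
                       "shardId-000000000001": 120.0,
                       "shardId-000000000002": 900.0}))  # ['shardId-000000000002']
```

Consumers that need a per-key view must merge the salted buckets back together, so salt only the keys that are actually hot.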