The Benefits of Hosting Kubernetes on AWS

Hosting Kubernetes on AWS offers numerous advantages for businesses looking to deploy and manage containerized applications. With Amazon Elastic Kubernetes Service (Amazon EKS), AWS operates the Kubernetes control plane for you, so teams can spend less time maintaining infrastructure and more time on development and innovation. One of the primary benefits of hosting Kubernetes on AWS is scalability. AWS offers a wide range of services and resources that can be scaled up or down based on demand, so applications can handle varying levels of traffic without sacrificing performance.

Reliability is another key advantage of hosting Kubernetes on AWS. AWS has a proven track record of delivering highly available and fault-tolerant services, which reduces the risk of downtime and data loss. Amazon EKS runs the Kubernetes control plane across multiple Availability Zones, and by spreading worker nodes across zones as well, businesses can keep their applications available to users even when a single zone has problems.

In addition to scalability and reliability, hosting Kubernetes on AWS provides access to a wide range of services that can enhance the functionality and performance of containerized applications. For example, AWS offers managed databases (Amazon RDS), caching (Amazon ElastiCache), and messaging (Amazon SQS and Amazon SNS) that can be easily integrated with Kubernetes applications. These services help businesses build more sophisticated and feature-rich applications without extensive in-house expertise or resources.

By hosting Kubernetes on AWS, businesses can also take advantage of the security features and best practices built into the AWS platform. AWS provides a range of tools and services for securing applications and data, including network security, access control, and encryption. Security is a shared responsibility: AWS secures the underlying infrastructure and the EKS control plane, while customers remain responsible for their workloads, configuration, and access policies. By following AWS security best practices, businesses can keep their Kubernetes applications secure and help them meet industry standards and regulations.

In summary, hosting Kubernetes on AWS offers numerous benefits for businesses looking to deploy and manage containerized applications. By taking advantage of AWS’s scalability, reliability, and wide range of services, businesses can build and run sophisticated and feature-rich applications, without sacrificing performance or security.

Prerequisites for Hosting Kubernetes on AWS

Before deploying Kubernetes on AWS, it is essential to have a solid understanding of containerization, Kubernetes architecture, and AWS services like EC2, ECR, and IAM. Familiarity with these concepts will ensure a smooth deployment process and minimize potential issues. This section outlines the necessary prerequisites for hosting Kubernetes on AWS.

First and foremost, it is crucial to have a strong understanding of containerization and how it works. Containerization is the process of packaging an application and its dependencies into a container, which can then be deployed and run consistently across different environments. Familiarity with containerization technologies like Docker is essential for deploying and managing Kubernetes on AWS.

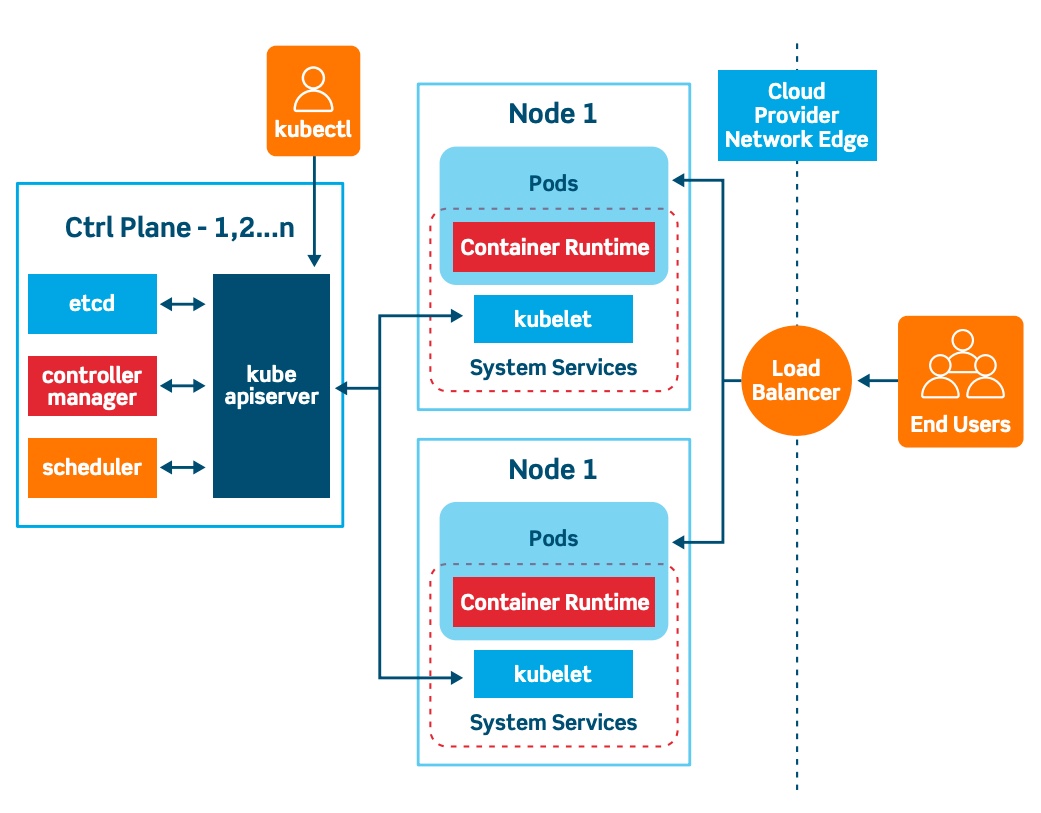

In addition to containerization, it is also important to have a solid understanding of Kubernetes architecture. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Familiarity with Kubernetes concepts like pods, services, and deployments is necessary for deploying and managing Kubernetes on AWS.

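Several AWS services are essential for hosting Kubernetes on AWS. Amazon EKS is the managed Kubernetes service used throughout this guide; AWS runs the Kubernetes control plane for you. Amazon EC2 is a cloud computing service that provides scalable computing capacity in the AWS cloud, including the instances that serve as worker nodes. Amazon ECR is a fully managed container registry that makes it easy to store, manage, and deploy Docker container images. AWS IAM is a service that helps you securely control access to AWS resources. Amazon VPC provides the isolated network that the cluster and its nodes run in. Understanding how these services work, and having the AWS CLI and kubectl installed on your workstation, is essential for deploying Kubernetes on AWS.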

Proper planning and preparation are also essential for a successful Kubernetes deployment on AWS. This includes identifying the resources and services needed, configuring them correctly, and testing the deployment to ensure that it is working as expected. By taking the time to properly plan and prepare, businesses can minimize potential issues and ensure a smooth deployment process.

In summary, hosting Kubernetes on AWS requires a solid understanding of containerization, Kubernetes architecture, and AWS services like EC2, ECR, and IAM. Proper planning and preparation are also essential for a successful deployment. By familiarizing themselves with these concepts and taking the time to properly plan and prepare, businesses can ensure a smooth deployment process and minimize potential issues.

Setting Up the AWS Environment for Kubernetes

Setting up the AWS environment for Kubernetes is a crucial step in deploying and managing containerized applications on AWS. This section outlines the steps for setting up the AWS environment for Kubernetes, including creating an Amazon EKS cluster, configuring the kubeconfig file, and launching worker nodes. We will also provide best practices for a successful setup.

Creating an Amazon EKS Cluster

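The first step in setting up the AWS environment for Kubernetes is to create an Amazon EKS cluster. An Amazon EKS cluster consists of a Kubernetes control plane that AWS manages and the worker nodes that you add to run your workloads. Before you start, create an IAM role that the cluster can use and a VPC with subnets in at least two Availability Zones. To create an Amazon EKS cluster, follow these steps: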

- Sign in to the AWS Management Console.

- Open the Amazon EKS console.

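- Choose “Create cluster” and follow the on-screen instructions, selecting the cluster IAM role, the Kubernetes version, and the VPC subnets.

- Wait until the cluster status changes to Active.

Configuring the kubeconfig File

The kubeconfig file tells kubectl how to reach and authenticate to the Amazon EKS cluster. The console does not offer a kubeconfig download; instead, you generate the entry with the AWS CLI. To configure the kubeconfig file, follow these steps:

- Install the AWS CLI and kubectl, and configure the AWS CLI with credentials for the IAM identity that created the cluster (or one that has been granted access to it).

- Run “aws eks update-kubeconfig --region region-code --name my-cluster”, replacing region-code and my-cluster with your Region and cluster name. This adds the cluster to your kubeconfig file, which is ~/.kube/config by default.

- Test the configuration by running the “kubectl get svc” command, which should list the default kubernetes service.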
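Generating the kubeconfig entry and testing it can also be scripted. The following Python sketch wraps the AWS CLI command “aws eks update-kubeconfig” and the “kubectl get svc” check. It assumes the AWS CLI and kubectl are installed and on your PATH; the Region and cluster name are hypothetical placeholders. The command lines are built separately from running them, so they can be inspected or logged first.

```python
import shlex
import subprocess


def update_kubeconfig_cmd(region: str, cluster_name: str) -> list[str]:
    """AWS CLI command that adds the EKS cluster to the kubeconfig file."""
    return ["aws", "eks", "update-kubeconfig", "--region", region, "--name", cluster_name]


def smoke_test_cmd() -> list[str]:
    """kubectl command that checks the cluster's API server is reachable."""
    return ["kubectl", "get", "svc"]


def configure_and_test(region: str, cluster_name: str) -> None:
    """Run both commands in order; stops with CalledProcessError on failure."""
    for cmd in (update_kubeconfig_cmd(region, cluster_name), smoke_test_cmd()):
        print("+", shlex.join(cmd))
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical Region and cluster name; this only prints the commands.
    for cmd in (update_kubeconfig_cmd("us-east-1", "my-cluster"), smoke_test_cmd()):
        print(shlex.join(cmd))
```

Calling configure_and_test("us-east-1", "my-cluster") runs the two commands for real against your account.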

Launching Worker Nodes

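Once the kubeconfig file is configured, the next step is to launch worker nodes. Worker nodes are the Amazon EC2 instances that run your containerized applications. The simplest option is an Amazon EKS managed node group, which launches instances from an EKS-optimized AMI and registers them with the cluster for you. To launch worker nodes, follow these steps:

- Create a node IAM role with the AmazonEKSWorkerNodePolicy, AmazonEC2ContainerRegistryReadOnly, and AmazonEKS_CNI_Policy managed policies attached.

- Optionally, create an Amazon EC2 key pair if you need SSH access to the nodes.

- In the Amazon EKS console, open the cluster’s Compute tab and choose “Add node group”, then select the node IAM role, instance types, subnets, and scaling limits.

- Confirm that the nodes have joined the cluster by running “kubectl get nodes”.

If you manage the EC2 instances yourself instead, start from an EKS-optimized AMI rather than building an image from scratch, and grant the node IAM role access to the cluster so the instances can register.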
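As an alternative to launching and registering instances by hand, an Amazon EKS managed node group can be created programmatically. The Python sketch below assembles the keyword arguments for the create_nodegroup call of the boto3 EKS client and validates the scaling limits before anything is sent to AWS. The cluster name, role ARN, subnet IDs, key pair name, and instance type are hypothetical, and actually calling AWS requires boto3 and valid credentials.

```python
import json
from typing import Optional


def nodegroup_request(
    cluster_name: str,
    nodegroup_name: str,
    node_role_arn: str,
    subnet_ids: list[str],
    instance_types: list[str],
    min_size: int,
    desired_size: int,
    max_size: int,
    capacity_type: str = "ON_DEMAND",
    ssh_key_name: Optional[str] = None,
) -> dict:
    """Build keyword arguments for boto3's EKS create_nodegroup call."""
    if max_size < 1 or not (0 <= min_size <= desired_size <= max_size):
        raise ValueError("expected 0 <= min_size <= desired_size <= max_size, max_size >= 1")
    if capacity_type not in ("ON_DEMAND", "SPOT"):
        raise ValueError("capacity_type must be ON_DEMAND or SPOT")
    request = {
        "clusterName": cluster_name,
        "nodegroupName": nodegroup_name,
        "nodeRole": node_role_arn,
        "subnets": subnet_ids,
        "instanceTypes": instance_types,
        "capacityType": capacity_type,
        "scalingConfig": {"minSize": min_size, "desiredSize": desired_size, "maxSize": max_size},
    }
    if ssh_key_name:
        # Only needed if you created an EC2 key pair for SSH access.
        request["remoteAccess"] = {"ec2SshKey": ssh_key_name}
    return request


def create_nodegroup(request: dict) -> dict:
    """Send the request to AWS; requires boto3 and credentials."""
    import boto3  # imported here so the builder above works without boto3

    return boto3.client("eks").create_nodegroup(**request)


if __name__ == "__main__":
    # Hypothetical identifiers; replace them with your own.
    req = nodegroup_request(
        cluster_name="my-cluster",
        nodegroup_name="general",
        node_role_arn="arn:aws:iam::111122223333:role/myEKSNodeRole",
        subnet_ids=["subnet-0aaa1111", "subnet-0bbb2222"],
        instance_types=["m5.large"],
        min_size=2,
        desired_size=3,
        max_size=5,
    )
    print(json.dumps(req, indent=2))
```

Passing capacity_type="SPOT" requests Spot capacity for the whole group, which is one way to apply the cost advice later in this guide.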

Best Practices for a Successful Setup

When setting up the AWS environment for Kubernetes, it is essential to follow best practices to ensure a successful deployment. Here are some best practices to keep in mind:

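- Use a supported Kubernetes version and plan regular upgrades, because each Amazon EKS version is supported only for a limited time.

- Follow AWS security best practices, such as enabling encryption at rest and in transit.

- Use Amazon EBS volumes, through the Amazon EBS CSI driver, for persistent storage.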

- Monitor the Amazon EKS cluster and worker nodes using tools like Amazon CloudWatch.

- Implement role-based access control to ensure secure access to the Amazon EKS cluster and worker nodes.

In summary, setting up the AWS environment for Kubernetes involves creating an Amazon EKS cluster, configuring the kubeconfig file, and launching worker nodes. By following best practices, businesses can ensure a successful deployment and minimize potential issues. With a solid foundation in place, businesses can focus on deploying and managing containerized applications on AWS.

Deploying Applications on Kubernetes Using AWS

Once the AWS environment for Kubernetes is set up, the next step is to deploy applications on Kubernetes using AWS services. In this section, we will explain how to deploy applications on Kubernetes using AWS services such as Amazon ECR for container image storage and Amazon EBS for persistent storage. We will also include examples and real-world use cases to illustrate the process.

Storing Container Images with Amazon ECR

Amazon Elastic Container Registry (ECR) is a fully managed container registry service that makes it easy to store, manage, and deploy Docker container images. With Amazon ECR, you can host your container images in a highly available and scalable architecture, allowing you to deploy containers quickly and securely.

To deploy an application on Kubernetes using Amazon ECR, follow these steps:

- Create a new repository in Amazon ECR.

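- Authenticate your Docker client to the registry, for example by piping the output of “aws ecr get-login-password” into “docker login”.

- Build your Docker image, tag it with the repository URI, and push it to the Amazon ECR repository.

- Create a Kubernetes deployment that uses the full Amazon ECR image URI as its container image. Worker nodes can pull the image when their IAM role includes the AmazonEC2ContainerRegistryReadOnly policy.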

- Expose the deployment as a Kubernetes service.
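The deployment and service steps can be expressed as Kubernetes manifests. This Python sketch builds them as plain dictionaries and prints a JSON List that “kubectl apply -f” accepts. The account ID, Region, repository, tag, and port are hypothetical; the image URI follows ECR’s account.dkr.ecr.region.amazonaws.com/repository:tag format.

```python
import json


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """URI used both by `docker push` and by the pod spec."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def deployment_and_service(name: str, image: str, replicas: int, container_port: int) -> list[dict]:
    """A Deployment running the ECR image plus a ClusterIP Service in front of it."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": container_port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            "selector": labels,  # routes to pods carrying the Deployment's labels
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    return [deployment, service]


if __name__ == "__main__":
    image = ecr_image_uri("111122223333", "us-east-1", "my-app", "1.0.0")
    manifest = {"apiVersion": "v1", "kind": "List", "items": deployment_and_service("my-app", image, 2, 8080)}
    print(json.dumps(manifest, indent=2))
```

No image pull secret appears because EKS nodes authenticate to ECR through their IAM role. Changing the service type to LoadBalancer would make it reachable from outside the cluster.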

Using Amazon EBS for Persistent Storage

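Amazon Elastic Block Store (EBS) is a block-storage service that provides persistent storage for Amazon EC2 instances. With Amazon EBS, you can create storage volumes and attach them to your Amazon EC2 instances. Because a volume exists independently of the instance it is attached to, its data survives when the instance is stopped, and also when it is terminated unless the volume is set to be deleted on termination (the default for root volumes).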

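Kubernetes uses Amazon EBS through the Amazon EBS CSI driver, which can be installed as an Amazon EKS add-on. To use an existing Amazon EBS volume for persistent storage in Kubernetes (static provisioning), follow these steps:

- Install the Amazon EBS CSI driver on the cluster and give it an IAM role with permission to manage EBS volumes (AWS provides the AmazonEBSCSIDriverPolicy managed policy for this).

- Create an Amazon EBS volume in the same Availability Zone as the nodes that will use it, because a volume can only be attached to instances in its own zone.

- Create a Kubernetes persistent volume that references the Amazon EBS volume ID and a file system type. You do not need to format the volume yourself: the driver formats an empty volume with that file system the first time it is mounted.

- Create a Kubernetes persistent volume claim that binds to the persistent volume.

- Reference the persistent volume claim in your pod’s volumes and mount it into the container.

Alternatively, you can define a StorageClass that uses the Amazon EBS CSI driver and let each persistent volume claim create its own volume (dynamic provisioning), so you no longer create volumes and persistent volumes by hand.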
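For static provisioning, the persistent volume, claim, and pod can be written as manifests like the ones this Python sketch generates. It assumes the Amazon EBS CSI driver is installed and that your driver version accepts the standard topology.kubernetes.io/zone node label; the volume ID, zone, size, and names are hypothetical, and busybox stands in for a real application image.

```python
import json


def static_ebs_manifests(volume_id: str, zone: str, size: str, name: str) -> list[dict]:
    """PersistentVolume, PersistentVolumeClaim, and Pod for an existing EBS volume."""
    pv_name, pvc_name = f"{name}-pv", f"{name}-pvc"
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteOnce"],  # an EBS volume attaches to one node
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": "",
            # The CSI driver formats an empty volume with fsType on first mount.
            "csi": {"driver": "ebs.csi.aws.com", "volumeHandle": volume_id, "fsType": "ext4"},
            # EBS volumes live in a single Availability Zone.
            "nodeAffinity": {
                "required": {
                    "nodeSelectorTerms": [
                        {
                            "matchExpressions": [
                                {"key": "topology.kubernetes.io/zone", "operator": "In", "values": [zone]}
                            ]
                        }
                    ]
                }
            },
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": pvc_name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": "",  # "" disables dynamic provisioning for this claim
            "volumeName": pv_name,   # bind explicitly to the PV above
            "resources": {"requests": {"storage": size}},
        },
    }
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", "sleep 3600"],
                    "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                }
            ],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": pvc_name}}],
        },
    }
    return [pv, pvc, pod]


if __name__ == "__main__":
    items = static_ebs_manifests("vol-0123456789abcdef0", "us-east-1a", "10Gi", "data")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

The nodeAffinity block keeps the pod in the volume’s Availability Zone, and the Retain reclaim policy keeps the EBS volume when the claim is deleted.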

Real-World Use Case: Deploying a WordPress Application on Kubernetes

Let’s consider a real-world use case where we deploy a WordPress application on Kubernetes using Amazon ECR and Amazon EBS. Here are the steps:

- Create a new Amazon ECR repository for the WordPress application.

- Build the WordPress Docker image and push it to the Amazon ECR repository.

- Create a Kubernetes deployment that references the Amazon ECR repository.

- Expose the deployment as a Kubernetes service.

- Create an Amazon EBS volume for persistent storage in the same Availability Zone as your worker nodes.

- Create a Kubernetes persistent volume and persistent volume claim that reference the Amazon EBS volume; the Amazon EBS CSI driver formats the empty volume when it is first mounted.

- Mount the persistent volume claim on the WordPress pod.

By following these steps, you can deploy a WordPress application on Kubernetes using AWS services. This approach provides a scalable, reliable, and secure foundation for running containerized applications, enabling businesses to focus on development and innovation.
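As an alternative to creating the EBS volume by hand, the storage part of this use case can rely on dynamic provisioning. The Python sketch below generates a StorageClass, a claim, and a single-replica WordPress Deployment. It assumes the Amazon EBS CSI driver is installed; the StorageClass name and image URI are hypothetical, and the database settings that the official WordPress image reads from its WORDPRESS_DB_* environment variables are omitted, so a MySQL database (for example on Amazon RDS) would still need to be configured.

```python
import json


def wordpress_manifests(image: str, storage: str = "10Gi") -> list[dict]:
    """StorageClass, claim, and Deployment for a single WordPress pod."""
    storage_class = {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": "ebs-gp3"},
        "provisioner": "ebs.csi.aws.com",
        # Create the volume only once the pod is scheduled, in that pod's zone.
        "volumeBindingMode": "WaitForFirstConsumer",
        "parameters": {"type": "gp3", "csi.storage.k8s.io/fstype": "ext4"},
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": "wordpress-data"},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": "ebs-gp3",
            "resources": {"requests": {"storage": storage}},
        },
    }
    labels = {"app": "wordpress"}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "wordpress", "labels": labels},
        "spec": {
            "replicas": 1,
            # Stop the old pod before starting a new one, since the EBS
            # volume cannot be attached to two nodes at once.
            "strategy": {"type": "Recreate"},
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "wordpress",
                            "image": image,
                            "ports": [{"containerPort": 80}],
                            # The official WordPress image keeps its files here.
                            "volumeMounts": [{"name": "data", "mountPath": "/var/www/html"}],
                        }
                    ],
                    "volumes": [
                        {"name": "data", "persistentVolumeClaim": {"claimName": "wordpress-data"}}
                    ],
                },
            },
        },
    }
    return [storage_class, pvc, deployment]


if __name__ == "__main__":
    image = "111122223333.dkr.ecr.us-east-1.amazonaws.com/wordpress:latest"  # hypothetical
    items = wordpress_manifests(image)
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

WaitForFirstConsumer places the new volume in the Availability Zone where the pod is scheduled, and the Recreate strategy prevents a rolling update from trying to attach the same volume to two nodes.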

Securing Your Kubernetes Cluster on AWS

Security should be a top priority when deploying and managing containerized applications on Kubernetes. AWS provides several tools and services to help secure your Kubernetes cluster and protect your data. In this section, we will discuss the importance of securing your Kubernetes cluster on AWS and provide guidelines for implementing security best practices. We will cover topics like network policies, role-based access control, and encryption at rest and in transit.

Implementing Network Policies

Network policies are a way to control the flow of traffic between pods in a Kubernetes cluster. By implementing network policies, you can restrict traffic to only the necessary connections, reducing the attack surface of your cluster. Kubernetes NetworkPolicy objects are enforced by the cluster’s network plugin: on Amazon EKS, the Amazon VPC CNI plugin can enforce them once its network policy support is enabled, or you can install an open-source policy engine such as Calico. Amazon VPC security groups and network ACLs complement these policies by filtering traffic at the instance and subnet level.
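As an illustration, the Python sketch below generates two NetworkPolicy objects: a default deny for inbound traffic in a namespace, and an exception that lets frontend pods reach api pods on one port. The namespace, labels, and port are hypothetical, and the policies only take effect if the cluster’s network plugin enforces them (for example the Amazon VPC CNI with network policy support enabled, or Calico).

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # Empty podSelector = all pods; Ingress listed with no rules = deny all.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_from(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow pods labelled app=<source_app> to reach app=<target_app> on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policies = [default_deny_ingress("shop"), allow_from("shop", "api", "frontend", 8080)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

Because network policies are additive, the second policy opens only the listed path while all other inbound traffic stays blocked by the first.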

Configuring Role-Based Access Control

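Role-based access control (RBAC) is a method of controlling access to resources based on the roles assigned to users or groups. By configuring RBAC, you can ensure that only authorized users have access to your Kubernetes cluster and its resources. On Amazon EKS, two layers work together: AWS Identity and Access Management (IAM) authenticates users and roles, and Kubernetes RBAC decides what they may do inside the cluster. You map IAM principals to Kubernetes users or groups with EKS access entries (or the older aws-auth ConfigMap), then grant those groups permissions with Roles and RoleBindings.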
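On the Kubernetes side, permissions are granted with a Role and a RoleBinding. This Python sketch gives a group read-only access to pods and their logs in one namespace. The namespace and the group name dev-readers are hypothetical; the group stands for the Kubernetes group that an IAM role would be mapped to, for example through the kubernetesGroups setting of an EKS access entry.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str) -> dict:
    """Namespaced Role allowing read-only access to pods and their logs."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group (pods, services, ...)
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],  # read-only verbs
            }
        ],
    }


def bind_role_to_group(role: dict, group: str) -> dict:
    """RoleBinding that grants the Role to every member of a Kubernetes group."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-{group}", "namespace": meta["namespace"]},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_API},
    }


if __name__ == "__main__":
    role = pod_reader_role("shop")
    binding = bind_role_to_group(role, "dev-readers")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2))
```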

Encrypting Data at Rest and in Transit

Encryption is a critical component of securing your Kubernetes cluster on AWS. By encrypting data at rest and in transit, you can protect your data from unauthorized access and support compliance with data privacy regulations. AWS provides several tools for encrypting data, including Amazon EBS encryption for volumes, AWS Key Management Service (KMS) for managing keys (which Amazon EKS can also use to encrypt Kubernetes Secrets), and AWS Certificate Manager (ACM) for the TLS certificates used by load balancers.
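Two of these controls can be expressed as short requests. The Python sketch below builds the keyword arguments for the associate_encryption_config call of the boto3 EKS client, which turns on KMS envelope encryption of Kubernetes Secrets, and a StorageClass whose EBS volumes the CSI driver creates encrypted with a chosen KMS key. The cluster name and key ARN are hypothetical; sending the request requires boto3 and credentials, and Secrets encryption cannot be turned off again once it is enabled on a cluster.

```python
import json


def secrets_encryption_request(cluster_name: str, kms_key_arn: str) -> dict:
    """Keyword arguments for boto3's EKS associate_encryption_config call."""
    if not (kms_key_arn.startswith("arn:") and ":kms:" in kms_key_arn):
        raise ValueError("expected a KMS key ARN")
    return {
        "clusterName": cluster_name,
        "encryptionConfig": [{"resources": ["secrets"], "provider": {"keyArn": kms_key_arn}}],
    }


def encrypted_ebs_storage_class(name: str, kms_key_arn: str) -> dict:
    """StorageClass whose dynamically created EBS volumes are encrypted with the given key."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "ebs.csi.aws.com",
        "volumeBindingMode": "WaitForFirstConsumer",
        # StorageClass parameters must be strings, hence "true" rather than True.
        "parameters": {"type": "gp3", "encrypted": "true", "kmsKeyId": kms_key_arn},
    }


def enable_secrets_encryption(request: dict) -> dict:
    """Send the request to AWS; requires boto3 and credentials."""
    import boto3  # imported here so the builders work without boto3

    return boto3.client("eks").associate_encryption_config(**request)


if __name__ == "__main__":
    key_arn = "arn:aws:kms:us-east-1:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab"
    print(json.dumps(secrets_encryption_request("my-cluster", key_arn), indent=2))
    print(json.dumps(encrypted_ebs_storage_class("ebs-encrypted", key_arn), indent=2))
```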

Real-World Use Case: Securing a Kubernetes Cluster on AWS

Let’s consider a real-world use case where we secure a Kubernetes cluster on AWS. Here are the steps:

- Create a new Amazon VPC with public and private subnets, and place the worker nodes in the private subnets.

- Configure security groups to allow only necessary traffic to the Kubernetes cluster.

- Enable encryption at rest for Amazon EBS volumes and snapshots.

- Configure Kubernetes RBAC to control access to cluster resources.

- Implement network policies to restrict traffic between pods.

- Enable encryption in transit by terminating TLS at a load balancer that uses a certificate from AWS Certificate Manager (ACM).

By following these steps, you can secure your Kubernetes cluster on AWS and protect your data from unauthorized access. This approach provides a secure and compliant foundation for running containerized applications, enabling businesses to focus on development and innovation.
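For the TLS step in this use case, one common pattern is an Ingress handled by the AWS Load Balancer Controller, which creates an Application Load Balancer that terminates TLS with an ACM certificate. The Python sketch below builds such an Ingress. It assumes the controller is installed with the alb ingress class; the hostname, service name, and certificate ARN are hypothetical.

```python
import json


def tls_ingress(name: str, service_name: str, host: str, certificate_arn: str) -> dict:
    """Ingress for the AWS Load Balancer Controller with HTTPS on port 443."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "ip",  # route straight to pod IPs
                "alb.ingress.kubernetes.io/listen-ports": json.dumps([{"HTTPS": 443}]),
                # The ALB presents this ACM certificate to clients.
                "alb.ingress.kubernetes.io/certificate-arn": certificate_arn,
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {"service": {"name": service_name, "port": {"number": 80}}},
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    cert = "arn:aws:acm:us-east-1:111122223333:certificate/abcd1234-ab12-cd34-ef56-abcdef123456"
    print(json.dumps(tls_ingress("shop", "frontend", "shop.example.com", cert), indent=2))
```

In this sketch the hop from the load balancer to the pods is plain HTTP; encrypting that hop as well requires TLS on the pods themselves.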

Monitoring and Troubleshooting Kubernetes on AWS

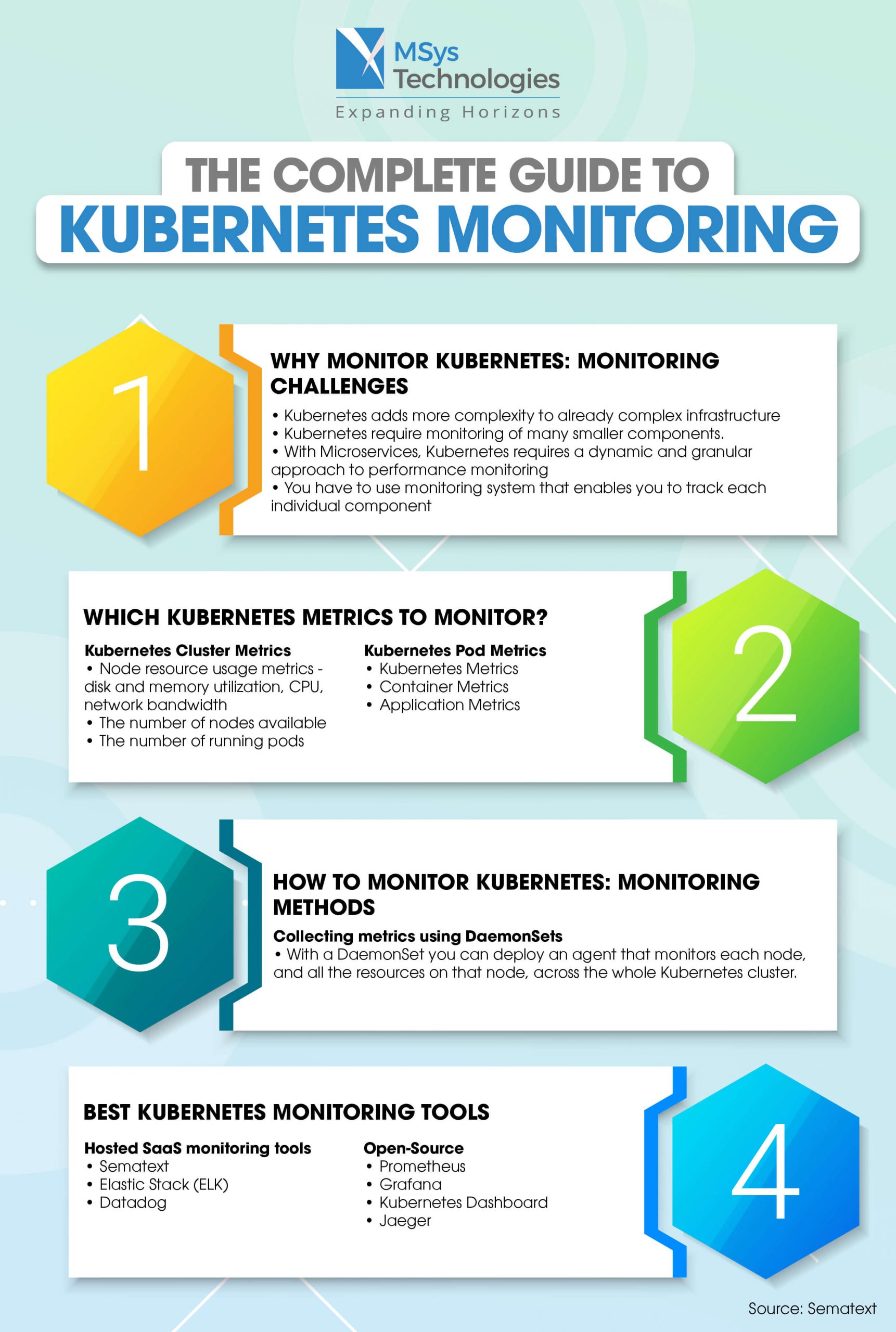

Monitoring and troubleshooting are critical components of managing a Kubernetes cluster on AWS. By monitoring your cluster, you can identify and resolve issues before they impact your applications. In this section, we will explain how to monitor and troubleshoot Kubernetes on AWS, including the use of tools like Amazon CloudWatch, Prometheus, and Grafana. We will also provide tips for identifying and resolving common issues, as well as strategies for continuous improvement.

Using Amazon CloudWatch for Monitoring

Amazon CloudWatch is a monitoring and observability service that provides visibility into your AWS resources and applications. With CloudWatch Container Insights enabled, you can monitor your Kubernetes cluster and its resources, including nodes, pods, and containers. CloudWatch provides metrics, logs, events, and alarms that you can use to detect and troubleshoot issues in your cluster.
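As an example of acting on these metrics, the Python sketch below builds the keyword arguments for a CloudWatch alarm on average node CPU utilization, using the node_cpu_utilization metric that Container Insights publishes in the ContainerInsights namespace. It assumes Container Insights is enabled for the cluster; the cluster name, threshold, and SNS topic ARN are hypothetical, and the request would be sent with the put_metric_alarm call of the boto3 CloudWatch client.

```python
import json
from typing import Optional


def node_cpu_alarm(cluster_name: str, threshold_percent: float,
                   sns_topic_arn: Optional[str] = None) -> dict:
    """Keyword arguments for boto3's CloudWatch put_metric_alarm call."""
    request = {
        "AlarmName": f"{cluster_name}-node-cpu-high",
        "Namespace": "ContainerInsights",
        "MetricName": "node_cpu_utilization",
        "Dimensions": [{"Name": "ClusterName", "Value": cluster_name}],
        "Statistic": "Average",
        "Period": 300,           # evaluate 5-minute averages...
        "EvaluationPeriods": 3,  # ...and alarm after 3 consecutive breaches
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
    }
    if sns_topic_arn:
        request["AlarmActions"] = [sns_topic_arn]  # notify when the alarm fires
    return request


def create_alarm(request: dict) -> None:
    """Send the request to AWS; requires boto3 and credentials."""
    import boto3  # imported here so the builder works without boto3

    boto3.client("cloudwatch").put_metric_alarm(**request)


if __name__ == "__main__":
    print(json.dumps(node_cpu_alarm("my-cluster", 80.0), indent=2))
```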

Using Prometheus and Grafana for Monitoring and Visualization

Prometheus is an open-source monitoring system that collects metrics from Kubernetes and other systems. By using Prometheus, you can monitor the health and performance of your Kubernetes cluster and its resources. Grafana is a visualization tool that works with Prometheus to provide dashboards and visualizations of your Kubernetes metrics.
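Prometheus also exposes an HTTP API that scripts can query directly. The Python sketch below, using only the standard library, sends an instant PromQL query to the /api/v1/query endpoint and turns the returned vector into a dictionary. It assumes Prometheus is reachable at a hypothetical local address (for example through “kubectl port-forward”) and scrapes the cAdvisor container metrics, as common Prometheus setups for Kubernetes do.

```python
import json
import urllib.parse
import urllib.request

# CPU cores used per namespace, averaged over 5 minutes (cAdvisor metric).
CPU_BY_NAMESPACE = 'sum by (namespace) (rate(container_cpu_usage_seconds_total{container!=""}[5m]))'


def query_url(base_url: str, promql: str) -> str:
    """URL for an instant query against the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(body: dict, label: str) -> dict[str, float]:
    """Map each series' `label` value to its sample value."""
    if body.get("status") != "success":
        raise RuntimeError(f"query failed: {body.get('error')}")
    # Each result carries "value": [timestamp, "value-as-string"].
    return {s["metric"].get(label, ""): float(s["value"][1]) for s in body["data"]["result"]}


def instant_query(base_url: str, promql: str, label: str) -> dict[str, float]:
    """Run the query and return {label value: sample value}."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as resp:
        return parse_vector(json.load(resp), label)
```

For example, instant_query("http://localhost:9090", CPU_BY_NAMESPACE, "namespace") returns the CPU cores used per namespace; a Grafana panel can be built on the same PromQL expression.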

Troubleshooting Common Issues in Kubernetes on AWS

Common issues in Kubernetes on AWS include resource contention, network issues, and application failures. To troubleshoot these issues, you can use tools like kubectl (for example “kubectl describe”, “kubectl logs”, and “kubectl get events”), the AWS CLI, and the Kubernetes dashboard, which must be installed separately on Amazon EKS. It is also essential to have a solid understanding of Kubernetes architecture and AWS services to effectively troubleshoot issues.
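A first pass over kubectl output can be automated. The Python sketch below reads the JSON that “kubectl get pods --all-namespaces -o json” prints and lists pods that are stuck, crash-looping, or restarting often. The restart threshold and the set of waiting reasons treated as problems are assumptions to tune for your cluster.

```python
import json
import subprocess

# Waiting reasons that usually point at an image, config, or application problem.
PROBLEM_REASONS = {"CrashLoopBackOff", "ImagePullBackOff", "ErrImagePull", "CreateContainerConfigError"}


def unhealthy_pods(pod_list: dict, restart_threshold: int = 3) -> list[tuple[str, str, str]]:
    """Return (namespace, pod, finding) tuples for pods that look unhealthy."""
    findings = []
    for pod in pod_list.get("items", []):
        meta, status = pod["metadata"], pod.get("status", {})
        ns, name = meta.get("namespace", "default"), meta["name"]
        phase = status.get("phase", "Unknown")
        if phase in ("Pending", "Failed", "Unknown"):
            findings.append((ns, name, f"phase={phase}"))
        for cs in status.get("containerStatuses", []):
            waiting = cs.get("state", {}).get("waiting", {})
            if waiting.get("reason") in PROBLEM_REASONS:
                findings.append((ns, name, f"{cs['name']}: {waiting['reason']}"))
            elif cs.get("restartCount", 0) >= restart_threshold:
                findings.append((ns, name, f"{cs['name']}: {cs['restartCount']} restarts"))
    return findings


def fetch_pods() -> dict:
    """Run kubectl against the current kubeconfig context and parse its JSON output."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "--all-namespaces", "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

Against a live cluster, unhealthy_pods(fetch_pods()) gives a short list of pods to inspect further with “kubectl describe pod” and “kubectl logs --previous”.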

Strategies for Continuous Improvement

To ensure the long-term success of your Kubernetes deployment on AWS, it is essential to have strategies for continuous improvement. This includes regularly reviewing your monitoring and troubleshooting processes, implementing new tools and technologies, and continuously training your team on Kubernetes and AWS best practices.

Real-World Use Case: Monitoring and Troubleshooting a Kubernetes Cluster on AWS

Let’s consider a real-world use case where we monitor and troubleshoot a Kubernetes cluster on AWS. Here are the steps:

- Set up Amazon CloudWatch to monitor your Kubernetes cluster and its resources.

- Install and configure Prometheus and Grafana to visualize your Kubernetes metrics.

- Regularly review your monitoring data to identify and resolve issues before they impact your applications.

- Use tools like kubectl, AWS CLI, and the Kubernetes dashboard to troubleshoot issues in your cluster.

- Continuously review and improve your monitoring and troubleshooting processes to ensure the long-term success of your Kubernetes deployment on AWS.

By following these steps, you can effectively monitor and troubleshoot your Kubernetes cluster on AWS. This approach provides a solid foundation for managing your containerized applications, enabling you to focus on development and innovation.

Optimizing Costs and Performance for Kubernetes on AWS

Optimizing costs and performance is crucial for a successful Kubernetes deployment on AWS. In this section, we will discuss strategies for optimizing costs and performance, such as rightsizing instances, using spot instances, and implementing autoscaling. We will also emphasize the importance of ongoing cost management and performance tuning for a successful Kubernetes deployment on AWS.

Rightsizing Instances

Rightsizing instances means selecting the right instance type and size for your workload. AWS provides a wide range of instance types and sizes, each with different compute, memory, and network capabilities. By selecting the right instance type and size, you can optimize costs and performance for your Kubernetes workload.
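A simple way to reason about instance size is to check how well a pod’s CPU and memory requests pack onto each candidate instance. The Python sketch below compares three instance types with the same vCPU count but different memory (c5.large: 2 vCPU and 4 GiB, m5.large: 2 vCPU and 8 GiB, r5.large: 2 vCPU and 16 GiB) and picks the one that strands the least capacity. It deliberately ignores the capacity reserved for the kubelet, system daemons, and DaemonSets, as well as per-node pod limits, so real numbers will be lower.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceShape:
    name: str
    vcpu: float
    memory_gib: float


CANDIDATES = [
    InstanceShape("c5.large", 2, 4),
    InstanceShape("m5.large", 2, 8),
    InstanceShape("r5.large", 2, 16),
]


def packing(shape: InstanceShape, pod_vcpu: float, pod_memory_gib: float) -> tuple[int, float, float]:
    """Pods that fit on one node, and the resulting CPU and memory utilization."""
    pods = min(math.floor(shape.vcpu / pod_vcpu), math.floor(shape.memory_gib / pod_memory_gib))
    return pods, pods * pod_vcpu / shape.vcpu, pods * pod_memory_gib / shape.memory_gib


def best_fit(pod_vcpu: float, pod_memory_gib: float, shapes=CANDIDATES) -> InstanceShape:
    """Pick the shape whose least-used resource is used the most (ties go to the first listed)."""
    def score(shape: InstanceShape) -> float:
        _, cpu_util, mem_util = packing(shape, pod_vcpu, pod_memory_gib)
        return min(cpu_util, mem_util)

    return max(shapes, key=score)
```

For pods requesting 0.5 vCPU and 1 GiB, best_fit returns c5.large, because the same four pods would leave half of an m5.large’s memory unused.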

Using Spot Instances

Spot instances are spare AWS computing capacity available at up to a 90% discount compared to On-Demand prices. By using spot instances for your Kubernetes workload, you can significantly reduce costs. However, AWS can reclaim spot instances with only a two-minute interruption notice, so they are best suited for workloads that can tolerate interruptions, such as stateless services with several replicas or batch jobs that can be retried.
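To keep only interruption-tolerant workloads on spot capacity, pods can be steered with a node selector. EKS managed node groups label their nodes with eks.amazonaws.com/capacityType (SPOT or ON_DEMAND), which the Python sketch below uses for a hypothetical batch worker. It also keeps the pod’s shutdown grace period below the two-minute notice, assuming something drains the node when a notice arrives (for example the AWS Node Termination Handler on self-managed nodes); the name and image URI are hypothetical.

```python
import json

SPOT_NOTICE_SECONDS = 120  # EC2 sends a two-minute Spot interruption notice


def spot_worker_deployment(name: str, image: str, replicas: int, grace_seconds: int = 60) -> dict:
    """Deployment pinned to Spot nodes of EKS managed node groups."""
    if grace_seconds >= SPOT_NOTICE_SECONDS:
        raise ValueError("graceful shutdown must finish within the Spot notice")
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Label set by EKS managed node groups on Spot instances.
                    "nodeSelector": {"eks.amazonaws.com/capacityType": "SPOT"},
                    "terminationGracePeriodSeconds": grace_seconds,
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    image = "111122223333.dkr.ecr.us-east-1.amazonaws.com/batch-worker:1.0.0"  # hypothetical
    print(json.dumps(spot_worker_deployment("batch-worker", image, 3), indent=2))
```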

Implementing Autoscaling

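Autoscaling automatically adjusts capacity based on demand, and in Kubernetes it works at two levels. The Horizontal Pod Autoscaler changes the number of pod replicas based on metrics such as CPU utilization, while a node autoscaler such as the Cluster Autoscaler or Karpenter adds or removes EC2 instances so that every pod has room to run. By implementing both, you can ensure that your Kubernetes cluster has the right resources to handle the workload, reducing costs and improving performance.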
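At the pod level, the Horizontal Pod Autoscaler computes the desired replica count as ceil(currentReplicas * currentMetric / targetMetric), and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below implements that core rule, clamped to the minimum and maximum replica counts, and builds a matching autoscaling/v2 manifest that targets average CPU utilization. It ignores details the real controller handles, such as pods that are not ready or are missing metrics, and the CPU target assumes metrics-server is installed; the deployment name and numbers are hypothetical.

```python
import json
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """Core HPA rule: scale by the metric ratio, unless the ratio is within tolerance of 1."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: leave the replica count alone
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


def cpu_hpa(deployment: str, min_replicas: int, max_replicas: int, target_cpu_percent: int) -> dict:
    """autoscaling/v2 HorizontalPodAutoscaler targeting average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {"type": "Utilization", "averageUtilization": target_cpu_percent},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(cpu_hpa("my-app", 2, 10, 60), indent=2))
```

With four replicas averaging 90% CPU against a 60% target, desired_replicas(4, 90, 60, 2, 10) returns 6; the node autoscaler then supplies capacity for the extra pods.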

Continuous Cost Management and Performance Tuning

Optimizing costs and performance is an ongoing process. It is essential to continuously monitor your Kubernetes cluster and its resources, identify areas for improvement, and implement changes. This includes regularly reviewing your cost and performance data, testing new instance types and sizes, and implementing new cost optimization strategies.

Real-World Use Case: Optimizing Costs and Performance for Kubernetes on AWS

Let’s consider a real-world use case where we optimize costs and performance for a Kubernetes cluster on AWS. Here are the steps:

- Select the right instance type and size for your workload.

- Use spot instances for workloads that can tolerate interruptions.

- Implement autoscaling to ensure that your cluster has the right resources to handle the workload.

- Continuously monitor your cluster and its resources, identify areas for improvement, and implement changes.

- Regularly review your cost and performance data, test new instance types and sizes, and implement new cost optimization strategies.

By following these steps, you can optimize costs and performance for your Kubernetes deployment on AWS. This approach provides a solid foundation for managing your containerized applications, enabling you to focus on development and innovation while reducing costs and improving performance.

Real-World Examples of Hosting Kubernetes on AWS

Hosting Kubernetes on AWS can provide numerous benefits for businesses, including scalability, reliability, and a wide range of services. In this section, we look at businesses that host Kubernetes on AWS. These examples can serve as inspiration and guidance for organizations looking to deploy Kubernetes on AWS.

Example 1: Netflix

Netflix is a streaming service that uses containerization and Kubernetes to manage its microservices architecture. The company uses AWS as its infrastructure provider and has been able to achieve high availability, scalability, and reliability by hosting Kubernetes on AWS.

Example 2: Airbnb

Airbnb is a vacation rental marketplace that uses Kubernetes to manage its containerized applications. The company uses AWS as its infrastructure provider and has been able to achieve high availability, scalability, and reliability by hosting Kubernetes on AWS.

Example 3: Nordstrom

Nordstrom is a retail company that uses Kubernetes to manage its containerized applications. The company uses AWS as its infrastructure provider and has been able to achieve high availability, scalability, and reliability by hosting Kubernetes on AWS.

Best Practices for Hosting Kubernetes on AWS

Drawing on these examples and the practices covered earlier in this guide, here are some best practices for hosting Kubernetes on AWS:

- Properly plan and prepare for the deployment process.

- Select the right instance type and size for your workload.

- Use spot instances for workloads that can tolerate interruptions.

- Implement autoscaling to ensure that your cluster has the right resources to handle the workload.

- Continuously monitor your cluster and its resources, identify areas for improvement, and implement changes.

- Regularly review your cost and performance data, test new instance types and sizes, and implement new cost optimization strategies.

By following these best practices, organizations can achieve a successful Kubernetes deployment on AWS, just like Netflix, Airbnb, and Nordstrom.