Getting Started with Pandas for Data Manipulation

Pandas is a cornerstone Python library for data analysis. It provides powerful, flexible tools that make data manipulation efficient. At its core are two data structures: Series and DataFrame. A Series is a one-dimensional labeled array that can hold any data type. A DataFrame represents tabular data, much like a spreadsheet, with rows and columns, where each column can hold a different data type. Because both structures keep data labeled and organized, complex operations can often be expressed in a few readable lines.
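To make the two structures concrete, here is a minimal sketch. The fruit names, prices, and column names are invented for illustration and are not part of any real dataset.

```python
import pandas as pd

# A Series: a one-dimensional labeled array (labels are illustrative).
prices = pd.Series([1.50, 0.25, 3.00], index=["apple", "banana", "cherry"])

# A DataFrame: tabular data whose columns can hold different data types.
fruit = pd.DataFrame(
    {
        "name": ["apple", "banana", "cherry"],
        "price": [1.50, 0.25, 3.00],
        "in_stock": [True, False, True],
    }
)

print(prices["banana"])  # look up a value by its label
print(fruit.dtypes)      # each column reports its own data type
```

Selecting a single column of a DataFrame, for example fruit["price"], gives you back a Series, which is one way to see how the two structures relate.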

Pandas is well suited to hands-on data analysis because it can store and manage data as well as transform and analyze it. Whether you are working with small datasets or large, intricate ones, Pandas simplifies common workflows, from filtering and cleaning data to applying statistical functions and performing aggregations, with a concise and intuitive syntax. Beginners can usually pick up the essentials quickly, while seasoned analysts appreciate the robustness and breadth of functionality. This introduction sets the stage; the sections that follow move to the practical side of Pandas, with a focus on direct, hands-on interaction.

This tutorial guides you through the essential aspects of Pandas, with a strong emphasis on learning by doing. The practical examples focus on applying Pandas to real-world data manipulation tasks, so that you can approach and analyze data independently. We begin with importing the library and loading data so that you are ready to start coding. Engage actively and experiment as you go; each step builds your Pandas skills and your broader data science skills.

How to Load and Inspect Your Data with Pandas

This section focuses on loading data into Pandas DataFrames. Knowing how to load the common data formats is the first practical step in any analysis. Start by importing the library with the statement import pandas as pd. Once imported, Pandas provides functions for reading data from different sources. For CSV files, use read_csv(): df = pd.read_csv('file.csv'), where 'file.csv' is the path to your file. For Excel files, use read_excel(): df = pd.read_excel('file.xlsx'). For JSON files, use read_json(): df = pd.read_json('file.json'). Each of these calls returns a DataFrame, the primary data structure in Pandas.
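The loading calls above can be sketched without any files on disk by reading from in-memory text. The column names and values here are made up for illustration; with real data you would pass a file path instead of a StringIO object. Excel loading is left out of the sketch because read_excel() for .xlsx files needs an extra engine such as openpyxl to be installed.

```python
import io

import pandas as pd

# Illustrative CSV content; in practice this would live in a file such as 'file.csv'.
csv_text = "product,quantity\nwidget,3\ngadget,5\n"
df_csv = pd.read_csv(io.StringIO(csv_text))

# The same records as JSON (a list of objects, one per row).
json_text = (
    '[{"product": "widget", "quantity": 3},'
    ' {"product": "gadget", "quantity": 5}]'
)
df_json = pd.read_json(io.StringIO(json_text))

print(df_csv)
print(df_json)
```

Both calls produce a DataFrame with the same two columns, which shows that the source format stops mattering once the data is loaded.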

After loading data, the next step is to understand its structure and content. The .head() method displays the first few rows of the DataFrame; df.head() shows the first 5 rows by default. The .info() method gives a concise summary: the data type of each column, the number of non-null values, and memory usage. Call it with df.info(). For statistical insight, use df.describe(), which calculates descriptive statistics for numerical columns, including the count, mean, standard deviation, minimum, quartiles, and maximum. Together these three methods give you a clear first picture of the data before any analysis begins.
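A short sketch of the three inspection methods on a small, invented DataFrame (the product names and numbers are placeholders):

```python
import pandas as pd

# Illustrative data with one missing price so that .info() has something to report.
df = pd.DataFrame(
    {
        "product": ["a", "b", "c", "d", "e", "f", "g"],
        "quantity": [1, 2, 3, 4, 5, 6, 7],
        "price": [9.5, 3.0, None, 4.25, 8.0, 1.5, 2.0],
    }
)

first_rows = df.head()   # first 5 rows by default
df.info()                # prints dtypes, non-null counts, memory usage
summary = df.describe()  # statistics for the numerical columns only

print(first_rows)
print(summary)
```

Note that describe() skips the text column here, and the count it reports for price is lower than for quantity because of the missing value.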

With these loading and inspection methods, you can start an analysis on solid footing. Knowing how to use .head(), .info(), and .describe() lets you quickly grasp the structure and content of a dataset, and being able to load different file types sets you up for the next stages of data manipulation and exploration.

Cleaning and Transforming Data Using Pandas

Data cleaning is a critical step in data analysis because it determines the quality of your data and the reliability of everything built on it. Pandas provides tools for the most common cleaning challenges. Missing values can be either filled or removed: the fillna() method replaces missing values with values you specify, and the dropna() method removes rows or columns that contain them. Duplicate rows are another common problem; the drop_duplicates() method removes them, keeping the first occurrence by default, so that your analysis is not biased by repeated information. Inconsistencies, such as text in mixed formats or numbers outside an expected range, should be standardized, since consistent data makes accurate analysis possible. Finally, astype() converts a column to a data type that better represents its contents.
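The cleaning methods above can be sketched as follows. The city data is invented, and filling missing ratings with the column mean is just one possible choice, not a recommendation for every dataset.

```python
import numpy as np
import pandas as pd

# Illustrative raw data: one duplicated row, two missing ratings,
# and a numeric column that was read in as text.
raw = pd.DataFrame(
    {
        "city": ["Oslo", "Oslo", "Lima", "Pune", "Kyiv"],
        "visits": ["3", "3", "5", "2", "4"],
        "rating": [4.5, 4.5, np.nan, 3.0, np.nan],
    }
)

deduped = raw.drop_duplicates()  # removes the repeated Oslo row

# Option 1: drop every row that still has a missing value.
dropped = deduped.dropna()

# Option 2: fill missing ratings with the mean of the known ratings.
filled = deduped.fillna({"rating": deduped["rating"].mean()})

# Convert the text column to integers so it can be used in calculations.
typed = filled.astype({"visits": int})

print(typed)
```

Each of these methods returns a new DataFrame and leaves the original untouched, which is why the results are assigned to new names.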

Data transformation is just as important, and Pandas offers flexible ways to modify data. You can apply functions to columns, such as mathematical operations or string manipulations; for example, apply() or map() can change the values of a specific column. You can also create new columns from existing ones, for instance by calculating with two columns or by extracting a substring from a text column. Such techniques enrich the information in the DataFrame and prepare it for more advanced analysis. Real-world datasets often contain inconsistencies, and handling missing data, removing duplicates, correcting inconsistencies, and reshaping values are the core steps for addressing them. Mastering these techniques will significantly improve the quality of your analysis.
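Here is a minimal sketch of those transformations. The order data, the status codes, and the threshold of 20 are all hypothetical.

```python
import pandas as pd

orders = pd.DataFrame(
    {
        "order_id": ["A-001", "B-002", "A-003"],
        "unit_price": [10.0, 4.0, 2.5],
        "units": [2, 5, 4],
        "status": ["s", "p", "s"],
    }
)

# New column from a calculation between two existing columns.
orders["total"] = orders["unit_price"] * orders["units"]

# New column from a substring of an existing text column.
orders["prefix"] = orders["order_id"].str[:1]

# map(): translate each value through a lookup table.
orders["status_name"] = orders["status"].map({"s": "shipped", "p": "pending"})

# apply(): run an arbitrary function on each value.
orders["size"] = orders["total"].apply(lambda t: "large" if t >= 20 else "small")

print(orders)
```

Where a vectorized operation exists, as with the multiplication and the .str accessor, it is usually preferable to apply(), which calls a Python function once per value.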

Correct data types are crucial for efficient analysis. Pandas infers a suitable data type for text and numeric data when it loads a file. However, sometimes you need to specify the type explicitly. For example, you might convert a string of digits to a numeric type to perform calculations, or convert a date stored as a string to datetime to perform time-based operations. Getting the types right is fundamental to generating reliable insights.
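The two conversions mentioned above might look like this. The readings are invented, and coercing unparseable values to missing (errors="coerce") is an assumption about how you want bad entries handled; the default would raise an error instead.

```python
import pandas as pd

readings = pd.DataFrame(
    {
        "amount": ["10", "20.5", "n/a"],  # numbers stored as text
        "day": ["2024-01-05", "2024-02-10", "2024-03-15"],  # dates stored as text
    }
)

# Text to numbers; values that cannot be parsed become NaN.
readings["amount"] = pd.to_numeric(readings["amount"], errors="coerce")

# Text to datetime, which unlocks the .dt accessor for time-based work.
readings["day"] = pd.to_datetime(readings["day"])

print(readings.dtypes)
print(readings["day"].dt.month)
```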

Data Selection and Filtering: Extracting Relevant Information

Pandas offers powerful tools for selecting specific data, which is crucial for focused analysis. This section explores boolean indexing, loc, and iloc, which let you select rows and columns based on conditions, labels, or positions so that you can extract exactly the information you need.

Boolean indexing filters rows by a condition. A comparison such as "column value greater than 10" produces a boolean mask, a Series of True and False values, and indexing the DataFrame with that mask keeps only the rows where it is True. The loc indexer selects by label: you specify rows and columns by their names or index labels. The iloc indexer, in contrast, selects by integer position. Both are used with square brackets rather than called like methods, and both give you precise control over which rows and columns you get back.

Now consider filtering on more than one criterion, for example to examine a specific group or time period. You can combine conditions with the logical operators & (and) and | (or), wrapping each condition in parentheses because these operators bind more tightly than comparisons. For example, you can select the rows where the value in column A is greater than 5 and column B equals "example". Understanding the logic behind each selection lets you focus on the relevant portion of your dataset.
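The selection techniques from this section can be sketched on a tiny DataFrame. The column names A and B follow the example in the text; the row labels and values are invented.

```python
import pandas as pd

df = pd.DataFrame(
    {"A": [3, 8, 12, 6], "B": ["example", "example", "other", "other"]},
    index=["w", "x", "y", "z"],
)

# Boolean indexing: the comparison builds a mask, the mask selects rows.
over_ten = df[df["A"] > 10]

# loc selects by label, iloc by integer position.
by_label = df.loc["x", "B"]
by_position = df.iloc[0, 0]

# Combined conditions: each one needs its own parentheses.
both = df[(df["A"] > 5) & (df["B"] == "example")]
either = df[(df["A"] > 10) | (df["B"] == "example")]

print(over_ten)
print(both)
print(either)
```

Leaving out the parentheses around each condition typically raises an error, because Python would evaluate & before the comparisons.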

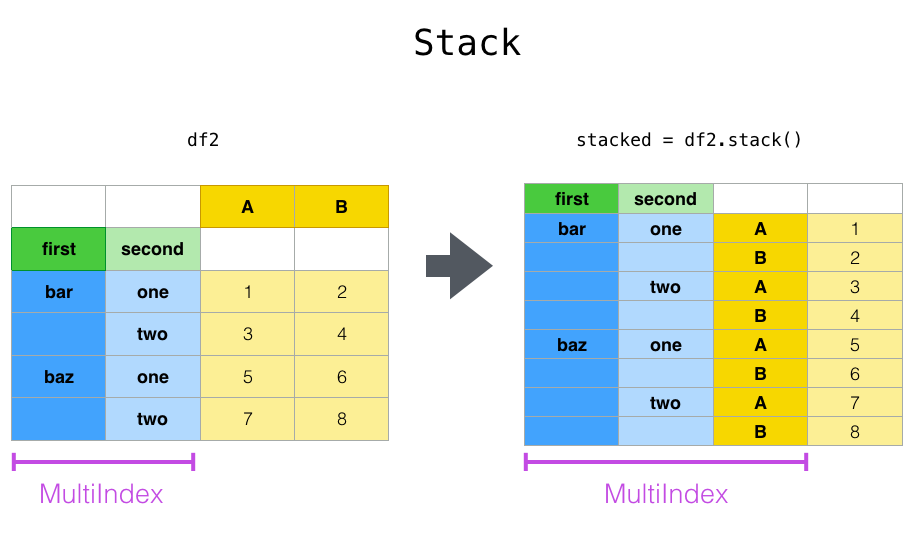

Performing Data Aggregations and Grouping

Pandas provides powerful tools for aggregating data and deriving meaningful insights from it. The .groupby() method is central to this process: it groups rows based on the values in one or more columns. After grouping, aggregation functions such as .sum(), .mean(), .min(), .max(), and .count() can be applied to each group, which gives you an easy way to summarize large datasets. Consider a dataset of sales data. Grouping by 'product category' and calculating total sales with .sum() reveals the best-performing categories. Similarly, grouping by 'customer segment' and averaging the purchase value shows which segment spends the most. These operations highlight trends and patterns within specific groups that raw numbers alone do not show.

Combining .groupby() with aggregation functions enables more sophisticated exploration. For instance, you could group a dataset of student test scores by 'subject' and calculate the average score for each subject with .mean(), revealing which subjects students perform best in. Or you could group website traffic by 'source' and count the visits per source with .count() to see where the traffic is coming from. You can also apply several aggregations at once: computing both the mean and the standard deviation for each group shows the typical value and the variability within the group side by side. This kind of analysis helps identify trends that are easy to overlook when looking only at overall figures.
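A minimal sketch of grouping and aggregating, using invented sales figures. The .agg() method is used here as one way to request several aggregations in a single call.

```python
import pandas as pd

sales = pd.DataFrame(
    {
        "category": ["toys", "toys", "books", "books", "books"],
        "amount": [10.0, 30.0, 5.0, 7.0, 9.0],
    }
)

# One aggregation: total sales per category.
totals = sales.groupby("category")["amount"].sum()

# Several aggregations at once: average, spread, and number of rows per group.
stats = sales.groupby("category")["amount"].agg(["mean", "std", "count"])

print(totals)
print(stats)
```

The result of the second call is a DataFrame with one row per category and one column per aggregation, which makes the groups easy to compare.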

These methods make targeted analysis possible, and grouping the same data by different keys can bring different results to light. Grouping sales data first by date and then by product category, for example, shows how sales trends vary by category over time. This level of granularity provides valuable input for decision-making. The .groupby() method also lets you run calculations and comparisons across different segments of the dataset, which leads to a more complete understanding of it.
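Grouping by two keys, as in the date-then-category example, could be sketched like this. The data is invented, and reshaping with unstack() is an optional extra step that is not mentioned in the text; it is included only because it makes the result easier to read.

```python
import pandas as pd

sales = pd.DataFrame(
    {
        "date": pd.to_datetime(
            ["2024-01-01", "2024-01-01", "2024-01-02", "2024-01-02"]
        ),
        "category": ["toys", "books", "toys", "toys"],
        "amount": [10.0, 5.0, 20.0, 1.0],
    }
)

# Group by date first, then by category within each date.
by_day_and_cat = sales.groupby(["date", "category"])["amount"].sum()

# Optional: pivot categories into columns, filling absent combinations with 0.
table = by_day_and_cat.unstack(fill_value=0)

print(by_day_and_cat)
print(table)
```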

Visualizing Data Insights with Pandas and Matplotlib

Integrating Pandas with a visualization library such as Matplotlib lets you create plots and charts directly from DataFrames. Pandas offers built-in plotting methods, such as .plot(), .hist(), and .plot.scatter(), that use Matplotlib under the hood and make visual exploration easy. Choosing the right plot is key to conveying the data accurately. Line plots work well for trends over time. Histograms display the distribution of data. Scatter plots are effective for examining relationships between variables. These views help uncover patterns that are hard to see in raw numbers and give analysts a clearer, more intuitive understanding of the information at hand.

The .plot() method provides a quick way to generate a variety of plots; called on a time series, it draws a line plot by default. For distributions, .hist() generates histograms that show the frequency of values. The .plot.scatter() method is useful for comparing two numerical columns in a DataFrame. Understanding which plot type suits which kind of data is critical: numerical data can be visualized with histograms and box plots, while categorical data is often better represented with bar charts or pie charts. Matplotlib and Seaborn offer further customization, letting you adjust colors, labels, and more, so that the visual analysis can be tailored to specific needs.
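The three plot types discussed above can be sketched as follows, assuming Matplotlib is installed. The visit and signup numbers are invented, and the non-interactive Agg backend is chosen only so that the sketch runs without a display; in a notebook or desktop session you would normally skip that line and call plt.show().

```python
import matplotlib

matplotlib.use("Agg")  # assumption: headless run; omit in an interactive session
import matplotlib.pyplot as plt
import pandas as pd

df = pd.DataFrame(
    {
        "day": pd.date_range("2024-01-01", periods=6, freq="D"),
        "visits": [5, 7, 6, 9, 12, 11],
        "signups": [1, 2, 1, 3, 4, 4],
    }
)

fig, axes = plt.subplots(1, 3, figsize=(12, 3))

# Line plot: a trend over time.
df.plot(x="day", y="visits", ax=axes[0], title="Line: trend over time")

# Histogram: the distribution of one numerical column.
df["visits"].plot.hist(bins=4, ax=axes[1], title="Histogram: distribution")

# Scatter plot: the relationship between two numerical columns.
df.plot.scatter(x="visits", y="signups", ax=axes[2], title="Scatter: relationship")

fig.tight_layout()
```

Passing ax= places each Pandas plot on a specific Matplotlib axes, which is what allows the three charts to share one figure.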

Visual exploration is a natural extension of data manipulation. The results of earlier steps, such as cleaning, filtering, and aggregation, can now be presented visually. This involves not only creating plots but also interpreting and adjusting them for clarity and impact. Good visuals strengthen the narrative side of data exploration: they make complex patterns understandable to a broad audience and help everyone involved in the analysis see the underlying trends and relationships.

Handling Time Series Data with Pandas

Time series data, which tracks data points over time, is a common type of data in many fields. Pandas provides robust tools for effective time series analysis. This section will demonstrate how to manipulate and analyze this type of data effectively. To start, string data representing dates or times often needs to be converted to datetime objects. Pandas provides the pd.to_datetime() function for this purpose. This function can handle various date and time formats, converting them into a format that Pandas can understand. Once you have datetime objects, it’s beneficial to set one as the DataFrame’s index using .set_index(). This creates a time-based index, which allows for specialized time series operations. Doing so enables efficient filtering and resampling of the data based on time periods.
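As a minimal sketch of the conversion and indexing steps, assuming a small invented log with a text column named timestamp:

```python
import pandas as pd

log = pd.DataFrame(
    {
        "timestamp": ["2024-01-30", "2024-01-31", "2024-02-01", "2024-02-02"],
        "value": [1, 2, 3, 4],
    }
)

# Convert the text column to datetime objects, then use it as the index.
log["timestamp"] = pd.to_datetime(log["timestamp"])
ts = log.set_index("timestamp")

# With a datetime index, rows can be selected by time period.
february = ts.loc["2024-02"]                # every row in February 2024
window = ts.loc["2024-01-31":"2024-02-01"]  # an inclusive date range

print(february)
print(window)
```

Unlike positional slicing, slicing a datetime index by label includes both endpoints of the range.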

Resampling is a critical operation for time series data. The .resample() method aggregates data at a different time frequency, such as daily, weekly, or monthly, which is particularly helpful for identifying trends and patterns. For example, you can resample daily sales data to show monthly totals, which simplifies the data and makes high-level trends more visible. Time series analysis also often involves moving averages, which smooth out short-term fluctuations and highlight the underlying trend. You can calculate them with the .rolling() method, which defines a window of a specified size over which a statistic such as the mean is computed. The .shift() method is equally useful: it moves values forward or backward in time so that you can compare each value with the one from an earlier or later period. Together these techniques let you examine temporal changes and dependencies in depth.
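The three operations can be sketched on ten days of invented daily sales. The weekly frequency and the 3-day window are arbitrary choices for illustration.

```python
import pandas as pd

# Ten consecutive days starting on Monday, 2024-01-01, with values 1.0 to 10.0.
daily = pd.Series(
    [float(n) for n in range(1, 11)],
    index=pd.date_range("2024-01-01", periods=10, freq="D"),
    name="sales",
)

# Resample: aggregate daily values into weekly totals (weeks end on Sunday).
weekly = daily.resample("W").sum()

# Rolling: 3-day moving average; the first two days have no full window yet.
moving_avg = daily.rolling(window=3).mean()

# Shift: compare each day with the previous day.
change = daily - daily.shift(1)

print(weekly)
print(moving_avg)
print(change)
```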

Finally, time series work goes hand in hand with visualization. Combined with a library like Matplotlib, Pandas makes it easy to create time series plots that make the data and the analysis more understandable. Line plots are often used to show trends over time, and bar charts are a common choice for aggregated data. Taken together, the capabilities of Pandas for time series, from date conversion to resampling and visualization, cover both data wrangling and analysis, and they matter in many real-world projects.

Hands-On Data Analysis Project: Analyzing Sales Data with Pandas

This project brings together the skills covered in this guide in a step-by-step walkthrough using a sales dataset. The dataset is a small CSV file representing daily sales for a small shop, with columns such as 'Date', 'Product', 'Quantity', and 'Revenue'. Start by loading the file into a DataFrame with the read_csv() function. Once it is loaded, inspect the first few rows with .head(), then use .info() to check the data types and look for missing data. This initial inspection should come before any other analysis step.

Next, focus on data cleaning and preprocessing, which accurate analysis depends on. Check for missing values with .isnull().sum() and handle them appropriately; for example, you could impute missing revenue with the mean revenue, or use another method better suited to your analysis. Make sure the 'Date' column is in datetime format by converting it with pd.to_datetime(), which enables time-based analysis later on. Transform the data where needed; for example, create a new column named 'Revenue_per_Quantity' by dividing 'Revenue' by 'Quantity'. Correct any inconsistencies in the 'Product' column, such as differently spelled names for the same product, using the .replace() method. Then filter and select the data you need, for instance the sales of a specific product using boolean indexing, or a date range using time-based indexing.

After preparing the data, move on to the analysis. Start by grouping the data by 'Product' and calculating the sum of 'Revenue' and 'Quantity' for each product. This shows which products bring in the most revenue and which sell in the largest quantities. Next, group the data by 'Date' and calculate the total daily 'Revenue' or 'Quantity'. Then use the built-in plotting methods, such as .plot(), to visualize daily revenue trends, or a bar plot to show total sales per product. Finally, resample the sales data to monthly frequency and calculate monthly revenue using .resample('M').sum(); pandas 2.2 and later prefer the alias 'ME' for month-end frequency. This project consolidates the skills from the whole guide: loading, inspecting, cleaning, transforming, selecting, aggregating, and visualizing data with Pandas.
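The walkthrough can be sketched end to end as follows. The shop's actual CSV file is not shown in this guide, so the five rows below are a hypothetical stand-in with the same column names, and the specific cleaning choices (mean imputation, fixing the spelling 'coffee') are assumptions made for illustration. Plotting is left out to keep the sketch free of extra dependencies, and the month-start alias "MS" is used for resampling because it is accepted by both older and newer pandas versions.

```python
import io

import pandas as pd

# Hypothetical stand-in for the shop's CSV file.
csv_text = """Date,Product,Quantity,Revenue
2024-01-30,Tea,2,10.0
2024-01-30,coffee,1,6.0
2024-01-31,Coffee,3,
2024-02-01,Tea,4,20.0
2024-02-01,Coffee,2,14.0
"""

# 1. Load and inspect.
sales = pd.read_csv(io.StringIO(csv_text))
print(sales.head())
sales.info()
print(sales.isnull().sum())

# 2. Clean: impute missing revenue, fix types, fix inconsistent names.
sales["Revenue"] = sales["Revenue"].fillna(sales["Revenue"].mean())
sales["Date"] = pd.to_datetime(sales["Date"])
sales["Product"] = sales["Product"].replace({"coffee": "Coffee"})

# 3. Transform: derive a new column from two existing ones.
sales["Revenue_per_Quantity"] = sales["Revenue"] / sales["Quantity"]

# 4. Filter: one product, and one date range.
tea_only = sales[sales["Product"] == "Tea"]
january = sales[(sales["Date"] >= "2024-01-01") & (sales["Date"] <= "2024-01-31")]

# 5. Aggregate: per product, per day, and per month.
by_product = sales.groupby("Product")[["Revenue", "Quantity"]].sum()
daily_revenue = sales.groupby("Date")["Revenue"].sum()
monthly_revenue = sales.set_index("Date")["Revenue"].resample("MS").sum()

print(by_product)
print(daily_revenue)
print(monthly_revenue)
```

With a real file, only the loading line changes: replace io.StringIO(csv_text) with the path to your CSV. From there, daily_revenue.plot() or by_product["Revenue"].plot.bar() would produce the charts described above.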