Understanding Google Cloud Storage: What it is and Why it Matters

Google Cloud Storage (GCS) is a scalable object storage service offered by Google Cloud Platform. It provides a cost-effective way to store and retrieve any amount of data, from a few gigabytes to petabytes. Its key features are high durability, availability, and security. Depending on the bucket’s location type, data is stored redundantly across multiple zones or regions, which protects it against hardware and site failures. This makes GCS well suited to data archiving, backups, disaster recovery, and serving website content directly from a bucket. The service offers several storage classes, each optimized for a different access pattern and price point, so you can tailor your storage strategy for cost efficiency. Understanding these storage classes is crucial for managing data in a Google Cloud Storage bucket. The following sections explore these classes in detail and explain how to work with a bucket through the Google Cloud Console, the command line, or the APIs.

The flexibility of GCS extends to how you organize and access your data. You can manage individual files (objects) within your bucket and choose strategies for efficient retrieval. You can also create and configure each bucket to suit specific needs by choosing its location, its default storage class, and its access control settings (IAM permissions and, where needed, access control lists). GCS integrates with many other Google Cloud services, extending the capabilities of your cloud infrastructure. Transfers to and from your bucket are handled by tools such as the `gcloud storage` command group, the older `gsutil` command-line utility, and Storage Transfer Service. These make it practical to manage large datasets, whether you’re migrating existing data or regularly uploading new files. By understanding these aspects, you can make full use of GCS for your data storage and management needs.

Cost optimization is a critical consideration for any cloud service, and GCS provides several mechanisms for managing expenses: choosing storage classes carefully, using lifecycle policies to automate data management, and monitoring usage and billing reports. Security is equally important. GCS encrypts data both in transit and at rest, and lets you control permissions precisely through Identity and Access Management (IAM) roles and other access control mechanisms. Following these security best practices helps safeguard the data stored in your bucket. Integration with other Google Cloud services further increases the value of GCS, providing a cohesive platform for a wide range of data management and processing tasks.

Exploring the Different Storage Classes in Google Cloud Storage

Google Cloud Storage (GCS) offers several storage classes to suit different access patterns and budgets. Unlike some archival services, all GCS classes offer similar low-latency (millisecond) access and throughput; what differs is the balance between the monthly storage price on one side and retrieval fees, minimum storage durations, and availability on the other. Understanding these trade-offs is crucial for optimizing your bucket strategy and minimizing expenses. Let’s examine the options.

The Standard class has no retrieval fee and no minimum storage duration, which makes it ideal for frequently accessed (“hot”) data such as active websites and applications. Nearline costs less to store and suits data accessed about once a month or less; it has a 30-day minimum storage duration and a per-GB retrieval fee. Coldline is cheaper still to store and is designed for data accessed less than once a quarter, with a 90-day minimum storage duration and a higher retrieval fee. Finally, Archive is the lowest-cost option for long-term preservation of data accessed less than once a year, with a 365-day minimum storage duration and the highest retrieval fee. Importantly, reads from Archive are still served within milliseconds, not hours; the price of its low storage cost is paid in retrieval fees and minimum durations, not in access speed.

The appropriate storage class depends on your access patterns and budget. Frequently accessed data belongs in Standard, while infrequently accessed data benefits from Nearline, Coldline, or Archive. Before choosing a class, estimate how often you will read each dataset and how long you will keep it: the storage price difference between classes can be substantial, but frequent reads from a colder class can erase the savings through retrieval fees. Managing storage classes is therefore a key part of cost management for your cloud infrastructure. You can set a default storage class for each bucket and override it for individual objects, using either the Google Cloud Console or the Cloud Storage API.
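The selection logic above can be sketched as a small heuristic. This is a minimal sketch, not a Google API: the function `suggest_storage_class` is hypothetical, its access-interval thresholds (30, 90, and 365 days) follow the usual “once a month, once a quarter, once a year” guidance, and it assumes the minimum storage durations of 30, 90, and 365 days for Nearline, Coldline, and Archive so that short-lived data is not steered into a class it would leave early.

```python
# Minimum storage durations (days) per class; deleting earlier incurs a charge.
MIN_STORAGE_DAYS = {"STANDARD": 0, "NEARLINE": 30, "COLDLINE": 90, "ARCHIVE": 365}


def suggest_storage_class(days_between_reads: float, retention_days: float) -> str:
    """Hypothetical heuristic: return the coldest class whose typical access
    interval fits the data and whose minimum storage duration does not
    exceed how long the data will be kept."""
    # (class, minimum typical interval between reads in days), coldest first.
    candidates = [("ARCHIVE", 365), ("COLDLINE", 90), ("NEARLINE", 30)]
    for storage_class, min_interval in candidates:
        if (days_between_reads >= min_interval
                and retention_days >= MIN_STORAGE_DAYS[storage_class]):
            return storage_class
    return "STANDARD"


if __name__ == "__main__":
    print(suggest_storage_class(days_between_reads=1, retention_days=365))     # STANDARD
    print(suggest_storage_class(days_between_reads=45, retention_days=365))    # NEARLINE
    print(suggest_storage_class(days_between_reads=120, retention_days=60))    # NEARLINE
    print(suggest_storage_class(days_between_reads=400, retention_days=3650))  # ARCHIVE
```

A real decision should also weigh retrieval fees against storage savings, which this heuristic deliberately ignores.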

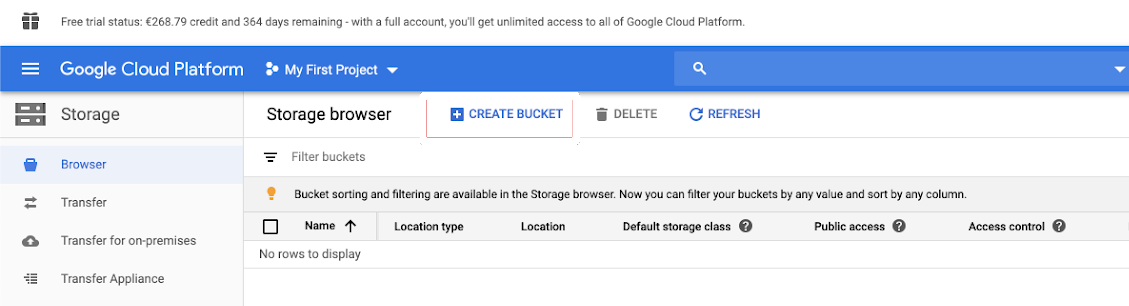

How to Set Up and Configure a Google Cloud Storage Bucket

Creating a new Google Cloud Storage bucket is straightforward. In the Google Cloud Console, open the Cloud Storage section and click “Create bucket.” First, provide a name for the bucket. Bucket names share a single global namespace, so the name must be unique across all of Google Cloud, not just within your project; it should also reflect the bucket’s purpose. Next, choose a location close to your users or to the services that will read the data, to minimize latency. Then select a default storage class: Standard, Nearline, Coldline, or Archive. Each class has different storage pricing, retrieval fees, and minimum storage durations, so pick the one that best fits the expected access pattern and budget of the data the bucket will hold; this choice has a significant impact on cost.
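To make these choices concrete, the sketch below checks a candidate name against a simplified subset of the bucket naming rules (3 to 63 characters of lowercase letters, digits, hyphens, underscores, and dots; starting and ending with a letter or digit; not starting with “goog” or containing “google”) and assembles an equivalent `gcloud storage buckets create` command that also enables uniform bucket-level access. It assumes names without dots, since dotted names have extra rules not covered here, and global uniqueness can only be confirmed by actually creating the bucket. The helper names are hypothetical.

```python
import re

# Simplified subset of the naming rules: 3-63 chars, no dotted-name special cases.
_NAME_PATTERN = re.compile(r"^[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]$")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes the simplified naming checks."""
    if not _NAME_PATTERN.match(name):
        return False
    # Names may not start with "goog" or contain "google".
    return not name.startswith("goog") and "google" not in name


def create_bucket_command(name: str, location: str, storage_class: str) -> list[str]:
    """Build the argv for creating a bucket with uniform bucket-level access."""
    if not is_valid_bucket_name(name):
        raise ValueError(f"invalid bucket name: {name!r}")
    return [
        "gcloud", "storage", "buckets", "create", f"gs://{name}",
        f"--location={location}",
        f"--default-storage-class={storage_class}",
        "--uniform-bucket-level-access",
    ]
```

The returned list can be passed to `subprocess.run(..., check=True)` on a machine where the Google Cloud CLI is installed and authenticated; building the argv as a list avoids shell-quoting problems.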

Next, configure the bucket’s access control. Public access prevention, which stops the bucket and its objects from being exposed to anyone on the internet, should stay enabled unless you deliberately serve public content. You also choose between uniform access control, where permissions are managed only through IAM at the bucket level, and fine-grained access control, which additionally allows per-object access control lists (ACLs). Google recommends uniform access because it is simpler to manage and audit; use ACLs only when individual objects genuinely need different permissions. Finally, consider setting up lifecycle management rules. These rules automatically change an object’s storage class or delete it once it meets conditions such as age, which is useful for moving old data to a cheaper class or removing obsolete data to reduce costs.
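A lifecycle configuration is a JSON document listing rules, each of which pairs an action with conditions. The sketch below builds one that moves Standard objects to Nearline after 30 days and deletes objects after 365 days, and prints it so it can be saved to a file and applied with `gcloud storage buckets update gs://BUCKET_NAME --lifecycle-file=lifecycle.json`. The ages and the helper name are illustrative assumptions; check the JSON shape against the current lifecycle documentation before relying on it.

```python
import json


def build_lifecycle_config(nearline_after_days: int, delete_after_days: int) -> dict:
    """Build a two-rule lifecycle configuration (illustrative values)."""
    if delete_after_days <= nearline_after_days:
        raise ValueError("objects should be deleted after they are moved")
    return {
        "lifecycle": {
            "rule": [
                {
                    # Move Standard objects to Nearline once they reach the given age.
                    "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
                    "condition": {
                        "age": nearline_after_days,
                        "matchesStorageClass": ["STANDARD"],
                    },
                },
                {
                    # Delete any object once it reaches the given age.
                    "action": {"type": "Delete"},
                    "condition": {"age": delete_after_days},
                },
            ]
        }
    }


if __name__ == "__main__":
    config = build_lifecycle_config(nearline_after_days=30, delete_after_days=365)
    print(json.dumps(config, indent=2))
```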

Finally, review the bucket’s configuration, and once every setting meets your requirements, click “Create.” The new bucket is immediately ready for you to upload, download, and manage objects. After creation, periodically review its access and security settings, and revisit storage class choices as access patterns change; the configuration of a bucket directly affects both its cost and its security.

Working with Objects Within Your Google Cloud Storage Bucket

Managing objects within a bucket involves a few key operations. To upload files, you can drag and drop them in the Google Cloud Console, or use the command line (`gcloud storage cp`) for bulk uploads and large datasets. Objects take the bucket’s default storage class unless you specify a different one when uploading, so consider access frequency and cost at this point. Organize objects with a clear naming scheme. Cloud Storage buckets use a flat namespace by default, but object names containing slashes, such as `reports/2024/q1.csv`, are displayed as folders in the Console and can be listed by prefix. Mirroring your project’s organization in these prefixes simplifies searching and retrieval.
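Because folders are really name prefixes, an upload script has to decide how local paths map to object names. A minimal sketch, assuming you want to mirror a local directory tree under a chosen prefix; `object_name_for` is a hypothetical helper, and the upload itself (for example with `gcloud storage cp`) is left out.

```python
from pathlib import PurePath


def object_name_for(local_root: str, local_path: str, prefix: str = "") -> str:
    """Map a local file path to an object name that mirrors the directory tree.

    The slashes in the result are what the Console displays as folders.
    Raises ValueError if `local_path` is not inside `local_root`.
    """
    relative = PurePath(local_path).relative_to(local_root)
    name = "/".join(relative.parts)  # always "/" in object names, whatever the local OS
    prefix = prefix.strip("/")
    return f"{prefix}/{name}" if prefix else name
```

For example, `object_name_for("/data/project", "/data/project/reports/2024/q1.csv", "backups/")` yields `backups/reports/2024/q1.csv`.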

Downloading objects is equally easy. The Google Cloud Console lets you download objects directly, and `gcloud storage cp` or `gsutil cp` can download many objects or entire prefixes at once. Other Google Cloud services can also read data directly from a bucket without a separate download step. Deleting objects is simple, but exercise caution: confirm exactly which objects a command will match before running it, and consider enabling Object Versioning or relying on soft delete so that accidental deletions can be recovered within the retention window. Regularly auditing the contents of your bucket also helps you find and clean up obsolete data.
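One way to double-check before deleting is to compute the exact deletion list first and review it before anything is removed. The sketch below works on an in-memory listing of `(object_name, updated_time)` pairs that stands in for the result of listing the bucket; the helper name and the example data are hypothetical, and the deletion itself is deliberately left out.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional, Tuple


def deletion_candidates(
    listing: Iterable[Tuple[str, datetime]],
    prefix: str,
    older_than_days: int,
    now: Optional[datetime] = None,
) -> list:
    """Return names under `prefix` last updated before the cutoff, sorted.

    Nothing is deleted here; review the returned list first.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=older_than_days)
    return sorted(
        name for name, updated in listing
        if name.startswith(prefix) and updated < cutoff
    )


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    listing = [
        ("tmp/a.log", datetime(2024, 1, 10, tzinfo=timezone.utc)),
        ("tmp/b.log", datetime(2024, 5, 30, tzinfo=timezone.utc)),
        ("reports/q1.csv", datetime(2023, 12, 1, tzinfo=timezone.utc)),
    ]
    for name in deletion_candidates(listing, "tmp/", older_than_days=30, now=now):
        print("would delete:", name)  # prints: would delete: tmp/a.log
```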

Beyond these basic operations, two features help with managing objects at scale. Object metadata attaches properties to each object: standard fields such as `Content-Type` and `Cache-Control` control how objects are served, while custom key-value metadata can record details such as the owning team or the data source. Lifecycle management automates actions based on conditions such as object age; for example, you can automatically move older, less frequently accessed objects to a lower-cost storage class, reducing storage costs without manual work. Mastering these techniques keeps the data in your bucket both accessible and cost-effective.
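In the Cloud Storage XML API, custom metadata travels as request headers with the `x-goog-meta-` prefix, alongside standard headers such as `Content-Type` and `Cache-Control`; the same prefix is used with `gsutil -h`. The sketch below assembles such headers. The helper name and example values are hypothetical, the key restriction is a conservative assumption rather than the service’s exact rule, and the request that would carry the headers is not shown.

```python
import re

# Conservative assumption: only lowercase letters, digits, and hyphens in keys.
_KEY_PATTERN = re.compile(r"^[a-z0-9-]+$")


def metadata_headers(content_type: str, cache_control: str,
                     custom: dict) -> dict:
    """Build upload headers carrying standard and custom object metadata."""
    headers = {"Content-Type": content_type, "Cache-Control": cache_control}
    for key, value in custom.items():
        if not _KEY_PATTERN.match(key):
            raise ValueError(f"unsupported metadata key: {key!r}")
        # Custom metadata keys are prefixed so the service stores them on the object.
        headers[f"x-goog-meta-{key}"] = value
    return headers
```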

Optimizing Data Transfer to and From Your Google Cloud Storage Bucket

Efficient data transfer is crucial for getting the most out of a Cloud Storage bucket, and Google Cloud offers several tools to help. The `gcloud storage` command group, and the older `gsutil` tool it is replacing, can upload and download files to and from your bucket. With `gsutil`, add the top-level `-m` flag (for example, `gsutil -m cp -r ./data gs://BUCKET_NAME/`) to run operations in parallel, which can significantly speed up transfers of many files; `gcloud storage` parallelizes transfers by default.
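Conceptually, `-m` runs many independent single-file transfers at the same time. The sketch below shows that idea with Python’s `concurrent.futures`; `upload_one` is a hypothetical stand-in for a real per-file upload call (for example, from the `google-cloud-storage` client library), so the example runs without credentials. It illustrates the technique, not how `gsutil` is implemented.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def parallel_upload(paths: Iterable[str], upload_one: Callable[[str], str],
                    max_workers: int = 8) -> List[str]:
    """Run `upload_one` for every path using a thread pool.

    Uploads are network-bound, so threads overlap the waiting time.
    Results come back in input order; a worker's exception is re-raised.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(upload_one, paths))


if __name__ == "__main__":
    def fake_upload(path: str) -> str:
        # Stand-in for a real upload; returns the destination URI.
        return f"gs://example-bucket/{path}"

    print(parallel_upload(["a.csv", "b.csv"], fake_upload))
```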

Beyond the command-line tools, Google Cloud provides Storage Transfer Service, which handles large transfers from sources such as on-premises storage, other cloud providers, and other Cloud Storage buckets. It lets you schedule transfers, manages authentication to the source, and reports the progress of large uploads into your bucket. The right tool depends on the source, volume, and frequency of the transfer. For example, for recurring transfers from an on-premises server, Storage Transfer Service is a good fit, while for smaller, one-time transfers the command-line tools usually suffice.

Network bandwidth and distance strongly affect transfer speed. Place your bucket in a location close to the data source or to the compute resources that read it: shorter network paths reduce latency, and keeping a bucket in the same region as the Compute Engine instances that read it avoids the inter-region network charges that apply when data crosses regions. Assess your network infrastructure and the locations of your data sources and consumers before choosing a bucket location, and monitor transfer performance to identify bottlenecks and adjust accordingly.
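A quick estimate shows why bandwidth matters. Transfer time is roughly data size divided by effective throughput: 1 TB (8 × 10¹² bits) over a fully used 100 Mbit/s link takes 80,000 seconds, or about 22 hours. The helper below does that arithmetic; its name is hypothetical, and the `efficiency` factor, which accounts for protocol overhead and shared links, is an assumption you should measure on your own network.

```python
def transfer_hours(size_gb: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Estimate transfer time in hours.

    size_gb is in decimal gigabytes (10**9 bytes), link_mbps in megabits per
    second, and efficiency is the fraction of the link the transfer achieves.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_gb * 1e9 * 8
    seconds = bits / (link_mbps * 1e6 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    print(round(transfer_hours(1000, 100), 1))                   # 22.2
    print(round(transfer_hours(1000, 1000, efficiency=0.8), 1))  # 2.8
```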

Cost Management and Optimization for Google Cloud Storage

Managing costs effectively is crucial when using Google Cloud Storage, and it starts with the pricing structure: you pay for stored data (per GB per month, at a rate set by the storage class), for operations, for network egress, and, in the colder classes, for data retrieval. Choosing the storage class that matches each dataset’s access frequency has the largest impact; for infrequently accessed data, Nearline, Coldline, or Archive can cost substantially less to store than Standard. Regularly reviewing access patterns and moving data to a cheaper class when appropriate can noticeably reduce your monthly bill. Lifecycle management is another powerful tool: a rule that deletes objects after a specified age ensures you stop paying for data you no longer need. Keep minimum storage durations in mind when designing these rules, because deleting or overwriting a Nearline, Coldline, or Archive object before its minimum duration (30, 90, or 365 days, respectively) incurs an early deletion charge for the remaining days. Careful planning and regular review of lifecycle policies are vital for long-term cost management.
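The early deletion rule reduces to simple arithmetic: an object deleted after `a` days in a class with minimum duration `m` is billed for `max(0, m - a)` additional days of storage. A minimal sketch, assuming minimum storage durations of 30, 90, and 365 days for Nearline, Coldline, and Archive; the function name is hypothetical, and the actual charge also depends on object size and the class’s per-GB price.

```python
MIN_STORAGE_DAYS = {"STANDARD": 0, "NEARLINE": 30, "COLDLINE": 90, "ARCHIVE": 365}


def early_deletion_days(storage_class: str, age_days: int) -> int:
    """Extra days of storage billed when deleting an object of this age."""
    return max(0, MIN_STORAGE_DAYS[storage_class.upper()] - age_days)


if __name__ == "__main__":
    print(early_deletion_days("NEARLINE", 10))   # 20
    print(early_deletion_days("coldline", 30))   # 60
    print(early_deletion_days("ARCHIVE", 400))   # 0
```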

Monitoring your Google Cloud Storage usage is equally important. Cloud Billing reports, together with Cloud Monitoring metrics for your buckets, show how much you store and spend, with charges broken down into storage, operations, network, and retrieval. By analyzing these reports, you can spot optimization opportunities; for example, you might discover objects stored in a more expensive class than their access pattern requires, and add lifecycle rules to move them. Analyze network charges as well: egress cost depends on where data travels, not on which tool moves it, so keeping buckets in the same region as the services that read them is the main way to reduce it. For large migrations, Storage Transfer Service can automate the work and save operational effort. Finally, factor in retrieval costs when planning data access. Nearline, Coldline, and Archive all charge per-GB retrieval fees that rise as the class gets colder, so reading data frequently from a cold class can cost more than keeping it in Standard.
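Because of retrieval fees, the cheapest class depends on how much you read. The sketch below compares the monthly cost of storing and reading a dataset in each class. The numbers in `EXAMPLE_PRICES` are illustrative placeholders chosen only to show the shape of the trade-off, not a statement of current Cloud Storage pricing; substitute the current prices for your location from the official pricing page. Operation charges, network charges, and minimum storage durations are ignored.

```python
# Illustrative placeholder prices per GB: (storage per month, retrieval).
# NOT authoritative pricing; replace with current values for your location.
EXAMPLE_PRICES = {
    "STANDARD": (0.020, 0.00),
    "NEARLINE": (0.010, 0.01),
    "COLDLINE": (0.004, 0.02),
    "ARCHIVE": (0.0012, 0.05),
}


def monthly_cost(stored_gb: float, read_gb: float,
                 storage_price: float, retrieval_price: float) -> float:
    """Storage plus retrieval cost for one month."""
    return stored_gb * storage_price + read_gb * retrieval_price


def cheapest_class(stored_gb: float, read_gb: float, prices=EXAMPLE_PRICES) -> str:
    """Return the class with the lowest storage-plus-retrieval cost."""
    return min(prices, key=lambda c: monthly_cost(stored_gb, read_gb, *prices[c]))


if __name__ == "__main__":
    print(cheapest_class(1000, 10))    # ARCHIVE: little is read back
    print(cheapest_class(1000, 300))   # COLDLINE
    print(cheapest_class(1000, 2000))  # STANDARD: heavy reads favor no retrieval fee
```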

Implementing a combination of strategies—choosing the right storage class, actively managing lifecycle policies, using efficient transfer methods, and regularly monitoring usage—provides a robust approach to cost optimization for Google Cloud Storage. By proactively managing your data’s lifecycle and optimizing transfer operations, you can significantly reduce your overall cloud storage costs while ensuring your data remains readily accessible. This holistic approach will lead to a sustainable and cost-effective use of your Google Cloud Storage resources. Understanding and implementing these strategies ensures your google bucket operates efficiently and economically.

Security Best Practices for Google Cloud Storage Buckets

Protecting your google bucket data is paramount. Implement robust access control measures using Identity and Access Management (IAM). IAM lets you granularly control who can access your google bucket and what actions they can perform. Assign only the necessary permissions to users and services. Regularly review and audit these permissions to ensure they remain appropriate. This proactive approach minimizes the risk of unauthorized access and data breaches. Remember, a compromised google bucket can have severe consequences.
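A bucket’s IAM policy is a list of bindings, each granting one role to a set of principals. The sketch below adds a least-privilege binding, read-only `roles/storage.objectViewer` for a service account, to a policy held as a Python dict in the JSON shape returned by `gcloud storage buckets get-iam-policy`. The service account email is hypothetical, and conditional bindings are left untouched. For a single change, `gcloud storage buckets add-iam-policy-binding gs://BUCKET_NAME --member=MEMBER --role=roles/storage.objectViewer` does the same thing directly.

```python
import copy


def add_binding(policy: dict, role: str, member: str) -> dict:
    """Return a copy of `policy` with `member` granted `role`.

    Reuses an existing unconditional binding for the role if present and
    never duplicates a member. Other fields (such as etag) are preserved.
    """
    updated = copy.deepcopy(policy)
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        if binding.get("role") == role and "condition" not in binding:
            members = binding.setdefault("members", [])
            if member not in members:
                members.append(member)
            return updated
    bindings.append({"role": role, "members": [member]})
    return updated
```

Keeping the policy’s `etag` field intact matters if the result is written back with `gcloud storage buckets set-iam-policy`, because it lets the service reject the write if someone else changed the policy in the meantime.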

Data encryption is another critical security layer. Cloud Storage encrypts all data at rest by default using Google-managed keys, and requests made over HTTPS, which the client libraries and command-line tools use by default, are encrypted in transit with TLS, so neither needs to be switched on. If you need more control, consider customer-managed encryption keys (CMEK) stored in Cloud Key Management Service, which let you manage key rotation and access, or disable a key, yourself, and which can help meet regulatory or internal compliance requirements. Together, these measures reduce the risk of data theft or unauthorized access to your bucket.
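A CMEK is referenced by its full Cloud KMS resource name. The sketch below builds that name and checks that the key’s location matches the bucket’s, since Cloud Storage expects the key to be in the same location as the data it encrypts. The project, key ring, and key names are hypothetical, and the sketch does not call Cloud KMS. The resulting name could then be set as the bucket’s default key, for example with `gcloud storage buckets update gs://BUCKET_NAME --default-encryption-key=KEY_NAME`, after the Cloud Storage service agent has been granted permission to use the key.

```python
import re

_SEGMENT = re.compile(r"^[A-Za-z0-9_-]+$")


def kms_key_name(project: str, location: str, key_ring: str, key: str) -> str:
    """Build a Cloud KMS key resource name, rejecting obviously malformed parts."""
    for part in (project, location, key_ring, key):
        if not _SEGMENT.match(part):
            raise ValueError(f"unexpected characters in {part!r}")
    return (f"projects/{project}/locations/{location}"
            f"/keyRings/{key_ring}/cryptoKeys/{key}")


def key_location_matches(key_name: str, bucket_location: str) -> bool:
    """Compare the key's location segment with the bucket location (case-insensitive)."""
    return key_name.split("/")[3].lower() == bucket_location.lower()
```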

Beyond encryption and access control, regularly review your bucket’s security configuration, and stay current with Google Cloud security best practices and announcements. Lifecycle policies that delete outdated data reduce how much data is exposed if access controls ever fail, shrinking your attack surface. Check buckets for misconfigurations, such as IAM bindings that grant access to `allUsers` or `allAuthenticatedUsers`, with tools like Security Command Center, and monitor access with Cloud Audit Logs (Data Access audit logs for Cloud Storage must be enabled explicitly). Proactive monitoring and regular security audits are essential for keeping the data in your bucket secure.
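Two IAM principals make data public: `allUsers` (anyone on the internet) and `allAuthenticatedUsers` (anyone signed in with a Google account). A simple audit looks for them in every bucket’s IAM policy. The sketch below scans policies held as dicts, for example parsed from `gcloud storage buckets get-iam-policy gs://BUCKET_NAME --format=json`; the bucket names and policies are hypothetical, and fetching them is not shown.

```python
PUBLIC_PRINCIPALS = {"allUsers", "allAuthenticatedUsers"}


def public_grants(policies: dict) -> list:
    """Return (bucket, role, principal) for every public grant found.

    `policies` maps bucket name to its IAM policy dict.
    """
    findings = []
    for bucket, policy in sorted(policies.items()):
        for binding in policy.get("bindings", []):
            for member in binding.get("members", []):
                if member in PUBLIC_PRINCIPALS:
                    findings.append((bucket, binding["role"], member))
    return findings
```

Not every finding is a mistake (a bucket of public website assets is public on purpose), so the output is a list to review rather than something to act on automatically.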

Integrating Google Cloud Storage with Other Google Cloud Services

Google Cloud Storage (GCS) seamlessly integrates with a wide array of other Google Cloud Platform (GCP) services, enhancing the functionality and efficiency of your cloud infrastructure. One prime example is its powerful synergy with Compute Engine. Applications running on Compute Engine instances can readily access and utilize data stored in a google bucket, facilitating dynamic data processing and storage management. This integration simplifies application development, eliminating the need for complex data transfer mechanisms. Efficient data retrieval from the google bucket ensures optimal application performance.
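Applications on Compute Engine usually refer to stored data by `gs://` URIs and authenticate as the instance’s attached service account. Before calling a client library, such code typically splits the URI into a bucket and an object name; a minimal sketch with a hypothetical helper is shown below, and the client-library call itself is omitted.

```python
def parse_gs_uri(uri: str) -> tuple:
    """Split 'gs://bucket/path/to/object' into ('bucket', 'path/to/object').

    The object part is empty for a bucket-only URI such as 'gs://bucket'.
    """
    scheme = "gs://"
    if not uri.startswith(scheme):
        raise ValueError(f"not a gs:// URI: {uri!r}")
    bucket, _, object_name = uri[len(scheme):].partition("/")
    if not bucket:
        raise ValueError(f"missing bucket name in {uri!r}")
    return bucket, object_name
```

Plain string splitting is used instead of `urllib.parse.urlparse` because object names may legitimately contain characters such as `#` and `?`, which a URL parser would split off as a fragment or query.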

Another crucial integration involves App Engine. App Engine applications leverage GCS for storing static assets like images, videos, and documents. This approach optimizes application performance and scalability by offloading storage responsibilities to a highly reliable and scalable service. Developers benefit from this streamlined workflow, focusing their efforts on application logic rather than storage management. The use of a google bucket for static assets is also a cost-effective solution compared to managing storage within the application itself. The integration makes deployments easier and faster, enabling rapid iteration and updates.
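When static assets in a bucket are deliberately made publicly readable, they can be served from URLs of the form `https://storage.googleapis.com/BUCKET_NAME/OBJECT_NAME`, with the object name percent-encoded. A minimal sketch, assuming the object is public (otherwise a signed URL or an authenticated request is needed); the helper, bucket, and object names are hypothetical.

```python
from urllib.parse import quote


def public_object_url(bucket: str, object_name: str) -> str:
    """Build the public URL for a publicly readable object.

    Slashes are kept so prefixes still look like paths; spaces, '#', '?',
    and non-ASCII characters are percent-encoded.
    """
    return f"https://storage.googleapis.com/{bucket}/{quote(object_name, safe='/')}"
```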

Furthermore, Google Cloud Storage plays a vital role in BigQuery data warehousing. BigQuery can load data directly from a bucket with load jobs, or query files in place through external tables, which simplifies bringing large datasets into the warehouse for analytics. Because both services scale to very large datasets, this combination handles large-scale data processing without routing data through intermediate systems, which shortens the pipeline and reduces the time spent moving data. This integration is a core building block of data analytics pipelines in the Google Cloud ecosystem.
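A BigQuery load job that reads from Cloud Storage is described by a `load` configuration naming the source `gs://` URIs and the destination table. The sketch below builds that configuration as the JSON body of a `jobs.insert` request in the BigQuery REST API; the project, dataset, table, and bucket names are hypothetical, and submitting the request is not shown. From the command line, `bq load --source_format=CSV --autodetect DATASET.TABLE gs://BUCKET_NAME/path/*.csv` is a shorter route to the same result.

```python
def load_job_body(project: str, dataset: str, table: str, source_uris: list,
                  source_format: str = "CSV") -> dict:
    """Build a jobs.insert request body for loading files from Cloud Storage."""
    if not source_uris or not all(u.startswith("gs://") for u in source_uris):
        raise ValueError("source_uris must be non-empty gs:// URIs")
    return {
        "configuration": {
            "load": {
                # A wildcard such as gs://bucket/dir/*.csv selects many files.
                "sourceUris": source_uris,
                "destinationTable": {
                    "projectId": project,
                    "datasetId": dataset,
                    "tableId": table,
                },
                "sourceFormat": source_format,
                "autodetect": True,                  # infer the schema from the files
                "writeDisposition": "WRITE_APPEND",  # add rows to an existing table
            }
        }
    }
```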