What is Google Bigtable?

Google Bigtable is a distributed, column-family NoSQL database service developed by Google. It is designed to handle extremely large amounts of structured data with low latency and high throughput. Bigtable is a critical component of Google’s infrastructure, providing the foundation for many of its core services, including Google Search, Google Analytics, and Google Maps.

Bigtable’s column-family design allows for flexible and efficient data modeling, enabling users to store and retrieve data in a way that best suits their needs. Its scalability makes it an ideal solution for managing massive datasets, while its high performance ensures that data can be accessed quickly and easily. Furthermore, Bigtable’s integration with other Google tools and services, such as Google Cloud Dataflow and Google Cloud Dataproc, makes it a powerful tool for data processing and analysis.

A Brief History of Google Bigtable

Google Bigtable was initially developed in 2004 by Google engineers to handle the massive amounts of data generated by Google’s web indexing system. It was designed as a distributed, column-family NoSQL database that could scale horizontally to handle petabytes of data. In 2006, Google published a paper describing Bigtable, which led to the development of several open-source NoSQL databases, including Apache Cassandra and HBase.

Over time, Bigtable has evolved to become a more robust and feature-rich database service. In 2015, Google announced the availability of Bigtable as a managed service on its Google Cloud Platform (GCP), making it accessible to developers outside of Google. Since then, Bigtable has become an integral part of GCP, providing a scalable and high-performance database solution for a wide range of use cases.

Bigtable is also closely connected to other Google Cloud services. For example, connectors let Dataflow pipelines and Dataproc jobs read from and write to Bigtable tables, which supports large-scale data processing and analysis. Applications running on Google Kubernetes Engine or Cloud Functions can also use Bigtable through its client libraries, enabling users to build end-to-end data processing pipelines that can handle large-scale data workloads.

Key Features and Benefits of Google Bigtable

Google Bigtable is a powerful and flexible NoSQL database service that offers several key features and benefits. Its high performance and scalability make it an ideal solution for handling large-scale data workloads. Bigtable can easily handle petabytes of data, providing fast and reliable data access with low latency and high throughput.

One of the main benefits of Bigtable is its flexibility. Its column-family design lets users group related columns and store and retrieve data in a way that suits their access patterns. Column families are declared as part of the table schema, but the column qualifiers inside a family are not: each row can hold a different set of columns, and new qualifiers can be written without a schema change. This means users can evolve their data model as their needs change without restructuring the underlying table.
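The layout described above can be pictured as a sorted map from row key to column family to column qualifier to timestamped values. The following is a minimal in-memory sketch of that idea, not Bigtable's implementation; the class `SketchTable` and its method names are hypothetical and exist only for illustration.

```python
class SketchTable:
    """In-memory stand-in for a wide-column table (illustration only)."""

    def __init__(self, column_families):
        # Column families are fixed up front, like a table schema.
        self.column_families = set(column_families)
        # row key -> family -> qualifier -> [(timestamp, value)], newest first
        self.rows = {}

    def set_cell(self, row_key, family, qualifier, value, timestamp):
        # Qualifiers are free-form; only the family must already exist.
        if family not in self.column_families:
            raise KeyError(f"unknown column family: {family}")
        cells = (
            self.rows.setdefault(row_key, {})
            .setdefault(family, {})
            .setdefault(qualifier, [])
        )
        cells.append((timestamp, value))
        cells.sort(key=lambda cell: cell[0], reverse=True)

    def read_latest(self, row_key, family, qualifier):
        # The newest version of a cell is kept at index 0.
        return self.rows[row_key][family][qualifier][0][1]

    def scan(self, start_key, end_key):
        # Rows are visited in lexicographic row-key order.
        for key in sorted(self.rows):
            if start_key <= key < end_key:
                yield key


table = SketchTable(column_families=["profile", "stats"])
table.set_cell(b"user#001", "profile", b"name", b"Ada", timestamp=1)
table.set_cell(b"user#001", "profile", b"name", b"Ada L.", timestamp=2)
table.set_cell(b"user#002", "stats", b"logins", b"7", timestamp=1)
```

Note that the two rows hold different qualifiers without any schema change, while writing to an undeclared family is rejected.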

Bigtable’s integration with other Google tools and services is another significant benefit. It works with Google Cloud Dataflow and Dataproc for data processing and analysis, and applications running on Google Kubernetes Engine or Cloud Functions can read and write Bigtable data as part of end-to-end pipelines.

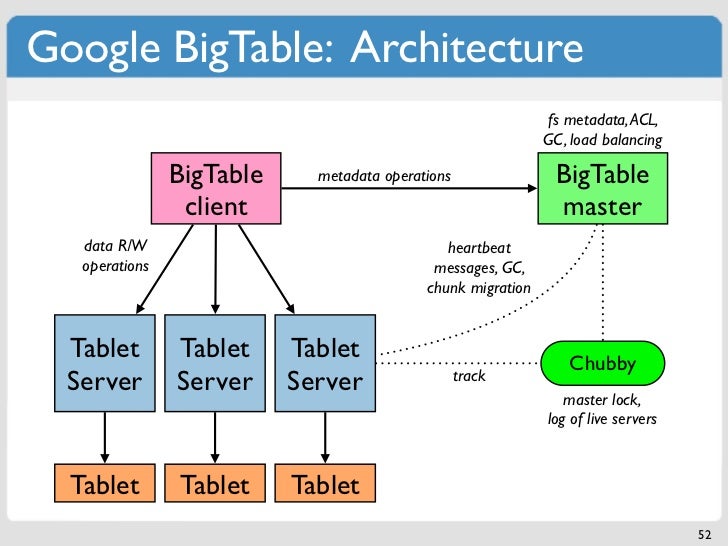

Another key benefit of Bigtable is its ability to handle large amounts of data. Its distributed architecture allows for horizontal scaling, enabling users to add more nodes to the cluster as their data grows. This scalability makes Bigtable an ideal solution for handling massive datasets, such as time-series data, financial data, and IoT data.

Use Cases for Google Bigtable

Google Bigtable is a versatile NoSQL database service that can be used for a wide range of use cases. Its high performance, scalability, and flexibility make it an ideal solution for handling large-scale data workloads. Here are some common use cases for Google Bigtable:

- Time-series data: Bigtable is an excellent solution for storing and analyzing time-series data, such as sensor data, financial data, and telemetry data. Its high performance and scalability make it an ideal solution for handling massive datasets with low latency and high throughput.

- Financial data: Bigtable can be used to store and analyze financial data, such as stock prices, trading data, and market data. Its ability to handle large amounts of data and its integration with other Google tools, such as Google Cloud Dataflow and Dataproc, make it an ideal solution for financial data processing and analysis.

- IoT data: Bigtable can be used to store and analyze IoT data, such as device telemetry data, sensor data, and device metadata. Its scalability and flexibility make it an ideal solution for handling the massive amounts of data generated by IoT devices.

- Graph data: Bigtable can be used to store and analyze graph data, such as social networks, recommendation engines, and network topologies. Its flexibility and schema-less design make it an ideal solution for storing and querying graph data.

- Content and media data: Bigtable can be used to store and serve content and media data, such as videos, images, and audio files. Its high performance and scalability make it an ideal solution for handling large-scale media workloads.

Many companies use Bigtable for these and other use cases. For example, Snap uses Bigtable to store and analyze user engagement data, while Spotify uses Bigtable to store and analyze music metadata and audio features. Other companies that use Bigtable include GitHub, NYTimes, and Twitter.

How to Get Started with Google Bigtable

Google Bigtable is a powerful NoSQL database service that can handle large-scale data workloads with ease. Here are the steps required to get started with Google Bigtable:

- Create a Google Cloud Platform (GCP) project: To use Google Bigtable, you need to create a GCP project. This can be done through the GCP Console or the Google Cloud CLI. Once the project is created, you need to enable the Bigtable API.

- Set up a Bigtable instance and cluster: A Bigtable instance is a container for one or more clusters, and each cluster consists of one or more nodes that serve data. To set up a cluster, you need to specify its location (zone), the number of nodes, and the storage type (SSD or HDD). You can create an instance and its clusters through the GCP Console or the Google Cloud CLI.

- Create a Bigtable table: A Bigtable table is a collection of rows, each identified by a row key. To create a table, you need to specify its column families; column qualifiers are not declared in advance and are created when data is written. You can create a table through the GCP Console, the cbt command-line tool, or one of the Bigtable client libraries, including the HBase-compatible client for Java.

- Access the data: Once the table is created, you can access the data through the Bigtable client libraries, the HBase-compatible client, or the cbt command-line tool. You can also use third-party tools, such as Apache Hive and Apache Pig, to access the data.

- Set up permissions and roles: To manage access to the Bigtable instance and its tables, you need to set up permissions and roles through Identity and Access Management (IAM). This can be done through the GCP Console or the Google Cloud CLI. You can assign predefined roles, such as “Bigtable Reader” (read-only access to table data) and “Bigtable User” (read and write access to table data), to users and groups.
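The table creation and data access steps above can be sketched with the `google-cloud-bigtable` Python client library. This is a minimal sketch under stated assumptions: the project, instance, and table identifiers are placeholders, the instance is assumed to exist already, the caller is assumed to have credentials with administrative access, and the column family name `cf1` and row key are chosen only for illustration.

```python
import datetime

# Hypothetical identifiers; replace with your own project, instance, and table.
PROJECT_ID = "my-project"
INSTANCE_ID = "my-instance"
TABLE_ID = "my-table"
COLUMN_FAMILY_ID = "cf1"


def quickstart(project_id, instance_id, table_id):
    """Create a table if needed, write one cell, and read it back."""
    # Imported here so the module still loads where the library is not installed.
    from google.cloud import bigtable
    from google.cloud.bigtable import column_family

    # admin=True is needed for table creation, not for plain reads and writes.
    client = bigtable.Client(project=project_id, admin=True)
    instance = client.instance(instance_id)
    table = instance.table(table_id)

    if not table.exists():
        # Only the column family is declared; keep at most two versions per cell.
        gc_rule = column_family.MaxVersionsGCRule(2)
        table.create(column_families={COLUMN_FAMILY_ID: gc_rule})

    row_key = b"greeting#0"
    column = b"message"  # the qualifier is created on write

    row = table.direct_row(row_key)
    row.set_cell(
        COLUMN_FAMILY_ID,
        column,
        b"Hello Bigtable",
        timestamp=datetime.datetime.now(datetime.timezone.utc),
    )
    row.commit()

    stored = table.read_row(row_key)
    # Cells are listed newest first; return the latest value as bytes.
    return stored.cells[COLUMN_FAMILY_ID][column][0].value
```

Calling `quickstart(PROJECT_ID, INSTANCE_ID, TABLE_ID)` contacts the Bigtable service, so it only works against a real instance with valid credentials.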

By following the steps outlined above, you can get started with Google Bigtable and start using it for your data storage and processing needs.

https://www.youtube.com/watch?v=JpWLvQK95IE

Best Practices for Google Bigtable

Google Bigtable is a powerful and flexible NoSQL database service that can handle large-scale data workloads. To get the most out of Google Bigtable, it’s essential to follow best practices for data modeling, performance optimization, and security. Here are some best practices to keep in mind:

Data Modeling

- Choose the right column family: Column families group related columns, and garbage-collection policies (such as how many versions of a cell to keep, or for how long) are set per column family. Group columns that are read together and share a retention policy into the same family. Consider the access patterns, data size, and data lifecycle when choosing a column family.

- Use composite column qualifiers: Composite column qualifiers allow you to store multiple attributes in a single column. This can help reduce the number of columns in a row and improve performance. Use composite column qualifiers to store related data together and improve query performance.

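- Avoid hotspotting: Hotspotting occurs when a large share of reads or writes targets a narrow range of row keys, so a single node ends up handling most of the load. Because Bigtable stores rows sorted by row key, keys that begin with a timestamp or a sequential ID are a common cause. To avoid hotspotting, design row keys so that the load is distributed evenly across the cluster, for example by leading with a well-distributed identifier.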
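To make the hotspotting point concrete, here is a small sketch of row-key construction for time-series data. The function names, the `#` separator, the device IDs, and the 13-digit millisecond bound are all assumptions chosen for illustration; the sketch only shows how key layout affects sort order and does not talk to Bigtable.

```python
MAX_TS_MS = 9_999_999_999_999  # assumed upper bound: 13-digit millisecond timestamps


def timestamp_first_key(device_id, ts_ms):
    """Anti-pattern: concurrent writes from every device share one key prefix."""
    return f"{ts_ms:013d}#{device_id}".encode()


def device_first_key(device_id, ts_ms):
    """Leads with the device ID so concurrent writes land in different key ranges."""
    return f"{device_id}#{ts_ms:013d}".encode()


def newest_first_key(device_id, ts_ms):
    """Same idea with a reversed timestamp, so the latest reading sorts first."""
    return f"{device_id}#{MAX_TS_MS - ts_ms:013d}".encode()


devices = ["sensor-a", "sensor-m", "sensor-z"]
now_ms = 1_700_000_000_000

# All three keys start with the same timestamp, so they sit next to each other.
hot = sorted(timestamp_first_key(d, now_ms) for d in devices)

# Each key starts with a different device ID, so writes spread across the key space.
spread = sorted(device_first_key(d, now_ms) for d in devices)
```

With the device-first layout, a prefix scan on one device ID still returns that device's readings in time order, which suits typical time-series queries.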

Performance Optimization

- Monitor performance: Monitoring performance is critical to ensuring that your Bigtable cluster is running efficiently. Use Bigtable metrics, such as latency, throughput, and error rates, to identify performance issues and optimize performance.

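- Use caching: Caching can help improve performance by reducing the number of requests to the cluster. Bigtable manages its own internal caching, and the memtable described in the Bigtable paper is a buffer for recent writes rather than a read cache that users configure. For data that is read very frequently, consider adding a cache in the application or a separate caching layer in front of Bigtable.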

- Optimize data access patterns: Data access patterns can significantly impact performance. Use techniques, such as batching, pipelining, and prefetching, to optimize data access patterns and improve performance.
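As one illustration of batching, the sketch below groups mutations into fixed-size chunks so that each chunk is sent in a single request. The helper names and the batch size of 100 are assumptions, and `send_batch` is a hypothetical callable; with the Python client library it might wrap a bulk call such as `table.mutate_rows`.

```python
from itertools import islice


def chunked(items, size):
    """Yield successive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def write_in_batches(mutations, send_batch, batch_size=100):
    """Call send_batch once per chunk and return the number of requests made."""
    requests = 0
    for batch in chunked(mutations, batch_size):
        send_batch(batch)
        requests += 1
    return requests


# Stand-in for a real client: record each batch instead of sending it.
sent = []
request_count = write_in_batches(range(250), sent.append, batch_size=100)
```

Here 250 mutations are sent in three requests instead of 250, which is the effect batching is meant to achieve.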

Security

- Set up permissions and roles: To ensure secure access to the Bigtable instance and its tables, set up permissions and roles. Use GCP’s Identity and Access Management (IAM) features to assign predefined roles, such as “Bigtable Reader” and “Bigtable User,” to users and groups, and grant each principal only the access it needs.

- Use encryption: Encryption helps protect data at rest and in transit. Bigtable encrypts data at rest and in transit by default; if you need to control the encryption keys yourself, use customer-managed encryption keys (CMEK).

- Monitor for security threats: Monitoring for security threats is critical to ensuring the security of your Bigtable cluster. Use GCP’s security features, such as Security Command Center and VPC Service Controls, to monitor for security threats and protect your cluster.

By following these best practices, you can ensure that your Google Bigtable cluster is running efficiently, securely, and effectively. Regularly monitoring and maintaining the system is also essential to ensure optimal performance and security.

Comparing Google Bigtable to Other NoSQL Databases

Google Bigtable is a powerful NoSQL database service that offers high performance, scalability, and flexibility. However, it’s not the only NoSQL database service available. Here’s how Google Bigtable compares to other popular NoSQL databases, such as Apache Cassandra, MongoDB, and Amazon DynamoDB.

Google Bigtable vs. Apache Cassandra

- Data Model: Both Google Bigtable and Apache Cassandra use a column-family (wide-column) data model. In Bigtable, only column families are declared up front, and values are generally stored as uninterpreted bytes. Cassandra tables are typically defined through CQL with named, typed columns, which is more rigid but can be beneficial when you want the database to enforce structure.

- Scalability: Both Google Bigtable and Apache Cassandra scale horizontally by adding nodes. With Bigtable, Google operates the cluster and you adjust the node count; with Cassandra, you typically run and tune the peer-to-peer cluster yourself or rely on a managed offering.

- Integration: Google Bigtable is tightly integrated with other Google tools and services, such as Google Cloud Dataflow and Google Cloud Dataproc. Apache Cassandra, on the other hand, is an open-source database that is not tied to one cloud provider and can be integrated with various tools and services.

Google Bigtable vs. MongoDB

- Data Model: Google Bigtable uses a column-family data model, while MongoDB uses a document-oriented data model. This means that MongoDB is better suited for storing and querying complex, nested data structures.

- Scalability: Both Google Bigtable and MongoDB scale horizontally. Bigtable distributes rows across nodes by row-key range, while MongoDB scales out through sharding on a shard key that you choose. MongoDB also offers secondary indexes and a rich query language, whereas Bigtable reads are primarily driven by the row key.

- Integration: Google Bigtable is tightly integrated with other Google tools and services, while MongoDB can be self-hosted or run as a managed service and can be integrated with various tools and services.

Google Bigtable vs. Amazon DynamoDB

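- Data Model: Google Bigtable is a wide-column store in which each row key maps to columns grouped into column families, while Amazon DynamoDB is a key-value and document database whose items are addressed by a partition key and an optional sort key. Both are keyed stores, but Bigtable can keep multiple timestamped versions of each cell.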

- Scalability: Both Google Bigtable and Amazon DynamoDB are highly scalable and designed for low-latency, high-throughput workloads. The main difference is in how capacity is managed: Bigtable capacity is set by the number of nodes in a cluster, while DynamoDB offers provisioned and on-demand capacity modes.

- Integration: Google Bigtable is tightly integrated with other Google tools and services, while Amazon DynamoDB is tightly integrated with other Amazon Web Services (AWS) tools and services.

When choosing a NoSQL database service, it’s essential to consider your specific use case and requirements. Google Bigtable is an excellent choice for handling massive datasets and integrating with other Google tools and services. However, other NoSQL databases, such as Apache Cassandra, MongoDB, and Amazon DynamoDB, may be better suited for specific use cases and requirements.

The Future of Google Bigtable

Google Bigtable is a powerful NoSQL database service that has been a cornerstone of Google’s infrastructure for over a decade. As the demand for handling large amounts of data continues to grow, Google Bigtable is poised to play an even more critical role in the future of data management.

Potential Updates and New Features

- Improved Integration: Google is continuously working on improving the integration of Bigtable with other Google tools and services. In the future, we can expect even tighter integration with services such as Google Cloud Dataflow, Google Cloud Dataproc, and Google Kubernetes Engine.

- Enhanced Security: Security is a top priority for Google, and we can expect continued investment in enhancing the security features of Bigtable. This includes better access control, encryption, and monitoring capabilities.

- Advanced Analytics: Google is investing in advanced analytics capabilities for Bigtable, such as machine learning and artificial intelligence. This will enable users to gain deeper insights from their data and make more informed decisions.

The Growing Importance of NoSQL Databases

NoSQL databases, such as Google Bigtable, are becoming increasingly important as the amount of data being generated continues to grow. NoSQL databases offer high performance, scalability, and flexibility, making them ideal for handling large amounts of data. As the demand for handling big data grows, NoSQL databases will become even more critical in the data management landscape.

The Role of Google Bigtable in the NoSQL Landscape

Google Bigtable is a powerful NoSQL database service that offers high performance, scalability, and flexibility. Its tight integration with other Google tools and services makes it an excellent choice for handling large amounts of data in a cloud environment. With its continued investment in updates, new features, and integrations, Google Bigtable is poised to play an even more critical role in the future of data management.