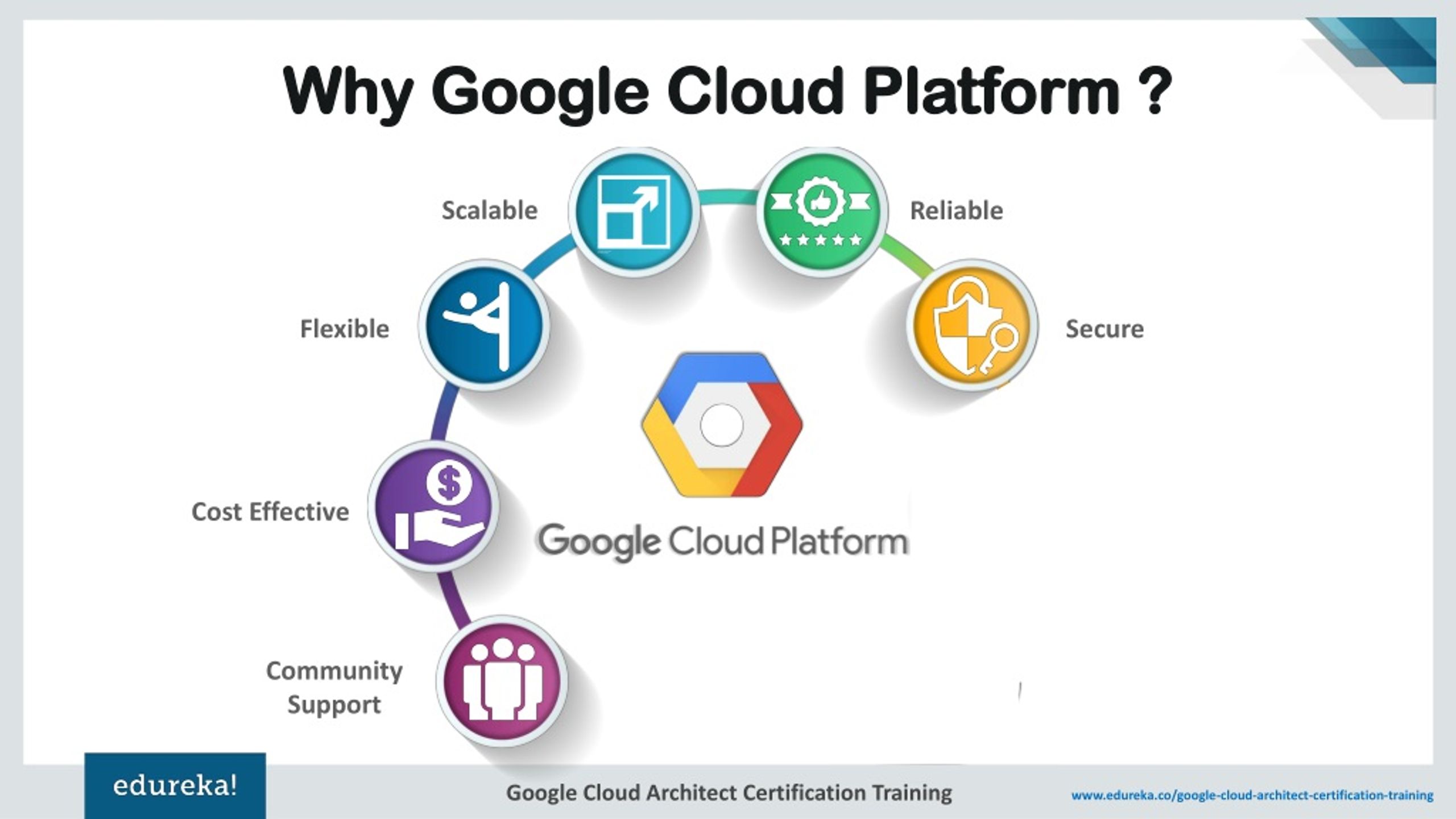

Introduction to Google Cloud Platform (GCP) and Its Key Components

Google Cloud Platform (GCP) has emerged as a leading cloud service provider, offering a wide range of services and tools for businesses and developers. Understanding the main GCP components is crucial for getting the most out of the platform. This guide provides an overview of the key ones, including Compute Engine, Cloud Storage, Kubernetes Engine, Virtual Private Cloud, Dataflow, AI Platform, and Identity and Access Management, so you can make informed decisions about which services fit your needs.

Exploring GCP Compute Engine: Virtual Machines and Scalability

Google Cloud Platform’s Compute Engine is GCP’s infrastructure-as-a-service offering, providing virtual machines (VMs) and scalable computing resources. It enables businesses and developers to create, manage, and customize VMs to fit their specific needs. Because capacity can be added or released on demand, you can match resources to each workload’s performance requirements and keep costs in line with actual usage.

Compute Engine offers a wide range of predefined machine types, allowing users to select an appropriate configuration based on their workload requirements. Users can also create custom machine types, choosing the number of vCPUs and the amount of memory themselves. This level of customization, combined with the ability to scale resources up or down as needed, makes Compute Engine adaptable to a wide variety of workloads.
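As a rough illustration of custom machine types, the sketch below builds a custom machine-type name and a matching `gcloud compute instances create` command line without running it. The `SERIES-custom-VCPUS-MEMORY_MB` naming pattern and the 256 MB memory granularity are assumptions to verify against the current Compute Engine documentation, since per-series limits differ; the instance name and zone are hypothetical.

```python
"""Sketch: build a custom machine-type name and a gcloud command line.

Assumptions: the "SERIES-custom-VCPUS-MEMORY_MB" naming pattern and the
256 MB memory granularity; per-series vCPU/memory limits are not checked.
"""


def custom_machine_type(vcpus: int, memory_mb: int, series: str = "n2") -> str:
    """Return a custom machine-type name such as 'n2-custom-4-16384'."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    if memory_mb <= 0 or memory_mb % 256 != 0:
        raise ValueError("memory_mb must be a positive multiple of 256")
    return f"{series}-custom-{vcpus}-{memory_mb}"


def create_instance_command(name: str, zone: str, machine_type: str) -> list:
    """Build (but do not run) a `gcloud compute instances create` command."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
    ]


if __name__ == "__main__":
    mt = custom_machine_type(4, 16 * 1024)  # 4 vCPUs, 16 GB of memory
    print(" ".join(create_instance_command("demo-vm", "us-central1-a", mt)))
```

Keeping command construction separate from execution makes the configuration easy to review or test before anything is created in a project.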

Maximizing Data Storage with Google Cloud Storage

Google Cloud Storage is a versatile object storage service and one of GCP’s essential components. It lets businesses and developers store and retrieve large amounts of data. Cloud Storage offers several storage classes, each designed for a different access pattern, and all of them provide the same high durability and security features.

The Standard storage class is ideal for frequently accessed data and has no minimum storage duration. Nearline is designed for data accessed about once a month or less, such as backups, and has a 30-day minimum storage duration. Coldline targets data accessed at most about once a quarter (90-day minimum), which suits disaster recovery copies. Archive is the lowest-cost class for long-term archival of data accessed less than once a year (365-day minimum). All of these classes offer millisecond access latency; the colder classes trade lower storage prices for higher data retrieval fees.
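To make the trade-off concrete, here is a small sketch that picks a class from the expected interval between reads, covering all four current classes (Standard, Nearline, Coldline, Archive). The thresholds follow Google’s general guidance (roughly monthly, quarterly, and yearly access) and the minimum storage durations are the per-class billing minimums; prices are deliberately left out, and the function names are hypothetical.

```python
"""Sketch: choose a Cloud Storage class from expected access frequency.

Class names match the storageClass values used by the Cloud Storage API.
Thresholds are general guidance, not a pricing calculation.
"""

# Minimum storage duration in days per class (Standard has none).
MIN_STORAGE_DAYS = {"STANDARD": 0, "NEARLINE": 30, "COLDLINE": 90, "ARCHIVE": 365}


def choose_storage_class(days_between_reads: float) -> str:
    """Return the coldest class whose intended access pattern fits."""
    if days_between_reads >= 365:
        return "ARCHIVE"
    if days_between_reads >= 90:
        return "COLDLINE"
    if days_between_reads >= 30:
        return "NEARLINE"
    return "STANDARD"


def below_minimum_duration(storage_class: str, days_stored: int) -> bool:
    """True if deleting after `days_stored` days would incur an early-deletion charge."""
    return days_stored < MIN_STORAGE_DAYS[storage_class]


if __name__ == "__main__":
    for days in (1, 45, 120, 400):
        print(days, choose_storage_class(days))
```

The second helper captures the catch with colder classes: data deleted before the minimum duration is billed as if it had been stored for the full minimum.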

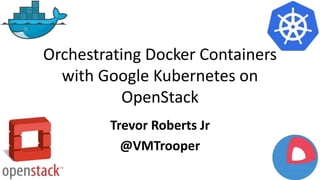

Orchestrating Containers with Google Kubernetes Engine

Google Kubernetes Engine (GKE) is GCP’s managed service for deploying, managing, and scaling containerized applications. GKE simplifies working with Kubernetes, an open-source platform for automating container deployment, scaling, and management, so businesses and developers can use Kubernetes without deep expertise in running the orchestration layer themselves.

A Kubernetes cluster consists of a control plane, which schedules workloads and manages cluster state, and worker nodes, which run your containers. GKE operates the control plane for you, allowing you to focus on deploying and managing your applications. Key benefits of using GKE include simplified management, automatic scaling of both Pods and cluster nodes, and built-in security features such as managed upgrades that keep clusters patched, helping keep your containerized applications reliable and secure.
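For the automatic scaling mentioned above, Pod-level scaling on GKE uses the Kubernetes Horizontal Pod Autoscaler (HPA). The sketch below reproduces only the HPA’s core rule from the Kubernetes documentation, desired = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default). Real HPA behavior also accounts for Pod readiness, missing metrics, and stabilization windows, which are omitted; the min/max defaults here are illustrative.

```python
"""Sketch of the Horizontal Pod Autoscaler's core scaling rule."""
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Return the replica count the basic HPA formula would request."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas/maxReplicas in an HPA spec).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 Pods averaging 90% CPU against a 60% target -> scale out.
    print(desired_replicas(3, 90, 60))
```

Node-level scaling (adding or removing VMs in a node pool) is handled separately by GKE’s cluster autoscaler and is not modeled here.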

How to Design a Robust Network with Google Cloud Virtual Private Cloud

Google Cloud Virtual Private Cloud (VPC) is GCP’s core networking component, enabling businesses and developers to design secure and scalable networks. With VPC, you can create and configure virtual networks to meet your specific needs while keeping your resources isolated from other tenants in a multi-tenant cloud.

To get started with VPC, follow these steps:

- Create a new VPC network: Begin by creating a new VPC network in the Google Cloud Console. This network will serve as the backbone for your virtual infrastructure.

- Define subnets: Divide your VPC network into subnets to logically organize your resources and control access to them. A VPC network is global, while each subnet belongs to a single region, so you can build one global network with localized resources in several regions.

- Configure firewall rules: Implement firewall rules to control both inbound and outbound traffic to your resources. This ensures that only authorized traffic is allowed, enhancing network security.

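- Establish routes: VPC automatically creates subnet routes so that traffic can flow between subnets in the same network. Add custom routes, either static routes or dynamic routes learned through Cloud Router, to control how traffic reaches external networks such as on-premises sites or the internet.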
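The subnet step can be planned in code before anything is created. This sketch uses Python’s `ipaddress` module to carve non-overlapping regional ranges out of one address block, then builds (without running) the `gcloud` commands for a custom-mode network. The `10.10.0.0/16` block, the /20 subnet size, the network name, and the region list are illustrative assumptions.

```python
"""Sketch: plan non-overlapping regional subnets for a custom-mode VPC."""
import ipaddress


def plan_subnets(supernet, regions, prefix=20):
    """Assign one /prefix range from `supernet` to each region, in order."""
    blocks = ipaddress.ip_network(supernet).subnets(new_prefix=prefix)
    plan = {}
    for region in regions:
        try:
            plan[region] = str(next(blocks))
        except StopIteration:
            raise ValueError(f"{supernet} has no room for another /{prefix}") from None
    return plan


def gcloud_commands(network, plan):
    """Build (but do not run) gcloud commands for the network and its subnets."""
    cmds = [["gcloud", "compute", "networks", "create", network, "--subnet-mode=custom"]]
    for region, cidr in plan.items():
        cmds.append([
            "gcloud", "compute", "networks", "subnets", "create", f"{network}-{region}",
            f"--network={network}", f"--region={region}", f"--range={cidr}",
        ])
    return cmds


if __name__ == "__main__":
    plan = plan_subnets("10.10.0.0/16", ["us-central1", "europe-west1", "asia-east1"])
    for cmd in gcloud_commands("demo-vpc", plan):
        print(" ".join(cmd))
```

Allocating ranges from a single block up front avoids overlaps that would otherwise block VPC peering or hybrid connectivity later.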

By following these steps, you can create a robust and secure network with Google Cloud VPC, which gives businesses and developers the flexibility and control needed to build and manage complex cloud infrastructure.
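For the firewall-rule step described earlier, it helps to know how conflicting rules are resolved. The sketch below models VPC ingress evaluation in a deliberately simplified way: rules match only on protocol, destination port, and source CIDR. As in VPC, a lower priority number takes precedence, a deny rule beats an allow rule at the same priority, and traffic that matches no rule falls through to the implied "deny all ingress" rule. The `Rule` class and the example rules are hypothetical.

```python
"""Simplified model of VPC firewall evaluation for ingress traffic."""
import ipaddress
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    priority: int          # 0-65535; lower number = higher precedence
    action: str            # "allow" or "deny"
    protocol: str          # e.g. "tcp"
    ports: tuple
    source_ranges: tuple

    def matches(self, protocol, port, source_ip):
        ip = ipaddress.ip_address(source_ip)
        return (
            self.protocol == protocol
            and port in self.ports
            and any(ip in ipaddress.ip_network(r) for r in self.source_ranges)
        )


def evaluate_ingress(rules, protocol, port, source_ip):
    """Return "allow" or "deny" for one incoming connection."""
    matching = [r for r in rules if r.matches(protocol, port, source_ip)]
    if not matching:
        return "deny"  # implied ingress rule
    # Lowest priority number wins; at equal priority, deny sorts first.
    best = min(matching, key=lambda r: (r.priority, r.action != "deny"))
    return best.action


RULES = [
    Rule("allow-ssh-office", 1000, "allow", "tcp", (22,), ("203.0.113.0/24",)),
    Rule("deny-ssh-all", 2000, "deny", "tcp", (22,), ("0.0.0.0/0",)),
    Rule("allow-web", 1000, "allow", "tcp", (80, 443), ("0.0.0.0/0",)),
]

if __name__ == "__main__":
    print(evaluate_ingress(RULES, "tcp", 22, "203.0.113.5"))
    print(evaluate_ingress(RULES, "tcp", 22, "198.51.100.7"))
```

With these rules, SSH is allowed only from the office range, web traffic is open, and anything else (for example UDP) is dropped by the implied rule.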

Unleashing the Power of Data Analytics with Google Cloud Dataflow

Google Cloud Dataflow is a fully managed service for processing and analyzing large data sets in both batch and streaming (real-time) modes. Dataflow simplifies pipeline creation and scales pipelines automatically, letting businesses and developers handle data processing tasks without managing the underlying infrastructure.

Dataflow integrates with other GCP services, such as BigQuery and Pub/Sub, allowing you to build end-to-end data workflows: ingest data from various sources, process it with Dataflow, and store the results in BigQuery for further analysis or visualization. Because Dataflow is serverless, you can focus on developing data processing pipelines without managing infrastructure.

Some key benefits of using Dataflow for data processing include:

- Simplified pipeline creation: Dataflow’s Apache Beam SDK enables you to define both batch and streaming data pipelines using a unified programming model. This simplifies the development process and allows you to create complex data workflows with ease.

- Automatic scaling: Dataflow automatically scales your pipelines based on the data volume and processing requirements, ensuring optimal performance and cost-efficiency.

- Cost optimization: You pay only for the worker resources your jobs actually consume, and autoscaling helps you avoid paying for idle capacity.

- Integration with other GCP services: Dataflow’s connectors for BigQuery, Pub/Sub, and other GCP services let you build interconnected data systems.
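To show what the unified batch and streaming model computes, the sketch below counts events per key in fixed 60-second windows. In the Apache Beam Python SDK this would roughly be `beam.WindowInto(window.FixedWindows(60))` followed by `beam.combiners.Count.PerKey()`; the code here is plain Python, not the Beam API, and runs over a bounded list so the same windowing logic reads as a batch job. The event data is made up for illustration.

```python
"""Plain-Python illustration of fixed-window, per-key counting."""
from collections import Counter


def fixed_window_counts(events, window_seconds=60):
    """Count events per (window_start, key).

    `events` is an iterable of (timestamp_seconds, key) pairs; each event is
    assigned to the window [window_start, window_start + window_seconds).
    """
    counts = Counter()
    for ts, key in events:
        window_start = int(ts // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


if __name__ == "__main__":
    events = [(5, "click"), (42, "view"), (59, "click"), (61, "click"), (130, "view")]
    print(fixed_window_counts(events))
```

On Dataflow the same grouping runs continuously over an unbounded source such as Pub/Sub, with the service deciding when each window’s result is emitted.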

By incorporating Google Cloud Dataflow into your data processing strategy, you can streamline data workflows and turn raw data into results that support informed business decisions.

Implementing Machine Learning Solutions with Google Cloud AI Platform

Google Cloud AI Platform is a unified environment for building, deploying, and managing machine learning models. It has since been succeeded by Vertex AI, which brings these capabilities together under one product. AI Platform simplifies the machine learning lifecycle, letting you focus on model development rather than infrastructure.

With AI Platform, you can train, evaluate, and deploy machine learning models using pre-built models, custom algorithms, or automated machine learning tools. This flexibility allows you to choose the best approach for your specific use case, whether it’s image recognition, natural language processing, or predictive analytics.

Some key benefits of using AI Platform for machine learning tasks include:

- Simplified machine learning lifecycle: AI Platform provides managed services for training, evaluating, and deploying models, so you do not have to build and operate that infrastructure yourself.

- Pre-built models: AI Platform offers a variety of pre-built models for common use cases, allowing you to quickly implement machine learning solutions without the need for extensive custom development.

- Automated machine learning: AI Platform’s AutoML capabilities enable you to train high-quality models without requiring deep machine learning expertise.

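- Model versioning: AI Platform organizes deployed models into versions, so you can deploy a new version alongside an existing one, choose which version serves by default, and roll back if needed. Keeping your training code in a version control system such as Git complements this and supports reproducibility and traceability.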
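AI Platform Prediction exposes deployed models, and the versions under them, through a REST `:predict` method whose request body carries an `instances` list. The sketch below only builds the URL and JSON body; it sends nothing, omits authentication (an OAuth bearer token) and regional endpoints, and uses hypothetical project, model, and version names. Vertex AI, the successor product, uses different endpoint paths.

```python
"""Sketch: build an AI Platform Prediction online-prediction request."""
import json


def predict_request(project, model, instances, version=None):
    """Return (url, body) for a :predict call on a model or one of its versions."""
    name = f"projects/{project}/models/{model}"
    if version is not None:
        name += f"/versions/{version}"  # pin a specific version
    # Without a version, the request goes to the model's default version.
    url = f"https://ml.googleapis.com/v1/{name}:predict"
    body = json.dumps({"instances": instances})
    return url, body


if __name__ == "__main__":
    url, body = predict_request("my-project", "churn_model", [[0.2, 1.5, 3.0]], version="v2")
    print(url)
    print(body)
```

Pinning the version in the request makes it easy to compare a new version with the default one before switching traffic.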

By incorporating Google Cloud AI Platform into your machine learning strategy, you can speed up model development and deployment and drive better business outcomes.

Securing Your GCP Environment with Identity and Access Management

Google Cloud Platform (GCP) Identity and Access Management (IAM) is a crucial component for securing your environment. IAM lets you control who can access which GCP resources and services, using a flexible, granular permission model. By understanding and implementing IAM, businesses and developers can maintain a secure, compliant, and efficient cloud infrastructure.

IAM is built on permissions, roles, and policies. A permission specifies an action that can be performed on a resource (for example, `storage.objects.get`), and a role is a collection of permissions. Permissions are not granted to identities directly; instead, an allow policy attached to a resource binds principals, such as users, groups, or service accounts, to roles. By granting appropriate roles, you can ensure that users have the access they need to perform their tasks while minimizing the risk of unauthorized actions.
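The sketch below shows how roles, permissions, and bindings fit together. The policy dictionary mirrors the shape of IAM’s JSON allow policy (a list of bindings, each pairing one role with a list of members), but the role-to-permission table is a small hand-written subset, and the member names are hypothetical. Real IAM also resolves group membership, inherits policies down the resource hierarchy, and applies conditions and deny policies, none of which is modeled here.

```python
"""Sketch: check a permission against an IAM-style allow policy."""

# Illustrative subset of permissions; real predefined roles contain more.
ROLE_PERMISSIONS = {
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
    "roles/storage.objectAdmin": {
        "storage.objects.get", "storage.objects.list",
        "storage.objects.create", "storage.objects.delete",
    },
}

POLICY = {
    "bindings": [
        {"role": "roles/storage.objectViewer",
         "members": ["group:analysts@example.com"]},
        {"role": "roles/storage.objectAdmin",
         "members": ["serviceAccount:etl@my-project.iam.gserviceaccount.com"]},
    ]
}


def has_permission(policy, member, permission):
    """True if any role bound to `member` includes `permission`.

    Members are compared literally; a user inside a group is not expanded.
    """
    for binding in policy.get("bindings", []):
        role_perms = ROLE_PERMISSIONS.get(binding["role"], set())
        if member in binding["members"] and permission in role_perms:
            return True
    return False


if __name__ == "__main__":
    print(has_permission(POLICY, "group:analysts@example.com", "storage.objects.get"))
    print(has_permission(POLICY, "group:analysts@example.com", "storage.objects.delete"))
```

Granting the narrower viewer role to the analysts group and the admin role only to the pipeline’s service account is the least-privilege pattern that the granular permission model is meant to support.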

Some key benefits of using IAM for access control include:

- Granular permissions: IAM allows you to define fine-grained permissions, ensuring that users have the exact access they need and no more.

- Centralized management: IAM policies can be managed centrally, simplifying access control and reducing the administrative burden.

- Auditing capabilities: Administrative actions, including IAM policy changes, are recorded in Cloud Audit Logs, and data access can be logged as well, letting you track who did what, where, and when.

By incorporating Identity and Access Management into your GCP environment, you can maintain a secure, compliant, and efficient infrastructure, protecting your data and resources while enabling your team to innovate and grow.