Demystifying Traffic Management: Gateway and Load Balancer Essentials

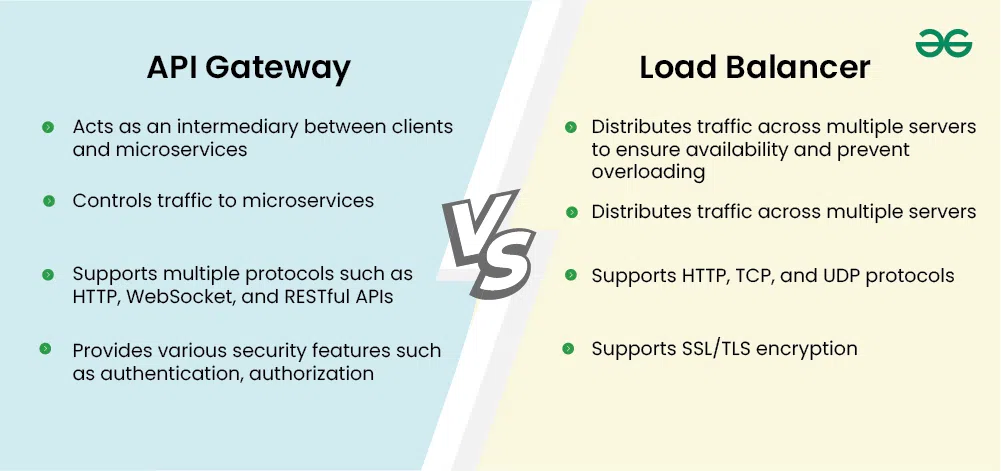

In modern application architectures, efficient traffic management is crucial for performance, reliability, and security. Two key components in this area are API Gateways and Load Balancers. Both direct and control network traffic, but their functions differ significantly, and understanding those differences is essential for designing robust, scalable systems. Load balancers primarily distribute traffic across multiple servers. API Gateways manage and secure APIs and offer a broader range of features. This article compares the two in detail, explores their individual strengths, and shows how they can be combined into a comprehensive traffic management strategy.

How to Select the Optimal Traffic Routing Method for Your Application

Choosing the right traffic routing method is critical for application performance and reliability. Deciding between a gateway and a load balancer requires careful consideration of your application’s specific needs: its architecture, traffic patterns, security requirements, and desired level of scalability. Understanding these factors will guide you to the best solution for your situation.

Consider your application’s architecture. Is it a monolithic application or a distributed microservices architecture? Microservices often benefit from an API Gateway, which can manage the complexity of routing requests to many different services. A monolithic application may find a load balancer sufficient for distributing traffic across multiple servers.

Evaluate the complexity of your traffic. Do you need advanced features such as request transformation or rate limiting? API Gateways excel at these tasks, while load balancers focus primarily on traffic distribution. The crucial point in this comparison is that an API Gateway offers far more tools for managing APIs.

Consider your security needs. Do you require authentication, authorization, or other API-level security measures? API Gateways provide these features and act as a gatekeeper for your APIs. Load balancers typically offer more basic protections, such as TLS termination, but are not designed for comprehensive API security.

Scalability is another key consideration. Both API Gateways and Load Balancers improve scalability, but in different ways: load balancers spread traffic so that no single server is overloaded, while API Gateways control how APIs are consumed, for example by throttling heavy clients. Weigh the importance of each factor to determine the best traffic routing method for your application. Often, a combination of an API Gateway and a Load Balancer provides the most robust and scalable solution. Whichever configuration you choose involves trade-offs, so analyze your needs carefully; understanding those trade-offs is crucial for optimizing your application’s performance and security.

Load Balancing Unveiled: Distributing Traffic for High Availability

Load balancers are essential components of modern application infrastructure. Their primary responsibility is distributing incoming network traffic across multiple servers so that no single server is overwhelmed, which improves availability and responsiveness. Any comparison of gateways and load balancers starts with this core function.

Several load balancing algorithms exist, each with its own strengths and weaknesses. Round-robin sends requests to each server in the pool in turn. Least connections directs traffic to the server with the fewest active connections. Other algorithms factor in server health, response time, or resource utilization. The right choice depends on the application’s requirements and optimization goals. For instance, when a load balancer sits in front of several API gateway instances, it can use one of these algorithms to spread requests evenly across them.
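
To make the two named algorithms concrete, here is a minimal in-process sketch in Python. The class names and server names are hypothetical, and a production load balancer would implement this in its data plane with thread safety and health checking that this sketch leaves out.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Sends each new connection to the server with the fewest active ones."""

    def __init__(self, servers: list[str]) -> None:
        # Active connection count per server, all starting at zero.
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server with the lowest count, so ties go
        # to the server that was registered first.
        server = min(self.active, key=self.active.__getitem__)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when a connection closes so the counts stay accurate.
        self.active[server] -= 1


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.acquire()   # app-1 (tie, registered first)
second = lc.acquire()  # app-2
lc.release(first)      # app-1's connection closes
print(lc.acquire())    # app-1
```

Round-robin needs no state beyond a position in the list, which suits servers of equal capacity handling similar requests; least connections costs a counter per server but adapts when some requests hold connections much longer than others.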

The benefits of load balancing are multifaceted. High availability is a primary advantage: traffic can be automatically redirected away from servers that fail health checks. Scalability also improves, because new servers can be added to the pool to absorb increased traffic without disrupting service. Spreading the workload across servers also keeps any one of them from saturating, which reduces latency and improves the user experience. These advantages frame the gateway-versus-load-balancer question: when is simple traffic distribution sufficient, and when is more advanced API management required? Load balancers are a cornerstone of any robust, scalable web infrastructure.
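
The failover and scale-out behavior described above can be sketched as a round-robin balancer that skips servers marked unhealthy. This is an illustrative assumption, not any particular product’s logic: `mark_down` and `mark_up` stand in for a real health checker (for example, periodic HTTP probes), and `add_server` shows new capacity joining the pool at runtime.

```python
class HealthAwareBalancer:
    """Round-robin over a server pool, skipping servers marked unhealthy."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index of the next server to try

    def mark_down(self, server: str) -> None:
        # Stand-in for a failed health check.
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        # Stand-in for a recovered health check.
        if server in self.servers:
            self.healthy.add(server)

    def add_server(self, server: str) -> None:
        # Scaling out: new capacity joins the rotation without a restart.
        self.servers.append(server)
        self.healthy.add(server)

    def pick(self) -> str:
        # Try each server at most once, continuing from where we left off.
        for _ in range(len(self.servers)):
            index = self._next % len(self.servers)
            self._next = index + 1
            server = self.servers[index]
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = HealthAwareBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")                 # app-2 fails its health check
print([lb.pick() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
lb.add_server("app-4")                # add capacity under load
print(lb.pick())                      # app-4
```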

API Gateways Explained: Managing APIs and Securing Services

API Gateways are pivotal components in modern application architecture, designed specifically to manage and secure Application Programming Interfaces (APIs). Unlike load balancers, which focus primarily on distributing network traffic, API Gateways offer a comprehensive suite of API management functions. Comparing the two reveals distinct roles, with gateways excelling at API-specific tasks.

The core function of an API Gateway is to act as a single point of entry for all API requests. This centralized control allows consistent application of security policies, traffic management rules, and monitoring. Its key functions include request routing, which directs incoming API requests to the appropriate backend services. Authentication verifies the identity of the client making the request, while authorization determines whether that client has permission to access the requested resource. Rate limiting prevents abuse and ensures fair usage by controlling how many requests a client can make within a specific time window. Request transformation modifies the format or content of requests to match the requirements of the backend services, and response aggregation combines responses from multiple backend services into a single, unified response for the client. These capabilities distinguish an API gateway from a load balancer and highlight the gateway’s API-centric approach.
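
Rate limiting can be implemented in several ways; the token bucket is one common algorithm, shown here as a minimal sketch. The capacity and refill rate are illustrative values, and the injectable `clock` parameter exists only so the behavior can be demonstrated deterministically. Counters kept in one process apply per gateway instance; a gateway tier with several instances would need shared state (or per-instance limits) to enforce a global quota.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """Keeps one token bucket per client key (for example, per API key)."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.capacity, self.rate, self.clock)
        return self.buckets[client_id].allow()


# A fake clock makes the example deterministic.
now = [0.0]
limiter = RateLimiter(capacity=2, rate=1.0, clock=lambda: now[0])
print([limiter.allow("key-a") for _ in range(3)])  # [True, True, False]
now[0] = 1.0                                       # one second later: one token refilled
print(limiter.allow("key-a"))                      # True
print(limiter.allow("key-b"))                      # True (separate bucket)
```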

By implementing an API Gateway, organizations can enhance the security, performance, and manageability of their APIs. The gateway provides a layer of abstraction between clients and backend services, shielding the internal architecture from direct exposure. This abstraction lets backend services evolve independently without affecting the client experience. Authentication and authorization ensure that only authorized users can access sensitive data and functionality. Rate limiting helps mitigate denial-of-service attacks and abusive clients, keeping API resources available to all users. Request transformation simplifies integration with diverse backend systems, while response aggregation improves performance by reducing the number of round trips the client must make. When choosing a traffic management solution, the decision hinges on the application’s specific needs: if API management and security are paramount, an API Gateway is the better choice.
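
Response aggregation, assuming the backends can be called concurrently, might look like the following asyncio sketch. The three `fetch_*` coroutines are hypothetical stand-ins for HTTP calls to backend services; the point is that the gateway fans out in parallel and returns one merged payload, so the client makes a single request instead of three.

```python
import asyncio


# Hypothetical backend calls; a real gateway would issue HTTP requests here.
async def fetch_profile(user_id: str) -> dict:
    await asyncio.sleep(0.01)  # simulated network latency
    return {"id": user_id, "name": "Ada"}


async def fetch_orders(user_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"order_id": 1, "total": 42.0}]


async def fetch_recommendations(user_id: str) -> list:
    await asyncio.sleep(0.01)
    return ["sku-123", "sku-456"]


async def user_dashboard(user_id: str) -> dict:
    # Fan out to the three services concurrently, then merge the results
    # so the client receives one response instead of making three calls.
    profile, orders, recommendations = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
        fetch_recommendations(user_id),
    )
    return {"profile": profile, "orders": orders, "recommendations": recommendations}


print(asyncio.run(user_dashboard("u-1")))
```

By default `asyncio.gather` propagates the first exception; a real aggregator would need a policy for partial failures, such as passing `return_exceptions=True` and returning whatever data is available.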

Key Distinctions: Gateway Capabilities Beyond Load Balancing

The starting point for comparing gateways and load balancers is understanding their distinct capabilities. Both manage network traffic, but they often operate at different layers and offer different functionality: many load balancers work at the transport layer (Layer 4), routing connections by IP address and port, whereas API gateways work at the application layer (Layer 7) and inspect individual HTTP requests, although Layer 7 load balancers also exist. Load balancers are primarily concerned with distributing traffic efficiently across multiple servers, preventing any single server from being overwhelmed to ensure high availability and optimal performance. Algorithms such as round-robin or least connections determine how that traffic is distributed.

API gateways, on the other hand, go beyond simple traffic distribution. Although an API gateway can perform load balancing, its primary purpose is to manage and secure APIs. It acts as a central point of entry for all API requests, providing a unified interface for clients, and handles several critical tasks, including authentication, authorization, rate limiting, and request transformation. In practice, this means the gateway verifies the client’s identity, checks that the client has permission to access the requested resource, and limits how many requests the client can make within a given time window. Request transformation modifies the request format to match the backend service’s requirements, which is essential when integrating diverse systems. When weighing a gateway against a load balancer, the key question is whether you need these API management features.
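
As a sketch of request transformation, the function below rewrites a public API request into the shape a hypothetical backend expects: it converts top-level camelCase JSON keys to snake_case, strips the client’s `Authorization` header after the gateway has validated it, and forwards the caller’s identity in a made-up `X-Client-Id` header. The field names, the header name, and the choice to strip credentials are all illustrative assumptions.

```python
import json
import re


def to_snake_case(name: str) -> str:
    # Insert "_" before each interior capital letter: "customerId" -> "customer_id".
    return re.sub(r"(?<!^)(?=[A-Z])", "_", name).lower()


def transform_request(headers: dict[str, str], body: bytes,
                      client_id: str) -> tuple[dict[str, str], bytes]:
    """Rewrite a public API request into the format a hypothetical backend expects."""
    payload = json.loads(body)
    # Rename top-level keys only; nested objects are left as-is for brevity.
    backend_payload = {to_snake_case(key): value for key, value in payload.items()}
    # Drop the client's credential (already validated by the gateway) and
    # pass the authenticated identity downstream instead.
    backend_headers = {k: v for k, v in headers.items() if k.lower() != "authorization"}
    backend_headers["X-Client-Id"] = client_id
    backend_headers["Content-Type"] = "application/json"
    return backend_headers, json.dumps(backend_payload).encode()


headers, body = transform_request(
    {"Authorization": "Bearer abc", "Accept": "application/json"},
    b'{"customerId": 7, "orderTotal": 19.5}',
    client_id="mobile-app",
)
print(headers)  # {'Accept': 'application/json', 'X-Client-Id': 'mobile-app', 'Content-Type': 'application/json'}
print(body)     # b'{"customer_id": 7, "order_total": 19.5}'
```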

The functions exclusive to API gateways are where the differences between the two become apparent. Load balancers generally lack sophisticated API management features. Authentication and authorization, for example, are typically handled by the gateway rather than the load balancer. Rate limiting, which is crucial for preventing abuse and ensuring fair usage of APIs, is likewise typically a gateway feature. API gateways can also aggregate responses from multiple backend services into a single response, simplifying the client experience. This is particularly valuable in microservices architectures, where a single API request may require data from several different services. The choice between a gateway and a load balancer hinges on whether these advanced API management capabilities are required.

Architectural Considerations: When to Use a Gateway Versus a Load Balancer

Whether to choose an API gateway, a load balancer, or both depends on your application’s architecture and specific needs. Understanding the nuances of each component is crucial for optimal performance and security. The decision should weigh the complexity of your application, the level of API management required, and the security controls you need to implement. A monolithic application with minimal API exposure might need only a load balancer. Conversely, a microservices architecture often benefits significantly from the advanced features of an API gateway.

For microservices architectures, an API gateway is often essential. It acts as a central point for managing and securing access to the various microservices, handling tasks such as authentication, authorization, and rate limiting so that individual services do not have to. In this scenario, the choice leans heavily toward the gateway for its API-centric functions. However, a load balancer can still play a vital role by distributing traffic across multiple instances of the API gateway itself, ensuring high availability and scalability. Consider an e-commerce platform built on microservices: the API gateway handles product catalog requests, order placement, and user authentication, while a load balancer ensures that the gateway tier can handle peak traffic without becoming a bottleneck.
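
The gateway’s routing for the e-commerce example could be expressed as a path-prefix route table with longest-prefix matching, sketched below. The paths, service hostnames, and ports are placeholders, and the `/api/orders/returns` entry is a hypothetical addition included only to show why the longest matching prefix should win.

```python
from typing import Optional

# Hypothetical route table for the e-commerce example; hostnames are placeholders.
ROUTES = {
    "/api/products": "http://catalog-service:8080",
    "/api/orders": "http://order-service:8080",
    "/api/orders/returns": "http://returns-service:8080",
    "/api/auth": "http://auth-service:8080",
}


def resolve(path: str, routes: dict[str, str] = ROUTES) -> Optional[str]:
    """Map a request path to a backend URL using longest-prefix matching."""
    # A prefix matches the exact path or anything below it ("/api/orders/7"),
    # but not a sibling that merely shares characters ("/api/ordersX").
    matches = [p for p in routes if path == p or path.startswith(p + "/")]
    if not matches:
        return None  # a real gateway would respond 404 Not Found
    best = max(matches, key=len)
    # Forward the original path unchanged to the chosen service.
    return routes[best] + path


print(resolve("/api/products/42"))       # http://catalog-service:8080/api/products/42
print(resolve("/api/orders/returns/9"))  # http://returns-service:8080/api/orders/returns/9
print(resolve("/api/ordersX"))           # None
```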

In contrast, a monolithic application with limited external API access might find a load balancer sufficient. The load balancer distributes incoming traffic across multiple instances of the application server, ensuring high availability and preventing overload. An API gateway could still add benefits such as basic authentication or rate limiting, but the added complexity might not be justified. For applications with specific security requirements, such as those handling sensitive data, an API gateway is generally recommended. It provides a robust layer of security that protects backend services from unauthorized access and malicious attacks, which is especially critical when direct access to backend services could expose vulnerabilities. Ultimately, the choice between an API gateway, a load balancer, or both depends on a careful evaluation of your application’s architecture, security requirements, and API management needs.

Combining Forces: Utilizing Gateways and Load Balancers for Robust Systems

API Gateways and Load Balancers are distinct but not mutually exclusive. Working in tandem, they deliver robust, scalable, and secure application architectures. This layered approach plays to the strengths of each component, resulting in a more resilient and efficient system, and the combination of the two is a key element of many modern application designs.

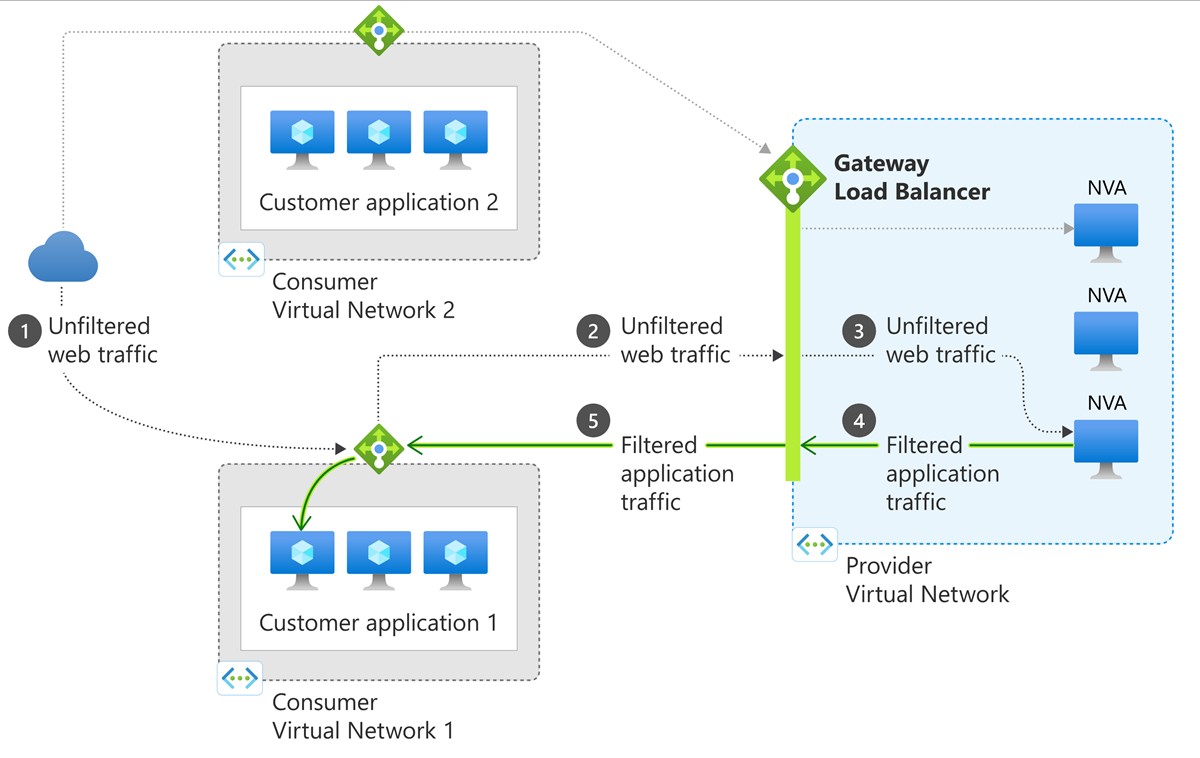

Consider a scenario where a Load Balancer sits in front of multiple instances of an API Gateway. The Load Balancer’s primary responsibility is to distribute incoming traffic across these API Gateway instances, ensuring high availability and preventing any single gateway from becoming a bottleneck. It uses algorithms such as round-robin or least connections to spread the load, which increases the system’s capacity to handle a large volume of requests and improves response times. Once a request reaches an API Gateway instance, the gateway takes over API management and security concerns, including authentication, authorization, rate limiting, and request transformation.
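
The two-tier layout described above can be modeled in a few lines: a round-robin load balancer in front of identical gateway instances, each of which authenticates the caller by API key and routes by path prefix. Everything here (the key store, route table, and instance names) is a simplified, hypothetical stand-in for real components, and rate limiting and transformation are omitted for brevity.

```python
import itertools
from typing import Optional

# Hypothetical stand-ins for a credential store and a route table.
API_KEYS = {"key-123": "shop-frontend"}
ROUTES = {"/api/products": "catalog-service", "/api/orders": "order-service"}


class GatewayInstance:
    """Tier 2: authenticates the caller, then routes by path prefix."""

    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, path: str, api_key: Optional[str]) -> tuple[int, str]:
        client = API_KEYS.get(api_key or "")
        if client is None:
            return 401, "unauthorized"
        # First match is enough here because these prefixes do not nest.
        for prefix, service in ROUTES.items():
            if path == prefix or path.startswith(prefix + "/"):
                return 200, f"{self.name} -> {service} for {client}"
        return 404, "not found"


class LoadBalancer:
    """Tier 1: spreads requests round-robin across identical gateway instances."""

    def __init__(self, gateways: list[GatewayInstance]) -> None:
        self._cycle = itertools.cycle(gateways)

    def forward(self, path: str, api_key: Optional[str]) -> tuple[int, str]:
        return next(self._cycle).handle(path, api_key)


lb = LoadBalancer([GatewayInstance("gw-1"), GatewayInstance("gw-2")])
print(lb.forward("/api/products/42", "key-123"))  # (200, 'gw-1 -> catalog-service for shop-frontend')
print(lb.forward("/api/orders/7", "key-123"))     # (200, 'gw-2 -> order-service for shop-frontend')
print(lb.forward("/api/orders/7", None))          # (401, 'unauthorized')
```

Because every gateway instance applies the same policies, the load balancer can pick any of them; scaling the gateway tier is then a matter of adding instances to the balancer’s pool.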

This layered architecture offers several advantages. First, it enhances scalability: as traffic increases, more API Gateway instances can be added behind the Load Balancer. Second, it improves security: the API Gateway enforces security policies, protecting backend services from unauthorized access. Third, it simplifies API management: the API Gateway acts as a central point for managing and monitoring APIs, while the load balancer focuses on efficient distribution. Some microservices deployments take this further and run separate API Gateway instances for different microservices, with the Load Balancer directing traffic to each. This separation of concerns makes the system easier to manage and maintain. When planning your traffic management strategy, consider this combined approach: the most resilient and efficient systems often leverage both a gateway and a load balancer.

Future Trends: The Evolution of Traffic Management Solutions

The landscape of traffic management is continuously evolving, driven by advances in technology and the changing needs of modern applications. Several emerging trends are poised to reshape how we approach gateways and load balancers, blurring the lines between traditional solutions and paving the way for more intelligent, adaptive systems. One notable trend is the rise of service meshes: dedicated infrastructure layers that manage service-to-service communication within microservices architectures. Service meshes offer advanced features such as traffic shaping, observability, and security policies, often overlapping with functions traditionally associated with API gateways and load balancers. This convergence allows finer-grained control and improved resilience in complex distributed systems.

Another significant development is the increasing adoption of edge computing. By bringing computation and data storage closer to end users, edge computing reduces latency and improves application performance. In this context, traffic management solutions must distribute traffic efficiently across geographically diverse edge locations, which requires routing algorithms that account for network conditions, user location, and application requirements. The integration of AI and machine learning (ML) is also transforming traffic management. AI/ML algorithms can analyze traffic patterns, predict potential bottlenecks, and optimize routing decisions in real time, enabling dynamic scaling, automated fault detection, and proactive performance tuning.
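
The article does not prescribe a specific routing algorithm for edge locations. One simple, non-ML heuristic is to keep an exponentially weighted moving average (EWMA) of measured round-trip times per edge location and send users to the lowest, as sketched below. The edge names, the smoothing factor `alpha`, and the fallback to the first edge when no measurements exist are assumptions for illustration.

```python
class EdgeSelector:
    """Routes users to the edge location with the lowest smoothed latency."""

    def __init__(self, edges: list[str], alpha: float = 0.3) -> None:
        self.edges = list(edges)
        self.alpha = alpha  # weight given to the newest measurement
        self.latency_ms: dict[str, float] = {}

    def record(self, edge: str, rtt_ms: float) -> None:
        # Exponentially weighted moving average: new = a*sample + (1-a)*old.
        previous = self.latency_ms.get(edge)
        if previous is None:
            self.latency_ms[edge] = rtt_ms
        else:
            self.latency_ms[edge] = self.alpha * rtt_ms + (1 - self.alpha) * previous

    def choose(self) -> str:
        measured = [e for e in self.edges if e in self.latency_ms]
        if not measured:
            return self.edges[0]  # no data yet: fall back to a default edge
        return min(measured, key=self.latency_ms.__getitem__)


selector = EdgeSelector(["fra", "iad", "sin"])
selector.record("fra", 20)
selector.record("iad", 90)
selector.record("sin", 180)
print(selector.choose())                     # fra
selector.record("fra", 400)                  # a congestion spike at fra
print(round(selector.latency_ms["fra"], 1))  # 134.0
print(selector.choose())                     # iad
```

A larger `alpha` reacts faster to congestion but is also more easily swayed by a single slow measurement; the trade-off is visible above, where one spike at `fra` is enough to shift traffic to `iad`.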

The future of traffic management will likely involve a hybrid approach that combines the strengths of different technologies into comprehensive solutions. We can expect further integration between service meshes, edge computing platforms, and AI-powered traffic management tools, producing more sophisticated, adaptive systems that respond automatically to changing conditions and optimize performance across diverse environments. As these technologies mature, the distinction between traditional load balancers and API gateways will likely become less defined, with future solutions offering a unified platform for managing all aspects of network traffic and API interactions.