What are Docker Image Layers and Why Do They Matter?

Docker images are built from a series of read-only layers. Each layer represents a set of file system changes produced by an instruction in a Dockerfile. Instructions that modify the file system, such as `RUN`, `COPY`, and `ADD`, each add a new layer on top of the previous one, while instructions such as `ENV` or `CMD` only record metadata. Layers are immutable: once created, they cannot be altered. This immutability is fundamental to Docker’s efficiency and reproducibility, and it underpins how Docker builds and optimizes images.
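As a rough illustration of how instructions map to layers, the sketch below annotates a small Dockerfile. The `alpine:3.19` tag, the `APP_ENV` variable, and the `hello.txt` file are assumptions chosen for the example, not part of any particular project.

```dockerfile
# The base image contributes the starting layers
FROM alpine:3.19

# Metadata only: recorded in the image configuration, adds no file content
ENV APP_ENV=production

# File system change: installing a package adds a new layer
RUN apk add --no-cache curl

# File system change: the copied file adds a new layer
# (hello.txt is a hypothetical file in the build context)
COPY hello.txt /hello.txt

# Metadata only: sets the default command
CMD ["cat", "/hello.txt"]
```

Running `docker history` on the resulting image should show the `RUN` and `COPY` steps with non-zero sizes and the metadata steps at 0B.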

The layered architecture of Docker images provides several advantages. First, it reduces storage and bandwidth consumption: common base layers are shared between images, so they are stored and transferred only once. Second, it accelerates builds: if a layer has not changed, Docker reuses the cached layer instead of rebuilding it. Third, it keeps storage efficient through a union file system such as OverlayFS, which current Docker releases typically use on Linux, or the older AUFS (Advanced Multi-Layered Unification Filesystem). These systems stack layers on top of each other and present a unified view of the file system, so only the differences between layers are stored.

Understanding layers is essential for creating efficient Docker images. Layer management directly affects image size, build speed, and overall resource consumption. By structuring Dockerfiles carefully and leveraging layer caching, developers can significantly improve their Docker workflows, whereas ignoring layers tends to produce bloated images and slow builds.

How to Inspect Docker Image Construction: Examining Layer Details

Inspecting Docker image layers is crucial for understanding how an image is built and identifying areas for optimization. The primary command for this purpose is `docker history`, which displays the layers of an image, their sizes, and the commands that created them. Analyzing this output shows which layers contribute the most to the overall image size, allowing for targeted optimization.

To use `docker history`, run `docker history <image>`, where `<image>` is the name or ID of the image to inspect.

Interpreting the output of `docker history` is essential for effective image optimization. Pay close attention to the `SIZE` column to identify the largest layers, then examine the `COMMAND` column (shown as `CREATED BY` in current Docker releases) to understand what created them. Look for opportunities to combine multiple commands into a single layer, remove unnecessary files, or use a more efficient base image. Keep layer caching in mind as well: a change to one layer invalidates every cached layer after it. Seemingly innocuous changes in a Dockerfile can also have a significant impact on image size, because a file added in one layer still occupies space even if a later layer deletes it.

Optimizing Docker Images: Strategies for Effective Layer Management

Optimizing Docker images is crucial for reducing image size and improving build times, and effective layer management plays a vital role in achieving both. The key techniques are using multi-stage builds, combining commands, ordering commands strategically, and leveraging `.dockerignore` files.

Multi-stage builds significantly reduce the final image size. This is achieved by using multiple `FROM` statements in a single Dockerfile. Each `FROM` instruction starts a new build stage, and artifacts from previous stages can be copied to subsequent stages. This allows you to use larger, more complex images for compilation or testing, and then copy only the necessary runtime components to a smaller, leaner final image.

Docker Layer Caching: Speeding Up Your Build Process

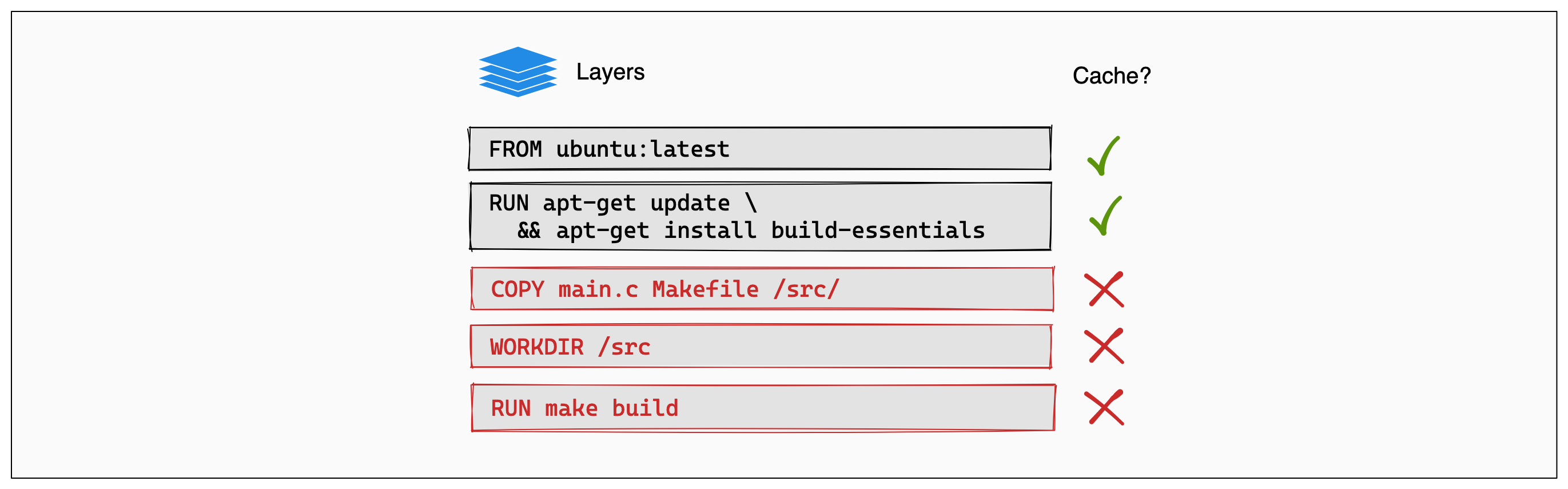

Docker layer caching is a crucial mechanism that significantly accelerates the Docker image build process. Docker leverages cached layers to avoid redundant execution of Dockerfile instructions. During a build, Docker examines each instruction in the Dockerfile. It then searches for an existing layer in its cache that matches the instruction and its context. If a match is found, Docker reuses the cached layer instead of re-executing the instruction. This dramatically reduces build times, especially for complex images with many layers. Understanding how Docker layer caching works is essential for efficient Dockerfile design.

Cache invalidation occurs when Docker detects a change that prevents it from reusing a cached layer. This can happen due to modifications in the Dockerfile itself, changes in the files added via the `COPY` or `ADD` instructions, or updates to the base image. When a layer is invalidated, all subsequent layers in the Dockerfile must also be rebuilt. Therefore, the order of instructions in the Dockerfile is critical. Placing frequently changing instructions, such as copying application source code, towards the end of the Dockerfile minimizes the amount of rebuilding required. Consider a scenario where you modify a source code file. If the `COPY` instruction for that file is near the beginning of the Dockerfile, it invalidates every layer after it, including expensive steps such as dependency installation, leading to a longer build time.
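The ordering principle can be sketched with a small Python image. The file names `requirements.txt` and `app.py` and the `python:3.12-slim` tag are assumptions for illustration.

```dockerfile
FROM python:3.12-slim
WORKDIR /app

# Changes rarely: these two steps stay cached until requirements.txt changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Changes often: editing source code invalidates the cache only from here down
COPY . .

CMD ["python", "app.py"]
```

If `COPY . .` came first instead, every source edit would force the `pip install` step to run again.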

Build arguments can also influence layer caching. Build arguments, defined using the `ARG` instruction in a Dockerfile, allow you to pass variables during the build process. Declaring an `ARG` does not by itself invalidate the cache, but a changed value causes a cache miss at the first instruction that uses it, and every later instruction is then rebuilt as well. For instance, using a build argument to specify a dependency version leads to cache invalidation whenever the version changes. To maximize cache utilization, declare build arguments as late as possible and consider their impact on downstream layers.
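A minimal sketch of this behavior follows, assuming a hypothetical build argument named `APP_VERSION`. The exact cache behavior can vary between builder versions, so treat the comments as the expected outcome rather than a guarantee.

```dockerfile
FROM alpine:3.19

# Runs before the ARG is declared, so build-arg values do not affect it
RUN apk add --no-cache ca-certificates

# APP_VERSION is a hypothetical build argument; override it with
#   docker build --build-arg APP_VERSION=1.2.3 .
ARG APP_VERSION=dev

# First use of the argument: a different value causes a cache miss here
RUN echo "$APP_VERSION" > /version.txt
```

Declaring the argument after the package installation keeps that slower step cached across version bumps.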

Best Practices for Dockerfile Design: Minimizing Layer Bloat

Crafting efficient Dockerfiles is crucial for minimizing layer bloat and creating streamlined, manageable images. A well-structured Dockerfile translates directly into smaller images, faster builds, and quicker deployments. Several key practices help achieve this.

One of the most effective strategies is selecting a minimal base image. Alpine Linux, known for its small footprint, is a popular choice: unlike larger distributions, it includes only essential packages, which reduces the initial image size. Avoiding unnecessary dependencies is equally important, so evaluate each dependency and eliminate any that the application does not strictly require. Clean up temporary files within the same layer that creates them. Package managers often leave behind cache files or temporary installation directories, and removing them in the same `RUN` instruction prevents them from being stored in the image. For example, run `rm -rf /var/lib/apt/lists/*` in the same instruction that installs packages with `apt-get`. Always specify precise version numbers for dependencies; this ensures reproducibility across environments and prevents unexpected updates from introducing compatibility issues or increasing the image size. Use environment variables to manage configuration rather than hardcoding values into the image.
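The practices above might look like the following on a Debian-based image. The package names and the `APP_ENV` variable are placeholders for whatever the application actually needs.

```dockerfile
# Pin the base image tag rather than relying on "latest"
FROM debian:12-slim

# Install and clean up in one RUN so the package index never lands in a layer.
# curl and ca-certificates are example packages; append =<version> to pin them.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl ca-certificates \
    && rm -rf /var/lib/apt/lists/*

# Configuration via an environment variable, overridable at run time with -e
ENV APP_ENV=production
```

The `--no-install-recommends` flag keeps `apt-get` from pulling in optional packages, which is often the simplest way to trim a dependency layer.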

Security is another important consideration when designing Dockerfiles. Adhere to the principle of least privilege when creating users and groups within the image, and avoid running applications as the root user whenever possible. Dedicated user accounts with limited permissions minimize the potential impact of security vulnerabilities.

Use the `.dockerignore` file effectively. It specifies files and directories to exclude from the build context, which prevents unnecessary files from being copied into the image. Ignoring large files, such as build artifacts or local development tools, can significantly reduce the image size. Combining multiple commands into a single `RUN` instruction also helps: each `RUN` instruction creates a new layer, so consolidating related commands minimizes the number of layers and, when cleanup happens in the same instruction, reduces image size. Ordering commands from least to most frequently changing maximizes layer caching. Place commands that are unlikely to change at the top of the Dockerfile so that Docker can reuse cached layers whenever possible. By implementing these practices, developers can create lean images that are optimized for performance, security, and maintainability.
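As a small sketch of the least-privilege and command-combining advice, the Dockerfile below creates an unprivileged user on Alpine. The user name `app` and the script `server.sh` are hypothetical.

```dockerfile
FROM alpine:3.19

# One RUN, one layer: create a system group and a system user in that group
RUN addgroup -S app && adduser -S -G app app

WORKDIR /app

# Copy files owned by the unprivileged user (server.sh is a hypothetical script)
COPY --chown=app:app server.sh .

# Everything from here on, including the container process, runs as "app"
USER app

CMD ["sh", "./server.sh"]
```

A `.dockerignore` next to this Dockerfile, listing entries such as `.git` or local build output, would keep those paths out of the build context entirely.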

Advanced Docker Layer Techniques: Leveraging Multi-Stage Builds

Multi-stage builds represent a sophisticated approach to optimizing Docker images, significantly reducing their size and enhancing security. This technique uses multiple `FROM` statements within a single Dockerfile. Each `FROM` instruction initiates a new build stage, with its own base image for a distinct phase of the build process. The key benefit lies in the ability to copy artifacts from one stage to another, discarding dependencies and tools required only during the build. The final image then contains only the components needed to run the application. Each stage is also cached independently, which further speeds up the development workflow.

The primary advantage of multi-stage builds is the reduction in overall image size. For example, a build environment might require compilers, debuggers, and other development tools that are not needed in the final runtime environment. With multi-stage builds, the application can be compiled in a stage containing all the necessary build tools, and then only the compiled binary is copied to a separate stage based on a minimal base image. This smaller image is then used for deployment, minimizing the attack surface and reducing resource consumption. Using a minimal base image, like Alpine Linux, in the final stage further contributes to size reduction, leading to more efficient distribution and deployment.

Here’s an example demonstrating a multi-stage Dockerfile for a Go application:

```dockerfile
# Build stage
FROM golang:1.18-alpine AS builder
WORKDIR /app
COPY go.mod go.sum ./
RUN go mod download
COPY . .
RUN go build -o main .

# Production stage
FROM alpine:latest
WORKDIR /app
COPY --from=builder /app/main .
EXPOSE 8080
CMD ["./main"]
```

In this example, the first stage (`builder`) uses a Go image to compile the application. The second stage uses a minimal Alpine Linux image and copies only the compiled `main` binary from the `builder` stage. The final image is significantly smaller than it would be if the entire build environment were included. This shows how a multi-stage build can produce a lean, efficient final image that is ready for deployment.

Troubleshooting Common Docker Layer Issues: Dealing with Large Images

Addressing the challenge of large Docker images often begins with understanding the composition of their layers. Unexpectedly large images can lead to slower deployment times, increased storage costs, and potential performance bottlenecks. One of the first steps in troubleshooting is using the `docker history` command to identify which layer contributes the most to the overall size. This command reveals the individual layers, their sizes, and the commands used to create them. Analyzing the output allows one to pinpoint inefficient instructions or unnecessary files that are bloating the image. A common culprit is the accumulation of temporary files or build artifacts that were not cleaned up after installation. Examine the Dockerfile for commands that add large files or install numerous dependencies, and assess whether these are all truly necessary for the application to function.

Once the problematic layer has been identified, several strategies can reduce its size. Pruning with `docker image prune` reclaims disk space from dangling images on the host, but it does not shrink an existing image, so the primary focus should be on optimizing the Dockerfile itself. Combining multiple `RUN` instructions into one with shell command chaining (`&&`) minimizes the number of layers created. Delete temporary files within the same `RUN` instruction that creates them; a file removed in a later instruction still occupies space in the earlier layer. For example, after installing packages with `apt-get` or `yum`, clean up the package manager’s cache in the same instruction using commands like `apt-get clean` or `yum clean all`. Another common issue is including development tools or debugging symbols in production images. Multi-stage builds are particularly useful in this scenario, allowing you to compile or build the application in one stage and then copy only the necessary runtime components to a smaller, more streamlined base image in the final stage.
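The point about deleting files in the same instruction can be sketched as follows. The download URL is a placeholder rather than a real artifact, so the build would need a real URL to succeed.

```dockerfile
FROM debian:12-slim

# Anti-pattern (commented out): the archive is committed in the first layer,
# and deleting it in a later layer hides the file without reclaiming the space.
# RUN curl -fsSLO https://example.com/tool.tar.gz
# RUN tar -xzf tool.tar.gz -C /opt && rm tool.tar.gz

# Download, extract, and delete in one RUN so the archive is never committed.
# The URL is a placeholder, not a real download.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl ca-certificates \
    && curl -fsSL -o /tmp/tool.tar.gz https://example.com/tool.tar.gz \
    && tar -xzf /tmp/tool.tar.gz -C /opt \
    && rm -rf /tmp/tool.tar.gz /var/lib/apt/lists/*
```

Comparing `docker history` output for the two variants is a quick way to confirm that the combined form produces a smaller layer.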

Caching problems also surface when troubleshooting layer issues. A change in a seemingly unrelated part of the Dockerfile can invalidate the cache for all subsequent layers, forcing a full rebuild. Carefully consider the order of instructions in the Dockerfile, placing those that change most frequently towards the bottom, to maximize cache reuse. A `.dockerignore` file is crucial for preventing unnecessary files from being added to the image in the first place. Ensure that it includes patterns that exclude temporary files, build artifacts, and sensitive data. Always specify version numbers for dependencies to ensure reproducibility and prevent unexpected changes from bloating the image. Regularly reviewing and optimizing the Dockerfile is key to maintaining lean and efficient Docker images.

Real-World Examples: Docker Layer Optimization in Action

Real-world applications demonstrate the value of layer optimization. Consider a Python-based web application. A naive Dockerfile might install all dependencies in a single `RUN` instruction using `pip`, creating one large layer. If any dependency changes, the entire layer is invalidated and must be rebuilt. Separating dependency installation into multiple layers (e.g., one for base dependencies, another for project-specific ones) and ordering them by frequency of change means subsequent builds rebuild only the affected layers. On dependency-heavy projects this can cut rebuild times from minutes to seconds.
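One way to split the dependency layers is with two requirements files. The names `requirements-base.txt` and `requirements.txt`, and the `app.py` entry point, are hypothetical conventions for this sketch.

```dockerfile
FROM python:3.12-slim
WORKDIR /app

# Rarely changing, heavyweight packages (hypothetical file)
COPY requirements-base.txt .
RUN pip install --no-cache-dir -r requirements-base.txt

# Frequently changing project dependencies (hypothetical file)
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Application source last, since it changes most often
COPY . .
CMD ["python", "app.py"]
```

With this layout, bumping a project-specific package reuses the cached base layer and reinstalls only the second set.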

Another example involves a Node.js application. Initially, the Dockerfile copies the entire application source code before installing dependencies with `npm install`, so every code change triggers a full reinstall. A better approach uses the `.dockerignore` file to exclude unnecessary files like tests and development tools from the image. Furthermore, copying only the `package.json` and `package-lock.json` files, installing dependencies, and then copying the remaining source code allows layer caching to work more effectively: changes to the application code no longer trigger a full dependency re-installation. A multi-stage build can further slim the final image by using a build stage with all the tools needed to compile the application, and then copying the built artifact to a smaller, runtime-only base image such as an Alpine variant of the official `node` image (for example `node:20-alpine`). This can significantly reduce the final image size, in some cases by as much as 70-80%.
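A sketch of the Node.js approach follows. It assumes that `package.json` defines a `build` script writing to `dist/` and that `dist/index.js` is the entry point; both are illustrative assumptions.

```dockerfile
# Build stage: full dependency set, including dev tools
FROM node:20-alpine AS build
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci
COPY . .
# Assumes package.json defines a "build" script that writes to dist/
RUN npm run build

# Runtime stage: production dependencies and built output only
FROM node:20-alpine
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci --omit=dev
COPY --from=build /app/dist ./dist
# The official node images ship with an unprivileged "node" user
USER node
CMD ["node", "dist/index.js"]
```

Here `npm ci` is used instead of `npm install` because it installs exactly what the lockfile specifies, which suits reproducible image builds.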

Java applications also benefit greatly from layer optimization. Building a Java application often involves downloading Maven or Gradle dependencies, which can create very large layers. Using a multi-stage build, one can download dependencies and build the application in one stage, and then copy the resulting JAR file into a minimal JRE base image in a subsequent stage. Because the Maven repository cache stays behind in the discarded build stage, none of those artifacts reach the final image. This strategy not only reduces the image size but also improves security by minimizing the attack surface. For instance, a Spring Boot application that initially results in a 500MB image might be reduced to under 200MB with these optimizations, leading to faster deployment times and reduced storage costs. These techniques translate directly into cost savings and improved performance in real-world deployments.
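For a Maven project, the multi-stage approach might be sketched like this. The image tags are assumptions, and the layout assumes a standard `pom.xml` plus `src/` tree that produces exactly one JAR under `target/`.

```dockerfile
# Build stage: Maven and a full JDK
FROM maven:3.9-eclipse-temurin-17 AS build
WORKDIR /build
# Resolve dependencies first so this layer is reused until pom.xml changes
COPY pom.xml .
RUN mvn -B dependency:go-offline
COPY src ./src
RUN mvn -B package -DskipTests

# Runtime stage: JRE only; the Maven cache stays behind in the build stage
FROM eclipse-temurin:17-jre
WORKDIR /app
# Assumes the build produces exactly one JAR under target/
COPY --from=build /build/target/*.jar app.jar
CMD ["java", "-jar", "app.jar"]
```

Copying `pom.xml` before the sources applies the same cache-ordering idea as the other examples: dependency resolution is repeated only when the dependency list changes.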