Unveiling the Power of Docker for Modern Applications

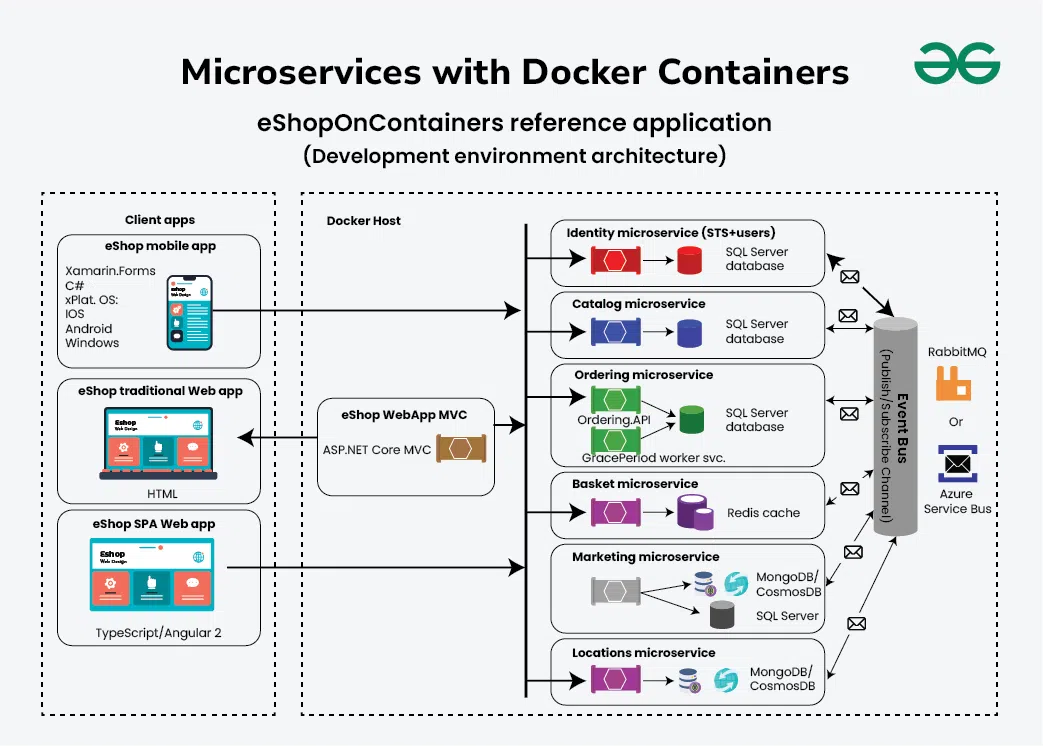

Microservices architecture has emerged as a popular approach to building complex applications. It offers benefits like increased scalability, independent deployments, and improved fault isolation. Each microservice functions as an independent unit that communicates with other services to form a complete application. This modularity lets teams develop, deploy, and scale individual components without affecting the entire system, giving businesses the agility to adapt quickly to changing market demands. Docker is a key enabler of this architectural style.

Docker is a leading containerization technology that provides a standardized way to package and run applications, and it simplifies creating, deploying, and managing microservices. A Docker image encapsulates the application code, runtime, system tools, libraries, and settings required to run a service. Images are portable and consistent across environments, from development to production. Containers share the host’s kernel instead of each running a full operating system, so they are lightweight and efficient, and many microservices can run on the same infrastructure.

Docker’s portability, consistency, and efficiency are central to deploying and managing microservices. Portability lets microservices run across diverse environments with far fewer compatibility issues. Consistency means the same image behaves much the same way in development, testing, and production, regardless of the underlying infrastructure. Efficiency improves resource utilization, allowing organizations to run more microservices on the same hardware. By using Docker, organizations can streamline their microservices deployments, reduce operational overhead, and accelerate their software development lifecycle. Docker’s ecosystem, with tools like Docker Hub and Docker Compose, further improves the development and deployment experience, giving teams a robust foundation for building modern, scalable applications.

How to Streamline Microservice Deployment with Docker

This section is a practical, step-by-step guide to deploying microservices with Docker, starting with Dockerizing a basic microservice such as a REST API. Consider a simple Python Flask application that returns a JSON response. The first step is to create a Dockerfile, which contains the instructions for building the Docker image: it specifies the base image, installs dependencies, copies the application code, and defines the command that runs the microservice.

A sample Dockerfile might look like this:

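```dockerfile
# Start from a slim Python 3.9 base image
FROM python:3.9-slim-buster

# All following paths are relative to /app inside the image
WORKDIR /app

# Copy only the dependency list first, so this layer and the install below
# are cached and rebuilt only when requirements.txt changes
COPY requirements.txt requirements.txt
RUN pip3 install --no-cache-dir -r requirements.txt

# Copy the rest of the application code
COPY . .

# Document the port the service listens on (publishing still requires -p)
EXPOSE 8000

# Start the microservice
CMD ["python3", "app.py"]
```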

This Dockerfile starts from a Python 3.9 base image, sets the working directory to `/app`, copies `requirements.txt` and installs the Python dependencies, then copies the application code and specifies the command that starts the service. Copying `requirements.txt` before the rest of the code lets Docker reuse the cached dependency layer when only the application code changes. To build the image, run `docker build -t my-microservice .` from the directory containing the Dockerfile. Then start a container with `docker run -p 8000:8000 my-microservice`, which maps port 8000 on the host to port 8000 inside the container. For this mapping to work, the application must listen on `0.0.0.0` port 8000 inside the container. Flask’s built-in development server binds to `127.0.0.1` on port 5000 by default, so `app.py` has to set the host and port explicitly.

Optimizing Docker images is crucial. Multi-stage builds reduce image size by separating the build environment from the runtime environment, so compilers and build tools never reach the final image. Smaller base images, such as Alpine Linux, can also significantly decrease image size. It also helps to minimize the number of layers: each `RUN`, `COPY`, and `ADD` instruction creates a layer, so related shell commands are best chained into a single `RUN` instruction with `&&`.
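The Dockerfile runs `app.py`, and the `-p 8000:8000` mapping only works if that program listens on all interfaces. Below is a minimal sketch of such a service. The article describes a Flask app, but to keep the example dependency-free this sketch uses Python’s standard-library `http.server` instead. The routes, payloads, and the `PORT` environment variable are illustrative assumptions and are not part of the original example.

```python
"""app.py: a minimal JSON microservice (standard-library sketch).

Assumption: the article's example uses Flask; http.server stands in here so
the sketch has no third-party dependencies. The routes, payloads, and the
PORT environment variable are hypothetical.
"""
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical routes and their JSON responses
ROUTES = {
    "/": {"service": "my-microservice", "message": "hello"},
    "/health": {"status": "ok"},
}


class JSONHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        payload = ROUTES.get(self.path)
        status = 200 if payload is not None else 404
        body = json.dumps(payload if payload is not None else {"error": "not found"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host="0.0.0.0", port=None):
    # 0.0.0.0 accepts connections on every interface, which is what lets
    # `docker run -p 8000:8000` reach the service; 127.0.0.1 would only
    # accept connections that originate inside the container itself.
    if port is None:
        port = int(os.environ.get("PORT", "8000"))
    return ThreadingHTTPServer((host, port), JSONHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Once the container is running, a request to `http://localhost:8000/` on the host travels through the port mapping and should return the JSON payload.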

Best practices for optimizing Docker images include using a `.dockerignore` file to exclude unnecessary files and directories, such as `.git`, local virtual environments, and build artifacts, from the build context. This keeps large or sensitive files out of the image. Regularly rebuilding on an updated base image to pick up security patches is also vital. Storing images in Docker Hub or a private registry gives every environment one source for each versioned image, which streamlines deployment. Continuous integration and continuous deployment (CI/CD) pipelines can be integrated with Docker to automate building, testing, and deploying microservices. By following these steps and best practices, deploying microservices with Docker becomes a manageable and efficient process that also prepares applications to perform and scale well.

Building Resilient Microservices with Docker Compose and Orchestration

Docker Compose simplifies the management of multi-container microservice applications, especially during local development and testing. A single `docker-compose.yml` file defines all the services, networks, and volumes the application needs. This declarative approach keeps environments consistent, and because the file can be versioned with the code, every developer gets the same setup. Developers can start the entire microservice stack with a single command (`docker compose up`), which encourages rapid iteration and collaboration.

However, Docker Compose is primarily designed for development and testing environments. Production deployments usually call for an orchestration platform such as Kubernetes or Docker Swarm, which automates the deployment, scaling, and management of containerized applications across a cluster of machines. Kubernetes, with its rich feature set and large community, is a popular choice for complex microservice architectures; it offers automated rollouts and rollbacks, self-healing, and service discovery. Docker Swarm, by contrast, is a simpler and more lightweight orchestrator built into the Docker Engine. The right choice depends on the requirements and complexity of the application: Swarm can be a good starting point for smaller deployments or teams already familiar with Docker, while Kubernetes is often preferred for larger deployments that need advanced features and scalability. In both cases, the container images built with Docker remain the unit that gets deployed.
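Self-healing depends on each container reporting whether it actually works. One common pattern, sketched here under stated assumptions, is a small probe script that exits with status 0 when the service responds and 1 when it does not. That is the contract Docker’s `HEALTHCHECK` instruction expects, for example `HEALTHCHECK CMD ["python3", "healthcheck.py"]`. The `/health` path, port 8000, and file name are hypothetical. Standalone Docker Engine only marks a failing container as unhealthy. Swarm replaces unhealthy tasks. Kubernetes ignores `HEALTHCHECK` and uses its own liveness and readiness probes instead, which could run the same script as an exec probe.

```python
"""healthcheck.py: exit 0 if the service answers, 1 otherwise.

Hypothetical: the /health path and port 8000. http.client is used directly
so no proxy settings interfere with a request to the local container.
"""
import http.client
import sys


def is_healthy(host="127.0.0.1", port=8000, path="/health", timeout=2.0):
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        # Any 2xx status counts as healthy
        return 200 <= conn.getresponse().status < 300
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, or a malformed response
        return False
    finally:
        conn.close()


if __name__ == "__main__":
    # Docker interprets exit code 0 as healthy and 1 as unhealthy
    sys.exit(0 if is_healthy() else 1)
```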

Container orchestration platforms address key challenges in managing microservices, such as service discovery, load balancing, and fault tolerance. They distribute traffic across multiple instances of a microservice to keep it available and responsive. They also monitor the health of each container and restart or replace failed containers, which makes the overall application more resilient. Kubernetes and Docker Swarm both play a crucial role in deploying, scaling, and monitoring Dockerized microservices efficiently. Understanding the nuances of these technologies lets organizations build resilient, scalable microservice applications that adapt to changing business needs, although getting these benefits still requires a deliberate deployment strategy.
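The following simplified model makes traffic distribution and health monitoring concrete: round-robin load balancing that skips unhealthy instances. It is an illustrative sketch. Kubernetes and Swarm do this inside the platform, for example through Kubernetes Services or Swarm’s routing mesh, and their selection strategies are not necessarily round-robin. The instance addresses are hypothetical.

```python
"""Round-robin load balancing over healthy instances (illustrative model)."""
from dataclasses import dataclass
from itertools import count


@dataclass
class Instance:
    address: str  # hypothetical "container:port" address
    healthy: bool = True


class RoundRobinBalancer:
    def __init__(self, instances):
        self.instances = list(instances)
        self._counter = count()

    def mark(self, address, healthy):
        # A health checker would call this after probing each container.
        for inst in self.instances:
            if inst.address == address:
                inst.healthy = healthy

    def next_instance(self):
        healthy = [i for i in self.instances if i.healthy]
        if not healthy:
            raise RuntimeError("no healthy instances available")
        # Cycle through the currently healthy instances in order.
        return healthy[next(self._counter) % len(healthy)]
```

Because only healthy instances are eligible, a failed container stops receiving traffic as soon as it is marked unhealthy, while the orchestrator restarts or replaces it in the background.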

Enhancing Microservice Scalability through Containerization

Docker offers remarkable scalability benefits for microservices. It enables horizontal scaling by making it easy to create and deploy multiple instances of a microservice, so applications can handle increased workloads efficiently. Instead of vertically scaling a single large application, each microservice can be scaled independently according to its own needs, which improves resource utilization and responsiveness. Because every instance starts from the same image, replicating a microservice across multiple servers or virtual machines is straightforward.

Load balancing and auto-scaling are crucial concepts in a container orchestration environment. Load balancers distribute incoming traffic across the available instances of a microservice so that no single instance is overwhelmed. Auto-scaling adjusts the number of instances to match real-time demand. Orchestrators such as Kubernetes run images built with Docker and can scale them dynamically based on predefined metrics. For example, when the average CPU utilization of a microservice’s pods rises above its configured target, the Kubernetes Horizontal Pod Autoscaler adds replicas, and it removes them again when demand falls. This proactive scaling maintains performance even during peak traffic periods. Because each instance is an identical container, Docker makes load balancing and auto-scaling configurations simpler to deploy and manage.
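The CPU example follows the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). No change is made when that ratio falls within a tolerance of 1.0, which is 0.1 by default. The function below is a simplified sketch of that rule. It ignores pod readiness, missing metrics, and stabilization windows, and its parameter names are illustrative.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler calculation.

    desired = ceil(current_replicas * current / target), skipped when the
    ratio is within `tolerance` of 1.0, then clamped to [min, max].
    """
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: avoid flapping between replica counts.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target give ceil(4 × 1.5) = 6 replicas, while 63% against the same target is within tolerance and leaves the count at 4.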

Several organizations have used Docker to scale their microservices applications. Netflix, for example, runs containerized services behind its streaming and personalization features, and containerizing its microservices has brought faster deployments, better resource utilization, and greater scalability. Spotify likewise uses Docker for the backend services of its music streaming platform, which serve millions of users. These real-world examples show how Docker can help scale microservices architectures. The consistency and portability of containers let development teams focus on building and improving their applications instead of fighting infrastructure limitations, which also helps businesses adapt quickly to changing market demands.

Addressing Challenges in Microservice Management using Containerization

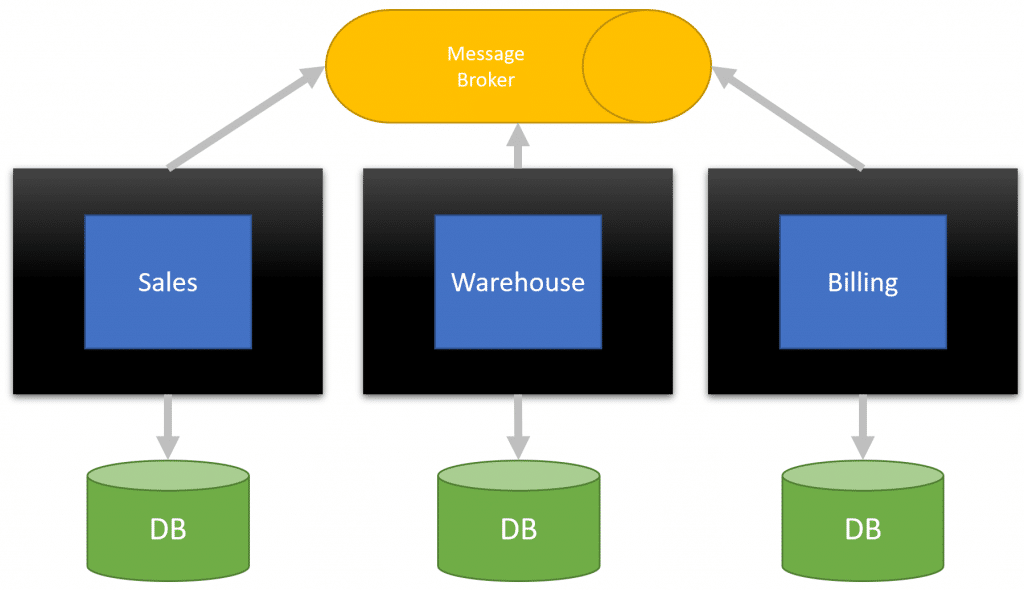

Managing a microservices architecture presents unique challenges, particularly in service discovery, configuration management, monitoring, and logging. Docker and its ecosystem provide practical tools and techniques for each of these.

Service discovery, the process of automatically locating and connecting to services, is crucial in a dynamic microservices environment where instances come and go, and traditional static configuration cannot keep up. Docker addresses this with environment variables and DNS-based service discovery: on a user-defined Docker network, containers can reach each other by container name (or, in Compose and Swarm, by service name) through Docker’s embedded DNS server. Docker Hub and private registries, meanwhile, act as central repositories for the images that make up each microservice. Configuration management, another key concern, means keeping settings consistent across many microservice instances. Docker lets configuration be injected through environment variables and volume mounts, so each instance receives the appropriate settings without any change to the image itself.

Effective monitoring and logging are essential for keeping Dockerized microservices healthy and performant. Centralized logging solutions, such as the ELK stack (Elasticsearch, Logstash, Kibana), can aggregate logs from all containers into a unified view of the system’s behavior. Distributed tracing tools, such as Jaeger or Zipkin, track requests as they traverse multiple microservices, which helps identify performance bottlenecks and dependencies.
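Environment-variable configuration and DNS-based discovery can both be seen from inside a service in a short sketch, under stated assumptions. The `INVENTORY_URL` and `LOG_LEVEL` variables and the `inventory` service name are hypothetical, not part of any Docker API. The only Docker behavior assumed is that a peer’s name resolves through the embedded DNS on a shared user-defined network.

```python
"""Reading injected configuration and resolving a peer service by name.

Hypothetical names: INVENTORY_URL, LOG_LEVEL, and the "inventory" service.
"""
import os
import socket
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Config:
    inventory_url: str
    log_level: str


def load_config(env=None):
    # Values arrive via `docker run -e ...`, a Compose `environment:` block,
    # or an orchestrator, so one image serves every environment unchanged.
    env = os.environ if env is None else env
    return Config(
        inventory_url=env.get("INVENTORY_URL", "http://inventory:8000"),
        log_level=env.get("LOG_LEVEL", "INFO"),
    )


def resolve_service(url):
    """Return the sorted IP addresses that a service URL's host resolves to.

    Inside a user-defined Docker network, a name like "inventory" is answered
    by Docker's embedded DNS; outside it, this raises socket.gaierror.
    """
    parsed = urlparse(url)
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    infos = socket.getaddrinfo(parsed.hostname, port, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})
```

Keeping the service address in configuration rather than hard-coding an IP means the same image works whether the peer is a single container, a scaled Compose service, or a cluster service.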

Tools like Prometheus and Grafana can be integrated to monitor resource utilization and application-specific metrics of Dockerized microservices, helping teams identify and resolve issues before they affect the overall system. Containerization also simplifies rolling back a failed release: each version is packaged as a versioned image, so rolling back means redeploying the previous version’s image. By using Docker and its ecosystem effectively, organizations can significantly reduce the operational overhead of managing complex microservices deployments. Sound containerization strategies contribute directly to the stability, scalability, and maintainability of microservices-based applications, and the resulting efficiency lets development and operations teams focus on innovation and delivering business value.
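Application-specific metrics reach Prometheus when each service exposes them, typically on a `/metrics` endpoint, in Prometheus’s plain-text exposition format. The dependency-free sketch below renders a request counter in that format to show what Prometheus scrapes. In practice the official `prometheus_client` library generates this output. The metric name and labels here are hypothetical.

```python
"""Rendering a request counter in the Prometheus text exposition format."""
from collections import Counter


def _escape(value):
    # Label values must escape backslash, double quote, and newline.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


class RequestCounter:
    def __init__(self, name="http_requests_total", help_text="Total HTTP requests."):
        self.name = name
        self.help_text = help_text
        self._counts = Counter()

    def inc(self, method, path):
        # Count one request for this (method, path) label combination.
        self._counts[(method, path)] += 1

    def render(self):
        # HELP and TYPE lines, then one sample line per label combination.
        lines = [f"# HELP {self.name} {self.help_text}", f"# TYPE {self.name} counter"]
        for (method, path), value in sorted(self._counts.items()):
            m, p = _escape(method), _escape(path)
            lines.append(f'{self.name}{{method="{m}",path="{p}"}} {value}')
        return "\n".join(lines) + "\n"
```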

Docker vs. Other Containerization Technologies for Microservices

While Docker has become synonymous with containerization, especially for microservices, it is not the only option. Other technologies, such as rkt (now archived) and containerd, take different approaches. Understanding the differences between them supports informed decisions based on specific project requirements. Docker’s widespread adoption and extensive ecosystem make it the default choice for many teams, but exploring the alternatives can still be instructive.

One key differentiator is the architecture and focus of each technology. Docker is a comprehensive platform covering image building, distribution, and runtime. Containerd, by contrast, is a container runtime that manages the container lifecycle, from pulling and storing images to running containers, without Docker’s higher-level build and developer tooling; Docker itself uses containerd under the hood, and Kubernetes can use it directly. The rkt runtime was designed with security in mind and took a pod-native approach. The ease of use and rich tooling around Docker, including Docker Hub, Docker Compose, and the vast library of pre-built images, have contributed significantly to its popularity by simplifying development and deployment workflows.

However, there are scenarios where an alternative might be considered. A project with extremely stringent security requirements might once have evaluated rkt for its security-focused design, though rkt is no longer maintained. Similarly, a project that needs only a minimal container runtime, for example underneath Kubernetes, could use containerd directly. Ultimately, the best choice depends on a careful evaluation of security needs, performance requirements, existing infrastructure, and team expertise. Docker’s ecosystem provides a robust and versatile platform for building and deploying modern applications, and understanding the alternatives helps teams make well-informed decisions and get the most out of their containerization strategy.

Real-World Microservices Examples Deployed with Docker

Docker has delivered concrete results for several organizations that run microservices. Spotify, for instance, uses Docker extensively in its backend infrastructure, where it enables faster and more reliable deployments across numerous microservices. Packaging each microservice with its dependencies in a Docker container keeps it consistent from development to production, which significantly reduces the risk of deployment-related issues.

Netflix, a pioneer in microservices adoption, is another example. Its streaming platform is composed of hundreds of microservices and relies on containerization for efficient resource utilization and rapid iteration. With containers, Netflix’s engineering teams can develop, test, and deploy microservices independently without affecting other parts of the system. That agility matters in the fast-paced streaming industry and helps Netflix deliver seamless streaming to millions of users worldwide. The portability of containers also makes it easier to move workloads between environments, which can improve resilience and cost-effectiveness.

Groupon uses Docker to streamline its e-commerce operations. By containerizing its microservices, Groupon has increased its deployment frequency and reduced its infrastructure costs. Docker gives each microservice an isolated environment, which improves security and prevents conflicts between applications. Docker’s lightweight nature also lets Groupon run more microservices on the same hardware, and this higher density translates into significant cost savings. Together, these examples show how Docker can make microservices architectures more scalable, agile, and cost-efficient, which makes it a valuable asset for any organization seeking to modernize how it develops and deploys applications.

Future Trends: The Evolution of Containerization in Microservices

The containerization landscape keeps evolving, changing how microservices are developed and deployed. Several emerging trends are poised to reshape how Docker is used in microservices architectures: service meshes, serverless computing with containers, and the broader adoption of cloud-native technologies. These advancements promise to improve the agility, scalability, and resilience of microservice-based applications.

Service meshes such as Istio and Linkerd are gaining traction for managing complex microservices deployments. They provide a dedicated infrastructure layer for inter-service communication, with features such as traffic management, security, and observability, so developers can focus on business logic while the mesh handles service-to-service interactions. For Dockerized microservices, a mesh adds finer control over, and visibility into, the network of containers.

Serverless computing with containers, exemplified by technologies like Knative, is another significant shift. Developers deploy microservices as containers, and the platform scales them automatically with demand, including down to zero when idle, without developers managing servers directly. This further reduces the operational burden and improves resource utilization.

Finally, the growing adoption of cloud-native technologies such as Kubernetes is driving the standardization and automation of microservices deployments. These platforms manage the lifecycle of containerized applications from deployment through scaling and monitoring, and Docker-based microservices rely on them to gain agility and efficiency.

Together, these trends point toward Docker-based microservices that are even more streamlined, scalable, and resilient. By adopting service meshes, serverless computing, and cloud-native technologies, organizations can unlock new levels of agility and efficiency in their microservices architectures. Containerization will let developers concentrate on building innovative applications while the underlying platform handles the complexities of deployment and management. As the ecosystem matures, new tools and techniques are likely to emerge, making Docker-based microservices even more attractive for organizations of all sizes.