Exploring Popular Docker Image Repositories

Docker image repositories are central to the Docker ecosystem. They serve as centralized hubs for sharing and accessing pre-built Docker images. Because a large catalog of images is already available, teams can deploy quickly and spend less time building common components from scratch. Popular repositories include Docker Hub, a widely recognized platform for hosting Docker images, and Quay.io, among others. These repositories provide community support, fostering collaboration among developers and the sharing of best practices. Security features built into these platforms also improve the reliability of Docker images used in production environments. Choosing an appropriate repository supports streamlined development, consistent functionality, and efficient future updates.

A well-established repository improves efficiency and consistency across the stages of development. Developers and organizations benefit from readily accessible, tested Docker images, along with community support and security features. Access to consistent images and updates helps keep applications reliable and compatible throughout their lifecycle.

The choice of repository also affects the scalability and maintainability of Docker-based solutions. Reputable repositories offer consistent, well-maintained Docker images that projects can depend on, which minimizes conflicts and eases integration into existing infrastructure. Organizations can build on these repositories to create a robust and scalable infrastructure while reducing development time and effort. The repository also determines how readily future updates and security patches become available, which affects application maintenance and growth.

Understanding Docker Image Formats and Layers

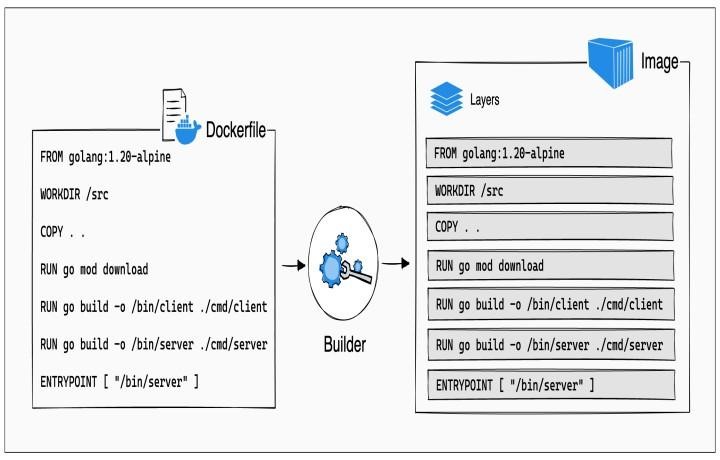

Docker images are built from a series of layers. Understanding these layers is crucial for optimizing image storage and making updates efficient. Like a set of stacked blueprints, the layers represent the successive stages in the construction of an image. Each layer records the filesystem changes made at one step of the build, on top of the layers beneath it. Layers are compressed when stored and transferred through a registry, which saves space and bandwidth. A key benefit of this architecture is that unchanged layers can be reused: when an image is rebuilt or updated, only the layers that changed need to be rebuilt and transferred, rather than the entire image.
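As a rough illustration of how layers arise, the sketch below annotates a small Dockerfile with the effect of each instruction. The base image tag, the `curl` package, and the `config.yml` file are assumptions chosen only for illustration.

```dockerfile
# Hypothetical example: the base image tag, package, and file name are assumptions.

# The base image contributes its own existing stack of layers.
FROM alpine:3.19

# RUN executes a command and records the resulting filesystem changes as a new layer.
RUN apk add --no-cache curl

# COPY adds a new layer containing the copied file.
COPY config.yml /etc/myapp/config.yml

# ENV and CMD only change image metadata; they add no filesystem content.
ENV APP_ENV=production
CMD ["curl", "--version"]
```

If only `config.yml` changes, a rebuild can typically reuse the cached layers from `FROM` and `RUN` and re-create only the steps from `COPY` onward.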

Several image formats exist, and the format influences compatibility. The most prominent standard comes from the Open Container Initiative (OCI), which publishes specifications for image formats and container runtimes to promote interoperability. OCI-compliant images can be used across different platforms and tools, which leads to streamlined workflows and more consistent, reliable behavior within and beyond the Docker ecosystem.

The layered structure and the image format also affect deployment. Docker images are not monolithic; they are composed of distinct layers, so a host that already holds some of an image's layers needs to download only the missing ones. This shortens deployment times and saves storage, while standardized formats improve compatibility across tools. Understanding both gives users a solid foundation for managing and updating their Docker images efficiently.

Optimizing Docker Image Size and Performance

Optimizing Docker images for size and performance is important for efficient deployments and resource utilization. Smaller images are faster to transfer and deploy and place less strain on container orchestration platforms. Reducing image size and build time also directly benefits continuous integration/continuous delivery (CI/CD) pipelines, which makes these techniques a core part of modern DevOps practice.

A common technique for minimizing image size is the multi-stage build, in which separate stages are used for building and for packaging the application. The final image contains only the components needed at runtime, without build tools and intermediate files. Using a lightweight base image is equally important, since a smaller base image directly translates to a smaller overall image. Finally, build caching speeds up subsequent builds by reusing previously generated layers whose inputs have not changed.
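The multi-stage approach described above might look like the following sketch for a Python application. The Python version, the virtual-environment path `/opt/venv`, and the file names `requirements.txt` and `app.py` are assumptions for illustration, not a prescribed layout.

```dockerfile
# Stage 1: build stage based on the full image, which includes compilers and headers.
FROM python:3.12 AS builder

# Install dependencies into a virtual environment so they can be copied out as one directory.
RUN python -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Stage 2: runtime stage based on the slim image.
FROM python:3.12-slim

# Copy only the installed dependencies from the build stage; build tools stay behind.
COPY --from=builder /opt/venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

WORKDIR /app
COPY . .
CMD ["python", "app.py"]
```

Copying `requirements.txt` before the rest of the source also lets the dependency layer be reused from cache when only application code changes.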

Choosing appropriate tools and understanding how image layers are managed are also part of image optimization. The build process is defined in a Dockerfile, which lists every step needed to construct the image. A well-defined Dockerfile keeps builds consistent and repeatable, and a carefully optimized one leads to faster builds and deployments within CI/CD environments.

Building Docker Images for Different Use Cases

Docker images serve diverse applications, and the best way to build an image depends on its use. Web applications benefit from lightweight images that deploy rapidly, so the choice of base image matters; consider lightweight distributions such as Alpine Linux. Microservices architectures benefit from modular, independent images, which allow individual components to be scaled and maintained separately. Images for database systems such as PostgreSQL or MySQL require database-specific configuration, robust security settings, and a consistent database setup, and are often best built on specialized database images. In each case, careful selection of the base image and configuration is essential for good resource utilization and stability.

Specialized Docker images address unique requirements, so choose the base image and configuration for each use case. For example, a web application might use a lightweight base image like Alpine Linux to reduce resource usage and speed up deployment. Conversely, database applications demand specific configurations and potentially specialized database images. Understanding application-specific constraints guides the choice of base image, and a base image suited to the target application results in streamlined deployments and better performance.
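For the database case, one possible sketch builds on the official PostgreSQL image, which reads the `POSTGRES_*` environment variables and runs scripts placed in `/docker-entrypoint-initdb.d/` when the database is first initialized. The image tag, database name, user name, and the `init.sql` file are assumptions for illustration.

```dockerfile
# Hypothetical example: tag, names, and init.sql are illustrative assumptions.
FROM postgres:16-alpine

# Default database and user created on first start.
ENV POSTGRES_DB=appdb
ENV POSTGRES_USER=appuser

# Scripts in this directory are executed when an empty data directory is initialized.
COPY init.sql /docker-entrypoint-initdb.d/

# POSTGRES_PASSWORD is deliberately not set here; supply it at runtime
# so that the secret is not stored in an image layer.
```

Keeping the password out of the Dockerfile is a design choice: values set with `ENV` are stored in the image metadata and are visible to anyone who can pull the image.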

The base image directly influences the performance and security of the containerized application. Applications with intricate requirements may demand specialized images. Images built to match the needs of each application are more reliable and perform better, and deploying them across diverse environments results in scalable and maintainable systems. Base images and configurations therefore deserve careful consideration whenever a Docker image is built.

Securing Your Docker Images

Securing Docker images is essential. Malicious code or vulnerable components can hide within an image, posing significant risks to applications and infrastructure. Regular security scanning helps identify and mitigate vulnerabilities before deployment.

Various tools and techniques exist to scan Docker images for vulnerabilities. Running automated scanning tools during the build process is a best practice. These tools analyze the image layers, identify known vulnerabilities, and highlight potential weaknesses in the underlying software components. Keep the scanning tools themselves up to date so that they remain accurate and detect emerging threats. Incorporating security scanning into the CI/CD pipeline ensures that vulnerabilities are addressed proactively.

Beyond automated scanning, security should be considered proactively in the design and build of Docker images. This includes understanding the security implications of base images and pulling them only from trusted repositories. A culture of security awareness throughout the development team encourages secure practices and leads to more secure images. Following industry best practices for container security significantly improves the security posture of the images and the systems they power.
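One widely recommended container security practice, offered here as an example rather than something the text above prescribes, is to run the application as an unprivileged user instead of root. The sketch below assumes a Debian-based Python image and the hypothetical user name `appuser`; the file names are likewise assumptions.

```dockerfile
# Pin a specific base image tag from a trusted source (the tag is an assumption).
FROM python:3.12-slim

# Create an unprivileged user with no login shell.
RUN useradd --create-home --shell /usr/sbin/nologin appuser

WORKDIR /app

# Copy the application and give ownership to the unprivileged user.
COPY --chown=appuser:appuser . .

# Install dependencies while still running as root.
RUN pip install --no-cache-dir -r requirements.txt

# Drop privileges for everything that runs in the container.
USER appuser
CMD ["python", "app.py"]
```

If the application is compromised, a process running as `appuser` has fewer privileges inside the container than one running as root.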

Building a Custom Docker Image

Constructing a custom Docker image involves several key steps. Begin by writing a Dockerfile, a text document that describes how the image is built: its base image, its layers, and the commands to run. Choose a lightweight, optimized base image that meets the application's specific requirements.

Define the image's layers using instructions within the Dockerfile. These instructions install dependencies, run scripts, or copy files. Instructions such as `RUN` and `COPY` add filesystem layers, while `CMD` sets the default command that runs when a container starts. Follow best practices to keep the image small and builds fast; in particular, avoid unnecessary layers, which can inflate the image size. A multi-stage build helps considerably here: intermediate stages do the build work, and only the required artifacts are copied into the final image.

Build the image from the Dockerfile, then verify it. Check the image's contents and functionality after creation to confirm that it meets the specification, and inspect its layers and structure to spot unnecessary content. This detailed approach results in a high-performing, lightweight image. The following example Dockerfile illustrates these steps:

```dockerfile
# Use a slim base image
FROM python:3.9-slim-buster

# Set the working directory
WORKDIR /app

# Copy the application code
COPY . /app

# Install dependencies without keeping pip's download cache in the layer
RUN pip install --no-cache-dir -r requirements.txt

# Document the port the application listens on
EXPOSE 8000

# Define the command to run the application
CMD ["python", "app.py"]
```

Maintaining and Updating Docker Images

Proper maintenance and updates are crucial for the security and stability of Docker images. A clear strategy for managing updates, particularly security patches, is vital and directly affects continuous integration and continuous delivery (CI/CD) pipelines. Versioning images is a best practice for tracking changes and ensuring consistency. The CI/CD pipeline should include a defined image upgrade procedure, which can cover automated checks for updates and automated image deployments. A systematic approach to updating images helps prevent vulnerabilities and maintains compatibility across environments.

A robust update workflow within the CI/CD pipeline streamlines the process. It often involves automated steps that check for newer versions of images, download them, and test them in controlled environments. Testing is essential to verify compatibility and stability before deployment to production, and automated tests can surface issues early. Using a staging environment to test updates before general deployment is a best practice that minimizes risk and smooths the transition to updated production images.

Effective image versioning plays a critical role in managing updates and ensuring consistency. Semantic versioning (e.g., MAJOR.MINOR.PATCH) helps track changes and lets teams understand the impact of an update. Keeping records of changes made to images, including dates, descriptions, and versions, improves transparency and makes rollback easier if necessary. Comprehensive logging of update actions provides accountability and a historical record of image modifications, which helps in tracing issues and troubleshooting. Regular backups of images help with recovery after unforeseen incidents.
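One way to carry the version inside the image itself, sketched here under assumptions, is to pass it as a build argument and record it in a label. The label key `org.opencontainers.image.version` comes from the OCI annotation conventions; the argument name `APP_VERSION` and its default value are hypothetical.

```dockerfile
FROM python:3.12-slim

# Hypothetical build argument; the default applies when no version is supplied.
ARG APP_VERSION=0.0.0

# Record the semantic version as image metadata.
LABEL org.opencontainers.image.version="${APP_VERSION}"
```

An image could then be built with a command such as `docker build --build-arg APP_VERSION=1.4.2 -t myapp:1.4.2 .`, where `myapp` is a placeholder name, so that the tag and the embedded label agree.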

Best Practices and Tools for Docker Image Management

Effective management of Docker images is crucial for streamlined development and deployment. This section summarizes key practices and tools for handling images throughout their lifecycle. A well-structured approach to image management ensures consistency, efficiency, and security, strengthens CI/CD pipelines, and makes container orchestration more effective.

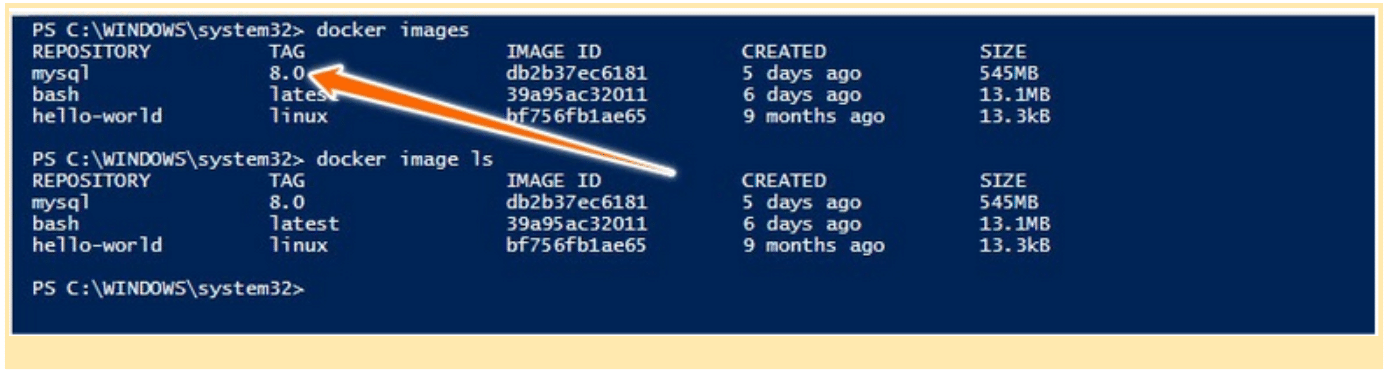

A standardized approach to image building, storage, and distribution streamlines the development process. Keeping Dockerfiles under version control and tagging images consistently helps track changes and supports reproducibility. Automated image scanning and vulnerability analysis improve security. Image optimization reduces size and improves performance, which benefits deployments and resource utilization. CI/CD pipelines should incorporate automated image updates and deployments so that improvements can ship frequently. Dedicated container registries provide a secure and organized place to store and access images, and image caching further speeds up deployment. Integrating CI/CD with image management tools keeps the whole workflow consistent.

Modern DevOps strategies demand a unified approach that also supports compliance with security standards. Image scanning and vulnerability analysis tools, combined with continuous monitoring, safeguard against potential threats. Container registries and image repositories improve accessibility and simplify image distribution. Robust versioning and tagging systems make changes traceable and builds reproducible. Integrating these tools with automated CI/CD processes enables streamlined deployments and effective handling of upgrades. Registries such as Docker Hub and Quay.io, among others, simplify these image management tasks and improve the reliability of the overall process.