What is a Container Engine and Why is it Important?

Containerization has changed how software is deployed by offering portability, consistency, and efficiency. A container packages an application with all its dependencies so that it runs the same way across environments, from development to production. This eliminates the “it works on my machine” problem, a common hurdle in software development. At the core of this technology is the container runtime, the component that actually executes containers.

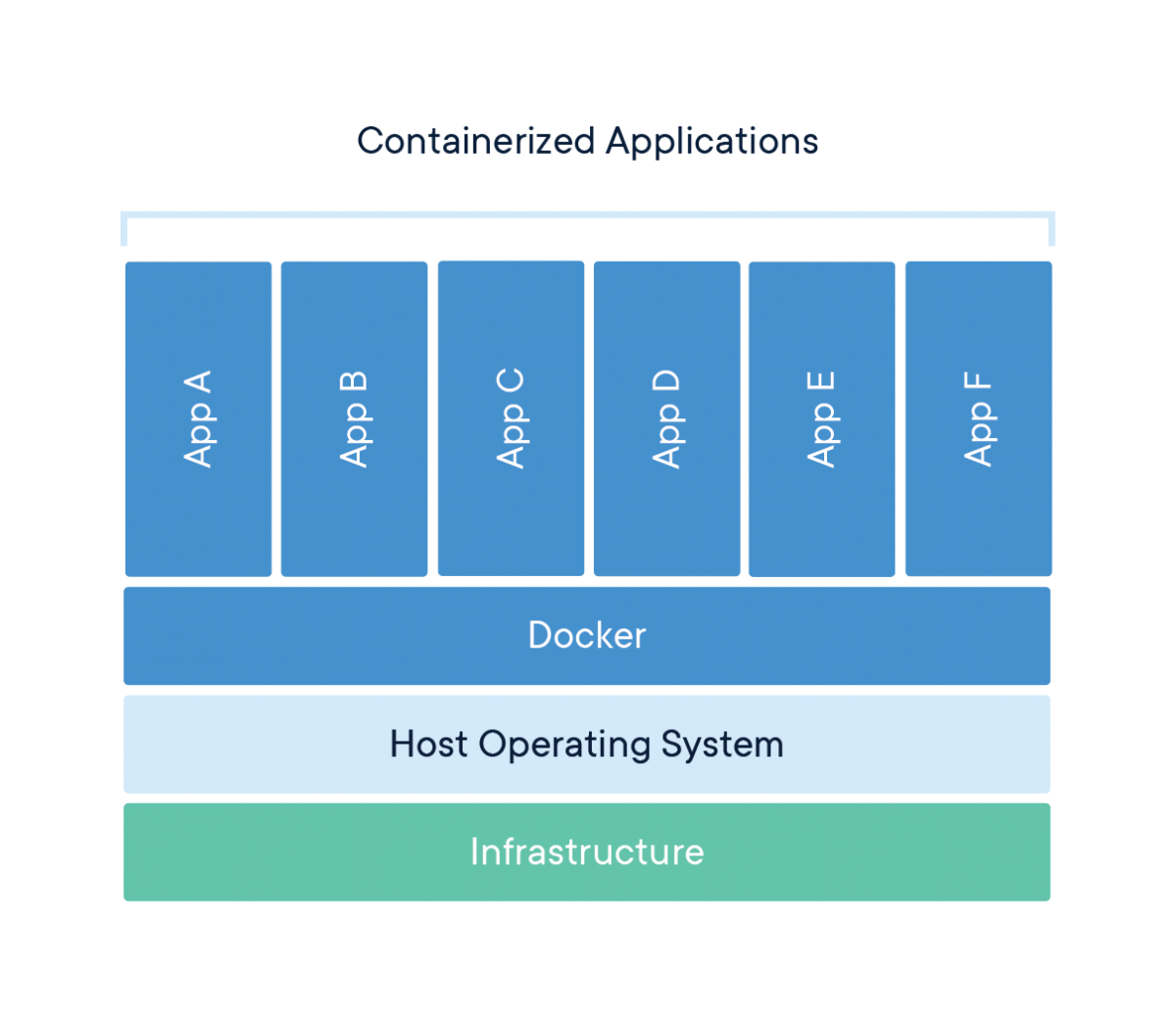

Container engines, like Docker, simplify the containerization process. They abstract away the complexities of the underlying infrastructure and provide a user-friendly interface for building, shipping, and running containers. These engines handle tasks such as image management, networking, and resource allocation, and they delegate the actual execution of containers to a container runtime. This lets developers focus on writing code rather than managing infrastructure, which shortens release cycles and improves collaboration. Containerization also improves resource utilization: multiple containers can share a single host, so hardware is used more efficiently and infrastructure costs can drop.

However, the container engine is not solely responsible for all container operations. A critical component, often hidden from the user, is the container runtime: the software that ultimately creates and runs the containers. The next section looks more closely at the role of the container runtime and its importance in the container ecosystem. Understanding it helps users grasp the underlying mechanisms that power containerization.

Delving into the Role of a Container Runtime

A container runtime is the software responsible for executing containers. It manages the entire lifecycle of a container, from creation to termination. Depending on the runtime, this includes pulling container images, setting up the container’s environment, and managing its resource usage. Think of the container runtime as the engine that powers the container: it does the work needed to run an application in isolation.

A key point is to differentiate a container runtime from a container engine. A container engine, like Docker, provides a higher-level interface for working with containers, with tools for building, managing, and orchestrating them. Under the hood, Docker hands the job of actually running containers to a container runtime. The runtime is the layer that interacts directly with the operating system kernel. It uses kernel features such as namespaces, which isolate what a process can see, and cgroups, which limit the resources it can use. Several container runtimes are available, each with its own strengths and weaknesses.
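To make the namespace idea concrete, here is a minimal Python sketch. On Linux, each entry under `/proc/<pid>/ns/` is a symbolic link whose target looks like `pid:[4026531836]`, and two processes are in the same namespace when the type and inode number match. The function names and the sample values below are illustrative assumptions, not output from a real system.

```python
import re

# Matches a namespace link target such as "pid:[4026531836]".
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a /proc/<pid>/ns/* link target into (namespace type, inode)."""
    match = _NS_LINK.match(target.strip())
    if match is None:
        raise ValueError(f"not a namespace link target: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def shared_namespaces(a: dict[str, str], b: dict[str, str]) -> set[str]:
    """Return the namespace names that two processes have in common.

    Each argument maps a namespace name (e.g. "pid") to its link target.
    """
    shared = set()
    for name in a.keys() & b.keys():
        if parse_ns_link(a[name]) == parse_ns_link(b[name]):
            shared.add(name)
    return shared


# Made-up sample: a host process and a containerized process that
# share only the user namespace.
host = {
    "pid": "pid:[4026531836]",
    "net": "net:[4026531840]",
    "user": "user:[4026531837]",
}
container = {
    "pid": "pid:[4026532201]",
    "net": "net:[4026532204]",
    "user": "user:[4026531837]",
}

print(sorted(shared_namespaces(host, container)))  # ['user']
```

On a real Linux host, the link targets could be collected with `os.readlink("/proc/self/ns/pid")` and similar calls; the sketch takes plain strings so that it runs anywhere.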

In essence, the container runtime is the workhorse of the container ecosystem. It handles the low-level details of creating, running, and managing containers, and without it a container engine would have nothing to run containers with. Choosing the right runtime affects the performance, security, and stability of containerized applications. Understanding its role is fundamental to understanding how containerization works, and it makes troubleshooting and optimizing container deployments easier.

How to Choose the Right Container Runtime for Your Needs

Selecting the appropriate container runtime is an important decision because it affects the performance, security, and compatibility of your containerized applications. This section outlines the factors to weigh so that you can select the runtime that best matches your requirements.

Security comes first. Evaluate the security features each container runtime supports, such as seccomp profiles and AppArmor integration. These features restrict what a container is allowed to do, which limits the impact of a breach. Also consider the runtime’s vulnerability history and how quickly its maintainers respond to security issues.

Performance is another crucial factor. Runtimes differ in container startup time and in the overhead they add per container. A lightweight runtime minimizes that overhead and allows higher container density, so match the runtime to your application’s performance requirements.

Compatibility and integration matter as well. Check that the runtime supports your operating systems and fits your infrastructure. It should integrate cleanly with your orchestration platform (e.g., Kubernetes), and it also needs to work with your monitoring and logging tools.

Consider the specific needs of your applications. Some container runtimes are optimized for high-density deployments, while others are a better fit for security-sensitive workloads, so evaluate your workload characteristics before you choose. Look at community support too: a strong community usually means active development and readily available resources. Evaluate the long-term support and maintenance offered by the runtime vendor or community, because regular updates and security patches are crucial for a secure and stable environment.

In short, selecting a container runtime involves careful evaluation. Prioritize security, performance, and compatibility, and you can make an informed decision that lets your containerized applications run efficiently and securely.

Popular Container Runtimes: A Comparison

Several container runtimes are available, each with a distinct architecture, feature set, and typical use case. This section introduces a few prominent options to give a neutral overview of the landscape, without endorsing one over another.

containerd: This is a popular open-source container runtime and a graduated project of the Cloud Native Computing Foundation (CNCF). containerd focuses on simplicity, robustness, and portability. It provides the core functionality needed to execute containers: image transfer and storage, container execution and supervision, and network attachment. Docker uses containerd as its underlying container runtime, and Kubernetes can use containerd through the Container Runtime Interface (CRI). Its design makes it suitable for a wide range of container workloads and a foundational component in many container ecosystems.

CRI-O: CRI-O is a container runtime designed specifically for Kubernetes. Its name combines CRI (the Kubernetes Container Runtime Interface, which it implements) and OCI (the Open Container Initiative, whose standards it follows). This allows Kubernetes to manage containers directly without needing Docker. CRI-O supports OCI container images and OCI-compatible runtimes, which makes it compatible with standard container tooling and workflows. Its focus on Kubernetes integration makes it a popular choice for Kubernetes-centric environments.

runc: This is a lightweight and portable low-level container runtime. It is a command-line tool for spawning and running containers according to the OCI specification, and it is the reference implementation of the OCI runtime specification. Higher-level runtimes, such as containerd and CRI-O, often use runc under the hood to create the container process. Its simplicity and focus on the OCI standard make it a valuable tool for understanding container internals, and it can also be useful for building custom container solutions.
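An OCI runtime such as runc reads a `config.json` file that describes the container it should create. The Python sketch below builds a small subset of that structure to show its shape. The field names follow the OCI runtime specification as far as I am aware, but the helper name, hostname, and version string are illustrative assumptions, and the result is deliberately incomplete (it omits mounts, for example). A real bundle would normally start from the file generated by `runc spec`.

```python
import json


def minimal_oci_config(args: list[str], rootfs: str = "rootfs") -> dict:
    """Build a small, illustrative subset of an OCI runtime config."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,  # command to run inside the container
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Path to the container's root filesystem, relative to the bundle.
        "root": {"path": rootfs, "readonly": True},
        "hostname": "demo",  # hypothetical value
        "linux": {
            # Each entry asks the runtime to create a new namespace of that type.
            "namespaces": [
                {"type": "pid"},
                {"type": "network"},
                {"type": "ipc"},
                {"type": "uts"},
                {"type": "mount"},
            ]
        },
    }


config = minimal_oci_config(["sh", "-c", "echo hello"])
print(json.dumps(config, indent=2))
```

The `linux.namespaces` list is where the isolation described earlier is requested: the low-level runtime translates each entry into the corresponding kernel namespace.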

Security Considerations for Container Runtimes

Security is paramount in container deployments. Vulnerabilities in the container runtime itself, as well as misconfigurations, can be exploited. A compromised container runtime can become a gateway for attackers and can affect the entire host system and the other containers running on it. Securing the runtime environment therefore demands a proactive approach built on robust security measures.

Several security risks are associated with container runtimes. Vulnerabilities in the runtime code may allow attackers to gain unauthorized access. Misconfigured runtimes can also create security loopholes; for example, missing resource limits let a single container exhaust host resources and cause a denial of service. To reduce these risks, keep your container runtime up to date and apply security patches promptly. Regularly audit container configurations to identify potential weaknesses. These audits should cover resource limits, network policies, and user permissions.
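A configuration audit of this kind can be partly automated. The following Python sketch checks a container description for a few of the weaknesses mentioned above. The dictionary keys (`memory_limit_bytes`, `cpu_limit`, `privileged`, `user`) are a hypothetical schema invented for this example; a real audit would read the equivalent settings from your engine or orchestrator.

```python
def audit_container(cfg: dict) -> list[str]:
    """Return a list of findings for one container description.

    The expected keys are a hypothetical schema used only in this sketch.
    """
    findings = []
    if not cfg.get("memory_limit_bytes"):
        findings.append("no memory limit set")
    if not cfg.get("cpu_limit"):
        findings.append("no CPU limit set")
    if cfg.get("privileged", False):
        findings.append("runs in privileged mode")
    # Treat a missing user as root, which is the usual default.
    if str(cfg.get("user", "root")) in ("root", "0"):
        findings.append("runs as root")
    return findings


# Made-up sample descriptions.
containers = [
    {"name": "web", "memory_limit_bytes": 268435456, "cpu_limit": 0.5, "user": "app"},
    {"name": "batch", "privileged": True},
]

for c in containers:
    problems = audit_container(c)
    status = "; ".join(problems) if problems else "ok"
    print(f"{c['name']}: {status}")
```

Running such a check in a CI pipeline or on a schedule is one way to make the audit regular rather than occasional.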

Securing container runtimes requires a multi-layered approach. Seccomp profiles restrict the system calls available to a container, and AppArmor provides mandatory access control. Both limit the potential damage from a compromised container. Employ runtime security tools to monitor container behavior and to detect or block malicious activity. Scan container images for vulnerabilities before deployment, and integrate security into the entire container lifecycle. Together, these practices significantly reduce the attack surface, protect sensitive data, and help preserve the integrity of applications running in containers.
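As an illustration of how a seccomp profile restricts system calls, the Python sketch below generates a profile in the JSON layout that Docker accepts via `--security-opt seccomp=<file>`: a default action that rejects everything, plus an allowlist. The helper name is hypothetical, and the syscall list is far too short for a real application; it only demonstrates the structure.

```python
import json


def build_seccomp_profile(allowed: list[str]) -> dict:
    """Build a deny-by-default seccomp profile with an allowlist."""
    return {
        # Any syscall not listed below fails with an error.
        "defaultAction": "SCMP_ACT_ERRNO",
        "architectures": ["SCMP_ARCH_X86_64"],
        "syscalls": [
            {
                "names": sorted(set(allowed)),
                "action": "SCMP_ACT_ALLOW",
            }
        ],
    }


# Illustrative allowlist only; real workloads need many more syscalls.
profile = build_seccomp_profile(["read", "write", "exit", "exit_group", "read"])
print(json.dumps(profile, indent=2))
```

Starting from the runtime's default profile and removing what the workload does not need is usually more practical than building an allowlist from scratch.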

Optimizing Container Runtime Performance

Optimizing the performance of a container runtime is crucial for efficiency and scalability in containerized environments. Several factors influence runtime performance, including resource allocation, storage driver selection, network configuration, and kernel parameters. Understanding these elements is key to maximizing container density and minimizing overhead.

Resource allocation directly impacts container performance. Allocating appropriate CPU and memory prevents containers from being starved of resources or from holding resources they do not use. The kernel’s cgroups feature lets the runtime limit CPU time and memory usage for each container, which ensures fair resource distribution. Storage drivers affect the speed at which containers can read and write data. OverlayFS-based drivers (such as Docker’s `overlay2`) are the most commonly used, but others, like Btrfs or ZFS, may be more suitable depending on the workload and host filesystem; AUFS is a legacy option that has been deprecated in Docker. Network configuration also plays a vital role. Choosing a network mode that fits the workload, such as a bridge network for containers on one host or an overlay network for containers spread across hosts, helps keep latency low and throughput high.
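To show what a CPU or memory limit looks like at the cgroup level, here is a small Python sketch that converts human-friendly limits into the strings cgroup v2 expects in its `cpu.max` and `memory.max` files. The helper names are hypothetical, the 100 ms period is the usual default rather than a requirement, and the sketch only formats values; it does not write to the cgroup filesystem.

```python
from typing import Optional

CPU_PERIOD_US = 100_000  # common default scheduling period (100 ms)

_UNITS = {"k": 1024, "m": 1024**2, "g": 1024**3}


def cpu_max(cpus: Optional[float], period_us: int = CPU_PERIOD_US) -> str:
    """Format a CPU limit as a cgroup v2 cpu.max value: "<quota> <period>"."""
    if cpus is None:
        return f"max {period_us}"  # no limit
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return f"{round(cpus * period_us)} {period_us}"


def memory_max(limit: Optional[str]) -> str:
    """Format a memory limit such as "512m" as a cgroup v2 memory.max value."""
    if limit is None:
        return "max"  # no limit
    text = limit.strip().lower()
    if not text:
        raise ValueError("empty memory limit")
    if text[-1] in _UNITS:
        return str(int(text[:-1]) * _UNITS[text[-1]])
    return str(int(text))  # plain byte count


print(cpu_max(1.5))        # 150000 100000
print(memory_max("512m"))  # 536870912
```

A quota of 150000 microseconds per 100000-microsecond period means the container may use up to one and a half CPUs' worth of time.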

Kernel parameters can be tuned to improve the operating system’s ability to handle container workloads. For example, `net.ipv4.tcp_tw_reuse` affects whether TCP sockets in the TIME-WAIT state can be reused for new connections, and `vm.swappiness` influences how aggressively the kernel swaps memory pages out. Profile the container runtime regularly to identify bottlenecks; tools like `perf` and `strace` can pinpoint where time is being spent. Monitoring resource utilization with tools like Prometheus and Grafana provides near real-time insight into container performance. Optimization is an ongoing process: continuous monitoring and tuning are essential to maintain high performance and efficiency. By carefully configuring resource allocation, storage drivers, network settings, and kernel parameters, organizations can get the most out of their containerized applications.
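Kernel parameters are exposed as files under `/proc/sys`, with the dots in a parameter name corresponding to directory separators. The Python sketch below parses `sysctl.conf`-style text and maps each name to its path. The function names are hypothetical and the values in the sample are placeholders, not tuning recommendations.

```python
from pathlib import PurePosixPath


def sysctl_path(name: str) -> str:
    """Map a sysctl name such as "vm.swappiness" to its /proc/sys path."""
    return str(PurePosixPath("/proc/sys", *name.split(".")))


def parse_sysctl_conf(text: str) -> dict[str, str]:
    """Parse "key = value" lines, skipping blanks and comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key/value line
        settings[key.strip()] = value.strip()
    return settings


# Placeholder values for illustration only.
SAMPLE = """
# network
net.ipv4.tcp_tw_reuse = 1
# memory
vm.swappiness = 10
"""

for key, value in parse_sysctl_conf(SAMPLE).items():
    print(f"{sysctl_path(key)} <- {value}")
```

The simple dot-to-slash mapping does not handle names that themselves contain dots (some network interface names do), so treat it as a sketch rather than a complete translation.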

Containerd vs. CRI-O: A Detailed Look

Containerd and CRI-O are two of the most widely used container runtimes, and understanding how they differ is important for making informed infrastructure decisions. Both are container runtime implementations, yet they cater to different needs and environments. This section compares them, highlighting their architectural differences, key features, and suitability for various use cases.

Containerd, a CNCF graduated project, is designed as a core container runtime. It manages the complete container lifecycle on its host system, including image transfer and storage, container execution and supervision, and network attachment. Its lean design makes it a popular choice for a wide range of deployments. Docker Engine uses containerd as its underlying runtime, which reflects containerd’s stability and widespread adoption. CRI-O, on the other hand, is designed specifically as a container runtime for Kubernetes. It aims to provide a lightweight alternative to using Docker as the runtime for Kubernetes. CRI-O implements the Kubernetes Container Runtime Interface (CRI) directly, which allows Kubernetes to manage containers without the overhead of a full-fledged container engine. CRI-O works only with OCI-compliant images and runtimes, promoting standardization and interoperability.
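From the kubelet's point of view, both runtimes are reached the same way: through a CRI endpoint, usually a Unix socket. The Python sketch below maps each runtime to a commonly documented default socket and formats the corresponding kubelet flag. Treat the socket paths as assumptions that vary by distribution and installation method, and the helper name as hypothetical.

```python
# Commonly documented default CRI sockets; verify against your own install.
DEFAULT_CRI_ENDPOINTS = {
    "containerd": "unix:///var/run/containerd/containerd.sock",
    "cri-o": "unix:///var/run/crio/crio.sock",
}


def kubelet_runtime_flag(runtime: str) -> str:
    """Return the kubelet flag that points at the given runtime's CRI socket."""
    key = runtime.strip().lower()
    if key not in DEFAULT_CRI_ENDPOINTS:
        known = ", ".join(sorted(DEFAULT_CRI_ENDPOINTS))
        raise ValueError(f"unknown runtime {runtime!r}; expected one of: {known}")
    return f"--container-runtime-endpoint={DEFAULT_CRI_ENDPOINTS[key]}"


for name in ("containerd", "CRI-O"):
    print(kubelet_runtime_flag(name))
```

Because Kubernetes only sees the CRI endpoint, switching between the two runtimes is largely a matter of node configuration rather than changes to workloads.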

The choice between containerd and CRI-O often depends on the environment. If you are already using Docker, containerd is likely already in place as the underlying container runtime. For Kubernetes-centric environments, CRI-O offers a streamlined solution that removes the need for Docker as a middleman. Containerd’s general-purpose design suits a variety of container workloads and is a strong choice when flexibility and broad compatibility matter most, while CRI-O’s focused approach is optimized for Kubernetes deployments. Consider security requirements, resource constraints, and existing infrastructure when making a decision. Both runtimes offer robust features and are actively maintained by their respective communities, so the deciding factor is how well each one’s strengths match your specific needs.

Future Trends in Container Runtime Technology

The container runtime landscape is constantly evolving. Several trends promise to reshape how we build, deploy, and manage containerized applications. Lightweight virtual machines (VMs) and sandboxes are gaining traction as a way to enhance container security. Projects like Kata Containers and gVisor provide a stronger isolation boundary between containers and the host operating system. Kata Containers achieves this by running containers inside lightweight VMs with their own guest kernel, while gVisor interposes a user-space kernel that handles the container’s system calls instead of passing them directly to the host kernel.

Sandboxed container runtimes are a significant development in container runtime technology. These runtimes further isolate containers and limit their access to system resources, which minimizes the attack surface and reduces the potential impact of security vulnerabilities. In particular, they address concerns about shared-kernel vulnerabilities, a well-known weakness of traditional containerization, where every container on a host shares the same kernel. The focus on stronger isolation reflects the growing importance of protecting containerized workloads in production environments.
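In Kubernetes, a sandboxed runtime is typically selected per workload through a `RuntimeClass` object. The Python sketch below builds a `RuntimeClass` and a pod that references it, emitted as JSON that `kubectl apply -f` could consume. The object names, the image reference, and the handler name are assumptions for illustration; the handler must match whatever name the node's CRI runtime is configured with.

```python
import json


def runtime_class(name: str, handler: str) -> dict:
    """Build a RuntimeClass manifest that maps a name to a CRI handler."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def pod_with_runtime_class(pod_name: str, image: str, runtime_class_name: str) -> dict:
    """Build a Pod manifest that asks to run under the given RuntimeClass."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": pod_name, "image": image}],
        },
    }


# Hypothetical names; "runsc" is the handler name often used for gVisor.
sandbox = runtime_class("sandboxed", handler="runsc")
pod = pod_with_runtime_class("untrusted-job", "example.com/app:1.0", "sandboxed")

print(json.dumps([sandbox, pod], indent=2))
```

This keeps the choice of isolation level in the workload definition: trusted pods can keep the default runtime while untrusted ones opt into the sandbox.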

Looking ahead, we can anticipate greater specialization and diversification within the container runtime ecosystem. We might see runtimes tailored for specific workloads, such as machine learning or high-performance computing. Integration with emerging hardware technologies, such as specialized accelerators, will also likely become more prevalent. The ongoing development of the Container Runtime Interface (CRI) within Kubernetes fosters this innovation, because it lets new runtimes plug into the orchestration platform without changes to Kubernetes itself. The future promises more secure, efficient, and specialized runtimes that let developers build and deploy applications with greater confidence and flexibility.