Understanding the Fundamentals of Container Images

A Docker container image is a read-only template, a blueprint, that provides the instructions for creating a container. Think of it as a snapshot of an application and its environment: code, runtime, system tools, libraries, and settings. Because an image does not change once it is built, it gives every environment the same starting point, so the application behaves consistently wherever it is deployed. (Containers still share the host's kernel, so the kernel version and host configuration can introduce differences.)

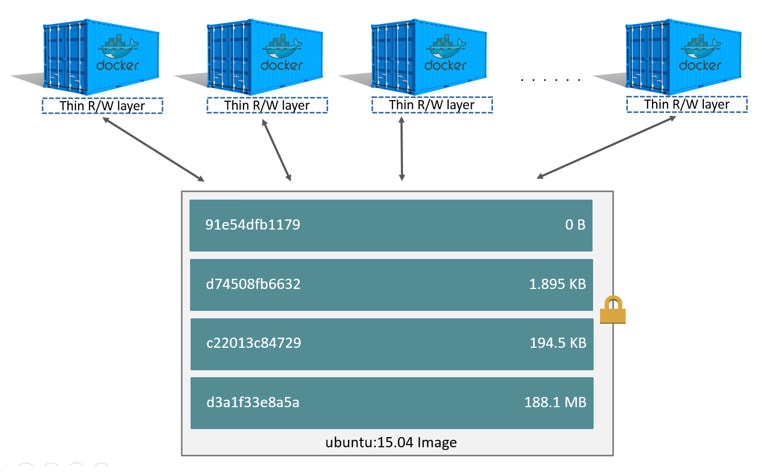

Docker images are built in layers. Instructions that change the filesystem, such as `RUN`, `COPY`, and `ADD`, each create a new layer; instructions such as `ENV`, `WORKDIR`, and `CMD` only record metadata. This layered architecture is crucial for efficiency. Docker caches the result of each build step and reuses it on the next build as long as the instruction, its inputs (for `COPY` and `ADD`, the files being copied), and every step before it are unchanged. As soon as one step changes, that step and every step after it are rebuilt, which is why instructions that change rarely (such as installing dependencies) belong before those that change often (such as copying application code). Layers also promote reuse: images built on the same base share its layers, which saves storage space and download bandwidth.
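The caching rule can be illustrated with a toy model in Python. This is a simplification, not Docker's actual algorithm: each step's key is a hash of the previous step's key, the instruction text, and (standing in for `COPY`) the bytes being copied. Because every key includes its parent's key, changing one step changes the key of every step after it. The names `cache_key` and `build` are hypothetical.

```python
import hashlib


def cache_key(parent_key: str, instruction: str, inputs: bytes = b"") -> str:
    """Toy cache key: parent step + instruction text + content of copied files."""
    h = hashlib.sha256()
    for part in (parent_key.encode(), instruction.encode(), inputs):
        h.update(len(part).to_bytes(8, "big"))  # length prefix keeps parts unambiguous
        h.update(part)
    return h.hexdigest()


def build(steps, cache: set) -> list:
    """Simulate a build; report which steps hit the cache and record new keys."""
    parent, report = "", []
    for instruction, inputs in steps:
        key = cache_key(parent, instruction, inputs)
        report.append("CACHED" if key in cache else "BUILT")
        cache.add(key)
        parent = key  # every later key depends on this one
    return report


if __name__ == "__main__":
    cache = set()
    v1 = [
        ("FROM python:3.9-slim", b""),
        ("COPY requirements.txt .", b"requests==2.31.0\n"),
        ("RUN pip install -r requirements.txt", b""),
        ("COPY app.py .", b"print('v1')\n"),
    ]
    print(build(v1, cache))  # first build: every step is BUILT
    v2 = v1[:3] + [("COPY app.py .", b"print('v2')\n")]
    print(build(v2, cache))  # only the final COPY is rebuilt
```

Changing `requirements.txt` instead of `app.py` would invalidate that step and everything after it, including the `pip install`, which is the practical reason to copy dependency manifests before application code.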

The relationship between the Dockerfile, images, and containers is fundamental. The Dockerfile is a text file containing the instructions Docker uses to build an image. Running an image creates a container: a runnable instance of that image with its own isolated filesystem, process space, and network interface. Each container gets a thin writable layer on top of the image's read-only layers, so changes made inside a container never modify the image itself; `docker commit` can capture them, but only as a new image. The image therefore remains a consistent, reproducible base from which many independent containers can be started.
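Docker's storage drivers (overlay2 on most Linux hosts) stack a writable container layer on top of an image's read-only layers: reads fall through to the highest layer that has the file, and writes land only in the writable layer. Python's `collections.ChainMap` behaves the same way for dictionaries, so it makes a compact model. This sketch assumes files are represented as dictionary entries and does not model deletions (which Docker records as whiteout files); `start_container` is a hypothetical helper.

```python
from collections import ChainMap

# Read-only image layers, listed bottom-up as a build would create them
base_layer = {"/etc/os-release": "Debian", "/usr/bin/python3": "<binary>"}
app_layer = {"/app/app.py": "print('Hello, World!')"}


def start_container(*image_layers):
    """Stack a fresh, empty writable layer on top of the image layers."""
    # ChainMap searches its maps left to right, so the top layer goes first.
    # Writes and updates only ever touch maps[0], the container's own layer.
    return ChainMap({}, *reversed(image_layers))


if __name__ == "__main__":
    c1 = start_container(base_layer, app_layer)
    c2 = start_container(base_layer, app_layer)
    c1["/app/app.py"] = "print('patched')"
    print(c1["/app/app.py"])  # the container sees its own change
    print(c2["/app/app.py"])  # a sibling container still sees the image's file
```

Because every container gets its own empty top map, two containers started from the same image never see each other's changes, and the image layers stay untouched.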

How to Build a Docker Image: A Step-by-Step Guide

Creating a Docker container image involves defining the application’s environment and dependencies within a Dockerfile. This file serves as a blueprint for building the image. The Dockerfile contains a series of instructions that Docker executes to assemble the image. Understanding these instructions is crucial for creating efficient and reproducible Docker container images.

A handful of instructions cover most Dockerfiles. `FROM` specifies the base image on which the new image is built. Choosing a suitable base image matters: smaller, more lightweight images (such as Alpine Linux) can significantly reduce the final image size. `RUN` executes commands during the build and stores the result as a new layer; it is typically used to install software packages, create directories, or configure the environment. `COPY` transfers files and directories from the build context into the image. `ADD` also copies files, but it additionally extracts local tar archives automatically and can download files from remote URLs, so `COPY` is the more predictable choice when you only need to copy. `WORKDIR` sets the working directory for subsequent instructions. `EXPOSE` documents the ports the container listens on at runtime; it does not publish them, which still requires `-p` (or `-P`) on `docker run`. Finally, `CMD` and `ENTRYPOINT` specify what runs when the container starts. If a Dockerfile contains several `CMD` instructions, only the last one takes effect; when `ENTRYPOINT` is also set, `CMD` supplies its default arguments, and arguments passed to `docker run` replace them. A well-structured Dockerfile is key to building a reliable image.
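The `CMD`/`ENTRYPOINT` rules can be captured in a small resolver. This sketch assumes exec-form (JSON array) instructions in a single Dockerfile and ignores shell form and values inherited from the base image; `effective_command` is a hypothetical helper, not part of any Docker tooling.

```python
def effective_command(instructions, run_args=None):
    """Resolve the process a container starts with (exec form only).

    instructions: (keyword, args) pairs in Dockerfile order.
    run_args: extra arguments given after the image name in `docker run`.
    """
    entrypoint, cmd = [], []
    for keyword, args in instructions:
        if keyword == "ENTRYPOINT":
            entrypoint = list(args)
        elif keyword == "CMD":
            cmd = list(args)  # a later CMD silently replaces an earlier one
    # Arguments given to `docker run IMAGE ...` replace CMD, never ENTRYPOINT
    tail = list(run_args) if run_args else cmd
    return entrypoint + tail


if __name__ == "__main__":
    dockerfile = [("ENTRYPOINT", ["python"]), ("CMD", ["app.py"])]
    print(effective_command(dockerfile))                    # default arguments from CMD
    print(effective_command(dockerfile, ["other.py", "-v"]))  # CMD overridden at run time
```

Overriding `ENTRYPOINT` itself requires `docker run --entrypoint`, which this model does not cover.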

Consider a simple example: building an image for a Python “Hello, World” application. First, create a file named `app.py` containing the Python code, then create a Dockerfile in the same directory. The Dockerfile might start with `FROM python:3.9-slim-buster`, which uses a slim Python 3.9 image as the base. Next, `WORKDIR /app` sets the working directory, and `COPY app.py .` copies the script into the image. If the application needs third-party packages, install them with a line such as `RUN pip install --no-cache-dir requests` (a plain “Hello, World” needs none). Finally, `CMD ["python", "app.py"]` specifies the command that runs the application; this exec form must use straight double quotes, because Docker parses it as a JSON array. Build the image with a tag of your choosing, for example `docker build -t hello-python .`, and start it with `docker run --rm hello-python`. To keep unnecessary files out of the build, also create a `.dockerignore` file listing the files and directories Docker should exclude from the build context; excluded files cannot end up in the image by accident, and the context sent to Docker stays small.
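The walkthrough can be scripted. The hypothetical `scaffold` helper below writes the three files into a project directory. The `.dockerignore` entries are assumptions to adjust per project, and the `requests` install is kept only to mirror the example.

```python
from pathlib import Path

APP_PY = 'print("Hello, World!")\n'

# Mirrors the Dockerfile described above; the requests install is optional
DOCKERFILE = """\
FROM python:3.9-slim-buster
WORKDIR /app
COPY app.py .
RUN pip install --no-cache-dir requests
CMD ["python", "app.py"]
"""

# Hypothetical ignore list; '**/' makes a pattern match at any depth
DOCKERIGNORE = """\
.git
.venv
**/__pycache__
**/*.pyc
"""


def scaffold(project_dir):
    """Create app.py, Dockerfile and .dockerignore; return the file names."""
    root = Path(project_dir)
    root.mkdir(parents=True, exist_ok=True)
    files = {"app.py": APP_PY, "Dockerfile": DOCKERFILE, ".dockerignore": DOCKERIGNORE}
    for name, text in files.items():
        (root / name).write_text(text)
    return sorted(p.name for p in root.iterdir())
```

Calling `scaffold("hello")` creates the files in a `hello` directory, ready for `docker build`.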

Optimizing Container Image Size for Faster Deployments

Reducing the size of a Docker image speeds up deployments and makes better use of resources. A smaller image downloads faster, costs less to store, and lets new containers start sooner on hosts that must pull the image first. Several techniques help achieve this.

Multi-stage builds are a powerful technique for minimizing image size. They separate the build environment from the runtime environment: the first stage includes everything needed to compile and build the application, and only the resulting artifacts are copied into a second, leaner stage that contains just the runtime dependencies. For example, a Java application might use a full JDK in the first stage for compilation, then copy only the compiled `.jar` file into a smaller JRE-based image in the final stage, so build tools and intermediate files never reach the final image. Selecting a smaller base image, such as Alpine Linux, is another effective way to reduce size; Alpine is a lightweight distribution with a minimal footprint, though its musl C library can cause compatibility problems with some prebuilt binaries. Cleaning up also matters, but only within the same layer: files deleted by a later `RUN` instruction still occupy space in the earlier layer that created them. Chain installation and cleanup in a single `RUN` instruction, or on Alpine use `apk add --no-cache`, which avoids leaving a package index in `/var/cache/apk` in the first place. Installing only what is needed and combining related commands into fewer layers trims the image further. A smaller image also contains fewer packages, which reduces the attack surface.
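For the Java example, a multi-stage Dockerfile might look like the string below. The image tags, the Maven command, and the jar path are assumptions (Maven's default jar name includes the artifact ID and version), and `summarize_stages` is a hypothetical helper that shows which base image each stage uses and which earlier stages the final one copies from.

```python
import re

# Hypothetical multi-stage Dockerfile; tags and paths are illustrative
JAVA_DOCKERFILE = """\
FROM maven:3.9-eclipse-temurin-17 AS build
WORKDIR /src
COPY . .
RUN mvn -q package -DskipTests

FROM eclipse-temurin:17-jre
COPY --from=build /src/target/app.jar /app/app.jar
CMD ["java", "-jar", "/app/app.jar"]
"""

FROM_RE = re.compile(r"^FROM\s+(\S+)(?:\s+AS\s+(\S+))?", re.IGNORECASE)
COPY_FROM_RE = re.compile(r"^COPY\s+--from=(\S+)", re.IGNORECASE)


def summarize_stages(dockerfile: str) -> list:
    """Return one dict per stage: base image, stage name, stages copied from."""
    stages = []
    for line in dockerfile.splitlines():
        line = line.strip()
        if m := FROM_RE.match(line):
            stages.append({"base": m.group(1), "name": m.group(2), "copies_from": []})
        elif stages and (m := COPY_FROM_RE.match(line)):
            stages[-1]["copies_from"].append(m.group(1))
    return stages


if __name__ == "__main__":
    for stage in summarize_stages(JAVA_DOCKERFILE):
        print(stage)
```

By default only the last stage, based on the JRE image, becomes the shipped image; the Maven stage and its build tools are left behind.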

Careful dependency management is the other half of image optimization. Include only the libraries and tools the application needs to run, and avoid installing packages that are never used. A `.dockerignore` file keeps irrelevant files and directories out of the build context, so broad instructions such as `COPY . .` cannot pull them into the image. Audit image contents regularly and remove redundant or obsolete components. Treated as an ongoing part of the development process rather than a one-off task, these practices produce lean images that deploy faster and consume fewer resources.
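To see how `.dockerignore` patterns prune the build context, here is a simplified matcher. It follows the basic rules Docker documents (patterns are relative to the context root, `*` does not cross `/`, `**` matches any number of directories, and excluding a directory excludes everything in it), but as an assumption of this sketch it skips character classes and `!` exception lines. Both function names are hypothetical.

```python
import re
from pathlib import PurePosixPath


def _compile(pattern: str):
    """Translate a .dockerignore-style glob into a compiled regex."""
    pattern = pattern.strip().strip("/")
    out, i = [], 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            out.append("(?:.*/)?")  # zero or more leading directories
            i += 3
        elif pattern.startswith("**", i):
            out.append(".*")
            i += 2
        elif pattern[i] == "*":
            out.append("[^/]*")  # stays inside one path segment
            i += 1
        elif pattern[i] == "?":
            out.append("[^/]")
            i += 1
        else:
            out.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(out))


def build_context(paths, ignore_patterns):
    """Keep paths that neither match a pattern nor sit inside a matching directory."""
    regexes = [_compile(p) for p in ignore_patterns if p.strip()]
    kept = []
    for path in paths:
        p = PurePosixPath(path)
        candidates = [str(q) for q in (p, *p.parents) if str(q) != "."]
        if not any(rx.fullmatch(c) for rx in regexes for c in candidates):
            kept.append(path)
    return kept
```

Note that `*.log` excludes `debug.log` at the root but not `logs/old.log`; `**/*.log` matches at any depth.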

Docker Hub and Other Container Registries: Storing and Sharing Your Images

Docker Hub is the default public registry for storing and sharing Docker images. It hosts a vast collection of pre-built images that users can pull and use directly, so not every image has to be built from scratch. Developers can also push their own images, either to public repositories open to the broader community or to private repositories shared with specific collaborators. Publishing involves tagging an image with a repository name in the form `<username>/<repository>:<tag>`, logging in with `docker login`, and uploading it with `docker push`; `docker pull` downloads an image from Docker Hub to a local machine. This simple distribution model makes Docker Hub a fundamental resource in the container ecosystem.
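Tagging and pushing can be scripted around the docker CLI. In this sketch, `publish_commands` and `publish` are hypothetical helpers, the Docker Hub user `alice` is a placeholder, and a prior `docker login` is assumed; with `dry_run=True` the commands are only printed.

```python
import subprocess


def publish_commands(local_image: str, hub_user: str, repo: str, tag: str = "latest") -> list:
    """Build the docker CLI calls that retag a local image for Docker Hub and push it."""
    remote = f"{hub_user}/{repo}:{tag}"
    return [
        ["docker", "tag", local_image, remote],
        ["docker", "push", remote],
    ]


def publish(local_image, hub_user, repo, tag="latest", dry_run=True):
    """Print or execute the commands; executing assumes `docker login` already succeeded."""
    cmds = publish_commands(local_image, hub_user, repo, tag)
    for cmd in cmds:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmds


if __name__ == "__main__":
    publish("hello-python", "alice", "hello-python", "1.0")
```

On another machine, `docker pull alice/hello-python:1.0` would then retrieve the same image.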

Beyond Docker Hub, several other registries offer similar functionality, often with features tailored to a particular cloud platform or organization. Amazon Elastic Container Registry (ECR) is a fully managed registry that integrates with other AWS services. Google Container Registry, part of Google Cloud Platform, offered private image storage with integrated access control and vulnerability scanning; Google has since deprecated it in favor of Artifact Registry. Azure Container Registry provides a similar service within the Azure ecosystem. These registries add access control, scalability, and integration with their respective clouds, which suits organizations that need private, secure image storage tied into their existing infrastructure.
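Which registry an image lives in is encoded in its reference. Cloud registries use host names such as `<account-id>.dkr.ecr.<region>.amazonaws.com` for ECR, `gcr.io` for Google Container Registry, and `<registry-name>.azurecr.io` for Azure Container Registry, while references without a registry host default to Docker Hub. The parser below is a simplified sketch of Docker's normalization rules (the first path component counts as a registry host if it contains `.` or `:` or is `localhost`; bare Docker Hub names get a `library/` prefix; the tag defaults to `latest`). It skips full validation, `parse_image_ref` is a hypothetical name, and the account ID and registry names in the tests are placeholders.

```python
def parse_image_ref(ref: str):
    """Split an image reference into (registry, repository, tag, digest)."""
    name, _, digest = ref.partition("@")
    tag = None
    # A ':' after the last '/' separates the tag; earlier ones belong to host:port
    colon, last_slash = name.rfind(":"), name.rfind("/")
    if colon > last_slash:
        name, tag = name[:colon], name[colon + 1:]
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest
    else:
        registry, repo = "docker.io", name
    if registry == "docker.io" and "/" not in repo:
        repo = f"library/{repo}"  # official images live under library/
    if tag is None and not digest:
        tag = "latest"
    return registry, repo, tag, digest or None
```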

Choosing a registry depends on security requirements, scalability needs, and how well it integrates with your existing infrastructure. Docker Hub is a convenient, publicly accessible option for sharing images, while cloud registries such as Amazon ECR, Google Cloud's registry services, and Azure Container Registry offer tighter access control for enterprise applications. Whichever registry you choose, the fundamentals of tagging, pushing, and pulling images stay the same, and using a registry well streamlines deployment workflows and collaboration.

Implementing Security Best Practices for Docker Container Images

Securing container images is paramount for maintaining the integrity and confidentiality of applications. A compromised image can expose sensitive data, introduce vulnerabilities, and disrupt services, so security measures are needed throughout the image lifecycle. The foundation is a trusted base image: prefer Docker Official Images or images from Docker Hub's Verified Publishers, which are generally well maintained and regularly updated with security patches. Review the Dockerfile used to build any image you adopt to confirm it follows security best practices.

Regularly scanning images for vulnerabilities is a vital step. Tools such as Clair and Trivy identify known security flaws in image layers and dependencies; integrating them into the CI/CD pipeline scans every image automatically before deployment. Address findings promptly by updating packages or applying patches. Never store sensitive information, such as passwords, API keys, or cryptographic secrets, directly in an image: anything written into a layer can be extracted by anyone who can pull the image, even if a later layer deletes it. Instead, inject secrets at runtime through environment variables or a secret management solution. Keep in mind that environment variables are visible to anyone who can run `docker inspect` on the container, so mounted secrets are the safer choice for highly sensitive values.
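Inside the application, runtime injection can look like this sketch. It assumes secrets are either mounted as files under `/run/secrets/<name>` (where Docker Compose and Swarm place secrets) or passed as upper-case environment variables; `read_secret` and that naming convention are hypothetical.

```python
import os
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Fetch a secret injected at runtime instead of baked into the image.

    Prefers a mounted secret file, then falls back to an environment
    variable named after the secret in upper case.
    """
    secret_file = Path(secrets_dir) / name
    if secret_file.is_file():
        return secret_file.read_text().strip()
    value = os.environ.get(name.upper())
    if value is None:
        raise RuntimeError(f"secret {name!r} was not provided at runtime")
    return value
```

Failing loudly when a secret is missing is a deliberate choice: it surfaces a misconfigured deployment at startup rather than as a confusing authentication error later.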

Keeping base images up to date is critical for mitigating security risks. Rebuild images regularly against the latest base image versions to pick up security patches and bug fixes, and automate the rebuilds so they happen consistently. Consider image signing and verification as well, using tools such as Docker Content Trust or Sigstore's cosign: the publisher signs an image with a private key, and consumers verify the signature with the corresponding public key to confirm the image's origin and that it has not been tampered with. Together, these practices significantly reduce the risk of security incidents caused by vulnerable or tampered images.
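Full signature verification needs dedicated public-key tooling and is not reproduced here. A related, simpler integrity check is digest pinning: registries address image content by SHA-256 digest, and pulling `image@sha256:<digest>` guarantees exactly those bytes. The sketch below shows the underlying check on a blob; note that it proves integrity only, not who published the image. The function names are hypothetical.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Format a content digest the way registries do: 'sha256:<hex>'."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def verify_blob(data: bytes, expected_digest: str) -> bool:
    """True only if the content hashes to the pinned digest."""
    return sha256_digest(data) == expected_digest


if __name__ == "__main__":
    blob = b"layer contents"
    pinned = sha256_digest(blob)
    print(verify_blob(blob, pinned))                # unchanged content passes
    print(verify_blob(blob + b"tampered", pinned))  # any change fails
```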

Leveraging Docker Compose for Multi-Container Application Development

Docker Compose is a tool for defining and running multi-container applications. It takes a declarative approach: a single `docker-compose.yml` file (newer Compose versions also accept `compose.yaml`) specifies the services, networks, and volumes the application needs. Compose simplifies deploying and managing applications made up of several containers, reducing manual configuration and keeping environments consistent.

Consider a basic web application consisting of a web server (e.g., Nginx) and a database (e.g., PostgreSQL). A `docker-compose.yml` file would define these two services, specifying for each its image, ports, environment variables, and dependencies. Compose places the services on a shared network where each can reach the others by service name, so the web server can connect to a database service named `db` at the hostname `db`. Declaring `depends_on` makes Compose start the database first, although it controls start order, not whether PostgreSQL is ready to accept connections. Volumes persist database data across container restarts, and additional networks can isolate the application's containers from other services on the same host. A single command, `docker compose up` (or `docker-compose up` with the older standalone tool), pulls or builds the images and starts the entire stack. This is especially useful for local development and testing, where rapid iteration and consistent environments are crucial.
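The two-service setup can be written down as a Python dictionary that mirrors the `docker-compose.yml` structure; the image tags, port mapping, volume and network names, and placeholder password are assumptions. The hypothetical `start_order` helper derives the order in which `depends_on` makes Compose start services, using a topological sort from the standard library's `graphlib`.

```python
from graphlib import TopologicalSorter

# Mirror of a docker-compose.yml; all concrete values are illustrative
COMPOSE = {
    "services": {
        "web": {
            "image": "nginx:1.25",
            "ports": ["8080:80"],
            "depends_on": ["db"],
            "networks": ["backend"],
        },
        "db": {
            "image": "postgres:16",
            "environment": {"POSTGRES_PASSWORD": "example"},  # placeholder only
            "volumes": ["db-data:/var/lib/postgresql/data"],
            "networks": ["backend"],
        },
    },
    "volumes": {"db-data": {}},
    "networks": {"backend": {}},
}


def start_order(compose: dict) -> list:
    """Order services so each starts after everything in its depends_on."""
    graph = {
        name: set(svc.get("depends_on", []))  # set() of a mapping yields its keys
        for name, svc in compose["services"].items()
    }
    return list(TopologicalSorter(graph).static_order())  # raises CycleError on cycles
```

Because `set()` of a dictionary yields its keys, the helper also accepts the long mapping form of `depends_on`. To wait until PostgreSQL actually accepts connections, Compose supports `condition: service_healthy` together with a healthcheck on the database service.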

Compose also lets developers manage their application's infrastructure as code. Adding a service or updating an environment variable is a matter of editing the `docker-compose.yml` file and bringing the application up again. Because the file captures the application's services and configuration in one place, it is easy to version, share, and reuse. Combined with well-built images, Compose helps the application behave consistently across environments, which is especially valuable for applications that rely on multiple interconnected services.

Troubleshooting Common Issues When Building and Running Container Images

Encountering problems while building and running images is a normal part of the development lifecycle, and knowing how to diagnose them is crucial. A frequent source of build failures is Dockerfile syntax: a misspelled instruction or an incorrect argument stops the build, so check the Dockerfile against the Dockerfile reference. Network connectivity is another common problem: containers may fail to reach external services or each other. Containers on the same user-defined network (such as the one Compose creates) can reach each other by name without publishing any ports, whereas access from the host or from outside requires publishing ports with `-p` or a Compose `ports:` entry; declaring them with `EXPOSE` alone is not enough. Check that the containers share a network and that the target service is listening on the expected port.
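When a container cannot reach a service, a quick TCP probe run from inside the container separates name-resolution and port problems from application errors. `can_connect` is a hypothetical helper using only the standard library; from the web container in a Compose setup, `can_connect("db", 5432)` would test whether the service name resolves and PostgreSQL's default port accepts connections.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False
```

A `False` result for a service name that should exist usually points at network membership or DNS, while a refused connection on a resolvable host points at the service not listening on that port.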

Volume mounting issues also arise frequently. If a volume is mounted incorrectly, data may not persist or may not be shared between the container and the host as expected, so verify the mount paths in the `docker run` command or the `docker-compose.yml` file. Application dependencies can also cause problems: missing or incompatible packages can prevent the application from starting inside the container. Install dependencies with the appropriate package manager (e.g., `apt`, `yum`, `pip`) and pin the versions the application was tested with. Keeping images small helps here as well, because every fix means another rebuild and redeploy.
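To confirm that an image actually contains the pinned dependency versions, a short check can run inside the container, for example with `docker run --rm <image> python check_deps.py`, where `check_deps.py` is a hypothetical script holding this code. It uses the standard library's `importlib.metadata`; `check_pins` is a hypothetical name and the pins passed in are assumptions for your project.

```python
from importlib.metadata import PackageNotFoundError, version


def check_pins(pins: dict) -> dict:
    """Compare installed distributions against exact version pins.

    Returns {package: (expected, installed_or_None)} for every mismatch.
    """
    problems = {}
    for name, expected in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None  # the package is missing from the image entirely
        if installed != expected:
            problems[name] = (expected, installed)
    return problems


if __name__ == "__main__":
    print(check_pins({"requests": "2.31.0"}))  # {} means every pin matched
```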

To troubleshoot image issues effectively, inspect image layers and container logs. The `docker history` command lists the layers of an image together with the instruction that created each one and its size, which helps pinpoint where a problem or unexpected bulk was introduced. Container logs reveal application errors and runtime behavior; view them with `docker logs <container>`. For deeper investigation, open a shell in a running container with `docker exec -it <container> sh`, attach a debugger to the application, or use network monitoring tools to analyze traffic. Finally, rebuilding images regularly on updated base images heads off many known vulnerabilities and bugs before they surface as incidents.
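`docker history` accepts a Go template through `--format`, which makes its output easy to post-process. This sketch requests tab-separated `Size` and `CreatedBy` columns and ranks layers by size. `largest_layers`, `parse_size`, and `docker_history` are hypothetical helpers, and the size parser assumes Docker's decimal units (`B`, `kB`, `MB`, `GB`, `TB`).

```python
import re
import subprocess

_UNITS = {"B": 1, "kB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12}


def parse_size(text: str) -> float:
    """Convert a human-readable size such as '45.2MB' into bytes."""
    m = re.fullmatch(r"([\d.]+)\s*([kMGT]?B)", text.strip())
    if not m:
        raise ValueError(f"unrecognised size: {text!r}")
    return float(m.group(1)) * _UNITS[m.group(2)]


def largest_layers(history_output: str, top: int = 3) -> list:
    """Rank (bytes, created_by) rows from tab-separated history output by size."""
    rows = []
    for line in history_output.splitlines():
        if not line.strip():
            continue
        size, _, created_by = line.partition("\t")
        rows.append((parse_size(size), created_by))
    return sorted(rows, key=lambda r: r[0], reverse=True)[:top]


def docker_history(image: str) -> str:
    """Run the docker CLI; requires a reachable Docker daemon."""
    return subprocess.run(
        ["docker", "history", "--no-trunc", "--format", "{{.Size}}\t{{.CreatedBy}}", image],
        capture_output=True, text=True, check=True,
    ).stdout
```

Typical use is `largest_layers(docker_history("hello-python"))`, with the image name being whatever you tagged.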

Container Image Strategies for Different Application Types

The right strategy for building and managing an image depends on the application type. Web applications, microservices, and batch processing jobs each call for a tailored approach to image size, dependency management, and deployment frequency, and choosing the right base image and optimization techniques for each is essential to meeting their requirements.

For web applications, minimizing image size is crucial for faster deployments and lower resource consumption. Multi-stage builds are highly effective here: a larger image handles compilation and building, and only the necessary artifacts are copied into a smaller runtime image such as Alpine Linux. Package manager options help avoid unnecessary installations, for example `apk add --no-cache` on Alpine or `apt-get install --no-install-recommends` on Debian-based images. A `.dockerignore` file keeps irrelevant files out of the build, and optimizing static assets (e.g., compressing images and minifying JavaScript and CSS) before adding them to the image reduces size further. The result is an image that pulls and starts faster.
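Minification is normally handled by a front-end build tool in an earlier build stage. The naive CSS minifier below only illustrates the idea: it strips comments and redundant whitespace, and as a stated limitation it does not understand quoted strings or every selector edge case, so it is not safe for production stylesheets. `minify_css` is a hypothetical helper.

```python
import re


def minify_css(css: str) -> str:
    """Naively shrink CSS: drop comments and whitespace that carries no meaning."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # remove /* ... */ comments
    css = re.sub(r"\s+", " ", css)                   # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,>])\s*", r"\1", css)    # trim around punctuation
    css = css.replace(";}", "}")                     # final semicolon in a block is optional
    return css.strip()


if __name__ == "__main__":
    print(minify_css("body {\n  margin: 0;\n  color: #333;\n}\n"))
```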

Microservices are often deployed more frequently, which makes small images even more critical. Base image selection is particularly important: distroless images contain only the application and its runtime dependencies, with no shell or package manager, which minimizes the attack surface. Dependency locking keeps builds consistent by pinning every dependency to an exact version, and a continuous integration/continuous delivery (CI/CD) pipeline automates building, testing, and deploying these images, enabling rapid and reliable updates.

Batch processing jobs may prioritize execution speed and resource utilization over image size. A larger base image with pre-installed dependencies can be acceptable if it significantly improves processing time. Even then, cleaning up temporary files and unused packages in the same layer that created them keeps the image size manageable. Understanding the characteristics of each application type, and adjusting the image strategy accordingly, is essential for maximizing efficiency and performance.
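For the dependency locking recommended for microservices, a CI step can reject requirement files that are not fully pinned. The `unpinned` helper is a hypothetical sketch for pip-style requirement files: it treats only exact `==` pins as locked and skips option lines such as `-r` or `--hash`. Lock files generated by a tool such as pip-tools' `pip-compile` satisfy it naturally.

```python
import re

# name, optional [extras], then '==' and a version
PIN_RE = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?==[^=\s;]+")


def unpinned(requirements_text: str) -> list:
    """Return requirement lines that are not pinned to an exact version."""
    bad = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and options like -r/--hash
            continue
        if not PIN_RE.match(line):
            bad.append(line)
    return bad


if __name__ == "__main__":
    reqs = "requests==2.31.0\nflask>=2.0\n"
    print(unpinned(reqs))  # a non-empty list should fail the CI step
```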