What is Docker Architecture?

Docker architecture refers to the set of components that work together to create, distribute, and run containers: isolated environments that package an application together with its dependencies and configuration. This model has changed how applications are developed, shipped, and run, because the same packaged application can be deployed consistently across different environments.

Key Components of Docker Architecture

Docker architecture comprises several main components that work together to facilitate containerization. These components include Docker Engine, Docker Hub, Docker Swarm, and Docker Compose. Each component plays a crucial role in the Docker ecosystem, and understanding their functions and interactions is essential for effectively using Docker.

Docker Engine

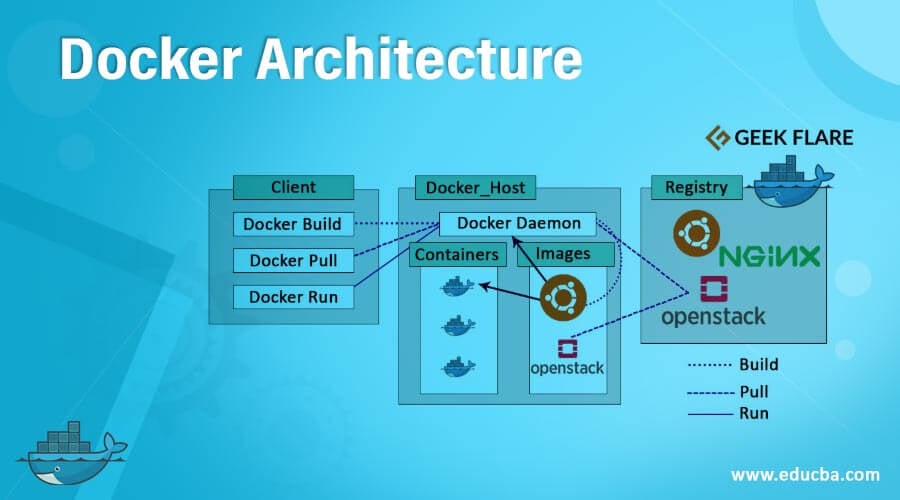

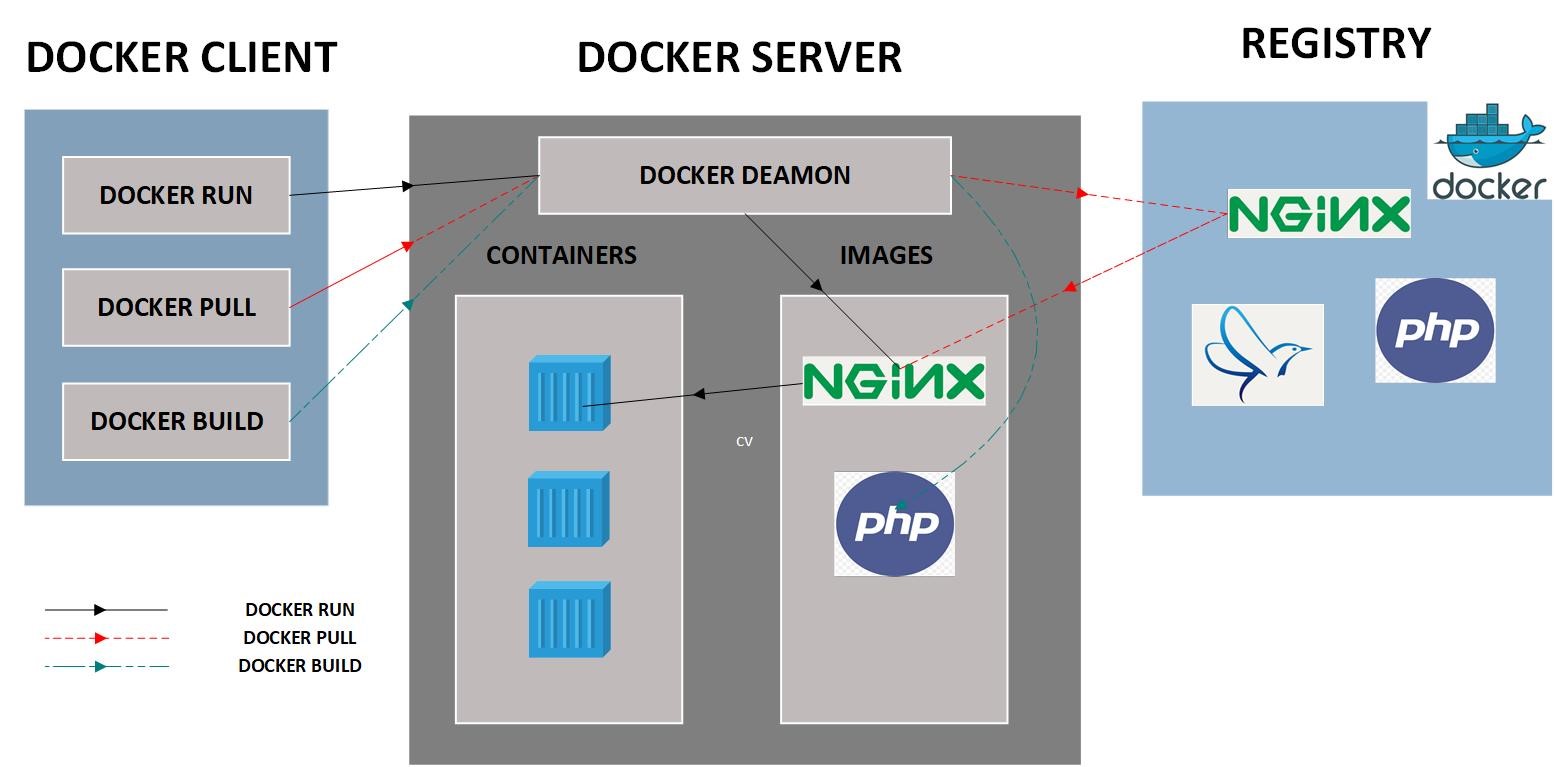

Docker Engine is the core component of Docker architecture, responsible for managing and running containers. It consists of three main parts: the Docker daemon, the Docker API, and the Docker CLI.

Docker Daemon

The Docker daemon (dockerd) is the background process at the heart of Docker Engine. It listens for Docker API requests and manages Docker objects, such as images, containers, networks, and volumes.

Docker API

The Docker API is a RESTful interface that enables communication between the Docker daemon and Docker clients, such as the Docker CLI, Docker Compose, and third-party tools. It allows users to interact with Docker objects programmatically.
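To make the REST interface more concrete, the sketch below sends a plain HTTP request to the daemon over its Unix socket using only the Python standard library. It assumes a Linux host where the daemon listens on the default /var/run/docker.sock and the current user is allowed to access it. The helper names are made up for illustration and are not part of any Docker SDK.

```python
import json
import socket

# Default daemon socket on Linux; this is an assumption about your host.
DEFAULT_SOCKET = "/var/run/docker.sock"


def build_request(method: str, path: str) -> bytes:
    """Build a minimal HTTP/1.0 request for the Docker Engine API."""
    return (
        f"{method} {path} HTTP/1.0\r\n"
        "Host: docker\r\n"
        "\r\n"
    ).encode("ascii")


def split_response(raw: bytes) -> tuple[int, bytes]:
    """Split a raw HTTP response into (status code, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line = head.split(b"\r\n", 1)[0]  # for example b"HTTP/1.0 200 OK"
    return int(status_line.split()[1]), body


def api_get(path: str, socket_path: str = DEFAULT_SOCKET):
    """Send a GET request to the daemon and decode the JSON body."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(build_request("GET", path))
        chunks = []
        # HTTP/1.0 has no keep-alive, so read until the daemon closes the connection.
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    status, body = split_response(b"".join(chunks))
    if status != 200:
        raise RuntimeError(f"Docker API returned HTTP {status}")
    return json.loads(body)
```

On a machine with Docker running, api_get("/containers/json") should return the same list of running containers that docker ps prints, which shows that the CLI is only one of several possible API clients.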

Docker CLI

The Docker CLI is a command-line interface that allows users to communicate with the Docker daemon using various commands, such as docker run, docker ps, and docker build. It provides an easy-to-use interface for managing Docker objects.

Docker Hub

Docker Hub is a cloud-based registry service that allows users to store and share Docker images. It provides a centralized location for users to find and distribute Docker images, making it easier to distribute applications and dependencies.

Docker Swarm

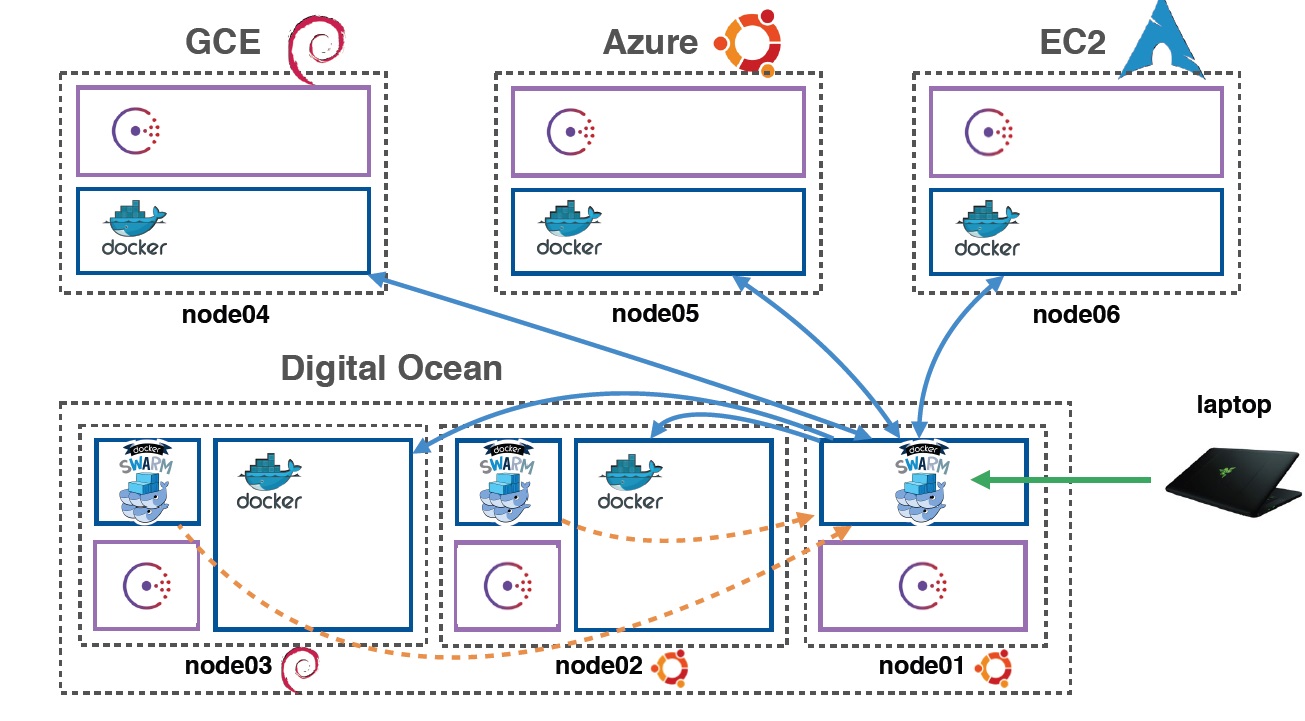

Docker Swarm is a native clustering and scheduling tool for Docker that enables the management of multiple containers across multiple hosts. It simplifies service discovery and load balancing, making it easier to scale and manage containerized applications.

Docker Compose

Docker Compose is a tool for defining and running multi-container applications. It simplifies the configuration and deployment of complex applications, allowing users to define application stacks using a YAML file. A Compose file can also be deployed to a Swarm cluster as a stack with the docker stack deploy command.

Docker Engine: The Core of Docker Architecture

Docker Engine is the central component of Docker architecture, responsible for managing and running containers. It is composed of three main parts: the Docker daemon, the Docker API, and the Docker CLI. These components work together to provide a seamless experience for creating, deploying, and managing containers.

Docker Daemon

The Docker daemon is the backbone of Docker Engine. It listens for API requests and manages Docker objects such as images, containers, networks, and volumes on behalf of the CLI and other clients.

Docker API

The Docker API is a RESTful interface that enables communication between the Docker daemon and Docker clients, such as the CLI, Docker Compose, and third-party tools. It allows users to interact with Docker objects programmatically, making it easier to automate and integrate Docker into existing workflows.

Docker CLI

The Docker CLI is a command-line interface that allows users to communicate with the Docker daemon using various commands, such as docker run, docker ps, and docker build. It provides an easy-to-use interface for managing Docker objects, and its simplicity and power have made it a popular choice for developers and system administrators alike.

Working Together

The Docker daemon, API, and CLI work together to manage containers. For example, when a user runs the docker run command, the CLI translates it into Docker API requests and sends them to the Docker daemon, which serves the API. The daemon then creates and starts the new container, pulling the image first if it is not available locally. Because every client goes through the same API, the same operations are available to the CLI, Docker Compose, and third-party tools alike.
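The request flow described above can be sketched as data. The function below lists the two Engine API calls behind a basic docker run: one to create the container and one to start it. This is a simplified sketch. The real CLI also pulls a missing image, attaches to the output streams, and uses a versioned API path, and the function name and placeholder ID are hypothetical.

```python
import json


def run_requests(image: str, command: list[str], container_id: str = "<id>"):
    """Return the (method, path, body) steps behind a basic `docker run`.

    container_id is a placeholder: the real ID is returned by the daemon
    in its response to the create call.
    """
    create_body = json.dumps({"Image": image, "Cmd": command})
    return [
        ("POST", "/containers/create", create_body),
        ("POST", f"/containers/{container_id}/start", ""),
    ]


for method, path, body in run_requests("alpine", ["echo", "hello"]):
    print(method, path, body)
```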

Docker Images: Building and Sharing Container Blueprints

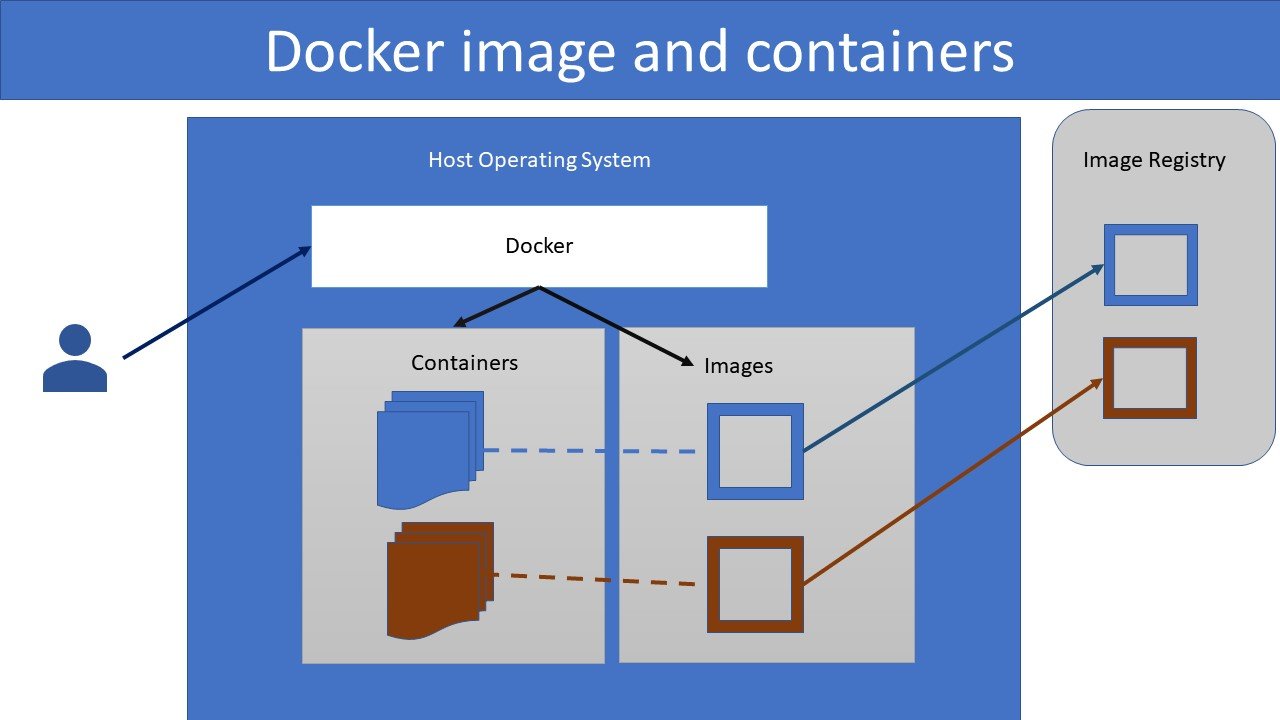

Docker images are the foundation of Docker containers, serving as the blueprints for containerized applications. They are lightweight, portable, and self-contained, making it easy to distribute and run applications in isolated environments. In this section, we will explore the process of building Docker images, including the use of Dockerfiles and multi-stage build techniques, as well as discuss Docker Hub, a cloud-based registry for sharing Docker images.

Building Docker Images

Docker images are built using Dockerfiles, which are text documents that contain instructions for building an image. These instructions can include commands for installing software, copying files, and setting environment variables, among other things. Once a Dockerfile has been created, the docker build command can be used to build an image from it.
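As a rough illustration, the sketch below keeps a small Dockerfile in a Python string and assembles the matching docker build command. The base image, the file names (app.py, requirements.txt), and the myapp:1.0 tag are placeholders, not taken from any real project.

```python
# Hypothetical Dockerfile for a small Python script called app.py.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
ENV APP_ENV=production
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""


def build_command(tag: str, context: str = ".") -> list[str]:
    """Return the argv for `docker build -t <tag> <context>`."""
    return ["docker", "build", "-t", tag, context]


print(" ".join(build_command("myapp:1.0")))
```

Each instruction in the string matches one of the tasks mentioned above: RUN installs software, COPY copies files into the image, and ENV sets an environment variable.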

Multi-Stage Builds

Multi-stage builds allow a single Dockerfile to define several stages, each starting from its own base image and running its own instructions. Only the final stage becomes the resulting image, so compilers, build tools, and intermediate files used in earlier stages are left behind rather than shipped. For example, a user might build an application in one stage, and then copy only the application files into a smaller, production-ready image in a subsequent stage.
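A two-stage Dockerfile might look like the one in the sketch below, which also includes a small helper that lists the stages. The Go application, image tags, and paths are assumptions chosen for illustration.

```python
import re
from typing import Optional

# Hypothetical two-stage Dockerfile: compile in a full Go image,
# then ship only the binary in a small Alpine image.
MULTI_STAGE_DOCKERFILE = """\
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
"""

FROM_LINE = re.compile(
    r"^FROM[ \t]+(\S+)(?:[ \t]+AS[ \t]+(\S+))?[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def stages(dockerfile: str) -> list[tuple[str, Optional[str]]]:
    """Return (base image, stage name or None) for every FROM line."""
    return [(m.group(1), m.group(2)) for m in FROM_LINE.finditer(dockerfile)]


for image, name in stages(MULTI_STAGE_DOCKERFILE):
    print(image, "->", name or "(unnamed)")
```

The COPY --from=build line is what carries the compiled binary from the first stage into the final image. Nothing else from the golang image ends up in the result.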

Docker Hub

Docker Hub is a cloud-based registry for sharing Docker images. It allows users to store and distribute images, making it easy to share applications and dependencies with others. Docker Hub also provides a number of features for automating the build and deployment of images, such as automated builds and webhooks.

Docker Containers: Isolated and Portable Application Environments

Docker containers are the runtime instances of Docker images, providing isolated and portable application environments. They are lightweight, self-contained, and can be run on any system that supports Docker, making it easy to distribute and run applications in a consistent and predictable manner. In this section, we will explore the concept of Docker containers, how they provide isolated environments, and how they can be managed using Docker CLI commands.

Isolated Application Environments

Docker containers provide isolated application environments, allowing users to run applications in a consistent and predictable manner, regardless of the underlying system. This is achieved through the use of namespaces and cgroups, which provide process isolation and resource management, respectively. Namespaces provide a separate view of the system for each container, while cgroups limit the amount of resources that a container can consume.
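One way to see namespaces at work is to list the namespace identifiers of a process. The sketch below reads them from /proc, so it assumes a Linux system. The function name is made up, and on other platforms it simply returns an empty result.

```python
import os


def namespace_ids(ns_dir: str = "/proc/self/ns") -> dict[str, str]:
    """Map namespace type (pid, net, mnt, ...) to its kernel identifier.

    On Linux each entry in /proc/<pid>/ns is a symlink whose target looks
    like "pid:[4026531836]". Returns an empty dict where the directory
    does not exist (for example on macOS or Windows).
    """
    if not os.path.isdir(ns_dir):
        return {}
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry is not a readable symlink; skip it
    return ids


for name, target in namespace_ids().items():
    print(f"{name:20} {target}")
```

Running the script on the host and again inside a container should, with default settings, print different identifiers for namespaces such as pid, net, and mnt. Resource limits are configured separately through cgroups, for example with the --memory and --cpus options of docker run.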

Managing Docker Containers

Docker containers can be managed using Docker CLI commands, such as docker run, docker stop, and docker rm. These commands allow users to start, stop, and remove containers, as well as view information about running containers. Additionally, Docker provides a number of options for managing containers, such as the ability to attach to a running container, execute commands within a container, and view the logs of a container.
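The commands mentioned above fit together into a typical container lifecycle. The sketch below lists them in order. The container name web and the nginx image are placeholders, and run_all needs a working Docker installation, so it is defined here but not called.

```python
import subprocess


def lifecycle_commands(name: str, image: str) -> list[list[str]]:
    """Return the CLI calls for a typical container lifecycle, in order."""
    return [
        ["docker", "run", "-d", "--name", name, image],  # create and start in the background
        ["docker", "ps"],                                 # list running containers
        ["docker", "logs", name],                         # show the container's output
        ["docker", "exec", name, "ls", "/"],              # run a command inside it
        ["docker", "stop", name],                         # stop the container
        ["docker", "rm", name],                           # remove the stopped container
    ]


def run_all(commands: list[list[str]]) -> None:
    """Execute each command in turn, raising on the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)


for argv in lifecycle_commands("web", "nginx"):
    print(" ".join(argv))
```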

Docker Swarm: Orchestrating Containers in a Cluster

Docker Swarm is a native clustering and scheduling tool for Docker that enables the management of multiple containers across multiple hosts. It simplifies service discovery and load balancing, making it easy to deploy and manage complex applications in a clustered environment. In this section, we will explore the concept of Docker Swarm, how it enables the management of containers in a cluster, and how it simplifies service discovery and load balancing.

Managing Containers in a Cluster

Docker Swarm enables the management of multiple containers across multiple hosts, making it easy to deploy and manage complex applications in a clustered environment. A swarm consists of manager nodes and worker nodes. Managers maintain the cluster state, schedule containers onto nodes, and handle service discovery and load balancing. A cluster can have several managers for fault tolerance, and they agree on the cluster state using the Raft consensus algorithm. Workers run the containers assigned to them and report their status back to the managers.
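The commands for forming a small cluster and running a replicated service on it can be sketched as follows. The manager IP address and the join token are parameters because they differ for every cluster, and the service name web and the nginx image are placeholders.

```python
def swarm_setup(manager_ip: str, join_token: str) -> dict[str, list[str]]:
    """Return the CLI calls for a small swarm, keyed by where they run."""
    return {
        # Run once on the first manager node.
        "manager_init": ["docker", "swarm", "init", "--advertise-addr", manager_ip],
        # Run on every node that should join the cluster as a worker.
        # 2377 is the default port for swarm management traffic.
        "worker_join": ["docker", "swarm", "join", "--token", join_token, f"{manager_ip}:2377"],
        # Run on a manager: ask for three replicas of an nginx service.
        "create_service": [
            "docker", "service", "create",
            "--name", "web", "--replicas", "3", "--publish", "8080:80", "nginx",
        ],
    }


for step, argv in swarm_setup("192.0.2.10", "<worker-token>").items():
    print(f"{step}: {' '.join(argv)}")
```

The manager decides which nodes run the three replicas. The user states only the desired count.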

Service Discovery and Load Balancing

Docker Swarm simplifies service discovery and load balancing, making it easy to deploy and manage complex applications in a clustered environment. By default it assigns each service a virtual IP (VIP) and distributes connections to that address across the containers that belong to the service. Additionally, Docker Swarm provides built-in DNS-based service discovery, allowing containers to find and communicate with each other by service name, regardless of their location in the cluster.
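The idea of one stable address in front of several interchangeable containers can be illustrated with a toy model. The sketch below is not how Swarm is implemented, since the real routing happens in the Linux kernel. It only mimics the behaviour with a round-robin iterator, and every name and address in it is made up.

```python
from itertools import cycle


class ToyService:
    """Toy model of a service: one stable VIP, many interchangeable tasks."""

    def __init__(self, name: str, vip: str, task_addresses: list[str]):
        self.name = name
        self.vip = vip
        self._next_task = cycle(task_addresses)

    def route(self) -> str:
        """Pick the task that handles the next connection (round-robin)."""
        return next(self._next_task)


# Service discovery: clients look up the service by name and get its VIP.
services = {
    "web": ToyService("web", "10.0.0.5", ["10.0.0.11", "10.0.0.12", "10.0.0.13"]),
}

web = services["web"]
handled_by = [web.route() for _ in range(6)]
print(web.vip, handled_by)
```

Clients only ever see the service name and its VIP, so containers can be added, removed, or moved to another node without clients noticing.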

Docker Compose: Defining and Running Multi-Container Applications

Docker Compose is a tool for defining and running multi-container applications. It simplifies the configuration and deployment of complex applications, enabling users to define and run applications consisting of multiple containers as a single entity. In this section, we will explore the concept of Docker Compose, how it simplifies the configuration and deployment of multi-container applications, and how it integrates with Docker Swarm.

Defining Multi-Container Applications

Docker Compose enables users to define multi-container applications using a YAML file, known as a docker-compose.yml file. This file specifies the services that make up the application, including the Docker images to be used, network and volume configurations, and other settings. By defining the application in this way, users can easily manage and deploy the application as a single entity, rather than having to manage each container individually.
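A Compose file for a two-service application might look like the one in the sketch below. It is held in a Python string so that a small helper can list the services it defines. The services, image tags, password, and names are all hypothetical, and the helper is a deliberately naive parser that assumes two-space indentation rather than a real YAML reader.

```python
# Hypothetical docker-compose.yml for a web server backed by a database.
COMPOSE_FILE = """\
services:
  web:
    image: nginx:1.27
    ports:
      - "8080:80"
    networks:
      - backend
    depends_on:
      - db
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
    volumes:
      - db-data:/var/lib/postgresql/data
    networks:
      - backend

networks:
  backend:

volumes:
  db-data:
"""


def service_names(compose_text: str) -> list[str]:
    """List the keys directly under `services:` (assumes two-space indentation)."""
    names = []
    in_services = False
    for line in compose_text.splitlines():
        if line and not line.startswith(" "):
            # A new top-level key starts; track whether it is `services:`.
            in_services = line.strip() == "services:"
        elif in_services and line.startswith("  ") and not line.startswith("   "):
            names.append(line.strip().rstrip(":"))
    return names


print(service_names(COMPOSE_FILE))
```

With the file saved as docker-compose.yml, docker compose up -d starts both services together, and docker compose down stops and removes them.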

Simplified Configuration and Deployment

Docker Compose simplifies the configuration and deployment of complex applications, making it easy to define and run applications consisting of multiple containers. It provides a number of features that make it easy to manage multi-container applications, including the ability to start, stop, and rebuild the entire application with a single command, as well as the ability to easily manage networks and volumes for the application.

Integration with Docker Swarm

Compose files also work with Docker Swarm. The docker stack deploy command reads a Compose file and creates the corresponding services in a Swarm cluster. This lets users define a multi-container application once and run it in a clustered environment, taking advantage of the load balancing and service discovery features provided by Docker Swarm.

Best Practices for Designing Docker Architecture

Designing an effective Docker architecture requires careful planning and consideration. By following best practices, you can ensure that your Docker architecture is efficient, scalable, and easy to manage. In this section, we will summarize some best practices for designing Docker architecture, including using multi-stage builds, separating concerns, and implementing monitoring and logging.

Using Multi-Stage Builds

Multi-stage builds are a powerful feature of Docker that enable you to build smaller, more efficient Docker images. By using multiple build stages, you can separate the build environment from the runtime environment, reducing the size of the final image and improving security. When designing your Docker architecture, consider using multi-stage builds to improve the efficiency and security of your Docker images.

Separating Concerns

Separating concerns is a fundamental principle of good software design, and it applies to Docker architecture as well. Each component of your Docker architecture should have a single, well-defined responsibility. In practice this usually means running one service per container, for example a web server in one container and its database in another, rather than bundling them together. This makes the architecture easier to maintain over time, and it lets each component be scaled, updated, or replaced independently.

Implementing Monitoring and Logging

Monitoring and logging are critical components of any production-ready Docker architecture. By implementing monitoring and logging, you can gain insights into the performance and health of your Docker architecture, making it easier to troubleshoot issues and optimize performance. When designing your Docker architecture, consider implementing monitoring and logging from the outset to ensure that you have the necessary visibility into your system.
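As a small starting point, resource usage can be read from the docker stats command, which prints one JSON object per container when run as docker stats --no-stream --format "{{json .}}". The sketch below parses output of that shape and flags busy containers. The sample data is made up, the field names Name, CPUPerc, and MemPerc are assumed to match that output, and the 80 percent threshold is arbitrary.

```python
import json

# Made-up sample in the shape of `docker stats --no-stream --format "{{json .}}"`.
SAMPLE_STATS = """\
{"Name": "web", "CPUPerc": "1.25%", "MemPerc": "3.10%"}
{"Name": "db", "CPUPerc": "87.40%", "MemPerc": "42.00%"}
"""


def busy_containers(stats_output: str, cpu_threshold: float) -> list[str]:
    """Return names of containers whose CPU usage exceeds the threshold (in percent)."""
    busy = []
    for line in stats_output.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        entry = json.loads(line)
        cpu = float(entry["CPUPerc"].rstrip("%"))
        if cpu > cpu_threshold:
            busy.append(entry["Name"])
    return busy


print(busy_containers(SAMPLE_STATS, cpu_threshold=80.0))
```

For logs, docker logs shows the output of a single container. Production setups typically forward logs and metrics to a dedicated monitoring system instead of polling the CLI.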
