An Overview of Docker and Its Role in Software Development

Docker is a platform for building, shipping, and running applications in containers. Containers, the fundamental building blocks of Docker, allow applications to be packaged with their dependencies and configurations, ensuring consistent execution across various computing environments. This portability and rapid deployment capability have made Docker a popular choice for modern software development.

Docker’s relevance extends beyond containerization, playing a significant role in microservices, DevOps, and cloud computing. By packaging services as containers, developers can build and manage microservices-based applications more efficiently. Docker’s tight integration with DevOps practices, such as continuous integration and continuous delivery (CI/CD), streamlines the development lifecycle, allowing for faster iterations and feedback.

Moreover, Docker’s compatibility with cloud computing platforms enables seamless application migration, scalability, and resource optimization. As a result, Docker has become an essential tool for businesses and developers seeking to build, deploy, and manage applications in a scalable, efficient, and consistent manner.

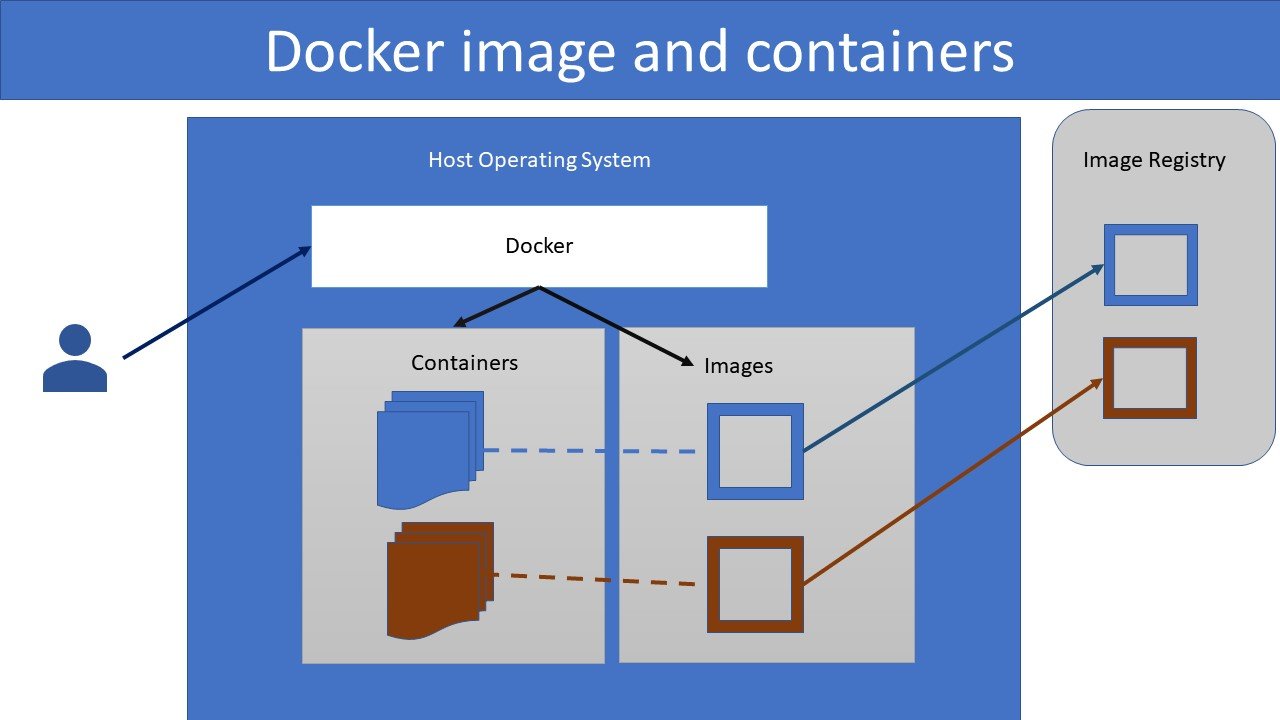

The Architecture of Docker: Understanding Containers and Images

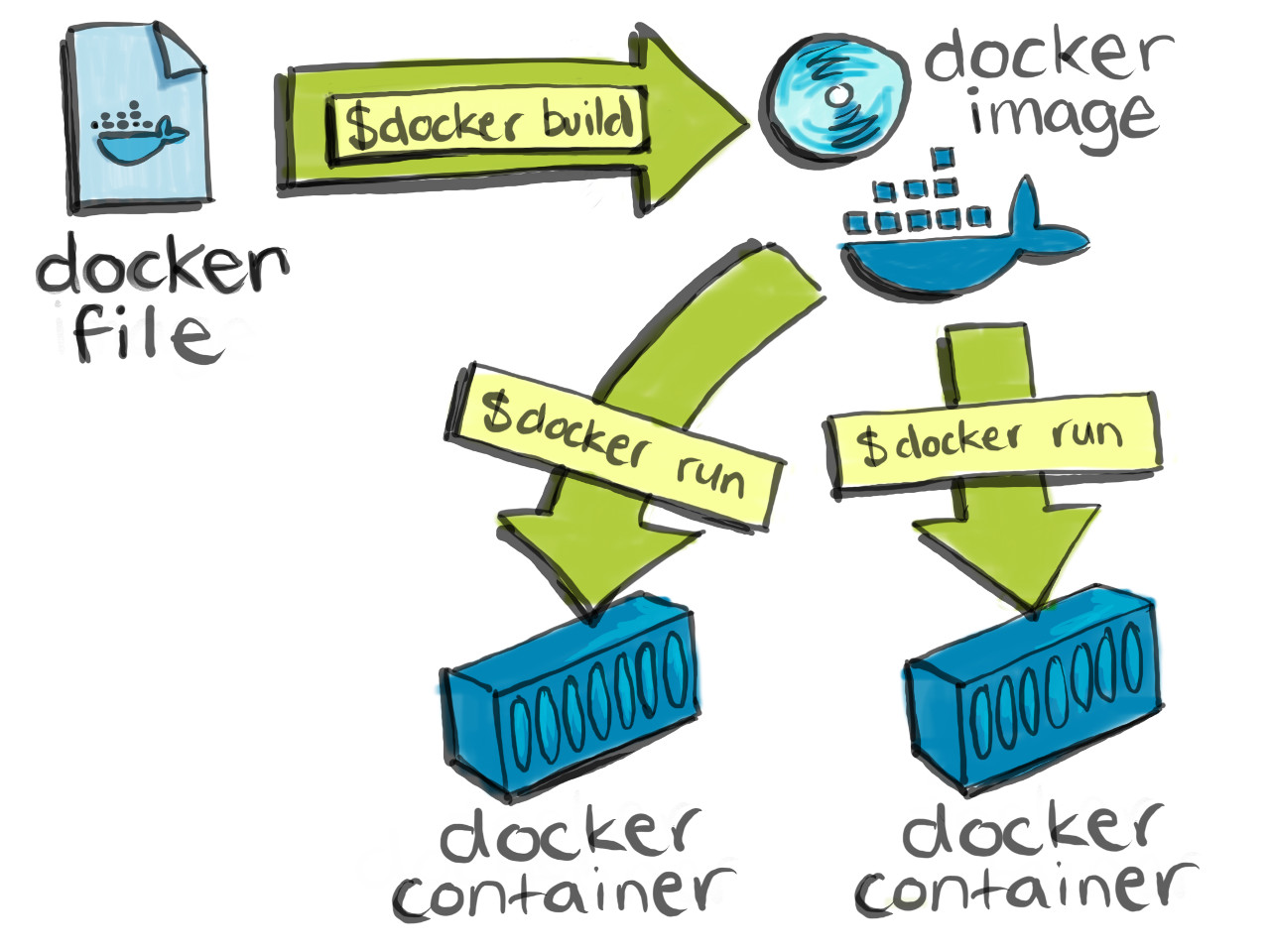

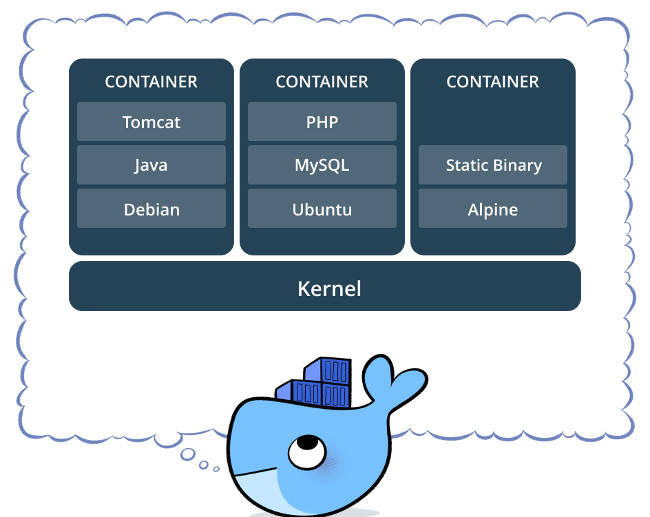

Docker’s architecture revolves around two core concepts: containers and images. An image is a lightweight, standalone, and executable package that includes an application and its dependencies, such as runtime, libraries, and system tools. Containers are instances of images, providing an isolated and portable environment for running applications.

Dockerfiles, plain-text configuration files, define how images are built. They consist of instructions, such as specifying a base image, copying files, installing dependencies, and setting environment variables. Once a Dockerfile is created, the docker build command is used to build an image, which can then be run as a container.

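Containers differ from virtual machines (VMs) in several ways. While VMs virtualize the entire hardware stack and run a full guest operating system, containers share the host system's OS kernel and therefore use fewer resources. This difference makes containers more lightweight and faster to start than VMs. Containers are also highly portable: an image runs the same way on any host with a compatible kernel and CPU architecture. A Linux image runs on any Linux machine with Docker installed, and on macOS and Windows, Docker Desktop supplies a lightweight Linux VM to run it.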
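To see the shared-kernel model on a Linux host, the sketch below prints the kernel release and the namespace IDs of the current shell. Namespaces, together with cgroups, are the kernel features Docker uses to isolate containers instead of a hypervisor. The script does not call Docker itself and assumes a Linux `/proc` filesystem.

```shell
#!/bin/sh
set -eu
# Kernel release of the host. A container on this host reports the same value,
# because it runs on the host's kernel instead of booting its own.
echo "kernel: $(uname -r)"

# Namespace IDs of this shell. A containerized process gets its own
# pid, mnt, net, uts, and ipc namespaces, so these IDs differ inside a container.
for ns in pid mnt net uts ipc; do
  echo "$ns: $(readlink "/proc/$$/ns/$ns")"
done
```

Running the equivalent inside a container, for example `docker run --rm alpine sh -c 'uname -r; readlink /proc/self/ns/pid'`, should print the same kernel release as the host but a different PID namespace ID. With Docker Desktop on macOS or Windows, Linux containers share the kernel of Docker Desktop's Linux VM rather than the host OS kernel.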

Getting Started with Docker: Installation and Basic Commands

To begin using Docker, you must first install it on your preferred operating system. Here is a step-by-step guide for installing Docker on popular platforms:

- Linux: Install Docker using the package manager for your distribution, such as `apt` for Ubuntu or `yum` for CentOS.

- macOS: Download and install Docker Desktop for macOS from the official Docker website.

- Windows: Download and install Docker Desktop for Windows, ensuring your system meets the minimum requirements: a 64-bit edition of Windows 10 or 11 with either the WSL 2 backend or, on Pro, Enterprise, and Education editions, Hyper-V enabled. Check Docker's documentation for the current minimum build numbers.

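After installation, verify that the Docker client is installed by executing the following command:

```shell
$ docker --version
```

This command only reports the client version. To confirm that the Docker daemon is running as well, run `docker run hello-world`, which pulls a small test image and prints a greeting from a new container.

To manage Docker images, containers, and networks, familiarize yourself with these basic commands:

- `docker images`: List all Docker images on your system.

- `docker pull <image>`: Pull an image from a Docker registry, such as Docker Hub.

- `docker run <image>`: Create and start a container from an image.

- `docker ps`: List all running containers (add `-a` to include stopped ones).

- `docker stop <container>`: Stop a running container.

- `docker rm <container>`: Remove a container from your system.

- `docker network ls`: List all Docker networks.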

These commands are just the beginning of Docker’s powerful command-line interface. As you progress in your Docker journey, explore additional commands and flags to enhance your productivity.

Building Docker Images: A Practical Walkthrough

Creating a Dockerfile is the first step in building a custom Docker image. A Dockerfile is a script containing instructions for building an image. Here’s a simple example of a Dockerfile for a Node.js application:

```dockerfile
# Use an official Node.js runtime as the base image
FROM node:20

# Set the working directory in the container
WORKDIR /app

# Copy package.json and package-lock.json to the working directory
COPY package*.json ./

# Install any needed packages specified in package.json
RUN npm install

# Bundle the app source inside the Docker image
COPY . .

# Document that the app listens on port 8080 (EXPOSE alone does not publish the port)
EXPOSE 8080

# Define the command to run the app
CMD [ "npm", "start" ]
```

To build the image, navigate to the directory containing the Dockerfile and execute the following command:

```shell
$ docker build -t my-node-app .
```

This command builds an image with the tag `my-node-app`. To run the container and publish port 8080 on the host, execute:

```shell
$ docker run -p 8080:8080 my-node-app
```
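The walkthrough assumes an existing Node.js project. If you want something to build right away, the sketch below creates a hypothetical minimal app: the directory name, `server.js`, and the response text are illustrative. Its `start` script matches the Dockerfile's `CMD`, it listens on the port the Dockerfile exposes, and a `.dockerignore` keeps `COPY . .` from copying a local `node_modules` folder into the image.

```shell
#!/bin/sh
set -eu
# Hypothetical project directory for the walkthrough
app=my-node-app
mkdir -p "$app"

# package.json: "npm start" (the Dockerfile's CMD) runs server.js
cat > "$app/package.json" <<'EOF'
{
  "name": "my-node-app",
  "version": "1.0.0",
  "private": true,
  "scripts": {
    "start": "node server.js"
  }
}
EOF

# server.js: minimal HTTP server on port 8080, the port the Dockerfile EXPOSEs.
# listen() without a host argument binds all interfaces, which a published port needs.
cat > "$app/server.js" <<'EOF'
const http = require("http");

http
  .createServer((req, res) => {
    res.writeHead(200, { "Content-Type": "text/plain" });
    res.end("Hello from a container\n");
  })
  .listen(8080, () => console.log("listening on 8080"));
EOF

# .dockerignore: keep local dependencies and VCS data out of the build context
cat > "$app/.dockerignore" <<'EOF'
node_modules
npm-debug.log
.git
EOF
```

Place the Dockerfile in `my-node-app/`, then from that directory run `docker build -t my-node-app .` and `docker run -p 8080:8080 my-node-app`. A request to `http://localhost:8080` should then return the greeting.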

Docker Compose: Orchestrating Multi-Container Applications

Docker Compose is a powerful tool for managing multi-container applications. It simplifies the process of defining, setting up, and running applications consisting of multiple containers. By using a YAML file, the docker-compose.yml, you can configure services, networks, and volumes for your application.

To get started with Docker Compose, follow these steps:

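- Make sure Docker Compose is installed. Docker Desktop includes it; on Linux, install the Docker Compose plugin (or the older standalone `docker-compose` binary).

- Create a `docker-compose.yml` file in the root directory of your project.

- Define your services, networks, and volumes in the YAML file.

- Run `docker compose up` (or `docker-compose up` with the standalone binary) to start your application.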

Here’s an example of a simple docker-compose.yml file for a web application with separate frontend and backend services:

```yaml
services:
  frontend:
    build: ./frontend
    ports:
      - "3000:3000"
    networks:
      - my-network

  backend:
    build: ./backend
    ports:
      - "8080:8080"
    networks:
      - my-network

networks:
  my-network:
```

In this example, the `docker-compose.yml` file defines two services, `frontend` and `backend`, which are built using Dockerfiles located in the `./frontend` and `./backend` directories, respectively. The `ports` section maps the host ports to the container ports, and the `networks` section sets up a custom network for the services to communicate with each other. On that network, each service can reach the other by its service name, so the frontend can call the backend at `http://backend:8080`.

Docker Compose offers several benefits for managing multi-container applications, including:

- Simplified configuration and management of services.

- Efficient resource allocation and orchestration.

- Streamlined deployment and scaling of applications.

- Consistent environment setup and teardown.

By mastering Docker Compose, you can efficiently manage complex multi-container applications and streamline your development workflow.

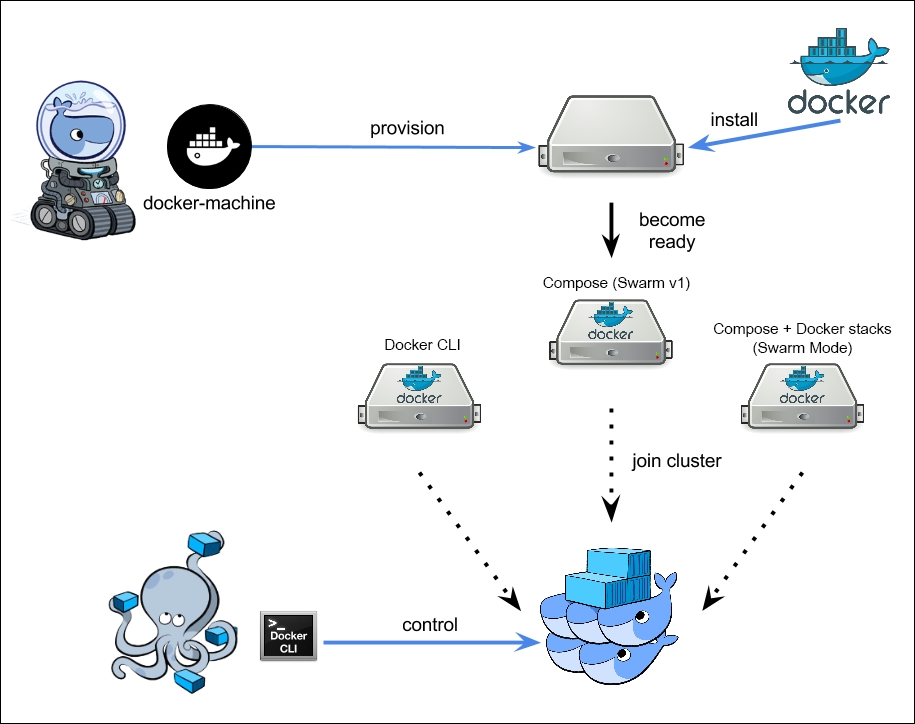

Docker Swarm and Kubernetes: Scaling and Managing Container Clusters

As applications grow, managing and scaling container deployments becomes increasingly complex. Docker Swarm and Kubernetes are two popular platforms that simplify this process, offering features for container orchestration, scaling, and management. Understanding the differences between these two platforms can help you choose the right one for your needs.

Docker Swarm

Docker Swarm is a native Docker clustering and scheduling tool. It is tightly integrated with Docker, making it easy to set up and manage. Docker Swarm offers several features, including:

- Simple setup and management through the Docker command-line interface.

- Integration with Docker Compose for managing multi-container applications.

- Automated service discovery and load balancing.

- Built-in encryption for communication between nodes.

Docker Swarm is best suited for smaller-scale deployments or projects that require tight integration with Docker. However, it may not offer the same level of scalability and advanced features as Kubernetes.
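As a sketch of the Compose integration, the script below writes a hypothetical stack file; the service name `web`, the `nginx:1.25-alpine` image, and the replica count are illustrative. Unlike `docker compose`, `docker stack deploy` does not build images, so each service needs a pre-built `image:`. The `deploy` section tells Swarm how many replicas to schedule.

```shell
#!/bin/sh
set -eu
# Hypothetical Swarm stack: three nginx replicas behind one published port.
# Swarm's routing mesh load-balances host port 8080 across the replicas.
# docker stack deploy reads the version 3 Compose file format.
cat > stack.yml <<'EOF'
version: "3.8"
services:
  web:
    image: nginx:1.25-alpine
    ports:
      - "8080:80"
    deploy:
      replicas: 3
      restart_policy:
        condition: on-failure
EOF
```

On the node that will act as manager, `docker swarm init` creates a single-node swarm and `docker stack deploy -c stack.yml demo` deploys the stack. Once the tasks start, `docker service ls` should list `demo_web` with 3/3 replicas.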

Kubernetes

Kubernetes is an open-source platform for container orchestration, automation, and management. It offers a more extensive set of features compared to Docker Swarm, including:

- Advanced auto-scaling capabilities based on resource usage and custom metrics.

- Support for rolling updates and rollbacks.

- Extensive customization options through a rich ecosystem of plugins and extensions.

- Integration with popular cloud providers and on-premises infrastructure.

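Kubernetes is ideal for large-scale deployments and projects requiring advanced features, customization, and scalability. However, its steeper learning curve and more complex setup process can make it more demanding to adopt than Docker Swarm, particularly for smaller teams and projects.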
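To illustrate rolling updates and rollbacks, the sketch below writes a hypothetical Deployment manifest; the name `web`, its labels, the image tag, and the replica count are illustrative. The `RollingUpdate` strategy replaces pods gradually, and with `maxUnavailable: 0` no old pod is removed until its replacement is ready.

```shell
#!/bin/sh
set -eu
# Hypothetical Deployment: 3 nginx replicas, updated one pod at a time.
cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0   # keep all 3 replicas available during an update
      maxSurge: 1         # create at most one extra pod at a time
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25-alpine
          ports:
            - containerPort: 80
EOF
```

`kubectl apply -f deployment.yaml` creates the Deployment. `kubectl set image deployment/web web=nginx:1.26-alpine` then triggers a rolling update, `kubectl rollout status deployment/web` follows its progress, and `kubectl rollout undo deployment/web` rolls back to the previous revision.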

Docker Security Best Practices: Protecting Your Applications and Infrastructure

Security is a critical aspect of any software development process, and Docker is no exception. Implementing best practices and following security guidelines can help protect your applications and infrastructure from potential threats. Here are some Docker security best practices to consider:

User Namespaces

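User namespaces let you map the users inside a container to different, unprivileged users on the host. By default, processes in a Docker container run as root, and without user namespace remapping that UID 0 is the same UID 0 as on the host, which poses a security risk if the container is compromised. Enabling the Docker daemon's `userns-remap` option maps container root to an unprivileged UID range on the host. Independently, you can reduce the attack surface by running the application as a non-root user inside the container, set with the Dockerfile `USER` instruction.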
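A minimal sketch of the in-image part of this advice, assuming the Node.js app from the walkthrough and the official `node` images, which ship with an unprivileged `node` user. The file name `Dockerfile.nonroot` is hypothetical. Dependencies are installed before the `USER` switch, and the application process then runs as `node` instead of root.

```shell
#!/bin/sh
set -eu
# Hypothetical non-root variant of the walkthrough's Dockerfile
cat > Dockerfile.nonroot <<'EOF'
FROM node:20
WORKDIR /app
# Copy the manifests owned by the unprivileged "node" user
COPY --chown=node:node package*.json ./
# Dependencies are installed as root, because USER has not been switched yet
RUN npm install
COPY --chown=node:node . .
# Later instructions and the running container use the "node" user
USER node
EXPOSE 8080
CMD ["npm", "start"]
EOF
```

Build it with `docker build -f Dockerfile.nonroot -t my-node-app:nonroot .`. Running `docker run --rm my-node-app:nonroot id -u` should then print a non-zero UID (1000 for the `node` user in the official images) rather than `0`.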

Capabilities

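Capabilities are a feature of the Linux kernel that split root's privileges into distinct units you can grant or withhold per process. Docker does not give containers every capability: by default it grants a restricted subset (such as `CHOWN`, `NET_BIND_SERVICE`, and `SETUID`), and only containers started with `--privileged` receive the full set. You can reduce the attack surface further by dropping the capabilities your containers do not need with `--cap-drop` and adding back only the required ones with `--cap-add`.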
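On Linux, a process's effective capabilities appear as a hex bitmask on the `CapEff:` line of `/proc/<pid>/status`. The sketch below reads that mask for the current shell, so you can run it on the host or inside a container and compare the results. It does not call Docker itself.

```shell
#!/bin/sh
set -eu
# Effective capability bitmask (hex) of this shell, read from procfs (Linux only)
capeff=$(awk '/^CapEff:/ {print $2}' "/proc/$$/status")
echo "CapEff: $capeff"

# Each set bit is one capability; an all-zero mask means none are held
if [ "$((0x$capeff))" -eq 0 ]; then
  echo "this process holds no capabilities"
else
  echo "this process holds at least one capability"
fi
```

Inside `docker run --rm --cap-drop ALL alpine grep CapEff /proc/self/status` the mask should be all zeros, while a container started without `--cap-drop` shows a non-zero mask for Docker's default set. A common pattern is `--cap-drop ALL` followed by `--cap-add` for the one or two capabilities a service actually needs.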

SELinux

Security-Enhanced Linux (SELinux) is a security architecture for Linux systems that provides a mechanism for supporting access control security policies. Docker supports SELinux, allowing you to enforce additional security policies on your containers and limit their access to specific resources.

Regularly Updating Docker and Its Components

Regularly updating Docker and its components is essential for maintaining security. New vulnerabilities are discovered frequently, and updates often include patches and fixes for these issues. Make sure to keep your Docker installation and all related components up-to-date.

Securing Docker Registries

Docker registries store and distribute Docker images. Ensuring the security of your registry is crucial for maintaining the integrity of your applications and infrastructure. Implementing authentication, encryption, and access controls can help secure your Docker registry.

Managing Secrets

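Managing secrets, such as API keys, passwords, and certificates, is an essential aspect of Docker security. Avoid hardcoding secrets in your Dockerfiles or application code: values set with `ENV` or passed as build arguments can be recovered from the image or its history. Instead, supply secrets at run time. Environment variables are the simplest option but are visible in `docker inspect` output, so for sensitive values prefer a secret management solution or secrets mounted into the container as files.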
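As one sketch of the file-based approach, Docker Compose can mount a secret into a container as a file under `/run/secrets/` instead of passing it as an environment variable. This keeps the value out of the image and out of `docker inspect` output. The service name `backend`, the secret name `db_password`, the `DB_PASSWORD_FILE` variable, and the placeholder value are all hypothetical.

```shell
#!/bin/sh
set -eu
# Create the secret file readable only by the current user (placeholder value)
umask 077
printf '%s' 'change-me' > db_password.txt

# Hypothetical Compose file: the backend service receives the secret as a file
cat > compose.secrets.yml <<'EOF'
services:
  backend:
    build: ./backend
    secrets:
      - db_password
    environment:
      # Hypothetical variable: tells the app where to read the secret, not its value
      DB_PASSWORD_FILE: /run/secrets/db_password
secrets:
  db_password:
    file: ./db_password.txt
EOF
```

Start it with `docker compose -f compose.secrets.yml up`, and keep `db_password.txt` out of version control. Without Swarm, Compose bind-mounts the secret file from the host, so the user the container runs as must be able to read it.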

By following these best practices, you can help ensure the security and integrity of your Docker-based applications and infrastructure.

Integrating Docker with Continuous Integration and Continuous Deployment (CI/CD) Workflows

Docker’s containerization technology offers numerous benefits for modern software development, including consistency, reproducibility, and faster deployment. Integrating Docker with Continuous Integration and Continuous Deployment (CI/CD) workflows can help streamline development processes and improve collaboration among development teams.

Benefits of Using Docker in CI/CD Pipelines

Using Docker in CI/CD pipelines offers several benefits, including:

- Consistency: Docker containers provide a consistent environment for building, testing, and deploying applications, reducing the risk of environment-specific issues.

- Reproducibility: Docker images capture the exact dependencies and configurations required for an application, ensuring that builds and deployments are reproducible across different environments.

- Faster Deployment: Docker containers can be started and stopped quickly, allowing for faster deployment and scaling of applications.

Integrating Docker with Popular CI/CD Tools

Docker can be integrated with various CI/CD tools, such as Jenkins, GitLab CI, and CircleCI. Here are some examples of how to integrate Docker with these popular CI/CD tools:

- Jenkins: Jenkins offers a Docker plugin that allows you to build, test, and deploy Docker-based applications. You can use the plugin to automate the process of building Docker images, pushing them to a registry, and deploying them to a container runtime.

- GitLab CI: GitLab CI provides built-in Docker support, allowing you to define Docker-based CI/CD pipelines in a `.gitlab-ci.yml` file. You can use GitLab's container registry to store Docker images and automate the process of building, testing, and deploying Docker-based applications.

- CircleCI: CircleCI also provides built-in Docker support, allowing you to define Docker-based CI/CD pipelines in a `.circleci/config.yml` file. You can push the resulting images to a registry such as Docker Hub and automate the process of building, testing, and deploying Docker-based applications.
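As a sketch of the GitLab CI integration, the script below writes a minimal `.gitlab-ci.yml` that builds an image with Docker-in-Docker and pushes it to the project's GitLab container registry. It relies on GitLab's predefined variables (`CI_REGISTRY`, `CI_REGISTRY_USER`, `CI_REGISTRY_PASSWORD`, `CI_REGISTRY_IMAGE`, `CI_COMMIT_SHORT_SHA`). The job name and the `docker:24` image tags are assumptions to adapt to your runner.

```shell
#!/bin/sh
set -eu
# Minimal GitLab CI job: build the image and push it tagged with the commit SHA.
# The quoted 'EOF' keeps $CI_* unexpanded so GitLab substitutes them at run time.
cat > .gitlab-ci.yml <<'EOF'
build-image:
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    - docker build -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
EOF
```

Docker-in-Docker needs a runner configured in privileged mode. Tagging with the commit SHA ties every pushed image to the exact source that produced it, which supports the reproducibility benefit described above.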

By integrating Docker with CI/CD workflows, you can improve the efficiency and reliability of your software development processes, ensuring that your applications are consistently built, tested, and deployed across different environments.