Ingress: A Term Unveiled

Ingress is a term that originates from the Latin word ‘ingressus,’ which means ‘a going in.’ In networking and technology, ingress refers to traffic entering a system or network, and to the point at which that traffic enters. It is a central concept in managing network traffic flow and keeping network operations secure and efficient.

The term ‘ingress’ has become increasingly relevant with the rise of cloud computing, containerization, and microservices architecture. As a result, understanding the definition and function of ingress is essential for network administrators, DevOps engineers, and IT professionals who seek to optimize network performance and security.

Ingress in Networking: A Deeper Dive

In practice, ingress is handled by a component that sits at the edge of a network and decides what happens to each incoming connection. That component is where traffic flow, security, and performance are managed for everything that enters.

At a deeper level, ingress is responsible for routing incoming network traffic to the appropriate services or applications within a network. It acts as a reverse proxy, terminating external connections and forwarding the requests to the relevant backend services. By doing so, ingress helps to distribute network traffic efficiently across backends and gives clients a single, stable entry point.
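The routing step described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular proxy is implemented: the routing table, hostnames, and backend addresses are all hypothetical, and matching is a plain string prefix check.

```python
from typing import Optional

# Hypothetical routing table: (host, path prefix) -> backend address.
ROUTES = {
    ("shop.example.com", "/"): "storefront:8080",
    ("shop.example.com", "/api"): "shop-api:9000",
    ("blog.example.com", "/"): "blog:8080",
}


def pick_backend(host: str, path: str) -> Optional[str]:
    """Return the backend for a request, preferring the longest matching prefix."""
    candidates = [
        (prefix, backend)
        for (route_host, prefix), backend in ROUTES.items()
        if route_host == host and path.startswith(prefix)
    ]
    if not candidates:
        return None  # no rule matched; a real proxy would typically answer 404
    # Longest prefix wins, so "/api/orders" goes to the API, not the storefront.
    return max(candidates, key=lambda item: len(item[0]))[1]


if __name__ == "__main__":
    print(pick_backend("shop.example.com", "/api/orders"))
    print(pick_backend("shop.example.com", "/cart"))
    print(pick_backend("unknown.example.com", "/"))
```

The client only ever talks to the proxy; which backend serves the request is decided entirely by the host and path it asked for.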

Moreover, ingress controllers provide additional features such as load balancing, SSL termination, and access control. These features enhance network security, improve reliability, and ensure high availability of services. As a result, ingress has become an essential component of modern networking architectures, including cloud computing, containerization, and microservices.
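As a rough illustration of the load balancing feature mentioned above, the following sketch rotates requests across backends and skips any that have been marked unhealthy. Round robin is only one of several common strategies, and the class and backend names here are hypothetical.

```python
from typing import List


class RoundRobinBalancer:
    """Rotate through backends in order, skipping ones marked as down."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._next = 0

    def mark_down(self, backend: str) -> None:
        self._healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self._backends:
            self._healthy.add(backend)

    def pick(self) -> str:
        # Try each backend at most once per call, starting where we left off.
        for _ in range(len(self._backends)):
            backend = self._backends[self._next]
            self._next = (self._next + 1) % len(self._backends)
            if backend in self._healthy:
                return backend
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["pod-a", "pod-b", "pod-c"])
    print([lb.pick() for _ in range(4)])
    lb.mark_down("pod-b")
    print([lb.pick() for _ in range(3)])
```

Skipping unhealthy backends is what ties load balancing to availability: a failed instance stops receiving traffic without clients having to change anything.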

How Ingress Controllers Operate: A Practical Approach

Ingress controllers are the software components that put ingress rules into effect. In Kubernetes, a controller watches the cluster’s Ingress resources and configures a reverse proxy to match them, so that external connections are terminated at the proxy and requests are forwarded to the relevant backend services.

There are several popular ingress controllers available in the market, each with its unique features and capabilities. Some of the most commonly used ingress controllers include NGINX, Traefik, and HAProxy.

NGINX is a widely used open-source web server and reverse proxy, and NGINX-based ingress controllers provide features such as load balancing, SSL/TLS termination, and access control. They are highly customizable and support a wide range of modules.

Traefik is another popular open-source ingress controller that provides automatic service discovery, load balancing, and SSL termination. It is known for its simplicity and ease of use, making it an ideal choice for small to medium-sized deployments.

HAProxy is a high-performance open-source load balancer and proxy that can also serve as an ingress controller, providing load balancing, SSL/TLS termination, and access control. It is highly scalable and supports a wide range of protocols and features, making it a common choice for large-scale deployments.

Ingress vs. Egress: A Crucial Distinction

Ingress and egress are two critical concepts in networking that often get confused with each other. While both ingress and egress refer to the flow of network traffic, they differ in their function and direction.

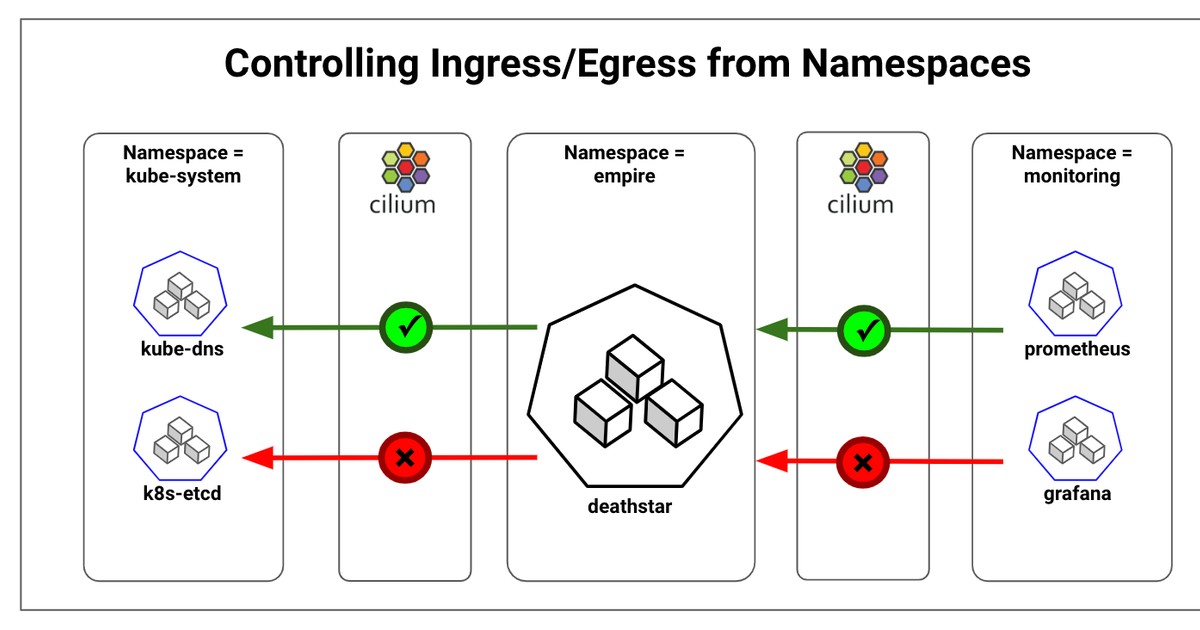

Ingress refers to the entry point of network traffic into a system or network. It is responsible for managing and controlling the flow of incoming network traffic, ensuring security, and optimizing network performance. In contrast, egress refers to the exit point of network traffic from a system or network. It is responsible for managing and controlling the flow of outgoing network traffic, ensuring security, and complying with regulatory requirements.

Understanding the distinction between ingress and egress is crucial in managing network traffic, ensuring security, and complying with regulatory requirements. For instance, organizations must implement strict access control policies for ingress traffic to prevent unauthorized access and data breaches. Similarly, organizations must implement data loss prevention (DLP) policies for egress traffic to prevent data exfiltration and comply with regulatory requirements.

Moreover, ingress and egress traffic are usually handled at different points and by different components. Ingress traffic typically arrives through a small number of well-defined entry points, such as a load balancer or an ingress controller, where it is authenticated, routed, and balanced across backends. Egress traffic typically leaves through a NAT gateway, proxy, or firewall, where destinations are filtered and outbound data is inspected. As a result, ingress handling tends to focus on routing and load balancing, while egress handling tends to focus on filtering and auditing.
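To make the direction-based distinction concrete, here is a small sketch that labels a flow as ingress or egress from its source and destination addresses. The internal address range is a hypothetical example, and real networks usually have more than one range to consider.

```python
import ipaddress

# Hypothetical address range of "our" network.
INTERNAL_NETWORK = ipaddress.ip_network("10.0.0.0/16")


def classify(src: str, dst: str) -> str:
    """Label a flow by direction relative to INTERNAL_NETWORK."""
    src_inside = ipaddress.ip_address(src) in INTERNAL_NETWORK
    dst_inside = ipaddress.ip_address(dst) in INTERNAL_NETWORK
    if dst_inside and not src_inside:
        return "ingress"   # coming in from outside
    if src_inside and not dst_inside:
        return "egress"    # leaving for the outside
    if src_inside and dst_inside:
        return "internal"  # never crosses the boundary
    return "external"      # neither endpoint is ours


if __name__ == "__main__":
    print(classify("203.0.113.7", "10.0.1.5"))
    print(classify("10.0.1.5", "198.51.100.9"))
```

Access control policies would then apply to flows labeled ingress, and data loss prevention policies to flows labeled egress.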

Implementing Ingress in Kubernetes: A Step-by-Step Guide

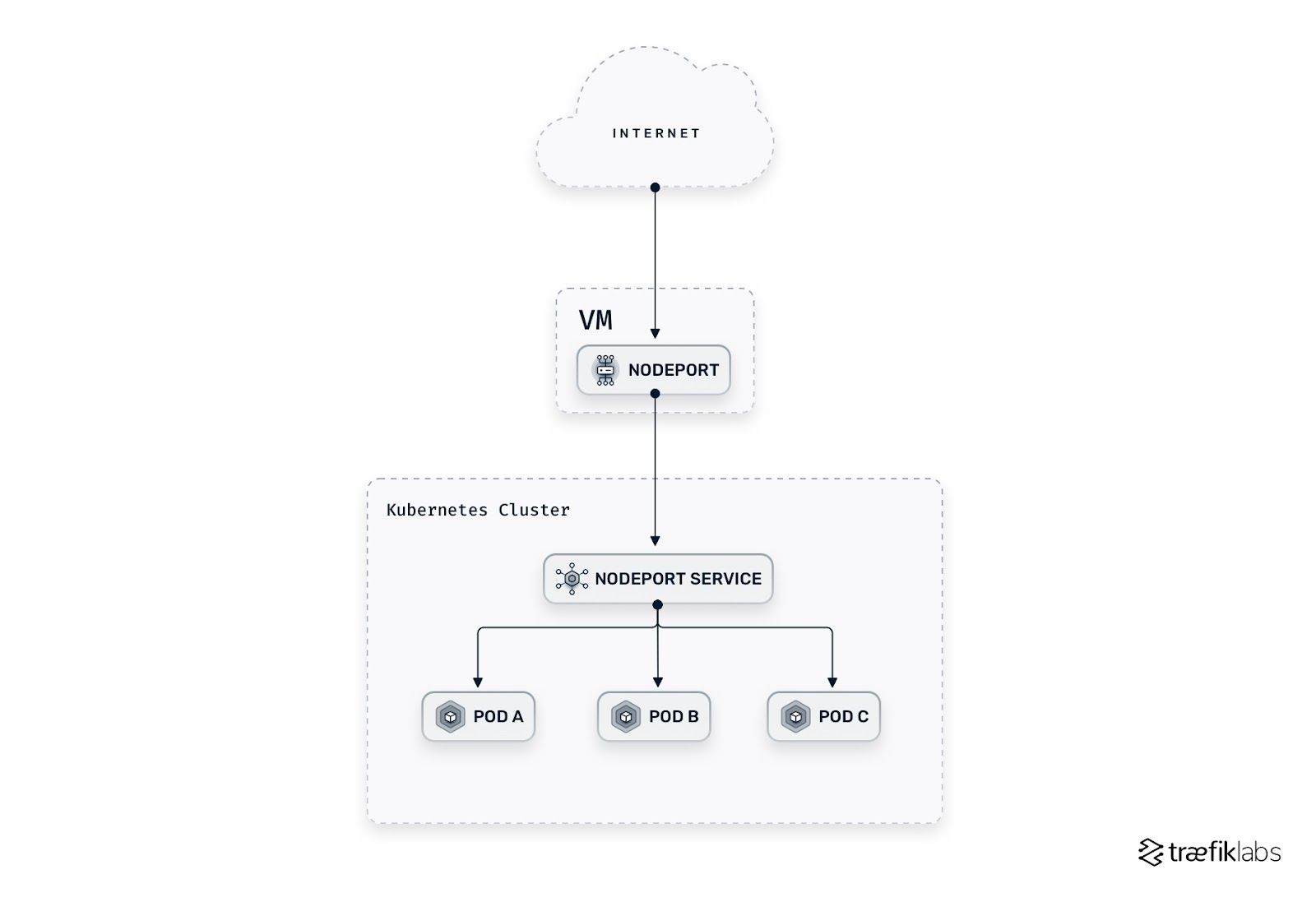

Kubernetes is an open-source container orchestration platform that enables organizations to automate the deployment, scaling, and management of containerized applications. In Kubernetes, Ingress is an API resource that manages external access, typically over HTTP and HTTPS, to services running within a cluster.

Implementing ingress in Kubernetes involves several steps, including installing an ingress controller, defining ingress resources, and configuring access control policies. Here’s a step-by-step guide to implementing ingress in Kubernetes:

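Install an ingress controller: The first step in implementing ingress in Kubernetes is to install an ingress controller, because an Ingress resource has no effect until a controller is running to act on it. Popular ingress controllers for Kubernetes include those based on NGINX, Traefik, and HAProxy.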

Define ingress resources: Once the ingress controller is installed, the next step is to define ingress resources. Ingress resources define the rules for routing incoming network traffic to the appropriate services within the Kubernetes cluster.

Configure access control policies: After defining ingress resources, the next step is to configure access control policies. Access control policies ensure that only authorized users and applications can access the services within the Kubernetes cluster.

Here’s an example of an ingress resource definition in Kubernetes:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
      annotations:
        # Specific to the NGINX ingress controller.
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
        - host: my-service.example.com
          http:
            paths:
              - pathType: Prefix
                path: "/"
                backend:
                  service:
                    name: my-service
                    port:
                      number: 80

In this example, the ingress resource defines a rule that routes requests for the host “my-service.example.com” to port 80 of the “my-service” service within the Kubernetes cluster. The “rewrite-target” annotation, which is specific to the NGINX ingress controller, sets the path that the request is rewritten to before it is forwarded to the service.

By following these steps, organizations can implement ingress in Kubernetes and provide external access to services running within the cluster.
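The example resource uses “pathType: Prefix”. As a hedged sketch of how that kind of matching behaves, based on the Kubernetes documentation’s description that Prefix paths are compared element by element after splitting on “/”, the helper below is an illustration only and not the code any controller actually runs.

```python
from typing import List


def _elements(path: str) -> List[str]:
    """Split a URL path into its non-empty segments."""
    return [part for part in path.split("/") if part]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """True if every segment of rule_path is a leading segment of request_path."""
    rule = _elements(rule_path)
    request = _elements(request_path)
    return request[: len(rule)] == rule


if __name__ == "__main__":
    for rule, request in [("/", "/anything"), ("/foo", "/foo/bar"), ("/foo", "/foobar")]:
        print(rule, request, prefix_matches(rule, request))
```

The point of comparing whole segments is that a rule for “/foo” covers “/foo/bar” but not “/foobar”, which a plain string prefix check would get wrong.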

Securing Ingress: Best Practices and Recommendations

Ingress is a critical component of modern network architectures, providing external access to services and applications within a network. As such, securing ingress is essential to prevent unauthorized access, data breaches, and other security threats. Here are some best practices and recommendations for securing ingress:

Implement access control policies: Access control policies ensure that only authorized users and applications can access the services and applications within a network. Implementing access control policies for ingress traffic is essential to prevent unauthorized access and data breaches.

Use SSL/TLS encryption: SSL/TLS encryption provides an additional layer of security for ingress traffic, encrypting data in transit and preventing eavesdropping and data tampering. Implementing SSL/TLS encryption for ingress traffic is essential to prevent man-in-the-middle attacks and other security threats.

Implement rate limiting: Rate limiting restricts how much traffic a client can send into a network over a given period, which helps to mitigate denial-of-service (DoS) attacks and abusive clients. Implementing rate limiting for ingress traffic is essential to prevent network congestion and ensure network availability.

Use network segmentation: Network segmentation separates a network into smaller segments, reducing the attack surface and preventing lateral movement by attackers. Implementing network segmentation for ingress traffic is essential to prevent attackers from moving laterally within a network and accessing sensitive data.

Implement logging and monitoring: Logging and monitoring provide visibility into network traffic and enable organizations to detect and respond to security threats in real-time. Implementing logging and monitoring for ingress traffic is essential to detect and respond to security threats and prevent data breaches.
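For the SSL/TLS recommendation above, the sketch below builds an Ingress manifest with a “tls” section and prints it as JSON, which kubectl accepts as well as YAML. The secret name “my-tls-secret” is hypothetical and would need to exist in the cluster as a TLS secret, and the “force-ssl-redirect” annotation assumes the NGINX ingress controller is in use.

```python
import json


def tls_ingress(name: str, host: str, service: str, port: int, tls_secret: str) -> dict:
    """Build an Ingress manifest that terminates TLS for one host."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # Assumes the NGINX ingress controller: redirect HTTP to HTTPS.
                "nginx.ingress.kubernetes.io/force-ssl-redirect": "true",
            },
        },
        "spec": {
            # The referenced secret holds the certificate and private key.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": service, "port": {"number": port}}
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = tls_ingress(
        "my-ingress", "my-service.example.com", "my-service", 80, "my-tls-secret"
    )
    print(json.dumps(manifest, indent=2))
```

With this arrangement the connection is encrypted between the client and the ingress controller, while the backend service keeps listening on plain port 80 inside the cluster.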

Here’s an example of an access control policy for ingress traffic in Kubernetes:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
      annotations:
        # Specific to the NGINX ingress controller.
        nginx.ingress.kubernetes.io/auth-type: basic
        nginx.ingress.kubernetes.io/auth-secret: my-auth-secret
    spec:
      rules:
        - host: my-service.example.com
          http:
            paths:
              - pathType: Prefix
                path: "/"
                backend:
                  service:
                    name: my-service
                    port:
                      number: 80

In this example, the ingress resource defines an access control policy using HTTP basic authentication. The “auth-type” annotation specifies the type of authentication, and the “auth-secret” annotation names the Kubernetes secret containing the authentication credentials. Both annotations are specific to the NGINX ingress controller.
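To show what basic authentication involves at the proxy, here is a minimal sketch of checking an “Authorization: Basic” header. It is an illustration under simplifying assumptions: the user table is hypothetical and stores a plain-text password for brevity, whereas real credential files store password hashes.

```python
import base64
import hmac
from typing import Optional

# Hypothetical credentials for illustration only; never store plain text in practice.
USERS = {"alice": "s3cret"}


def check_basic_auth(header: Optional[str]) -> bool:
    """Validate the value of an HTTP Authorization header using the Basic scheme."""
    if not header or not header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(header[len("Basic "):], validate=True).decode("utf-8")
    except ValueError:  # covers malformed base64 and invalid UTF-8
        return False
    username, separator, password = decoded.partition(":")
    if not separator:
        return False
    expected = USERS.get(username)
    if expected is None:
        return False
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(password.encode("utf-8"), expected.encode("utf-8"))


if __name__ == "__main__":
    token = base64.b64encode(b"alice:s3cret").decode("ascii")
    print(check_basic_auth("Basic " + token))
    print(check_basic_auth(None))
```

Because basic authentication sends credentials in an easily decoded form, it should only be used over an encrypted connection.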

By following these best practices and recommendations, organizations can secure ingress traffic and prevent security threats, ensuring the availability, integrity, and confidentiality of their services and applications.

Ingress in the Real World: Case Studies and Examples

The following examples show how ingress is used in practice:

E-commerce platform: An e-commerce platform implemented ingress to provide external access to its web applications and services. By using ingress, the platform was able to improve network performance, reduce latency, and enhance user experience. Additionally, the platform implemented access control policies and SSL/TLS encryption to secure ingress traffic and prevent security threats.

Social media platform: A social media platform implemented ingress to provide external access to its microservices architecture. By using ingress, the platform was able to improve network scalability, reduce network congestion, and enhance user experience. Additionally, the platform implemented rate limiting and network segmentation to secure ingress traffic and prevent security threats.

Gaming company: A gaming company implemented ingress to provide external access to its online games and services. By using ingress, the company was able to improve network performance, reduce latency, and enhance user experience. Additionally, the company implemented logging and monitoring to detect and respond to security threats in real-time.
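The logging and monitoring mentioned in these examples can start very simply. The sketch below counts error responses per client from access log lines and reports clients above a threshold. The log format, one line of “client_ip status path”, is a hypothetical simplification; real access logs carry more fields and need a proper parser.

```python
from collections import Counter
from typing import Dict, Iterable


def noisy_clients(lines: Iterable[str], threshold: int = 3) -> Dict[str, int]:
    """Return clients with at least `threshold` responses of status 400 or higher."""
    errors: Counter = Counter()
    for line in lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip lines that do not fit the assumed format
        client_ip, status, _path = parts
        if status.isdigit() and int(status) >= 400:
            errors[client_ip] += 1
    return {ip: count for ip, count in errors.items() if count >= threshold}


if __name__ == "__main__":
    sample = [
        "203.0.113.7 401 /login",
        "203.0.113.7 401 /login",
        "203.0.113.7 401 /login",
        "198.51.100.2 200 /",
    ]
    print(noisy_clients(sample))
```

A report like this is a natural input to the other measures discussed here, for example deciding which clients to rate limit or block.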

Here’s an example of a rate limiting policy for ingress traffic in Kubernetes:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
      annotations:
        # Specific to the NGINX ingress controller; limits apply per client IP.
        nginx.ingress.kubernetes.io/limit-connections: "10"
        nginx.ingress.kubernetes.io/limit-rpm: "50"
    spec:
      rules:
        - host: my-service.example.com
          http:
            paths:
              - pathType: Prefix
                path: "/"
                backend:
                  service:
                    name: my-service
                    port:
                      number: 80

In this example, the ingress resource defines a rate limiting policy using connection and requests-per-minute (RPM) limits, both applied per client IP address. The “limit-connections” annotation specifies the maximum number of concurrent connections from a single client, and the “limit-rpm” annotation specifies the maximum number of requests per minute from a single client. Both annotations are specific to the NGINX ingress controller.
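A per-client requests-per-minute limit can be sketched as follows. This uses a sliding window of timestamps, chosen here for readability; it is not the algorithm NGINX itself uses, and the class name and the injectable clock are assumptions made so the example is easy to test.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class PerClientRateLimiter:
    """Allow at most `max_requests` per client within a sliding time window."""

    def __init__(
        self,
        max_requests: int,
        window_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str) -> bool:
        now = self._clock()
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit; a proxy would reject the request
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = PerClientRateLimiter(max_requests=50)
    results = [limiter.allow("203.0.113.7") for _ in range(51)]
    print(results.count(True), results.count(False))
```

Keeping a separate window per client means one abusive client exhausts only its own allowance, leaving other clients unaffected.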

By implementing ingress in real-world scenarios, organizations can improve network performance, reduce latency, and enhance user experience. Additionally, implementing best practices and recommendations for securing ingress can help organizations prevent security threats and ensure the availability, integrity, and confidentiality of their services and applications.

The Future of Ingress: Trends and Predictions

As network technologies continue to evolve, ingress is expected to change as well. Here are some trends and predictions for the future of ingress:

Increased adoption of service meshes: Service meshes are becoming increasingly popular in modern network architectures, providing a dedicated infrastructure layer for managing service-to-service communication. Service meshes can simplify ingress management by providing a unified interface for configuring and managing ingress traffic.

Emergence of intelligent traffic management: With the increasing complexity of modern network architectures, intelligent traffic management is becoming essential for ensuring optimal network performance. Intelligent traffic management can help organizations dynamically route traffic based on network conditions, application requirements, and user behavior.

Growing importance of network security: As network threats continue to evolve, network security is becoming increasingly important for protecting ingress traffic and preventing security threats. Organizations are expected to implement more sophisticated security measures, such as multi-factor authentication, zero trust security, and AI-powered threat detection.

Integration with edge computing: Edge computing is becoming increasingly popular for reducing network latency, improving application performance, and enabling new use cases. Ingress is expected to play a critical role in managing edge computing traffic, providing external access to edge computing resources and services.

Adoption of cloud-native architectures: Cloud-native architectures are becoming increasingly popular for deploying and managing modern applications. Ingress is expected to play a critical role in managing cloud-native traffic, providing external access to cloud-native services and applications.

Here’s an example of delegating authentication to an external service, one building block of a zero trust approach, for ingress traffic in Kubernetes:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
      annotations:
        # Specific to the NGINX ingress controller.
        nginx.ingress.kubernetes.io/auth-url: "https://my-auth-service.example.com/auth"
        nginx.ingress.kubernetes.io/auth-signin: "https://my-auth-service.example.com/signin"
    spec:
      rules:
        - host: my-service.example.com
          http:
            paths:
              - pathType: Prefix
                path: "/"
                backend:
                  service:
                    name: my-service
                    port:
                      number: 80

In this example, the ingress resource requires every request to be approved by an external authentication service before it reaches the backend. The “auth-url” annotation specifies the URL of the authentication service, and the “auth-signin” annotation specifies the URL that unauthenticated users are sent to for sign-in. Both annotations are specific to the NGINX ingress controller, and a full zero trust design would combine this with other controls.
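The decision made for each request under external authentication can be sketched roughly as below. It follows the general behavior of NGINX’s subrequest-based authentication, where a 2xx answer from the authentication service allows the request and 401 or 403 denies it; the function name and return values are hypothetical, and details of a real controller’s redirect handling are omitted.

```python
from typing import Optional, Tuple


def auth_decision(auth_status: int, signin_url: Optional[str] = None) -> Tuple[str, Optional[str]]:
    """Map the auth service's HTTP status to an action for the original request."""
    if 200 <= auth_status < 300:
        return ("forward", None)        # approved: pass the request to the backend
    if auth_status == 401 and signin_url:
        return ("redirect", signin_url)  # not signed in: send the user to sign-in
    if auth_status in (401, 403):
        return ("deny", str(auth_status))  # rejected: return the same status
    return ("error", "500")              # anything else is treated as a failure


if __name__ == "__main__":
    signin = "https://my-auth-service.example.com/signin"
    for status in (200, 401, 403, 503):
        print(status, auth_decision(status, signin))
```

Note that an unexpected answer from the authentication service results in an error rather than in the request being forwarded, so a failing authentication service does not open up access.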

By understanding the trends and predictions for the future of ingress, organizations can prepare for the changing network landscape and ensure optimal network performance, security, and user experience.