Understanding Multi-Hop Architectures in Data Engineering

Data pipelines are central to extracting value from large datasets. Traditional single-hop architectures, which load and transform data in one pass, often struggle with the complex transformations that modern data lakes require, and they offer limited flexibility when data must be integrated from many diverse sources. Multi-hop architectures address this by spreading transformations across multiple steps. The approach suits data lakes and any scenario that combines data from several sources into a more comprehensive view, and Databricks is well suited to implementing it.

A multi-hop approach processes data in several stages, each focused on a distinct set of transformations such as cleansing, transforming, or enriching data from diverse sources. On Databricks, this pattern is commonly described as the medallion architecture, in which data moves through bronze (raw), silver (cleansed), and gold (aggregated) tables. This contrasts with single-hop pipelines, which typically handle all processing in a single operation. Splitting the work lets each stage be developed, tested, and scaled separately, which makes complex data relationships and transformations easier to manage.
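The staged flow described above can be sketched in a few lines. This is a minimal illustration, assuming plain Python lists stand in for the tables a real pipeline would hold in Spark or Delta Lake; the record fields, the `orders_feed` source name, and the function names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical raw records, as they might arrive from a source system.
raw_events = [
    {"customer_id": "c1", "amount": "19.99", "country": "us"},
    {"customer_id": "c2", "amount": "bad-value", "country": "DE"},
    {"customer_id": "c1", "amount": "5.01", "country": "US"},
    {"customer_id": None, "amount": "7.50", "country": "fr"},
]


def ingest(records):
    """Hop 1: keep records as received, adding only ingestion metadata."""
    return [dict(r, _source="orders_feed") for r in records]


def cleanse(records):
    """Hop 2: drop unusable rows and normalise types and casing."""
    cleaned = []
    for r in records:
        if r["customer_id"] is None:
            continue  # no key to join or aggregate on
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # unparseable amount
        cleaned.append(
            {
                "customer_id": r["customer_id"],
                "amount": amount,
                "country": r["country"].upper(),
            }
        )
    return cleaned


def aggregate(records):
    """Hop 3: total spend per customer, rounded to cents."""
    totals = defaultdict(float)
    for r in records:
        totals[r["customer_id"]] += r["amount"]
    return {cid: round(total, 2) for cid, total in totals.items()}


raw_stage = ingest(raw_events)
clean_stage = cleanse(raw_stage)
summary_stage = aggregate(clean_stage)
print(summary_stage)
```

Because each hop only reads the output of the previous one, a failed or changed stage can be rerun without repeating ingestion, which is the main practical benefit of splitting the work.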

Data lakes often contain data from numerous sources, such as operational databases, social media feeds, or IoT sensors. A single-hop architecture can struggle to integrate and transform all of these in one pass, leading to scalability issues and processing delays. A multi-hop architecture breaks the process into smaller, more manageable steps, so individual stages can be adapted as sources and requirements evolve. This makes large-scale data pipelines easier to manage and tune.

Databricks Platform as a Solution

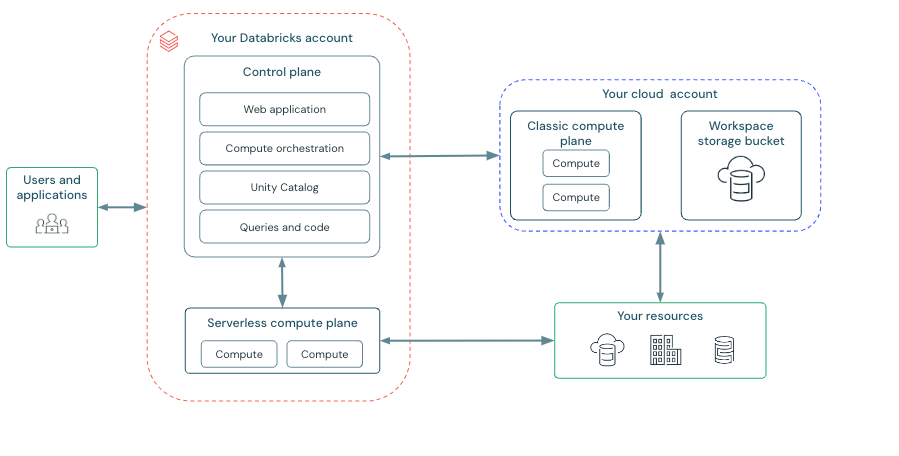

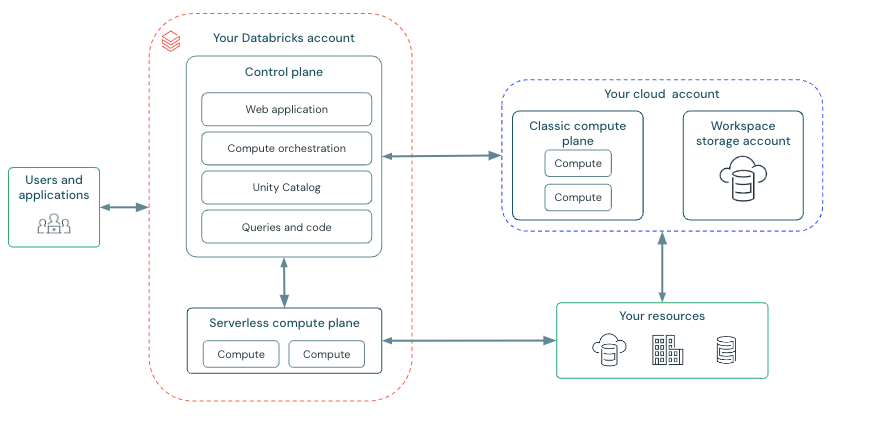

Databricks provides an environment for building and managing complex data pipelines, including multi-hop architectures. Its unified lakehouse platform integrates data from diverse sources and supports the multi-stage transformations these pipelines require, addressing scalability challenges often faced with traditional methods. Databricks’ Spark clusters handle both batch and stream processing, and the platform’s range of connectors simplifies data ingestion and streamlines the overall workflow.

Databricks fits this pattern because it is built on Apache Spark, a distributed processing framework that runs the parallel data transformations multi-hop architectures depend on. Its ability to handle both batch and streaming data is an asset for organizations running multi-hop pipelines, and keeping every stage on one platform reduces bottlenecks when data moves between stages. Together with its user interface and surrounding ecosystem, this helps teams design, run, and maintain complex transformation pipelines.

The platform offers tools for each part of a multi-hop pipeline: data ingestion, transformation, and storage. Delta Lake, for example, adds ACID transactions and schema enforcement, which help maintain data quality and consistency throughout the pipeline and improve its overall reliability. Handling data management and processing in one integrated platform is a key differentiator for Databricks in the data engineering landscape, and it simplifies the overall data flow for organizations running multi-hop workflows.

Designing Robust Data Pipelines

Designing a robust multi-hop data pipeline on Databricks requires careful consideration of each stage. Data ingestion, transformation, and storage all need deliberate planning, including the choice of tools and strategies for each. For example, Databricks’ built-in connectors streamline ingestion and provide a reliable flow of data into the platform. Quality checks at each stage prevent data quality issues from propagating downstream.

Transformations in a multi-hop pipeline frequently involve complex logic and multiple steps, so choosing the right tools is important for avoiding errors and maintaining data integrity. Spark SQL, along with other Databricks features, provides powerful capabilities for manipulating and cleaning data. Each transformation step should include validation and cleansing so that the resulting dataset is clean and accurate. At a minimum, these checks should cover data type validation, range checks, and null value analysis.
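The three kinds of checks named above (type validation, range checks, and null analysis) could look roughly like the following. This is a sketch in plain Python rather than Spark SQL, and the `validate_rows` helper, the sensor schema, and the temperature range are illustrative assumptions, not part of any Databricks API.

```python
def validate_rows(rows, schema, ranges):
    """Split rows into valid ones and a list of issues, counting nulls per column.

    schema maps column name -> expected Python type.
    ranges maps column name -> (low, high), both inclusive.
    """
    valid, issues = [], []
    null_counts = {col: 0 for col in schema}
    for index, row in enumerate(rows):
        row_issues = []
        for col, expected_type in schema.items():
            value = row.get(col)
            if value is None:
                null_counts[col] += 1
                row_issues.append(f"{col}: null")
                continue
            if not isinstance(value, expected_type):
                row_issues.append(f"{col}: expected {expected_type.__name__}")
                continue
            if col in ranges:
                low, high = ranges[col]
                if not (low <= value <= high):
                    row_issues.append(f"{col}: {value} outside [{low}, {high}]")
        if row_issues:
            issues.append((index, row_issues))
        else:
            valid.append(row)
    return valid, issues, null_counts


# Hypothetical sensor readings with one problem of each kind.
readings = [
    {"sensor_id": "s1", "temperature": 21.5},
    {"sensor_id": "s2", "temperature": 480.0},  # out of range
    {"sensor_id": None, "temperature": 19.0},   # null key
    {"sensor_id": "s4", "temperature": "n/a"},  # wrong type
]
schema = {"sensor_id": str, "temperature": float}
ranges = {"temperature": (-50.0, 60.0)}

valid_rows, issues, null_counts = validate_rows(readings, schema, ranges)
```

Returning the rejected rows with reasons, rather than silently dropping them, makes it possible to route them to a quarantine location and review them later.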

Efficient data storage is an integral part of a robust multi-hop pipeline. A storage layer such as Delta Lake helps preserve data integrity and optimize performance, while sensible partitioning and data organization improve retrieval speeds and reduce processing bottlenecks. Documenting the pipeline design and the transformations performed at each stage is also critical for efficient maintenance and troubleshooting.

Leveraging Databricks Features for Efficient Multi-Hop Data Transformations

Databricks offers several tools for efficient multi-hop data transformations. Spark SQL, a core component of the Databricks ecosystem, enables complex queries across multiple datasets, which is invaluable for handling the intricate relationships often found in multi-hop data pipelines. Databricks notebooks provide a collaborative environment for running these queries and transformations, streamlining iterative development.

Delta Lake, an open-source storage layer that stores data in Parquet files alongside a transaction log, plays a crucial role in multi-hop transformations. It supports ACID transactions, which make data modifications reliable and preserve data integrity as data moves through the pipeline. Databricks’ built-in data quality features help verify data consistency and identify potential issues early, minimizing downstream errors. Data validation rules and checks can be integrated directly into transformations, reinforcing data integrity.

Near real-time insights are also achievable within a multi-hop architecture on Databricks. The platform’s stream processing capabilities allow data to be ingested and processed shortly after it arrives, so transformed data can be analyzed quickly and used for prompt feedback and proactive decision-making. Combined, these features help data engineers build robust, scalable, and maintainable multi-hop pipelines that can be adapted to the demands of different industries.

Scalability and Performance Considerations in Databricks Multi-Hop Architectures

Optimizing performance in a Databricks multi-hop architecture is important. The key levers are efficient data movement between stages, optimized query execution plans, and proper data partitioning for faster query processing. Databricks’ managed cloud resources help maintain performance and scalability in large-scale data processing.

Data partitioning strategies significantly impact query performance. Partitioning data by relevant attributes, such as a date column that queries commonly filter on, lets a query read only the matching subsets instead of scanning the full dataset. The choice of partitioning key is therefore critical for effective data access and for reducing the volume of data processed. By partitioning strategically and applying optimized query execution plans, organizations can boost the efficiency of their Databricks multi-hop pipelines.
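The effect of partitioning on the amount of data scanned can be shown with a small sketch. It assumes an in-memory dictionary as a stand-in for partitioned storage; `partition_by`, `query_partition`, and the event fields are hypothetical names chosen for illustration.

```python
from collections import defaultdict


def partition_by(rows, key):
    """Group rows into partitions keyed by the value of one column."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[key]].append(row)
    return dict(partitions)


def query_partition(partitions, key_value, predicate):
    """Scan only the partition for key_value.

    Returns the matching rows and the number of rows that had to be scanned.
    """
    candidates = partitions.get(key_value, [])
    matches = [row for row in candidates if predicate(row)]
    return matches, len(candidates)


# Hypothetical click events partitioned by date.
events = [
    {"event_date": "2024-01-01", "user": "a", "clicks": 3},
    {"event_date": "2024-01-01", "user": "b", "clicks": 0},
    {"event_date": "2024-01-02", "user": "a", "clicks": 5},
    {"event_date": "2024-01-03", "user": "c", "clicks": 1},
]
partitions = partition_by(events, "event_date")

matches, rows_scanned = query_partition(
    partitions, "2024-01-01", lambda row: row["clicks"] > 0
)
print(f"matched {len(matches)} rows after scanning {rows_scanned} of {len(events)}")
```

The sketch also hints at the trade-off: a key with very many distinct values would produce many tiny partitions, so the key should match how the data is actually queried.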

Effective resource management is vital. Monitoring resource consumption during pipeline execution allows adjustments while jobs are running, and analyzing usage helps identify and resolve bottlenecks. Scaling Spark clusters dynamically lets the pipeline adapt to fluctuating workloads. This proactive management sustains performance and scalability within a Databricks multi-hop architecture.

Security in a Databricks Multi-Hop Architecture

Securing data within a Databricks multi-hop architecture is paramount. Robust security measures are needed at each stage of the pipeline, from data ingestion to transformation and storage. Implement granular access controls: define and enforce strict access policies that grant users only the permissions their tasks require. This least-privilege approach minimizes the risk of unauthorized access to, or modification of, sensitive data.

Data encryption is another critical security consideration. Encrypt data both in transit and at rest, using strong encryption algorithms and protocols, so that the data remains unreadable even if unauthorized access occurs. Apply data masking to sensitive fields, particularly during the transformation phase; masking allows exploration and analysis while shielding the actual values. Secure storage and regular security audits further protect against data breaches. These practices are crucial for maintaining a secure multi-hop architecture that meets industry standards and best practices.
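One way to mask sensitive fields during transformation is to replace them with keyed hashes. The sketch below uses Python's standard `hmac` and `hashlib` modules; the `mask_record` helper, the field names, and the hard-coded key are assumptions for illustration only. Note that this is pseudonymization rather than encryption, and a real pipeline would load the key from a secret store.

```python
import hashlib
import hmac


def mask_value(value, secret_key):
    """Replace a value with a deterministic keyed hash.

    The same input always yields the same token, so masked columns can
    still be joined or grouped, but the original value is not exposed.
    """
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_record(record, sensitive_fields, secret_key):
    """Return a copy of the record with the sensitive fields masked."""
    masked = {}
    for field, value in record.items():
        if field in sensitive_fields and value is not None:
            masked[field] = mask_value(str(value), secret_key)
        else:
            masked[field] = value
    return masked


# Placeholder key for the sketch only; never hard-code keys in real code.
demo_key = b"demo-key-not-for-production"

record = {"customer_id": 42, "email": "jane@example.com", "balance": 1050.75}
masked = mask_record(record, {"email"}, demo_key)
```

Using a keyed hash instead of a plain hash makes it harder to recover values by hashing guesses, as long as the key itself stays secret.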

Data masking, alongside encryption, supports compliance with regulations such as GDPR and HIPAA. Strong access controls throughout the pipeline, coupled with regular security assessments, keep the data pipeline secure. Review and update security protocols regularly to stay ahead of evolving threats, validate data sources thoroughly, and secure the transfer of data between stages. Together, these measures safeguard confidential information in a multi-hop environment.

Monitoring and Maintaining Databricks Multi-Hop Architectures

Effective monitoring and maintenance are crucial for the successful operation of a Databricks multi-hop architecture. Proactive measures ensure the health and performance of the data pipeline, allowing for timely identification and resolution of issues. A robust monitoring strategy is essential to keep the multi-hop architecture performing optimally.

Monitoring tools offered within the Databricks ecosystem provide insights into the various stages of the multi-hop pipeline. Key metrics include query performance, data volume processed, and the status of individual jobs or transformations. Real-time dashboards offer a centralized view of pipeline health, enabling quick identification of bottlenecks or anomalies in the multi-hop data flow. Establishing alerts for critical performance thresholds is essential in a Databricks multi-hop architecture. These alerts ensure immediate notification of potential issues, facilitating swift responses to ensure minimal impact on downstream processes.
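Threshold-based alerting on the metrics mentioned above can be sketched as a simple comparison. The metric names, limits, and the `evaluate_alerts` function are hypothetical; in practice the metrics would come from the platform's monitoring tools and the alerts would go to a notification channel.

```python
def evaluate_alerts(metrics, thresholds):
    """Compare pipeline metrics against thresholds and return alert messages.

    thresholds maps metric name -> ("max" | "min", limit).
    A "max" threshold alerts when the value exceeds the limit;
    a "min" threshold alerts when the value falls below it.
    """
    alerts = []
    for name, (comparison, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: metric missing")
        elif comparison == "max" and value > limit:
            alerts.append(f"{name}: {value} exceeds {limit}")
        elif comparison == "min" and value < limit:
            alerts.append(f"{name}: {value} below {limit}")
    return alerts


# Hypothetical metrics collected after one pipeline run.
run_metrics = {"query_seconds": 95.0, "rows_processed": 120, "failed_jobs": 0}
thresholds = {
    "query_seconds": ("max", 60.0),
    "rows_processed": ("min", 1000),
    "failed_jobs": ("max", 0),
}

alerts = evaluate_alerts(run_metrics, thresholds)
for alert in alerts:
    print("ALERT", alert)
```

Treating a missing metric as an alert is a deliberate choice here: a stage that stops reporting is often as serious as one that reports bad numbers.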

Troubleshooting issues within a multi-hop data pipeline frequently involves examining logs and audit trails. Comprehensive logging at each stage of the process, from data ingestion to final output, allows for detailed analysis of events. Thorough logging helps pinpoint the source of problems, providing valuable context for resolution. Maintaining comprehensive records ensures traceability and facilitates auditing across the entire Databricks multi-hop architecture. Regularly reviewing logs for patterns and anomalies helps identify potential issues before they escalate into major disruptions. A well-structured process for managing and analyzing these logs is vital for the efficiency and reliability of any multi-hop pipeline within a Databricks environment.
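Per-stage logging of the kind described above might be structured as one JSON event per stage run. This sketch uses Python's standard `logging` module with an in-memory stream so the events can be inspected; the `run_stage` wrapper, the logger name, and the event fields are illustrative assumptions, and a real pipeline would write to a durable log destination.

```python
import io
import json
import logging

# In-memory sink for the sketch; replace with a durable handler in practice.
log_stream = io.StringIO()
logger = logging.getLogger("pipeline_sketch")
logger.setLevel(logging.INFO)
logger.propagate = False
handler = logging.StreamHandler(log_stream)
handler.setFormatter(logging.Formatter("%(message)s"))
logger.addHandler(handler)


def run_stage(name, func, rows):
    """Run one pipeline stage and log a JSON event describing the outcome."""
    try:
        result = func(rows)
    except Exception as exc:
        logger.error(
            json.dumps({"stage": name, "status": "failed", "error": str(exc)})
        )
        raise  # let the caller decide how to handle the failure
    logger.info(
        json.dumps(
            {
                "stage": name,
                "status": "ok",
                "rows_in": len(rows),
                "rows_out": len(result),
            }
        )
    )
    return result


rows = [{"id": 1, "value": 10}, {"id": 2, "value": None}]
cleaned = run_stage(
    "cleanse", lambda rs: [r for r in rs if r["value"] is not None], rows
)

# Parse the log back into events, as an audit or troubleshooting step might.
events = [json.loads(line) for line in log_stream.getvalue().splitlines()]
```

Recording row counts in and out of each stage makes unexpected data loss visible in the logs without having to inspect the data itself.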

Real-World Case Studies: Databricks Multi-Hop Architecture in Action

Deploying a Databricks multi-hop architecture effectively requires understanding how it applies across industries. Consider a financial institution seeking to predict customer churn. The analysis depends on data from several departments (marketing, sales, and customer service), and a multi-hop pipeline within Databricks can integrate this disparate data efficiently.

The pipeline begins by ingesting data from these disparate sources, including customer interactions, transaction history, and demographics, using Databricks connectors for efficient data transfer. The next step is transformation: the data is standardized so that records from different departments are compatible, with Databricks’ Spark SQL handling the complex transformations. Data quality assurance is a critical part of this step, and robust checks at each stage maintain data integrity. The result is data from varied departments converted into a unified format within the Databricks platform.
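The standardize-and-unify step for departmental data can be sketched as follows. The three sample sources, their differing customer ID field names, and the `standardize` and `unify` helpers are invented for illustration; a real pipeline would express the same logic as Spark SQL joins over ingested tables.

```python
# Hypothetical departmental extracts that name and format the customer ID differently.
marketing = [
    {"CustomerID": "C-001", "last_campaign": "spring"},
    {"CustomerID": "c-002", "last_campaign": "none"},
]
sales = [
    {"cust_id": "c-001", "total_spend": 120.50},
    {"cust_id": "C-002", "total_spend": 15.00},
]
support = [
    {"customer": " C-001 ", "open_tickets": 3},
]


def standardize(records, id_field):
    """Key each record by a normalised customer ID (trimmed, upper case)."""
    standardized = {}
    for record in records:
        customer_id = str(record[id_field]).strip().upper()
        attributes = {k: v for k, v in record.items() if k != id_field}
        standardized[customer_id] = attributes
    return standardized


def unify(*sources):
    """Merge standardized sources into one record per customer."""
    unified = {}
    for source in sources:
        for customer_id, attributes in source.items():
            row = unified.setdefault(customer_id, {"customer_id": customer_id})
            row.update(attributes)
    return unified


unified = unify(
    standardize(marketing, "CustomerID"),
    standardize(sales, "cust_id"),
    standardize(support, "customer"),
)
```

Customers missing from one source simply lack those attributes in the unified record, which a downstream churn model would need to handle explicitly, for example by treating a missing `open_tickets` as zero.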

The pipeline then performs complex analytics on the unified data using Spark’s optimized query execution. The final output is a set of actionable insights for customer retention: the institution can forecast potential churn, implement targeted interventions, and improve customer engagement. Another example is an e-commerce company applying the same framework to web logs, customer purchase history, and inventory data from multiple systems. A multi-hop architecture on Databricks unifies this data for more accurate inventory forecasting, and the resulting comprehensive and reliable dataset also supports optimized pricing and promotional strategies that can increase revenue. By applying the same methodology to other use cases, organizations across industries can use their own data sources effectively for improved decision-making.