Effortless Data Synchronization in the AWS Cloud

Data synchronization is a fundamental part of cloud architecture on Amazon Web Services (AWS). Efficient data transfer underpins backup and recovery, disaster recovery, and hybrid cloud implementations, and keeping data consistent and current across locations and services is central to business continuity, data availability, and regulatory compliance. AWS offers a range of tools for moving data, each suited to specific needs and scenarios, and understanding these options is the first step toward building a resilient and scalable data infrastructure. For example, consider an organization that replicates its on-premises database to AWS for disaster recovery. A well-chosen synchronization solution keeps the AWS replica current with the primary database, minimizing potential data loss in the event of an outage.

AWS provides services for each major transfer scenario, whether that is moving large volumes of data, synchronizing databases, or replicating file systems. Selecting the right one is essential for optimizing performance and cost, and for keeping data consistent across environments so that applications and services remain reliable. These services also offer encryption and access control to protect data in transit and at rest, which helps meet security and regulatory requirements.

Efficient data transfer matters beyond simple replication. Synchronizing data across AWS Regions or services also enables data analytics, machine learning, and data warehousing, which in turn support data-driven decision-making. It also supports hybrid cloud environments, in which organizations integrate on-premises infrastructure with AWS services to gain the scalability and flexibility of the cloud while retaining control over sensitive data and applications. Because AWS tooling continues to evolve, organizations should regularly re-evaluate their synchronization strategy to confirm they are using the most appropriate and cost-effective services.

How to Replicate Data Across AWS Services

Replicating data across AWS services is a fundamental part of building resilient and scalable applications. Replication keeps data consistent and available across storage locations and services, and it is needed for disaster recovery, backup and restore, and hybrid cloud architectures. The walkthrough below covers two common replication scenarios.

Consider the common scenario of replicating data from an on-premises server to Amazon S3 for archival or backup. Begin by deploying AWS Storage Gateway in your on-premises environment; it connects on-premises applications to AWS storage services. Configure it as a file gateway (Amazon S3 File Gateway), which stores files as objects in S3 and exposes them to local clients as NFS or SMB file shares. The on-premises server then writes data to a file share on the gateway, and the gateway transfers the data securely to your designated S3 bucket. Use an AWS Identity and Access Management (IAM) role to grant the gateway only the permissions it needs to write to that bucket.

Another common use case is replicating data between S3 buckets in different AWS Regions, which supports disaster recovery and keeps data available in multiple geographic locations. Amazon S3 Replication handles this directly: add a replication rule to the source bucket and specify a destination bucket in another Region. By default, S3 Replication copies new objects and new versions of existing objects written after the rule is enabled; objects that were already in the bucket require S3 Batch Replication. Versioning must be enabled on both the source and destination buckets, which also lets you track changes to objects and revert to earlier versions. Encrypt data both in transit and at rest: Transport Layer Security (TLS, the successor to SSL) protects data during transfer, while server-side encryption (SSE) or client-side encryption protects data at rest. Monitor the replication process regularly so that issues are identified and resolved promptly.
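As a rough illustration of the cross-Region setup described above, the following Python sketch builds a replication configuration and shows how it might be applied with boto3. The bucket names, role ARN, and rule ID are hypothetical placeholders, and the single whole-bucket rule is an assumption made for brevity rather than a recommended production layout.

```python
import json


def build_replication_config(role_arn: str, dest_bucket_arn: str,
                             rule_id: str = "cross-region-dr") -> dict:
    """Return a ReplicationConfiguration with one rule covering the whole bucket."""
    return {
        "Role": role_arn,  # IAM role that S3 assumes to replicate objects
        "Rules": [
            {
                "ID": rule_id,
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {},  # empty filter: the rule applies to every object
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": dest_bucket_arn},
            }
        ],
    }


def apply_replication(source_bucket: str, config: dict) -> None:
    """Enable versioning on the source bucket, then attach the rule.

    Requires boto3 and AWS credentials. The destination bucket must also
    have versioning enabled; that step is not shown here.
    """
    import boto3  # imported lazily so the builder above has no dependencies

    s3 = boto3.client("s3")
    s3.put_bucket_versioning(
        Bucket=source_bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    s3.put_bucket_replication(
        Bucket=source_bucket,
        ReplicationConfiguration=config,
    )


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    config = build_replication_config(
        role_arn="arn:aws:iam::123456789012:role/example-replication-role",
        dest_bucket_arn="arn:aws:s3:::example-destination-bucket",
    )
    print(json.dumps(config, indent=2))
```

Keeping the configuration builder separate from the API call makes it possible to review or version-control the rule before anything is changed in the account.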

Choosing the Right AWS Service for Your Data Sync Needs

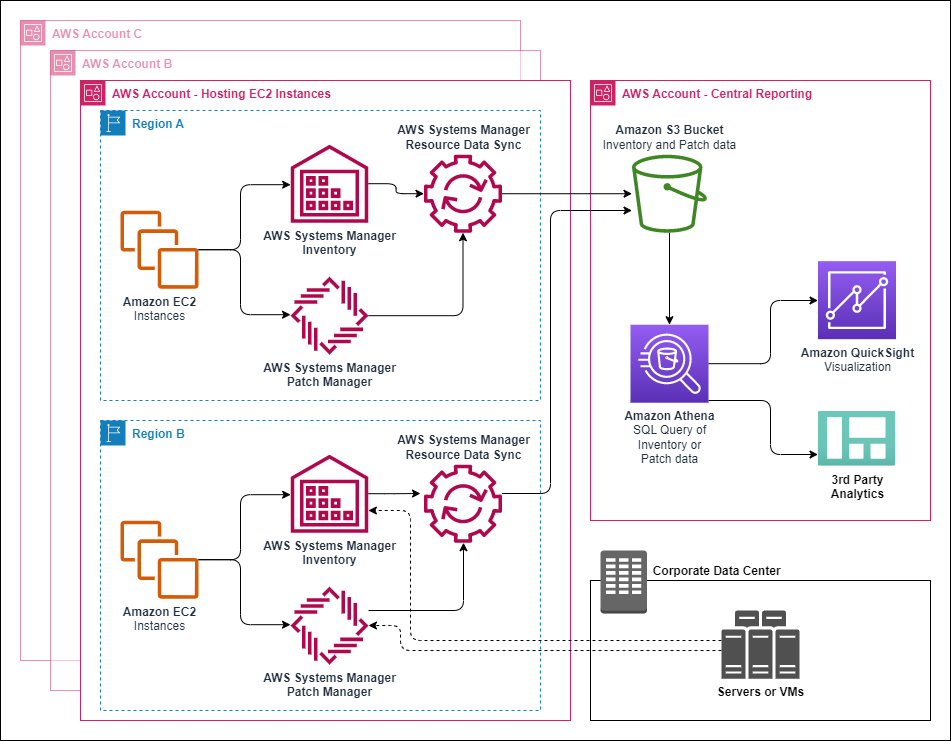

Selecting the right AWS service for data synchronization requires weighing several factors. AWS offers a range of tools, each with its own strengths, weaknesses, and pricing structure, and understanding the differences is crucial for decisions that fit your requirements and constraints. This section compares AWS DataSync, AWS Storage Gateway, AWS Transfer Family, and Amazon S3 Replication to help you choose the most suitable option.

AWS DataSync is designed for automated, accelerated data transfer between on-premises storage and AWS, as well as between AWS storage services. Its strengths are speed, simplicity, and efficient handling of large datasets. It suits migrating data to AWS, backing up on-premises systems, and replicating data between AWS Regions for disaster recovery. AWS Storage Gateway, on the other hand, is a hybrid cloud storage service that integrates on-premises applications with AWS storage. It offers file gateway, volume gateway, and tape gateway types, each tailored to specific storage needs. DataSync pricing is based on the amount of data transferred, while Storage Gateway involves costs for storage, data transfer, and the gateway deployment itself.

AWS Transfer Family provides managed file transfer over protocols such as SFTP, FTPS, and FTP. It is well suited to integrating with existing file transfer workflows and exchanging data securely with partners and customers. Amazon S3 Replication offers a simple and cost-effective way to replicate objects between S3 buckets, either within the same Region or across Regions. It suits disaster recovery, compliance, and reducing latency for geographically distributed users. Pricing models differ across these services. When choosing among them, consider data volume, transfer frequency, security requirements, network bandwidth, and budget, and compare the features, performance, and cost of each service against your specific use case.

Optimizing Data Synchronization Performance and Costs

Achieving good performance and cost-effectiveness in data synchronization on AWS requires careful planning and execution. Several factors influence both speed and expense, including network bandwidth, data compression, scheduling, and storage tiering. This section covers practical strategies for reducing transfer times and controlling storage costs.

Network bandwidth is a primary determinant of transfer speed. Insufficient bandwidth creates bottlenecks, prolonging synchronization and increasing operational cost. Assess your network capacity and consider options for increasing it, such as AWS Direct Connect for a dedicated, high-speed connection. Data compression is another useful technique: compressing data before transferring it to AWS reduces the volume sent, which shortens transfers and lowers storage use. Scheduling also matters. Run synchronization tasks during off-peak hours, when network traffic is lower, to minimize disruption and make the most of available bandwidth. Finally, storage tiering improves cost-effectiveness. Amazon S3 offers storage classes such as S3 Standard, S3 Intelligent-Tiering, S3 Standard-IA, and S3 Glacier, each priced differently. Keeping frequently accessed data in higher-cost classes and rarely accessed data in lower-cost ones can significantly reduce storage expense while maintaining data accessibility.
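To make the effect of bandwidth and compression concrete, here is a small back-of-the-envelope estimator in Python. It is a sketch under simplifying assumptions: decimal units (1 GB = 8,000 megabits), a constant link speed, and a hypothetical `utilization` factor standing in for protocol overhead and competing traffic. Real transfers will vary.

```python
def estimate_transfer_hours(size_gb: float,
                            bandwidth_mbps: float,
                            compression_ratio: float = 1.0,
                            utilization: float = 0.8) -> float:
    """Estimate hours needed to move size_gb over a link.

    size_gb           -- data volume in decimal gigabytes
    bandwidth_mbps    -- nominal link speed in megabits per second
    compression_ratio -- original size / compressed size (1.0 = no compression)
    utilization       -- fraction of the link the transfer can actually use
    """
    if size_gb < 0:
        raise ValueError("size_gb must not be negative")
    if bandwidth_mbps <= 0 or compression_ratio <= 0 or utilization <= 0:
        raise ValueError("bandwidth, compression_ratio and utilization must be positive")

    megabits_to_send = size_gb * 8 * 1000 / compression_ratio
    seconds = megabits_to_send / (bandwidth_mbps * utilization)
    return seconds / 3600


if __name__ == "__main__":
    # 10 TB over a 1 Gbps link at 80% utilization, without and with 2:1 compression.
    print(f"{estimate_transfer_hours(10_000, 1_000):.1f}")                       # 27.8
    print(f"{estimate_transfer_hours(10_000, 1_000, compression_ratio=2):.1f}")  # 13.9
```

An estimate like this is mainly useful for deciding whether a transfer fits inside an off-peak window or whether more bandwidth is worth considering.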

Tool choice and configuration also affect performance. With AWS DataSync, for example, review task settings so that transfers run in parallel and handle data efficiently. Monitor synchronization performance with Amazon CloudWatch metrics to identify bottlenecks early, and analyze transfer logs for areas to improve. Automate wherever possible: automation saves time and reduces the risk of human error, which helps protect data integrity. Re-evaluate the whole synchronization process regularly and adapt it based on performance data. Together, these practices lead to better resource utilization and lower cost.

Leveraging AWS DataSync for Automated File Transfers

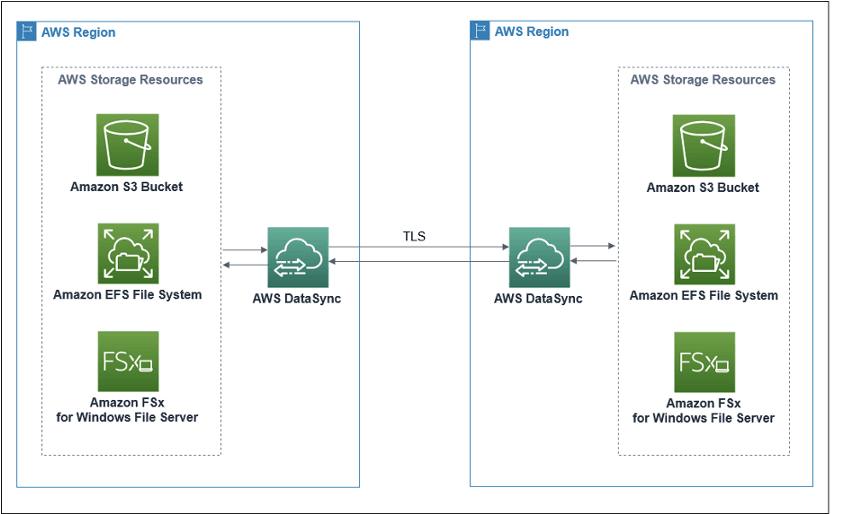

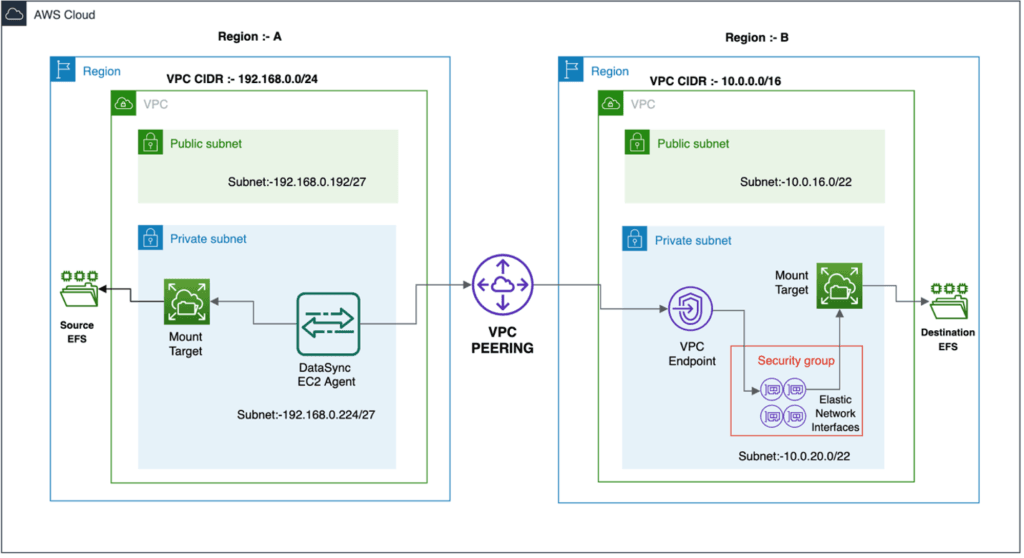

AWS DataSync streamlines and accelerates data transfer between on-premises storage and AWS, as well as between AWS storage services. It is particularly useful for large datasets, constrained networks, or automated, scheduled transfers. The service removes many of the manual tasks associated with data movement, which simplifies the process and reduces the risk of errors. Configuring DataSync involves creating tasks that define the source and destination locations, transfer settings, and scheduling options. Tasks can be monitored in the AWS Management Console, which shows the progress of each transfer.

To configure a DataSync task for an on-premises source, first deploy a DataSync agent in the on-premises environment; the agent carries out the transfer. Then define a source location (e.g., an NFS or SMB file server) and a destination location (e.g., Amazon S3, Amazon EFS, or Amazon FSx for Windows File Server). Next, specify transfer settings such as the direction of the transfer (determined by which location is the source and which is the destination), the frequency of scheduled transfers, and whether to verify data integrity during the transfer. DataSync also supports filters that include or exclude specific files or folders, so that only the necessary data is synchronized, which shortens transfers and reduces storage cost. Integrating DataSync with other AWS services, such as Amazon CloudWatch, adds monitoring and alerting.
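The task settings described above could be expressed in Python roughly as follows. This is a sketch that assumes the agent and both locations already exist; the location ARNs, task name, exclude patterns, and nightly schedule are hypothetical, and the chosen verification and transfer modes are only one reasonable combination.

```python
def build_datasync_task_params(source_location_arn: str,
                               destination_location_arn: str,
                               name: str,
                               exclude_patterns=(),
                               schedule_expression=None) -> dict:
    """Assemble keyword arguments for the DataSync CreateTask API."""
    params = {
        "SourceLocationArn": source_location_arn,
        "DestinationLocationArn": destination_location_arn,
        "Name": name,
        "Options": {
            # Verify only the files moved in this run (assumed default here).
            "VerifyMode": "ONLY_FILES_TRANSFERRED",
            # Copy only data that differs between source and destination.
            "TransferMode": "CHANGED",
        },
    }
    if exclude_patterns:
        # DataSync takes a single filter string with patterns separated by "|".
        params["Excludes"] = [
            {"FilterType": "SIMPLE_PATTERN", "Value": "|".join(exclude_patterns)}
        ]
    if schedule_expression:
        params["Schedule"] = {"ScheduleExpression": schedule_expression}
    return params


def create_task(params: dict) -> str:
    """Create the task and return its ARN. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above has no dependencies

    response = boto3.client("datasync").create_task(**params)
    return response["TaskArn"]


if __name__ == "__main__":
    # Hypothetical ARNs and settings, for illustration only.
    params = build_datasync_task_params(
        source_location_arn="arn:aws:datasync:us-east-1:123456789012:location/loc-source-example",
        destination_location_arn="arn:aws:datasync:us-east-1:123456789012:location/loc-dest-example",
        name="nightly-file-share-sync",
        exclude_patterns=["*.tmp", "/cache"],
        schedule_expression="cron(0 2 * * ? *)",  # every day at 02:00 UTC
    )
    print(params)
```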

Several use cases highlight the value of AWS DataSync. Migrating data to AWS is a common one: DataSync transfers on-premises data to the cloud for storage, processing, or analysis. Backing up on-premises systems to AWS provides a secure offsite copy that protects against data loss from hardware failures or other disasters. Replicating data between AWS Regions supports disaster recovery and business continuity by keeping data available in multiple geographic locations. In each case, DataSync is most useful where transfers must be automated, efficient, and secure, for example when moving large files quickly while preserving their integrity.

Troubleshooting Common Data Synchronization Issues

Data synchronization in AWS is generally reliable, but problems do occur, and addressing them promptly is crucial for data integrity and operational efficiency. One common problem is network connectivity. Verify that the source and destination environments have a stable connection with sufficient bandwidth. Use tools like `ping` and `traceroute` to diagnose latency or packet loss. Ensure that security groups and network ACLs allow traffic between the relevant resources, and check firewall rules, which can also block transfers when misconfigured. Test connectivity regularly to catch problems before they affect synchronization.
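Because `ping` relies on ICMP, which firewalls often drop, it can help to test the TCP port the transfer will actually use. The helper below is a minimal Python sketch of such a check; the function name is hypothetical, and a successful TCP connection only shows that the port is reachable, not that credentials or permissions are correct.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `can_connect("s3.us-east-1.amazonaws.com", 443)` checks whether HTTPS traffic can reach the S3 endpoint in that Region from the machine running the script.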

Permission errors are another frequent cause of synchronization failures. Confirm that the IAM roles or users involved in the transfer have the permissions needed to access both the source and destination resources. For example, with Amazon S3 Replication, the IAM role in the replication configuration must be able to read objects from the source bucket and write objects to the destination bucket. Similarly, AWS DataSync requires appropriate IAM permissions to access its source and destination locations. Review the IAM policies carefully and ensure they grant the required actions, while adhering to the principle of least privilege: grant only the minimum permissions the task needs.
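As an illustration of least privilege for the S3 Replication case, the Python sketch below assembles a permissions policy for the replication role. The bucket names are hypothetical, and the action list covers a basic unencrypted setup as I understand it; buckets using SSE-KMS or cross-account destinations need additional statements.

```python
import json


def build_replication_role_policy(source_bucket: str, dest_bucket: str) -> dict:
    """Return an IAM policy document scoped to one source and one destination bucket."""
    source_arn = f"arn:aws:s3:::{source_bucket}"
    dest_arn = f"arn:aws:s3:::{dest_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read the replication rules and list the source bucket.
                "Effect": "Allow",
                "Action": ["s3:GetReplicationConfiguration", "s3:ListBucket"],
                "Resource": source_arn,
            },
            {   # Read object versions (with ACLs and tags) from the source.
                "Effect": "Allow",
                "Action": [
                    "s3:GetObjectVersionForReplication",
                    "s3:GetObjectVersionAcl",
                    "s3:GetObjectVersionTagging",
                ],
                "Resource": f"{source_arn}/*",
            },
            {   # Write replicas into the destination bucket only.
                "Effect": "Allow",
                "Action": ["s3:ReplicateObject", "s3:ReplicateDelete", "s3:ReplicateTags"],
                "Resource": f"{dest_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket names, for illustration only.
    print(json.dumps(build_replication_role_policy("example-source", "example-destination"), indent=2))
```

When replication fails with an access error, comparing the role's actual policy against a document like this is a quick way to spot a missing action or a resource ARN that points at the wrong bucket.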

Data corruption during transfer is rare with AWS services, but checksum verification guards against it. Many AWS services, including S3, have built-in checksum capabilities. For example, S3 can validate uploads against checksums such as CRC32, CRC32C, SHA-1, or SHA-256, and for an object uploaded in a single part without SSE-KMS or SSE-C encryption, the ETag is the MD5 digest of the object data. You can also calculate checksums yourself before and after the transfer and compare them. Performance bottlenecks are another common issue. If transfers are slow, investigate your network, storage, and compute resources for the limiting factor. Consider optimizing the transfer configuration, such as using parallel transfers with AWS DataSync or increasing the number of concurrent connections. Monitor the performance of your synchronization processes regularly and adjust configurations as needed to maintain throughput. When troubleshooting, consult the AWS documentation and support resources for guidance specific to each service.
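Manual checksum comparison can be sketched in a few lines of Python. The helper below hashes any binary stream in chunks, so large files do not need to fit in memory; SHA-256 is an assumption chosen here, and any algorithm works as long as both sides use the same one.

```python
import hashlib
import io


def stream_sha256(stream, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex SHA-256 digest of a binary stream, read in chunks."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def copies_match(original, copy) -> bool:
    """Compare two binary streams by digest rather than byte by byte."""
    return stream_sha256(original) == stream_sha256(copy)


if __name__ == "__main__":
    # In-memory stand-ins for a file before and after transfer.
    before = io.BytesIO(b"example payload")
    after = io.BytesIO(b"example payload")
    print(copies_match(before, after))
```

With real files, the streams would come from `open(path, "rb")` on the source system and on a downloaded copy of the transferred object.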

Securing Your Data During AWS Data Migration

Data security is paramount during any data synchronization process, especially when dealing with sensitive information in the cloud. Implementing robust security measures is not just a best practice; it’s a necessity for maintaining data integrity, ensuring compliance, and protecting your organization from potential threats. This section emphasizes the importance of data security during AWS data migration and provides actionable strategies for safeguarding your information.

Encryption is a cornerstone of data security, and data should be encrypted both in transit and at rest. For data in transit, use HTTPS or another TLS-protected protocol when transferring data between on-premises systems and AWS, or between AWS services. For data at rest, services such as S3 offer server-side encryption (SSE) and client-side encryption. With SSE, S3 encrypts objects using keys that are managed by S3 (SSE-S3), held in AWS Key Management Service (SSE-KMS), or supplied by you with each request (SSE-C). With client-side encryption, you encrypt data before upload and manage the keys yourself, which gives you more control. AWS KMS provides a centralized and secure way to manage encryption keys across your AWS environment.

Proper access control is another critical aspect of data security. Use Identity and Access Management (IAM) roles to grant fine-grained permissions to users and services, and follow the principle of least privilege by granting only the permissions needed for specific tasks. Review and update IAM policies regularly so that they remain appropriate and secure.

Network security is equally important. Virtual Private Clouds (VPCs) provide isolated network environments within AWS. Use VPC endpoints to connect to AWS services without exposing your data to the public internet. Security groups act as virtual firewalls that control inbound and outbound traffic to your resources; configure them to allow only necessary traffic, minimizing the attack surface.

When using AWS DataSync for automated file transfers, ensure that the DataSync agent is deployed in a secure environment and that communication between the agent and AWS services is encrypted. Similarly, when using AWS Storage Gateway, configure appropriate network settings and access controls to protect the gateway and the data it manages. With these measures in place, your data is protected throughout the synchronization process, and a strong security posture builds trust in your synchronization initiatives over the long term.
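One way to enforce encryption in transit for S3 is a bucket policy that denies any request not made over TLS. The Python sketch below builds such a policy; the bucket name is hypothetical, and this is one commonly used pattern rather than a complete security configuration.

```python
import json


def build_tls_only_bucket_policy(bucket: str) -> dict:
    """Return a bucket policy that denies all S3 requests sent without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # the bucket itself
                    f"arn:aws:s3:::{bucket}/*",  # every object in it
                ],
                # aws:SecureTransport is "false" for plain-HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


def apply_bucket_policy(bucket: str, policy: dict) -> None:
    """Attach the policy. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above has no dependencies

    boto3.client("s3").put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))


if __name__ == "__main__":
    # Hypothetical bucket name, for illustration only.
    print(json.dumps(build_tls_only_bucket_policy("example-sync-bucket"), indent=2))
```

Note that `put_bucket_policy` replaces the existing bucket policy, so in practice this statement would be merged into whatever policy the bucket already has.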

Compliance with relevant security regulations, such as HIPAA, GDPR, and PCI DSS, is essential for many organizations. AWS provides tools and services to help you meet these requirements, but understanding them and implementing appropriate security controls remains critical for avoiding penalties and maintaining customer trust. Regular security audits and penetration testing help identify vulnerabilities and confirm that your security measures are effective. Staying informed about current security threats and best practices is also crucial. By prioritizing data security, you can use AWS for data synchronization with confidence that your information is protected.

Best Practices for Long-Term Data Consistency with Amazon Web Services

Maintaining long-term data consistency across your AWS environment is critical for data integrity and business continuity. It requires a proactive approach that combines robust monitoring, regular testing, and staying current with AWS service changes. Start with monitoring and alerting: implement CloudWatch alarms to track key metrics for your synchronization processes, and monitor transfer completion rates, error logs, and resource utilization. Timely alerts allow inconsistencies or failures to be identified and resolved quickly, minimizing downtime and data loss.
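As one possible shape for such an alarm, the Python sketch below builds parameters for a CloudWatch alarm on S3 replication latency. The metric name, namespace, and dimension names reflect my understanding of S3 replication metrics (which must be enabled on the replication rule) and should be checked against current AWS documentation; the bucket names, rule ID, thresholds, and SNS topic are hypothetical.

```python
def build_replication_latency_alarm(source_bucket: str,
                                    dest_bucket: str,
                                    rule_id: str,
                                    threshold_seconds: int = 900,
                                    sns_topic_arn=None) -> dict:
    """Assemble keyword arguments for CloudWatch put_metric_alarm."""
    params = {
        "AlarmName": f"s3-replication-latency-{source_bucket}",
        "Namespace": "AWS/S3",
        "MetricName": "ReplicationLatency",  # assumed metric name, see lead-in
        "Dimensions": [
            {"Name": "SourceBucket", "Value": source_bucket},
            {"Name": "DestinationBucket", "Value": dest_bucket},
            {"Name": "RuleId", "Value": rule_id},
        ],
        "Statistic": "Maximum",
        "Period": 300,           # evaluate in 5-minute windows
        "EvaluationPeriods": 3,  # alarm after 3 consecutive breaches
        "Threshold": float(threshold_seconds),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no data means nothing is pending
    }
    if sns_topic_arn:
        params["AlarmActions"] = [sns_topic_arn]
    return params


def create_alarm(params: dict) -> None:
    """Create or update the alarm. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above has no dependencies

    boto3.client("cloudwatch").put_metric_alarm(**params)


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    print(build_replication_latency_alarm(
        "example-source", "example-destination", "cross-region-dr",
        sns_topic_arn="arn:aws:sns:us-east-1:123456789012:example-sync-alerts",
    ))
```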

Regularly test your synchronization configurations to confirm they remain effective. Simulate disaster recovery scenarios to validate the resilience of your replication setup, and perform periodic integrity checks to confirm that data transferred between AWS services remains consistent and accurate. Automate these tests wherever possible to reduce manual effort, and document your testing methods and results to maintain a clear audit trail. Consider using checksums or other validation techniques to verify data integrity during and after each transfer. Regular audits of the synchronization setup also keep it aligned with evolving business needs and AWS best practices.
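A periodic integrity check can be as simple as comparing two manifests that map each object key to its checksum. The Python sketch below assumes the manifests have already been produced by some other means (for example, an inventory report or a listing script); the function name and manifest format are hypothetical.

```python
def diff_manifests(source: dict, destination: dict) -> dict:
    """Compare {key: checksum} manifests and report discrepancies.

    missing    -- keys present in source but absent from destination
    extra      -- keys present in destination but absent from source
    mismatched -- keys present in both whose checksums differ
    """
    missing = sorted(k for k in source if k not in destination)
    extra = sorted(k for k in destination if k not in source)
    mismatched = sorted(
        k for k in source if k in destination and source[k] != destination[k]
    )
    return {"missing": missing, "extra": extra, "mismatched": mismatched}


def is_consistent(report: dict) -> bool:
    """True when the destination fully matches the source."""
    return not (report["missing"] or report["extra"] or report["mismatched"])


if __name__ == "__main__":
    # Tiny illustrative manifests with made-up checksums.
    src = {"a.csv": "111", "b.csv": "222", "c.csv": "333"}
    dst = {"a.csv": "111", "b.csv": "999", "d.csv": "444"}
    print(diff_manifests(src, dst))
```

Running a comparison like this on a schedule, and recording each report, gives the automated test and the audit trail described above.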

Staying informed about AWS service updates and best practices is essential for keeping your synchronization strategy effective. AWS continuously releases new features, services, and pricing models that can significantly affect data transfer operations. Subscribe to AWS newsletters, attend webinars, and participate in AWS community forums to keep up with these developments. Review your synchronization architecture and configurations regularly to take advantage of new capabilities; a new storage class or transfer service, for example, could bring significant cost savings or performance improvements. By adapting proactively as AWS evolves, you can keep your synchronization processes efficient, reliable, and cost-effective, and maintain data consistency across your environment.