The Cornerstone of Data-Driven Success: Introducing Data Engineering

Data engineering is pivotal in today’s data-centric world, where organizations depend on turning raw data into actionable intelligence. It is the discipline of designing, building, and maintaining the systems and infrastructure that make data usable efficiently and reliably. Data pipelines are central to this work. They are the pathways through which raw data flows from its various sources, and they transform that data into a format suitable for analysis and consumption. A well-constructed pipeline gives data scientists and business analysts access to the information they need to make informed decisions.

A robust data pipeline is not merely a technical endeavor; it is a critical business asset. It underpins the entire data analytics lifecycle, ensuring that data is not only accessible but also accurate, consistent, and timely. Without a sound data engineering process, organizations risk relying on incomplete or flawed data, which can lead to erroneous conclusions and wasted time and resources. The volume, velocity, and variety of modern data add further challenges for the people who build and manage these pipelines, from resolving data inconsistencies to keeping processing efficient. Effective data engineering is therefore essential for any organization that seeks a competitive edge through data-driven strategies.

The challenges in data engineering are diverse and constantly evolving. They range from integrating heterogeneous data sources and ensuring data quality and security to scaling and optimizing pipelines for ever-increasing data volumes. Meeting them requires expertise across database systems, cloud platforms, and data transformation techniques, along with the ability to adapt as the data landscape changes. The sections that follow examine each of these challenges in turn, offering insights and strategies for building resilient, efficient pipelines that deliver reliable insights to your organization.

How to Architect a Streamlined Data Engineering Process: Essential Steps

The journey of building a robust data pipeline starts with a well-defined data engineering process made up of a sequence of carefully planned steps. The first is data collection: identify the relevant data sources, such as databases, APIs, or external files, and decide how the data will be extracted from each. Next comes data ingestion, where the collected data is brought into the pipeline, often in a raw, unprocessed format. Depending on the requirements, ingestion can run as periodic batch jobs or as real-time streaming.
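The difference between batch and streaming ingestion can be sketched in a few lines of Python. This is a minimal illustration, assuming a CSV extract with hypothetical `id` and `amount` columns; an in-memory `io.StringIO` stands in for a file, API response, or message stream, and the function names are illustrative rather than part of any particular tool.

```python
import csv
import io
from typing import Iterable, Iterator

Row = dict[str, str]


def ingest_batch(source: Iterable[str]) -> list[Row]:
    """Batch ingestion: read the whole extract into memory in one pass."""
    return list(csv.DictReader(source))


def ingest_stream(source: Iterable[str], batch_size: int = 2) -> Iterator[list[Row]]:
    """Streaming-style ingestion: yield small micro-batches as rows arrive."""
    batch: list[Row] = []
    for row in csv.DictReader(source):
        batch.append(row)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, partially filled batch
        yield batch


# Usage: the same raw extract ingested both ways.
raw = "id,amount\n1,10.5\n2,3.0\n3,7.25\n"
print(len(ingest_batch(io.StringIO(raw))))  # 3
for chunk in ingest_stream(io.StringIO(raw), batch_size=2):
    print([row["id"] for row in chunk])  # ['1', '2'] then ['3']
```

A production streaming pipeline would typically read from a message broker rather than a file, but the idea of processing data incrementally as it arrives carries over.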

Once the data has been ingested, the next critical phase is data transformation, which cleans and reshapes the data for its intended purpose. Typical transformations include data type conversions, deduplication, and normalization. The data is also validated for accuracy and consistency at this stage, which is key to the reliability of the pipeline. The transformed data then needs a place to reside, which is where data storage comes in: a data warehouse, a data lake, or another storage system. The right choice depends on factors such as data volume, query complexity, and the kinds of analysis the data must support. Together, collection, ingestion, transformation, and storage form the core of the data engineering process.
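A minimal sketch of the transformation and validation stage follows, assuming raw rows arrive as dictionaries of strings with hypothetical `order_id`, `customer`, and `amount` fields. It shows type conversion, a simple normalization (trimming and lower-casing names), two validation rules, and deduplication on the order ID; rejected rows are kept aside rather than silently discarded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: int
    customer: str
    amount: float


def transform(rows: list[dict[str, str]]) -> tuple[list[Order], list[dict[str, str]]]:
    """Convert types, normalize text, validate, and deduplicate raw rows."""
    clean: list[Order] = []
    rejected: list[dict[str, str]] = []
    seen_ids: set[int] = set()
    for row in rows:
        try:
            order = Order(
                order_id=int(row["order_id"]),             # type conversion
                customer=row["customer"].strip().lower(),  # normalization
                amount=float(row["amount"]),               # type conversion
            )
        except (KeyError, ValueError):
            rejected.append(row)  # missing field or unparseable value
            continue
        if order.amount < 0 or not order.customer:
            rejected.append(row)  # fails a validation rule
            continue
        if order.order_id in seen_ids:
            continue  # duplicate of an order already accepted
        seen_ids.add(order.order_id)
        clean.append(order)
    return clean, rejected


raw_rows = [
    {"order_id": "1", "customer": " Alice ", "amount": "10.50"},
    {"order_id": "1", "customer": "alice", "amount": "10.50"},  # duplicate
    {"order_id": "2", "customer": "Bob", "amount": "-3"},       # negative amount
    {"order_id": "x", "customer": "Carol", "amount": "5"},      # bad ID
]
clean, rejected = transform(raw_rows)
print(clean)          # [Order(order_id=1, customer='alice', amount=10.5)]
print(len(rejected))  # 2
```

Keeping rejected rows makes it possible to inspect and reprocess them later instead of losing data without a trace.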

The last step of the data engineering process is data consumption, in which the transformed and stored data is accessed and analyzed by business intelligence tools, machine learning models, or other applications to produce insights and actions. A well-structured data pipeline executes all of these steps efficiently and provides a foundation for data-driven decision-making. Understanding each stage in the sequence helps you build a cohesive data engineering process that delivers a continuous flow of valuable information.
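To make the storage and consumption steps concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a warehouse. The `orders` table and its columns are hypothetical; a real deployment would load into a dedicated warehouse or lake, but the pattern of loading clean rows and then querying aggregates is similar.

```python
import sqlite3


def load_orders(db: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Storage step: persist transformed rows in a table."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
    )
    db.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    db.commit()


def revenue_by_customer(db: sqlite3.Connection) -> list[tuple[str, float]]:
    """Consumption step: the kind of aggregate a BI dashboard might query."""
    return db.execute(
        "SELECT customer, SUM(amount) AS revenue FROM orders "
        "GROUP BY customer ORDER BY revenue DESC"
    ).fetchall()


conn = sqlite3.connect(":memory:")
load_orders(conn, [(1, "alice", 10.5), (2, "bob", 4.0), (3, "alice", 2.5)])
print(revenue_by_customer(conn))  # [('alice', 13.0), ('bob', 4.0)]
```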

Choosing the Right Tools: Navigating the Data Engineering Ecosystem

The data engineering process relies on a diverse set of tools. These tools fall into several key categories. Data integration tools facilitate the combination of data from various sources. Data warehousing solutions provide centralized repositories for structured data. Cloud-based platforms offer scalable infrastructure and services. ETL (Extract, Transform, Load) tools automate data movement and transformation. The selection of appropriate tools depends heavily on specific project requirements. Consider factors such as data volume, velocity, and variety. Other considerations include team skillsets and budget constraints. For example, a project with real-time streaming data might favor a different approach than a batch-oriented analytical task.

When navigating the data engineering landscape, it helps to understand what each category of tool does. Data integration tools streamline connections between disparate data sources. Data warehousing solutions such as Snowflake or BigQuery are well suited to analytical workloads. Cloud platforms such as AWS, Azure, and GCP offer a broad range of data engineering services. ETL and dataflow tools such as Talend or Apache NiFi automate and orchestrate the movement and transformation of data. These choices significantly affect the data engineering process: the right tools improve both efficiency and data quality. Because each category addresses a specific part of the process, and the market offers many products with different strengths and weaknesses, careful evaluation is necessary before committing to a specific solution.

Choosing between tools starts with analyzing your data landscape. A highly structured data environment may be well served by a traditional data warehouse, while a project involving unstructured or semi-structured data could benefit from a cloud-based data lake. When evaluating ETL tools, consider how easily they integrate with the rest of your tech stack, how complex your transformation logic is, and how much automation you need; many ETL platforms include features that simplify integration. Also weigh the level of vendor support and how costs scale as your data grows. Tool selection is not a one-time activity but an ongoing evaluation as needs evolve, with the goal of a robust, efficient, and scalable data engineering process that meets current and future demands.

Implementing Data Quality Checks: Ensuring Data Accuracy and Reliability

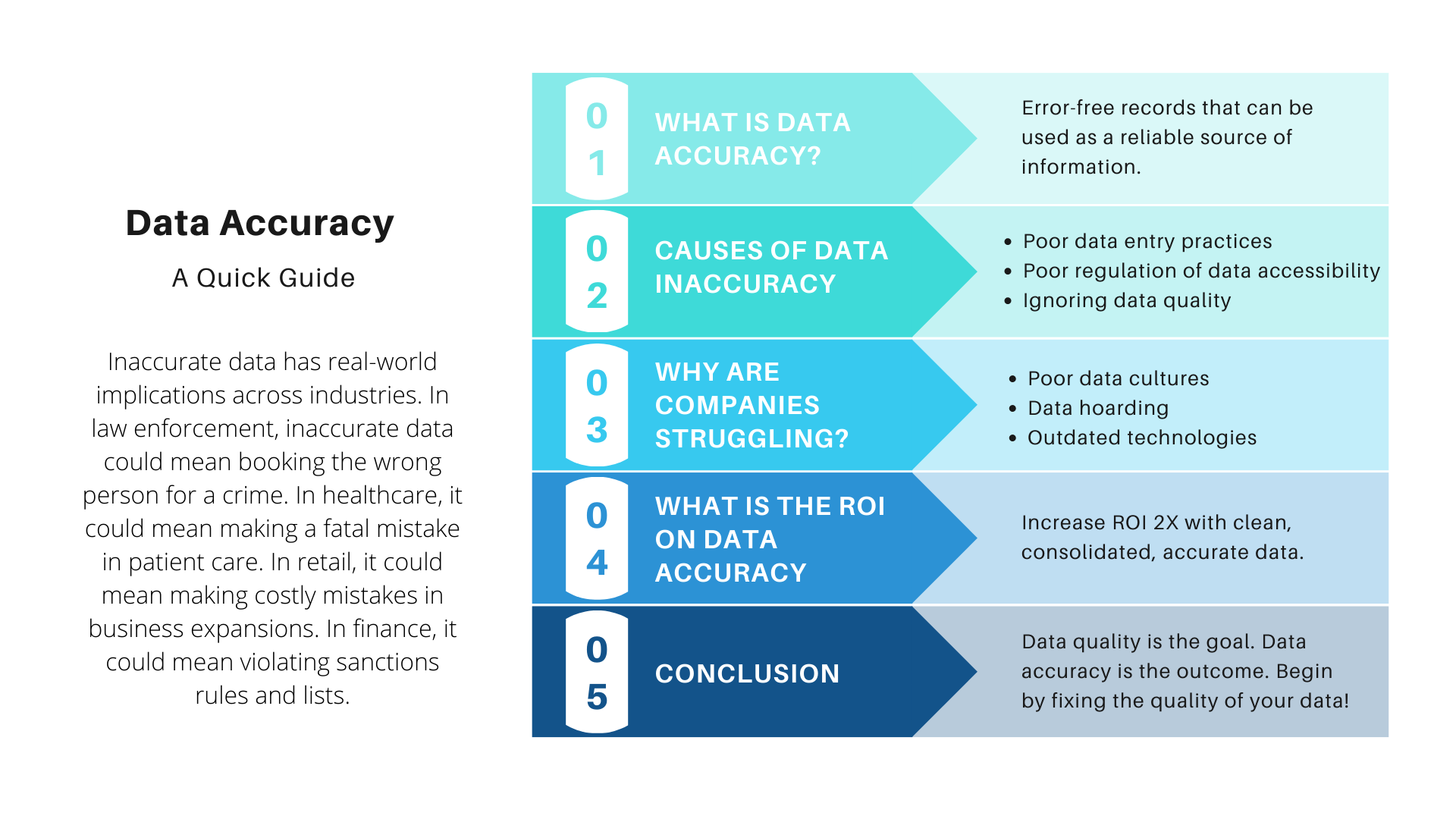

Data quality is paramount in the data engineering process because it is the basis for trust in the data used for decision-making. Data validation, data cleaning, and data profiling are essential parts of a reliable pipeline, ensuring that data stays accurate and consistent throughout its lifecycle. This commitment to quality control affects every stage of the process, as well as the value and usability of the final data products. Implementing robust checks is therefore not just one step in the process but an ongoing commitment to data integrity.

Checking for inconsistencies is a key part of upholding data quality. Inconsistencies can arise from many sources, including varying data formats and data entry errors, and should be identified and addressed promptly. Missing values present another challenge; data profiling helps pinpoint these gaps so that an appropriate strategy, such as imputing a value or flagging the record, can be applied. Data cleaning then corrects errors, fills in missing values, and standardizes formats. These efforts keep the data reliable and ensure that the insights derived from it are accurate and meaningful.
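Profiling for missing values and a simple cleaning pass can be sketched as follows. This is an illustration under assumptions: rows are dictionaries, the `amount` and `country` columns are hypothetical, and median imputation is just one possible strategy for missing numbers (dropping or flagging the row are common alternatives).

```python
from statistics import median


def is_missing(value: object) -> bool:
    """Treat None and empty or whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def profile_missing(rows: list[dict[str, object]]) -> dict[str, int]:
    """Profiling: count missing values per column across all rows."""
    counts: dict[str, int] = {}
    for row in rows:
        for column, value in row.items():
            counts[column] = counts.get(column, 0) + (1 if is_missing(value) else 0)
    return counts


def clean_rows(rows: list[dict[str, object]], numeric: str, code: str) -> list[dict[str, object]]:
    """Cleaning: impute a numeric column with its median and standardize a code column."""
    observed = [float(r[numeric]) for r in rows if not is_missing(r.get(numeric))]
    fill = median(observed)  # raises StatisticsError if every value is missing
    cleaned = []
    for r in rows:
        fixed = dict(r)
        fixed[numeric] = fill if is_missing(r.get(numeric)) else float(r[numeric])
        fixed[code] = None if is_missing(r.get(code)) else str(r[code]).strip().upper()
        cleaned.append(fixed)
    return cleaned


rows = [
    {"id": 1, "amount": "10", "country": "us"},
    {"id": 2, "amount": "", "country": "US "},
    {"id": 3, "amount": "30", "country": None},
]
print(profile_missing(rows))  # {'id': 0, 'amount': 1, 'country': 1}
print([r["amount"] for r in clean_rows(rows, "amount", "country")])  # [10.0, 20.0, 30.0]
```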

Effective data quality checks require a proactive approach and should be built into the data engineering process itself. Automated tools can validate data against predefined rules and monitor for deviations in quality over time. Addressing quality problems early is far more efficient than resolving significant issues further downstream, and it prevents bad data from propagating through the pipeline. These measures greatly improve the overall effectiveness of the data engineering process, and by prioritizing data quality, organizations can be confident in the insights they gain from their data.
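A minimal sketch of automated, rule-based validation with a quality gate follows. The rule names, fields, accepted currencies, and the 5% failure threshold are all hypothetical; dedicated data quality frameworks offer richer versions of the same idea, but the core pattern is to check each batch against predefined rules and stop the run when too many rows fail.

```python
from dataclasses import dataclass, field
from typing import Callable

Row = dict[str, object]
Rule = Callable[[Row], bool]

# Hypothetical predefined rules: each returns True when a row passes.
RULES: dict[str, Rule] = {
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    "currency_known": lambda r: r.get("currency") in {"USD", "EUR", "GBP"},
}


@dataclass
class ValidationReport:
    total: int
    failures: dict[str, list[int]] = field(default_factory=dict)  # rule name -> row indexes

    def failure_rate(self) -> float:
        failed_rows = {i for idxs in self.failures.values() for i in idxs}
        return len(failed_rows) / self.total if self.total else 0.0


def validate(rows: list[Row], rules: dict[str, Rule]) -> ValidationReport:
    """Run every rule against every row and record which rows fail which rule."""
    report = ValidationReport(total=len(rows))
    for i, row in enumerate(rows):
        for name, rule in rules.items():
            if not rule(row):
                report.failures.setdefault(name, []).append(i)
    return report


def quality_gate(report: ValidationReport, max_failure_rate: float = 0.05) -> None:
    """Halt the pipeline before bad data can propagate downstream."""
    rate = report.failure_rate()
    if rate > max_failure_rate:
        raise ValueError(f"{rate:.0%} of rows failed validation: {report.failures}")


batch = [
    {"amount": 5, "currency": "USD"},
    {"amount": -1, "currency": "USD"},
    {"amount": 3, "currency": "JPY"},
    {"amount": 2, "currency": "EUR"},
]
report = validate(batch, RULES)
print(report.failures)  # {'amount_non_negative': [1], 'currency_known': [2]}
try:
    quality_gate(report)
except ValueError as err:
    print("blocked:", err)
```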

Automation and Orchestration: Scaling Your Data Engineering Operations

The significance of automation and orchestration grows as the complexity of the data pipeline increases. A robust data engineering process requires tools and practices that allow for the automation of repetitive tasks. This includes scheduling jobs, managing dependencies, and handling errors. These automated processes greatly improve efficiency and reduce the need for manual intervention. Automation allows data engineers to focus on more strategic tasks, such as pipeline optimization. The use of workflow orchestration tools is crucial. These tools define and manage complex workflows, ensuring that each task runs in the correct sequence. They also handle parallel processing and manage resources effectively. This level of automation allows for scaling the data engineering process without significant increases in workload. Ultimately, this improves reliability and reduces the risk of human error. A well-orchestrated pipeline is far more resilient and easier to maintain.
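The core of what an orchestrator does, running tasks in dependency order and running independent tasks in parallel, can be sketched with Python's standard `graphlib` and `concurrent.futures` modules. The task names and dependency graph below are hypothetical, and the tasks just print their names.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES: dict[str, set[str]] = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "validate": {"transform"},
    "load": {"validate"},
}


def run_pipeline(deps: dict[str, set[str]], tasks: dict[str, Callable[[], None]],
                 max_workers: int = 4) -> list[list[str]]:
    """Run tasks in dependency order; tasks that become ready together run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies contain a cycle
    waves: list[list[str]] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            ready = sorted(sorter.get_ready())
            # list() consumes the results, so a failed task raises here and stops the run.
            list(pool.map(lambda name: tasks[name](), ready))
            sorter.done(*ready)
            waves.append(ready)
    return waves


tasks = {name: (lambda n=name: print(f"running {n}")) for name in DEPENDENCIES}
print(run_pipeline(DEPENDENCIES, tasks))
# [['extract_customers', 'extract_orders'], ['transform'], ['validate'], ['load']]
```

Running ready tasks in waves keeps the sketch short; a full workflow orchestrator would start each task as soon as its own dependencies finish and would add scheduling, retries, and state tracking on top.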

Implementing automation within the data engineering process provides several benefits. Reducing manual effort saves time and minimizes the risk of human error, leading to more accurate and reliable pipelines, which matters most when dealing with large datasets or complex transformations. Automated error handling detects and addresses issues promptly, minimizing downtime, while alerts and notifications make the team aware of errors so they can intervene before small problems grow. Automation also uses resources more effectively, which leads to cost savings and better resource allocation. With a scalable architecture and automated workflows, the pipeline is prepared to handle growing data volumes.
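Automated error handling often combines retries with alerting. The sketch below, using only Python's standard library, retries a task with exponential backoff and calls an alert hook only after the final attempt fails. The function name, the default of three attempts, and logging as the alert channel are illustrative assumptions; in practice the hook might page an on-call engineer or post to a chat channel.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("pipeline")


def run_with_retries(task: Callable[[], T], *, attempts: int = 3, base_delay: float = 1.0,
                     alert: Callable[[str], None] = logger.error) -> T:
    """Retry a failing task with exponential backoff; alert only when retries are exhausted."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts):
        try:
            return task()
        except Exception as exc:
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ... with the default
            logger.warning("attempt %d/%d failed (%r); retrying in %.1fs",
                           attempt, attempts, exc, delay)
            time.sleep(delay)
    try:
        return task()  # final attempt
    except Exception as exc:
        alert(f"task failed after {attempts} attempts: {exc!r}")
        raise


# Usage: a hypothetical flaky extract that succeeds on its third call.
calls = 0


def flaky_extract() -> str:
    global calls
    calls += 1
    if calls < 3:
        raise ConnectionError("source unavailable")
    return "ok"


print(run_with_retries(flaky_extract, base_delay=0.1))  # ok (after two logged retries)
```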

Effective orchestration and automation are fundamental components of a successful data engineering process, enabling a more reliable, scalable, and efficient workflow. The choice of automation tools is critical: select tools that align with your organization’s technical capabilities and objectives. The pipeline should also be designed for scalability from the outset, which means structuring it so that its tasks are easy to automate and orchestrate. Automated processes need ongoing maintenance and refinement as well, so that the data engineering process stays effective and aligned with evolving business needs. By focusing on continuous improvement and adaptation, organizations can optimize their data infrastructure for long-term success.

Security Considerations: Protecting Sensitive Information in Data Engineering

Data security is paramount within the data engineering process, and sensitive information must be protected at every stage of its lifecycle: at rest, in transit, and during access. Because the consequences of a security breach can be severe, common threats must be addressed with robust strategies, and security considerations should be incorporated from the initial planning stages. This proactive approach keeps the data engineering process secure and reliable.

Data encryption is a cornerstone of a strong security framework, safeguarding data from unauthorized access. Data should be encrypted at rest, and it should also be encrypted in transit to prevent interception and preserve its integrity. Access control mechanisms are equally vital, ensuring that only authorized personnel can access specific datasets. Compliance with relevant regulations, which vary by industry and location, is another key aspect. Together, these layers of protection fortify the data engineering process, reduce the likelihood of security incidents, and maintain the confidentiality and integrity of data.
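Encryption at rest and in transit is usually provided by the storage system and by TLS rather than written by hand, but the access control idea can be sketched directly. The following minimal example of role-based access control assumes hypothetical roles, dataset names, and an in-memory `store`; it only illustrates a deny-by-default check before any dataset is returned.

```python
from dataclasses import dataclass

# Hypothetical role-to-dataset grants; real systems keep these in an IAM service or data catalog.
GRANTS: dict[str, set[str]] = {
    "analyst": {"sales_summary"},
    "data_engineer": {"sales_summary", "raw_orders"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


def can_read(user: User, dataset: str) -> bool:
    """Deny by default: access requires an explicit grant through one of the user's roles."""
    return any(dataset in GRANTS.get(role, set()) for role in user.roles)


def read_dataset(user: User, dataset: str, store: dict[str, list[dict]]) -> list[dict]:
    """Check authorization before returning any data."""
    if not can_read(user, dataset):
        raise PermissionError(f"{user.name} is not authorized to read {dataset}")
    return store[dataset]


store = {
    "sales_summary": [{"region": "EU", "revenue": 1200}],
    "raw_orders": [{"order_id": 1, "card_number": "<redacted>"}],
}
alice = User("alice", frozenset({"analyst"}))
print(read_dataset(alice, "sales_summary", store))  # [{'region': 'EU', 'revenue': 1200}]
try:
    read_dataset(alice, "raw_orders", store)
except PermissionError as err:
    print(err)  # alice is not authorized to read raw_orders
```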

Regular security audits should be conducted to identify vulnerabilities and confirm that security measures remain effective. A culture of security awareness is also critical: every team member should understand their role in protecting data. Security should not be an afterthought but an integral part of the entire data engineering process. Planning for it involves choosing secure data storage, using robust authentication, and continuously monitoring for threats. This thorough approach reinforces the security of the data pipeline, allowing organizations to manage and use their data with confidence through a secure and compliant data engineering process.

Monitoring and Maintenance: Maintaining a Healthy Data Pipeline

Continuous monitoring and maintenance are vital for a healthy data engineering process. This involves tracking pipeline performance, identifying bottlenecks, and resolving issues promptly, supported by log management, alerting, and observability across the entire process. Monitoring helps maintain data integrity and surfaces potential problems before they escalate. A good starting point is to establish key performance indicators (KPIs) that measure different aspects of the pipeline, such as data processing speed and error rates. Regularly analyzing these metrics shows how the system is performing and where it can be optimized. Proper monitoring ensures that data is reliable and available when needed, and that issues are caught before they have a significant impact.
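The two KPIs named above, processing speed and error rate, can be computed from per-run records. This sketch assumes each run reports hypothetical `rows_processed`, `rows_failed`, and `duration_s` fields; a monitoring system would typically collect these as metrics and chart them over time.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    rows_processed: int  # rows the run attempted, including failures
    rows_failed: int
    duration_s: float


def pipeline_kpis(runs: list[RunRecord]) -> dict[str, float]:
    """Aggregate throughput and error rate across a set of pipeline runs."""
    total_rows = sum(r.rows_processed for r in runs)
    total_failed = sum(r.rows_failed for r in runs)
    total_time = sum(r.duration_s for r in runs)
    return {
        "throughput_rows_per_s": total_rows / total_time if total_time else 0.0,
        "error_rate": total_failed / total_rows if total_rows else 0.0,
    }


runs = [RunRecord(1_000, 5, 10.0), RunRecord(3_000, 15, 30.0)]
print(pipeline_kpis(runs))  # {'throughput_rows_per_s': 100.0, 'error_rate': 0.005}
```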

An effective monitoring strategy has several key components. Log management tracks events and errors, alerting systems notify the relevant personnel when critical issues occur, and observability tools provide insight into how the system behaves. Together, these components support proactive management: issues caught early can be resolved faster, minimizing downtime and disruption to the pipeline. Maintenance of the pipeline is equally important. It includes regular updates, system patches, and software upgrades, as well as periodic reviews of the pipeline’s architecture to confirm that it still meets current demands. Neither monitoring nor maintenance is a static activity; both need regular adjustment to keep pace with evolving requirements and new technologies. This ongoing effort keeps the pipeline efficient, reliable, and working as expected, which supports business continuity.
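Python's standard `logging` module can illustrate how log management and alerting fit together: every event is logged, and a dedicated handler forwards only ERROR-level records to an alert channel. In this minimal sketch the alert channel is a plain list and the logger name is hypothetical; a real deployment would route alerts to a paging or chat service and ship logs to a central store.

```python
import logging


class AlertHandler(logging.Handler):
    """Forwards ERROR-and-above records to an alert channel (here, a list)."""

    def __init__(self, sink: list[str]) -> None:
        super().__init__(level=logging.ERROR)
        self.sink = sink

    def emit(self, record: logging.LogRecord) -> None:
        self.sink.append(self.format(record))


alerts: list[str] = []
logger = logging.getLogger("pipeline.orders")
logger.setLevel(logging.INFO)
handler = AlertHandler(alerts)
handler.setFormatter(logging.Formatter("%(levelname)s %(name)s: %(message)s"))
logger.addHandler(handler)

logger.info("loaded %d rows", 1200)  # logged, but below the alert threshold
logger.error("load failed for partition %s", "2024-01-01")

print(alerts)  # ['ERROR pipeline.orders: load failed for partition 2024-01-01']
```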

Regular performance analysis is essential for understanding patterns and predicting issues, and periodic maintenance keeps the system running optimally. This can involve re-evaluating the pipeline architecture, optimizing code, and updating software versions, all of which contribute to a stable and resilient data engineering process. Proper monitoring and maintenance maximize the value of the data, reduce the risk of data loss or corruption, and free data teams to focus on producing actionable insights that support strategic decision-making. With a good process in place, the data engineering team can deliver reliable data that gives the organization a competitive advantage.
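One simple way to turn performance history into early warnings is a z-score check on run durations. This sketch is an assumption-laden illustration: the three-standard-deviation threshold and the sample durations are hypothetical, and workloads with daily or weekly cycles usually need a more robust baseline than a plain mean and standard deviation.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag a run whose duration is more than `threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # stdev needs at least two observations
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly stable history stands out
    return abs(latest - mu) / sigma > threshold


durations = [60.0, 62.0, 58.0, 61.0, 59.0]  # hypothetical recent run times, in seconds
print(is_anomalous(durations, 61.0))  # False
print(is_anomalous(durations, 90.0))  # True
```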

Adapting to Future Needs: The Evolution of Data Engineering

The data engineering process is not static; it evolves continuously with technological advances. Emerging technologies such as serverless computing, real-time data streaming platforms, and AI-powered data management tools are reshaping how data pipelines are built and managed. Serverless architectures offer scalability and cost-efficiency by allocating resources automatically. Real-time streaming platforms process data as it arrives, serving applications that require immediate insights. Artificial intelligence and machine learning are increasingly used in data management for automation, anomaly detection, and predictive maintenance. To take advantage of these capabilities, professionals need to keep their skills up to date; doing so makes it possible to build more robust, agile, and efficient pipelines that stay relevant in a rapidly changing landscape.

The future of the data engineering process also involves more sophisticated automation and orchestration tools, which both streamline workflows and improve collaboration among teams. DataOps practices, which apply DevOps principles to data management, promote seamless integration and faster deployment cycles. The focus on data governance and compliance is also more crucial than ever: as data regulations become more stringent, data engineers must ensure that their pipelines incorporate robust security measures and data quality standards. Embracing open source technologies and open data formats promotes interoperability and reduces vendor lock-in. Finally, the move toward more accessible, user-friendly data platforms empowers business users, reducing their dependence on technical teams and democratizing data access.

Looking ahead, the data engineering process will increasingly rely on hybrid and multi-cloud environments, requiring data engineers to master diverse ecosystems. Therefore, maintaining an adaptive mindset is paramount for success in this dynamic field. Professionals must continuously learn and adopt new techniques, and actively engage with the data engineering community to stay current with best practices. As we move towards a more data-centric world, the capacity to evolve and integrate new technologies will define the success of any data-driven organization. The ability to build flexible data architectures that can adapt to changing business requirements is vital to remain competitive. The continuous development of skills and the constant innovation in tools and technologies are essential elements for the advancement of the data engineering process.